3 Backup and Recovery of Infrastructure Components

This chapter provides the steps for backing up and recovering the components of an Exalogic rack.

Note:

Before recovering a component, ensure that the component is not in use.

This chapter contains the following sections:

3.1 Exalogic Configuration Utility

The Exalogic Configuration Utility (ECU) is used to configure an Exalogic rack during initial deployment. After the initial deployment is complete, Oracle recommends that you back up the configuration and the runtime files generated by the ECU.

Note:

ExaBR does not automate the backup of the ECU files.

3.1.1 Backing Up the ECU Files

You must perform the following steps manually to back up the configuration and runtime files generated by the ECU:

1. Log in to the node on which you installed the Exalogic Lifecycle Toolkit.

2. Create a directory called ecu in the /exalogic-lcdata/backups directory. Use this directory to store manual backups of the ECU files.

3. If the ExalogicControl share is not mounted on the /mnt/ExalogicControl directory, mount it.

4. In the ExalogicControl directory, navigate to the ECU_ARCHIVE directory.

5. Copy the ECU file called ecu_log-date&time_stamp.tgz to the ecu directory you created in step 2. This file contains the following:

   - ecu_run_time.tgz: A tarball of the ECU configuration files
   - ecu_home.tgz: A tarball of the ECU scripts
   - ecu_archive.tgz: A tarball of the ECU log files
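Steps 2, 4 and 5 above can be scripted. The following is a minimal POSIX shell sketch. It is not an Oracle-supplied tool, and several parts are assumptions:

- The function name backup_ecu_archive is hypothetical.
- The variables ECU_ARCHIVE_DIR and ECU_BACKUP_DIR are hypothetical. Their defaults are derived from the mount point and backup location named in the steps.
- Picking the newest file by modification time is a design choice.

The sketch does not mount the share, so complete step 3 first.

```shell
#!/bin/sh
# Hypothetical helper; not part of ExaBR or the Exalogic Lifecycle Toolkit.
# Default locations are assumed from the manual steps; override if needed.
ECU_ARCHIVE_DIR="${ECU_ARCHIVE_DIR:-/mnt/ExalogicControl/ECU_ARCHIVE}"
ECU_BACKUP_DIR="${ECU_BACKUP_DIR:-/exalogic-lcdata/backups/ecu}"

# backup_ecu_archive SOURCE_DIR DEST_DIR
# Copies the newest ecu_log-*.tgz from SOURCE_DIR into DEST_DIR and prints
# the path of the copy. Returns non-zero if no archive is found.
backup_ecu_archive() {
    src="$1"
    dest="$2"

    # Step 2: create the ecu backup directory if it does not exist yet.
    mkdir -p "$dest" || return 1

    # Steps 4-5: pick the most recently modified ecu_log-*.tgz file.
    latest=$(ls -1t "$src"/ecu_log-*.tgz 2>/dev/null | head -n 1)
    if [ -z "$latest" ]; then
        echo "backup_ecu_archive: no ecu_log-*.tgz file in $src" >&2
        return 1
    fi

    # -p preserves the file's modification time and mode.
    cp -p "$latest" "$dest/" || return 1
    echo "$dest/$(basename "$latest")"
}
```

After mounting the share, a typical call is backup_ecu_archive "$ECU_ARCHIVE_DIR" "$ECU_BACKUP_DIR". If several ECU runs left archives, check that the newest one is the one you want to keep.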

3.1.2 Recovering the ECU Files

To recover the configuration and runtime files generated by the ECU, do the following:

1. Extract the tarball containing the configuration files to the /opt/exalogic directory.

2. Extract the tarball containing the runtime files to the /var/tmp/exalogic directory.

3. Extract the tarball containing the log files to the /var/log/exalogic directory.
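The recovery steps above reduce to unpacking tarballs into fixed directories. The following shell sketch assumes three things:

- The backed-up ecu_log-*.tgz file holds the inner tarballs at its top level.
- Each inner tarball stores paths relative to its target directory.
- The function names stage_ecu_archive and extract_to are hypothetical.

This guide does not state any of these assumptions, so list each tarball's contents with tar -tzf before extracting into a system directory.

```shell
#!/bin/sh
# Hypothetical helpers; not part of ExaBR. Inspect tarball contents
# (tar -tzf FILE) before extracting anything into /opt or /var.

# stage_ecu_archive ARCHIVE STAGING_DIR
# Unpacks the outer ecu_log-*.tgz into STAGING_DIR and prints the names of
# the files it contained (for example, the inner .tgz tarballs).
stage_ecu_archive() {
    mkdir -p "$2" || return 1
    tar -xzf "$1" -C "$2" || return 1
    ls -1 "$2"
}

# extract_to TARBALL TARGET_DIR
# Extracts one inner tarball into TARGET_DIR, preserving permissions (-p).
extract_to() {
    mkdir -p "$2" || return 1
    tar -xzpf "$1" -C "$2"
}
```

For example, stage the archive from /exalogic-lcdata/backups/ecu into a scratch directory. Then call extract_to once per inner tarball, using /opt/exalogic for the configuration files, /var/tmp/exalogic for the runtime files, and /var/log/exalogic for the log files.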

3.2 Exalogic Compute Nodes

This section contains the following subsections:

- Section 3.2.1, "Backing Up Compute Nodes"
- Section 3.2.2, "Recovering Compute Nodes"

3.2.1 Backing Up Compute Nodes

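You can back up compute nodes using ExaBR as described in the following sections:

- Section 3.2.1.1, "Backing Up Linux Compute Nodes"
- Section 3.2.1.2, "Backing Up Solaris Compute Nodes"
- Section 3.2.1.3, "Backing Up Customizations on Oracle VM Server Nodes"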

3.2.1.1 Backing Up Linux Compute Nodes

ExaBR can back up compute nodes running Linux using Logical Volume Manager (LVM)-based snapshots.

Note:

To run ExaBR on STIG-hardened compute nodes, you must complete the following prerequisites:

- Run ExaBR locally on each compute node you want to back up.
- Run ExaBR with the sudo command. Alternatively, log in to the compute node and run su - to gain root access, and then run ExaBR commands without the sudo command.
- Mount the ELLC shares on each component you want to back up. You can copy the ELLC installer to the node and use the -m option to mount the shares as follows:

  # ./exalogic-lctools-version_number-installer.sh ZFS_Address -m

- Run ExaBR as a user with sudoer permissions.

To automate the backups of STIG-hardened Linux compute nodes, you must also complete the following prerequisites:

- Run the scheduler you use (such as cron) as a privileged user.
- Because the scheduler runs as a privileged user, the sudo command is not required when ExaBR commands run non-interactively, as follows:

  ./exabr backup local_address [options]
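For the automated case above, a small wrapper can capture each scheduled run in a log file. The following is a sketch under these assumptions:

- The names run_scheduled_backup, EXABR_DIR (the directory that contains the exabr script) and EXABR_LOG are hypothetical.
- The wrapper only warns, rather than fails, when it is not running as root.

```shell
#!/bin/sh
# Hypothetical wrapper for running ExaBR from a scheduler such as cron.
# EXABR_DIR and EXABR_LOG are assumptions; set them for your installation.
EXABR_DIR="${EXABR_DIR:-}"
EXABR_LOG="${EXABR_LOG:-/var/log/exabr-scheduled.log}"

# run_scheduled_backup TARGET [options]
# Runs "./exabr backup TARGET [options]" without sudo and appends a
# timestamped record of the run to EXABR_LOG. Returns ExaBR's exit status.
run_scheduled_backup() {
    if [ -z "$EXABR_DIR" ]; then
        echo "run_scheduled_backup: set EXABR_DIR first" >&2
        return 2
    fi
    # The scheduler itself must already run as a privileged user.
    if [ "$(id -u)" -ne 0 ]; then
        echo "run_scheduled_backup: warning: not running as root" >&2
    fi
    (
        cd "$EXABR_DIR" || exit 1
        echo "=== $(date '+%Y-%m-%d %H:%M:%S') exabr backup $*"
        ./exabr backup "$@"
    ) >> "$EXABR_LOG" 2>&1
}
```

A root crontab entry could then look like this, where both paths are hypothetical:

0 2 * * * EXABR_DIR=/path/to/exabr_dir; . /root/exabr-cron.sh && run_scheduled_backup local_address

The date format string lives in the sourced script rather than in the crontab line, so no % characters need escaping.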

ExaBR backs up a Linux compute node using LVM-based snapshots by performing the following tasks:

1. Creates an LVM-based snapshot of the compute node file system.
2. Mounts the snapshot.
3. Creates a tar backup of the mounted file system from the snapshot.
4. Unmounts and deletes the snapshot.
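To make the four tasks above concrete, here is a hand-written approximation using standard LVM2 commands. It is not ExaBR's implementation. The following names are hypothetical:

- The volume group and logical volume names (VolGroup00, LogVol00).
- The snapshot name and the mount point.

The sketch defaults to a dry run that only prints the commands.

```shell
#!/bin/sh
# Illustration only: NOT ExaBR's implementation. It approximates the four
# tasks with standard LVM tools. DRY_RUN=1 (the default) only prints the
# commands; set DRY_RUN=0 and run as root to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# snapshot_backup VG LV ARCHIVE
# ARCHIVE must be written outside the volume being backed up.
snapshot_backup() {
    vg="$1"; lv="$2"; archive="$3"
    snap="${lv}_snap"            # hypothetical snapshot name
    mnt="/mnt/${snap}"           # hypothetical mount point

    # 1. Create a copy-on-write snapshot (1 GB, as ExaBR sets aside).
    run lvcreate --snapshot --size 1G --name "$snap" "/dev/$vg/$lv" || return 1

    # 2. Mount the snapshot read-only; on failure, drop the snapshot.
    if ! { run mkdir -p "$mnt" && run mount -o ro "/dev/$vg/$snap" "$mnt"; }; then
        run lvremove -f "/dev/$vg/$snap"
        return 1
    fi

    # 3. Archive the frozen file system, skipping some of the directories
    #    that ExaBR excludes by default.
    run tar -czpf "$archive" -C "$mnt" --exclude=./tmp --exclude=./var/tmp --exclude=./var/run .
    status=$?

    # 4. Always unmount and delete the snapshot, even if tar failed.
    run umount "$mnt"
    run lvremove -f "/dev/$vg/$snap"
    return "$status"
}
```

Because the snapshot is taken at a single point in time, tar reads a consistent view of the file system while the node keeps running. That is the reason for snapshotting before archiving.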

When restoring LVM-based backups, ExaBR replaces files that were backed up. However, any files that were created after the backup was made are not deleted during a restore.

By default, ExaBR excludes the following directories when backing up the compute node:

- /dev
- /proc
- /sys
- /tmp
- /var/tmp
- /var/run
- /var/lib/nfs
- NFS and OCFS2 mounted file systems

You can use the --exclude-paths option to define your own exclusion list. The --exclude-paths option overrides the default exclusion list. The NFS and OCFS2 mounts, however, are automatically added to the exclusion list.

The --include-paths option can be used to back up only the specified directories. The --include-paths option is useful for backing up customizations. These options are described in Section 2.4.2, "ExaBR Options."

The following are the prerequisites for using LVM-based snapshots to back up Linux compute nodes with ExaBR:

- When ExaBR takes the first backup, swap space should not be in use. During the first backup, ExaBR allocates 1 GB of the swap space for all subsequent LVM-based snapshots.

  If there is free memory on the compute node, you can free the swap space by logging in to the compute node as the root user and running the following commands:

  swapoff -av
  swapon -a

- The logical volume group that contains the root (/) volume should have at least 1 GB of unused space or more than 512 MB of swap space. Use the vgs command to display the amount of available free space (VFree column).
- The share you back up to must have enough free space for the extraction of the LVM-based snapshot.
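The space prerequisites above can be checked before the first backup. The following read-only sketch uses hypothetical function names and makes two assumptions:

- "More than 512 MB of swap space" is approximated by the configured SwapTotal from /proc/meminfo.
- The VFree value comes from vgs in megabytes.

```shell
#!/bin/sh
# Hypothetical pre-flight checks for the LVM snapshot prerequisites.
# They only read data; they do not change swap or volume settings.

# swap_used_kb [MEMINFO_FILE]
# Prints the swap space in use, in kB (SwapTotal - SwapFree).
swap_used_kb() {
    awk '/^SwapTotal:/ { t = $2 } /^SwapFree:/ { f = $2 } END { print t - f }' \
        "${1:-/proc/meminfo}"
}

# lvm_space_ok VFREE_MB SWAP_MB
# Succeeds if the volume group has at least 1 GB (1024 MB) free, or if
# more than 512 MB of swap space is configured.
lvm_space_ok() {
    awk -v free="$1" -v swap="$2" \
        'BEGIN { exit !((free + 0) >= 1024 || (swap + 0) > 512) }'
}
```

On a node, swap_used_kb should print 0 before the first backup. To check free space, capture VFree with vgs --noheadings --nosuffix --units m -o vg_free VolGroup00, where the volume group name is hypothetical. Capture the swap size with awk '/^SwapTotal:/ { print int($2 / 1024) }' /proc/meminfo. Then pass both values to lvm_space_ok.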

To back up compute nodes running Linux or the configuration of their ILOMs, do the following:

1. Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

2. Use ExaBR to back up compute nodes or the configuration of their ILOMs in one of the following ways:

   - To back up specific compute nodes or ILOMs, run ExaBR as follows:

     ./exabr backup hostname1[,hostname2,...] [options]

   - To back up all compute nodes and their ILOMs, run ExaBR using the all-cn target as follows:

     ./exabr backup all-cn [options]

   For a complete list of options, see Section 2.4.2, "ExaBR Options."

   Example:

   ./exabr backup cn1.example.com,cn1ilom.example.com --exclude-paths /var,/tmp,/sys,/proc,/dev,/test

   In this example, ExaBR backs up the first compute node and the configuration of its ILOM. It excludes the /var, /tmp, /sys, /proc, /dev, and /test directories, as specified by the --exclude-paths option, as well as the NFS and OCFS2 mounted file systems.

ExaBR stores the backup in the following files:

| File | Description |
|---|---|
| cnode_backup.tgz | Contains the backup of the compute node. |
| ilom.backup | Contains the configuration backup of the ILOM. |
| guids.backup | Contains the InfiniBand GUIDs for the compute node. |
| version.backup | Contains the firmware version number of the compute node and ILOM. |
| backup.info | Contains the metadata of the backup of the compute node and ILOM. |
| checksums.md5 | Contains the MD5 checksums of the backup. |

The files for compute nodes and ILOMs are stored in different directories. The files for compute nodes are stored in the compute_nodes directory and the files for ILOMs in the iloms directory, as described in Section 2.1.2, "Backup Directories Created by ExaBR."

By default, the backups are stored in the /exalogic-lcdata/backups directory.
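The checksums.md5 file listed above can be used to confirm that a stored backup is intact before you rely on it. This guide does not document the file's format. The following Linux sketch assumes it uses the md5sum output format ("hash  file name"), with names relative to the backup directory. The function name verify_backup is hypothetical.

```shell
#!/bin/sh
# Hypothetical check, assuming checksums.md5 uses the md5sum output format
# with file names relative to the backup directory.

# verify_backup BACKUP_DIR
# Succeeds only if every file listed in checksums.md5 matches its checksum.
verify_backup() {
    dir="$1"
    if [ ! -f "$dir/checksums.md5" ]; then
        echo "verify_backup: $dir/checksums.md5 not found" >&2
        return 1
    fi
    # Run in a subshell so the caller's working directory is unchanged.
    ( cd "$dir" && md5sum -c --quiet checksums.md5 )
}
```

Point verify_backup at the directory that holds one backup's files. See Section 2.1.2 for the layout that ExaBR creates.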

3.2.1.2 Backing Up Solaris Compute Nodes

The operating system of an Exalogic rack is installed on the local disk of each compute node. ExaBR creates a backup of the root file system and the customizations, if any.

Note:

To back up Solaris zones using ExaBR, you must install gtar in the zone. For information about backing up Solaris zones, see Backing Up Solaris Zones.

By default, ExaBR excludes the following directories while backing up a Solaris compute node:

- /proc
- /system
- /tmp
- /var/tmp
- /var/run
- /dev
- /devices
- NFS and HSFS mounted file systems

You can use the --exclude-paths option to define your own exclusion list. The --exclude-paths option overrides the default exclusion list. The NFS and HSFS mounts, however, are automatically added to the exclusion list.

Note:

For Solaris compute nodes and zones, some files and directories cannot be backed up, in addition to the directories in the default exclusion list. Some other files and directories can be backed up by ExaBR but cannot be restored to live Solaris file systems. The following are examples of such files and directories:

- /home
- /etc/mnttab
- /etc/dfs/sharetab
- /etc/dev
- /etc/sysevent/devfsadm_event_channel/1
- /etc/sysevent/devfsadm_event_channel/reg_door
- /etc/sysevent/piclevent_door
- /lib/libc.so.1
- /net
- /nfs4

For example, to back up the file system of a Solaris compute node, run the following command:

./exabr backup cn1.example.com --exclude-paths /var,/tmp,/system,/proc,/dev,/devices,/home,/etc/mnttab,/etc/dfs/sharetab,/lib/libc.so.1,/net,/nfs4,/etc/sysevent/devfsadm_event_channel/1,/etc/sysevent/piclevent_door

This command backs up everything except the paths passed to the --exclude-paths option. Those paths cover the default exclusion list and most of the files and directories listed in this note.
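Long --exclude-paths values like the one above are easy to mistype; a single misplaced comma changes which path is excluded. One option is to keep the list in a file with one path per line and join it just before calling ExaBR. The helper name join_paths and the file name solaris_excludes.txt are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: keep long --exclude-paths lists in a file, one path
# per line, and join them with commas so that no separator is mistyped.

# join_paths < FILE
# Reads paths from standard input, skips blank lines and # comments,
# and prints them as one comma-separated list.
join_paths() {
    grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' | paste -s -d, -
}
```

Usage:

./exabr backup cn1.example.com --exclude-paths "$(join_paths < solaris_excludes.txt)"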

The --include-paths option can be used to back up only the specified directories. The --include-paths option is useful for backing up customizations. These options are described in Section 2.4.2, "ExaBR Options."

To back up compute nodes running Solaris or the configuration of their ILOMs, do the following:

1. Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

2. Use ExaBR to back up compute nodes or the configuration of their ILOMs in one of the following ways:

   - To back up specific compute nodes or ILOMs, run ExaBR as follows:

     ./exabr backup hostname1[,hostname2,...] [options]

   - To back up all compute nodes and their ILOMs, run ExaBR using the all-cn target as follows:

     ./exabr backup all-cn [options]

   For a complete list of options, see Section 2.4.2, "ExaBR Options."

   Example:

   ./exabr backup cn1.example.com,cn1ilom.example.com --exclude-paths /var,/tmp,/system,/proc,/dev,/devices,/home,/etc/mnttab,/etc/dfs/sharetab,/lib/libc.so.1,/net,/nfs4,/etc/sysevent/devfsadm_event_channel/1,/etc/sysevent/piclevent_door

   In this example, ExaBR backs up the first compute node and the configuration of its ILOM. Because the --exclude-paths option is used, ExaBR also excludes the directories specified in the previous note and the NFS mounted file systems on the compute node. The --exclude-paths option overrides the default exclusion list.

ExaBR stores the backup in the following files:

| File | Description |
|---|---|
| cnode_backup.tgz | Contains the backup of the compute node. |
| ilom.backup | Contains the configuration backup of the ILOM. |
| guids.backup | Contains the InfiniBand GUIDs for the compute node. |
| version.backup | Contains the firmware version number of the compute node and ILOM. |
| backup.info | Contains the metadata of the backup of the compute node and ILOM. |
| checksums.md5 | Contains the MD5 checksums of the backup. |

The files for compute nodes and ILOMs are stored in different directories. The files for compute nodes are stored in the compute_nodes directory and the files for ILOMs in the iloms directory, as described in Section 2.1.2, "Backup Directories Created by ExaBR."

By default, the backups are stored in the /exalogic-lcdata/backups directory.

To back up Solaris zones, do the following:

1. Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

2. Ensure that gtar is installed in the zone you want to back up.

3. Ensure that the IP address of the zone you want to back up has been added to the compute_nodes parameter of the exabr.config file.

4. Use ExaBR to back up zones in one of the following ways:

   - To back up specific zones, run ExaBR as follows:

     ./exabr backup zone1[,zone2,...] [options]

   - To back up only the global zone, run ExaBR while excluding the non-global zones with the --exclude-paths option as follows:

     ./exabr backup globalzone --exclude-paths path_to_nonglobalzone1[,path_to_nonglobalzone2,...][,other_directories_to_exclude] [options]

   - To back up all the zones on a compute node, run ExaBR as follows:

     ./exabr backup HostnameOfComputeNode [options]

   For a complete list of options, see Section 2.4.2, "ExaBR Options."

   Example:

   ./exabr backup cn1.example.com --exclude-paths /var,/tmp,/system,/proc,/dev,/devices,/home,/etc/mnttab,/etc/dfs/sharetab,/lib/libc.so.1,/net,/nfs4,/etc/sysevent/devfsadm_event_channel/1,/etc/sysevent/piclevent_door

   In this example, ExaBR backs up the compute node cn1.example.com. Because the --exclude-paths option is used, ExaBR also excludes the directories specified in the previous note and the NFS mounted file systems on the compute node. The --exclude-paths option overrides the default exclusion list.
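For the global-zone-only backup described in step 4, the non-global zone paths can be read from the global zone rather than typed by hand. The sketch below parses the parsable output of zoneadm list -cp, whose fields are zoneid:zonename:state:zonepath:uuid:brand:ip-type. The helper name nonglobal_zonepaths is hypothetical. The sketch does not handle zone paths that contain escaped colons.

```shell
#!/bin/sh
# Hypothetical helper: reads "zoneadm list -cp" output on standard input
# and prints the zonepaths of all non-global zones, comma-separated.
nonglobal_zonepaths() {
    awk -F: '$2 != "global" && $4 != "" { print $4 }' | paste -s -d, -
}
```

In the global zone, paths=$(zoneadm list -cp | nonglobal_zonepaths) collects the list. You can then pass "$paths,/tmp,/var/tmp" as the --exclude-paths value, adding any other directories you want to exclude.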

3.2.1.3 Backing Up Customizations on Oracle VM Server Nodes

The operating system of an Exalogic rack is installed on the local disk of each compute node.

Note:

Oracle VM Server nodes are stateless, so you do not need to back them up. You can use ExaBR to back up customizations on your Oracle VM Server node by using the --include-paths option. You can reimage and recover an Oracle VM Server node using ExaBR by following the steps described in Section 3.2.2.4, "Reimaging and Recovering Oracle VM Server Nodes in a Virtual Environment."

By default, ExaBR excludes the following directories while backing up the compute node:

- /dev
- /proc
- /sys
- /tmp
- /var/tmp
- /var/run
- /var/lib/nfs
- poolfs, ExalogicPool, ExalogicRepo, and NFS and OCFS2 mounted file systems. The ExalogicPool and ExalogicRepo file systems are mounted over NFS.

You can use the --exclude-paths option to define your own exclusion list. The --exclude-paths option overrides the default exclusion list. The NFS and OCFS2 mounts, however, are automatically added to the exclusion list.

The --include-paths option can be used to back up only the specified directories. The --include-paths option is useful for backing up customizations. These options are described in Section 2.4.2, "ExaBR Options."

To back up customizations on Oracle VM Server nodes or the configuration of their ILOMs, do the following:

1. Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

2. Use ExaBR to back up customizations on Oracle VM Server nodes or the configuration of their ILOMs in one of the following ways:

   - To back up customizations on specific compute nodes, run ExaBR as follows:

     ./exabr backup hostname1[,hostname2,...] --include-paths path_to_customization1[,path_to_customization2,...] [options]

   - To back up customizations on all compute nodes and the configuration of their ILOMs, run ExaBR using the all-cn target as follows:

     ./exabr backup all-cn --include-paths path_to_customization1[,path_to_customization2,...] [options]

   For a complete list of options, see Section 2.4.2, "ExaBR Options."

   Example:

   ./exabr backup cn1.example.com,cn1ilom.example.com --include-paths /custom --exclude-paths /var,/tmp,/sys,/proc,/dev,/test

   In this example, ExaBR backs up the /custom directory on the first compute node and the configuration of its ILOM. Because the --exclude-paths option is used, ExaBR excludes the /var, /tmp, /sys, /proc, /dev, and /test directories, as well as the NFS and OCFS2 mounted file systems on the compute node. The --exclude-paths option overrides the default exclusion list.

ExaBR stores the backup in the following files:

| File | Description |
|---|---|
| cnode_backup.tgz | Contains the backup of the compute node. |
| ilom.backup | Contains the configuration backup of the ILOM. |
| guids.backup | Contains the InfiniBand GUIDs for the compute node. |
| version.backup | Contains the firmware version number of the compute node and ILOM. |
| backup.info | Contains the metadata of the backup of the compute node and ILOM. |
| checksums.md5 | Contains the MD5 checksums of the backup. |

The files for compute nodes and ILOMs are stored in different directories. The files for compute nodes are stored in the compute_nodes directory and the files for ILOMs in the iloms directory, as described in Section 2.1.2, "Backup Directories Created by ExaBR."

By default, the backups are stored in the /exalogic-lcdata/backups directory.

3.2.2 Recovering Compute Nodes

You can recover a compute node using ExaBR as described in the following sections:

- Section 3.2.2.1, "Recovering Compute Nodes in a Physical Environment"
- Section 3.2.2.2, "Recovering Compute Nodes on Failure of the InfiniBand HCA"
- Section 3.2.2.3, "Reimaging and Recovering Compute Nodes in a Physical Environment"
- Section 3.2.2.4, "Reimaging and Recovering Oracle VM Server Nodes in a Virtual Environment"

Note:

To run ExaBR on STIG-hardened compute nodes, you must complete the following prerequisites:

- Run ExaBR locally on each compute node you want to restore.
- Mount the ELLC shares on each compute node you want to restore. You can copy the ELLC installer to the node and use the -m option to mount the shares as follows:

  # ./exalogic-lctools-version_number-installer.sh ZFS_Address -m

- Run ExaBR as an admin user with sudoer permissions.

3.2.2.1 Recovering Compute Nodes in a Physical Environment

ExaBR restores the backed up directories to the compute node. By default, ExaBR excludes the following directories when restoring a backup:

Table 3-1 Default Excluded Directories by Platform

| Platform | Directories Excluded From Restore |
|---|---|
| Linux | |
| Solaris | |

You can modify the list of directories that are excluded during a restore by using the --exclude-paths option as described in Table 2-3, "ExaBR Options".

To restore Exalogic compute nodes, do the following:

Note:

A compute node you want to restore may be corrupted in one of the following ways:

- It is not accessible through SSH.
- Essential binaries, such as bash and tar, do not exist.

In that case, reimage and recover the compute node as described in steps 2 and 3 of Section 3.2.2.3, "Reimaging and Recovering Compute Nodes in a Physical Environment."

1. Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

2. View a list of backups by running ExaBR as follows:

   ./exabr list hostname [options]

   Example:

   ./exabr list cn2.example.com -v

   In this example, ExaBR lists the backups made for cn2.example.com in detail, because the -v option is used.

3. Restore a compute node or the configuration of an ILOM by running ExaBR as follows:

   ./exabr restore hostname [options]

   For a complete list of options, see Section 2.4.2, "ExaBR Options."

   Example:

   ./exabr restore cn2.example.com -b 201308230428 --exclude-paths /temp

   In this example, ExaBR restores the second compute node from the backup directory 201308230428, while excluding the /temp directory from the restore.

   Note:

   When restoring a Linux compute node, you may see an error of the form "ERROR: directory_name Cannot change ownership to ...". If so, run the restore command with the --exclude-paths option to exclude the directory displayed in the error. For example, for the following error:

   ERROR tar: /u01/common/general: Cannot change ownership to uid 1000, gid 54321:

   Run the restore command as follows:

   ./exabr restore cn2.example.com -b 201308230428 --exclude-paths /u01/common/general

You can use the --include-paths option to restore specified files or directories from a backup.
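If a restore reports several "Cannot change ownership" errors, the affected paths can be collected from the saved restore output instead of being copied one at a time. The sketch below matches only the "ERROR tar: path: Cannot change ownership" form shown in the example above. The helper name ownership_error_paths and the log file name are hypothetical. Review the resulting list before reusing it, because excluded paths are not restored.

```shell
#!/bin/sh
# Hypothetical helper: extracts each path from lines such as
#   ERROR tar: /u01/common/general: Cannot change ownership to uid 1000, gid 54321:
# and prints the unique paths as one comma-separated list.
ownership_error_paths() {
    sed -n 's/.*tar: \(\/[^:]*\): Cannot change ownership.*/\1/p' |
        sort -u | paste -s -d, -
}
```

For example:

1. Save the output of a failed restore with ./exabr restore cn2.example.com -b 201308230428 2>&1 | tee restore.log.
2. Rerun the restore with --exclude-paths "$(ownership_error_paths < restore.log)".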

Note:

If the compute node that is being recovered is the master node of the Exalogic rack, restore the Exalogic Configuration Utility (ECU) configuration files that you backed up, as described in Section 3.1, "Exalogic Configuration Utility." The master node in an Exalogic rack is the node from which the ECU was run.

3.2.2.2 Recovering Compute Nodes on Failure of the InfiniBand HCA

To recover compute nodes after a failure of their InfiniBand Host Channel Adapters (HCA), do the following:

1. This step is required only for a virtual environment. Remove the compute node from the list of assets in Exalogic Control by running ExaBR as follows:

   ./exabr control-unregister hostname_of_node_with_failed_HCA

2. Replace the failed HCA by following the standard replacement procedure.

3. Register the new GUIDs of the compute node HCA with the InfiniBand partitions that the compute node was previously registered to by running ExaBR as follows:

   ./exabr ib-register hostname_of_node_with_replaced_HCA [options]

   hostname_of_node_with_replaced_HCA is the IP address or host name of the compute node whose failed HCA you replaced in the previous step. InfiniBand port GUIDs are saved during the compute node backup. The ib-register command searches the ExaBR backup directory for the old GUID values. If no GUIDs are found, you are prompted to enter the GUIDs.

   Example:

   ./exabr ib-register cn2.example.com --dry-run
   ./exabr ib-register cn2.example.com

   In the first example command, the ib-register command is run with the --dry-run option, so ExaBR displays the operations that would be run without saving the changes. In the second example command, ExaBR registers the GUIDs of the HCA card to the InfiniBand partitions.

   Note:

   The ib-register command performs operations such as partition registration and vNIC registration on the InfiniBand switches. For Solaris compute nodes with a replaced HCA, you should perform additional operations on the compute node, such as restarting the network interface and configuring link aggregation.

4. This step is required only for a virtual environment. Rediscover the compute node and add it to the list of assets in Exalogic Control by running the ExaBR control-register command as follows:

   ./exabr control-register hostname_of_replaced_compute_node
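Step 3 above pairs a --dry-run with the real ib-register run. A wrapper can enforce that order and ask for confirmation before anything is changed on the InfiniBand switches. This is a sketch with two assumptions. The function name ib_register_reviewed is hypothetical. The EXABR variable holds the path to the exabr script and defaults to ./exabr in the current directory. Only the two ib-register invocations from the example are used.

```shell
#!/bin/sh
# Hypothetical wrapper: always runs ib-register with --dry-run first and
# performs the real registration only after the operator confirms.
EXABR="${EXABR:-./exabr}"

# ib_register_reviewed HOST [options]
# Returns 1 if the dry run fails or the operator declines.
ib_register_reviewed() {
    host="$1"
    shift
    echo "== Dry run: operations ib-register would perform for $host"
    "$EXABR" ib-register "$host" --dry-run "$@" || return 1

    printf 'Apply these changes to the InfiniBand switches? [y/N] '
    read -r answer || answer=""
    case "$answer" in
        y|Y|yes|YES) "$EXABR" ib-register "$host" "$@" ;;
        *) echo "Not applied."; return 1 ;;
    esac
}
```

Defaulting to "no" means that an accidental Enter keypress leaves the partitions unchanged.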

3.2.2.3 Reimaging and Recovering Compute Nodes in a Physical Environment

A compute node should be reimaged when it has been irretrievably damaged, or when multiple disk failures cause local disk failure. A replaced compute node should also be reimaged. During the reimaging procedure, the other compute nodes in the Exalogic rack are available. After reimaging the compute node, you can use ExaBR to recover your compute node using a backup. You should manually restore any scripting, cron jobs, maintenance actions, and other customizations performed on top of the Exalogic base image.

To reimage and recover a compute node in a physical environment, do the following:

1. This step is only required if you are replacing the entire compute node.

   a. Open a service request with Oracle Support Services.

      The support engineer will identify the failed server and send a replacement. Provide the support engineer with the output of the imagehistory and imageinfo commands from a running compute node. This output provides details about the correct image and the patch sets that were used to image and patch the original compute node, and it provides a means to restore the system to the same level.

   b. Replace the failed compute node by following the standard replacement procedure.

2. Obtain the base image and reimage the compute node:

   a. Download the Oracle Exalogic base image from Oracle Software Delivery Cloud and the patch-set updates (PSUs) from My Oracle Support.

   b. Reimage the compute node.

      The compute node can be reimaged either by using a PXE boot server or through the web-based ILOM of the compute node. This document does not cover the steps to configure a PXE boot server; however, it provides the steps to enable the compute node to use a PXE boot server.

      If a PXE boot server is being used to reimage the compute node, log in to the ILOM of the compute node through SSH, set the boot_device to pxe, and restart the compute node.

      If the web-based ILOM is being used instead, ensure that the image downloaded earlier is on the local disk of the host from which the web-based ILOM interface is being launched, and then do the following:

      i. Open a web browser and bring up the ILOM of the compute node, such as http://host-ilom.example.com/

      ii. Log in to the ILOM as the root user.

      iii. Navigate to Redirection under the Remote Control tab, and click the Launch Remote Console button. The remote console window is displayed.

           Note:

           Do not close this window until the imaging process is complete. You will need to return to this window to complete the network configuration at the end of the imaging process.

      iv. In the remote console window, click the Devices menu and select:

          - Keyboard (selected by default)
          - Mouse (selected by default)
          - CD-ROM Image

      v. In the dialog box that is displayed, navigate to and select the Linux base image iso file that you downloaded.

      vi. In the ILOM window, navigate to the Host Control tab under the Remote Control tab.

      vii. Select CD-ROM from the drop-down list, and then click Save.

      viii. In the Remote Control tab, navigate to the Remote Power Control tab.

      ix. Select Power Cycle from the drop-down list, and then click Save.

      x. Click OK to confirm that you want to power cycle the machine.

      This starts the imaging of the compute node. When the imaging is complete, the first-boot scripts prompt you to provide the network configuration.

3. Recover the compute node by performing the steps described in Section 3.2.2.1, "Recovering Compute Nodes in a Physical Environment."

4. This step is only required if you replaced the entire compute node.

   Note:

   Do not perform this step if you are restoring a repaired or existing compute node.

   a. Restore the configuration of the ILOM of the compute node by running ExaBR as follows:

      Note:

      ExaBR cannot restore the ILOM of the compute node from which it is running. To restore the ILOM of that compute node, run ExaBR on a different compute node.

      ./exabr restore hostname_of_ILOM [options]

      Example:

      ./exabr restore cn2ilom.example.com -b 201308230428

      In this example, ExaBR restores the configuration of the ILOM of the second compute node from the backup directory 201308230428.

   b. Register the new GUIDs of the replacement compute node with the same InfiniBand partitions that the compute node was previously registered to, using ExaBR as follows:

      ./exabr ib-register hostname_of_replaced_compute_node [options]

      InfiniBand port GUIDs are saved during the compute node backup. The ib-register command searches the ExaBR backup directory for the GUIDs of the replaced compute node. If no GUIDs are found, you are prompted to enter the GUIDs. This command also creates new vNICs with the GUIDs of the replacement compute node.

      Example:

      ./exabr ib-register cn2.example.com --dry-run
      ./exabr ib-register cn2.example.com

      In the first example command, the ib-register command is run with the --dry-run option, so ExaBR displays the operations that would be run without saving the changes. In the second example command, the GUIDs of the replacement compute node are registered to the InfiniBand partitions that were registered to the failed compute node, and vNICs are created with the GUIDs of the replacement compute node.

      Note:

      The ib-register command performs operations such as partition registration and vNIC registration on the InfiniBand switches. For replaced Solaris compute nodes, you should perform additional operations on the compute node, such as restarting the network interface, configuring link aggregation, and other tasks required by your system administrator.
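For step 1a above, the imageinfo and imagehistory output can be gathered into a single file to attach to the service request. This is a sketch that assumes both commands are on the PATH of the running compute node. The function name collect_image_info is hypothetical, and so is the output file you pass to it.

```shell
#!/bin/sh
# Hypothetical helper: captures imageinfo and imagehistory output from a
# running compute node into one file for the service request.

# collect_image_info OUTPUT_FILE
collect_image_info() {
    out="$1"
    if ! command -v imageinfo >/dev/null || ! command -v imagehistory >/dev/null; then
        echo "collect_image_info: imageinfo or imagehistory not found" >&2
        return 1
    fi
    {
        echo "# Host: $(uname -n)"
        echo "# Collected: $(date)"
        echo "# imageinfo"
        imageinfo
        echo
        echo "# imagehistory"
        imagehistory
    } > "$out" 2>&1
}
```

Run it on a healthy compute node that is at the same image and patch level as the failed one, for example collect_image_info /tmp/image_details.txt.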

3.2.2.4 Reimaging and Recovering Oracle VM Server Nodes in a Virtual Environment

An Oracle VM Server node (compute node in a virtual environment) should be reimaged when it has been irretrievably damaged, or when multiple disk failures cause local disk failure. A replaced Oracle VM Server node should also be reimaged. During the reimaging procedure, the other Oracle VM Server nodes in the Exalogic rack are available. You should restore any scripting, cron jobs, maintenance actions, and other customizations performed on top of the Exalogic base image.

To reimage and recover an Oracle VM Server node in a virtual environment, do the following:

1. If an Oracle VM Server node from which Exalogic Control stack vServers were running is down or powered off, you must migrate the vServers:

   a. If the Oracle VM Server node from which the Exalogic Control vServer was running is down or powered off, migrate the vServer by performing the steps in Section A.1 on a running Oracle VM Server node.

   b. If the Oracle VM Server node from which either of the Proxy Controllers was running is down or powered off, migrate the vServer by performing the steps in Section A.2 on a running Oracle VM Server node.

   c. Stop the components of the Exalogic Control stack by running ExaBR as follows:

      ./exabr stop control-stack

      This command stops the Proxy Controller 2, Proxy Controller 1, and Exalogic Control vServers, in that order.

   d. Restart the components of the Exalogic Control stack by running ExaBR as follows:

      ./exabr start control-stack

      This command starts the Exalogic Control, Proxy Controller 1, and Proxy Controller 2 vServers, in that order.

2. Remove the Oracle VM Server node from the list of assets in Exalogic Control using ExaBR as follows:

   ./exabr control-unregister hostname_of_node

3. Verify that the Oracle VM Server node and its ILOM are not displayed in the Assets accordion by logging in to Exalogic Control as a user with the Exalogic System Administrator role.

4. Verify that the Oracle VM Server node is not displayed in Oracle VM Manager by doing the following:

   a. Log in to Oracle VM Manager as the root user.
   b. Click the Servers and VMs tab.
   c. Expand Server Pools.
   d. Under Server Pools, verify that the Oracle VM Server node you removed in step 2 is not displayed.

5. If required, you can now restart all non-HA vServers using Exalogic Control. HA-enabled vServers restart automatically if an Oracle VM Server node fails.

6. Follow steps 1 to 3 from Section 3.2.2.3, "Reimaging and Recovering Compute Nodes in a Physical Environment."

   Note:

   Oracle recommends that you use the same credentials for the replacement Oracle VM Server node as those on the replaced Oracle VM Server node.

   Note:

   Oracle VM Server nodes are stateless, so you do not need to back up or restore them. If required, you can use ExaBR to back up customizations on your Oracle VM Server node by using the --include-paths option.

7. In the /var/tmp/exalogic/ecu/cnodes_current.json file, update the following for the replaced compute node:

   Note:

   To find the correct values to update cnodes_current.json, use the information in /opt/exalogic/ecu/config/cnodes_target.json.

   - The host name of the node.
   - The host name of the ILOM.
   - The IP address of the node on the IPoIB-default network.
   - The IP address of the node on the eth-admin network.

8. Configure the Oracle VM Server node by using ExaBR to run the required ECU steps as follows:

   ./exabr configure Eth_IP_Address_of_CN

   The Oracle VM Server node restarts.

9. After the Oracle VM Server node has restarted, run the configure command again to synchronize the clock on the Oracle VM Server node:

   ./exabr configure address_of_node

   Note:

   For address_of_node, use the address specified in the exabr.config file.

10. You can update the credentials for the discovery profile of the Oracle VM Server node and ILOM in Exalogic Control by doing the following:

    Note:

    Do this step only if:

    - You replaced the Oracle VM Server node.
    - You configured different credentials for the replacement Oracle VM Server node than the credentials of the replaced Oracle VM Server node.

    a. Log in to the Exalogic Control BUI.

    b. Select Credentials in the Plan Management accordion.

    c. Enter the host name of the Oracle VM Server node in the search box and click Search.

       The IPMI and SSH credential entries for the Oracle VM Server node are displayed.

    d. To update all four credentials, do the following:

       i. Select the entry for the credentials and click Edit Credentials.

          The Update Credentials dialog box is displayed.

       ii. Enter your password in the Password and Confirm Password fields.

       iii. Click Update.

       iv. Repeat steps i to iii for each entry.

Patch the compute node to the version that the other Oracle VM Server nodes are at using either the PSU or the stand-alone patch that you used previously.

-

Rediscover the Oracle VM Server node and add it to the list of assets in Exalogic Control using ExaBR as follows:

./exabr control-register hostname_of_node -

Register the new GUIDs of the Oracle VM Server node HCA with the InfiniBand partitions, that the Oracle VM Server node was previously registered to, using ExaBR as follows:

Note:

This step is only required if the Oracle VM Server node was replaced../exabr ib-register hostname_of_node [options]

InfiniBand port GUIDs are saved when the Oracle VM Server node is backed up. The ib-register command searches the ExaBR backup directory for the old GUID values. If no GUIDs are found, you are prompted to enter them.

Example:

./exabr ib-register cn2.example.com --dry-run

./exabr ib-register cn2.example.com

In the first example, the ib-register command is run with the --dry-run option, so ExaBR displays the operations that would be run without saving any changes.

In the second example, the GUIDs of the replacement Oracle VM Server node are registered to the InfiniBand partitions that the failed Oracle VM Server node was registered with.

-

This step is required only for an Exalogic rack that was upgraded from EECS 2.0.4 to EECS 2.0.6. Remove the warning icon for the Oracle VM Server node you restored by doing the following:

-

Log in to the Oracle VM Server node you restored.

-

Edit the /etc/sysconfig/o2cb file.

-

Set the O2CB_ENABLED parameter to true:

O2CB_ENABLED=true

-

Log in to Oracle VM Manager as the admin user.

-

Click the Servers and VMs tab.

-

Expand Server Pools.

-

Expand the server pool that contains the Oracle VM Server node you restored.

-

Under the server pool, click the Oracle VM Server node you restored.

-

From the Perspective drop-down box, select Events.

-

Click Acknowledge All.

-
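If you restore several Oracle VM Server nodes, you can script the O2CB_ENABLED edit from the step that removes the warning icon. The following is a minimal sketch, not an ExaBR feature. The function name enable_o2cb is hypothetical. The sketch assumes GNU sed on the Oracle VM Server node and keeps a .bak copy of the file before changing it.

```shell
# Hypothetical helper (not part of ExaBR): set O2CB_ENABLED=true in an
# o2cb sysconfig file. Defaults to the file named in the step above.
enable_o2cb() {
    local conf="${1:-/etc/sysconfig/o2cb}"
    if [ ! -f "$conf" ]; then
        echo "enable_o2cb: $conf not found" >&2
        return 1
    fi
    # Keep a copy of the original file next to it
    cp -p "$conf" "$conf.bak" || return 1
    if grep -q '^O2CB_ENABLED=' "$conf"; then
        # Rewrite the existing assignment in place (GNU sed syntax)
        sed -i 's/^O2CB_ENABLED=.*/O2CB_ENABLED=true/' "$conf"
    else
        # Append the setting if the file does not define it yet
        echo 'O2CB_ENABLED=true' >> "$conf"
    fi
}

# Usage on the restored Oracle VM Server node, as root:
#   enable_o2cb
```

You can pass a path to try the function on a copy of the file before you run it against /etc/sysconfig/o2cb.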

3.3 NM2 Gateway and 36p Switches

The InfiniBand switches are a core part of an Exalogic rack, and the configurations of all the InfiniBand switches must be backed up regularly.

This section contains the following subsections:

3.3.1 Backing Up InfiniBand Switches

When backing up the InfiniBand switches, you must back up all the switches in the InfiniBand fabric.

Note:

In virtual environments, do not back up the InfiniBand switches separately. When backing up the Exalogic Control stack, ExaBR also backs up the InfiniBand switches. To back up the InfiniBand switches in virtual environments, Oracle recommends backing up the Exalogic Control stack as described in Section 4.1, "Backing Up the Exalogic Control Stack."

ExaBR backs up the InfiniBand data in the following files:

| File | Description |
|---|---|
| switch.backup | Contains the configuration backup of the InfiniBand switch made by the built-in ILOM backup. |
| partitions.current | Backup of the InfiniBand partitions. |
| smnodes | Backup of the IP addresses of the SM nodes. |
| version.backup | Contains the firmware version number of the switch. |
| backup.info | Contains metadata of the backup of the switch. |
| checksums.md5 | Contains the md5 checksums of the backup. |
| bx.conf | Backup of the bridge configuration file. Backed up only for the NM2 gateway switches. |
| bxm.conf | Backup of the additional gateway configuration file. Backed up only for the NM2 gateway switches. |
| opensm.conf | Backup of the OpenSM configuration file. Backed up only for the NM2 gateway switches. |

For the NM2 gateway switches, the following files are backed up only for verification:

-

/conf/bx.conf

-

/conf/bxm.conf

-

/etc/opensm/opensm.conf

You can compare these files with the copies in the backup directory to verify that the switch content, such as VNICs and VLANs, was backed up correctly. ExaBR itself restores the data in these files from the switch.backup file, not from these copies.
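You can do the comparison described above with diff after copying the files from the switch. This is a minimal sketch. The function name compare_switch_file is hypothetical. The example paths assume the share/ib_gw_switches/switch/backup_directory layout that appears in the scp command in Section 3.3.2.

```shell
# Hypothetical helper (not part of ExaBR): compare a configuration file
# copied from a gateway switch with the copy saved in an ExaBR backup.
# Returns diff's status: 0 = identical, 1 = different, 2 = error.
compare_switch_file() {
    local live="$1" saved="$2"
    # diff prints a unified diff of any differences
    diff -u "$saved" "$live"
    local rc=$?
    case $rc in
        0) echo "MATCH: $live" ;;
        1) echo "DIFFERS: $live" >&2 ;;
        *) echo "ERROR comparing $live and $saved" >&2 ;;
    esac
    return $rc
}

# Example (illustrative paths):
#   scp root@ib01.example.com:/conf/bx.conf /tmp/bx.conf.live
#   compare_switch_file /tmp/bx.conf.live \
#       /mnt/exabr/ib_gw_switches/ib01.example.com/201308230428/bx.conf
```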

To back up the InfiniBand switches, do the following:

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Use ExaBR to back up the InfiniBand switches in one of the following ways:

-

To back up a specific InfiniBand switch, run ExaBR as follows:

./exabr backup hostname1[,hostname2,...] [options]

-

To back up all the InfiniBand switches, run ExaBR with the all-ib target as follows:

./exabr backup all-ib [options]

For a complete list of options, see Section 2.4.2, "ExaBR Options."

Example:

./exabr backup all-ib --noprompt

In this example, ExaBR backs up all InfiniBand switches without prompting for passwords because the --noprompt option is used. This option can be used only when key-based authentication has been enabled, as described in Section 2.3.1, "Enabling Key-Based Authentication for ExaBR." It can also be used to schedule backups, as described in Section 2.3.4, "Scheduling ExaBR Backups."

Backups must be created for all the switches in the fabric.

-

By default, the backups are stored in the /exalogic-lcdata/backups directory.

For security reasons, encrypted entries are removed from the backed-up files. These entries include the following:

-

/SP/services/servicetag/password

-

/SP/users/ilom-admin/password

-

/SP/users/ilom-operator/password
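Each switch backup includes a checksums.md5 file. If you want to re-check a backup before restoring from it, you could use a sketch like the following. It assumes that the file uses the md5sum format, with file names relative to the backup directory. Confirm this against one of your own backups first. The function name verify_backup_dir is hypothetical.

```shell
# Hypothetical helper (not part of ExaBR): re-check the files in one backup
# directory against its checksums.md5. Assumes md5sum format
# ("<hash>  <file name>") with names relative to that directory.
verify_backup_dir() {
    local dir="$1"
    if [ ! -f "$dir/checksums.md5" ]; then
        echo "verify_backup_dir: no checksums.md5 in $dir" >&2
        return 1
    fi
    # Subshell so the caller's working directory is not changed;
    # --quiet prints only the files that fail the check
    ( cd "$dir" && md5sum -c --quiet checksums.md5 )
}

# Example (the ib_gw_switches/<host>/<backup> layout is an assumption):
#   verify_backup_dir /exalogic-lcdata/backups/ib_gw_switches/ib01.example.com/201308230428
```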

3.3.2 Recovering InfiniBand Switches

ExaBR restores the configuration of the switch by using the switch's built-in SP restore mechanism. Ensure that no configuration changes, such as creating vServers or vNets, are made while the restore is in progress.

Note:

Restore only a switch that is not the SM master. To restore the switch that is currently the SM master, first relocate the SM master as follows:

-

Log in to the switch that you want to restore.

-

Relocate the SM master by running the disablesm command on the SM master.

-

Verify that the SM master has relocated to a different gateway switch by running the getmaster command.

-

Once a different gateway switch has become the master, re-enable the SM on the switch you want to restore by running the enablesm command.

-

Confirm that the switch you logged in to is not the SM master by running the getmaster command.

To recover an InfiniBand switch, do the following:

Note:

To restore the InfiniBand switch, you must run ExaBR as the root user.

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Verify the smnodes list on the switch by doing the following:

-

Log in to the InfiniBand switch you are recovering as the root user.

-

List the Subnet Manager nodes by running the following command:

# smnodes list

Note:

If the smnodes commands do not work, you may need to run the following commands:

# disablesm

# enablesm

-

If you want the switch to run Subnet Manager, ensure that the IP address of the switch is listed in the output of the smnodes list command. You can modify the list of nodes by using the following commands:

# smnodes add IP_Address_of_Switch

# smnodes delete IP_Address_of_Switch

where IP_Address_of_Switch is the IP address of the switch that you want to add to or remove from the list of Subnet Manager nodes.

Note:

On all the gateway switches running Subnet Manager, ensure that the output of the smnodes list command is identical.

-

-

View a list of backups by running ExaBR as follows:

./exabr list hostname [options]

Example:

./exabr list ib01.example.com -v

In this example, ExaBR lists the backups for ib01.example.com in detail because the -v option is used.

-

Use ExaBR to restore an InfiniBand switch as follows:

./exabr restore hostname [options]

For a complete list of options, see Section 2.4.2, "ExaBR Options."

Note:

InfiniBand traffic is temporarily disrupted during the restore.

Example:

./exabr restore ib01.example.com -b 201308230428

In this example, ExaBR restores the InfiniBand switch ib01.example.com from the backup directory 201308230428, which is specified with the -b option.

Note:

ExaBR binds to port 80 to send the configuration backup to the InfiniBand switch ILOM over HTTP. If the machine from which you run ExaBR has a firewall, you must open port 80 (HTTP) on it.

-

The InfiniBand partitions are not restored automatically. If you want to restore the partitions, do the following:

Note:

Oracle recommends that the partitions always be restored when the Exalogic rack is in a virtual environment.

-

Review the partitions.current file that ExaBR backed up to ensure that your partitions were backed up correctly.

-

Copy partitions.current to the master switch by running the following command:

scp mounted_exabr_backup/ib_gw_switches/switch_hostname/backup_directory/partitions.current ilom-admin@master_switch_hostname:/tmp

In this command, mounted_exabr_backup is the path to the share you created for ExaBR, switch_hostname is the host name of the switch you backed up, backup_directory is the directory from which you are restoring the switch, and master_switch_hostname is the host name of your master switch.

-

Log in to the master switch and copy partitions.current from the /tmp directory to the /conf directory.

-

Run the following command on the master switch to propagate the partitions and commit the change:

smpartition start && smpartition commit

-
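The scp path in the partition restore is built from four placeholders. If you want to review the whole command sequence before you run it, a helper like the following could print it. This is a sketch under assumptions. print_partition_restore is a hypothetical name. It only prints commands and does not contact the switch. The directory layout it checks is taken from the scp command above.

```shell
# Hypothetical helper (not part of ExaBR): print the commands for restoring
# partitions.current to the master switch. It only prints; nothing is
# copied or committed.
print_partition_restore() {
    local backup_root="$1" switch="$2" backup_dir="$3" master="$4"
    local src="$backup_root/ib_gw_switches/$switch/$backup_dir/partitions.current"
    if [ ! -f "$src" ]; then
        echo "print_partition_restore: $src not found" >&2
        return 1
    fi
    echo "scp $src ilom-admin@$master:/tmp"
    echo "# then, logged in to $master:"
    echo "cp /tmp/partitions.current /conf/partitions.current"
    echo "smpartition start && smpartition commit"
}

# Example:
#   print_partition_restore /mnt/exabr ib01.example.com 201308230428 ib02.example.com
```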

3.3.3 Replacing InfiniBand Switches in a Virtual Environment

When Exalogic is deployed in a virtual configuration, do the following to replace and recover a failed InfiniBand switch:

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Remove the InfiniBand switch from the list of assets in Exalogic Control using ExaBR by running the control-unregister command as follows:

./exabr control-unregister hostname_of_IB_switch

-

Replace the failed switch by following the standard replacement procedure.

Note:

Before connecting the switch to the InfiniBand fabric, disable Subnet Manager by running the disablesm command on the switch.

-

Restore the switch from a backup you created by performing all the steps in Section 3.3.2, "Recovering InfiniBand Switches."

-

Register the gateway port GUIDs with the EoIB partitions using ExaBR by running the ib-register command as follows:

./exabr ib-register hostname_of_IB_switch [options]

Example:

./exabr ib-register ib02.example.com --dry-run

./exabr ib-register ib02.example.com

In the first example, the ib-register command is run with the --dry-run option, so ExaBR displays the operations that would be run without saving any changes.

In the second example, the gateway port GUIDs of the InfiniBand switch are registered to the EoIB partitions.

-

You can update the credentials of the discovery profile for the InfiniBand switch and ILOM in Exalogic Control by doing the following:

Note:

Do this step only if:

-

You replaced the InfiniBand switch.

-

You configured credentials for the replacement switch that differ from the credentials of the replaced switch.

-

Log in to the Exalogic Control BUI.

-

Select Credentials in the Plan Management accordion.

-

Enter the host name of the InfiniBand switch in the search box and click Search.

The IPMI and SSH credential entries for the InfiniBand switch are displayed.

-

To update all four credentials, do the following:

i. Select the entry for the credentials and click Edit.

The Update Credentials dialog box is displayed.

ii. Enter the new password in the Password and Confirm Password fields.

iii. Click Update.

iv. Repeat steps i to iii for each entry.

-

-

Rediscover the switch and add it to the list of assets in Exalogic Control by running ExaBR with the control-register command as follows:

./exabr control-register hostname_of_IB_switch
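Every ExaBR command in this procedure targets the same host name, so you may find it useful to print the commands as a checklist before you start. In the following sketch, print_ib_replacement_plan is a hypothetical helper. It prints the commands and runs nothing. The management switch replacement in Section 3.4.3 uses the same control-unregister and control-register pair.

```shell
# Hypothetical helper (not part of ExaBR): print, in order, the ExaBR
# commands of this procedure for one switch. Manual steps are comments.
print_ib_replacement_plan() {
    local sw="$1"
    if [ -z "$sw" ]; then
        echo "usage: print_ib_replacement_plan hostname_of_IB_switch" >&2
        return 1
    fi
    cat <<EOF
./exabr control-unregister $sw
# Replace the switch; run disablesm on it before connecting it to the fabric.
# Restore it by performing all the steps in Section 3.3.2.
./exabr ib-register $sw --dry-run
./exabr ib-register $sw
# If the credentials changed, update them in the Exalogic Control BUI.
./exabr control-register $sw
EOF
}
```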

3.4 Management Switch

The management switch provides connectivity on the management interface and must be backed up regularly.

This section contains the following subsections:

3.4.1 Backing Up the Management Switch

ExaBR uses the built-in mechanism of the management switch to back up the configuration data. To back up the management switch, do the following:

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Use ExaBR to back up the management switch as follows:

./exabr backup hostname [options]

Example:

./exabr backup mgmt.example.com

In this example, ExaBR backs up the management switch.

Note:

The management switch supports only interactive backups. You are prompted to enter both the login password and the password for privileged mode.

By default, the backups are stored in the /exalogic-lcdata/backups directory.

By default, ExaBR connects to the management switch using the telnet protocol. You can change the connection protocol to SSH, as described in Section 2.3.2, "Configuring the Connection Protocol to the Management Switch."

ExaBR stores the backup in the following files:

| File | Description |
|---|---|
| switch.backup | Contains the backup of the management switch. |
| backup.info | Contains metadata of the backup. |
| checksums.md5 | Contains the md5 checksums of the backup. |

3.4.2 Recovering the Management Switch

To recover the management switch, do the following:

Note:

Ensure that no configuration changes are made while the restore is in progress.

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Use ExaBR to restore the management switch as follows:

./exabr restore hostname [options]

For a complete list of options, see Section 2.4.2, "ExaBR Options."

Example:

./exabr restore mgmt.example.com

In this example, ExaBR restores the management switch.

-

Verify that the switch was restored successfully.

Note:

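If the management switch does not run as expected, restart the switch to revert the restore. The restored configuration does not persist across a restart until you save it as described in the next step.

-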

If the switch is running correctly, do the following to make the restoration persist:

-

Log in to the CLI of the management switch.

-

Run the following command:

copy running-config startup-config

-

By default, ExaBR connects to the management switch using the telnet protocol. You can change the connection protocol to SSH, as described in Section 2.3.2, "Configuring the Connection Protocol to the Management Switch."

3.4.3 Replacing the Management Switch in a Virtual Environment

When Exalogic is deployed in a virtual configuration, do the following to replace a failed management switch:

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Remove the management switch from the list of assets in Exalogic Control using ExaBR by running the control-unregister command as follows:

./exabr control-unregister hostname_of_management_switch

-

Replace the failed management switch by following the standard replacement process.

-

Perform the steps in Section 3.4.2, "Recovering the Management Switch" to restore the switch from the latest backup.

-

Rediscover the switch and add it to the list of assets in Exalogic Control using ExaBR by running the control-register command as follows:

./exabr control-register hostname_of_management_switch

3.5 Storage Appliance Heads

The storage appliance in an Exalogic rack has two heads that are deployed in a clustered configuration. At any given time, one head is active and the other is passive. If a storage head fails and has to be replaced, the configuration from the surviving active node is pushed to the new storage head when clustering is performed.

3.5.1 Backing Up the Configuration of the Storage Appliance Heads

To back up the configuration of the storage appliance heads or of their ILOMs, do the following:

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Use ExaBR to back up the configuration of a storage appliance head or of its ILOM in one of the following ways:

-

To back up the configuration of a specific storage appliance head or an ILOM, run ExaBR as follows:

./exabr backup hostname1[,hostname2,...] [options]

-

To back up the configuration of all storage appliance heads and their ILOMs, run ExaBR with the all-sn target as follows:

./exabr backup all-sn [options]

For a complete list of options, see Section 2.4.2, "ExaBR Options."

Example:

./exabr backup sn01.example.com --noprompt

./exabr backup sn01ilom.example.com --noprompt

In these examples, ExaBR backs up the configuration of the first storage appliance head and the configuration of its ILOM without prompting for passwords because the --noprompt option is used. The --noprompt option can be used only when key-based authentication has been enabled, as described in Section 2.3.1, "Enabling Key-Based Authentication for ExaBR." It can also be used to schedule backups, as described in Section 2.3.4, "Scheduling ExaBR Backups."

For more information on the contents of the configuration backup taken by ExaBR for the storage appliance head, see the following link:

ExaBR stores the backup in the following files:

| File | Description |
|---|---|
| zfssa.backup | Contains the backup of the storage appliance head. |
| ilom.backup | Contains the configuration backup of the ILOM, if it was backed up. |
| checksums.md5 | Contains the md5 checksums of the backup. |
| backup.info | Contains metadata of the backup of the storage appliance head and ILOM. |

The files for the storage appliance heads and the files for the ILOMs are stored in different directories. The files for the storage appliance heads are stored in the storage_nodes directory, and the files for the ILOMs in the iloms directory, as described in Section 2.1.2, "Backup Directories Created by ExaBR."

-
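When you restore a storage appliance head, you pass a backup directory name with -b. The documented way to see the available backups is ExaBR's list command (see Section 3.3.2). If you prefer to pick the newest directory in a script, you could use the following sketch. It assumes two things: that backup directories are named with 12-digit YYYYMMDDhhmm timestamps, as in 201308230428, and that each host has its own directory under storage_nodes. Check both assumptions against your backup share before relying on the sketch. latest_backup is a hypothetical name.

```shell
# Hypothetical helper (not part of ExaBR): print the newest backup directory
# name under one host's backup directory. Assumes 12-digit YYYYMMDDhhmm
# names (like 201308230428), so sorting the names also sorts them by time.
latest_backup() {
    local host_dir="$1" latest
    latest=$(ls -1 "$host_dir" 2>/dev/null | grep -E '^[0-9]{12}$' | sort | tail -n 1)
    if [ -z "$latest" ]; then
        echo "latest_backup: no timestamped backups in $host_dir" >&2
        return 1
    fi
    echo "$latest"
}

# Example (the storage_nodes/<host> path is an assumption):
#   ./exabr restore sn01.example.com \
#       -b "$(latest_backup /exalogic-lcdata/backups/storage_nodes/sn01.example.com)"
```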

3.5.2 Recovering the Configuration of Storage Appliance Heads

To recover the configuration of the storage appliance head, do the following:

Note:

The restore process takes several minutes to complete and disrupts service to clients while the active networking configuration and data protocols are reconfigured. Perform a configuration restore only on a development system or during scheduled downtime.

-

Perform the tasks described in Section 2.2, "Preparing to Use ExaBR."

-

Identify the active and passive nodes by doing the following:

-

Log in to the storage appliance BUI.

-

Click the Configuration tab.

The Configuration page is displayed.

-

Click Cluster.

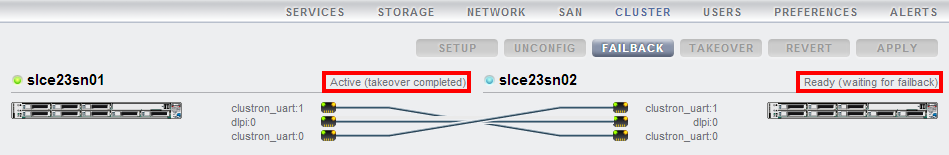

The Cluster page is displayed as shown in Figure 3-1.

-

The node described as Active (takeover completed) is the active node, and the node described as Ready (waiting for failback) is the passive node. In this example, slce23sn01 is the active node and slce23sn02 is the passive node.

-

-

Perform a factory reset of the passive node (slce23sn02) by doing the following:

-

Log in to the storage appliance BUI of the passive node (slce23sn02) as the root user.

-

Click the Maintenance tab.

The Hardware page is displayed.

-

Click System.

The System page is displayed.

-

Click the FACTORY RESET button.

The storage node restarts.

-

-

Before the passive node (slce23sn02) restarts completely, you must uncluster the active node (slce23sn01) by doing the following:

-

Log in to the storage appliance BUI of the active node (slce23sn01) as the root user.

-

Click the Configuration tab.

The Services page is displayed.

-

Click Cluster.

The Cluster page is displayed.

-

Click the UNCONFIG button.

-

Wait for the passive node (slce23sn02) to restart. To check if the passive node has restarted, log in to the ILOM of the passive node.

-

-

Restore the active node (slce23sn01), because it owns all the resources, by running ExaBR as follows:

./exabr restore hostname1 [options]

For a complete list of options, see Section 2.4.2, "ExaBR Options."

Example:

./exabr restore slce23sn01.example.com -b 201308230428

In this example, ExaBR restores the active storage node, slce23sn01.example.com, from the backup directory 201308230428.

-

After the restoration is successful, reconfigure the clustering by doing the following:

-

For X2-2 and X3-2 racks:

a. Log in as root to the storage appliance BUI of the active node (slce23sn01).

b. Click the Configuration tab.

The Services page is displayed.

c. Click Cluster.

The Cluster page is displayed.

d. Click the SETUP button.

f. In the host name field, enter the host name of the passive node (slce23sn02).

g. In the password field, enter the password of the passive node (slce23sn02).

i. Click the COMMIT button.

The passive node joins the cluster and copies the configuration from the restored active node.

-

For X4-2 and later racks:

a. Log in as root to the storage appliance BUI of the active node (slce23sn01).

b. Click the Configuration tab.

The Services page is displayed.

c. Click Cluster.

The Cluster page is displayed.

d. Click the SETUP button.

e. Click the COMMIT button.

f. In the Appliance Name field, enter the host name of the passive node (slce23sn02).

g. In the Root Password field, enter the password of the passive node (slce23sn02).

h. In the Confirm Password field, enter the password of the passive node (slce23sn02).

i. Click the COMMIT button.

The passive node should join the cluster and copy the configuration from the restored active node.

-

-

Configure access to the Ethernet management network for the storage node you restored (the active node slce23sn01) by doing the following:

-

For X2-2 and X3-2 racks:

a. SSH to the active node as the root user.

b. Destroy the interface of the storage node you restored. In this example, destroy the igb1 interface because you reset the second storage node (slce23sn02). If you reset the slce23sn01 storage node, you must destroy the igb0 interface.

configuration net interfaces destroy igb1

c. Re-create the interface you destroyed in the previous step and the route of the node you restored (the active node slce23sn01) by running the following commands:

configuration net interfaces ip
set v4addrs=<IP address of passive node (slce23sn02) in CIDR notation>
set links=igb1
set label=igb1
commit
configuration net routing create
set family=IPv4
set destination=0.0.0.0
set mask=0
set interface=igb1
set gateway=<gateway ip>
commit

d. Change the ownership of the igb1 resource by running the following commands:

configuration cluster resources select net/igb1
set owner=<inactive head host name>
commit
commit
The changes have been committed. Would you also like to fail back? (Y/N) N

-

For X4-2 and later racks:

a. SSH to the active node as the root user.

b. Destroy the interface of the storage node you restored. In this example, destroy the ixgbe1 interface because you reset the second storage node (slce23sn02). If you reset the slce23sn01 storage node, you must destroy the ixgbe0 interface.

configuration net interfaces destroy ixgbe1

c. Re-create the interface you destroyed in the previous step and the route of the node you restored (the active node slce23sn01) by running the following commands:

configuration net interfaces ip
set v4addrs=<IP address of passive node (slce23sn02) in CIDR notation>
set links=ixgbe1
set label=ixgbe1
commit
configuration net routing create
set family=IPv4
set destination=0.0.0.0
set mask=0
set interface=ixgbe1
set gateway=<gateway ip>
commit

d. Change the ownership of the ixgbe1 resource by running the following commands:

configuration cluster resources select net/ixgbe1
set owner=<inactive head host name>
commit
commit
The changes have been committed. Would you also like to fail back? (Y/N) N

-

-

Perform a takeover to make the passive node (slce23sn02) the active node by doing the following:

-

Log in to the ILOM of the passive node (slce23sn02) as the root user.

-

Run the following command to start the console:

start /SP/console

-

Log in to the passive node (slce23sn02) as the root user.

-

Perform a takeover to make the node active:

configuration cluster takeover

The active node (slce23sn01) restarts and becomes the passive node.

-

Wait for the formerly active node (slce23sn01) to start up.

-

-

Set the resource you re-created to private by doing the following:

-

For X2-2 and X3-2 racks:

a. Log in to the new active node (slce23sn02) as the root user.

b. Run the following commands:

configuration cluster resources select net/igb1
set type=private
commit
commit
The changes have been committed. Would you also like to fail back? (Y/N) N

-

For X4-2 and later racks:

a. Log in to the new active node (slce23sn02) as the root user.

b. Run the following commands:

configuration cluster resources select net/ixgbe1
set type=private
commit
commit
The changes have been committed. Would you also like to fail back? (Y/N) N

-
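The interface name in the appliance CLI commands for the Ethernet management network depends on the rack model and on which storage node you reset. A mistyped name changes the wrong interface. The following sketch prints the command sequence from those steps so that you can review it before entering it at the appliance CLI. Note the following:

- print_mgmt_if_commands is a hypothetical helper.
- Treating every model that matches X[4-9]-2 as "X4-2 and later" is an assumption.
- The final commit still asks whether to fail back; answer N.

```shell
# Hypothetical helper (not part of ExaBR or the appliance): print the
# appliance CLI commands that re-create the management interface.
#   $1 rack model, for example X3-2 or X4-2
#   $2 interface number: 1 if you reset slce23sn02, 0 if you reset slce23sn01
#   $3 IP address of the passive node in CIDR notation
#   $4 gateway IP address
#   $5 host name of the inactive head
print_mgmt_if_commands() {
    local rack="$1" unit="$2" cidr="$3" gw="$4" owner="$5" ifname
    case "$unit" in
        0|1) ;;
        *) echo "print_mgmt_if_commands: interface number must be 0 or 1" >&2; return 1 ;;
    esac
    case "$rack" in
        X2-2|X3-2) ifname="igb$unit" ;;
        X[4-9]-2)  ifname="ixgbe$unit" ;;   # assumption: "X4-2 and later"
        *) echo "print_mgmt_if_commands: unknown rack model $rack" >&2; return 1 ;;
    esac
    cat <<EOF
configuration net interfaces destroy $ifname
configuration net interfaces ip
set v4addrs=$cidr
set links=$ifname
set label=$ifname
commit
configuration net routing create
set family=IPv4
set destination=0.0.0.0
set mask=0
set interface=$ifname
set gateway=$gw
commit
configuration cluster resources select net/$ifname
set owner=$owner
commit
commit
EOF
}
```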