
Oracle Java CAPS Master Data Management Suite Primer Java CAPS Documentation |

Oracle Java CAPS Master Data Management Suite Primer

About the Oracle Java CAPS Master Data Management Suite

Java CAPS MDM Suite Architecture

Master Data Management Components

Java CAPS Data Quality and Load Tools

Java CAPS MDM Integration and Infrastructure Components

Oracle Java CAPS Enterprise Service Bus

Oracle Java CAPS Business Process Manager

Oracle Java System Access Manager

Oracle Directory Server Enterprise Edition

Oracle Java System Portal Server

NetBeans Integrated Development Environment (IDE)

Java CAPS Master Data Management Process

About the Standardization and Matching Process

Java CAPS Master Index Overview

Java CAPS Master Index Features

Java CAPS Master Index Architecture

Master Index Design and Development Phase

Data Monitoring and Maintenance

Java CAPS Data Integrator Overview

Java CAPS Data Integrator Features

Java CAPS Data Integrator Architecture

Java CAPS Data Integrator Development Phase

Java CAPS Data Integrator Runtime Phase

Java CAPS Data Quality and Load Tools

Master Index Standardization Engine

Master Index Standardization Engine Configuration

Master Index Standardization Engine Features

Master Index Match Engine Matching Weight Formulation

Master Index Match Engine Features

Data Cleanser and Data Profiler

Data Cleanser and Data Profiler Features

Initial Bulk Match and Load Tool

Initial Bulk Match and Load Process Overview

The Java CAPS MDM Suite includes several data quality tools that provide capabilities to analyze, cleanse, standardize, and match data from multiple sources. The cleansed data can then be loaded into the central database using the high-performance Bulk Loader. Used with the master index application, these tools help ensure that the legacy data that is loaded into a master index database at the start of the MDM implementation is cleansed, deduplicated, and in a standard format. Once the MDM solution is in production, the tools provide continuous cleansing, deduplication, and standardization so your reference data always provides the single best view.

The Master Index Standardization Engine parses, normalizes, and phonetically encodes data for external applications, such as master index applications. Before records can be compared to evaluate the possibility of a match, the data must be normalized and in certain cases parsed or phonetically encoded. Once the data is conditioned, the match engine can determine a match weight for the records. The standardization engine is built on a flexible framework that allows you to customize the standardization process and extend standardization rules.

Data standardization transforms input data into common representations of values to give you a single, consistent view of the data stored in and across organizations. This common representation allows you to easily and accurately compare data between systems.

Data standardization applies three transformations to the data: parsing into individual components, normalization, and phonetic encoding. These actions help cleanse data to prepare it for matching and searching. Some fields might require all three steps, some just normalization and phonetic encoding, and others might only need phonetic encoding. Typically, data is first parsed, then normalized, and then phonetically encoded, though some cleansing might be needed prior to parsing.

A common use of normalization is for first names. Nicknames need to be converted to their common names in order to make an accurate match; for example, converting “Beth” and “Liz” to “Elizabeth”. Street addresses are an example of data that needs to be parsed into individual components before matching. For example, the string “800 W. Royal Oaks Boulevard” would be parsed as follows:

Street Number: 800

Street Name: Royal Oaks

Street Type: Boulevard

Street Direction: W.

Once parsed, the data can then be normalized so it is in a common form. For example, “W.” might be converted to “West” and “Boulevard” to “Blvd” so that these values are consistent across all addresses.
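The parse-then-normalize sequence for the address above can be sketched in Java. This is a minimal illustration, not the standardization engine's API: the class `AddressSketch`, its component keys, and the tiny direction and street-type lookup tables are hypothetical stand-ins for the engine's configurable standardization files, and the parser assumes a simple "number, optional direction, name, optional type" layout.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

/** Hypothetical sketch: parse a simple street address, then normalize its parts. */
final class AddressSketch {
    // Tiny stand-in lookup tables (upper-case raw token -> normalized value).
    private static final Map<String, String> DIRECTIONS = Map.of(
            "N", "North", "N.", "North", "S", "South", "S.", "South",
            "E", "East", "E.", "East", "W", "West", "W.", "West");
    private static final Map<String, String> STREET_TYPES = Map.of(
            "BOULEVARD", "Blvd", "BLVD", "Blvd", "STREET", "St", "ST", "St",
            "AVENUE", "Ave", "AVE", "Ave");

    private static String key(String token) {
        return token.toUpperCase(Locale.ROOT);
    }

    /** Step 1: split the raw string into components without changing their values. */
    static Map<String, String> parse(String address) {
        String[] tokens = address.trim().split("\\s+");
        Map<String, String> parts = new LinkedHashMap<>();
        int start = 0;
        int end = tokens.length;
        if (start < end && tokens[start].matches("\\d+")) {
            parts.put("StreetNumber", tokens[start++]);      // leading digits
        }
        if (start < end && DIRECTIONS.containsKey(key(tokens[start]))) {
            parts.put("StreetDirection", tokens[start++]);   // optional pre-direction
        }
        if (start < end && STREET_TYPES.containsKey(key(tokens[end - 1]))) {
            parts.put("StreetType", tokens[--end]);          // optional trailing type
        }
        if (start < end) {
            // Whatever remains in the middle is treated as the street name.
            parts.put("StreetName", String.join(" ", Arrays.asList(tokens).subList(start, end)));
        }
        return parts;
    }

    /** Step 2: map parsed components to their common (normalized) forms. */
    static Map<String, String> normalize(Map<String, String> parts) {
        Map<String, String> out = new LinkedHashMap<>(parts);
        out.computeIfPresent("StreetDirection", (k, v) -> DIRECTIONS.getOrDefault(key(v), v));
        out.computeIfPresent("StreetType", (k, v) -> STREET_TYPES.getOrDefault(key(v), v));
        return out;
    }
}
```

`AddressSketch.normalize(AddressSketch.parse("800 W. Royal Oaks Boulevard"))` yields the street number `800`, direction `West`, name `Royal Oaks`, and type `Blvd`. Keeping parsing and normalization as separate steps mirrors the order described above, so the raw components remain available if a later step needs them.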

Phonetic encoding compensates for typos and input errors when searching for data, because similar-sounding values are encoded to the same code. Several different phonetic encoders are supported, but the two most commonly used are NYSIIS and Soundex.
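To show how phonetic encoding absorbs spelling variation, here is a sketch of the classic American Soundex algorithm in Java. It is a generic textbook implementation written for illustration (the class name `Soundex` is hypothetical), not the encoder shipped with the standardization engine, and it does not cover NYSIIS.

```java
import java.util.Locale;

/** Hypothetical sketch of classic American Soundex (letter + three digits). */
final class Soundex {
    // Digit code for each letter A..Z. '0' marks vowels and Y, which separate
    // codes; '-' marks H and W, which are skipped without separating codes.
    private static final String CODES = "0123012-02245501262301-202";

    static String encode(String name) {
        StringBuilder letters = new StringBuilder();
        for (char c : name.toUpperCase(Locale.ROOT).toCharArray()) {
            if (c >= 'A' && c <= 'Z') {
                letters.append(c);                 // ignore spaces, hyphens, apostrophes
            }
        }
        if (letters.length() == 0) {
            return "";
        }
        StringBuilder out = new StringBuilder().append(letters.charAt(0));
        char last = CODES.charAt(letters.charAt(0) - 'A');   // first letter's code still blocks repeats
        for (int i = 1; i < letters.length() && out.length() < 4; i++) {
            char code = CODES.charAt(letters.charAt(i) - 'A');
            if (code == '-') {
                continue;                          // H/W: keep 'last' so equal codes merge across them
            }
            if (code != '0' && code != last) {
                out.append(code);                  // a new consonant sound
            }
            last = code;                           // a vowel resets 'last', so a repeat after it counts
        }
        while (out.length() < 4) {
            out.append('0');                       // pad short codes, e.g. "Lee" -> L000
        }
        return out.toString();
    }
}
```

`Soundex.encode("Robert")` and `Soundex.encode("Rupert")` both return `R163`, so a search on either spelling finds the other.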

The Master Index Standardization Engine uses two frameworks to define standardization logic. One framework is based on a finite state machine (FSM) model and the other is based on rules programmed in Java. In the current implementation, person names and telephone numbers are processed using the FSM framework, and addresses and business names are processed using the rules-based framework. Both frameworks can be customized as needed.

A finite state machine (FSM) is composed of one or more states and the transitions between those states. In this case, a state is a value within a text field, such as a street address, that needs to be parsed from the text. The Master Index Standardization Engine FSM framework is designed to be highly configurable and can be easily extended. Standardization is defined using a simple markup language and no Java coding is required.
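The engine's FSM framework is configured through markup rather than Java code, but the state-and-transition idea can be illustrated with a hand-written machine. In this hypothetical `PhoneFsm` sketch, each state names the telephone-number component currently being read (area code, exchange, line number); digits are consumed into the current component, a transition fires when the component reaches its expected length, and separators such as parentheses, spaces, and hyphens are skipped. The ten-digit North American layout is an assumption made for the example.

```java
import java.util.EnumMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical hand-written FSM that splits a 10-digit phone number. */
final class PhoneFsm {
    /** Each state is the component being read; the number is its digit count. */
    enum State {
        AREA_CODE(3), EXCHANGE(3), LINE_NUMBER(4), DONE(0);

        final int length;

        State(int length) {
            this.length = length;
        }

        State next() {
            return values()[ordinal() + 1];      // never called on DONE
        }
    }

    static Optional<Map<State, String>> parse(String input) {
        Map<State, String> fields = new EnumMap<>(State.class);
        State state = State.AREA_CODE;
        StringBuilder current = new StringBuilder();
        for (char c : input.toCharArray()) {
            if (c < '0' || c > '9') {
                continue;                         // separators cause no transition
            }
            if (state == State.DONE) {
                return Optional.empty();          // more digits than the layout allows
            }
            current.append(c);
            if (current.length() == state.length) {
                fields.put(state, current.toString());   // component complete
                current.setLength(0);
                state = state.next();                    // transition to the next state
            }
        }
        return state == State.DONE ? Optional.of(fields) : Optional.empty();
    }
}
```

For `"(310) 555-0199"` the machine ends in `DONE` with `310`, `555`, and `0199`; input with too few or too many digits never finishes cleanly in `DONE` and is rejected.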

The Master Index Standardization Engine rules-based framework defines the standardization process for addresses and business names in Java classes. This framework can be extended by creating additional Java packages to define processing.

Both frameworks rely on sets of text files that help identify field values and determine how to parse and normalize them.
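A lookup table of this kind can be as simple as variant-to-standard pairs read from a text file. The sketch below assumes a hypothetical one-entry-per-line `VARIANT|STANDARD` format with `#` comment lines; it is not the engine's actual file format, and it reads from a list of lines so that the example stays self-contained. It performs the nickname normalization described earlier, mapping "Beth" and "Liz" to "Elizabeth".

```java
import java.util.HashMap;
import java.util.List;
import java.util.Locale;
import java.util.Map;

/** Hypothetical normalization table loaded from "VARIANT|STANDARD" lines. */
final class NormalizationTable {
    private final Map<String, String> entries = new HashMap<>();

    /** Parses lines such as "BETH|Elizabeth"; blank lines and '#' comments are skipped. */
    static NormalizationTable fromLines(List<String> lines) {
        NormalizationTable table = new NormalizationTable();
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;
            }
            String[] cols = trimmed.split("\\|", -1);
            if (cols.length != 2) {
                throw new IllegalArgumentException("Malformed entry: " + line);
            }
            // Keys are stored upper-case so lookups are case-insensitive.
            table.entries.put(cols[0].trim().toUpperCase(Locale.ROOT), cols[1].trim());
        }
        return table;
    }

    /** Returns the standard form, or the original value if the table has no entry. */
    String normalize(String value) {
        return entries.getOrDefault(value.trim().toUpperCase(Locale.ROOT), value);
    }
}
```

In practice the lines could come from `java.nio.file.Files.readAllLines(path)`. Values without an entry are returned unchanged, so names already in standard form are left alone.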

The Master Index Standardization Engine provides proven and extensive standardization capabilities to the Java CAPS MDM Suite, and includes the following features:

Works with Java CAPS Master Index applications and can also be called from other applications, such as Data Integrator, web services, web applications, and so on.

Uses standardization algorithms based on research at the U.S. Census Bureau, Statistical Research Division (SRD).

Is highly configurable and can be used to standardize various types of data.

Supports data sets specific to Australia, France, Great Britain, and the United States by default, and can be extended to support additional locales.

Processes data using one of the defined locales or using multiple locales.

Supports a variety of data types, including addresses, person names, businesses, and telephone numbers. Additional data types can be easily added.

Provides comprehensive person name normalization tables for the four default locales.

Uses a probability-based mechanism to resolve ambiguity during processing.

Allows you to apply cleansing rules prior to the standardization processing.

Performs preprocessing, matching, and postprocessing during the parsing process based on customizable rules.

Is highly configurable and the standardization and matching logic can be adapted to specific needs. New data types or variants can be created for even more customized processing.

Supports pluggable standardization sets, so you can define custom standardization processing for most types of data.

The Master Index Match Engine provides record matching capabilities for external applications, including Java CAPS Master Index applications. It works best along with the Master Index Standardization Engine, which provides the preprocessing of data that is required for accurate matching. The match engine compares records containing similar data types by calculating how closely certain fields in the records match. A weight is generated for each field and the sum of those weights (the composite weight) is either a positive or negative numeric value that represents the degree to which the two sets of data are similar. The composite weight could also be a function of the match field weights. The match engine relies on probabilistic algorithms to compare data using a comparison function specific to the type of data being compared. The composite weight indicates how closely two records match.
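The summing of field weights into a composite weight can be sketched as follows. The sketch assumes the simplest possible comparison (exact string equality) and directly specified agreement and disagreement weights per field; `CompositeWeight`, `MatchField`, and the choice to give a missing value a weight of 0 are hypothetical and do not reflect how the Master Index Match Engine handles comparison or missing data. It returns both the field-level weights and their sum.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch: field weights from exact comparison, summed into a composite. */
final class CompositeWeight {
    /** A match field with directly specified agreement/disagreement weights. */
    record MatchField(String name, double agreementWeight, double disagreementWeight) {}

    /** Field-level weights (in match-string order) plus their sum. */
    record Result(Map<String, Double> fieldWeights, double composite) {}

    static Result score(List<MatchField> fields, Map<String, String> a, Map<String, String> b) {
        Map<String, Double> weights = new LinkedHashMap<>();
        double composite = 0.0;
        for (MatchField f : fields) {
            String va = a.get(f.name());
            String vb = b.get(f.name());
            double w;
            if (va == null || vb == null) {
                w = 0.0;                          // assumption: a missing value is neutral
            } else if (va.equals(vb)) {
                w = f.agreementWeight();          // fields agree
            } else {
                w = f.disagreementWeight();       // fields disagree
            }
            weights.put(f.name(), w);
            composite += w;
        }
        return new Result(weights, composite);
    }
}
```

With weights of 8/-4 for first name, 10/-5 for last name, and 12/-6 for date of birth, two records that differ only in last name (SMITH versus SMYTH) score 8 - 5 + 12 = 15. Exact equality gives SMYTH the full disagreement weight; a type-specific comparison function would typically give such a near-match partial credit.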

The Master Index Match Engine determines the matching weight between two records by comparing the match string fields between the two records using the defined rules and taking into account the matching logic specified for each field. The match engine can use either matching (m) and unmatching (u) conditional probabilities or agreement and disagreement weight ranges to fine-tune the match process.

When m-probabilities and u-probabilities are used, logarithmic functions of these probabilities determine the maximum agreement and minimum disagreement weights for a field. When agreement and disagreement weights are used instead, the maximum agreement and minimum disagreement weights are specified directly as integers.
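The text does not spell out the logarithmic functions, so the sketch below uses the standard Fellegi-Sunter formulation as an assumption: with m the probability that a field agrees when two records truly match and u the probability that it agrees when they do not, the agreement weight is log2(m/u) and the disagreement weight is log2((1 - m)/(1 - u)). The base-2 logarithm and the `MuWeights` class are illustrative choices, not confirmed details of the Master Index Match Engine.

```java
/** Hypothetical sketch of Fellegi-Sunter style field weights from m and u. */
final class MuWeights {
    /** Weight added when the field agrees: log2(m / u). */
    static double agreementWeight(double m, double u) {
        check(m, u);
        return log2(m / u);
    }

    /** Weight added when the field disagrees: log2((1 - m) / (1 - u)), negative whenever m > u. */
    static double disagreementWeight(double m, double u) {
        check(m, u);
        return log2((1 - m) / (1 - u));
    }

    private static double log2(double x) {
        return Math.log(x) / Math.log(2);
    }

    private static void check(double m, double u) {
        // Both probabilities must be strictly between 0 and 1 for the logs to be finite.
        if (!(m > 0 && m < 1 && u > 0 && u < 1)) {
            throw new IllegalArgumentException("m and u must lie strictly between 0 and 1");
        }
    }
}
```

For m = 0.8 and u = 0.2 this gives an agreement weight of 2 and a disagreement weight of -2. A field that rarely agrees by chance (small u) earns a larger agreement weight.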

The Master Index Match Engine uses a proven algorithm to arrive at a match weight for each match string field. It offers a comprehensive array of comparison functions that can be configured and used on different types of data. Additional comparison functions can be defined and incorporated into the match engine. The match engine allows you to incorporate weight curves, validations, class dependencies, and even external data files into the configuration of a comparison function.
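A pluggable comparison function and a weight curve can be illustrated together. In this hypothetical sketch, a `ComparisonFunction` returns a similarity between 0 and 1, and a linear curve maps that similarity onto the range between the disagreement and agreement weights, so near-agreement earns partial credit instead of the full disagreement weight. The numeric comparator, the linear shape of the curve, and every name here are assumptions for illustration; the match engine's actual comparison functions and weight curves are set up through its own configuration, not through this interface.

```java
/** Hypothetical sketch of a pluggable comparison function with a linear weight curve. */
final class WeightCurveSketch {
    /** Plug-in point: returns a similarity between 0 (different) and 1 (identical). */
    interface ComparisonFunction {
        double similarity(String a, String b);
    }

    /** Numeric comparator: similarity falls linearly from 1 to 0 as the difference reaches maxDiff. */
    static ComparisonFunction numeric(double maxDiff) {
        if (maxDiff <= 0) {
            throw new IllegalArgumentException("maxDiff must be positive");
        }
        return (a, b) -> {
            double diff = Math.abs(Double.parseDouble(a) - Double.parseDouble(b));
            return Math.max(0.0, 1.0 - diff / maxDiff);
        };
    }

    /** Linear weight curve: similarity 0 -> disagreement weight, 1 -> agreement weight. */
    static double weight(ComparisonFunction fn, String a, String b, double agree, double disagree) {
        double s = Math.min(1.0, Math.max(0.0, fn.similarity(a, b)));   // clamp to [0, 1]
        return disagree + s * (agree - disagree);
    }
}
```

With agreement and disagreement weights of 6 and -4 and a tolerance of 10, the values 100 and 105 have a similarity of 0.5 and receive a weight of 1.0, while 100 and 130 receive the full disagreement weight of -4.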

The Master Index Match Engine provides comprehensive record matching capabilities to the Java CAPS MDM Suite using trusted and proven methodologies. It includes the following features:

Uses proven matching methodologies based on research at the U.S. Census Bureau, SRD, and customized for stable, reliable, and high-speed matching.

Uses a combination of probabilistic and deterministic matching.

Is very flexible and generic, allowing you to customize existing matching rules and to define custom rules as needed.

Provides a comprehensive array of customizable comparators for matching on various types of fields, such as numbers, dates, single characters, and so on. Includes more specialized comparison functions for searching on specific types of data, such as person names, address fields, social security numbers, and genders.

Supports the creation of custom comparison functions to enable matching against any type of data. This is possible because the match engine is built on a pluggable architecture.

Supports a variety of configurations for comparison functions, including validations, weighting curves, class dependencies, and the ability to access a data file that provides additional information on how to match data.

Allows you to create new validation and configuration rules.

Provides a simple and clear method to incorporate customizations into Java CAPS Master Index applications.

Matches on free-form fields by using the features of the Master Index Standardization Engine prior to matching.

Outputs the composite match weight as well as the individual, field-level match weights.

Data analysis and cleansing are essential first steps towards managing the quality of data in a master index system. Performing these processes early in the MDM project helps ensure the success of the project and can eliminate surprises down the road. The Data Cleanser and Data Profiler tools, which are generated directly from the Java CAPS Master Index application, provide the ability to analyze, profile, cleanse, and standardize legacy data before loading it into a master index database. The tools use the object definition and configuration of the master index application to validate, transform, and standardize data.

The Data Profiler examines existing data and gives statistics and information about the data, providing metrics on the quality of your data to help determine the risks and challenges of data integration. The Data Cleanser detects and corrects invalid or inaccurate records based on rules you define, and also standardizes the data to provide a clean and consistent data set. Together, these tools help ensure that the data you load into the master index database is standard across all records, that it does not contain invalid values, and that it is formatted correctly.

The Data Profiler analyzes the frequency of data values and patterns in your existing data based on rules you specify. Rules are defined using a rules definition language (RDL) in XML format. The RDL provides a flexible and extensible framework that makes defining rules an easy and straightforward process. A comprehensive set of rules is provided, and you can define custom rules to use as well.

You can use the Data Profiler to perform an initial analysis of existing data to determine which fields contain invalid values or formats; the results of the analysis highlight values that need to be validated or modified during the cleansing process. For example, if you find several dates in an incorrect format, you can reformat the dates during cleansing.
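In the Data Cleanser, such reformatting is expressed as rules in the RDL; the Java sketch below only illustrates the effect. It tries a few hypothetical input patterns with strict calendar validation and rewrites any date it can parse into a single target pattern (`uuuuMMdd`, chosen here as an assumption). Dates that match no pattern, or that do not exist (such as 02/30/2000), come back empty so that they can be reported as failures.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.time.format.ResolverStyle;
import java.util.List;
import java.util.Optional;

/** Hypothetical sketch of normalizing dates from several input formats to one. */
final class DateCleanser {
    // Accepted input patterns (assumptions). 'uuuu' plus STRICT rejects impossible dates.
    private static final List<DateTimeFormatter> INPUTS = List.of(
            DateTimeFormatter.ofPattern("MM/dd/uuuu").withResolverStyle(ResolverStyle.STRICT),
            DateTimeFormatter.ofPattern("uuuu-MM-dd").withResolverStyle(ResolverStyle.STRICT),
            DateTimeFormatter.ofPattern("dd.MM.uuuu").withResolverStyle(ResolverStyle.STRICT));

    // Single target format for the cleansed file (an assumption).
    private static final DateTimeFormatter OUTPUT = DateTimeFormatter.ofPattern("uuuuMMdd");

    static Optional<String> reformat(String raw) {
        for (DateTimeFormatter f : INPUTS) {
            try {
                return Optional.of(LocalDate.parse(raw.trim(), f).format(OUTPUT));
            } catch (DateTimeParseException e) {
                // Not this format (or not a real date); try the next pattern.
            }
        }
        return Optional.empty();
    }
}
```

`DateCleanser.reformat("12/31/1999")` and `DateCleanser.reformat("31.12.1999")` both return `19991231`. Note that a value such as 01/02/2000 is read as January 2 because the month-first pattern is tried first; the order of the patterns is itself a cleansing decision.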

After the data is cleansed using the Data Cleanser, you can perform a final data analysis to verify the blocking definitions for the blocking query used in the master index match process. This analysis indicates whether the data blocks defined for the query are too wide or narrow in scope, which would result in an unreliable set of records being returned for a match search. This analysis can also show how reliably a specific field indicates a match between two records, which affects how much relative weight should be given to each field in the match string.
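One way to see whether blocks are too wide or too narrow is to count how many records fall into each block and how many record pairs the matcher would then compare. The sketch below does that for an arbitrary blocking key function; `BlockingAnalysis` and the two-letter key in the usage note are hypothetical, and the real blocking query is defined in the master index application's configuration.

```java
import java.util.Collection;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical sketch: block sizes and candidate-pair counts for a blocking key. */
final class BlockingAnalysis {
    /** Groups records by blocking key and returns the number of records per block. */
    static <R> Map<String, Integer> blockSizes(Collection<R> records, Function<R, String> blockingKey) {
        Map<String, Integer> sizes = new HashMap<>();
        for (R r : records) {
            sizes.merge(blockingKey.apply(r), 1, Integer::sum);
        }
        return sizes;
    }

    /** Number of distinct pairs among n records: n(n-1)/2. */
    static long pairs(long n) {
        return n * (n - 1) / 2;
    }

    /** Pairs the matcher compares when it only matches within blocks. */
    static long candidatePairs(Map<String, Integer> sizes) {
        long total = 0;
        for (int n : sizes.values()) {
            total += pairs(n);
        }
        return total;
    }
}
```

Six records with the last names SMITH, SMITH, SMYTH, JONES, JONES, and JONES, blocked on their first two letters, form two blocks of three and need 6 candidate comparisons instead of the 15 required without blocking. One very large block inflates the comparison count, while many single-record blocks can mean that likely matches never get compared.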

The Data Profiler performs three types of analysis and outputs a report for each field being profiled. A simple frequency analysis provides a count of each value in the specified fields. A constrained frequency analysis provides a count of each value in the specified fields based on the validation rules you define. A pattern frequency analysis provides a count of the patterns found in the specified fields.
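The three analyses can be approximated in a few lines of Java. This is an illustration rather than the Data Profiler's implementation: the class `ProfilerSketch` is hypothetical, constrained frequency is read here as counting only the values that satisfy a validation rule, and the pattern notation (digits as `9`, letters as `A`, other characters kept) is an assumed convention.

```java
import java.util.Collection;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Predicate;

/** Hypothetical sketch of simple, constrained, and pattern frequency analysis. */
final class ProfilerSketch {
    /** Simple frequency: how often each value occurs. */
    static Map<String, Integer> simpleFrequency(Collection<String> values) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String v : values) {
            counts.merge(v, 1, Integer::sum);
        }
        return counts;
    }

    /** Constrained frequency: counts only the values that satisfy a validation rule. */
    static Map<String, Integer> constrainedFrequency(Collection<String> values, Predicate<String> rule) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String v : values) {
            if (rule.test(v)) {
                counts.merge(v, 1, Integer::sum);
            }
        }
        return counts;
    }

    /** Pattern frequency: counts value shapes rather than the values themselves. */
    static Map<String, Integer> patternFrequency(Collection<String> values) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String v : values) {
            counts.merge(pattern(v), 1, Integer::sum);
        }
        return counts;
    }

    /** Digits become '9', letters become 'A', other characters are kept as-is. */
    static String pattern(String v) {
        StringBuilder sb = new StringBuilder(v.length());
        for (char c : v.toCharArray()) {
            if (Character.isDigit(c)) {
                sb.append('9');
            } else if (Character.isLetter(c)) {
                sb.append('A');
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }
}
```

For the ZIP code values `90210`, `90210`, `9021O` (ending in the letter O), and `10001-1234`, the pattern frequency report contains `99999` twice and `9999A` and `99999-9999` once each, which exposes the mistyped value immediately.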

The Data Cleanser validates and modifies data based on rules you specify. Rules are defined using the same RDL as the Data Profiler, which provides a flexible framework for defining and creating cleansing rules. A comprehensive rule set is provided for validating, transforming, and cleansing data, and also includes conditional rules and operators. The Data Cleanser not only validates and transforms data, but also parses, normalizes, and phonetically encodes data using the standardization configuration of the master index project.

The output of the Data Cleanser is two flat files: one file contains the records that passed all validations and were successfully transformed and standardized, and the other contains all records that failed validation or could not be transformed correctly. The bad data file also provides the reason each record failed so you can easily determine how to fix the data. This is an iterative process, and you might run the Data Cleanser several times to make sure all data is processed correctly.
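The good-file and bad-file split can be sketched as follows. The `CleanserSketch` class, its `Rule` interface, and the in-memory lists standing in for the two flat files are hypothetical; the real Data Cleanser defines its rules in the RDL and writes flat files. Every rule runs against every record, so a rejected record carries all of its failure reasons and can be fixed in a single pass.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Optional;

/** Hypothetical sketch of splitting records into "good" and "bad" outputs. */
final class CleanserSketch {
    /** A validation rule: an error message if the record fails, otherwise empty. */
    interface Rule {
        Optional<String> check(Map<String, String> rec);
    }

    /** A rejected record together with every reason it failed. */
    record Rejected(Map<String, String> fields, String reason) {}

    /** In-memory stand-ins for the good and bad data files. */
    record Output(List<Map<String, String>> good, List<Rejected> bad) {}

    static Output cleanse(List<Map<String, String>> records, List<Rule> rules) {
        List<Map<String, String>> good = new ArrayList<>();
        List<Rejected> bad = new ArrayList<>();
        for (Map<String, String> rec : records) {
            List<String> reasons = new ArrayList<>();
            for (Rule rule : rules) {
                rule.check(rec).ifPresent(reasons::add);   // run every rule, keep all failures
            }
            if (reasons.isEmpty()) {
                good.add(rec);
            } else {
                bad.add(new Rejected(rec, String.join("; ", reasons)));
            }
        }
        return new Output(good, bad);
    }
}
```

A rule such as `r -> r.getOrDefault("LastName", "").isBlank() ? Optional.of("LastName is required") : Optional.empty()` sends records without a last name to the bad list with that message.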

The final run of the Data Cleanser should produce only a good data file that conforms to the master index object definition and that can then be fed to the Initial Bulk Match and Load tool. The data file no longer contains invalid or default values. The fields are formatted correctly and any fields that are defined for standardization in the master index application are standardized in the file.

The Data Cleanser and Data Profiler give you the ability to analyze, validate, cleanse, and standardize legacy data before it is loaded into the central database. They provide the following features:

Provide valuable insight into the current state and quality of legacy data.

Provide an extensive and flexible set of validation, transformation, and cleansing rules for both analysis and cleansing.

Allow you to easily specify and configure data processing rules using a simple rules markup language.

Allow you to define custom processing rules in Java that can be incorporated into the rules engine.

Provide information about field values to help determine how reliable a field is for matching purposes.

Output a data file that has been cleansed of errors, invalid data, and invalid formats, and that conforms to the object structure of the reference data.

One of the issues that arises during a data management deployment is how to get a large volume of legacy data into the master index database quickly and with little downtime, while at the same time cleansing the data, reducing data duplication, and reducing errors. The Initial Bulk Match and Load Tool (IBML Tool) gives you the ability to analyze match logic, match legacy data, and load a large volume of data into a master index application. The IBML Tool provides a scalable solution that can be run on multiple processors for better performance.

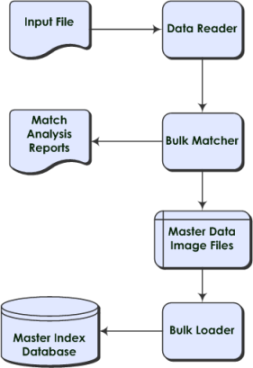

The IBML Tool is generated from the master index application, and consists of two components: the Bulk Matcher and the Bulk Loader. The Bulk Matcher compares records in the input data using probabilistic matching algorithms based on the Master Index Match Engine and based on the configuration you defined for your master index application. It then creates an image of the cleansed and matched data to be loaded into the master index. The Bulk Loader uses the output of the Bulk Matcher to load data directly into the master index database. Because the Bulk Matcher performs all of the match and potential duplicate processing and generates EUIDs for each unique record, the data is ready to be loaded with no additional processing from the master index application itself.

Performing an initial load of data into a master index database consists of three primary steps. The first step is optional and consists of running the Bulk Matcher in report mode on a representative subset of the data you need to load. This provides you with valuable information about the duplicate and match threshold settings and the blocking query for the master index application. Analyzing the data in this way is an iterative process, and the Bulk Matcher provides a configuration file that you can modify to test and retest the settings before you perform the final configuration of the master index application.

The second step in the process is running the Bulk Matcher in matching mode. The Bulk Matcher processes the data according to the query, matching, and threshold rules defined in the Bulk Matcher configuration file. This step compares and matches records in the input data in order to reduce data duplication and to link records that are possible matches of one another. The output of this step is a master image of the data to be loaded into the master index database.

The final step in the process is loading the data into the master index database. This can be done using either Oracle SQL*Loader or the Data Integrator Bulk Loader. Both products can read the output of the Bulk Matcher and load the image into the database.

Figure 11 Initial Bulk Match and Load Tool Process Flow

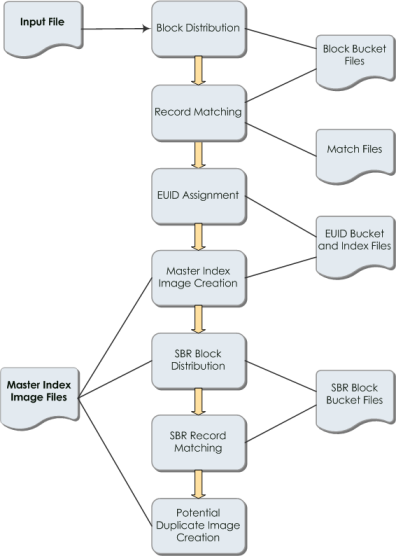

The Bulk Matcher performs a sequence of tasks to prepare the master image that will be loaded into the master index database. The first phase groups the records into buckets that can then be distributed to each matcher to process. Records are grouped based on the blocking query. The second phase matches the records in each bucket to one another and assigns a match weight. The third phase merges all matched records into a master match file and assigns EUIDs (EUIDs are the unique identifiers used by the master index to link all matched system records). The fourth phase creates the master image of the data to be loaded into the master index database. The master image includes complete enterprise records with SBRs, system records, and child objects, as well as assumed matches and transactional information. The final phase generates any potential duplicate linkages and generates the master image for the potential duplicate table.
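The first three phases (bucketing by blocking key, matching within each bucket, and merging matches under a shared EUID) can be sketched in a single process. This is a simplified, hypothetical illustration: it runs on one machine instead of a cluster, uses a caller-supplied `isMatch` predicate in place of match weights and thresholds, and uses a union-find structure to merge matched records, so if A matches B and B matches C, all three share one EUID. The `EUID-000001` numbering scheme is made up for the example.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiPredicate;
import java.util.function.Function;

/** Hypothetical single-process sketch of bucketing, matching, and EUID assignment. */
final class BulkMatchSketch {
    // Union-find root lookup with path halving; matched records share a root.
    private static int find(int[] parent, int i) {
        while (parent[i] != i) {
            parent[i] = parent[parent[i]];
            i = parent[i];
        }
        return i;
    }

    /** Returns one EUID per input record, in input order. */
    static <R> List<String> assignEuids(List<R> records, Function<R, String> blockingKey,
                                        BiPredicate<R, R> isMatch) {
        // Phase 1: group record indexes into buckets by blocking key.
        Map<String, List<Integer>> buckets = new HashMap<>();
        for (int i = 0; i < records.size(); i++) {
            buckets.computeIfAbsent(blockingKey.apply(records.get(i)), k -> new ArrayList<>()).add(i);
        }
        // Phase 2: compare pairs only within a bucket; union the records that match.
        int[] parent = new int[records.size()];
        for (int i = 0; i < parent.length; i++) {
            parent[i] = i;
        }
        for (List<Integer> bucket : buckets.values()) {
            for (int x = 0; x < bucket.size(); x++) {
                for (int y = x + 1; y < bucket.size(); y++) {
                    int a = bucket.get(x);
                    int b = bucket.get(y);
                    if (isMatch.test(records.get(a), records.get(b))) {
                        parent[find(parent, a)] = find(parent, b);
                    }
                }
            }
        }
        // Phase 3: one EUID per merged group, numbered by first appearance.
        Map<Integer, String> euidByRoot = new HashMap<>();
        List<String> euids = new ArrayList<>();
        for (int i = 0; i < records.size(); i++) {
            int root = find(parent, i);
            String euid = euidByRoot.get(root);
            if (euid == null) {
                euid = String.format("EUID-%06d", euidByRoot.size() + 1);
                euidByRoot.put(root, euid);
            }
            euids.add(euid);
        }
        return euids;
    }
}
```

For the records `SMITH|ELIZABETH`, `JONES|MARK`, `SMITH|ELIZABETH`, and `SMITH|JOHN`, blocked on last name and matched on exact equality, the first and third records share `EUID-000001`, while the other two receive `EUID-000002` and `EUID-000003`.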

The following diagram illustrates each step in more detail along with the artifacts created along the way.

Figure 12 Bulk Matcher Internal Process

After the matching process is complete, you can load the data using either the Data Integrator Bulk Loader or a SQL*Loader bulk loader. Both are generated from the loader files created for the master index application. Like the Bulk Matcher, the Bulk Loader can be run on concurrent processors, each processing a different master data image file. Data Integrator provides a wizard to help create the ETL collaboration that defines the logic used to load the master images.

The cluster synchronizer coordinates the activities of all IBML processors. The cluster synchronizer database, installed within the master index database, stores activity information, such as bucket file names and the state of each phase. Each IBML Tool invokes the cluster synchronizer when it needs to retrieve files, before it begins an activity, and after it completes an activity. The cluster synchronizer assigns the following states to a bucket as it is processed: new, assigned, and done. The master IBML Tool is also assigned states during processing based on which of the above phases is in process.
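The bucket life cycle can be modeled as a small state machine. The sketch below is an in-memory, hypothetical stand-in for the cluster synchronizer database: each processor asks for the next bucket in the `NEW` state, which moves it to `ASSIGNED`, and reports back when finished, which moves it to `DONE`. Because each processor claims one bucket at a time, faster processors simply claim more buckets, which is the dynamic load balancing listed among the features below. Here `synchronized` methods stand in for whatever coordination a real multi-server deployment uses through the shared database.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

/** Hypothetical in-memory stand-in for the cluster synchronizer's bucket tracking. */
final class ClusterSyncSketch {
    enum BucketState { NEW, ASSIGNED, DONE }

    private final Map<String, BucketState> buckets = new LinkedHashMap<>();
    private final Map<String, String> owners = new HashMap<>();

    /** Records a bucket file produced by the bucketing phase. */
    synchronized void register(String bucketFile) {
        buckets.putIfAbsent(bucketFile, BucketState.NEW);
    }

    /** Hands the next NEW bucket to a processor and marks it ASSIGNED. */
    synchronized Optional<String> claimNext(String processorId) {
        for (Map.Entry<String, BucketState> e : buckets.entrySet()) {
            if (e.getValue() == BucketState.NEW) {
                e.setValue(BucketState.ASSIGNED);
                owners.put(e.getKey(), processorId);
                return Optional.of(e.getKey());
            }
        }
        return Optional.empty();   // nothing left to claim in this phase
    }

    /** Marks a bucket DONE; only the processor that claimed it may complete it. */
    synchronized void complete(String bucketFile, String processorId) {
        if (buckets.get(bucketFile) != BucketState.ASSIGNED
                || !processorId.equals(owners.get(bucketFile))) {
            throw new IllegalStateException(bucketFile + " is not assigned to " + processorId);
        }
        buckets.put(bucketFile, BucketState.DONE);
    }

    /** The phase is finished when every registered bucket is DONE. */
    synchronized boolean phaseComplete() {
        return buckets.values().stream().allMatch(s -> s == BucketState.DONE);
    }
}
```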

The IBML Tool provides high-performance, scalable matching and loading of bulk data to the Java CAPS MDM Suite. It provides the following features:

Includes a match analysis tool that can be used to test and analyze the values of the match threshold and duplicate threshold. (Depending on certain matching parameters, records with a match weight above the match threshold are automatically matched, and records with a match weight between the match threshold and the duplicate threshold are considered potential duplicates.)

Quickly and accurately performs the matching required for a high volume of legacy data that will become the MDM reference data.

Provides a highly scalable and powerful loading mechanism that dramatically reduces the length of time required to load bulk data.

Uses a cluster-based architecture to distribute the processing over multiple servers, so all activities are performed concurrently by all servers.

Reduces the time and resources required to perform a bulk match and load by first grouping records into blocks and then matching within each block rather than matching each record in sequence.

Synchronizes activities between all match and load processes, with a cluster of processors executing the same activity at any point. A cluster synchronizer coordinates activities across all components and processors.

Uses sequential file I/O to read and write intermediate data.

Performs load balancing across all servers dynamically by having each server process one block of data at a time. Once a server completes a block, it picks up the next one to process.

Provides a default data reader that reads a flat file in the format output by the Data Cleanser, but also allows you to define a custom data reader for other formats.

Uses the existing configuration of the master index project for blocking and matching, and generates the master images based on the object structure of the master index.
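The relationship between the match threshold and the duplicate threshold in the first feature above can be sketched as a simple classifier, together with a tally of the kind a match analysis produces when you test threshold values. The sketch ignores the additional matching parameters that the text mentions, treats a weight equal to a threshold as meeting it (the boundary behavior is an assumption), and uses hypothetical names throughout.

```java
import java.util.EnumMap;
import java.util.Map;

/** Hypothetical sketch of match/duplicate threshold classification. */
final class ThresholdSketch {
    enum Outcome { ASSUMED_MATCH, POTENTIAL_DUPLICATE, NO_MATCH }

    static Outcome classify(double weight, double matchThreshold, double duplicateThreshold) {
        if (duplicateThreshold > matchThreshold) {
            throw new IllegalArgumentException("duplicate threshold must not exceed match threshold");
        }
        if (weight >= matchThreshold) {
            return Outcome.ASSUMED_MATCH;          // assumption: equal counts as "above"
        }
        if (weight >= duplicateThreshold) {
            return Outcome.POTENTIAL_DUPLICATE;    // between the two thresholds
        }
        return Outcome.NO_MATCH;
    }

    /** Counts outcomes for a set of pair weights, as when testing candidate thresholds. */
    static Map<Outcome, Integer> tally(double[] weights, double matchThreshold, double duplicateThreshold) {
        Map<Outcome, Integer> counts = new EnumMap<>(Outcome.class);
        for (Outcome o : Outcome.values()) {
            counts.put(o, 0);
        }
        for (double w : weights) {
            counts.merge(classify(w, matchThreshold, duplicateThreshold), 1, Integer::sum);
        }
        return counts;
    }
}
```

With a match threshold of 25 and a duplicate threshold of 15, weights of 30 and 26 are assumed matches, 20 is a potential duplicate, and 10 is not a match. Rerunning the tally with different thresholds shows how many pairs move between categories.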

Data Integrator provides a convenient wizard to help you generate the ETL collaboration that defines the load process. You can also use a command-line utility instead.