Oracle VM Server for SPARC 2.1 Administration Guide

Assigning PCIe Endpoint Devices


Starting with the Oracle VM Server for SPARC 2.0 release and the Oracle Solaris 10 9/10 OS, you can assign an individual PCIe endpoint (or direct I/O-assignable) device to a domain. This use of PCIe endpoint devices increases the granularity of the device assignment to I/O domains. This capability is delivered by means of the direct I/O (DIO) feature.

The DIO feature enables you to create more I/O domains than the number of PCIe buses in a system. The possible number of I/O domains is now limited only by the number of PCIe endpoint devices.

A PCIe endpoint device can be one of the following:

A PCIe card in a slot

An on-board PCIe device that is identified by the platform

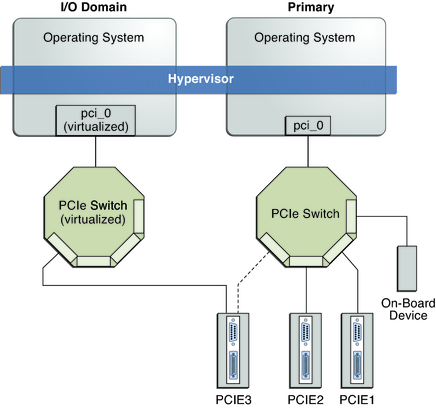

The following diagram shows that the PCIe endpoint device, PCIE3, is assigned to an I/O domain. Both bus pci_0 and the switch in the I/O domain are virtual. The PCIE3 endpoint device is no longer accessible in the primary domain.

In the I/O domain, the pci_0 block and the switch are a virtual root complex and a virtual PCIe switch, respectively. This block and switch are very similar to the pci_0 block and the switch in the primary domain. In the primary domain, the devices in slot PCIE3 are a shadow form of the original devices and are identified as SUNW,assigned.

Figure 6-2 Assigning a PCIe Endpoint Device to an I/O Domain

Use the ldm list-io command to list the PCIe endpoint devices.

Though the DIO feature permits any PCIe card in a slot to be assigned to an I/O domain, only certain PCIe cards are supported. See Direct I/O Hardware and Software Requirements in Oracle VM Server for SPARC 2.1 Release Notes.

Note - PCIe cards that have a switch or bridge are not supported. PCIe function-level assignment is also not supported. Assigning an unsupported PCIe card to an I/O domain might result in unpredictable behavior.

The following are a few important details about the DIO feature:

This feature is enabled only when all the software requirements are met. See Direct I/O Hardware and Software Requirements in Oracle VM Server for SPARC 2.1 Release Notes.

Only PCIe endpoints that are connected to a PCIe bus assigned to the primary domain can be assigned to another domain with the DIO feature.

I/O domains using DIO have access to the PCIe endpoint devices only when the primary domain is running.

Rebooting the primary domain affects I/O domains that have PCIe endpoint devices. See Rebooting the primary Domain. The primary domain also has the following responsibilities:

Initializes the PCIe bus and manages the bus.

Handles all bus errors that are triggered by the PCIe endpoint devices that are assigned to I/O domains. Note that only the primary domain receives all PCIe bus-related errors.

To successfully use the DIO feature, you must be running the appropriate software and assign only the PCIe cards that are supported by the DIO feature to I/O domains. For the hardware and software requirements, see Direct I/O Hardware and Software Requirements in Oracle VM Server for SPARC 2.1 Release Notes.

Note - All PCIe cards that are supported on a platform are supported in the primary domain. See the documentation for your platform for the list of supported PCIe cards. However, only direct I/O-supported PCIe cards can be assigned to I/O domains.

For information about how to work around the following limitations, see Planning PCIe Endpoint Device Configuration.

A delayed reconfiguration is initiated when you assign or remove a PCIe endpoint device to or from the primary domain, which means that the changes are applied only after the primary domain reboots.

Rebooting the primary domain affects direct I/O, so plan your direct I/O configuration changes carefully. Make as many direct I/O-related changes to the primary domain as possible before each reboot, which minimizes the number of primary domain reboots.

Assignment or removal of a PCIe endpoint device to any other domain is only permitted when that domain is either stopped or inactive.

Carefully plan ahead when you assign or remove PCIe endpoint devices to avoid primary domain downtime. The reboot of the primary domain not only affects the services that are available on the primary domain itself, but it also affects the I/O domains that have PCIe endpoint devices assigned. Though the changes to each I/O domain do not affect the other domains, planning ahead helps to minimize the consequences on the services that are provided by that domain.

The delayed reconfiguration is initiated the first time you assign or remove a device. As a result, you can continue to add or remove more devices and then reboot the primary domain only one time to make all the changes take effect.

For an example, see Create an I/O Domain by Assigning a PCIe Endpoint Device.

The following describes the general steps you must take to plan and perform a DIO device configuration:

1. Understand and record your system hardware configuration.

   Specifically, record information about the part numbers and other details of the PCIe cards in the system.

   Use the ldm list-io -l and prtdiag -v commands to obtain the information and save it for future reference.

2. Determine which PCIe endpoint devices are required to be in the primary domain.

   For example, determine the PCIe endpoint devices that provide access to the following:

      Boot disk device

      Network device

      Other devices that the primary domain offers as services

3. Remove all PCIe endpoint devices that you might use in I/O domains.

   This step helps you to avoid performing subsequent reboot operations on the primary domain, as reboots affect I/O domains.

   Use the ldm rm-io command to remove the PCIe endpoint devices. Use pseudonyms rather than device paths to specify the devices to the rm-io and add-io subcommands.

   Note - Though the first removal of a PCIe endpoint device might initiate a delayed reconfiguration, you can continue to remove devices. After you have removed all the devices you want, you only need to reboot the primary domain one time to make all the changes take effect.

4. Save this configuration to the service processor (SP).

   Use the ldm add-config command.

5. Reboot the primary domain to release the PCIe endpoint devices that you removed in Step 3.

6. Confirm that the PCIe endpoint devices you removed are no longer assigned to the primary domain.

   Use the ldm list-io -l command to verify that the devices you removed appear as SUNW,assigned-device in the output.

7. Assign an available PCIe endpoint device to a guest domain to provide direct access to the physical device.

   After you make this assignment, you can no longer migrate the guest domain to another physical system by means of the domain migration feature.

8. Add or remove a PCIe endpoint device to or from a guest domain.

   Use the ldm add-io command to add a device, or the ldm rm-io command to remove one.

   Minimize the changes to I/O domains by reducing the reboot operations and by avoiding downtime of services offered by that domain.

9. (Optional) Make changes to the PCIe hardware.
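The release part of this plan (remove the devices, save the configuration, reboot once) can be sketched as a small command builder. This is only an illustration: the function name release_plan is hypothetical, the sketch prints command text and runs nothing, and the ldm and reboot command lines are the ones that appear in this section.

```python
def release_plan(pseudonyms, config_name):
    """Build the commands that release endpoints from the primary domain.

    Hypothetical helper for planning only: it returns command strings
    and does not execute anything.
    """
    if not pseudonyms:
        return []
    # One rm-io per device. The first removal starts the delayed
    # reconfiguration, and later removals join the same pending change.
    commands = [f"ldm rm-io {name} primary" for name in pseudonyms]
    # Save the configuration to the SP, then reboot the primary domain once.
    commands.append(f"ldm add-config {config_name}")
    commands.append("reboot -- -r")
    return commands


for command in release_plan(["PCIE2", "PCIE3"], "dio"):
    print(command)
```

Building the whole list before running anything makes it easy to review that every removal is batched ahead of the single reboot.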

The primary domain is the owner of the PCIe bus and is responsible for initializing and managing the bus. The primary domain must be active and running a version of the Oracle Solaris OS that supports the DIO feature. Shutting down, halting, or rebooting the primary domain interrupts access to the PCIe bus. When the PCIe bus is unavailable, the PCIe devices on that bus are affected and might become unavailable.

The behavior of I/O domains with PCIe endpoint devices is unpredictable when the primary domain is rebooted while those I/O domains are running. For instance, I/O domains with PCIe endpoint devices might panic during or after the reboot. After the primary domain reboots, you would need to manually stop and start each of those domains.

To work around these issues, perform one of the following steps:

Manually shut down any domains on the system that have PCIe endpoint devices assigned to them before you shut down the primary domain.

This step ensures that these domains are cleanly shut down before you shut down, halt, or reboot the primary domain.

To find all the domains that have PCIe endpoint devices assigned to them, run the ldm list-io command, which lists the PCIe endpoint devices that have been assigned to domains on the system. Use this information to help you plan. For a detailed description of this command output, see the ldm(1M) man page.

For each domain found, stop the domain by running the ldm stop command.

Configure a domain dependency relationship between the primary domain and the domains that have PCIe endpoint devices assigned to them.

This dependency relationship ensures that domains with PCIe endpoint devices are automatically restarted when the primary domain reboots for any reason.

Note that this dependency relationship forcibly resets those domains, and they cannot cleanly shut down. However, the dependency relationship does not affect any domains that were manually shut down.

# ldm set-domain failure-policy=reset primary
# ldm set-domain master=primary ldom
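The two workarounds can be sketched as command builders that take the list of domains with PCIe endpoint devices. The function names are hypothetical and nothing is executed; the command lines are the ldm stop and ldm set-domain forms named in this section, and ldg1 stands in for a real domain name.

```python
def stop_commands(io_domains):
    """Workaround 1: cleanly stop each I/O domain before the primary reboot."""
    return [f"ldm stop {domain}" for domain in io_domains]


def dependency_commands(io_domains):
    """Workaround 2: make each I/O domain a slave of the primary domain.

    With failure-policy=reset on the master, the slaves are reset
    (not cleanly shut down) whenever the primary domain reboots.
    """
    commands = ["ldm set-domain failure-policy=reset primary"]
    commands.extend(f"ldm set-domain master=primary {domain}"
                    for domain in io_domains)
    return commands


for command in dependency_commands(["ldg1"]):
    print(command)
```

The failure policy is set once on the primary domain, while the master property is set once per dependent domain.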

The following steps help you avoid misconfiguring the PCIe endpoint assignments. For platform-specific information about installing and removing specific hardware, see the documentation for your platform.

No action is required if you are installing a PCIe card into an empty slot. This PCIe card is automatically owned by the domain that owns the PCIe bus.

To assign the new PCIe card to an I/O domain, use the ldm rm-io command to first remove the card from the primary domain. Then, use the ldm add-io command to assign the card to an I/O domain.

No action is required if you remove a PCIe card that is assigned to the primary domain.

To remove a PCIe card that is assigned to an I/O domain, first remove the device from the I/O domain. Then, add the device to the primary domain before you physically remove the device from the system.

To replace a PCIe card that is assigned to an I/O domain, verify that the new card is supported by the DIO feature.

If so, no action is required. The new card is automatically assigned to the current I/O domain.

If not, first remove that PCIe card from the I/O domain by using the ldm rm-io command. Next, use the ldm add-io command to reassign that PCIe card to the primary domain. Then, physically replace the PCIe card you assigned to the primary domain with a different PCIe card. These steps enable you to avoid a configuration that is unsupported by the DIO feature.
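The cases above amount to a small decision table, sketched here as a function that returns the steps to take before touching the hardware. The function name, its parameters, and the step wording are hypothetical; the replacement of a card that is assigned to the primary domain is not described above, so the sketch refuses that case rather than guessing.

```python
def hardware_change_steps(action, device, owner, dio_supported=True):
    """Return the steps for a PCIe hardware change.

    action: "install", "remove", or "replace"
    device: pseudonym of the slot, for example "PCIE2"
    owner: domain that currently owns the device
    dio_supported: for "replace", whether the new card supports DIO
    """
    if action == "install":
        # A card in an empty slot is owned by the bus owner automatically.
        return []
    move_to_primary = [
        f"ldm rm-io {device} {owner}",
        f"ldm add-io {device} primary",
    ]
    if action == "remove":
        if owner == "primary":
            return []
        return move_to_primary + ["physically remove the card"]
    if action == "replace" and owner != "primary":
        if dio_supported:
            return []
        return move_to_primary + ["physically replace the card"]
    raise ValueError(f"case not covered here: {action!r} for {owner!r}")


for step in hardware_change_steps("replace", "PCIE2", "ldg1",
                                  dio_supported=False):
    print(step)
```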

Plan all DIO deployments ahead of time to minimize downtime.

For an example of adding a PCIe endpoint device to create an I/O domain, see Planning PCIe Endpoint Device Configuration.

The output of the ldm list-io -l command shows how the I/O devices are currently configured. You can obtain more detailed information by using the prtdiag -v command.

Note - After the devices are assigned to I/O domains, the identity of the devices can only be determined in the I/O domains.

# ldm list-io -l
IO              PSEUDONYM       DOMAIN
--              ---------       ------
pci@400         pci_0           primary
pci@500         pci_1           primary

PCIE                            PSEUDONYM       STATUS  DOMAIN
----                            ---------       ------  ------
pci@400/pci@0/pci@c             PCIE1           EMP     -
pci@400/pci@0/pci@9             PCIE2           OCC     primary
        network@0
        network@0,1
        network@0,2
        network@0,3
pci@400/pci@0/pci@d             PCIE3           OCC     primary
        SUNW,emlxs/fp/disk
        SUNW,emlxs@0,1/fp/disk
        SUNW,emlxs@0,1/fp@0,0
pci@400/pci@0/pci@8             MB/SASHBA       OCC     primary
        scsi@0/tape
        scsi@0/disk
        scsi@0/sd@0,0
        scsi@0/sd@1,0
pci@500/pci@0/pci@9             PCIE0           EMP     -
pci@500/pci@0/pci@d             PCIE4           OCC     primary
        network@0
        network@0,1
pci@500/pci@0/pci@c             PCIE5           OCC     primary
        SUNW,qlc@0/fp/disk
        SUNW,qlc@0/fp@0,0
        SUNW,qlc@0,1/fp/disk
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c605dbab,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c6041434,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c6053652,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c6041b4f,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c605dbb3,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c60413bc,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c604167f,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c6041b3a,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c605dabf,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c60417a4,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c60416a7,0
        SUNW,qlc@0,1/fp@0,0/ssd@w21000011c60417e7,0
        SUNW,qlc@0,1/fp@0,0/ses@w215000c0ff082669,0
pci@500/pci@0/pci@8             MB/NET0         OCC     primary
        network@0
        network@0,1
        network@0,2
        network@0,3

Determine the device path of the boot disk, which must remain in the primary domain. For a UFS file system, run the df / command:

primary# df /
/                  (/dev/dsk/c0t1d0s0 ): 1309384 blocks   457028 files

For a ZFS file system, first run the df / command to determine the pool name, and then run the zpool status command to determine the device path of the boot disk:

primary# df /
/                  (rpool/ROOT/s10s_u8wos_08a):245176332 blocks 245176332 files
primary# zpool status rpool
  pool: rpool
 state: ONLINE
 scrub: none requested
config:

        NAME        STATE     READ WRITE CKSUM
        rpool       ONLINE       0     0     0
          c0t1d0s0  ONLINE       0     0     0

The following example uses block device c0t1d0s0:

primary# ls -l /dev/dsk/c0t1d0s0
lrwxrwxrwx 1 root root 49 Jul 20 22:17 /dev/dsk/c0t1d0s0 -> ../../devices/pci@400/pci@0/pci@8/scsi@0/sd@0,0:a

In this example, the physical device for the primary domain's boot disk is connected to the PCIe endpoint device (pci@400/pci@0/pci@8), which corresponds to the MB/SASHBA entry in the ldm list-io -l output. Removing this device would prevent the primary domain from booting, so do not remove this device from the primary domain.
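Matching a device link to its PCIe endpoint is a prefix comparison on the physical path. The following sketch assumes the link target and the endpoint paths have already been copied from the ls -l and ldm list-io -l output; the function name endpoint_of and the ENDPOINTS table are hypothetical.

```python
import re

# Endpoint paths and pseudonyms copied from the ldm list-io -l listing.
ENDPOINTS = {
    "pci@400/pci@0/pci@9": "PCIE2",
    "pci@400/pci@0/pci@d": "PCIE3",
    "pci@400/pci@0/pci@8": "MB/SASHBA",
    "pci@500/pci@0/pci@d": "PCIE4",
    "pci@500/pci@0/pci@c": "PCIE5",
    "pci@500/pci@0/pci@8": "MB/NET0",
}


def endpoint_of(link_target, endpoints):
    """Return the pseudonym of the endpoint that a /devices link sits under.

    Returns None when the target is not under any listed endpoint.
    """
    match = re.search(r"devices/(.+)", link_target)
    if not match:
        return None
    physical = match.group(1)
    # Keep the endpoints whose path is a whole-component prefix of the target.
    hits = [path for path in endpoints
            if physical == path or physical.startswith(path + "/")]
    if not hits:
        return None
    # Prefer the longest (most specific) matching path.
    return endpoints[max(hits, key=len)]


boot_link = "../../devices/pci@400/pci@0/pci@8/scsi@0/sd@0,0:a"
print(endpoint_of(boot_link, ENDPOINTS))
```

Comparing on path + "/" rather than on the bare string avoids treating one path component as a prefix of a longer, different component.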

Determine the network interface that the primary domain uses:

# ifconfig -a
lo0: flags=2001000849<UP,LOOPBACK,RUNNING,MULTICAST,IPv4,VIRTUAL> mtu 8232 index 1
        inet 127.0.0.1 netmask ff000000
nxge0: flags=1004843<UP,BROADCAST,RUNNING,MULTICAST,DHCP,IPv4> mtu 1500 index 2
        inet 10.6.212.149 netmask fffffe00 broadcast 10.6.213.255
        ether 0:21:28:4:27:cc

In this example, the nxge0 interface is used as the network interface for the primary domain.

The following command uses the nxge0 network interface:

primary# ls -l /dev/nxge0
lrwxrwxrwx 1 root root 46 Jul 30 17:29 /dev/nxge0 -> ../devices/pci@500/pci@0/pci@8/network@0:nxge0

In this example, the physical device for the network interface used by the primary domain is connected to the PCIe endpoint device (pci@500/pci@0/pci@8), which corresponds to the MB/NET0 entry in the ldm list-io -l output. Do not remove this device from the primary domain. You can safely assign all other PCIe devices to other domains because they are not used by the primary domain.

If the network interface used by the primary domain is on a bus that you want to assign to another domain, the primary domain would need to be reconfigured to use a different network interface.

In this example, you can remove the PCIE2, PCIE3, PCIE4, and PCIE5 endpoint devices because they are not being used by the primary domain.
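The selection can be expressed as a filter over the endpoint rows: keep the occupied slots that the primary domain owns, minus the ones it needs. The row data below is copied from the ldm list-io -l listing in this section; the function name removable_endpoints is hypothetical.

```python
# (pseudonym, status, domain) rows from the ldm list-io -l listing.
ROWS = [
    ("PCIE1", "EMP", None),
    ("PCIE2", "OCC", "primary"),
    ("PCIE3", "OCC", "primary"),
    ("MB/SASHBA", "OCC", "primary"),
    ("PCIE0", "EMP", None),
    ("PCIE4", "OCC", "primary"),
    ("PCIE5", "OCC", "primary"),
    ("MB/NET0", "OCC", "primary"),
]


def removable_endpoints(rows, required):
    """Return occupied primary-domain endpoints that are not required."""
    return [name for name, status, domain in rows
            if status == "OCC" and domain == "primary"
            and name not in required]


# The boot disk and network endpoints must stay in the primary domain.
print(removable_endpoints(ROWS, {"MB/SASHBA", "MB/NET0"}))
```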

Caution - Do not remove the devices that are used in the primary domain. If you mistakenly remove the wrong devices, use the ldm cancel-op reconf primary command to cancel the delayed reconfiguration on the primary domain.

You can remove multiple devices at one time to avoid multiple reboots.

# ldm rm-io PCIE2 primary
Initiating a delayed reconfiguration operation on the primary domain.
All configuration changes for other domains are disabled until the primary
domain reboots, at which time the new configuration for the primary domain
will also take effect.
# ldm rm-io PCIE3 primary
------------------------------------------------------------------------------
Notice: The primary domain is in the process of a delayed reconfiguration.
Any changes made to the primary domain will only take effect after it reboots.
------------------------------------------------------------------------------
# ldm rm-io PCIE4 primary
------------------------------------------------------------------------------
Notice: The primary domain is in the process of a delayed reconfiguration.
Any changes made to the primary domain will only take effect after it reboots.
------------------------------------------------------------------------------
# ldm rm-io PCIE5 primary
------------------------------------------------------------------------------
Notice: The primary domain is in the process of a delayed reconfiguration.
Any changes made to the primary domain will only take effect after it reboots.
------------------------------------------------------------------------------

The following command saves the configuration to the SP under the name dio:

# ldm add-config dio

Reboot the primary domain to release the PCIe endpoint devices that you removed:

# reboot -- -r

After the reboot, verify that the removed PCIe endpoint devices are no longer assigned to the primary domain:

# ldm list-io
IO              PSEUDONYM       DOMAIN
--              ---------       ------
pci@400         pci_0           primary
pci@500         pci_1           primary

PCIE                            PSEUDONYM       STATUS  DOMAIN
----                            ---------       ------  ------
pci@400/pci@0/pci@c             PCIE1           EMP     -
pci@400/pci@0/pci@9             PCIE2           OCC
pci@400/pci@0/pci@d             PCIE3           OCC
pci@400/pci@0/pci@8             MB/SASHBA       OCC     primary
pci@500/pci@0/pci@9             PCIE0           EMP     -
pci@500/pci@0/pci@d             PCIE4           OCC
pci@500/pci@0/pci@c             PCIE5           OCC
pci@500/pci@0/pci@8             MB/NET0         OCC     primary

Note - The ldm list-io -l output might show SUNW,assigned-device for the PCIe endpoint devices that were removed. Actual information is no longer available from the primary domain, but the domain to which the device is assigned has this information.
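This check can be automated by parsing the PCIE section of the listing. The sketch below assumes the column layout of the ldm list-io output in this section (path, pseudonym, status, optional domain), which may differ in other releases; the function names are hypothetical and the sample text is embedded rather than read from a live system.

```python
SAMPLE = """\
IO              PSEUDONYM       DOMAIN
--              ---------       ------
pci@400         pci_0           primary
pci@500         pci_1           primary

PCIE                            PSEUDONYM       STATUS  DOMAIN
----                            ---------       ------  ------
pci@400/pci@0/pci@c             PCIE1           EMP     -
pci@400/pci@0/pci@9             PCIE2           OCC
pci@400/pci@0/pci@d             PCIE3           OCC
pci@400/pci@0/pci@8             MB/SASHBA       OCC     primary
pci@500/pci@0/pci@9             PCIE0           EMP     -
pci@500/pci@0/pci@d             PCIE4           OCC
pci@500/pci@0/pci@c             PCIE5           OCC
pci@500/pci@0/pci@8             MB/NET0         OCC     primary
"""


def parse_pcie_rows(text):
    """Return (path, pseudonym, status, domain) tuples from the PCIE section."""
    rows = []
    in_pcie = False
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "PCIE":
            in_pcie = True  # header row of the PCIE section
            continue
        # Skip the IO section, the dashed rule, and any sub-device lines.
        if not in_pcie or set(fields[0]) == {"-"} or len(fields) < 3:
            continue
        path, pseudonym, status = fields[:3]
        domain = fields[3] if len(fields) > 3 and fields[3] != "-" else None
        rows.append((path, pseudonym, status, domain))
    return rows


def unassigned(rows):
    """Occupied slots with no owning domain, ready for ldm add-io."""
    return [name for _, name, status, domain in rows
            if status == "OCC" and domain is None]


print(unassigned(parse_pcie_rows(SAMPLE)))
```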

Assign the PCIE2 endpoint device to the ldg1 domain:

# ldm add-io PCIE2 ldg1

Bind and start the ldg1 domain:

# ldm bind ldg1
# ldm start ldg1
LDom ldg1 started

Use the dladm show-dev command to verify that the network device is available. Then, configure the network device for use in the domain.

# dladm show-dev
vnet0           link: up        speed: 0     Mbps       duplex: unknown
nxge0           link: unknown   speed: 0     Mbps       duplex: unknown
nxge1           link: unknown   speed: 0     Mbps       duplex: unknown
nxge2           link: unknown   speed: 0     Mbps       duplex: unknown
nxge3           link: unknown   speed: 0     Mbps       duplex: unknown
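A short parser can confirm that the expected devices show up in this output. The sketch assumes the dladm show-dev line layout shown above and works on embedded sample text; the function name parse_show_dev and the dictionary keys are hypothetical.

```python
import re

SAMPLE = """\
vnet0           link: up        speed: 0     Mbps       duplex: unknown
nxge0           link: unknown   speed: 0     Mbps       duplex: unknown
nxge1           link: unknown   speed: 0     Mbps       duplex: unknown
nxge2           link: unknown   speed: 0     Mbps       duplex: unknown
nxge3           link: unknown   speed: 0     Mbps       duplex: unknown
"""

LINE = re.compile(
    r"^(\S+)\s+link:\s+(\S+)\s+speed:\s+(\d+)\s+Mbps\s+duplex:\s+(\S+)$"
)


def parse_show_dev(text):
    """Map each device name to its link state, speed, and duplex setting."""
    devices = {}
    for line in text.splitlines():
        match = LINE.match(line.strip())
        if match:
            name, link, speed, duplex = match.groups()
            devices[name] = {
                "link": link,
                "speed_mbps": int(speed),
                "duplex": duplex,
            }
    return devices


devices = parse_show_dev(SAMPLE)
# The four nxge ports of the assigned card should be visible in the domain.
print(sorted(name for name in devices if name.startswith("nxge")))
```

A link state of unknown here only means that the interface has not been configured yet, which is the next action the text calls for.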