1 Introduction to the Composite Application Validation System

This chapter describes the purpose and key components of the Composite Application Validation System (CAVS). It also describes design assumptions and knowledge prerequisites.

The Composite Application Validation System (CAVS) is a framework that provides a structured approach to test integration of Oracle Application Integration Architecture (AIA) services. The CAVS includes test initiators that simulate web service invocations and simulators that simulate service endpoints.

This chapter includes the following sections:

- Section 1.1, "Describing the Purpose of the Composite Application Validation System"
- Section 1.2, "Describing Key Components of the CAVS Framework"
- Section 1.3, "Describing the CAVS Design Assumptions and Knowledge Prerequisites"

1.1 Describing the Purpose of the Composite Application Validation System

In the context of AIA, where a sequence of service invocations spans Application Business Connector Services (ABCSs), Enterprise Business Services (EBSs), Enterprise Business Flows (EBFs), and participating applications, the CAVS test initiators and simulators enable a layered testing approach. By using test initiators and simulators on either end of a component, you can thoroughly test each component in an integration without having to account for its dependencies.

Consequently, when you build an integration, you can add new components to an already tested subset, so that any errors are confined to the new component or to the interface between the new component and the existing components. This ability to isolate and test individual web services within an integration narrows the test scope and insulates the service test from possible faults in other components.

Test initiators and simulators can be used independently of each other, allowing users to substitute them for unavailable AIA services or participating applications.

The CAVS provides a repository that stores the test and simulator definitions that CAVS users create, as well as an interactive user interface for creating and managing them. Tests can be configured to run individually or in a single-threaded batch.

The CAVS provides value as a testing tool throughout the integration development life cycle:

- Development

  Integration developers working with AIA are integrating disparate systems and typically belong to different teams. The CAVS provides an effective way to substitute dependencies, letting developers focus on the functionality of their own service rather than on integrations with other services.

- Quality assurance

  The CAVS allows quality assurance engineers to unit test and flow test integrations, providing an easy way to certify different pieces of an integration. The reusability of test definitions, simulators, and test groups helps in regression testing and provides a quick way to certify new versions of services.

1.2 Describing Key Components of the CAVS Framework

The CAVS framework operates using the following key components:

- Test definition
- Simulator definition

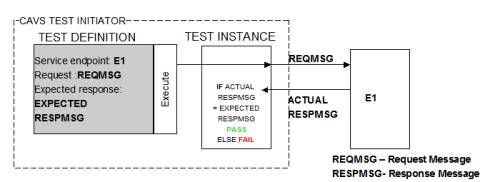

The CAVS test initiator reads test data and feeds it to the web service being tested. You create the test data as a part of a test definition. The test definition is a configuration of the test initiator and contains test execution instructions.

The CAVS user creates a test definition using the CAVS user interface (UI), specifying the service endpoint URL to invoke, the request message to pass, and metadata about the test definition itself.

For more information about creating test definitions, see Chapter 4, "Creating and Modifying Test Definitions."

The test initiator is a logical unit that executes test definitions: it calls the endpoint URL specified in the test definition and creates test instances. This call is no different from a request initiated by any other client. If the test definition Service Type value is set to Synchronous or Asynchronous two-way, the actual response can be verified against predefined response data to validate the accuracy of the response.
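The response verification step described above can be sketched in a few lines of Python. This is a minimal illustration, not the CAVS implementation: the `verify_response` function, the `ord` payload namespace, the sample message, and the use of the SOAP 1.1 envelope namespace are all assumptions made for the example.

```python
import xml.etree.ElementTree as ET

# Namespace prefixes used in the path expressions below.
# The "ord" namespace is hypothetical; SOAP 1.1 is assumed for the envelope.
NS = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "ord": "http://example.com/order",
}


def verify_response(actual_xml, expected_values):
    """Compare an actual response with predefined expected values.

    expected_values maps a path expression (relative to the SOAP envelope)
    to the text expected at that location. Returns a list of
    (path, expected, actual) tuples, one per mismatch.
    """
    root = ET.fromstring(actual_xml)
    mismatches = []
    for path, expected in expected_values.items():
        actual = root.findtext(path, namespaces=NS)
        if actual != expected:
            mismatches.append((path, expected, actual))
    return mismatches


# Hypothetical response returned by the service being tested.
ACTUAL_RESPONSE = """<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">
  <soap:Header/>
  <soap:Body>
    <ord:OrderResponse xmlns:ord="http://example.com/order">
      <ord:Status>ACCEPTED</ord:Status>
      <ord:OrderId>1001</ord:OrderId>
    </ord:OrderResponse>
  </soap:Body>
</soap:Envelope>"""

# Hypothetical predefined response data from a test definition.
EXPECTED = {
    "soap:Body/ord:OrderResponse/ord:Status": "ACCEPTED",
    "soap:Body/ord:OrderResponse/ord:OrderId": "1001",
}

print(verify_response(ACTUAL_RESPONSE, EXPECTED))  # → []
```

An empty list means that every expected value was found in the actual response; each mismatch would otherwise be reported with its path, expected value, and actual value.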

Figure 1-1 illustrates the high-level concept of the test initiator.

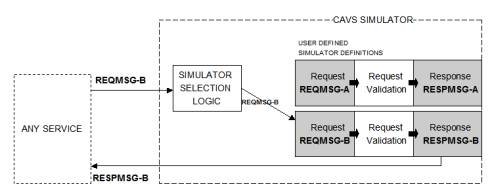

The CAVS simulator is used to simulate a web service. A simulator typically contains a predefined response for a specific request. CAVS users can create several simulator definitions, each for a specific set of input values.

At run time, the CAVS simulator framework receives data from the service being tested. Upon receiving the request, CAVS locates the appropriate simulator definition, validates the input against predefined request values, and then returns predefined response data so that the web service being tested can continue processing.
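The run-time behavior described above can be sketched as follows. This is an illustrative model only: the `SIMULATORS` list, the `simulate` function, the `ord` payload namespace, and the sample messages are hypothetical. This chapter does not describe how CAVS locates a simulator definition, so the sketch collapses locating and validating into a single matching step.

```python
import xml.etree.ElementTree as ET

NS = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",  # SOAP 1.1 assumed
    "ord": "http://example.com/order",                    # hypothetical payload namespace
}


def canned_response(status):
    """Build a complete SOAP response document with the given status."""
    return (
        '<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">'
        "<soap:Header/><soap:Body>"
        '<ord:OrderResponse xmlns:ord="http://example.com/order">'
        f"<ord:Status>{status}</ord:Status>"
        "</ord:OrderResponse></soap:Body></soap:Envelope>"
    )


# Hypothetical simulator definitions: predefined request values paired
# with the predefined response to return when they match.
SIMULATORS = [
    {
        "name": "order-1001",
        "expected_request": {"soap:Body/ord:GetOrder/ord:OrderId": "1001"},
        "response": canned_response("SHIPPED"),
    },
    {
        "name": "order-1002",
        "expected_request": {"soap:Body/ord:GetOrder/ord:OrderId": "1002"},
        "response": canned_response("BACKORDERED"),
    },
]


def simulate(request_xml, simulators=SIMULATORS):
    """Return (name, response) of the first definition whose predefined
    request values all match the incoming request, or (None, None)."""
    root = ET.fromstring(request_xml)
    for sim in simulators:
        matches = all(
            root.findtext(path, namespaces=NS) == value
            for path, value in sim["expected_request"].items()
        )
        if matches:
            return sim["name"], sim["response"]
    return None, None


# Hypothetical request sent by the service being tested.
REQUEST = (
    '<soap:Envelope xmlns:soap="http://schemas.xmlsoap.org/soap/envelope/">'
    "<soap:Header/><soap:Body>"
    '<ord:GetOrder xmlns:ord="http://example.com/order">'
    "<ord:OrderId>1002</ord:OrderId>"
    "</ord:GetOrder></soap:Body></soap:Envelope>"
)

name, response = simulate(REQUEST)
print(name)  # → order-1002
```

Because each definition covers one specific set of input values, a request that matches no definition returns `(None, None)` in this sketch rather than a default response.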

For more information about creating simulator definitions, see Chapter 5, "Creating and Modifying Simulator Definitions."

Figure 1-2 illustrates the high-level concept of the CAVS simulator.

1.3 Describing the CAVS Design Assumptions and Knowledge Prerequisites

The CAVS operates with the following design assumptions:

- The CAVS assumes that the requester and provider ABCSs it is testing are implemented using BPEL.

- The CAVS is designed to initiate requests and simulate responses as SOAP messages using SOAP over HTTP. The request and response messages that you define in test and simulator definitions must contain the entire XML SOAP document, including the SOAP envelope, message header, and body (payload).

- The correlation logic between the test initiator and the response simulator is based on timestamps only. For this reason, test and simulator instances generated in the database schema will not always be reconcilable, especially when the same web service is invoked multiple times during a very short time period, such as during performance testing.

- The CAVS does not provide or authenticate security information for web services that are initiated by a test initiator or received by a response simulator. However, security information passed through the system by the web service can be used as a part of verification and validation logic.

- When a participating application is involved in a CAVS testing flow, execution of tests can potentially modify data in the participating application. Therefore, running the same test consecutively may not generate the same results. The CAVS is not designed to prevent this kind of data tampering because it supports the user's intention to include a real participating application in the flow. The CAVS has no control over modifications that are performed in participating applications.

  This issue does not apply if your CAVS test scenario uses test definitions and simulator definitions to replace all participating applications and other dependencies. In this case, all cross-reference data is purged after the test scenario has been executed, which enables the test scenario to be rerun.

Note:

CAVS cross-reference data is purged at the end of a test execution when you execute a test definition, and at the end of a test group execution when you execute a test group definition. Therefore, if you want to execute test definitions that depend on cross-reference data created by earlier test executions, ensure that you include all dependent test definitions in a test group and execute the test group.

For more information about how to make test scenarios rerunnable, see Chapter 11, "Purging CAVS-Related Cross-Reference Entries to Enable Rerunning of Test Scenarios."
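To illustrate the design assumption that request and response messages must contain the entire XML SOAP document, the following sketch checks a message for the envelope, header, and body elements. The `missing_soap_parts` function, the sample payload, and its namespace are hypothetical, and the SOAP 1.1 envelope namespace is an assumption because this chapter does not state a SOAP version.

```python
import xml.etree.ElementTree as ET

# SOAP 1.1 envelope namespace (assumed; the SOAP version is not stated here).
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"


def missing_soap_parts(message_xml):
    """Return the names of the SOAP parts that the message lacks."""
    root = ET.fromstring(message_xml)
    if root.tag != f"{{{SOAP_NS}}}Envelope":
        # Without an envelope, the header and body are missing as well.
        return ["Envelope", "Header", "Body"]
    return [
        part
        for part in ("Header", "Body")
        if root.find(f"{{{SOAP_NS}}}{part}") is None
    ]


# A complete SOAP document: envelope, header, and body (payload).
FULL_MESSAGE = (
    f'<soap:Envelope xmlns:soap="{SOAP_NS}">'
    "<soap:Header/>"
    "<soap:Body>"
    '<ord:GetOrder xmlns:ord="http://example.com/order">'
    "<ord:OrderId>1001</ord:OrderId>"
    "</ord:GetOrder>"
    "</soap:Body>"
    "</soap:Envelope>"
)

# The payload alone, which would not satisfy the assumption.
PAYLOAD_ONLY = (
    '<ord:GetOrder xmlns:ord="http://example.com/order">'
    "<ord:OrderId>1001</ord:OrderId>"
    "</ord:GetOrder>"
)

print(missing_soap_parts(FULL_MESSAGE))  # → []
print(missing_soap_parts(PAYLOAD_ONLY))  # → ['Envelope', 'Header', 'Body']
```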

To work effectively with the CAVS, users must have working knowledge of the following concepts and technologies:

- AIA
- XML
- XPath
- SOAP