Oracle Solaris 10 1/13 Installation Guide: Live Upgrade and Upgrade Planning

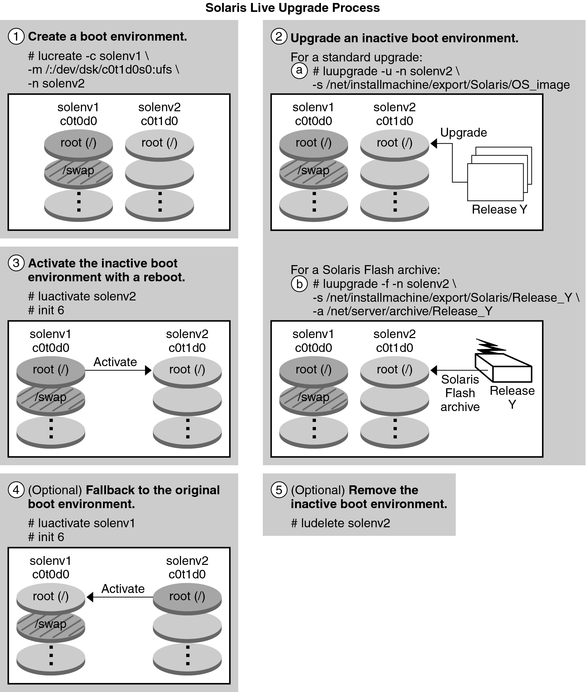

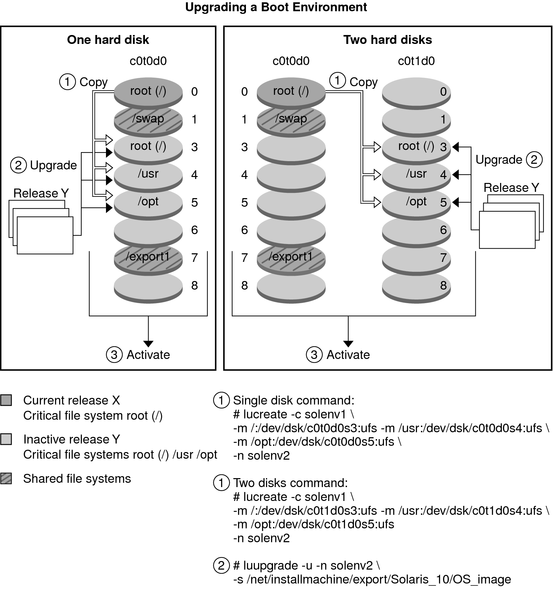

The following overview describes the tasks necessary to create a copy of the current boot environment, upgrade the copy, and switch the upgraded copy to become the active boot environment. The fallback process of switching back to the original boot environment is also described. Figure 2-1 describes this complete Live Upgrade process.

Figure 2-1 Live Upgrade Process

The following sections describe the Live Upgrade process.

A new boot environment can be created on a physical slice or a logical volume.

The process of creating a boot environment provides a method of copying critical file systems from an active boot environment to a new boot environment. The disk is reorganized if necessary, file systems are customized, and the critical file systems are copied to the new boot environment.

Live Upgrade distinguishes between two file system types: critical and shareable. The following table describes these file system types.


Live Upgrade can create a boot environment with RAID-1 volumes (mirrors) on file systems. For an overview, see Creating a Boot Environment With RAID-1 Volume File Systems.

The process of creating a new boot environment begins by identifying an unused slice where a critical file system can be copied. If a slice is not available or a slice does not meet the minimum requirements, you need to format a new slice.

After the slice is defined, you can reconfigure the file systems on the new boot environment before the file systems are copied into the directories. You reconfigure file systems by splitting and merging them, which provides a simple way of editing the vfstab to connect and disconnect file system directories. You can merge file systems into their parent directories by specifying the same mount point. You can also split file systems from their parent directories by specifying different mount points.
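The split and merge rules can be modeled in a few lines. The sketch below is hypothetical: it assumes a layout written as a dictionary from mount point to either a slice path or the keyword `merged` (to our understanding, the device keyword that lucreate(1M) accepts for folding a file system into its parent; confirm it against your release's man page). It reports which slice each directory ends up on and builds, but never runs, a matching lucreate argument list.

```python
# Hypothetical sketch: model how splitting and merging decide which slice
# holds each directory, and build (but do not run) a matching lucreate argv.
import posixpath

MERGED = "merged"  # assumed lucreate -m device keyword: fold into the parent


def parent_mount(mount: str, layout: dict[str, str]) -> str:
    """Return the nearest ancestor of `mount` that is listed in `layout`."""
    candidate = posixpath.dirname(mount)
    while candidate not in layout:
        if candidate in ("/", ""):
            raise ValueError("layout must define the root (/) file system")
        candidate = posixpath.dirname(candidate)
    return candidate


def resolve_slices(layout: dict[str, str]) -> dict[str, str]:
    """Map every mount point to the slice that will hold its files."""
    if layout.get("/") in (None, MERGED):
        raise ValueError("root (/) needs its own slice")
    resolved: dict[str, str] = {}
    # A parent path is always shorter than its children, so sorting by
    # length resolves parents before the file systems merged into them.
    for mount in sorted(layout, key=len):
        device = layout[mount]
        if device == MERGED:
            resolved[mount] = resolved[parent_mount(mount, layout)]
        else:
            resolved[mount] = device  # split: the file system gets its own slice
    return resolved


def lucreate_argv(be_name: str, layout: dict[str, str], fs_type: str = "ufs") -> list[str]:
    """Build (but do not run) a lucreate argument list for the layout."""
    argv = ["lucreate", "-n", be_name]
    for mount in sorted(layout, key=len):
        argv += ["-m", f"{mount}:{layout[mount]}:{fs_type}"]
    return argv


if __name__ == "__main__":
    # Merge in the style of Figure 2-4: /usr and /opt fold into root (/).
    merge = {"/": "/dev/dsk/c0t1d0s0", "/usr": MERGED, "/opt": MERGED}
    print(resolve_slices(merge))
    print(" ".join(lucreate_argv("second_disk", merge)))
```

Sorting by path length is the design choice that keeps the resolver a single pass: a merged file system can always look up an already-resolved parent.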

After file systems are configured on the inactive boot environment, you begin the automatic copy. Critical file systems are copied to the designated directories. Shareable file systems are not copied, but are shared. The exception is that you can designate some shareable file systems to be copied. When the file systems are copied from the active to the inactive boot environment, the files are directed to the new directories. The active boot environment is not changed in any way.
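The copy rule above reduces to a simple partition. In this hypothetical sketch, the critical set is limited to the examples Oracle's documentation usually gives (root (/), /usr, /var, and /opt) and should be treated as an assumption rather than an exhaustive list; `copy_anyway` stands in for the exception that lets you designate some shareable file systems to be copied.

```python
# Hypothetical sketch: decide which file systems are copied to the new boot
# environment and which are shared with it. CRITICAL_EXAMPLES is an assumed,
# non-exhaustive set of critical file systems.
CRITICAL_EXAMPLES = frozenset({"/", "/usr", "/var", "/opt"})


def plan_copy(mounts, copy_anyway=()):
    """Split mount points into (copied, shared) lists.

    Critical file systems are always copied. Shareable file systems are
    shared unless they are named in `copy_anyway`.
    """
    copied, shared = [], []
    for mount in mounts:
        if mount in CRITICAL_EXAMPLES or mount in copy_anyway:
            copied.append(mount)
        else:
            shared.append(mount)
    return copied, shared


if __name__ == "__main__":
    mounts = ["/", "/usr", "/var", "/export/home", "swap"]
    print(plan_copy(mounts))                               # /export/home and swap are shared
    print(plan_copy(mounts, copy_anyway={"/export/home"})) # /export/home is copied too
```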


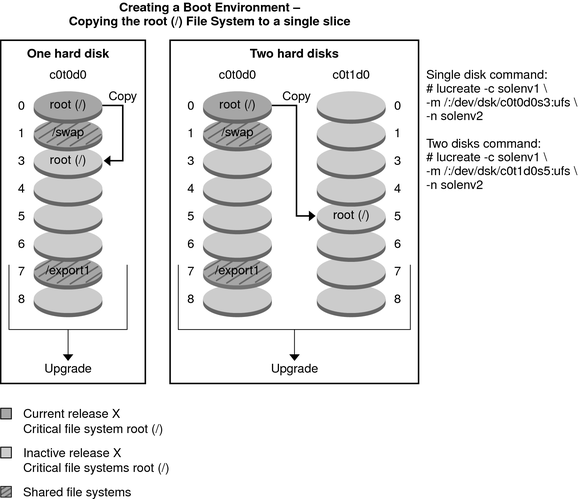

For UFS file systems, the figures in this section illustrate various ways of creating new boot environments.

For ZFS file system information, see Chapter 10, Live Upgrade and ZFS (Overview).

The following figure shows that critical file system root (/) has been copied to another slice on a disk to create a new boot environment. The active boot environment contains the root (/) file system on one slice. The new boot environment is an exact duplicate with the root (/) file system on a new slice. The /swap volume and /export/home file system are shared by the active and inactive boot environments.

Figure 2-2 Creating an Inactive Boot Environment – Copying the root (/) File System

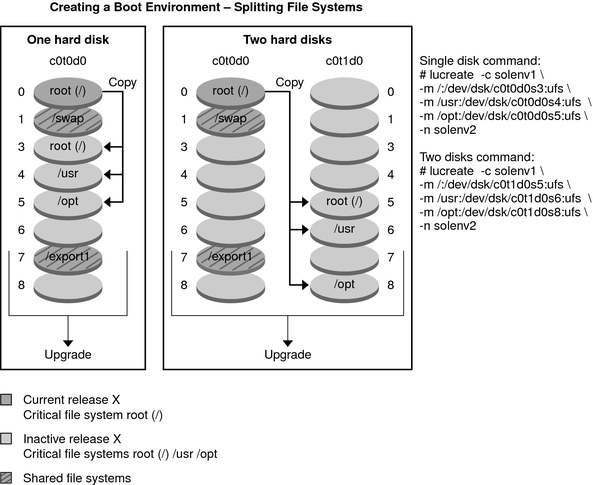

The following figure shows critical file systems that have been split and have been copied to slices on a disk to create a new boot environment. The active boot environment contains the root (/) file system on one slice. On that slice, the root (/) file system contains the /usr, /var, and /opt directories. In the new boot environment, the root (/) file system is split and /usr and /opt are put on separate slices. The /swap volume and /export/home file system are shared by both boot environments.

Figure 2-3 Creating an Inactive Boot Environment – Splitting File Systems

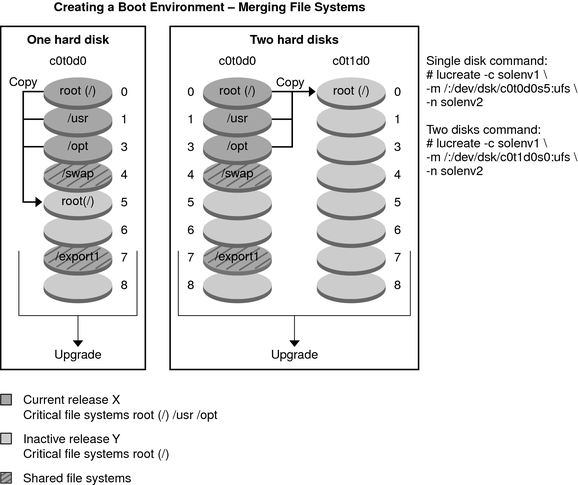

The following figure shows critical file systems that have been merged and have been copied to slices on a disk to create a new boot environment. The active boot environment contains the root (/) file system, /usr, /var, and /opt, each on its own slice. In the new boot environment, /usr and /opt are merged into the root (/) file system on one slice. The /swap volume and /export/home file system are shared by both boot environments.

Figure 2-4 Creating an Inactive Boot Environment – Merging File Systems

Live Upgrade uses Solaris Volume Manager technology to create a boot environment that can contain file systems encapsulated in RAID-1 volumes. Solaris Volume Manager provides a powerful way to reliably manage your disks and data by using volumes. Solaris Volume Manager enables concatenations, stripes, and other complex configurations. Live Upgrade enables a subset of these tasks, such as creating a RAID-1 volume for the root (/) file system.

A volume can group disk slices across several disks to transparently appear as a single disk to the OS. Live Upgrade is limited to creating a boot environment for the root (/) file system that contains single-slice concatenations inside a RAID-1 volume (mirror). This limitation exists because the boot PROM can choose only one slice from which to boot.

When creating a boot environment, you can use Live Upgrade to manage the following tasks.

Detach a single-slice concatenation (submirror) from a RAID-1 volume (mirror). The contents can be preserved to become the content of the new boot environment if necessary. Because the contents are not copied, the new boot environment can be quickly created. After the submirror is detached from the original mirror, the submirror is no longer part of the mirror. Reads and writes on the submirror are no longer performed through the mirror.

Create a boot environment that contains a mirror.

Attach a maximum of three single-slice concatenations to the newly created mirror.

You use the lucreate command with the -m option to create a mirror, detach submirrors, and attach submirrors for the new boot environment.
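The mirror-related -m arguments follow a regular pattern, which the hypothetical helper below assembles. It reuses only the `mountpoint:device:fs_options` forms shown in this section's examples (`mirror,ufs` for the mirror, `slice,concatenation:attach` for each submirror) and enforces the three-submirror limit stated above. It builds strings only; it does not run lucreate or check that the devices exist.

```python
# Hypothetical helper: assemble lucreate -m arguments that create a mirror
# and attach single-slice concatenations to it. Nothing is executed.
MAX_SUBMIRRORS = 3  # Live Upgrade attaches at most three concatenations


def mirror_m_options(mount, mirror, submirrors, fs_type="ufs"):
    """Return ["-m", spec, ...] for `mirror` plus each attached submirror.

    `submirrors` is a list of (slice, concatenation) pairs, for example
    ("/dev/dsk/c0t1d0s0", "/dev/md/dsk/d31").
    """
    if len(submirrors) > MAX_SUBMIRRORS:
        raise ValueError(f"at most {MAX_SUBMIRRORS} submirrors can be attached")
    args = ["-m", f"{mount}:{mirror}:mirror,{fs_type}"]
    for slice_path, concat in submirrors:
        args += ["-m", f"{mount}:{slice_path},{concat}:attach"]
    return args


if __name__ == "__main__":
    argv = ["lucreate", "-n", "second_disk"] + mirror_m_options(
        "/",
        "/dev/md/dsk/d30",
        [("/dev/dsk/c0t1d0s0", "/dev/md/dsk/d31"),
         ("/dev/dsk/c0t2d0s0", "/dev/md/dsk/d32")],
    )
    print(" ".join(argv))
```

For the two-disk case, the printed command reproduces the mirror portion of the Figure 2-5 example; the swap arguments would be appended separately.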

Note - If VxVM volumes are configured on your current system, the lucreate command can create a new boot environment. When the data is copied to the new boot environment, the Veritas file system configuration is lost and a UFS file system is created on the new boot environment.

For more information, see the following resources:

For step-by-step procedures, see How to Create a Boot Environment With RAID-1 Volumes (Mirrors).

For an overview of creating RAID-1 volumes when installing, see Chapter 8, Creating RAID-1 Volumes (Mirrors) During Installation (Overview), in Oracle Solaris 10 1/13 Installation Guide: Planning for Installation and Upgrade.

For in-depth information about other complex Solaris Volume Manager configurations that are not supported if you are using Live Upgrade, see Chapter 2, Storage Management Concepts, in Solaris Volume Manager Administration Guide.

Live Upgrade manages a subset of Solaris Volume Manager tasks. The following table shows the Solaris Volume Manager components that Live Upgrade can manage.

Table 2-1 Classes of Volumes


The examples in this section present command syntax for creating RAID-1 volumes for a new boot environment.

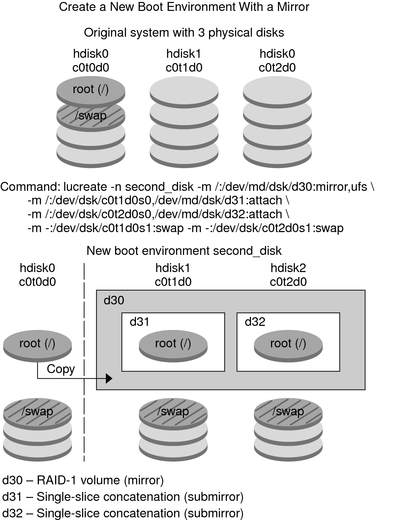

The following figure shows a new boot environment with a RAID-1 volume (mirror) that is created on two physical disks. The following command created the new boot environment and the mirror:

# lucreate -n second_disk -m /:/dev/md/dsk/d30:mirror,ufs \
-m /:/dev/dsk/c0t1d0s0,/dev/md/dsk/d31:attach -m /:/dev/dsk/c0t2d0s0,/dev/md/dsk/d32:attach \
-m -:/dev/dsk/c0t1d0s1:swap -m -:/dev/dsk/c0t2d0s1:swap

This command performs the following tasks:

Creates a new boot environment, second_disk.

Creates a mirror d30 and configures a UFS file system.

Creates a single-device concatenation on slice 0 of each physical disk. The concatenations are named d31 and d32.

Adds the two concatenations to mirror d30.

Copies the root (/) file system to the mirror.

Configures file systems for swap on slice 1 of each physical disk.

Figure 2-5 Create a Boot Environment and Create a Mirror

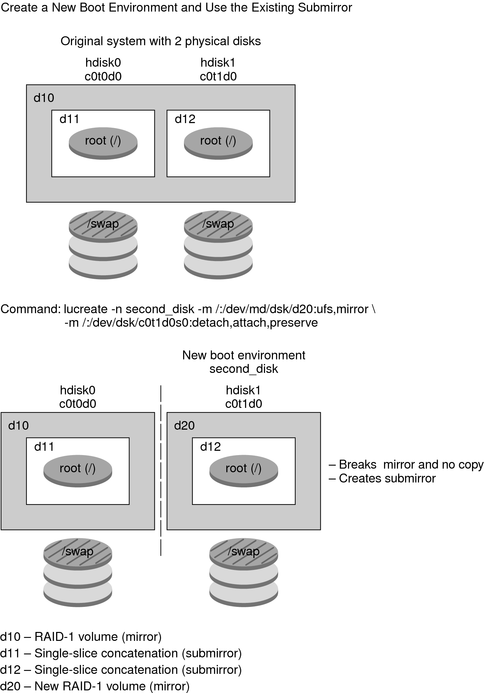

The following figure shows a new boot environment that contains a RAID-1 volume (mirror). The following command created the new boot environment and the mirror:

# lucreate -n second_disk -m /:/dev/md/dsk/d20:ufs,mirror \
-m /:/dev/dsk/c0t1d0s0:detach,attach,preserve

This command performs the following tasks:

Creates a new boot environment, second_disk.

Breaks mirror d10 and detaches concatenation d12.

Preserves the contents of concatenation d12. File systems are not copied.

Creates a new mirror d20, resulting in two one-way mirrors, d10 and d20.

Attaches concatenation d12 to mirror d20.

Figure 2-6 Create a Boot Environment and Use the Existing Submirror
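To see at a glance what each -m value in these examples asks for, the hypothetical parser below splits a value of the form `mountpoint:device[,metadevice]:fs_options` into its parts. It handles only the three-field forms used in this section (an optional trailing zone field, for example, is rejected), and its `copies_data` flag simply encodes the statement above that a `preserve` spec keeps the existing contents instead of copying file systems.

```python
# Hypothetical sketch: parse the three-field lucreate -m values used in this
# section's examples. Not a complete implementation of the -m syntax.
from dataclasses import dataclass


@dataclass
class MSpec:
    mount: str
    devices: list[str]
    options: list[str]

    @property
    def copies_data(self) -> bool:
        # With "preserve", existing contents are kept and nothing is copied.
        return "preserve" not in self.options


def parse_m_spec(value: str) -> MSpec:
    parts = value.split(":")
    if len(parts) != 3:
        raise ValueError(f"expected mountpoint:device:fs_options, got {value!r}")
    mount, devices, options = parts
    return MSpec(mount, devices.split(","), options.split(","))


if __name__ == "__main__":
    for value in ("/:/dev/md/dsk/d20:ufs,mirror",
                  "/:/dev/dsk/c0t1d0s0:detach,attach,preserve"):
        spec = parse_m_spec(value)
        print(spec, "copies data:", spec.copies_data)
```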

After you have created a boot environment, you can perform an upgrade on the boot environment. As part of that upgrade, the boot environment can contain RAID-1 volumes (mirrors) for any file systems, or it can have non-global zones installed. The upgrade does not affect any files in the active boot environment. When you are ready, you activate the new boot environment, which then becomes the current boot environment.

Note - Starting with the Oracle Solaris 10 9/10 release, the upgrade process is affected by Auto Registration. See Auto Registration Impact for Live Upgrade.

For more information, see the following resources:

For procedures about upgrading a boot environment for UFS file systems, see Chapter 5, Upgrading With Live Upgrade (Tasks).

For an example of upgrading a boot environment with a RAID-1 volume file system for UFS file systems, see Example of Detaching and Upgrading One Side of a RAID-1 Volume (Mirror).

For procedures about upgrading with non-global zones for UFS file systems, see Chapter 8, Upgrading the Oracle Solaris OS on a System With Non-Global Zones Installed.

For upgrading ZFS file systems or migrating to a ZFS file system, see Chapter 10, Live Upgrade and ZFS (Overview).

The following figure shows an upgrade to an inactive boot environment.

Figure 2-7 Upgrading an Inactive Boot Environment

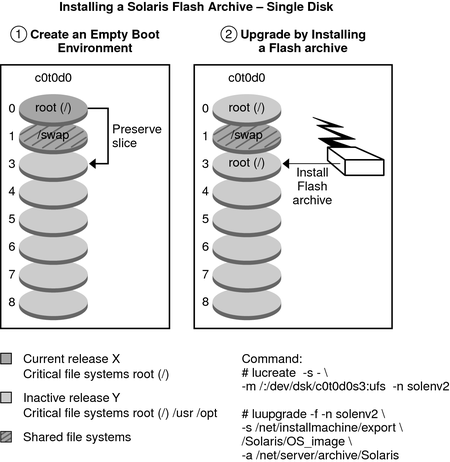

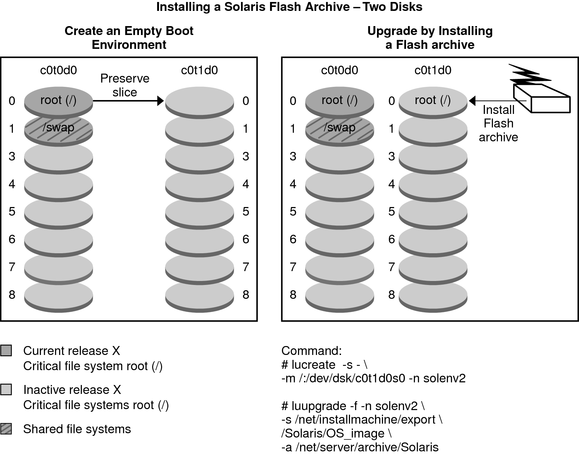

Rather than an upgrade, you can install a Flash Archive on a boot environment. The Flash Archive installation feature enables you to create a single reference installation of the Oracle Solaris OS on a system. This system is called the master system. Then, you can replicate that installation on a number of systems that are called clone systems. In this situation, the inactive boot environment is a clone. When you install the flash archive on a system, the archive replaces all the files on the existing boot environment as an initial installation would.

For procedures about installing a flash archive, see Installing Flash Archives on a Boot Environment.

The following figures show an installation of a flash archive on an inactive boot environment. Figure 2-8 shows a system with a single hard disk. Figure 2-9 shows a system with two hard disks.

Figure 2-8 Installing a Flash Archive on a Single Disk

Figure 2-9 Installing a Flash Archive on Two Disks

Starting with the Oracle Solaris 10 9/10 release, the upgrade process is affected by Auto Registration.

When you install or upgrade a system, configuration data about that system is, on rebooting, automatically communicated through the existing service tag technology to the Oracle Product Registration System. This service tag data about your system is used, for example, to help Oracle enhance customer support and services. You can use this same configuration data to create and manage your own inventory of your systems.

Auto Registration does not change Live Upgrade procedures unless you are specifically upgrading a system from a prior release to the Oracle Solaris 10 9/10 release or a later release.

Auto Registration does not change any of the following Live Upgrade procedures:

Installing a flash archive

Adding or removing patches or packages

Testing a profile

Checking package integrity

When, and only when, you are upgrading a system from a prior release to the Oracle Solaris 10 9/10 release or to a later release, you must create an Auto Registration configuration file. Then, when you upgrade that system, you must use the -k option in the luupgrade -u command and point to this configuration file.
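The -k requirement can be captured as a small guard. This is a hypothetical sketch: `-n` (boot environment name) and `-s` (OS image path) are, to our knowledge, the usual luupgrade -u arguments, but confirm them in luupgrade(1M); the boot environment name, image path, and configuration file path in the usage line are placeholders. The function only builds the argument list.

```python
# Hypothetical sketch: build (not run) a luupgrade -u argument list and
# require -k when upgrading from a release earlier than Oracle Solaris 10 9/10.
def luupgrade_argv(be_name, os_image, regfile=None, from_pre_9_10=False):
    if from_pre_9_10 and regfile is None:
        raise ValueError("upgrades from a release before 10 9/10 need -k <config file>")
    argv = ["luupgrade", "-u", "-n", be_name, "-s", os_image]
    if regfile is not None:
        argv += ["-k", regfile]  # points to the Auto Registration configuration file
    return argv


if __name__ == "__main__":
    # Placeholder BE name and paths, for illustration only.
    print(" ".join(luupgrade_argv("new_be", "/net/installserver/s10image",
                                  "/var/tmp/reg.txt", from_pre_9_10=True)))
```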

When, and only when, you are upgrading a system from a prior release to the Oracle Solaris 10 9/10 release or to a later release, use this procedure to provide required Auto Registration information during the upgrade.

The Auto Registration configuration file should be formatted as a list of keyword-value pairs. Include the following keywords and values, in this format, in the file:

http_proxy=Proxy-Server-Host-Name
http_proxy_port=Proxy-Server-Port-Number
http_proxy_user=HTTP-Proxy-User-Name
http_proxy_pw=HTTP-Proxy-Password
oracle_user=My-Oracle-Support-User-Name
oracle_pw=My-Oracle-Support-Password

Note the following formatting rules:

The passwords must be in plain, not encrypted, text.

Keyword order does not matter.

Keywords can be entirely omitted if you do not want to specify a value. Or, you can retain the keyword and leave its value blank.

Note - If you omit the support credentials, the registration will be anonymous.

Spaces in the configuration file do not matter, unless the value you want to enter should contain a space. Only http_proxy_user and http_proxy_pw values can contain a space within the value.

The oracle_pw value must not contain a space.

The following example shows a sample file.

http_proxy= webcache.central.example.COM
http_proxy_port=8080
http_proxy_user=webuser
http_proxy_pw=secret1
oracle_user=joe.smith@example.com
oracle_pw=csdfl2442IJS
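The formatting rules above are mechanical enough to check before you run the upgrade. The validator below is a hypothetical local sanity check written from those rules, not Oracle's parser: it assumes one keyword=value pair per line, strips surrounding spaces, allows interior spaces only in `http_proxy_user` and `http_proxy_pw`, and accepts omitted or blank keywords. Treating missing or blank My Oracle Support credentials as anonymous registration is our reading of the note above.

```python
# Hypothetical validator for an Auto Registration configuration file.
# Assumes one keyword=value pair per line; not Oracle's own parser.
KNOWN_KEYS = {
    "http_proxy", "http_proxy_port", "http_proxy_user", "http_proxy_pw",
    "oracle_user", "oracle_pw",
    "autoreg",  # the autoreg=disable pair shown in this section
}
SPACES_ALLOWED = {"http_proxy_user", "http_proxy_pw"}  # oracle_pw is not here


def parse_autoreg(text):
    """Return a dict of keyword -> value; raise ValueError on rule violations."""
    config = {}
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line:
            continue
        key, sep, value = line.partition("=")
        key, value = key.strip(), value.strip()  # surrounding spaces do not matter
        if not sep or key not in KNOWN_KEYS:
            raise ValueError(f"line {lineno}: expected a known keyword=value pair")
        if " " in value and key not in SPACES_ALLOWED:
            raise ValueError(f"line {lineno}: the {key} value must not contain a space")
        config[key] = value  # keyword order does not matter; blank values are allowed
    return config


def is_anonymous(config):
    # Without My Oracle Support credentials, registration is anonymous.
    return not (config.get("oracle_user") and config.get("oracle_pw"))
```

A dictionary is a natural fit here because the rules say keyword order does not matter; a later duplicate keyword simply overwrites an earlier one in this sketch.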

To disable Auto Registration, include the following keyword-value pair in the configuration file:

autoreg=disable

# /opt/ocm/ccr/bin/emCCR status

Oracle Configuration Manager - Release: 10.3.6.0.1 - Production

Copyright (c) 2005, 2011, Oracle and/or its affiliates. All rights reserved.

------------------------------------------------------------------

Log Directory /opt/ocm/config_home/ccr/log

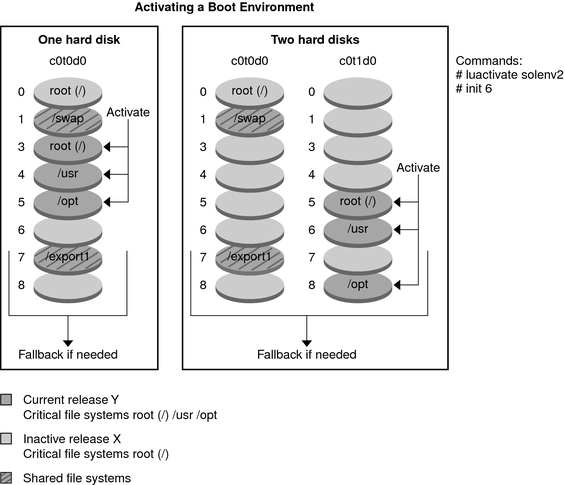

Collector Mode Disconnected

When you are ready to switch and make the new boot environment active, you can easily activate the new boot environment and reboot. Files are synchronized between boot environments the first time that you boot a newly created boot environment. "Synchronize" means that certain system files and directories are copied from the last-active boot environment to the boot environment being booted. When you reboot the system, the configuration that you installed on the new boot environment is active. The original boot environment then becomes an inactive boot environment.

For procedures about activating a boot environment, see Activating a Boot Environment. For information about synchronizing the active and inactive boot environment, see Synchronizing Files Between Boot Environments.

The following figure shows a switch after a reboot from an inactive to an active boot environment.

Figure 2-10 Activating an Inactive Boot Environment

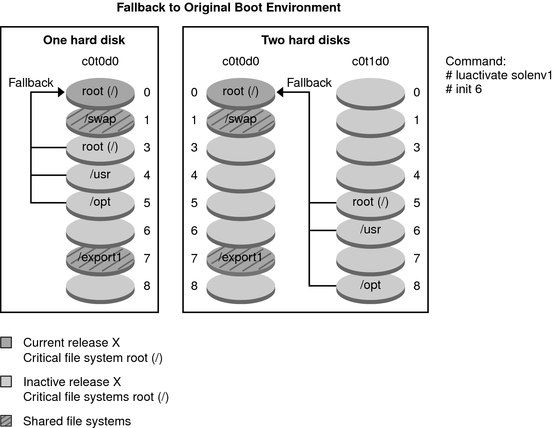

If a failure occurs, you can quickly fall back to the original boot environment with an activation and reboot. Fallback takes only as long as a system reboot, which is much quicker than backing up and restoring the original boot environment. The new boot environment that failed to boot is preserved so that the failure can be analyzed. You can fall back only to the boot environment that was used by luactivate to activate the new boot environment.
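The activation and fallback rules in this section can be summarized as a small state model. The class and method names below are hypothetical and are not Live Upgrade commands: `activate` stands in for luactivate, `reboot` records a synchronization only on the first boot of a boot environment, and `fall_back` accepts only the boot environment that activation was run from. The failed boot environment stays in the model, matching the statement that it is preserved for analysis.

```python
# Hypothetical toy model of activation, first-boot synchronization, and
# fallback. Names are illustrative; nothing here calls Live Upgrade.
class BootEnvironments:
    def __init__(self, active):
        self.environments = {active}
        self.active = active
        self.pending = None         # BE selected to boot at the next reboot
        self.activated_from = None  # BE that was active when activation ran
        self.booted = {active}      # BEs that have booted at least once
        self.synced = []            # (from_be, to_be) synchronizations

    def create(self, name):
        self.environments.add(name)

    def activate(self, name):
        # Stand-in for luactivate: remember which BE the activation ran from.
        if name not in self.environments:
            raise KeyError(name)
        self.pending = name
        self.activated_from = self.active

    def reboot(self):
        if self.pending is None:
            return
        previous, self.active, self.pending = self.active, self.pending, None
        if self.active not in self.booted:
            # First boot of this BE: files are copied from the last-active BE.
            self.synced.append((previous, self.active))
            self.booted.add(self.active)

    def fall_back(self, name):
        # Fallback is an activation and reboot, allowed only to the BE
        # that the previous activation was run from.
        if name != self.activated_from:
            raise ValueError(f"can fall back only to {self.activated_from!r}")
        self.activate(name)
        self.reboot()  # the failed BE remains in self.environments


if __name__ == "__main__":
    be = BootEnvironments("first_disk")
    be.create("second_disk")
    be.activate("second_disk")
    be.reboot()
    be.fall_back("first_disk")
    print(be.active, be.synced)
```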

The following table describes the ways you can fall back to the previous boot environment.


For procedures to fall back, see Chapter 6, Failure Recovery: Falling Back to the Original Boot Environment (Tasks).

The following figure shows the switch that is made when you reboot to fall back.

Figure 2-11 Fallback to the Original Boot Environment

You can also do various maintenance activities such as checking status, renaming, or deleting a boot environment. For maintenance procedures, see Chapter 7, Maintaining Live Upgrade Boot Environments (Tasks).