Oracle Solaris 10 1/13 Installation Guide: Live Upgrade and Upgrade Planning Oracle Solaris 10 1/13 Information Library |

Creating a New Boot Environment From a ZFS Root Pool

You can create a new ZFS boot environment either within the same root pool or on a new root pool. This section contains an overview of each case.

When you create a new boot environment within the same ZFS root pool, the lucreate command creates a snapshot of the source boot environment and then makes a clone from that snapshot. Creating the snapshot and clone is almost instantaneous, and the disk space used initially is minimal. The amount of space ultimately required depends on how many files are replaced as part of the upgrade process. The snapshot is read-only, but the clone is a read-write copy of the snapshot. Changes made to the clone boot environment are not reflected in either the snapshot or the source boot environment from which the snapshot was made.

Note - As data within the active dataset changes, the snapshot consumes space by continuing to reference the old data. As a result, the snapshot prevents the data from being freed back to the pool. For more information about snapshots, see Chapter 6, Working With Oracle Solaris ZFS Snapshots and Clones, in Oracle Solaris ZFS Administration Guide.

When the current boot environment resides on the same ZFS pool, the -p option is omitted.
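The rule for the -p option can be sketched as a small dry-run helper. This is only an illustration: build_lucreate is a hypothetical function name, not part of Live Upgrade, and it prints the command line instead of running it. The pool and boot environment names are the ones used in this section.

```shell
#!/bin/sh
# Dry-run sketch: print the lucreate command line, do not execute it.
# build_lucreate is a hypothetical helper, not a Live Upgrade command.
build_lucreate() {
    new_be=$1      # name for the new boot environment (-n)
    src_pool=$2    # pool that holds the current boot environment
    dst_pool=$3    # pool that should hold the new boot environment
    if [ "$src_pool" = "$dst_pool" ]; then
        # Same root pool: the -p option is omitted.
        echo "lucreate -n $new_be"
    else
        # Different root pool: -p names the target pool.
        echo "lucreate -n $new_be -p $dst_pool"
    fi
}

build_lucreate new-zfsBE rpool rpool
build_lucreate new-zfsBE rpool rpool2
```

The first call prints a command without -p, the second a command with -p rpool2.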

The following figure shows the creation of a ZFS boot environment from a ZFS root pool. The slice c0t0d0s0 contains the ZFS root pool, rpool. In the lucreate command, the -n option assigns the name new-zfsBE to the boot environment to be created. A snapshot of the original root pool, rpool@new-zfsBE, is created. The snapshot is used to make the clone that becomes the new boot environment, new-zfsBE. The boot environment, new-zfsBE, is then ready to be upgraded and activated.

Figure 10-3 Creating a New Boot Environment on the Same Root Pool

Example 10-3 Creating a Boot Environment Within the Same ZFS Root Pool

This example shows the same command as in the figure. It creates a new boot environment in the same root pool. In the lucreate command, the -c zfsBE option names the currently running boot environment, and the -n new-zfsBE option names the new boot environment. The zfs list command shows the ZFS datasets, including the new boot environment.

    # lucreate -c zfsBE -n new-zfsBE
    # zfs list
    NAME                   USED  AVAIL  REFER  MOUNTPOINT
    rpool                 11.4G  2.95G    31K  /rpool
    rpool/ROOT            4.34G  2.95G    31K  legacy
    rpool/ROOT/new-zfsBE  4.34G  2.95G  4.34G  /
    rpool/dump            2.06G  5.02G    16K  -
    rpool/swap            5.04G  7.99G    16K  -
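The check that Example 10-3 performs by eye (is there a dataset for the new boot environment under rpool/ROOT?) could be scripted roughly as follows. This is a sketch under stated assumptions: be_in_listing is a hypothetical helper, the sample text is copied from the zfs list output in the example, and a POSIX awk is assumed (on Oracle Solaris 10 that would typically be nawk or /usr/xpg4/bin/awk rather than /usr/bin/awk). On a live system the helper would read from `zfs list` instead of the sample.

```shell
#!/bin/sh
# be_in_listing is a hypothetical helper. It reads `zfs list` output on
# standard input and succeeds if <pool>/ROOT/<be> appears in the NAME column.
be_in_listing() {
    pool=$1
    be=$2
    awk -v want="$pool/ROOT/$be" '$1 == want { found = 1 } END { exit !found }'
}

# Sample listing taken from Example 10-3.
sample='NAME                   USED  AVAIL  REFER  MOUNTPOINT
rpool                 11.4G  2.95G    31K  /rpool
rpool/ROOT            4.34G  2.95G    31K  legacy
rpool/ROOT/new-zfsBE  4.34G  2.95G  4.34G  /
rpool/dump            2.06G  5.02G    16K  -
rpool/swap            5.04G  7.99G    16K  -'

if printf '%s\n' "$sample" | be_in_listing rpool new-zfsBE; then
    echo "new-zfsBE dataset is present"
fi
```

Matching on the whole first field, rather than searching for a substring, avoids false positives from similarly named datasets.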

You can use the lucreate command to copy an existing ZFS root pool into another ZFS root pool. The copy process might take some time depending on your system.

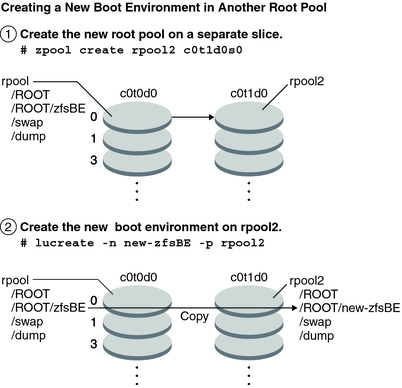

The following figure shows the zpool command that creates a ZFS root pool, rpool2, on c0t1d0s5 because a bootable ZFS root pool does not yet exist. The lucreate command with the -n option assigns the name to the boot environment to be created, new-zfsBE. The -p option specifies where to place the new boot environment.

Figure 10-4 Creating a New Boot Environment on Another Root Pool

Example 10-4 Creating a Boot Environment on a Different ZFS Root Pool

This example shows the same commands as in the figure, which create a new root pool and then a new boot environment in the newly created root pool. In this example, the zpool create command creates rpool2. The zfs list command shows that no ZFS datasets have been created in rpool2 yet. The datasets are created by the lucreate command.

    # zpool create rpool2 c0t2d0s5
    # zfs list
    NAME                   USED  AVAIL  REFER  MOUNTPOINT
    rpool                 11.4G  2.95G    31K  /rpool
    rpool/ROOT            4.34G  2.95G    31K  legacy
    rpool/ROOT/new-zfsBE  4.34G  2.95G  4.34G  /
    rpool/dump            2.06G  5.02G    16K  -
    rpool/swap            5.04G  7.99G    16K  -

The new ZFS root pool, rpool2, is created on disk slice c0t2d0s5.

    # lucreate -n new-zfsBE -p rpool2
    # zfs list
    NAME                   USED  AVAIL  REFER  MOUNTPOINT
    rpool                 11.4G  2.95G    31K  /rpool
    rpool/ROOT            4.34G  2.95G    31K  legacy
    rpool/ROOT/new-zfsBE  4.34G  2.95G  4.34G  /
    rpool/dump            2.06G  5.02G    16K  -
    rpool/swap            5.04G  7.99G    16K  -

The new boot environment, new-zfsBE, is created on rpool2 along with the other datasets, ROOT, dump and swap. The boot environment, new-zfsBE, is ready to be upgraded and activated.
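The order of operations in Example 10-4 (create the target pool only if it does not exist, then run lucreate with -p) might be planned with a dry-run script like the one below. It is a sketch, not an Oracle-provided procedure: pool_listed and plan_new_pool_be are hypothetical helper names, the script only prints commands, and it expects pool names on standard input in the one-per-line form that `zpool list -H -o name` produces.

```shell
#!/bin/sh
# pool_listed (hypothetical): reads pool names, one per line, from standard
# input and succeeds if the name given as $1 is among them.
pool_listed() {
    want=$1
    while read name; do
        [ "$name" = "$want" ] && return 0
    done
    return 1
}

# plan_new_pool_be (hypothetical): prints the commands to run, in order.
# Nothing is executed. Existing pool names are read from standard input.
plan_new_pool_be() {
    new_be=$1    # name for the new boot environment
    pool=$2      # target root pool
    slice=$3     # disk slice for the pool if it must be created
    if pool_listed "$pool"; then
        echo "lucreate -n $new_be -p $pool"
    else
        echo "zpool create $pool $slice"
        echo "lucreate -n $new_be -p $pool"
    fi
}

# Only rpool exists, so the plan starts with zpool create.
printf 'rpool\n' | plan_new_pool_be new-zfsBE rpool2 c0t2d0s5
```

On a real system the input would come from `zpool list -H -o name`, and the printed commands would be reviewed before being run as root.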