11 Configuring Server Migration for an Enterprise Deployment

This chapter describes the procedures for configuring server migration for the Oracle Business Intelligence enterprise deployment.

Important:

Oracle strongly recommends that you read the Oracle Fusion Middleware Release Notes for any additional installation and deployment considerations before starting the setup process.

This chapter contains the following topics:

-

Section 11.1, "Overview of Server Migration for an Enterprise Deployment"

-

Section 11.2, "Setting Up a User and Tablespace for the Server Migration Leasing Table"

-

Section 11.3, "Creating a Multi-Data Source Using the Administration Console"

-

Section 11.4, "Enabling Host Name Verification Certificates"

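-

Section 11.5, "Editing the Node Manager Properties File"

-

Section 11.6, "Setting Environment and Superuser Privileges for the wlsifconfig.sh Script"

-

Section 11.7, "Configuring Server Migration Targets"

-

Section 11.8, "Testing the Server Migration"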

11.1 Overview of Server Migration for an Enterprise Deployment

Configure server migration for the bi_server1 and bi_server2 Managed Servers. With server migration configured, if a failure occurs, the bi_server1 Managed Server restarts on APPHOST2, and the bi_server2 Managed Server restarts on APPHOST1. For this configuration, the bi_server1 and bi_server2 servers listen on specific floating IPs that are failed over by WebLogic Server migration.

Perform the steps in the following sections to configure server migration for the Managed Servers.

11.2 Setting Up a User and Tablespace for the Server Migration Leasing Table

Set up a user and tablespace for the server migration leasing table using the create tablespace leasing command.

Perform the following steps to set up a user and tablespace for the server migration leasing table:

-

Create a tablespace called leasing. For example, log on to SQL*Plus as the sysdba user and run the following command:

SQL> create tablespace leasing logging datafile 'DB_HOME/oradata/orcl/leasing.dbf' size 32m autoextend on next 32m maxsize 2048m extent management local;

-

Create a user named leasing and assign to it the leasing tablespace:

SQL> create user leasing identified by password;
SQL> grant create table to leasing;
SQL> grant create session to leasing;
SQL> alter user leasing default tablespace leasing;
SQL> alter user leasing quota unlimited on LEASING;

In this example, password is the password for the leasing user.

-

Create the leasing table by running the leasing.ddl script, as follows:

-

Copy the leasing.ddl file located in either of the following directories to the database node:

WL_HOME/server/db/oracle/817
WL_HOME/server/db/oracle/920

-

Connect to the database as the leasing user.

-

Run the leasing.ddl script in SQL*Plus:

SQL> @copy_location/leasing.ddl;
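If you prefer to script the tablespace and user setup, the statements in this section can be assembled from a few parameters. The following is a minimal Python sketch; the function name build_leasing_setup_sql is hypothetical, it only builds strings (it does not connect to the database), and the datafile path and password are placeholders that you must replace. Run the resulting statements in SQL*Plus as sysdba, then run leasing.ddl as the leasing user as described above.

```python
def build_leasing_setup_sql(datafile: str, password: str,
                            user: str = "leasing",
                            tablespace: str = "leasing") -> list:
    """Return the SQL*Plus statements from this section, in order.

    Builds strings only; nothing is sent to a database.
    """
    return [
        # Run as sysdba: tablespace that will hold the leasing table.
        f"create tablespace {tablespace} logging datafile '{datafile}' "
        "size 32m autoextend on next 32m maxsize 2048m "
        "extent management local;",
        # Dedicated schema owner for the leasing table.
        f"create user {user} identified by {password};",
        f"grant create table to {user};",
        f"grant create session to {user};",
        f"alter user {user} default tablespace {tablespace};",
        f"alter user {user} quota unlimited on {tablespace};",
    ]


if __name__ == "__main__":
    # Placeholder values; replace with your DB_HOME path and a real password.
    for stmt in build_leasing_setup_sql("DB_HOME/oradata/orcl/leasing.dbf", "password"):
        print(stmt)
```

Avoid keeping a real password in a script file; supply it at run time instead.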


11.3 Creating a Multi-Data Source Using the Administration Console

Create a multi-data source for the leasing table from the Oracle WebLogic Server Administration Console.

While setting up the leasing multi-data source, you also create a data source for each Oracle RAC database instance. When you create each data source:

-

Ensure that it is a non-XA data source.

-

Name the data sources in the format <MultiDS>-rac0, <MultiDS>-rac1, and so on.

-

Use Oracle's Driver (Thin), Version 9.0.1, 9.2.0, 10, or 11.

-

Data sources do not require support for global transactions. Therefore, do not use any type of distributed transaction emulation/participation algorithm for the data source (do not choose the Supports Global Transactions option, or the Logging Last Resource, Emulate Two-Phase Commit, or One-Phase Commit suboptions), and specify a service name for the database.

-

Target these data sources to the bi_cluster.

-

Ensure that the initial connection pool capacity of the data sources is set to 0 (zero). To do this, expand Services and select Data Sources. In the Data Sources list, click the name of the data source, click the Connection Pool tab, and enter 0 (zero) in the Initial Capacity field.

For additional recommendations for setting up a multi-data source for Oracle RAC, see "Considerations for High Availability Oracle Database Access" in Oracle Fusion Middleware High Availability Guide.
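The requirements in the preceding list can be expressed as a checklist. The following Python sketch is illustrative only: DataSourceSettings and check_member_data_source are hypothetical names that model the Console fields described above; they are not a WebLogic API and read no WebLogic configuration.

```python
import re
from dataclasses import dataclass


@dataclass
class DataSourceSettings:
    """Hypothetical record of the Console settings for one member data source."""
    name: str
    multi_ds: str                      # name of the multi-data source, e.g. "leasing"
    xa: bool                           # True if an XA driver was selected
    supports_global_transactions: bool
    targets: tuple                     # e.g. ("bi_cluster",)
    initial_capacity: int              # Connection Pool > Initial Capacity


def check_member_data_source(ds: DataSourceSettings, cluster: str = "bi_cluster") -> list:
    """Return the requirements from this section that ds violates (empty if none)."""
    problems = []
    if ds.xa:
        problems.append("must use a non-XA driver")
    if ds.supports_global_transactions:
        problems.append("Supports Global Transactions must be deselected")
    if not re.fullmatch(re.escape(ds.multi_ds) + r"-rac\d+", ds.name):
        problems.append(f"name must follow {ds.multi_ds}-rac0, {ds.multi_ds}-rac1, ...")
    if cluster not in ds.targets:
        problems.append(f"must be targeted to {cluster}")
    if ds.initial_capacity != 0:
        problems.append("Initial Capacity must be 0")
    return problems


# Usage: a data source configured as described above passes every check.
ok = DataSourceSettings("leasing-rac0", "leasing", False, False, ("bi_cluster",), 0)
print(check_member_data_source(ok))   # prints []
```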

Perform the following steps to create a multi-data source:

-

In the Domain Structure window in the Administration Console, expand the Services node, then click Data Sources. The Summary of JDBC Data Sources page is displayed.

-

In the Change Center, click Lock & Edit.

-

Click New, then select Multi Data Source. The Create a New JDBC Multi Data Source page is displayed.

-

For Name, enter leasing.

-

For JNDI Name, enter jdbc/leasing.

-

For Algorithm Type, select Failover (the default).

-

Click Next.

-

On the Select Targets page, select bi_cluster as the target.

-

Click Next.

-

On the Select Data Source Type page, select non-XA driver (the default).

-

Click Next.

-

Click Create a New Data Source.

-

For Name, enter leasing-rac0. For JNDI Name, enter jdbc/leasing-rac0. For Database Type, select Oracle.

Note:

When creating the data sources for the leasing multi-data source, enter names in the format <MultiDS>-rac0, <MultiDS>-rac1, and so on.

-

Click Next.

-

For Database Driver, select Oracle's Driver (Thin) for RAC Service-Instance connections; Versions:10 and later.

-

Click Next.

-

Deselect Supports Global Transactions.

-

Click Next.

-

Enter the leasing schema details, as follows:

-

Service Name: Enter the service name of the database.

-

Database name: Enter the Instance Name for the first instance of the Oracle RAC database.

-

Host Name: Enter the name of the node that is running the database. For the Oracle RAC database, specify the first instance's VIP name or the node name as the host name.

-

Port: Enter the port number for the database (1521).

-

Database User Name: Enter leasing.

-

Password: Enter the leasing password.

-


Click Next.

-

Click Test Configuration and verify that the connection works.

-

Click Next.

-

On the Select Targets page, select bi_cluster as the target.

-

Click Finish.

-

Click Create a New Data Source and repeat the preceding steps for the second instance of the Oracle RAC database (leasing-rac1), targeting it to bi_cluster.

-

On the Add Data Sources page, add leasing-rac0 and leasing-rac1 to the multi-data source by moving them to the Chosen list.

-

Click Finish.

-

Click Activate Changes.
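After these steps, the leasing multi-data source contains one member data source per Oracle RAC instance. The following Python sketch derives the names, JNDI names, and JDBC URLs for those members. It assumes the URL takes the Oracle Net service-instance descriptor form (SERVICE_NAME plus INSTANCE_NAME); compare it with the URL that the Console shows for Oracle's Driver (Thin) for RAC Service-Instance connections before relying on it. RacInstance and member_data_sources are hypothetical names, and the host, service, and instance names in the usage lines are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RacInstance:
    host: str            # VIP or node name for this instance
    instance_name: str   # value entered as "Database name" in the Console
    port: int = 1521


def member_data_sources(multi_ds: str, service_name: str, instances: list) -> list:
    """Describe one member data source per RAC instance, in order.

    Names follow <MultiDS>-rac0, <MultiDS>-rac1, ...; the JDBC URL is an
    Oracle Net descriptor that pins the connection to one service instance.
    """
    members = []
    for i, inst in enumerate(instances):
        name = f"{multi_ds}-rac{i}"
        url = (
            "jdbc:oracle:thin:@(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)"
            f"(HOST={inst.host})(PORT={inst.port})))"
            f"(CONNECT_DATA=(SERVICE_NAME={service_name})"
            f"(INSTANCE_NAME={inst.instance_name})))"
        )
        members.append({"name": name, "jndi_name": f"jdbc/{name}",
                        "url": url, "user": "leasing"})
    return members


# Usage with placeholder host, service, and instance names:
for m in member_data_sources("leasing", "biedg.example.com",
                             [RacInstance("dbhost1-vip", "orcl1"),
                              RacInstance("dbhost2-vip", "orcl2")]):
    print(m["name"], m["jndi_name"], m["url"])
```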

11.4 Enabling Host Name Verification Certificates

Create the appropriate certificates for host name verification between Node Manager and the Administration Server. This procedure is described in Section 10.3, "Enabling Host Name Verification Certificates for Node Manager." If you have not yet created these certificates, perform the steps in Section 10.3 before continuing.

11.5 Editing the Node Manager Properties File

Edit the Node Manager properties file for the Node Managers in both nodes on which server migration is being configured. The nodemanager.properties file is located in the following directory:

WL_HOME/common/nodemanager

Add the following properties to enable server migration to work properly:

Interface=eth0
NetMask=255.255.255.0
UseMACBroadcast=true

-

Interface: This property specifies the interface name for the floating IP (for example, eth0).

Do not specify a sub-interface such as eth0:1 or eth0:2; specify the interface name without the :0 or :1 suffix. Node Manager scripts traverse the different :X-enabled IPs to determine which to add or remove. For example, the valid values in Linux environments are eth0, eth1, eth2, eth3, ethn, depending on the number of interfaces configured.

-

NetMask: This property specifies the net mask for the interface for the floating IP. The net mask is the same as the net mask on the interface; 255.255.255.0 is used as an example in this document.

-

UseMACBroadcast: This property specifies whether to use a node's MAC address when sending ARP packets, or in other words, whether to use the -b flag in the arping command.

Verify in the Node Manager output (the shell where Node Manager is started) that these properties are in use. Otherwise, problems might occur during migration. The output is similar to the following:

... StateCheckInterval=500 Interface=eth0 NetMask=255.255.255.0 ...
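Before starting Node Manager, you can check the three properties against the rules above. The following minimal Python sketch parses simple key=value lines (it does not implement the full Java .properties syntax, such as line continuations) and reports a sub-interface, an invalid net mask, or a missing UseMACBroadcast value. The function names are hypothetical; Node Manager remains the authority, so still verify its startup output as described above.

```python
import ipaddress


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and # or ! comments.

    Simplification: ignores line continuations and escapes allowed in Java
    .properties files.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_migration_properties(props: dict) -> list:
    """Return problems with Interface, NetMask, and UseMACBroadcast (empty if none)."""
    problems = []
    iface = props.get("Interface")
    if not iface:
        problems.append("Interface is not set")
    elif ":" in iface:
        problems.append(f"Interface {iface!r} is a sub-interface; use e.g. eth0")
    mask = props.get("NetMask")
    try:
        # ValueError unless mask is a valid dotted netmask (or hostmask).
        ipaddress.IPv4Network(f"0.0.0.0/{mask}")
    except ValueError:
        problems.append(f"NetMask {mask!r} is not a valid IPv4 net mask")
    if props.get("UseMACBroadcast", "").lower() not in ("true", "false"):
        problems.append("UseMACBroadcast should be true or false")
    return problems


# Usage with the example values from this section:
nm = parse_properties("Interface=eth0\nNetMask=255.255.255.0\nUseMACBroadcast=true\n")
print(check_migration_properties(nm))   # prints []
```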

Note:

The following steps are not required if the server properties (start properties) have been properly set and Node Manager can start the servers remotely.

-

Set the following property in the nodemanager.properties file:

-

StartScriptEnabled: Set this property to "true". This setting is required for Node Manager to start the Managed Servers using start scripts.

-


Start Node Manager on APPHOST1 and APPHOST2 by running the startNodeManager.sh script, which is located in the WL_HOME/server/bin directory.

Note:

When running Node Manager from a shared storage installation, multiple nodes are started using the same nodemanager.properties file. However, each node might require different NetMask or Interface properties. In this case, specify individual parameters on a per-node basis using environment variables. For example, to use a different interface (eth3) in HOSTn, use the Interface environment variable as follows:

HOSTn> export JAVA_OPTIONS=-DInterface=eth3

Then, start Node Manager after the variable has been set in the shell.
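The following Python sketch illustrates the per-node override described in the note: a value passed as -DInterface=... in JAVA_OPTIONS replaces the value from the shared nodemanager.properties file. It assumes the same precedence applies to NetMask, which the note names alongside Interface; effective_settings is a hypothetical helper for reasoning about a node's configuration, not part of Node Manager.

```python
import shlex


def effective_settings(file_props: dict, java_options: str,
                       keys=("Interface", "NetMask")) -> dict:
    """Overlay -Dkey=value entries from JAVA_OPTIONS onto shared file values.

    Assumption: for the listed keys, a -D system property passed in
    JAVA_OPTIONS takes precedence over the shared nodemanager.properties value.
    """
    result = {k: file_props[k] for k in keys if k in file_props}
    for token in shlex.split(java_options):
        if token.startswith("-D"):
            key, sep, value = token[2:].partition("=")
            if sep and key in keys:
                result[key] = value   # per-node override wins
    return result


# Usage: HOSTn exports JAVA_OPTIONS=-DInterface=eth3 before starting Node Manager.
shared = {"Interface": "eth0", "NetMask": "255.255.255.0"}
print(effective_settings(shared, "-DInterface=eth3"))
# prints {'Interface': 'eth3', 'NetMask': '255.255.255.0'}
```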

11.6 Setting Environment and Superuser Privileges for the wlsifconfig.sh Script

Perform the following steps to set the environment and superuser privileges for the wlsifconfig.sh script:

-

Ensure that the PATH environment variable includes the directories that contain the files described in Table 11-1.

-

Configure sudo privileges for the wlsifconfig.sh script.

-

Configure sudo to work without a password prompt.

-

For security reasons, sudo must be restricted to the subset of commands that are required to run the wlsifconfig.sh script. For example, perform these steps to set the environment and superuser privileges for the wlsifconfig.sh script:

-

Grant sudo privilege to the WebLogic user ("oracle") with no password restriction, and grant execute privilege on the /sbin/ifconfig and /sbin/arping binaries.

-

Ensure that the script is executable by the WebLogic user ("oracle"). The following is an example of an entry inside /etc/sudoers that grants the oracle user sudo execution privilege over ifconfig and arping:

oracle ALL=NOPASSWD: /sbin/ifconfig,/sbin/arping


Note:

Ask the system administrator for the sudo and system rights as appropriate to this step.
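The PATH and sudo requirements can be checked before you test migration. In the following Python sketch, missing_from_path uses shutil.which to report which of the given file names are not found as executable files on PATH (pass the names listed in Table 11-1; wlsifconfig.sh is one of them), and sudoers_entry_is_restricted checks a single /etc/sudoers line of the simple form shown above. Both function names are hypothetical, and the sudoers check does not implement the full sudoers grammar; use visudo to edit and validate the actual file.

```python
import os
import shutil


def missing_from_path(filenames, path=None) -> list:
    """Return the names that shutil.which cannot find as executables on PATH."""
    search = os.environ.get("PATH", "") if path is None else path
    return [name for name in filenames if shutil.which(name, path=search) is None]


def sudoers_entry_is_restricted(entry: str, user: str = "oracle",
                                allowed=("/sbin/ifconfig", "/sbin/arping")) -> bool:
    """True if entry grants user passwordless sudo on exactly the allowed commands.

    Handles only the simple 'user ALL=NOPASSWD: cmd1,cmd2' form shown in this
    section, not the full sudoers grammar.
    """
    who, _, rest = entry.strip().partition(" ")
    hosts, sep, spec = rest.partition("=")
    spec = spec.strip()
    if who != user or not sep or hosts.strip() != "ALL" or not spec.startswith("NOPASSWD:"):
        return False
    commands = {c.strip() for c in spec[len("NOPASSWD:"):].split(",") if c.strip()}
    return commands == set(allowed)


# Usage: run as the WebLogic user on each node.
print(missing_from_path(["wlsifconfig.sh"]))
print(sudoers_entry_is_restricted("oracle ALL=NOPASSWD: /sbin/ifconfig,/sbin/arping"))  # True
```

Rejecting an entry such as "oracle ALL=NOPASSWD: ALL" reflects the security requirement above: sudo stays limited to the commands that wlsifconfig.sh needs.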


11.7 Configuring Server Migration Targets

Configure server migration targets. You first assign all the available nodes for the cluster's members and then specify candidate machines (in order of preference) for each server that is configured with server migration. Perform the following steps to configure migration in a cluster:

-

Log in to the Administration Console (http://Host:Admin_Port/console). By default, Admin_Port is 7001.

-

In the Domain Structure window, expand Environment and select Clusters. The Summary of Clusters page is displayed.

-

Click the cluster for which you want to configure migration (bi_cluster) in the Name column of the table.

-

Click the Migration tab.

-

In the Change Center, click Lock & Edit.

-

In the Available field, select the machine to which to allow migration and click the right arrow. In this case, select APPHOST1 and APPHOST2.

-

Select the data source to be used for automatic migration. In this case, select the leasing data source.

-

Click Save.

-

Click Activate Changes.

-

Set the candidate machines for server migration. You must perform this task for all of the Managed Servers using the following steps:

-

In the Domain Structure window of the Administration Console, expand Environment and select Servers.

Tip:

Click Customize this table in the Summary of Servers page and move Current Machine from the Available window to the Chosen window to view the machine on which the server is running. If the server has been migrated automatically, this machine differs from the configured machine.

-

Click the server for which you want to configure migration.

-

Click the Migration tab.

-

In the Change Center, click Lock & Edit.

-

In the Migration Configuration section, for Candidate Machines, select the machines to which you want to enable migration and click the right arrow. For bi_server1, select APPHOST2. For bi_server2, select APPHOST1.

-

Select Automatic Server Migration Enabled. This enables Node Manager to start a failed server on the target node automatically.

-

Click Save.

-

Click Activate Changes.

-

Restart the Administration Server, Node Managers, Managed Servers, and the system components for which server migration has been configured.
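The candidate-machine layout configured above (bi_server1 fails over to APPHOST2, bi_server2 to APPHOST1) can be sanity-checked with a small model. In this Python sketch, check_migration_config is a hypothetical helper; it reads no WebLogic configuration and simply checks that every candidate machine is one of the machines chosen for the cluster and that each server has at least one candidate other than its home machine.

```python
def check_migration_config(cluster_machines: set, home_machine: dict,
                           candidates: dict) -> list:
    """Return problems with a server-migration layout (empty list if none).

    cluster_machines: machines moved to Chosen on the cluster's Migration tab.
    home_machine:     server name -> machine it normally runs on.
    candidates:       server name -> Candidate Machines, in order of preference.
    """
    problems = []
    for server, machines in candidates.items():
        for m in machines:
            if m not in cluster_machines:
                problems.append(f"{server}: candidate {m} is not available to the cluster")
        home = home_machine.get(server)
        # A server can only fail over if some candidate differs from its home machine.
        if not any(m != home for m in machines):
            problems.append(f"{server}: no candidate machine other than {home}")
    return problems


# Usage: the layout used in this chapter.
home = {"bi_server1": "APPHOST1", "bi_server2": "APPHOST2"}
cands = {"bi_server1": ["APPHOST2"], "bi_server2": ["APPHOST1"]}
print(check_migration_config({"APPHOST1", "APPHOST2"}, home, cands))   # prints []
```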


11.8 Testing the Server Migration

Perform the following steps to verify that server migration is working properly:

-

Stop the bi_server1 Managed Server. To do this, run this command:

APPHOST1> kill -9 pid

where pid specifies the process ID of the Managed Server. You can identify the pid in the node by running this command:

APPHOST1> ps -ef | grep bi_server1

-

Watch the Node Manager console. You see a message that indicates that bi_server1's floating IP has been disabled.

-

Wait for Node Manager to try a second restart of bi_server1. It waits for a fence period of 30 seconds before trying this restart.

-

After Node Manager restarts the server, stop it again. Node Manager now logs a message that indicates that the server is not restarted again locally.

-

Watch the Node Manager console on APPHOST2. About 30 seconds after the last attempt to restart bi_server1 on APPHOST1, Node Manager on APPHOST2 reports that the floating IP for bi_server1 is being brought up and that the server is being restarted on that node.

-

Access one of the applications (for example, BI Publisher) using the same IP.

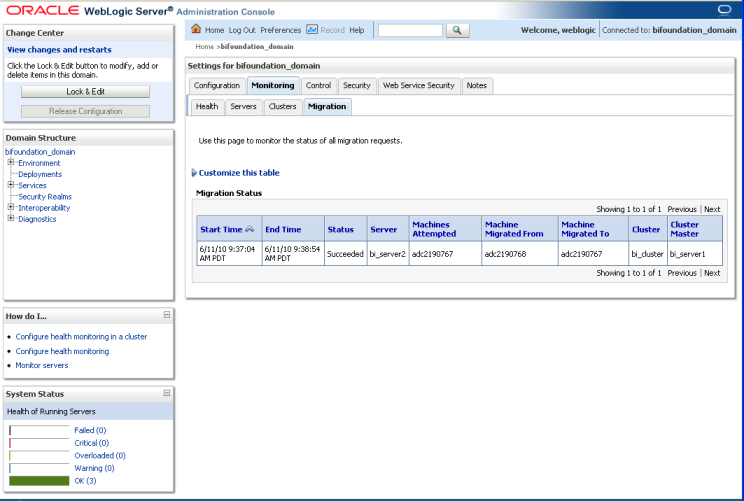
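The ps -ef | grep bi_server1 command also lists the grep process itself, and it would match a server whose name merely starts with bi_server1 (for example, a hypothetical bi_server10). The following Python sketch picks out the PID more precisely from ps -ef output. It assumes the standard ps -ef column layout and that the Managed Server's Java command line includes -Dweblogic.Name=<server name>; find_server_pids is a hypothetical helper.

```python
def find_server_pids(ps_output: str, server_name: str) -> list:
    """Return PIDs from `ps -ef` output for the given WebLogic server name.

    Matches the exact token -Dweblogic.Name=<server_name>, so the grep
    process and servers with longer names (bi_server10) are not matched.
    """
    marker = f"-Dweblogic.Name={server_name}"
    pids = []
    for line in ps_output.splitlines():
        fields = line.split(None, 7)   # UID PID PPID C STIME TTY TIME CMD
        if len(fields) < 8 or not fields[1].isdigit():
            continue                   # header row or malformed line
        if marker in fields[7].split():
            pids.append(int(fields[1]))
    return pids
```

For example, pass the output of subprocess.run(["ps", "-ef"], capture_output=True, text=True).stdout and use the returned PID with kill -9 as shown in the first step.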

Verification From the Administration Console

Perform the following steps to verify migration in the Administration Console:

-

Log in to the Administration Console.

-

In the Domain Structure window on the left, click the domain name.

-

Click the Monitoring tab, then the Migration subtab.

The Migration Status table provides information on the status of the migration, as shown in the following image:


Note:

After a server is migrated, to fail it back to its original node or computer, stop the Managed Server from the Administration Console and start it again. The appropriate Node Manager starts the Managed Server on the computer to which it was originally assigned.