Oracle Solaris Cluster Concepts Guide Oracle Solaris Cluster 4.1

This section contains the following topics:

Note - For a list of the specific devices that Oracle Solaris Cluster software supports as quorum devices, contact your Oracle service provider.

Because cluster nodes share data and resources, a cluster must never split into separate partitions that are active at the same time. Multiple active partitions might cause data corruption. The Cluster Membership Monitor (CMM) and quorum algorithm guarantee that, at most, one instance of the same cluster is operational at any time, even if the cluster interconnect is partitioned.

For more information on the CMM, see Cluster Membership Monitor.

Two types of problems arise from cluster partitions:

Split brain occurs when the cluster interconnect between nodes is lost and the cluster becomes partitioned into subclusters. Each partition “believes” that it is the only partition because the nodes in one partition cannot communicate with the node or nodes in the other partition.

Amnesia occurs when the cluster restarts after a shutdown with cluster configuration data that is older than the data was at the time of the shutdown. This problem can occur when you start the cluster on a node that was not in the last functioning cluster partition.

Oracle Solaris Cluster software avoids split brain and amnesia by:

Assigning each node one vote

Mandating a majority of votes for an operational cluster

A partition with the majority of votes gains quorum and is allowed to operate. This majority vote mechanism prevents split brain and amnesia when more than two nodes are configured in a cluster. However, counting node votes alone is not sufficient in a two-node cluster. In a two-node cluster, a majority is two. If such a two-node cluster becomes partitioned, an external vote is needed for either partition to gain quorum. This external vote is provided by a quorum device.
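The majority rule described above can be sketched in a few lines. This is an illustrative model only, not Oracle Solaris Cluster code; the function names `votes_needed` and `has_quorum` are hypothetical.

```python
def votes_needed(total_votes: int) -> int:
    """Smallest vote count that is a strict majority of total_votes."""
    return total_votes // 2 + 1


def has_quorum(present_votes: int, total_votes: int) -> bool:
    """A partition may operate only if it holds a strict majority."""
    return present_votes >= votes_needed(total_votes)


# Two nodes with one vote each and no quorum device: the majority is two,
# so a partition that contains a single node cannot gain quorum.
print(votes_needed(2))       # 2
print(has_quorum(1, 2))      # False

# The same cluster with a one-vote quorum device: three votes in total,
# so one node plus the quorum device holds a majority.
print(has_quorum(1 + 1, 3))  # True
```

The second case shows why the external vote matters: it lets one of the two partitions reach the majority that node votes alone cannot provide.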

A quorum device is a shared storage device or quorum server that is shared by two or more nodes and that contributes votes that are used to establish a quorum. The cluster can operate only when a quorum of votes is available. The quorum device is used when a cluster becomes partitioned into separate sets of nodes to establish which set of nodes constitutes the new cluster.

Oracle Solaris Cluster software supports the monitoring of quorum devices. Periodically, each node in the cluster tests the ability of the local node to work correctly with each configured quorum device that has a configured path to the local node and is not in maintenance mode. This test consists of an attempt to read the quorum keys on the quorum device.

When the Oracle Solaris Cluster system discovers that a formerly healthy quorum device has failed, the system automatically marks the quorum device as unhealthy. When the Oracle Solaris Cluster system discovers that a formerly unhealthy quorum device is now healthy, the system marks the quorum device as healthy and places the appropriate quorum information on the quorum device.

The Oracle Solaris Cluster system generates reports when the health status of a quorum device changes. When nodes reconfigure, an unhealthy quorum device cannot contribute votes to membership. Consequently, the cluster might not continue to operate.

Use the clquorum show command to determine the following information:

Total configured votes

Current present votes

Votes required for quorum

For more information, see the clquorum(1CL) and cluster(1CL) man pages.

Both nodes and quorum devices contribute votes to the cluster to form quorum.

A node contributes votes depending on the node's state:

A node has a vote count of one when it boots and becomes a cluster member.

A node has a vote count of zero when the node is being installed.

A node has a vote count of zero when a system administrator places the node into maintenance state.

Quorum devices contribute votes that are based on the number of nodes that are connected to the device. When you configure a quorum device, Oracle Solaris Cluster software assigns the quorum device a vote count of N-1, where N is the number of nodes that are connected to the quorum device. For example, a quorum device that is connected to two nodes with nonzero vote counts has a vote count of one (two minus one).

A quorum device contributes votes if one of the following two conditions is true:
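The N-1 rule can be expressed as a small calculation. The sketch below is illustrative only; the function and parameter names are hypothetical, and it assumes that N counts the connected nodes that have nonzero vote counts, as in the example in the text.

```python
def quorum_device_votes(connected_voting_nodes: int) -> int:
    """Vote count for a quorum device: N - 1 for N connected nodes."""
    if connected_voting_nodes < 2:
        # A quorum device is shared by two or more nodes.
        raise ValueError("a quorum device must connect to at least two nodes")
    return connected_voting_nodes - 1


def total_configured_votes(node_votes, device_connections):
    """Sum of node votes plus the N - 1 votes of each quorum device.

    node_votes: one vote count per node (1 for a member, 0 for a node
                that is being installed or is in maintenance state).
    device_connections: number of connected nodes for each quorum device.
    """
    return sum(node_votes) + sum(
        quorum_device_votes(n) for n in device_connections
    )


# A device connected to two nodes has one vote.
print(quorum_device_votes(2))               # 1

# Two member nodes plus that device: three configured votes.
print(total_configured_votes([1, 1], [2]))  # 3
```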

At least one of the nodes to which the quorum device is currently attached is a cluster member.

At least one of the nodes to which the quorum device is currently attached is booting, and that node was a member of the last cluster partition to own the quorum device.

You configure quorum devices during the cluster installation, or afterwards, by using the procedures that are described in Chapter 6, Administering Quorum, in Oracle Solaris Cluster System Administration Guide.

The following list contains facts about quorum configurations:

Quorum devices can contain user data.

In an N+1 configuration, where each of N quorum devices is connected to one of cluster nodes 1 through N and to cluster node N+1, the cluster survives the death of either all of cluster nodes 1 through N or any N/2 of the cluster nodes. This availability assumes that the quorum devices are functioning correctly and are accessible.

In an N-node configuration where a single quorum device connects to all nodes, the cluster can survive the death of any of the N-1 nodes. This availability assumes that the quorum device is functioning correctly.

In an N-node configuration where a single quorum device connects to all nodes, the cluster can survive the failure of the quorum device if all cluster nodes are available.
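The availability claims in this list can be checked with simple vote arithmetic. The model below is a simplified sketch with hypothetical names: it treats node death only (no interconnect partition), gives every node one vote, and lets a healthy quorum device contribute its N-1 votes whenever at least one connected node is alive.

```python
def total_votes(nodes, devices):
    """Configured votes: one per node plus N - 1 per quorum device."""
    return len(nodes) + sum(len(conn) - 1 for conn, _ in devices)


def survives(nodes, alive, devices):
    """True if the surviving nodes hold a strict majority of all votes.

    devices is a list of (connected_nodes, healthy) pairs.
    """
    present = len(alive)
    for connected, healthy in devices:
        if healthy and connected & alive:
            present += len(connected) - 1
    return 2 * present > total_votes(nodes, devices)


# Single quorum device connected to all four nodes: 4 + 3 = 7 votes.
nodes = {1, 2, 3, 4}
print(survives(nodes, {1}, [(nodes, True)]))         # True: 4 of 7 votes
print(survives(nodes, nodes, [(nodes, False)]))      # True: 4 of 7 votes
print(survives(nodes, {1, 2, 3}, [(nodes, False)]))  # False: 3 of 7 votes

# N+1 configuration with N = 2: devices connect nodes 1-3 and 2-3.
nodes = {1, 2, 3}
devices = [({1, 3}, True), ({2, 3}, True)]
print(survives(nodes, {3}, devices))                 # True: 3 of 5 votes
```

The third result shows why the cluster survives the loss of the quorum device only when all nodes are available: with the device gone, losing even one node drops the count below the majority.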

For examples of recommended quorum configurations, see Recommended Quorum Configurations.

Ensure that Oracle Solaris Cluster software supports your specific device as a quorum device. If you ignore this requirement, you might compromise your cluster's availability.

Note - For a list of the specific devices that Oracle Solaris Cluster software supports as quorum devices, contact your Oracle service provider.

Oracle Solaris Cluster software supports the following types of quorum devices:

Multi-hosted shared disks that support SCSI-3 PGR reservations.

Dual-hosted shared disks that support SCSI-2 or SCSI-3 PGR reservations.

A quorum server process that runs on the quorum server machine.

Any shared disk, provided that you have turned off fencing for this disk, and are therefore using software quorum. Software quorum is a protocol developed by Oracle that emulates a form of SCSI Persistent Group Reservations (PGR).

Caution - If you are using disks that do not support SCSI, such as Serial Advanced Technology Attachment (SATA) disks, turn off fencing.

Sun ZFS Storage Appliance iSCSI quorum device from Oracle.

Note - You cannot use a storage-based replicated device as a quorum device.

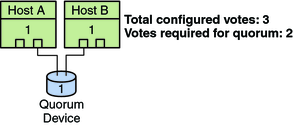

In a two-node configuration, you must configure at least one quorum device to ensure that a single node can continue if the other node fails. See Figure 3-2.

For examples of recommended quorum configurations, see Recommended Quorum Configurations.

Use the following information to evaluate the best quorum configuration for your topology:

Do you have a device that can be connected to all nodes of the cluster?

If yes, configure that device as your one quorum device. You do not need to configure another quorum device because this configuration is already optimal.

Caution - If you ignore this requirement and add another quorum device, the additional quorum device reduces your cluster's availability.

If no, configure your dual-ported device or devices.

Note - In particular environments, you might want fewer restrictions on your cluster. In these situations, you can ignore this best practice.

In general, if the addition of a quorum device makes the total cluster vote even, the total cluster availability decreases. If neither subcluster holds more than 50% of the total cluster vote, both subclusters panic with a loss of operational quorum.

Quorum devices slightly slow reconfigurations after a node joins or a node dies. Therefore, do not add more quorum devices than are necessary.
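The guideline about even vote totals can be illustrated with a worked case. This is a simplified sketch under stated assumptions: the three-node cluster, the single one-vote quorum device, and the scenario in which the isolated node holds the quorum device are all hypothetical.

```python
def has_quorum(present_votes: int, total_votes: int) -> bool:
    """A subcluster needs more than 50% of the total cluster vote."""
    return 2 * present_votes > total_votes


# Three nodes, no quorum device: 3 votes in total.
# A partition isolates one node from the other two.
print(has_quorum(2, 3))      # True: the two-node subcluster continues
print(has_quorum(1, 3))      # False: the isolated node stops

# Add a one-vote quorum device: 4 votes in total (an even number).
# Assume that the isolated node holds the quorum device.
print(has_quorum(1 + 1, 4))  # False: exactly 50% is not a majority
print(has_quorum(2, 4))      # False: the two-node subcluster also stops
```

In the second case the extra vote produces a tie, so no subcluster continues, although the same partition left a working two-node subcluster before the device was added.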

For examples of recommended quorum configurations, see Recommended Quorum Configurations.

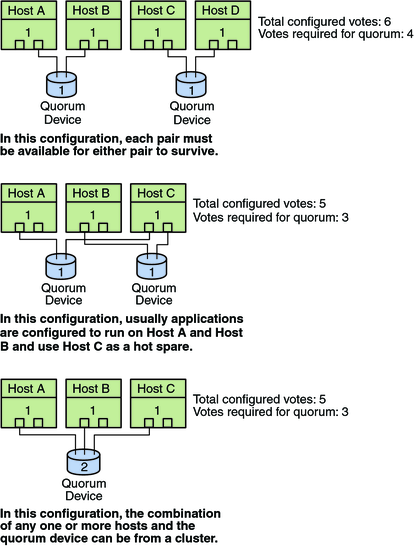

This section shows examples of quorum configurations that are recommended.

Two quorum votes are required for a two-node cluster to form. These two votes can derive from the two cluster nodes, or from just one node and a quorum device.

Figure 3-2 Two-Node Configuration

Quorum devices are not required when a cluster includes more than two nodes, as the cluster survives failures of a single node without a quorum device. However, under these conditions, you cannot start the cluster without a majority of nodes in the cluster.

You can add a quorum device to a cluster that includes more than two nodes. A partition can survive as a cluster when that partition has a majority of quorum votes, including the votes of the nodes and the quorum devices. Consequently, when adding a quorum device, consider the possible node and quorum device failures when choosing whether and where to configure quorum devices.