49 Site Capture File System

The Site Capture file system is created during the Site Capture installation process. It stores installation-related files, property files, sample crawlers, and the sample code that the FirstSiteII crawler uses to control its site capture process. The file system also provides the framework in which Site Capture organizes custom crawlers and their captures.

This chapter contains the following topics:

- Section 49.1, "General Directory Structure"
- Section 49.2, "Custom Folders"

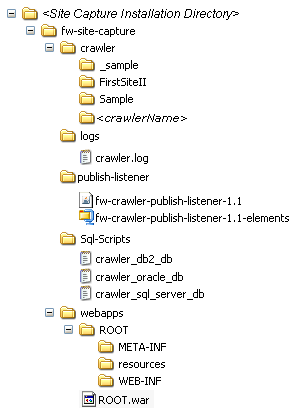

49.1 General Directory Structure

Figure 49-1 shows Site Capture's most frequently accessed folders to help administrators find commonly used Site Capture information. All folders except <crawlerName> are created during the Site Capture installation process. For information about <crawlerName> folders, see Table 49-1, "Site Capture's Frequently Accessed Folders," and Section 49.2, "Custom Folders."

Table 49-1 Site Capture's Frequently Accessed Folders

| Folder | Description |
|---|---|
| | The parent folder. |
| | Contains all Site Capture crawlers, each stored in its own crawler-specific folder. |
| | Contains the source code for the FirstSiteII sample crawler. Note: Folder names beginning with the underscore character ("_") are not treated as crawlers. They are not displayed in the Site Capture interface. |
| | Represents a crawler named "Sample." This folder is created only if the "Sample" crawler was installed during the Site Capture installation process. The When the Sample crawler is invoked in static or archive mode, subfolders are created within the |
| | Contains the |
| | Contains the following files needed for installing Site Capture for publishing-triggered crawls: |
| | Contains the following scripts, which create database tables that are needed by Site Capture to store its data: |
| | Contains the |
| | Contains the |
| | Contains the following files: |
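The underscore rule noted in Table 49-1 can be illustrated with a short script. This is a minimal sketch, not part of Site Capture: the function name `list_crawler_folders` and the folder names `_helper` and `MyCrawler` are hypothetical, and the path to the folder that holds the crawlers is passed in by the caller because it depends on the installation. The sketch only mirrors the documented naming convention; how Site Capture itself discovers crawlers is not shown here.

```python
from pathlib import Path
import tempfile


def list_crawler_folders(crawler_root):
    """Return the names of subfolders that would count as crawlers.

    Assumption: a crawler is any immediate subfolder whose name does not
    begin with an underscore, following the note in Table 49-1.
    """
    root = Path(crawler_root)
    return sorted(
        entry.name
        for entry in root.iterdir()
        if entry.is_dir() and not entry.name.startswith("_")
    )


if __name__ == "__main__":
    # Build a throwaway directory tree so the example runs anywhere.
    with tempfile.TemporaryDirectory() as tmp:
        for name in ("Sample", "_helper", "MyCrawler"):  # hypothetical names
            (Path(tmp) / name).mkdir()
        print(list_crawler_folders(tmp))
```

In this sketch the `_helper` folder is skipped, in the same way that underscore-prefixed folders are not displayed in the Site Capture interface.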

49.2 Custom Folders

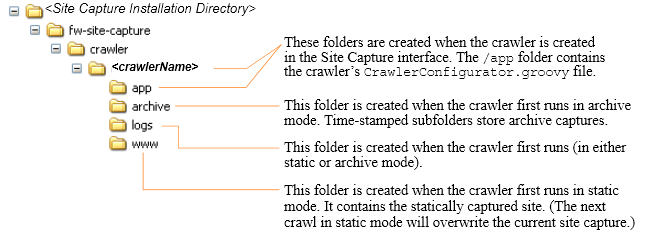

A custom folder is created for every crawler that a user creates in the Site Capture interface. The custom folder, <crawlerName>, is used to organize the crawler's configuration file, captures, and logs, as summarized in Figure 49-2.

Figure 49-2 Site Capture's Custom Folders: <crawlerName>
