| Oracle® Enterprise Data Quality for Product Data Endeca Connector Installation and User's Guide Release 11g R1 (11.1.1.6) E29135-03 |


The Endeca Connector supports high availability through:

redundancy,

round-robin Oracle DataLens Server support,

and real-time fail-over of Oracle DataLens Servers during processing.

The Endeca Connector supports parallel processing and load balancing through:

multiple parallel processing threads for each PDQ_SERVER_n defined

and each thread fully supports the high availability features described above.

This is accomplished in software, without requiring additional hardware such as redundant clustered servers, although such hardware solutions are fully supported. A robust software solution reduces hardware infrastructure costs. It also provides parallel processing, load balancing, and high availability for the Endeca Connector Adapter when it runs as part of the Endeca Forge processing.

Redundancy is accomplished by having multiple Oracle DataLens Servers, all set up to process DSAs and to load and process the same data lenses. This is configured with the multiple Oracle DataLens Server configuration parameters supported by the Endeca Connector, for example:

PDQ_SERVER_1 = DLFProdServerOne

PDQ_SERVER_2 = DLFProdServerTwo

PDQ_SERVER_3 = DLFProdServerThree

These multiple redundant servers eliminate the need for additional hardware support for redundancy.

Note:

Three servers are defined in the example though there is no limit to the number of Oracle DataLens Servers that you can add.
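The numbered parameters imply an ordering of servers. The following is a minimal sketch, not the connector's actual code, of how a set of pass-through parameters could be turned into an ordered server list. The function name `ordered_servers` and the dictionary representation of the parameters are assumptions made for illustration only.

```python
import re

def ordered_servers(params):
    """Return server names ordered by the numeric suffix of PDQ_SERVER_n.

    `params` is a hypothetical dict of pass-through parameters; keys that
    do not match PDQ_SERVER_n are ignored.
    """
    pattern = re.compile(r"^PDQ_SERVER_(\d+)$")
    found = []
    for key, value in params.items():
        match = pattern.match(key)
        if match:
            # Sort on the integer so PDQ_SERVER_10 follows PDQ_SERVER_9.
            found.append((int(match.group(1)), value.strip()))
    return [name for _, name in sorted(found)]

# Example parameters taken from this section, deliberately out of order.
params = {
    "PDQ_SERVER_2": "DLFProdServerTwo",
    "DSA_MAP": "endeca_demo_dimensions",
    "PDQ_SERVER_1": "DLFProdServerOne",
    "PDQ_SERVER_3": "DLFProdServerThree",
}
print(ordered_servers(params))
```

Sorting on the numeric suffix rather than on the key text keeps the order stable when more than nine servers are defined.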

These multiple redundant servers are used by both the Endeca Connector Add-In Discovery components (and the Deletion components) and the Endeca Connector PdqAdapter.

This solution is completely flexible and will work with almost any Oracle DataLens Server topology, such as the following:

An Administration server and a Production server.

An Administration server and multiple Production servers, all in the same server group.

An Administration server and multiple Production servers, all in different server groups.

Multiple production servers, all in the same server group. Oracle recommends this configuration.

The Endeca Connector DSA must be made available to all the Oracle DataLens Servers in any of the Development or Production Server Groups.

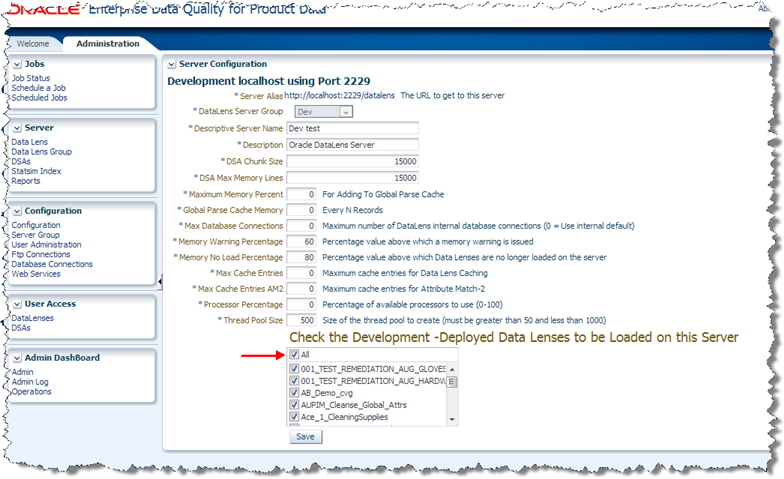

Each Oracle DataLens Server must have the All check box selected so that all of the deployed data lenses used by the Forge process DSAs are loaded, as in the following:

Go to the Oracle DataLens Server Administration web page and ensure this option is set for each Oracle DataLens Server in the appropriate Development and Production server groups. For more information, see the Oracle Enterprise Data Quality for Product Data Oracle DataLens Server Administration Guide.

The Endeca Connector DSA Transformation Add-Ins (the discovery processes) use a round-robin approach to select an initial server for data processing. This means that the job will not start until an Oracle DataLens Server is verified to be up and running. This is controlled by the PDQ_SERVER parameters that are set in the PdqAdapter pass-through parameters and used by all the components of the Endeca Connector.

The round-robin checking always starts with PDQ_SERVER_1 and then checks PDQ_SERVER_2 and finally PDQ_SERVER_3.

Note that the Endeca Connector Adapter is more sophisticated and keeps track of the last accessed server when doing the round-robin fail-over.

The Oracle DataLens Servers all have a "ping servlet" so that the Endeca Connector can ensure not only that the server is running, but also that the Oracle DataLens Server service is running and processing requests.

Following is an example of the round-robin server connection from the Oracle DataLens Server log file for a "Discover Precedence" DSA job.

INFO 16 Sep 2008 15:53:07 [] - PDQ-Endeca Connector Dimension Discovery Version 11.1.1.6.0, Build 20120804

Copyright (c) 2012, 2012, Oracle and/or its affiliates. All rights reserved.

INFO 16 Sep 2012 15:53:10 [] - Attempted 0 times to connect to http://DLFProdServerOne:2229/datalens/Ping

ERROR 16 Sep 2012 15:53:10 [] - Failed to connect to server (http://DLFProdServerOne:2229/datalens/Ping)[PingRequest]: DLFProdServerOne

INFO 16 Sep 2012 15:53:10 [] - PdqAdapter parameters:

DSA_MAP = endeca_demo_dimensions

REPLACE_UNDERSCORES_ONLY = true

USE_PDQ_rPREFIX = false

Using PDQ_SERVER_2 (DLFProdServerTwo:2229)

INFO 16 Sep 2012 15:53:10 [] - Connecting to Server DLFProdServerTwo and port 2229

INFO 16 Sep 2012 15:53:10 [] - Running on DataLens Admin server DLFProdServerTwo:2229

In this example, the job failed to get a response from DLFProdServerOne and instead connected to DLFProdServerTwo. The log also reports which PDQ_SERVER is being used.
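The initial server selection described in this section can be sketched as follows. This is an illustrative sketch under stated assumptions, not the connector's implementation: `select_initial_server` and `http_ping` are hypothetical names, the ping URL pattern is copied from the log excerpt, and treating an HTTP 200 response as "alive" is an assumption about the ping servlet.

```python
import urllib.request

def http_ping(server, port=2229, timeout=5):
    """Hypothetical ping: True if the ping URL answers with HTTP 200.

    The URL pattern comes from the log excerpt; the success criterion
    (status 200) is an assumption.
    """
    url = "http://%s:%d/datalens/Ping" % (server, port)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status == 200
    except OSError:
        # Covers connection refused, DNS failure, timeouts, and URLError.
        return False

def select_initial_server(servers, ping=http_ping):
    """Return the first server, in configured order, that answers the ping."""
    for server in servers:  # always starts with PDQ_SERVER_1
        if ping(server):
            return server
    raise RuntimeError("No Oracle DataLens Server responded to the ping")

# Simulate the situation in the log: the first server is down.
servers = ["DLFProdServerOne", "DLFProdServerTwo", "DLFProdServerThree"]
chosen = select_initial_server(servers, ping=lambda s: s != "DLFProdServerOne")
print(chosen)
```

Passing the ping function as a parameter lets the selection logic be exercised without a network; in this simulated run the second server is chosen.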

The Endeca Connector fail-over is a component that works when processing the actual data with the PdqAdapter during the Endeca Forge processing. It improves on a hardware fail-over solution because, if a problem is encountered, the Endeca Connector fail-over resubmits the data chunk to an alternate server and the Forge processing continues. If a job is processing chunk 15 of a total of 20 chunks, the fail-over resubmits data chunk 15 to a redundant Oracle DataLens Server, continuing the Forge processing without Forge ever being aware that an Oracle DataLens Server went down.

A hardware fail-over will require that the Forge job is re-submitted from the start.

The fail-over occurs under any of the following conditions:

The DSA Job has a fault and fails to respond.

The DSA machine has any type of connection error, such as a server hardware failure or a Tomcat failure.

The DSA machine has a memory error such as a Java heap space error.

Note:

The Endeca Connector Adapter keeps track of the last accessed server among all the servers defined when doing the round-robin fail-over and will use this information to determine which server to send a chunk of data to for re-processing.

Here is the result of pulling the plug on one of the Oracle DataLens Servers:

Endeca51:2229-2 2009.02.04_03:51:00 Running a data chunk on the DLS Server Endeca51:2229

Endeca51:2229-2 2009.02.04_03:51:01 Processing a chunk of 9950 records on the Endeca51:2229 DLS server

Endeca51:2229-2 2009.02.04_03:51:31 DLF Server Endeca51:2229 is not responding

Endeca51:2229-2 2009.02.04_03:51:31 Warning: Caught a Connection Exception, trying another server...

Endeca51:2229-2 2009.02.04_03:52:13 DLF Server Endeca51:2229 is not responding

Endeca51:2229-2 2009.02.04_03:52:13 Retrying the chunk with the DL Server admin1-M6300:2229

Endeca51:2229-2 2009.02.04_03:52:14 Processing a chunk of 9950 records on the admin1-M6300:2229 DLS server

Endeca51:2229-2 2009.02.04_03:52:15 Using Job Id: 182

Endeca51:2229-2 2009.02.04_03:53:04 Job#182 DLS Server returned 7600 records from the chunk

In the preceding example, PDQ_SERVER_1 is pinged again to verify that the failure was not just a transient network issue. The server is then hot-swapped to PDQ_SERVER_2 and the entire chunk is resubmitted. The final lines of the preceding log snippet show the chunk being resubmitted and processed on the alternate server.
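The chunk-level fail-over can be sketched as follows. This is a simplified illustration, not the adapter's code: `FailoverPool`, `ServerDown`, and the `submit` callback are hypothetical names, and the rotation rule (try the last accessed server first, then the remaining servers in order) is an assumption about how the last accessed server is used.

```python
class ServerDown(Exception):
    """Hypothetical error standing in for a connection error or job fault."""

class FailoverPool:
    def __init__(self, servers):
        self.servers = list(servers)
        self.last = 0  # index of the last accessed server

    def process(self, chunk, submit):
        """Submit the whole chunk, rotating servers until one succeeds."""
        count = len(self.servers)
        for offset in range(count):
            index = (self.last + offset) % count
            try:
                result = submit(self.servers[index], chunk)
            except ServerDown:
                continue  # resubmit the entire chunk to the next server
            self.last = index  # remember where the chunk succeeded
            return result
        raise RuntimeError("All servers failed for this chunk")

# Simulate the log excerpt: Endeca51 stops responding mid-run.
def submit(server, chunk):
    if server == "Endeca51:2229":
        raise ServerDown(server)
    return (server, len(chunk))

pool = FailoverPool(["Endeca51:2229", "admin1-M6300:2229"])
result = pool.process(list(range(9950)), submit)
print(result)
```

Because the pool remembers the server that last succeeded, a later chunk in this sketch goes straight to the working server instead of retrying the failed one first.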

The following error message will be output to the log file if the WebLogic Server is stopped or fails:

admin1-M6300:2229-1 2009.02.04_03:45:07 Warning: Caught a Job Failed Fault, trying another server...

This example shows the Endeca Connector Adapter running with three Oracle DataLens Servers.

All the data chunks are processed in parallel threads (one per server) on the three separate Oracle DataLens Servers.

PDQ-Endeca Connector Adapter (Endeca Java Manipulator) Version 11.1.1.6.0, Build 20120804

Copyright (c) 2008, 2012, Oracle and/or its affiliates. All rights reserved.

PDQ_SERVER_1 - Adding required DataLens Server cwellell-M6300:2229

PDQ_SERVER_2 - Adding optional High-Availability, Load-Balanced, Parallel-Processing DataLens Server Endeca51:2229

PDQ_SERVER_3 - Adding optional High-Availability, Load-Balanced, Parallel-Processing DataLens Server cwellell-VM:2229

cwellell-M6300:2229-1 2009.02.12_01:10:07.000 Running a data chunk on the DLS Server cwellell-M6300:2229

cwellell-M6300:2229-1 2009.02.12_01:10:07.343 Processing a chunk of 10000 records on the cwellell-M6300:2229 DLS server

cwellell-M6300:2229-1 2009.02.12_01:10:08.796 Using Job Id: 208

cwellell-M6300:2229-1 2009.02.12_01:10:23.031 Job#208 DLS Server returned 8965 records from the chunk

cwellell-VM:2229-3 2009.02.12_01:10:12.890 Running a data chunk on the DLS Server cwellell-VM:2229

cwellell-VM:2229-3 2009.02.12_01:10:13.984 DLF Server cwellell-VM:2229 is not responding

cwellell-VM:2229-3 2009.02.12_01:10:14.125 Retrying the chunk with the DL Server Endeca51:2229

cwellell-VM:2229-3 2009.02.12_01:10:14.359 Processing a chunk of 3041 records on the Endeca51:2229 DLS server

cwellell-VM:2229-3 2009.02.12_01:10:20.859 Using Job Id: 10

cwellell-VM:2229-3 2009.02.12_01:11:17.343 Job#10 DLS Server returned 1954 records from the chunk

Endeca51:2229-2 2009.02.12_01:10:11.250 Running a data chunk on the DLS Server Endeca51:2229

Endeca51:2229-2 2009.02.12_01:10:11.921 Processing a chunk of 9950 records on the Endeca51:2229 DLS server

Endeca51:2229-2 2009.02.12_01:10:43.515 Using Job Id: 11

Endeca51:2229-2 2009.02.12_01:11:06.500 Job#11 DLS Server returned 7600 records from the chunk

********* Processed 3 Chunks with 22991 total input lines *********

********* Updated 18519 total lines by the PDQ-Endeca Connector *********

********* Completed the PDQ-Endeca Connector processing in 73 seconds *********
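The one-thread-per-server pattern in this log can be sketched as follows. This is a minimal illustration under stated assumptions, not the adapter's implementation: `run_parallel` and the `submit` callback are hypothetical, and handing out chunks from a shared queue is an assumption about how work is distributed. The chunk sizes are taken from the log above.

```python
import queue
import threading

def run_parallel(servers, chunks, submit):
    """Process chunks with one worker thread per configured server."""
    work = queue.Queue()
    for chunk in chunks:
        work.put(chunk)
    results = []
    lock = threading.Lock()

    def worker(server):
        while True:
            try:
                chunk = work.get_nowait()
            except queue.Empty:
                return  # no chunks left for this thread
            output = submit(server, chunk)
            with lock:  # protect the shared results list
                results.append((server, len(chunk), len(output)))

    threads = [threading.Thread(target=worker, args=(s,)) for s in servers]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results

# Hypothetical submit callback that returns every record unchanged.
def submit(server, chunk):
    return chunk

servers = ["cwellell-M6300:2229", "Endeca51:2229", "cwellell-VM:2229"]
chunks = [list(range(10000)), list(range(9950)), list(range(3041))]
results = run_parallel(servers, chunks, submit)
total_input = sum(size for _, size, _ in results)
print("Processed %d chunks with %d total input lines" % (len(results), total_input))
```

The three chunk sizes sum to 22991, matching the total input lines reported at the end of the log. Which server handles which chunk depends on thread scheduling, so the sketch makes no claim about that.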