| Oracle® Fusion Middleware Configuration Guide for Oracle Business Intelligence Applications 11g Release 1 (11.1.1.7) Part Number E36171-03 |


This is a reference section that contains Help topics for Informational Tasks in Functional Setup Manager (FSM). Informational Tasks display conceptual information, or display configuration steps that are performed in tools external to FSM (for example, in Oracle Data Integrator, or Oracle BI EE Administration Tool).

The Help topics in this section are displayed in FSM when you click Go to Task for an Informational Task, or you click a Help icon for additional information about an FSM Task.

This chapter contains the following sections:

Section B.1, "Example Functional Configuration Tasks For Multiple Offerings"

Section B.4, "Informational Task Reference - ETL Notes and Overviews"

This section lists example Tasks that apply to multiple Offerings.

Configure Data Load Parameters for File Based Calendars

Configure Enterprise List

Configure Global Currencies

Configure Initial Extract Date

Configure Reporting Parameters for Year Prompting

Configure Slowly Changing Dimensions

Define Enterprise Calendar

Specify Gregorian Calendar Date Range

This section contains miscellaneous Help topics.

To get started with Functional Configuration, see Section 3.2, "Roadmap for Functional Configuration".

A BI Application Offering and one or more Functional Areas are selected during the creation of an Implementation Project. A list of Functional Setup tasks is generated based on the selected Oracle BI Applications Offering and Functional Area(s).

There are four main types of Functional Task:

Tasks to configure Data Load Parameters - Clicking on the Go To Task button for these tasks launches Oracle BI Applications Configuration Manager and the Manage Data Load Parameter setup user interface is displayed with the appropriate set of Data Load Parameters required to perform a task.

Tasks to manage Domains and Mappings - Clicking on the Go To Task button for these tasks launches Oracle BI Applications Configuration Manager and the Manage Domains and Mappings setup user interface is displayed with the appropriate set of Domain Mappings.

Tasks to configure Reporting Parameters - Clicking on the Go To Task button for these tasks launches Oracle BI Applications Configuration Manager and the Manage Reporting Parameter setup user interface is displayed with the appropriate set of Reporting Parameters required to perform a task.

Tasks that are informational - These tasks provide either:

conceptual, background or supporting information.

instructions for configuration that is performed in tools external to FSM (for example, in Oracle Data Integrator, or Oracle BI EE Administration Tool).

By default, the Oracle Supply Chain and Order Management Analytics application extracts only open sales orders from the Sales Order Lines (W_SALES_ORDER_LINE_F) table and the Sales Schedule Lines (W_SALES_SCHEDULE_LINE_F) table for the backlog calculations that populate the Backlog tables. Open sales orders are defined as orders that are neither canceled nor complete. Only open orders are extracted because, in most organizations, closed orders are no longer part of backlog. However, if you want to extract sales orders that are marked as closed, you can remove the default filter condition from the extract mapping.

For example, assume your customer orders ten items. Six items are invoiced and shipped, but four items are placed on operational and financial backlog. This backlog status continues until one of two things happens:

The items are eventually shipped and invoiced.

The remainder of the order is canceled.

If you choose to extract sales orders that are flagged as closed, you must remove the condition in the Backlog flag. To do so, use the following procedure.

The BACKLOG_FLAG in the W_SALES_ORDER_LINE_F table is also used to identify which sales orders are eligible for backlog calculations. By default, all sales order types have their Backlog flag set to Y. As a result, all sales orders are included in backlog calculations.

To remove open order extract filters:

In Oracle Data Integrator, open the Mappings folder, and then the SDE_ORA11510_Adaptor, SDE_ORAR12Version_Adaptor, or SDE_FUSION_V1_Adaptor folder.

Open SDE_ORA_SalesOrderLinesFact - Interfaces - SDE_ORA_SalesOrderLinesFact.W_SALES_ORDER_LINE_FS for E-Business Suite adaptors, or SDE_FUSION_SalesOrderLinesFact - Interfaces - SDE_FUSION_SalesOrderLinesFact.W_SALES_ORDER_LINE_FS for FUSION adaptor.

Click the Quick-Edit tab and expand Mappings.

Find the OPR_BACKLOG_FLG and open Mapping Expression. Then, remove SQ_BCI_SALES_ORDLNS.OPEN_FLAG = 'Y' AND for E-Business Suite adaptors, or remove SQ_FULFILLLINEPVO.FulfillLineOpenFlag = 'Y' AND for FUSION adaptor.

Find the FIN_BACKLOG_FLG and open Mapping Expression. Then, remove SQ_BCI_SALES_ORDLNS.OPEN_FLAG = 'Y' AND for E-Business Suite adaptors, or remove SQ_FULFILLLINEPVO.FulfillLineOpenFlag = 'Y' AND for FUSION adaptor.

Save your changes to the repository.

Open the Mappings folder, and then the PLP folder.

Open PLP_SalesBacklogLinesFact_Load_OrderLines - Interfaces - PLP_SalesBacklogLinesFact_Load_OrderLines.W_SALES_BACKLOG_LINE_F.SQ_SALES_ORER_LINES_BACKLOG.

Click the Quick-Edit tab and expand Filters.

Find the filter W_STATUS_D.W_STATUS_CODE<>'Closed' and remove it.

Open PLP_SalesBacklogLinesFact_Load_ScheduleLines - Interfaces - PLP_SalesBacklogLinesFact_Load_ScheduleLines.W_SALES_BACKLOG_LINE_F.SQ_W_SALES_SCHEDULE_LINE_F.

Click the Quick-Edit tab and expand Filters.

Find the filter W_STATUS_D.W_STATUS_CODE<>'Closed' and remove it.

Save your changes to the repository.
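The edits to OPR_BACKLOG_FLG and FIN_BACKLOG_FLG amount to deleting one leading condition from a mapping expression. The following Python sketch illustrates only that text change. The sample expression and the column INP_ELIGIBLE are hypothetical (the shipped expression is not reproduced in this topic), and remove_open_filter is an illustrative helper, not an ODI function.

```python
def remove_open_filter(expression: str, condition: str) -> str:
    """Remove '<condition> AND' from a mapping expression, if present."""
    target = condition + " AND"
    if target not in expression:
        return expression  # nothing to remove; leave the expression untouched
    # Drop the first occurrence, then collapse the doubled space left behind.
    # Note: this also collapses repeated whitespace elsewhere in the text.
    cleaned = expression.replace(target, "", 1)
    return " ".join(cleaned.split())


# Hypothetical expression, for illustration only.
sample = (
    "CASE WHEN SQ_BCI_SALES_ORDLNS.OPEN_FLAG = 'Y' AND "
    "INP_ELIGIBLE = 'Y' THEN 'Y' ELSE 'N' END"
)
EBS_CONDITION = "SQ_BCI_SALES_ORDLNS.OPEN_FLAG = 'Y'"

print(remove_open_filter(sample, EBS_CONDITION))
```

For the FUSION adaptor, the same helper would be called with SQ_FULFILLLINEPVO.FulfillLineOpenFlag = 'Y' as the condition.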

This topic applies only to Oracle E-Business Suite source systems, that is, to SDE_ORA11510_Adaptor and SDE_ORAR12Version_Adaptor. By default, only booked orders are extracted from the Oracle source system, as shown in Figure B-1.

Therefore, all orders loaded into the Sales Order Lines, Sales Schedule Lines, and Sales Booking Lines tables are booked.

However, you can also load non-booked orders in Sales Order Lines (W_SALES_ORDER_LINE_F) and Sales Schedule Lines (W_SALES_SCHEDULE_LINE_F), while loading only booked orders in Sales Booking Lines (W_SALES_BOOKING_LINE_F).

If you want to load non-booked orders into the Sales Order Lines and Sales Schedule Lines tables, you have to configure the extract so that it does not filter out non-booked orders. The OE_ORDER_LINES_ALL.BOOKED_FLAG = 'Y' condition indicates that an order is booked; therefore, this statement is used to filter out non-booked orders. So, to load all orders, including non-booked orders, remove the filter condition from the temp interfaces of the following mappings:

SDE_ORA_SalesOrderLinesFact

SDE_ORA_SalesOrderLinesFact_Primary

Also, if you include non-booked orders in the Sales Order Lines and Sales Schedule Lines tables, you have to exclude non-booked orders when you populate the Sales Booking Lines table from the Sales Order Lines or from the Sales Schedule Lines. You can do this by adding the W_SALES_ORDER_LINE_F.BOOKING_FLG = 'Y' or W_SALES_SCHEDULE_LINE_F.BOOKING_FLG = 'Y' condition to the interfaces of the following mappings:

SIL_SalesBookingLinesFact_Load_OrderLine_Credit

SIL_SalesBookingLinesFact_Load_OrderLine_Debit

SIL_SalesBookingLinesFact_Load_ScheduleLine_Credit

SIL_SalesBookingLinesFact_Load_ScheduleLine_Debit

To include non-booked orders in the Sales Order Lines and Sales Schedule Lines tables (for both full and Incremental load):

In ODI Designer Navigator, open the SDE_ORA11510_Adaptor or SDE_ORAR12Version_Adaptor folder.

Find SDE_ORA_SalesOrderLinesFact and SDE_ORA_SalesOrderLinesFact_Primary. Then open the temp interfaces below.

SDE_ORA_SalesOrderLinesFact.W_SALES_ORDER_LINE_FS_SQ_BCI_SALES_ORDLNS

SDE_ORA_SalesOrderLinesFact_Primary.W_SALES_ORDER_LINE_F_PE_SQ_BCI_SALES_ORDLS

Find and delete the filter condition OE_ORDER_LINES_ALL.BOOKED_FLAG='Y' from the temp interfaces mentioned above.

Save your changes to the repository.

Follow the steps below to make changes for Booking Lines table.

To include only booked orders in the Sales Booking Lines table:

In ODI Designer Navigator, open the SILOS folder.

Open the following interfaces, and then add the filter to the Filters section.

SIL_SalesBookingLinesFact_Load_OrderLine_Credit folder: Open the Quick-Edit tab of the SIL_SalesBookingLinesFact_Load_OrderLine_Credit.W_SALES_BOOKING_LINE_F_SQ_W_SALES_ORDER_LINE_F interface, and add W_SALES_ORDER_LINE_F.BOOKING_FLG = 'Y' to the Filters section.

SIL_SalesBookingLinesFact_Load_OrderLine_Debt folder: Open the Quick-Edit tab of the SIL_SalesBookingLinesFact_Load_OrderLine_Debt.W_SALES_BOOKING_LINE_F interface, and add SQ_W_SALES_ORDER_LINE_F.BOOKING_FLG = 'Y' to the Filters section.

SIL_SalesBookingLinesFact_Load_ScheduleLine_Credit folder: Open the Quick-Edit tab of the SIL_SalesBookingLinesFact_Load_ScheduleLine_Credit.W_SALES_BOOKING_LINE_F_SQ_W_SALES_SCHEDULE_LINE_F interface, and add W_SALES_SCHEDULE_LINE_F.BOOKING_FLG = 'Y' to the Filters section.

SIL_SalesBookingLinesFact_Load_ScheduleLine_Debt folder: Open the Quick-Edit tab of the SIL_SalesBookingLinesFact_Load_ScheduleLine_Debt.W_SALES_BOOKING_LINE_F interface, and add SQ_W_SALES_SCHEDULE_LINE_F.BOOKING_FLG = 'Y' to the Filters section.

Save your changes to the repository.
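The combined effect of the two procedures above can be sketched in Python as a pair of filters: the extract stops filtering on the booked flag, and the booking load starts filtering on it. This is a simplified model under stated assumptions, not the ODI implementation; the class OrderLine and both functions are hypothetical stand-ins for the interfaces named above.

```python
from dataclasses import dataclass


@dataclass
class OrderLine:
    line_id: int
    booked_flag: str  # 'Y' or 'N', mirrors OE_ORDER_LINES_ALL.BOOKED_FLAG


def extract_order_lines(source, booked_only):
    """Stand-in for the SDE extract: optionally keep only booked lines."""
    return [ln for ln in source if not booked_only or ln.booked_flag == "Y"]


def load_booking_lines(order_lines):
    """Stand-in for the SIL booking load with the BOOKING_FLG = 'Y' filter added."""
    return [ln for ln in order_lines if ln.booked_flag == "Y"]


source = [OrderLine(1, "Y"), OrderLine(2, "N"), OrderLine(3, "Y")]

default_extract = extract_order_lines(source, booked_only=True)   # default behavior
all_orders = extract_order_lines(source, booked_only=False)       # filter removed
bookings = load_booking_lines(all_orders)                          # filter added

print([ln.line_id for ln in default_extract])  # booked lines only
print([ln.line_id for ln in all_orders])       # booked and non-booked lines
print([ln.line_id for ln in bookings])         # booked lines only
```

The point of the second filter is that the Sales Booking Lines table keeps the same content as before, even though the order and schedule line tables now also carry non-booked orders.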

The W_SALES_BOOKING_LINE_F table tracks changes in SALES_QTY, NET_AMT, and certain attributes defined in the BOOKING_ID column. BOOKING_ID is calculated in the SDE mappings of the Sales Order Line table as follows:

For SDE_ORA11510_Adaptor and SDE_ORAR12Version_Adaptor:

TO_CHAR(SQ_BCI_SALES_ORDLNS.LINE_ID)||'~'||TO_CHAR(SQ_BCI_SALES_ORDLNS.INVENTORY_ITEM_ID)||'~'||TO_CHAR(SQ_BCI_SALES_ORDLNS.SHIP_FROM_ORG_ID)

For SDE_FUSION_V1_Adaptor:

TO_CHAR(SQ_FULFILLLINEPVO.FulfillLineId)||'~'||TO_CHAR(SQ_FULFILLLINEPVO.FulfillLineInventoryItemId)||'~'||TO_CHAR(SQ_FULFILLLINEPVO.FulfillLineFulfillOrgId)

However, if you want to track changes on another attribute, then you must concatenate the source column of the attribute with the default mapping expression. For example, if you want to track changes in Customer Account, then concatenate the source column of Customer Account in the BOOKING_ID column as follows:

For SDE_ORA11510_Adaptor and SDE_ORAR12Version_Adaptor:

TO_CHAR(SQ_BCI_SALES_ORDLNS.LINE_ID)||'~'||TO_CHAR(SQ_BCI_SALES_ORDLNS.INVENTORY_ITEM_ID)||'~'||TO_CHAR(SQ_BCI_SALES_ORDLNS.SHIP_FROM_ORG_ID)||'~'||TO_CHAR(INP_CUSTOMER_ACCOUNT_ID)

For SDE_FUSION_V1_Adaptor:

TO_CHAR(SQ_FULFILLLINEPVO.FulfillLineId)||'~'||TO_CHAR(SQ_FULFILLLINEPVO.FulfillLineInventoryItemId)||'~'||TO_CHAR(SQ_FULFILLLINEPVO.FulfillLineFulfillOrgId)||'~'||TO_CHAR(SQ_FULFILLLINEPVO.HeaderSoldToCustomerId)

To track multiple dimensional attribute changes in bookings:

In ODI Designer Navigator, open the SDE_ORA11510_Adaptor, SDE_ORAR12Version_Adaptor, or SDE_FUSION_V1_Adaptor folder.

Open the main interface of SDE mappings of Sales Order Line table:

SDE_ORA_SalesOrderLinesFact.W_SALES_ORDER_LINE_FS

SDE_FUSION_SalesOrderLinesFact.W_SALES_ORDER_LINE_FS

Find the BOOKING_ID column and modify the mapping expression as described above.

If you want to track changes in multiple attributes, then you must concatenate all source columns of the attributes.

Save your changes to the repository.
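To see why concatenating another source column into BOOKING_ID makes a change in that attribute visible, consider the following Python sketch. It mimics the '~' concatenation of the mapping expressions above; the function booking_id and all numeric values are hypothetical and serve only as an illustration.

```python
def booking_id(*parts):
    """Join attribute values with '~', as the BOOKING_ID expressions above do."""
    return "~".join(str(p) for p in parts)


# Hypothetical values for LINE_ID, INVENTORY_ITEM_ID, and SHIP_FROM_ORG_ID.
line_id, item_id, org_id = 1001, 55, 204

# Default expression: the customer account is not part of the key,
# so the key is identical before and after the customer changes.
default_before = booking_id(line_id, item_id, org_id)
default_after = booking_id(line_id, item_id, org_id)

# Extended expression: the customer account (7001, then 7002) is appended.
extended_before = booking_id(line_id, item_id, org_id, 7001)
extended_after = booking_id(line_id, item_id, org_id, 7002)

print(default_before == default_after)    # True: the change is not detected
print(extended_before == extended_after)  # False: the change is detected
```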

The Human Resource application benefits from table partitioning, especially on larger systems that hold greater volumes of data.

The main benefits of table partitioning are:

Faster ETL, as indexes are rebuilt only over the table partitions that have changed.

Faster reports, as partition pruning is a very efficient way of getting to the required data.

This task is optional; by default, no tables are partitioned.

This task applies to systems where Oracle Business Analytics Warehouse is implemented on an Oracle database.

This task has no dependencies.

The latest recommendations for table partitioning of Human Resource tables can be found in Tech Notes in My Oracle Support. These should be reviewed before any action is taken.

ODI provides a table partitioning utility that you can use to create partitioned tables. You can run this utility at any time to implement a particular partition strategy on a table. It is re-runnable, so you can use it to change the strategy if needed. The utility backs up the existing table, creates the partitioned table in its place, and copies in the data and indexes.

For example, to implement table partitioning on the table W_WRKFC_EVT_MONTH_F:

Execute the scenario IMPLEMENT_DW_TABLE_PARTITIONS passing in the parameters as follows:

Table B-1 Parameters for Table Partitioning

| Parameter Name | Description | Value |
|---|---|---|
| CREATE_SCRIPT_FILE | Whether or not to create a file with the partition table script. | Y(es) |
| PARTITION_KEY | Column acting as partition key. | EVENT_MONTH_WID |
| RUN_DDL | Whether or not to execute the script. | N(o) |
| SCRIPT_LOCATION | Location on file system to create the script. | C:/Scripts/Partitioning |
| TABLE_NAME | Name of table to partition. | W_WRKFC_EVT_MONTH_F |

If required, then review the script and adjust the partitioning definition.

For the workforce fact table, monthly snapshot records are created from a specified date (HR Workforce Snapshot Date, default value 1st January 2008). Therefore, it would be logical to make this date the cutoff for the first partition, and then partition monthly or quarterly thereafter.

This is done by changing the script from:

CREATE TABLE W_WRKFC_EVT_MONTH_F … PARTITION BY RANGE (EVENT_MONTH_WID) INTERVAL(1) (PARTITION p0 VALUES LESS THAN (1)) …

To:

CREATE TABLE W_WRKFC_EVT_MONTH_F … PARTITION BY RANGE (EVENT_MONTH_WID) INTERVAL(3) (PARTITION p0 VALUES LESS THAN (200801)) …

Execute the script against Oracle Business Analytics Warehouse.
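The script adjustment described above changes two values: the interval and the upper bound of the first partition. If you prefer to apply the change programmatically rather than by hand, a minimal Python sketch might look like the following. The function adjust_partition_script is a hypothetical helper, and the input string is only the abbreviated fragment shown in this topic (the elided parts stay elided), not a complete generated script.

```python
import re


def adjust_partition_script(script: str, interval: int, cutoff: int) -> str:
    """Rewrite INTERVAL(n) and the first partition's upper bound in a script."""
    script = re.sub(r"INTERVAL\(\d+\)", f"INTERVAL({interval})", script, count=1)
    script = re.sub(
        r"VALUES LESS THAN \(\d+\)",
        f"VALUES LESS THAN ({cutoff})",
        script,
        count=1,
    )
    return script


# Abbreviated fragment as shown in this topic.
generated = (
    "CREATE TABLE W_WRKFC_EVT_MONTH_F … PARTITION BY RANGE (EVENT_MONTH_WID) "
    "INTERVAL(1) (PARTITION p0 VALUES LESS THAN (1)) …"
)

print(adjust_partition_script(generated, interval=3, cutoff=200801))
```

Review the resulting script before you execute it, because the sketch replaces only the first occurrence of each pattern.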

Financial Analytics supports security over Billing and Revenue Management Business Unit in Accounts Receivable subject areas. This Business Unit is the same as Receivables Business Unit in PeopleSoft, and the list of Receivables Business Units that a user has access to is determined by the grants in PeopleSoft.

Configuring Accounts Receivable Security

For data security filters to be applied, you must enable the initialization blocks that correspond to the deployed source system. To enable Accounts Receivable security for PeopleSoft, enable the Oracle PeopleSoft initialization block and make sure that the initialization blocks of all other source systems are disabled. The initialization block names for each source system are listed below. If more than one source system is deployed, then you must also enable the initialization blocks of those source systems.

Oracle Fusion Applications: Receivables Business Unit

Oracle E-Business Suite: Operating Unit Organizations EBS

Oracle PeopleSoft: Receivables Organizations

To enable initialization blocks, follow the steps below:

In Oracle BI EE Administration Tool, edit the BI metadata repository (for example, OracleBIAnalyticsApps.rpd).

Choose Manage, then Variables to display the Variables dialog.

Under Session – Initialization Blocks, open the initialization block that you need to enable.

Clear the Disabled check box.

Save the RPD file.

The following BI Duty Roles are applicable to the Accounts Receivable subject area.

AR Analyst PSFT

AR Manager PSFT

These duty roles control which subject areas and dashboard content the user can access. They also ensure that the data security filters are applied to all queries. For more information about how to define new groups and mappings for Users and BI Roles, see Section B.2.44, "How to Define New Groups and Mappings for Users and BI Roles".

Financial Analytics supports security over Payables Invoicing Business Unit in Accounts Payable subject areas. This Business Unit is the same as Business Unit in Oracle Fusion Applications, and the list of Business Units that a user has access to is determined by the grants in Oracle Fusion Applications.

Configuring Accounts Payable Security

In order for data security filters to be applied, appropriate initialization blocks need to be enabled depending on the deployed source system. The initialization block names relevant to various source systems are given below. Oracle Fusion Applications security is enabled by default so there is no change required in the setup. If more than one source system is deployed, then you must also enable the initialization blocks of those source systems. For example:

Oracle Fusion Applications: Payables Business Unit

Oracle E-Business Suite: Operating Unit Organizations EBS

Oracle PeopleSoft: Payables Organizations

To enable initialization blocks, follow the steps below:

In Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

Choose Manage, then Variables to display the Variables dialog.

Under Session – Initialization Blocks, open the initialization block that you need to enable.

Clear the Disabled check box.

Save the RPD file.

The following BI Duty Roles are applicable to the Accounts Payable subject area.

OBIA_ACCOUNTS_PAYABLE_MANAGERIAL_ANALYSIS_DUTY

These duty roles control which subject areas and dashboard content the user can access. They also ensure that the data security filters are applied to all queries. For more information about how to define new groups and mappings for Users and BI Roles, see Section B.2.44, "How to Define New Groups and Mappings for Users and BI Roles".

Financial Analytics supports security over Payables Invoicing Business Unit in Accounts Payable subject areas. This Business Unit is the same as Operating Unit Organizations in E-Business Suite, and the list of Operating Unit Organizations that a user has access to is determined by the grants in E-Business Suite.

Configuring Accounts Payable Security

For data security filters to be applied, you must enable the initialization blocks that correspond to the deployed source system. To enable Accounts Payable security for E-Business Suite, enable the Oracle E-Business Suite initialization block and make sure that the initialization blocks of all other source systems are disabled. The initialization block names for each source system are listed below. If more than one source system is deployed, then you must also enable the initialization blocks of those source systems. For example:

Oracle Fusion Applications: Payables Business Unit

Oracle E-Business Suite: Operating Unit Organizations EBS

Oracle PeopleSoft: Payables Organizations

To enable initialization blocks, follow the steps below:

In Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

Choose Manage, then Variables to display the Variables dialog.

Under Session – Initialization Blocks, open the initialization block that you need to enable.

Clear the Disabled check box.

Save the RPD file.

The following BI Duty Roles are applicable to the Accounts Payable subject area.

AP Analyst

AP Manager

These duty roles control which subject areas and dashboard content the user can access. They also ensure that the data security filters are applied to all queries. For more information about how to define new groups and mappings for Users and BI Roles, see Section B.2.44, "How to Define New Groups and Mappings for Users and BI Roles".

Financial Analytics supports security over Payables Invoicing Business Unit in Accounts Payable subject areas. This Business Unit is the same as Payables Business Unit in PeopleSoft, and the list of Payables Business Units that a user has access to is determined by the grants in PeopleSoft.

Configuring Accounts Payable Security

For data security filters to be applied, you must enable the initialization blocks that correspond to the deployed source system. To enable Accounts Payable security for PeopleSoft, enable the Oracle PeopleSoft initialization block and make sure that the initialization blocks of all other source systems are disabled. The initialization block names for each source system are listed below. If more than one source system is deployed, then you must also enable the initialization blocks of those source systems. For example:

Oracle Fusion Applications: Payables Business Unit

Oracle E-Business Suite: Operating Unit Organizations EBS

Oracle PeopleSoft: Payables Organizations

To enable initialization blocks, follow the steps below:

In Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

Choose Manage, then Variables to display the Variables dialog.

Under Session – Initialization Blocks, open the initialization block that you need to enable.

Clear the Disabled check box.

Save the RPD file.

The following BI Duty Roles are applicable to the Accounts Payable subject area.

AP Analyst PSFT

AP Manager PSFT

These duty roles control which subject areas and dashboard content the user can access. They also ensure that the data security filters are applied to all queries. For more information about how to define new groups and mappings for Users and BI Roles, see Section B.2.44, "How to Define New Groups and Mappings for Users and BI Roles".

Financial Analytics supports security over Billing and Revenue Management Business Unit in Accounts Receivable subject areas. This Business Unit is the same as Business Unit in Oracle Fusion Applications, and the list of Business Units that a user has access to is determined by the grants in Oracle Fusion Applications.

Configuring Accounts Receivable Security

In order for data security filters to be applied, appropriate initialization blocks need to be enabled depending on the deployed source system. The initialization block names relevant to various source systems are given below. Oracle Fusion Applications security is enabled by default so there is no change required in the setup. If more than one source system is deployed, then you must also enable the initialization blocks of those source systems. For example:

Oracle Fusion Applications: Receivables Business Unit

Oracle E-Business Suite: Operating Unit Organizations EBS

Oracle PeopleSoft: Receivables Organizations

To enable initialization blocks, follow the steps below:

In Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

Choose Manage, then Variables to display the Variables dialog.

Under Session – Initialization Blocks, open the initialization block that you need to enable.

Clear the Disabled check box.

Save the RPD file.

The following BI Duty Roles are applicable to the Accounts Receivable subject area.

OBIA_ACCOUNTS_RECEIVABLE_MANAGERIAL_ANALYSIS_DUTY

These duty roles control which subject areas and dashboard content the user can access. They also ensure that the data security filters are applied to all queries. For more information about how to define new groups and mappings for Users and BI Roles, see Section B.2.44, "How to Define New Groups and Mappings for Users and BI Roles".

Financial Analytics supports security over Billing and Revenue Management Business Unit in Accounts Receivable subject areas. This Business Unit is the same as Operating Unit Organization in E-Business Suite, and the list of Operating Unit Organizations that a user has access to is determined by the grants in E-Business Suite.

Configuring Accounts Receivable Security

For data security filters to be applied, you must enable the initialization blocks that correspond to the deployed source system. To enable Accounts Receivable security for E-Business Suite, enable the Oracle E-Business Suite initialization block and make sure that the initialization blocks of all other source systems are disabled. The initialization block names for each source system are listed below. If more than one source system is deployed, then you must also enable the initialization blocks of those source systems. For example:

Oracle Fusion Applications: Receivables Business Unit

Oracle E-Business Suite: Operating Unit Organizations EBS

Oracle PeopleSoft: Receivables Organizations

To enable initialization blocks, follow the steps below:

In Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

Choose Manage, then Variables to display the Variables dialog.

Under Session – Initialization Blocks, open the initialization block that you need to enable.

Clear the Disabled check box.

Save the RPD file.

The following BI Duty Roles are applicable to the Accounts Receivable subject area.

AR Analyst

AR Manager

These duty roles control which subject areas and dashboard content the user can access. They also ensure that the data security filters are applied to all queries. For more information about how to define new groups and mappings for Users and BI Roles, see Section B.2.44, "How to Define New Groups and Mappings for Users and BI Roles".
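The initialization block names listed in the Accounts Receivable and Accounts Payable security topics above can be collected into a single lookup, which may help when you check which blocks to enable and which to leave disabled for a given deployment. The following Python sketch is a planning aid only. The function blocks_to_enable is hypothetical and does not modify the RPD file; the change itself is still made in Oracle BI EE Administration Tool.

```python
# Initialization block names as listed in the security topics above.
INIT_BLOCKS = {
    "Accounts Receivable": {
        "Oracle Fusion Applications": "Receivables Business Unit",
        "Oracle E-Business Suite": "Operating Unit Organizations EBS",
        "Oracle PeopleSoft": "Receivables Organizations",
    },
    "Accounts Payable": {
        "Oracle Fusion Applications": "Payables Business Unit",
        "Oracle E-Business Suite": "Operating Unit Organizations EBS",
        "Oracle PeopleSoft": "Payables Organizations",
    },
}


def blocks_to_enable(subject_area, deployed_sources):
    """Return (enable, disable) lists of initialization block names."""
    blocks = INIT_BLOCKS[subject_area]
    enable = [name for src, name in blocks.items() if src in deployed_sources]
    disable = [name for src, name in blocks.items() if src not in deployed_sources]
    return enable, disable


enable, disable = blocks_to_enable("Accounts Receivable", {"Oracle PeopleSoft"})
print("Enable:", enable)
print("Disable:", disable)
```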

The Bill of Materials (BOM) functional area enables you to determine the profit margin of the components that comprise the finished goods. BOM enables you to keep up with the most viable vendors in terms of cost and profit, and to keep your sales organization aware of product delivery status, including shortages.

To deploy objects in Oracle E-Business Suite for exploding the BOM, ensure that the Oracle E-Business Suite source environment meets the minimum patch level for your version, as follows:

Customers with Oracle E-Business Suite version R12 must be at or above patch level 16023729.

Customers with Oracle E-Business Suite version R12.0.x or OPI patch set A must be at or above patch level 16037126:R12.OPI.A.

Customers with Oracle E-Business Suite version R12.1.x or OPI patch set B must be at or above patch level 16037126:R12.OPI.B.

Customers with Oracle E-Business Suite version 11i must be at or above patch level 16036191.

Refer to the System Requirements and Supported Platforms for Oracle Business Intelligence Applications for full information about supported patch levels for your source system.

Note: Systems at or above these minimum patch levels include the package OPI_OBIA_BOMPEXPL_WRAPPER_P in the APPS schema, and include the following tables in the OPI schema with alias tables in the APPS schema:

OPI_OBIA_W_BOM_HEADER_DS

OPI_OBIA_BOM_EXPLOSION

OBIA_BOM_EXPLOSION_TEMP

How to Configure the Bill of Materials Explosion Options

The Bill of Materials (BOM) functional area enables you to analyze the components that comprise the finished goods. BOM enables you to determine how many products use a certain component. It also enables you to get visibility into the complete BOM hierarchy for a finished product. In order to explode BOM structures, certain objects need to be deployed in your E-Business Suite system.

Note: To run the ETL as the apps_read_only user, you must first run the following DCL commands from the APPS schema:

Grant insert on opi.opi_obia_w_bom_header_ds to &read_only_user;

Grant analyze any to &read_only_user;

You can explode the BOM structure with three different options:

All. All the BOM components are exploded regardless of their effective date or disable date. To explode a BOM component is to expand the BOM tree structure.

Current. The incremental extract logic considers any changed components that are currently effective, any components that are effective after the last extraction date, or any components that are disabled after the last extraction date.

Current and Future. All the BOM components that are effective now or in the future are exploded. The disabled components are left out.

These options are controlled by the EXPLODE_OPTION variable. The EXPLODE_OPTION variable is preconfigured with a value of 2, explode Current BOM structure.

There are five different BOM types in a source system: 1 - Model, 2 - Option Class, 3 - Planning, 4 - Standard, and 5 - Product Family. By default, only the Standard BOM type is extracted and exploded. You can control this selection using the EBS_BOM_TYPE parameter.

The SDE_ORA_BOMItemFact_Header mapping invokes the OPI_OBIA_BOMPEXPL_P package in the E-Business Suite database to explode the BOM structure. The table below lists the variables used to control the stored procedure.

Table B-2 Variables for the BOM Explosion Stored Procedure

| Input Variable | Preconfigured Value | Description |
|---|---|---|
| BOM_OR_ENG | 1 | 1: BOM; 2: ENG |
| COMMIT_POINT | 5000 | Number of records to trigger a Commit. |
| COMP_CODE | Not applicable. | This parameter is deprecated and no longer affects the functionality of the procedure. |
| CST_TYPE_ID | 0 | This parameter is deprecated and no longer affects the functionality of the procedure. |
| EXPLODE_OPTION | 2 | 1: All; 2: Current; 3: Current and Future |
| EXPL_QTY | 1 | Explosion quantity. |
| IMPL_FLAG | 1 | 1: Implemented Only; 2: Implemented and Non-implemented |
| LEVELS_TO_EXPLODE | 10 | Number of levels to explode. |
| MODULE | 2 | 1: Costing; 2: BOM; 3: Order Entry; 4: ATO; 5: WSM |
| ORDER_BY | 1 | Controls the order of the records. 1: Operation Sequence Number, Item Number. 2: Item Number, Operation Sequence Number. |
| PLAN_FACTOR_FLAG | 2 | 1: Yes; 2: No |
| RELEASE_OPTION | 0 | Option to use released items. |
| STD_COMP_FLAG | 0 | 1: Explode only standard components; 2: All components |
| UNIT_NUMBER | Not applicable. | When entered, limits the components exploded to the specified Unit. |
| VERIFY_FLAG | 0 | This parameter is deprecated and no longer affects the functionality of the procedure. |
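Several of the variables in Table B-2 are numeric codes, so it can be useful to decode them when you review a configuration. The following Python sketch copies a subset of the preconfigured values and code meanings from the table. The names PRECONFIGURED, CODE_MEANINGS, and describe are hypothetical, and the sketch does not call the stored procedure.

```python
# Preconfigured values and code meanings copied from Table B-2 (subset).
PRECONFIGURED = {
    "BOM_OR_ENG": 1,
    "COMMIT_POINT": 5000,
    "EXPLODE_OPTION": 2,
    "EXPL_QTY": 1,
    "IMPL_FLAG": 1,
    "LEVELS_TO_EXPLODE": 10,
    "MODULE": 2,
    "ORDER_BY": 1,
    "PLAN_FACTOR_FLAG": 2,
}

CODE_MEANINGS = {
    "BOM_OR_ENG": {1: "BOM", 2: "ENG"},
    "EXPLODE_OPTION": {1: "All", 2: "Current", 3: "Current and Future"},
    "IMPL_FLAG": {1: "Implemented Only", 2: "Implemented and Non-implemented"},
    "MODULE": {1: "Costing", 2: "BOM", 3: "Order Entry", 4: "ATO", 5: "WSM"},
    "PLAN_FACTOR_FLAG": {1: "Yes", 2: "No"},
}


def describe(name, value=None):
    """Render a variable as text, decoding coded values where the table defines them."""
    value = PRECONFIGURED[name] if value is None else value
    meanings = CODE_MEANINGS.get(name)
    if meanings is None:
        return f"{name} = {value}"
    if value not in meanings:
        raise ValueError(f"{value} is not a documented value for {name}")
    return f"{name} = {value} ({meanings[value]})"


print(describe("EXPLODE_OPTION"))
print(describe("EXPLODE_OPTION", 3))
print(describe("COMMIT_POINT"))
```

Raising an error for undocumented codes is a design choice of the sketch; it surfaces typing mistakes early rather than passing an unknown value along.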

The Dimension tables listed below contain twenty generic attribute columns to allow for storage and display of customizable values from the source application system:

W_PRODUCT_ADDL_ATTR_D

W_PARTY_ORG_ADDL_ATTR_D

W_CUSTOMER_ACCOUNT_D (contains the new Attributes columns in the base table itself)

W_INT_ORG_D (contains the new Attributes columns in the base table itself)

In Oracle E-Business Suite, Self-service time cards are defined based on templates. Those templates have seeded mappings that correspond to the physical table and column name of a given time card attribute.

The BI Apps product is shipped with mappings based on the seeded time card templates.

Optionally, customers can change this mapping by editing the associated variable for each column mapping. In the first release, this must be done within the ODI metadata variables that have the prefix HR_TIMECARD_FLEX_MAP. The screenshot below shows an example.

When changing the values, be careful not to introduce an ETL runtime error, for example, by introducing a SQL syntax error.

Note: Refer to "How to set up Group Account Numbers for Peoplesoft" for general concepts about group account number and Financial Statement Item code.

When a user maps a GL natural account to an incorrect group account number, incorrect accounting entries might be inserted into the fact table. For example, the natural account 620000 is mistakenly classified under 'AR' group account number when it should be classified under 'AP' group account number. When this happens, the ETL program will try to reconcile all GL journals charged to account 620000 against sub ledger accounting records in AR Fact (W_AR_XACT_F). Since these GL journal lines did not come from AR, the ETL program will not be able to find the corresponding sub ledger accounting records for these GL journal lines. In this case, the ETL program will insert 'Manual' records into the AR fact table because it thinks that these GL journal lines are 'Manual' journal entries created directly in the GL system charging to the AR accounts.

To correct group account number configurations for PeopleSoft, map the GL natural account to the correct group account in the input CSV file named file_group_acct_codes_psft.csv.

If you add values, then you also need to update the BI metadata repository (that is, the RPD file).

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

For example, before correction, a CSV file has the following values (Incorrect Group Account Number assignment):

BUSINESS_UNIT = AUS01

FROM ACCT = 620000

TO ACCT = 620000

GROUP_ACCT_NUM = AR

After correction, account '620000' should now correctly point to 'AP' group account number, and the CSV file would have the following (corrected) values:

BUSINESS_UNIT = AUS01

FROM ACCT = 620000

TO ACCT = 620000

GROUP_ACCT_NUM = AP

Based on the Group Account corrections made in the CSV file, the next ETL process would reassign the group accounts correctly and fix the entries that were made to the fact tables from the previous ETL run(s).

Setting up the JDE_RATE_TYPE parameter

The concept of Rate Type in JD Edwards EnterpriseOne is different to that defined in Oracle Business Analytics Warehouse.

In Oracle's JD Edwards EnterpriseOne, the Rate Type is an optional key; it is not used during Currency Exchange Rate calculations.

ODI uses the JDE_RATE_TYPE parameter to populate the Rate_Type field in the W_EXCH_RATE_GS table. By default, the JDE_RATE_TYPE parameter has a value of "Actual". The query and lookup on W_EXCH_RATE_G will succeed when the RATE_TYPE field in the W_EXCH_RATE_G table contains the same value as the GLOBAL1_RATE_TYPE, GLOBAL2_RATE_TYPE and GLOBAL3_RATE_TYPE fields in the W_GLOBAL_CURR_G table.
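The matching condition can be expressed as a small check. This is only a sketch in Python: the field names come from the text above, while the function name and the sample row are hypothetical, and the real lookup is performed by the ETL against the warehouse tables.

```python
# Hypothetical check of the condition stated above; the sample data is invented.

GLOBAL_RATE_TYPE_FIELDS = (
    "GLOBAL1_RATE_TYPE",
    "GLOBAL2_RATE_TYPE",
    "GLOBAL3_RATE_TYPE",
)

def rate_type_matches(rate_type, global_curr_row):
    """True when RATE_TYPE equals all three GLOBALn_RATE_TYPE values."""
    return all(global_curr_row[field] == rate_type for field in GLOBAL_RATE_TYPE_FIELDS)


# A W_GLOBAL_CURR_G row, reduced to the three fields of interest.
global_curr = {
    "GLOBAL1_RATE_TYPE": "Actual",
    "GLOBAL2_RATE_TYPE": "Actual",
    "GLOBAL3_RATE_TYPE": "Actual",
}
ok = rate_type_matches("Actual", global_curr)
mismatch = rate_type_matches("Corporate", global_curr)
```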

To add more dates, you need to understand how the Order Cycle Times table is populated. Therefore, if you want to change the dates loaded into the Order Cycle Time table (W_SALES_CYCLE_LINE_F), then you have to modify the interfaces for both a full load and an incremental load that take the dates from the W_* tables and load them into the Cycle Time table.

To add dates to the Cycle Time table load:

In ODI Designer Navigator, expand Models - Oracle BI Applications - Oracle BI Applications - Fact.

Find W_SALES_CYCLE_LINE_F and add a column to store the date that you want to add.

For example, if you are loading the Validated on Date in the W_SALES_CYCLE_LINE_F table, then you need to create a new column, VALIDATED_ON_DT, and modify the target definition of the W_SALES_CYCLE_LINE_F table.

Save the changes.

Open Projects - BI Apps Project - Mappings - PLP folders.

Find the PLP_SalesCycleLinesFact_Load folder and modify the interfaces under that folder to select the new column from any of the following source tables, and load it to the W_SALES_CYCLE_LINE_F target table:

W_SALES_ORDER_LINE_F

W_SALES_INVOICE_LINE_F

W_SALES_PICK_LINE_F

W_SALES_SCHEDULE_LINE_F

Modify the temp interfaces and the main interfaces for both a full load and an incremental load.

This section explains how to map Oracle General Ledger Accounts to Group Account Numbers, and includes the following topics:

An overview of setting up Oracle General Ledger Accounts - see Section B.2.18.1, "Overview of Mapping Oracle GL Accounts to Group Account Numbers".

A description of how to map Oracle General Ledger Accounts to Group Account Numbers - see Section B.2.18.2, "How to Map Oracle GL Account Numbers to Group Account Numbers".

An example that explains how to add Group Account Number metrics to the Oracle BI Repository - see Section B.2.18.3, "Example of Adding Group Account Number Metrics to the Oracle BI Repository".

Note:

It is critical that the GL account numbers are mapped to the group account numbers (or domain values) because the metrics in the GL reporting layer use these values.

Group Account Number Configuration is an important step in the configuration of Financial Analytics, because it determines the accuracy of the majority of metrics in the General Ledger and Profitability module. Group Accounts in combination with Financial Statement Item Codes are also leveraged in the GL reconciliation process, to ensure that subledger data reconciles with GL journal entries. This topic is discussed in more detail later in this section.

You set up General Ledger accounts using the following configuration file:

file_group_acct_codes_ora.csv - this file maps General Ledger accounts to group account codes.

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

You can categorize your Oracle General Ledger accounts into specific group account numbers. The group account number is used during data extraction as well as front-end reporting. The GROUP_ACCT_NUM field in the GL Account dimension table W_GL_ACCOUNT_D denotes the nature of the General Ledger accounts (for example, cash account, payroll account). For a list of the Group Account Number domain values, see Oracle Business Analytics Warehouse Data Model Reference. The mappings to General Ledger Accounts Numbers are important for both Profitability analysis and General Ledger analysis (for example, Balance Sheets, Profit and Loss, Cash Flow statements).

The logic for assigning the group accounts is located in the file_group_acct_codes_ora.csv file. Table B-3 shows an example configuration of the file_group_acct_codes_ora.csv file.

Table B-3 Example Configuration of file_group_acct_codes_ora.csv File

| CHART OF ACCOUNTS ID | FROM ACCT | TO ACCT | GROUP_ACCT_NUM |
|---|---|---|---|
| 1 | 101010 | 101099 | CA |
| 1 | 131010 | 131939 | FG INV |
| 1 | 152121 | 152401 | RM INV |
| 1 | 171101 | 171901 | WIP INV |
| 1 | 173001 | 173001 | PPE |
| 1 | 240100 | 240120 | ACC DEPCN |
| 1 | 261000 | 261100 | INT EXP |
| 1 | 181011 | 181918 | CASH |
| 1 | 251100 | 251120 | ST BORR |

In Table B-3, in the first row, all accounts within the account number range from 101010 to 101099 that have a Chart of Account (COA) ID equal to 1 are assigned to Current Asset (that is, CA). Each row maps all accounts within the specified account number range and within the given chart of account ID.
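The range-based assignment can be illustrated with a short Python sketch. It is not the ETL implementation: the presence of a header row, the numeric comparison of account numbers, and the function names `load_ranges` and `group_for` are assumptions made for this example. The sample rows are taken from Table B-3.

```python
import csv
import io

# Sample rows copied from Table B-3. A header row is assumed for readability.
SAMPLE = """CHART OF ACCOUNTS ID,FROM ACCT,TO ACCT,GROUP_ACCT_NUM
1,101010,101099,CA
1,131010,131939,FG INV
1,152121,152401,RM INV
"""

def load_ranges(text):
    """Parse mapping rows into (coa_id, from_acct, to_acct, group) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        (
            row["CHART OF ACCOUNTS ID"],
            int(row["FROM ACCT"]),  # assumes numeric natural accounts
            int(row["TO ACCT"]),
            row["GROUP_ACCT_NUM"],
        )
        for row in reader
    ]

def group_for(ranges, coa_id, account):
    """Return the group account number whose range contains the account, else None."""
    for row_coa, low, high, group in ranges:
        if row_coa == coa_id and low <= account <= high:
            return group
    return None


ranges = load_ranges(SAMPLE)
current_asset = group_for(ranges, "1", 101050)   # inside the first row's range
other_coa = group_for(ranges, "2", 101050)       # same account, different COA ID
```

The second lookup returns no group because a row applies only within its own chart of accounts ID.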

If you need to create a new group of account numbers, you can create new rows in Oracle BI Applications Configuration Manager. You can then assign GL accounts to the new group of account numbers in the file_group_acct_codes_ora.csv file.

You must also add a new row in Oracle BI Applications Configuration Manager to map Financial Statement Item codes to the respective Base Table Facts. Table B-4 shows the Financial Statement Item codes to which Group Account Numbers must map, and their associated base fact tables.

Table B-4 Financial Statement Item Codes and Associated Base Fact Tables

| Financial Statement Item Codes | Base Fact Tables |
|---|---|
| AP | AP base fact (W_AP_XACT_F) |
| AR | AR base fact (W_AR_XACT_F) |
| COGS | Cost of Goods Sold base fact (W_GL_COGS_F) |
| REVENUE | Revenue base fact (W_GL_REVN_F) |
| TAX | Tax base fact (W_TAX_XACT_F) (see Footnote 1) |
| OTHERS | GL Journal base fact (W_GL_OTHER_F) |

Footnote 1 E-Business Suite adapters for Financial Analytics do not support the Tax base fact (W_TAX_XACT_F).

By mapping your GL accounts against the group account numbers and then associating the group account number to a Financial Statement Item code, you have indirectly associated the GL account numbers to Financial Statement Item codes as well. This association is important to perform GL reconciliation and to ensure the subledger data reconciles with GL journal entries. It is possible that after an invoice has been transferred to GL, a GL user might adjust that invoice in GL. In this scenario, it is important to ensure that the adjustment amount is reflected in the subledger base fact as well as balance tables. To determine such subledger transactions in GL, the reconciliation process uses Financial Statement Item codes.

Financial Statement Item codes are internal codes used by the ETL process to process the GL journal records during the GL reconciliation process against the subledgers. When the ETL process reconciles a GL journal record, it looks at the Financial Statement Item code associated with the GL account that the journal is charging against, and then uses the value of the Financial Statement Item code to decide which base fact the GL journal should reconcile against. For example, when processing a GL journal that charges to a GL account that is associated with the 'AP' Financial Statement Item code, the ETL process goes against the AP base fact table (W_AP_XACT_F) and tries to locate the matching AP accounting entry. If that GL account is associated with the 'REVENUE' Financial Statement Item code, the ETL process goes against the Revenue base fact table (W_GL_REVN_F) and tries to locate the matching Revenue accounting entry.
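The routing decision can be pictured as a simple lookup. The following Python sketch uses the code-to-table pairs from Table B-4. The function name `base_fact_for` is hypothetical, and the real ETL process performs this routing internally.

```python
# Code-to-table pairs taken from Table B-4.
BASE_FACT_BY_FSI_CODE = {
    "AP": "W_AP_XACT_F",
    "AR": "W_AR_XACT_F",
    "COGS": "W_GL_COGS_F",
    "REVENUE": "W_GL_REVN_F",
    "TAX": "W_TAX_XACT_F",   # not supported by every adapter, see Footnote 1
    "OTHERS": "W_GL_OTHER_F",
}

def base_fact_for(fsi_code):
    """Return the base fact table that a journal with this code reconciles against."""
    try:
        return BASE_FACT_BY_FSI_CODE[fsi_code]
    except KeyError:
        raise ValueError(f"unknown Financial Statement Item code: {fsi_code!r}") from None


ap_table = base_fact_for("AP")
revenue_table = base_fact_for("REVENUE")
```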

This section explains how to map Oracle General Ledger Account Numbers to Group Account Numbers.

Note:

If you add new Group Account Numbers to the file_group_acct_codes_<source system type>.csv file, you must also add metrics to the BI metadata repository (that is, the RPD file). See Section B.2.18.3, "Example of Adding Group Account Number Metrics to the Oracle BI Repository" for more information.

To map Oracle GL account numbers to group account numbers:

Edit the file_group_acct_codes_ora.csv file.

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

For each Oracle GL account number that you want to map, create a new row in the file containing the following fields:

| Field Name | Description |
|---|---|
| CHART OF ACCOUNTS ID | The ID of the GL chart of account. |
| FROM ACCT | The lower limit of the natural account range. This is based on the natural account segment of your GL accounts. |
| TO ACCT | The higher limit of the natural account range. This is based on the natural account segment of your GL accounts. |
| GROUP_ACCT_NUM | This field denotes the group account number of the Oracle General Ledger account, as specified in the warehouse domain Group Account in Oracle BI Applications Configuration Manager. For example, 'AP' for Accounts Payables, 'CASH' for cash account, 'GEN PAYROLL' for payroll account, and so on. |

For example:

101, 1110, 1110, CASH

101, 1210, 1210, AR

101, 1220, 1220, AR

Note:

You can optionally remove the unused rows from the CSV file.

Ensure that the values that you specify in the file_group_acct_codes_ora.csv file are consistent with the values that are specified in Oracle BI Applications Configuration Manager for Group Accounts.

Save and close the CSV file.
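Before handing the file back to your system administrator, a quick consistency check can catch common mistakes. The following Python sketch is one possible check, not a tool shipped with the product. The function name `validate_rows` and the `allowed` set are hypothetical, the allowed values would need to be copied from the Group Account domain in Oracle BI Applications Configuration Manager, and numeric natural accounts are assumed.

```python
def validate_rows(rows, allowed_groups):
    """Return a list of problems found in (coa_id, from_acct, to_acct, group) rows."""
    problems = []
    for number, (coa_id, from_acct, to_acct, group) in enumerate(rows, start=1):
        # The lower limit of a range must not exceed the higher limit.
        if int(from_acct) > int(to_acct):
            problems.append(
                f"row {number}: FROM ACCT {from_acct} is greater than TO ACCT {to_acct}"
            )
        # The group must exist in the configured domain values.
        if group not in allowed_groups:
            problems.append(f"row {number}: unknown GROUP_ACCT_NUM {group!r}")
    return problems


rows = [
    ("101", "1110", "1110", "CASH"),
    ("101", "1210", "1210", "AR"),
    ("101", "1230", "1220", "PAYROL"),  # deliberately wrong range and code
]
allowed = {"CASH", "AR", "AP"}  # hypothetical subset of the domain values
problems = validate_rows(rows, allowed)
```

Only the third row is reported, once for the inverted range and once for the unknown code.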

If you add new Group Account Numbers to the file_group_acct_codes_<source system type>.csv file, then you must also use Oracle BI EE Administration Tool to add metrics to the Oracle BI repository to expose the new Group Account Numbers, as described in this example.

This example is applicable to the following tasks:

For E-Business Suite, Section B.2.18.2, "How to Map Oracle GL Account Numbers to Group Account Numbers"

For PeopleSoft, Section B.2.19.2, "How to Map GL Account Numbers to Group Account Numbers"

For JD Edwards, Section B.2.40.2, "How to Map GL Account Numbers to Group Account Numbers"

This example assumes that you have a new Group Account Number called 'Payroll' (Domain member code 'PAYROLL'), and you want to add a new metric to the Presentation layer called 'Payroll Expense'.

To add a new metric in the logical table Fact – Fins – GL Other Posted Transaction:

Using Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

For example, the file OracleBIAnalyticsApps.rpd is located at:

ORACLE_INSTANCE\bifoundation\OracleBIServerComponent\coreapplication_obis<n>\repository

In the Business Model and Mapping layer:

Create a logical column named 'Payroll Expense' in the logical table 'Fact – Fins – GL Journals Posted'.

For example, right-click the Core\Fact - Fins - GL Journals Posted\ object and choose New Object, then Logical Column, to display the Logical Column dialog. Specify Payroll Expense in the Name field.

Display the Aggregation tab, and then choose 'Sum' in the Default aggregation rule drop-down list.

Click OK to save the details and close the dialog.

Expand the Core\Fact - Fins - GL Journals Posted\Sources\ folder and double click the Fact_W_GL_OTHER_GRPACCT_FSCLPRD_A source to display the Logical Table Source dialog.

Display the Column Mapping tab.

Select Show unmapped columns.

Locate the Payroll Expense expression, and click the Expression Builder button to open Expression Builder.

Use Expression Builder to specify the following SQL statement:

FILTER("Core"."Fact - Fins - GL Journals Posted"."Transaction Amount" USING "Core"."Dim - GL Account"."Group Account Number" = 'PAYROLL')

The filter condition refers to the new Group Account Number 'PAYROLL'.

Repeat steps (d) to (h), from expanding the Sources folder through specifying the expression in Expression Builder, for each Logical Table Source. The steps must be repeated because, in this example, the logical table has multiple Logical Table Sources for the fact table and the aggregation tables. Modify the expression in step (h) for each Logical Table Source by using the fact table to which it corresponds.

Save the details.

To expose the new repository objects in end users' dashboards and reports, drag the new objects from the Business Model and Mapping layer to an appropriate folder in the Presentation layer.

To add a new metric in the logical table Fact – Fins – GL Balance:

Using Oracle BI EE Administration Tool, edit the BI metadata repository (RPD file).

For example, the file OracleBIAnalyticsApps.rpd is located at:

ORACLE_INSTANCE\bifoundation\OracleBIServerComponent\coreapplication_obis<n>\repository

In the Business Model and Mapping layer:

Create a logical column named 'Payroll Expense' in logical table 'Fact – Fins – GL Balance'.

For example, right-click the Core\Fact – Fins – GL Balance object and choose New Object, then Logical Column, to display the Logical Column dialog. Specify Payroll Expense in the Name field.

In the Column Source tab, select Derived from existing columns using an expression.

Click the Expression Builder button to display Expression Builder.

Use Expression Builder to specify the following SQL statement:

FILTER("Core"."Fact - Fins - GL Balance"."Activity Amount" USING "Core"."Dim - GL Account"."Group Account Number" = 'PAYROLL')

The filter condition refers to the new Group Account Number 'PAYROLL'.

Save the details.

To expose the new repository objects in end users' dashboards and reports, drag the new objects from the Business Model and Mapping layer to an appropriate folder in the Presentation layer.
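If you add several Group Account Numbers, typing each expression by hand is error-prone. The following Python sketch generates the expression text in the form shown in the two procedures above, so that it can be pasted into Expression Builder. The function name `filter_expression` is hypothetical, and the sketch only formats text without validating it against the repository.

```python
# Template that follows the FILTER expressions shown in the procedures above.
TEMPLATE = (
    """FILTER("Core"."{table}"."{measure}" """
    """USING "Core"."Dim - GL Account"."Group Account Number" = '{code}')"""
)

def filter_expression(logical_table, measure, group_account_code):
    """Return the FILTER expression text for one metric."""
    return TEMPLATE.format(
        table=logical_table, measure=measure, code=group_account_code
    )


balance_expr = filter_expression(
    "Fact - Fins - GL Balance", "Activity Amount", "PAYROLL"
)
journal_expr = filter_expression(
    "Fact - Fins - GL Journals Posted", "Transaction Amount", "PAYROLL"
)
```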

This section explains how to map General Ledger Accounts to Group Account Numbers, and includes the following topics:

An overview of setting up General Ledger Accounts - see Section B.2.19.1, "Overview of Mapping GL Accounts to Group Account Numbers."

A description of how to map General Ledger Accounts to Group Account Numbers - see Section B.2.19.2, "How to Map GL Account Numbers to Group Account Numbers."

An example that explains how to add Group Account Number metrics to the Oracle BI Repository - see Section B.2.18.3, "Example of Adding Group Account Number Metrics to the Oracle BI Repository".

Note:

It is critical that the GL account numbers are mapped to the group account numbers (or domain values) because the metrics in the GL reporting layer use these values. For a list of domain values for GL account numbers, see Oracle Business Analytics Warehouse Data Model Reference.

Group Account Number configuration is an important step in the configuration of Financial Analytics, as it determines the accuracy of the majority of metrics in the General Ledger and Profitability module. Group Accounts in combination with Financial Statement Item codes are also leveraged in the GL reconciliation process, to ensure that subledger data reconciles with GL journal entries. This topic is discussed in more detail later in this section.

You can categorize your PeopleSoft General Ledger accounts into specific group account numbers. The GROUP_ACCT_NUM field denotes the nature of the General Ledger accounts.

You set up General Ledger accounts using the following configuration file:

file_group_acct_codes_psft.csv - this file maps General Ledger accounts to group account codes.

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

Examples of the nature of an account include cash account, payroll account, and so on. For a list of the Group Account Number domain values, see Oracle Business Analytics Warehouse Data Model Reference. The group account number configuration is used during data extraction as well as front-end reporting. For example, the group account number configuration is used heavily in both Profitability Analysis (Income Statement) and General Ledger analysis. The logic for assigning the accounts is located in the file_group_acct_codes_psft.csv file.

Table B-5 Layout of file_group_acct_codes_psft.csv File

| BUSINESS_UNIT | FROM_ACCT | TO_ACCT | GROUP_ACCT_NUM |
|---|---|---|---|
| AUS01 | 101010 | 101099 | AP |
| AUS01 | 131010 | 131939 | AR |
| AUS01 | 152121 | 152401 | COGS |
| AUS01 | 171101 | 173001 | OTHER |
| AUS01 | 240100 | 240120 | REVENUE |
| AUS01 | 251100 | 251120 | TAX (see Footnote 1) |

Footnote 1 Oracle's PeopleSoft adapters for Financial Analytics do not support the Tax base fact (W_TAX_XACT_F).

In Table B-5, in the first row, all accounts within the account number range from 101010 to 101099 that have a Business Unit equal to AUS01 are assigned to AP. Each row maps all accounts within the specified account number range and with the given Business Unit. If you need a new group of account numbers, you can assign GL accounts to the new group in the file_group_acct_codes_psft.csv file.

You must also add a new row in Oracle BI Applications Configuration Manager to map Financial Statement Item codes to the respective Base Table Facts. Table B-6 shows the Financial Statement Item codes to which Group Account Numbers must map, and their associated base fact tables.

Table B-6 Financial Statement Item Codes and Associated Base Fact Tables

| Financial Statement Item Codes | Base Fact Tables |
|---|---|
| AP | AP base fact (W_AP_XACT_F) |
| AR | AR base fact (W_AR_XACT_F) |
| COGS | Cost of Goods Sold base fact (W_GL_COGS_F) |
| REVENUE | Revenue base fact (W_GL_REVN_F) |
| TAX | Tax base fact (W_TAX_XACT_F) (see Footnote 1) |
| OTHERS | GL Journal base fact (W_GL_OTHER_F) |

Footnote 1 Oracle's PeopleSoft adapters for Financial Analytics do not support the Tax base fact (W_TAX_XACT_F).

By mapping your GL accounts against the group account numbers and then associating the group account number to a Financial Statement Item code, you have indirectly associated the GL account numbers to Financial Statement Item codes as well. This association is important to perform GL reconciliation and ensure the subledger data reconciles with GL journal entries. It is possible that after an invoice has been transferred to GL, a GL user might adjust that invoice in GL. In this scenario, it is important to ensure that the adjustment amount is reflected in the subledger base fact as well as balance tables. To determine such subledger transactions in GL, the reconciliation process uses Financial Statement Item codes.

Financial Statement Item codes are internal codes used by the ETL process to process the GL journal records during the GL reconciliation process against the subledgers. When the ETL process reconciles a GL journal record, it looks at the Financial Statement Item code associated with the GL account that the journal is charging against, and then uses the value of the Financial Statement Item code to decide which base fact the GL journal should reconcile against. For example, when processing a GL journal that charges to a GL account that is associated with the 'AP' Financial Statement Item code, the ETL process goes against the AP base fact table (W_AP_XACT_F) and tries to locate the matching AP accounting entry. If that GL account is associated with the 'REVENUE' Financial Statement Item code, the ETL process goes against the Revenue base fact table (W_GL_REVN_F) and tries to locate the matching Revenue accounting entry.

This section explains how to map General Ledger Account Numbers to Group Account Numbers.

Note:

If you add new Group Account Numbers to the file_group_acct_codes_<source system type>.csv file, you must also add metrics to the BI metadata repository (that is, the RPD file). See Section B.2.18.3, "Example of Adding Group Account Number Metrics to the Oracle BI Repository" for more information.

To map PeopleSoft GL account numbers to group account numbers:

Edit the file_group_acct_codes_psft.csv file.

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

For each GL account number that you want to map, create a new row in the file containing the following fields:

| Field Name | Description |
|---|---|
| BUSINESS_UNIT | The ID of the BUSINESS UNIT. |
| FROM ACCT | The lower limit of the natural account range. This is based on the natural account segment of your GL accounts. |
| TO ACCT | The higher limit of the natural account range. This is based on the natural account segment of your GL accounts. |
| GROUP_ACCT_NUM | This field denotes the group account number of the General Ledger account, as specified in the Group Account domain in Oracle BI Applications Configuration Manager. For example, 'AP' for Accounts Payables, 'CASH' for cash account, 'GEN PAYROLL' for payroll account, and so on. |

For example:

AUS01, 1110, 1110, CASH

AUS01, 1210, 1210, AR

AUS01, 1220, 1220, AR

Note:

You can optionally remove the unused rows in the CSV file.

Ensure that the values that you specify in the file_group_acct_codes_psft.csv file are consistent with the values that are specified for domains in Oracle BI Applications Configuration Manager.

Save and close the CSV file.

This section explains how to configure General Ledger Account and General Ledger Segments for Oracle E-Business Suite, and contains the following topics:

Section B.2.20.2, "Example of Data Configuration for a Chart of Accounts"

Section B.2.20.3, "How to Set Up the GL Segment Configuration File"

Section B.2.20.4, "How to Configure GL Segments and Hierarchies Using Value Set Definitions"

If you are deploying Oracle Financial Analytics, Oracle Procurement and Spend Analytics, or Oracle Supply Chain and Order Management Analytics, then you must configure GL account hierarchies as described in this topic.

Thirty segments are supported in which you can store accounting flexfields. Flexfields are flexible enough to support complex data configurations. For example:

You can store data in any segment.

You can use more or fewer segments per chart of accounts, as required.

You can specify multiple segments for the same chart of accounts.

A single company might have a US chart of accounts and an APAC chart of accounts, with the following data configuration:

Table B-7 Example Chart of Accounts

| Segment Type | US Chart of Account (4256) value | APAC Chart of Account (4257) value |
|---|---|---|
| Company | Stores in segment 3 | Stores in segment 1 |
| Natural Account | Stores in segment 4 | Stores in segment 3 |
| Cost Center | Stores in segment 5 | Stores in segment 2 |
| Geography | Stores in segment 2 | Stores in segment 5 |
| Line of Business (LOB) | Stores in segment 1 | Stores in segment 4 |

This example shows that in the US Chart of Account, 'Company' is stored in the segment 3 column in the Oracle E-Business Suite table GL_CODE_COMBINATIONS. In the APAC Chart of Account, 'Company' is stored in the segment 1 column in the GL_CODE_COMBINATIONS table. The objective of this configuration file is to ensure that when segment information is extracted into the Oracle Business Analytics Warehouse table W_GL_ACCOUNT_D, segments with the same nature from different charts of accounts are stored in the same column in W_GL_ACCOUNT_D.

For example, we can store 'Company' segments from US COA and APAC COA in the segment 1 column in W_GL_ACCOUNT_D; and Cost Center segments from US COA and APAC COA in the segment 2 column in W_GL_ACCOUNT_D, and so on.

Before you run the ETL process for GL accounts, you must specify the segments that you want to analyze. To specify the segments, you use the ETL configuration file named file_glacct_segment_config_<source_system>.csv.

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

In file_glacct_segment_config_ora.csv, you must specify the segments of the same type in the same column. For example, you might store all Cost Center segments from all charts of accounts in one column, and all Company segments from all charts of accounts in a separate column.

The file file_glacct_segment_config_ora.csv contains a pair of columns for each accounting segment to be configured in the warehouse. In the first column, give the actual segment column name in Oracle E-Business Suite where this particular entity is stored. This column takes values such as SEGMENT1, SEGMENT2, and so on up to SEGMENT30 (the values are case sensitive). In the second column, give the corresponding VALUESETID used for this COA and segment in Oracle E-Business Suite.

For example, you might want to do the following:

Analyze GL account hierarchies using only Company, Cost Center, Natural Account, and LOB.

You are not interested in using Geography for hierarchy analysis.

Store all Company segments from all COAs in ACCOUNT_SEG1_CODE column in W_GL_ACCOUNT_D.

Store all Cost Center segments from all COAs in ACCOUNT_SEG2_CODE column in W_GL_ACCOUNT_D.

Store all Natural Account segments from all COAs in ACCOUNT_SEG3_CODE column in W_GL_ACCOUNT_D.

Store all LOB segments from all COAs in ACCOUNT_SEG4_CODE column in W_GL_ACCOUNT_D.

Note: Although the examples above are mapping Natural Account, Balancing Segment and Cost Center segments to one of the segment columns in the file, it is not required that you map these three segments in the file. This is because we have dedicated dimensions to populate these three segments and they will be populated automatically by default whether or not you map these three segments in this file. It is preferred that these three segments are not mapped in this file so as to avoid redundant segment dimensions giving the same information.
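The effect of this configuration can be illustrated in Python. The sketch below is not the ETL code and does not reproduce the CSV layout. It only shows how segments of the same type from the two charts of accounts in Table B-7 end up in the same W_GL_ACCOUNT_D column. The function name `normalize` and the sample segment values are hypothetical.

```python
# Source segment columns per chart of accounts ID, from Table B-7.
SOURCE_SEGMENT = {
    "4256": {  # US chart of accounts
        "Company": "SEGMENT3",
        "Natural Account": "SEGMENT4",
        "Cost Center": "SEGMENT5",
        "LOB": "SEGMENT1",
    },
    "4257": {  # APAC chart of accounts
        "Company": "SEGMENT1",
        "Natural Account": "SEGMENT3",
        "Cost Center": "SEGMENT2",
        "LOB": "SEGMENT4",
    },
}

# Target columns in W_GL_ACCOUNT_D, as listed in the example above.
# Geography is left out because it is not used for hierarchy analysis.
TARGET_COLUMN = {
    "Company": "ACCOUNT_SEG1_CODE",
    "Cost Center": "ACCOUNT_SEG2_CODE",
    "Natural Account": "ACCOUNT_SEG3_CODE",
    "LOB": "ACCOUNT_SEG4_CODE",
}

def normalize(coa_id, source_row):
    """Map one GL_CODE_COMBINATIONS-style row to warehouse segment columns."""
    mapping = SOURCE_SEGMENT[coa_id]
    return {
        TARGET_COLUMN[segment_type]: source_row[mapping[segment_type]]
        for segment_type in TARGET_COLUMN
    }


# Invented sample rows, one per chart of accounts.
us_row = {"SEGMENT1": "RETAIL", "SEGMENT2": "WEST", "SEGMENT3": "01",
          "SEGMENT4": "5100", "SEGMENT5": "CC10"}
apac_row = {"SEGMENT1": "02", "SEGMENT2": "CC20", "SEGMENT3": "5100",
            "SEGMENT4": "RETAIL", "SEGMENT5": "JAPAN"}

us_result = normalize("4256", us_row)
apac_result = normalize("4257", apac_row)
```

Although 'Company' sits in different source segments, both results carry it in ACCOUNT_SEG1_CODE, which is what allows a single hierarchy to span both charts of accounts.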

GL Segment Configuration for Budgetary Control

For Budgetary Control, the first two segments are reserved for Project and Program segments respectively. Therefore, to use one or both of these, configure file_glacct_segment_config_ora.csv in this particular order:

1. Put your Project segment column name in the 'SEG_PROJECT' column in the CSV file.

2. Put your Program segment column name in the 'SEG_PROGRAM' column in the CSV file.

If you do not have any one of these reserved segments in your source system, leave that particular segment empty in the CSV file.


Configure file_glacct_segment_config_ora.csv, as follows:

Edit the file file_glacct_segment_config_ora.csv.

For example, you might edit the file located in \src_files\EBS11510.

Follow the steps in Section B.2.20.3, "How to Set Up the GL Segment Configuration File" to configure the file.

Edit the BI metadata repository (that is, the RPD file) for GL Segments and Hierarchies Using Value Set Definitions.

The metadata contains multiple logical tables that represent each GL Segment, such as Dim_W_GL_SEGMENT_D_ProgramSegment, Dim_W_GL_SEGMENT_D_ProjectSegment, Dim_W_GL_SEGMENT_D_Segment1 and so on. Because all these logical tables are mapped to the same physical table, W_GL_SEGMENT_D, a filter should be specified in the logical table source of these logical tables in order to restrain the output of the logical table to get values pertaining to that particular segment. You must set the filter on the physical column SEGMENT_LOV_ID to the Value Set IDs that are applicable for that particular segment. The list of the Value Set IDs would be the same as the Value Set IDs you configured in the CSV file mentioned above.

Specify a filter in the Business Model and Mapping layer of the Oracle BI Repository, as follows.

In Oracle BI EE Administration Tool, edit the BI metadata repository (for example, OracleBIAnalyticsApps.rpd).

The OracleBIAnalyticsApps.rpd file is located in ORACLE_INSTANCE\bifoundation\OracleBIServerComponent\coreapplication_obis<n>\repository.

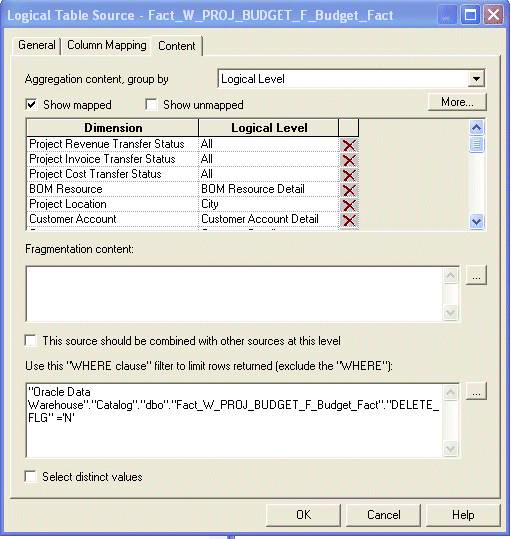

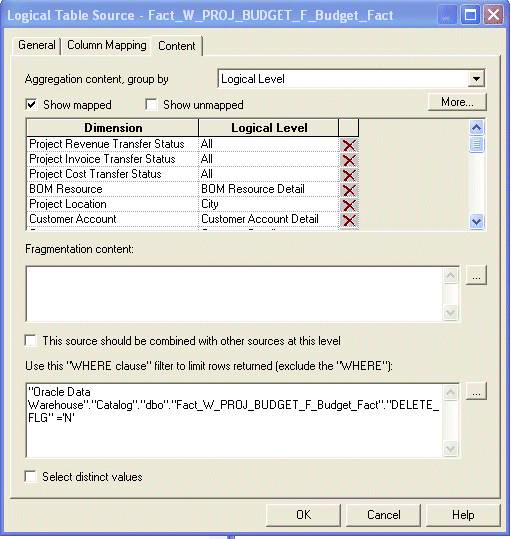

Expand each logical table, for example, Dim - GL Segment1, and open the logical table source under it. Display the Content tab. In the 'Use this WHERE clause…' box, apply a filter on the corresponding physical table alias of W_GL_SEGMENT_D.

For example: "Oracle Data Warehouse"."Catalog"."dbo"."Dim_W_GL_SEGMENT_D_Segment1"."SEGMENT_LOV_ID" IN (comma-separated Value Set IDs).

Enter all Value Set IDs that correspond to this segment, separated by commas.
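The filter expression described above can be assembled mechanically from a list of Value Set IDs. The following is a minimal sketch, not part of the product: the function name `build_segment_filter` and the sample IDs are hypothetical, and it assumes the IDs are numeric. If SEGMENT_LOV_ID is a character column in your repository, the values would need to be quoted.

```python
from typing import Sequence


def build_segment_filter(alias: str, value_set_ids: Sequence[int]) -> str:
    """Build the content filter text for one GL Segment logical table source.

    alias is the physical alias of W_GL_SEGMENT_D, for example
    Dim_W_GL_SEGMENT_D_Segment1. value_set_ids are assumed to be numeric.
    """
    if not value_set_ids:
        raise ValueError("at least one Value Set ID is required")
    # Fully qualified column, following the pattern of the documented example.
    column = f'"Oracle Data Warehouse"."Catalog"."dbo"."{alias}"."SEGMENT_LOV_ID"'
    id_list = ", ".join(str(value_set_id) for value_set_id in value_set_ids)
    return f"{column} IN ({id_list})"


# Hypothetical Value Set IDs, for illustration only.
print(build_segment_filter("Dim_W_GL_SEGMENT_D_Segment1", [101, 102]))
```

The resulting text is what you would paste into the 'Use this WHERE clause…' box for that logical table source.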

Oracle Financial Analytics supports up to 30 segments in the GL Account dimension, and by default delivers ten GL Segment dimensions in the RPD. If you need more than ten GL Segments, perform the following steps to add new segments:

In the Physical Layer:

Create two new physical aliases of W_GL_SEGMENT_D named "Dim_W_GL_SEGMENT_D_SegmentXX" and "Dim_W_GL_SEGMENT_D_SegmentXX_GLAccount".

To do this, right-click the physical table W_GL_SEGMENT_D and select New Object and then Alias. Name the new aliases "Dim_W_GL_SEGMENT_D_SegmentXX" and "Dim_W_GL_SEGMENT_D_SegmentXX_GLAccount".

Create four new aliases of W_GL_SEGMENT_DH:

- "Dim_W_GL_SEGMENT_DH_SegmentXX"

- "Dim_W_GL_SEGMENT_DH_Security_SegmentXX"

- "Dim_W_GL_SEGMENT_DH_SegmentXX_GLAccount"

- "Dim_W_GL_SEGMENT_DH_Security_SegmentXX_GLAccount"

Create a Foreign Key from "Dim_W_GL_SEGMENT_D_SegmentXX" to "Dim_W_GL_SEGMENT_DH_SegmentXX" and "Dim_W_GL_SEGMENT_DH_Security_SegmentXX".

The foreign key is similar to the one from "Dim_W_GL_SEGMENT_D_Segment1" to "Dim_W_GL_SEGMENT_DH_Segment1" and "Dim_W_GL_SEGMENT_DH_Security_Segment1".

The direction of the foreign key should be from W_GL_SEGMENT_DH to W_GL_SEGMENT_D; for example, on a '0/1': N cardinality join, W_GL_SEGMENT_DH will be on the '0/1' side and W_GL_SEGMENT_D will be on the 'N' side. See Oracle Fusion Middleware Metadata Repository Builder's Guide for Oracle Business Intelligence Enterprise Edition for more information about how to create physical foreign key joins.

Create a similar physical foreign key from "Dim_W_GL_SEGMENT_D_SegmentXX_GLAccount" to "Dim_W_GL_SEGMENT_DH_SegmentXX_GLAccount" and "Dim_W_GL_SEGMENT_DH_Security_SegmentXX_GLAccount".

Similarly, create a physical foreign key join between Dim_W_GL_SEGMENT_D_SegmentXX and Dim_W_GL_ACCOUNT_D, with W_GL_SEGMENT_D on the '1' side and W_GL_ACCOUNT_D on the 'N' side.

Save your changes.
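As a checklist for the Physical Layer steps above, the alias names and joins for a new segment can be listed programmatically. This is an illustrative sketch only: the function `physical_objects_for_segment` is hypothetical, it assumes XX is replaced by the unpadded segment number (as in Segment1), and it does not create anything in the repository. Each join is written as a (one side, many side) pair.

```python
def physical_objects_for_segment(n: int) -> dict:
    """List the physical aliases and joins described for segment number n."""
    if not 1 <= n <= 30:
        raise ValueError("segment number must be between 1 and 30")
    seg = f"Segment{n}"
    # Two aliases of W_GL_SEGMENT_D.
    d = f"Dim_W_GL_SEGMENT_D_{seg}"
    d_acct = f"Dim_W_GL_SEGMENT_D_{seg}_GLAccount"
    # Four aliases of W_GL_SEGMENT_DH.
    dh = f"Dim_W_GL_SEGMENT_DH_{seg}"
    dh_sec = f"Dim_W_GL_SEGMENT_DH_Security_{seg}"
    dh_acct = f"Dim_W_GL_SEGMENT_DH_{seg}_GLAccount"
    dh_sec_acct = f"Dim_W_GL_SEGMENT_DH_Security_{seg}_GLAccount"
    return {
        "segment_d_aliases": [d, d_acct],
        "segment_dh_aliases": [dh, dh_sec, dh_acct, dh_sec_acct],
        # (one side, many side): W_GL_SEGMENT_DH is on the '0/1' side of each
        # hierarchy join, and W_GL_SEGMENT_D is on the '1' side of the join
        # to the GL Account dimension.
        "joins": [
            (dh, d),
            (dh_sec, d),
            (dh_acct, d_acct),
            (dh_sec_acct, d_acct),
            (d, "Dim_W_GL_ACCOUNT_D"),
        ],
    }


for one_side, many_side in physical_objects_for_segment(11)["joins"]:
    print(f"{one_side} -> {many_side}")
```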

In the Business Model and Mapping Layer, do the following:

Create a new logical table "Dim - GL SegmentXX" similar to "Dim – GL Segment1".

This logical table should have a logical table source that is mapped to the physical tables created above (for example, it will have both Dim_W_GL_SEGMENT_DH_SegmentXX and Dim_W_GL_SEGMENT_DH_SegmentXX_GLAccount).

This logical table should also have all attributes similar to "Dim – GL Segment1" properly mapped to the respective physical tables, Dim_W_GL_SEGMENT_DH_SegmentXX and Dim_W_GL_SEGMENT_DH_SegmentXX_GLAccount.

In the Business Model Diagram, create a logical join from "Dim – GL SegmentXX" to all the relevant logical fact tables similar to "Dim – GL Segment1", with the GL Segment Dimension Logical table on the '0/1' side and the logical fact table on the 'N' side.

To see all the relevant logical fact tables, first include Dim – GL Segment1 on the Business Model Diagram, and then right-click that table and select Add Direct Joins.

Add the content filter in the logical table source of "Dim – GL SegmentXX" as described in the previous step.

Create a dimension by right-clicking "Dim – GL SegmentXX" and selecting Create Dimension. Rename the dimension to "GL SegmentXX". Make sure the drill-down structure is similar to "GL Segment1".

If you are not sure how to do this, follow these steps: By default, the dimension will have two levels: the Grand Total Level and the Detail Level. Rename these levels to "All" and "Detail – GL Segment" respectively.

Right-click the "All" level and select "New Object" and then "Child Level". Name this level "Tree Code And Version". Create a child level under "Tree Code And Version" and name it "Level31". Similarly, create a child level under "Level31" named "Level30". Repeat this process until you have "Level1" under "Level2".

Drag the "Detail – GL Segment" level under "Level1" so that it is the penultimate level of the hierarchy. Create another child level under "Detail – GL Segment" and name it as "Detail – GL Account".
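The resulting level order, from top to bottom, can be written out as a list to check against the dimension you build. This is a small sketch using a hypothetical helper, `hierarchy_levels`; the level names are taken from the steps above.

```python
def hierarchy_levels(top_level: int = 31) -> list:
    """Return the hierarchy level names from the top level down to the detail levels."""
    levels = ["All", "Tree Code And Version"]
    # Level31 sits directly under Tree Code And Version; Level1 is the lowest.
    levels += [f"Level{i}" for i in range(top_level, 0, -1)]
    # Detail – GL Segment is the penultimate level; Detail – GL Account is last.
    levels += ["Detail – GL Segment", "Detail – GL Account"]
    return levels


for depth, name in enumerate(hierarchy_levels()):
    print("  " * depth + name)
```

With the default of 31 numbered levels, the list contains 35 entries in total.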

From the new logical table Dim - GL SegmentXX, drag the Segment Code, Segment Name, Segment Description, Segment Code Id and Segment Value Set Code attributes to the "Detail – GL Segment" level of the hierarchy. Similarly pull in the columns mentioned below for the remaining levels.

Detail – GL Account: Segment Code – GL Account

Levelxx: Levelxx Code, Levelxx Name, Levelxx Description and Levelxx Code Id

Tree Code And Version: Tree Filter, Tree Version ID, Tree Version Name and Tree Code

Navigate to the properties of each level and, from the Keys tab, create the appropriate keys for each level as listed below. Select the primary key and the "Use for Display" option for each level as shown in the matrix below.

Table B-8 Configuration values for GL Segments and Hierarchies Using Value Set Definitions

| Level | Key Name | Columns | Primary Key of that Level | Use for Display? |
|---|---|---|---|---|
| Tree Code And Version | Tree Filter | Tree Filter | Y | Y |
| Levelxx | Levelxx Code | Levelxx Code | Y | Y |
| Levelxx | Levelxx ID | Levelxx Code Id | (empty) | (empty) |
| Detail - GL Segment | Segment ID | Segment Code Id | Y | (empty) |
| Detail - GL Segment | Segment Code | Segment Value Set Code and Segment Code | (empty) | Y |
| Detail - GL Account | Segment Code - GL Account | Segment Code - GL Account | Y | Y |
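The key matrix above can also be captured as data, which makes it easy to confirm that every level ends up with exactly one primary key and exactly one key marked for display. That rule is an observation drawn from the table, not a documented product constraint, and the names `KEYS` and `check_key_matrix` are hypothetical.

```python
from collections import Counter

# (level, key name, columns, primary key of that level?, use for display?)
KEYS = [
    ("Tree Code And Version", "Tree Filter", ["Tree Filter"], True, True),
    ("Levelxx", "Levelxx Code", ["Levelxx Code"], True, True),
    ("Levelxx", "Levelxx ID", ["Levelxx Code Id"], False, False),
    ("Detail - GL Segment", "Segment ID", ["Segment Code Id"], True, False),
    ("Detail - GL Segment", "Segment Code",
     ["Segment Value Set Code", "Segment Code"], False, True),
    ("Detail - GL Account", "Segment Code - GL Account",
     ["Segment Code - GL Account"], True, True),
]


def check_key_matrix(keys) -> list:
    """Return the levels that lack exactly one primary key or one display key."""
    primary = Counter(level for level, _, _, is_pk, _ in keys if is_pk)
    display = Counter(level for level, _, _, _, is_disp in keys if is_disp)
    levels = sorted({row[0] for row in keys})
    return [lvl for lvl in levels if primary[lvl] != 1 or display[lvl] != 1]


print(check_key_matrix(KEYS))
```

An empty list means every level in the matrix has one primary key and one display key.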

Once you have created these new levels, you must set the aggregation content for all the Logical Table Sources of the newly created logical table Dim - GL SegmentXX. Set the Aggregation Content in the Content tab for each LTS as follows:

Dim_W_GL_SEGMENT_DH_SegmentXX – Set the content level to "Detail – GL Segment".

Dim_W_GL_SEGMENT_DH_SegmentXX_GLAccount – Set the content level to "Detail – GL Account".

Set the aggregation content for all relevant fact logical table sources. Open the Logical Table Sources of each logical fact table that is relevant to the new logical table, one at a time, and display the Content tab. If the LTS is applicable to the newly created segment, set the aggregation content to "Detail – GL Account". If not, skip that logical table source and go to the next one.

Drag your new "Dim - GL SegmentXX" dimensions into the appropriate subject areas in the Presentation layer. Typically, you can expose these GL Segment dimensions in all subject areas where the GL Account dimension is exposed. You can also find all appropriate subject areas by right-clicking Dim – GL Segment1, selecting Query Related Objects, then selecting Presentation, and then selecting Subject Area.

Save your changes and check global consistency.

Each GL Segment represents one or more Value Sets that have a specific meaning in your OLTP system. To clearly identify each segment in reports, you can rename the presentation table "GL SegmentX", the logical dimension "GL SegmentX", and the logical table "Dim - GL SegmentX" to reflect that meaning.

For example, if you populate the Product segment into Segment1, you can rename the logical table "Dim - GL Segment1" to "Dim – GL Segment Product" or any other appropriate name, and then rename the tables in the Presentation layer accordingly.

The GL Account dimension in the Oracle Business Analytics Warehouse is at a granularity of a combination of chartfields. PeopleSoft Financials provides several chartfields for GL accounts, such as account, alternate account, operating unit, department, and so on. The ETL program extracts all possible combinations of these chartfields that you have used and stores each of these chartfields individually in the GL Account dimension. It extracts the combinations of chartfields used from the following PeopleSoft account entry tables:

PS_VCHR_ACCTG_LINES (Accounts Payable)

PS_ITEM_DST (Accounts Receivable)

PS_BI_ACCT_ENTRY (Billings)

PS_CM_ACCTG_LINE (Costing)

PS_JRNL_LN (General Ledger)

The GL Account dimension (W_GL_ACCOUNT_D) in the Oracle Business Analytics Warehouse provides a flexible and generic data model to accommodate up to 30 chartfields. These are stored in the generic columns named ACCOUNT_SEG1_CODE, ACCOUNT_SEG2_CODE and so on up to ACCOUNT_SEG30_CODE, henceforth referred to as segments. These columns store the actual chartfield value that is used in your PeopleSoft application.

Mapping PeopleSoft Chartfields

A CSV file named file_glacct_segment_config_psft.csv is provided to map the PeopleSoft chartfields to the generic segments.

Note:

The configuration file or files for this task are provided on installation of Oracle BI Applications at one of the following locations:

Source-independent files: <Oracle Home for BI>\biapps\etl\data_files\src_files\.

Source-specific files: <Oracle Home for BI>\biapps\etl\data_files\src_files\<source adaptor>.

Your system administrator will have copied these files to another location and configured ODI connections to read from this location. Work with your system administrator to obtain the files. When configuration is complete, your system administrator will need to copy the configured files to the location from which ODI reads these files.

The first row in the file is a header row; do not modify this line. The second row in the file is where you specify how to do the mapping. The value for the column ROW_ID is hard coded to '1'; there is no need to change this.

Note that the file contains 30 columns – SEG1, SEG2, up to SEG30. You will have to specify which chartfield to populate in each of these columns by specifying one of the supported values for the chartfields. The following list shows the chartfields currently supported for the PeopleSoft application.

Note:

Values are case sensitive. You must specify the values exactly as shown in the following list.

Activity ID

Affiliate

Alternate Account

Analysis Type

Book Code

Budget Reference

Budget Scenario

Business Unit PC

ChartField 1

ChartField 2

ChartField 3

Class Field

Fund Affiliate

GL Adjust Type

Operating Unit

Operating Unit Affiliate

Product

Program Code

Project

Resource Category

Resource Sub Category

Resource Type

Statistics Code

Note:

You only need to include the chartfields in the CSV file that you want to map.
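Because the chartfield values are case sensitive, it can help to validate a planned mapping before editing the file. The sketch below builds the values for the second row of the file and rejects any value that is not in the supported list above. It assumes, for illustration only, that the row consists of ROW_ID followed by SEG1 through SEG30 in that order; check the header row of your delivered file, which must not be modified. The names `SUPPORTED_CHARTFIELDS` and `build_mapping_row` are hypothetical.

```python
import csv
import io

# Supported values, copied exactly (including case) from the list above.
SUPPORTED_CHARTFIELDS = {
    "Activity ID", "Affiliate", "Alternate Account", "Analysis Type",
    "Book Code", "Budget Reference", "Budget Scenario", "Business Unit PC",
    "ChartField 1", "ChartField 2", "ChartField 3", "Class Field",
    "Fund Affiliate", "GL Adjust Type", "Operating Unit",
    "Operating Unit Affiliate", "Product", "Program Code", "Project",
    "Resource Category", "Resource Sub Category", "Resource Type",
    "Statistics Code",
}


def build_mapping_row(mapping: dict) -> list:
    """Return the row values: ROW_ID ('1') followed by SEG1..SEG30.

    mapping maps a segment number (1-30) to a supported chartfield name.
    Segments that are not mapped are left empty.
    """
    row = ["1"] + [""] * 30
    for seg, chartfield in mapping.items():
        if not 1 <= seg <= 30:
            raise ValueError(f"segment {seg} is outside SEG1..SEG30")
        if chartfield not in SUPPORTED_CHARTFIELDS:
            raise ValueError(f"unsupported chartfield value: {chartfield!r}")
        row[seg] = chartfield
    return row


# Example mapping (illustrative): SEG1 = Operating Unit, SEG2 = Product.
buffer = io.StringIO()
csv.writer(buffer).writerow(build_mapping_row({1: "Operating Unit", 2: "Product"}))
print(buffer.getvalue().strip())
```

A value such as "product" (lowercase) raises an error here, which mirrors the requirement to specify the values exactly as listed.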