Using Performance Analyzer to Examine the jlowfruit Data

This section shows how to explore the data in the experiment created from the jlowfruit sample code.

-

If the experiment you created in the previous section is not already open, you can start Performance Analyzer from the jlowfruit directory and load the experiment as follows:

% analyzer test.1.er

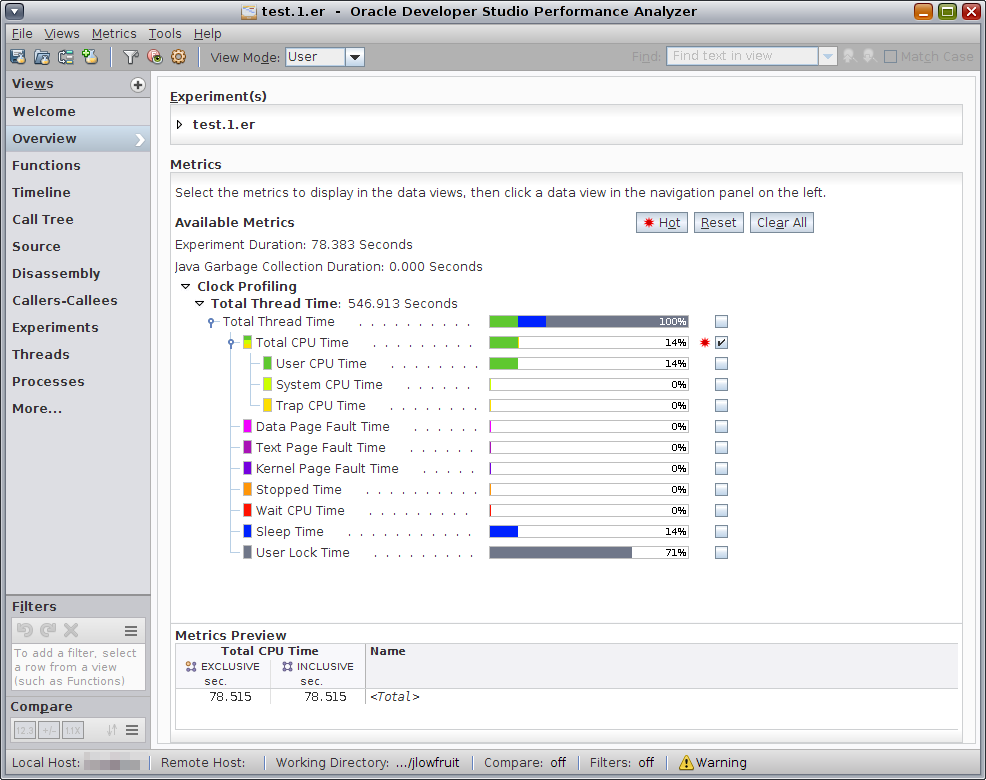

When the experiment opens, Performance Analyzer shows the Overview page.

-

Notice the Overview page shows a summary of the metric values and enables you to select metrics.

In this experiment the Overview shows about 14% Total CPU Time which was all User CPU Time, plus about 14% Sleep Time and 71% User Lock Time. The user Java code jlowfruit is single-threaded and that one thread is CPU-bound, but all Java programs use multiple threads including a number of system threads. The number of those threads depends on the choice of JVM options, including the Garbage Collector parameters and the size of the machine on which the program was run.

The experiment was recorded on an Oracle Solaris system, and the Overview shows twelve metrics recorded but only Total CPU Time is enabled by default.

The metrics with colored indicators are the times spent in the ten microstates defined by Oracle Solaris. These metrics include User CPU Time, System CPU Time, and Trap CPU Time which together are equal to Total CPU Time, as well as various wait times. Total Thread Time is the sum over all of the microstates.

On a Linux machine, only Total CPU Time is recorded because Linux does not support microstate accounting.

By default, both Inclusive and Exclusive Total CPU Time are previewed. Inclusive for any metric refers to the metric value in that function or method, including metrics accumulated in all the functions or methods that it calls. Exclusive refers only to the metric accumulated within that function or method.

-

Click the Hot button to select metrics with high values to show them in the data views.

The Metrics Preview panel at the bottom is updated to show you how the metrics will be displayed in the data views that present table-formatted data. You will next look to see which threads are responsible for which metrics.

-

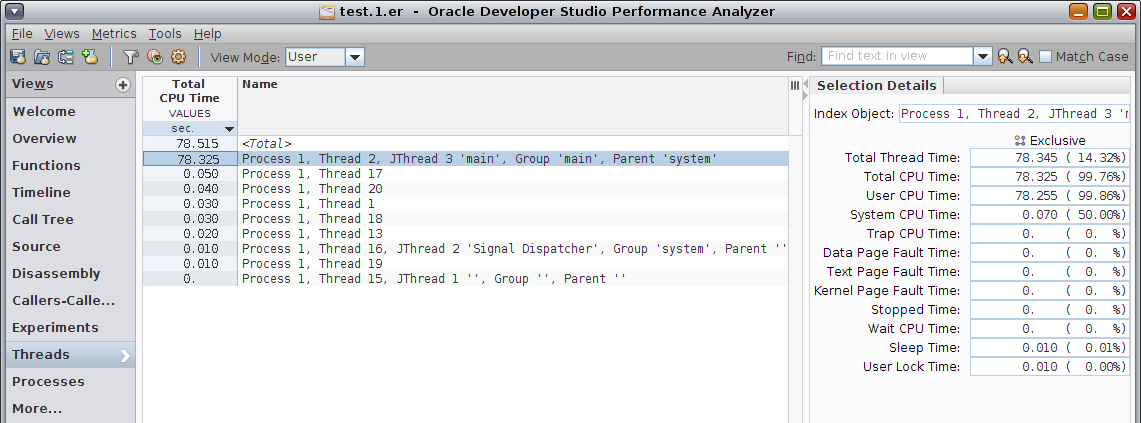

Now switch to the Threads view by clicking its name in the Views navigation panel or choosing Views → Threads from the menu bar.

The thread with almost all of the Total CPU Time is Thread 2, which is the only user Java thread in this simple application.

Thread 15 is most likely a user thread even though it is actually created internally by the JVM. It is only active during start-up and has very little time accumulated. In your experiment, a second thread similar to thread 15 might be created.

Thread 1 spends its entire time sleeping.

The remaining threads spend their time waiting for a lock, which is how the JVM synchronizes itself internally. Those threads include those used for HotSpot compilation and for Garbage Collection. This tutorial does not explore the behavior of the JVM system, but that is explored in another tutorial, Java and Mixed Java-C++ Profiling.

-

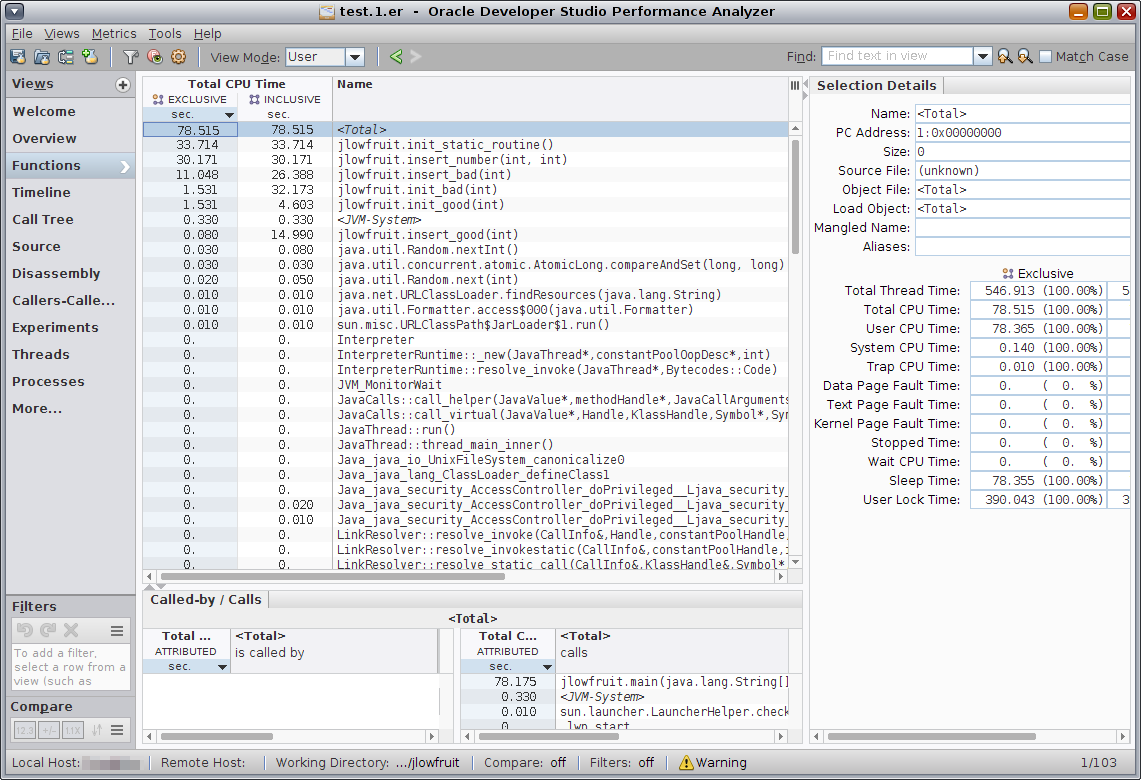

Click on the Functions view in the Views navigation panel, or choose Views → Functions from the menu bar.

The Functions view shows the list of functions in the application, with performance metrics for each function. The list is initially sorted by the Exclusive Total CPU Time spent in each function. There are also a number of functions from the JVM in the Functions view, but they have relatively low metrics. The list includes all functions from the target application and any shared objects the program uses. The top-most function, the most expensive one, is selected by default.

The Selection Details window on the right shows all the recorded metrics for the selected function.

The Called-by/Calls panel below the functions list provides more information about the selected function and is split into two lists. The Called-by list shows the callers of the selected function and the metric values show the attribution of the total metric for the function to its callers. The Calls list shows the callees of the selected function and shows how the Inclusive metric of its callees contributed to the total metric of the selected function. If you double-click a function in either list in the Called-by/Calls panel, the function becomes the selected function in the main Functions view.

-

Experiment with selecting the various functions to see how the Called-by / Calls panel and Selection Details window in the Functions view update with the changing selection.

The Selection Details window shows you that most of the functions come from the jlowfruit.class as indicated in the Load Object field.

You can also experiment with clicking on the column headers to change the sort from Exclusive Total CPU Time to Inclusive Total CPU Time, or by Name.

-

In the Functions view compare the two versions of the initialization task, jlowfruit.init_bad() and jlowfruit.init_good().

You can see that the two functions have roughly the same Exclusive Total CPU Time but very different Inclusive times. The jlowfruit.init_bad() function is slower due to time it spends in a callee. Both functions call the same callee, but they spend very different amounts of time in that routine. You can see why by examining the source of the two routines.

-

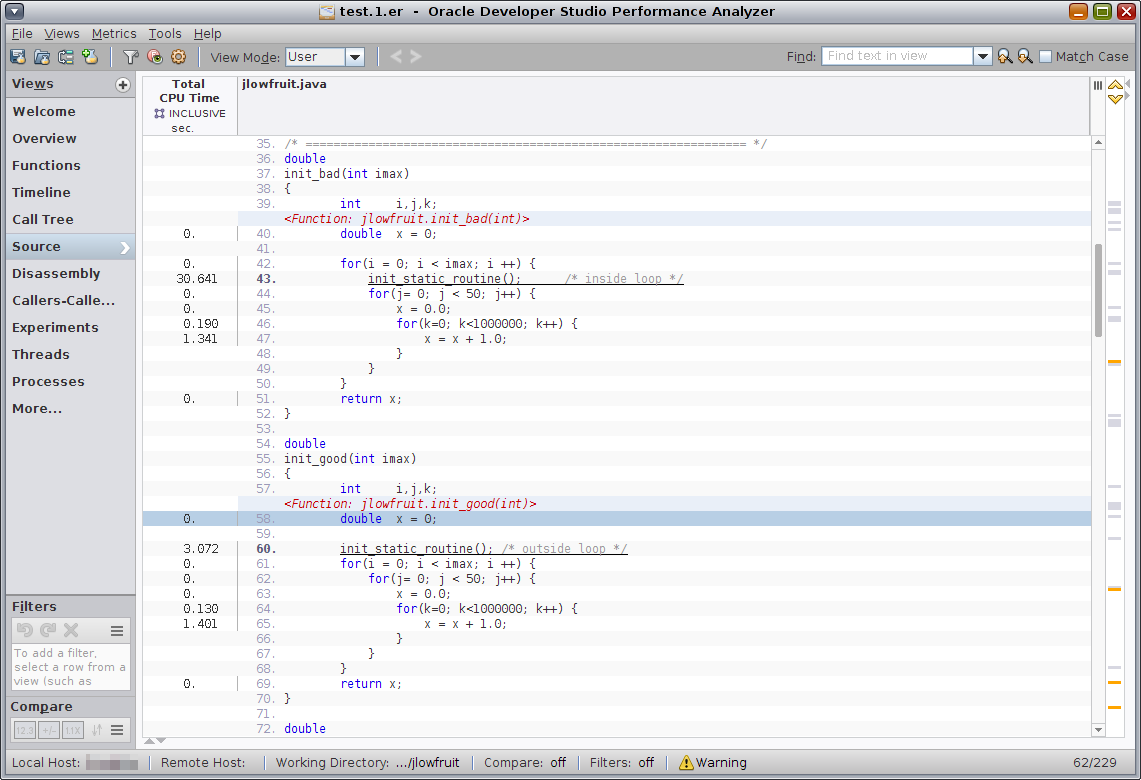

Select the function jlowfruit.init_good() and then click the Source view or choose Views → Source from the menu bar.

-

Adjust the window to allow more space for the code: Collapse the Called-by/Calls panel by clicking the down arrow in the upper margin, and collapse the Selection Details panel by clicking the right arrow in the side margin.

Note - You might have to re-expand and re-collapse these panels as needed for the rest of the tutorial.You should scroll up a little to see the source for both jlowfruit.init_bad() and jlowfruit.init_good(). The Source view should look similar to the following screen shot.

Notice that the call to jlowfruit.init_static_routine() is outside of the loop in jlowfruit.init_good(), while jlowfruit.init_bad() has the call to jlowfruit.init_static_routine() inside the loop. The bad version takes about ten times longer (corresponding to the loop count) than in the good version.

This example is not as silly as it might appear. It is based on a real code that produces a table with an icon for each table row. While it is easy to see that the initialization should not be inside the loop in this example, in the real code the initialization was embedded in a library routine and was not obvious.

The toolkit that was used to implement that code had two library calls (APIs) available. The first API added an icon to a table row, and second API added a vector of icons to the entire table. While it is easier to code using the first API, each time an icon was added, the toolkit recomputed the height of all rows in order to set the correct value for the whole table. When the code used the alternative API to add all icons at once, the recomputation of height was done only once.

-

Now go back to the Functions view and look at the two versions of the insert task, jlowfruit.insert_bad() and jlowfruit.insert_good().

Note that the Exclusive Total CPU time is significant for jlowfruit.insert_bad(), but negligible for jlowfruit.insert_good(). The difference between Inclusive and Exclusive time for each version, representing the time in the function jlowfruit.insert_number() called to insert each entry into the list, is the same. You can see why by examining the source.

-

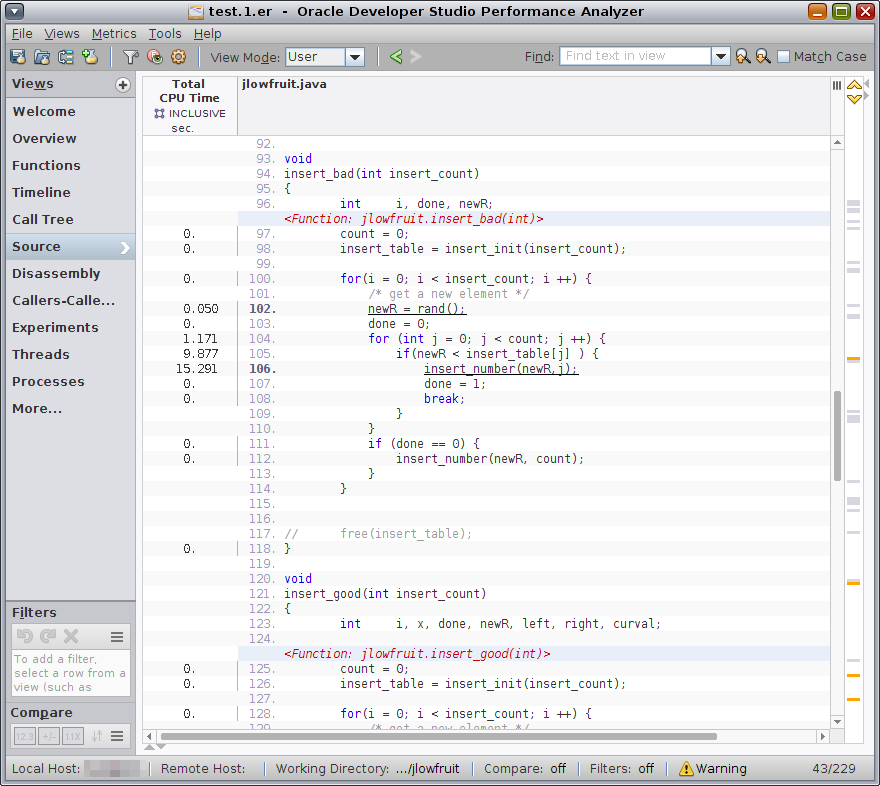

Select jlowfruit.insert_bad() and switch to the Source view:

Notice that the time, excluding the call to jlowfruit.insert_number(), is spent in a loop looking with a linear search for the right place to insert the new number.

-

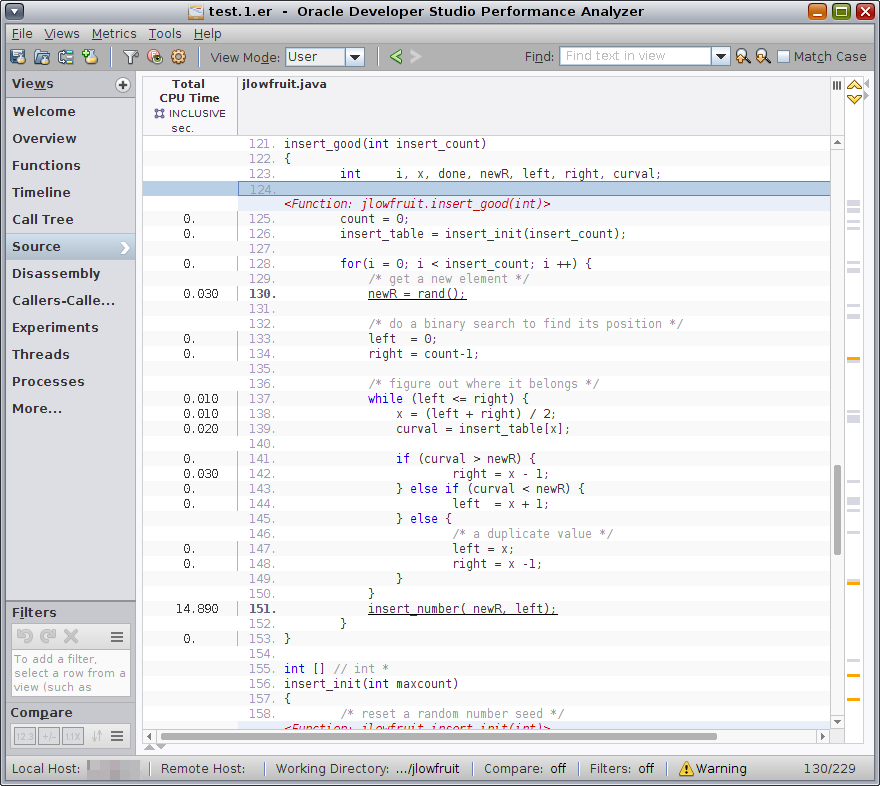

Now scroll down to look at jlowfruit.insert_good().

Note that the code is more complicated because it is doing a binary search to find the right place to insert, but the total time spent, excluding the call to jlowfruit.insert_number(), is much less than in jlowfruit.insert_bad(). This example illustrates that binary search can be more efficient than linear search.

You can also see the differences in the routines graphically in the Timeline view.

-

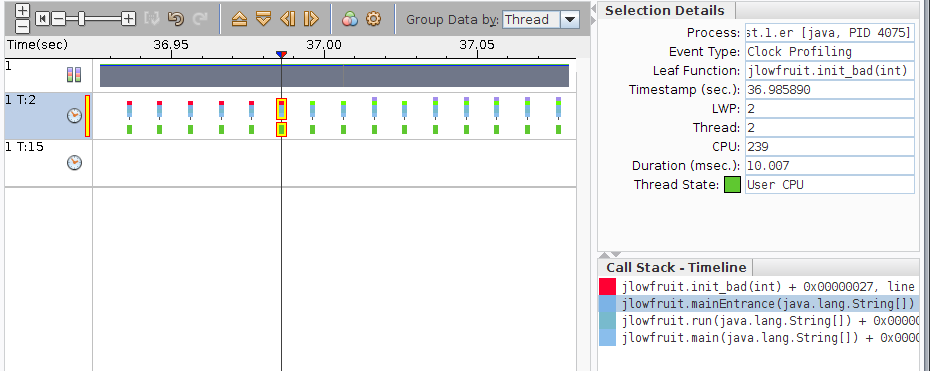

Click on the Timeline view or choose Views → Timeline from the menu bar.

The profiling data is recorded as a series of events, one for every tick of the profiling clock for every thread. The Timeline view shows each individual event with the call stack recorded in that event. The call stack is shown as a list of the frames in the callstack, with the leaf PC (the instruction next to execute at the instant of the event) at the top, and the call site calling it next, and so forth. For the main thread of the program, the top of the callstack is always main.

-

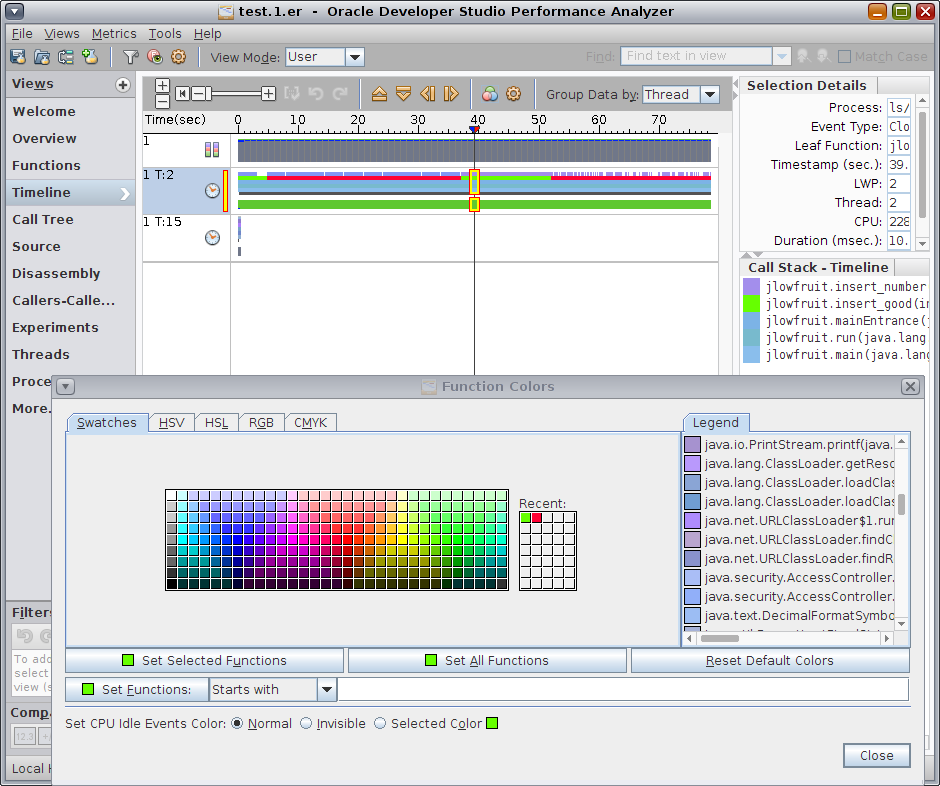

In the Timeline tool bar, click the Call Stack Function Colors icon

for coloring functions or choose Tools → Function Colors from the menu bar and see the dialog box as shown below.

for coloring functions or choose Tools → Function Colors from the menu bar and see the dialog box as shown below.

The function colors were changed to distinguish the good and bad versions of the functions more clearly for the screen shot. The jlowfruit.init_bad() and jlowfruit.insert_bad() functions are both now red and the jlowfruit.init_good() and jlowfruit.insert_good() are both bright green.

-

To make your Timeline view look similar, do the following in the Function Colors dialog box:

-

Scroll down the list of java methods in the Legend to find the jlowfruit.init_bad() method.

-

Select the jlowfruit.init_bad() method, click on a red color square in Swatches, and click Set Selected Functions button.

-

Select the jlowfruit.insert_bad() method, click on a red color square in Swatches, and click Set Selected Functions button.

-

Select the jlowfruit.init_good() method, click on a green color square in Swatches, and click Set Selected Functions button.

-

Select the jlowfruit.insert_good() method, click on a green color square in Swatches, and click Set Selected Functions button.

-

-

Look at the top bar of the Timeline.

The top bar of the Timeline is the CPU Utilization Samples bar as you can see in the tool tip if you move your mouse cursor over the first column. Each segment of the CPU Utilization Samples bar represents a one-second interval showing the resource usage of the target during that second of execution.

In this example, the segments are mostly gray with some green, reflecting the fact that only a small fraction of the Total Time was spent accumulating User CPU Time. The Selection Details window shows the mapping of colors to microstate although it is not visible in the screen shot.

-

Look at the second bar of the Timeline.

The second bar is the Clock Profiling Call Stacks bar, labeled "1 T:2" which means Process 1 and Thread 2, the main user thread in the example. The Clock Profiling Call Stacks bar shows two bars of data for events occurring during program execution. The upper bar shows color-coded representations of the callstack and the lower bar shows the state of the thread at each event. The state in this example was always User CPU Time so it appears to be a solid green line.

You should see one or two additional bars labeled with different thread numbers but they will only have a few events at the beginning of the run.

If you click anywhere within that Clock Profiling Call Stacks bar you select the nearest event and the details for that event are shown in the Selection Details window. From the pattern of the call stacks, you can see that the time in the jlowfruit.init_good() and jlowfruit.insert_good() routines shown in bright green in the screen shot is considerably shorter than the corresponding time in the jlowfruit.init_bad() and jlowfruit.insert_bad() routines shown in red.

-

Select events in the regions corresponding to the good and bad routines in the timeline and look at the call stacks in the Call Stack - Timeline window below the Selection Details window.

You can select any frame in the Call Stack window, and then select the Source view on the Views navigation bar, and go to the source for that source line. You can also double-click a frame in a call stack to go to the Source view or right-click the frame in the call stack and select from a pop-up menu.

-

Zoom in on the events by using the slider at the top of the Timeline, or using the + key, or by double-clicking with the mouse.

If you zoom in enough you can see that the data shown is not continuous but consists of discrete events, one for each profile tick, which is about 10 ms in this example.

Press the F1 key to see the Help for more information about the Timeline view.

-

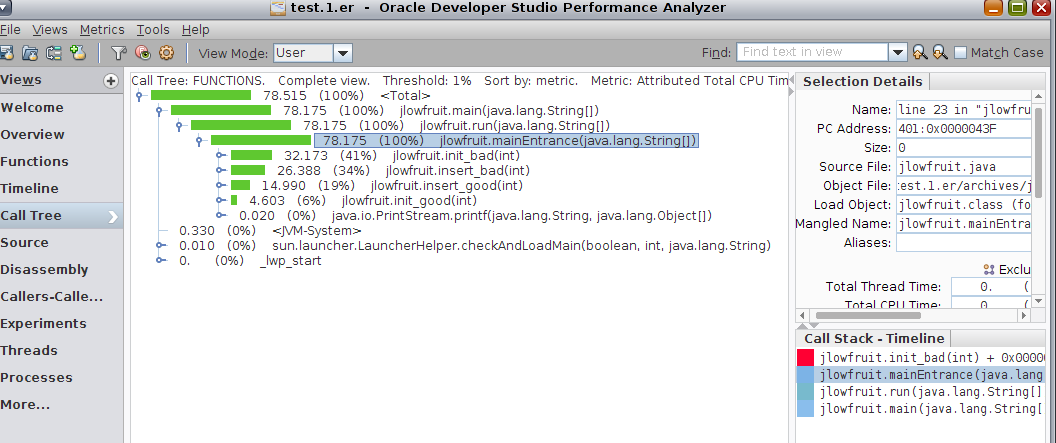

Click on the Call Tree view or choose Views → Call Tree to see the structure of your program.

The Call Tree view shows a dynamic call graph of the program, annotated with performance information.