Oracle® Retail Merchandising System

Operations Guide, Volume 3 - Back End Configuration and Operations

Release 15.0.1

E75566-01

June 2016


Copyright © 2016, Oracle. All rights reserved.

Primary Author: Maria Andrew


Oracle Retail Merchandising System Operations Guide, Volume 3 -

Back End Configuration and Operations, Release 15.0.1

Oracle welcomes customers' comments and suggestions on the

quality and usefulness of this document.

Your feedback is important, and helps us to best meet your needs

as a user of our products. For example:

§

Are the implementation steps correct and complete?

§

Did you understand the context of the procedures?

§

Did you find any errors in the information?

§

Does the structure of the information help you with your tasks?

§

Do you need different information or graphics? If so, where, and

in what format?

§

Are the examples correct? Do you need more examples?

If you find any errors or have any other suggestions for

improvement, then please tell us your name, the name of the company who has

licensed our products, the title and part number of the documentation and the

chapter, section, and page number (if available).

Note:

Before sending us your comments, you might like to check that you have the

latest version of the document and if any concerns are already addressed. To do

this, access the new Applications Release Online Documentation CD available on

My Oracle Support and www.oracle.com. It

contains the most current Documentation Library plus all documents revised or

released recently.

Send your comments to us using the electronic mail address: retail-doc_us@oracle.com

Please give your name, address, electronic mail address, and

telephone number (optional).

If you need assistance with Oracle software, then please contact

your support representative or Oracle Support Services.

If you require training or instruction in using Oracle software,

then please contact your Oracle local office and inquire about our Oracle University offerings. A list of Oracle offices is available on our Web site at www.oracle.com.

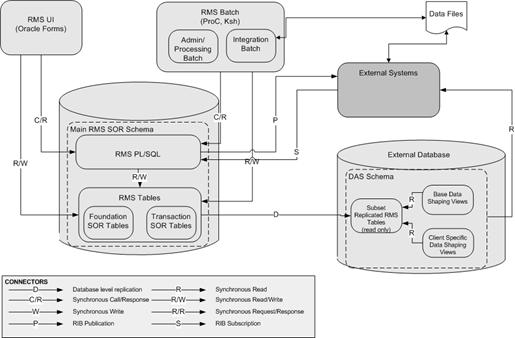

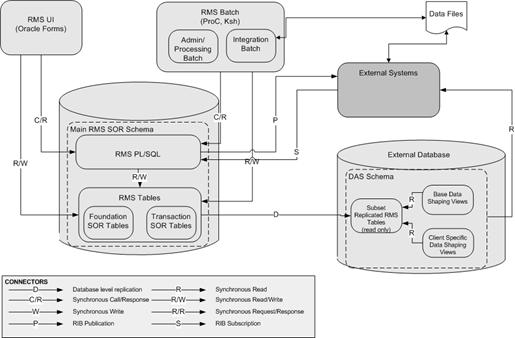

This Retail Merchandising System Operations Guide, Volume 3 - Back End Configuration and Operations provides critical information about the processing and operating details of Oracle Retail Merchandising System (RMS), including the following:
• System configuration settings
• Technical architecture
• Functional integration dataflow across the enterprise
• Batch processing

This guide is for:
• Systems administration and operations personnel
• Systems analysts
• Integrators and implementers
• Business analysts who need information about Merchandising System processes and interfaces

For information about Oracle's commitment to accessibility, visit the Oracle Accessibility Program website at http://www.oracle.com/pls/topic/lookup?ctx=acc&id=docacc.

Access to Oracle Support

Oracle customers that have purchased support have access to electronic support through My Oracle Support. For information, visit http://www.oracle.com/pls/topic/lookup?ctx=acc&id=info or visit http://www.oracle.com/pls/topic/lookup?ctx=acc&id=trs if you are hearing impaired.

For more information, see the following documents:
• Oracle Retail Merchandising System Installation Guide
• Oracle Retail Merchandising System Release Notes
• Oracle Retail Merchandising System Data Model
• Oracle Retail Merchandising System Data Access Schema Data Model
• Oracle Retail Merchandising Security Guide
• Oracle Retail Merchandising Implementation Guide
• Oracle Retail Merchandising Batch Schedule
• Oracle Retail Sales Audit documentation
• Oracle Retail Trade Management documentation
• Oracle Retail Fiscal Management documentation

To contact Oracle Customer Support, access My Oracle Support at the following URL:

https://support.oracle.com

When contacting Customer Support, please provide the following:
• Product version and program/module name
• Functional and technical description of the problem (include business impact)
• Detailed step-by-step instructions to re-create
• Exact error message received
• Screen shots of each step you take

When you install the application for the first time, you install either a base release (for example, 15.0) or a later patch release (for example, 15.0.1). If you are installing the base release or additional patch releases, read the documentation for all releases that have occurred since the base release before you begin installation. Documentation for patch releases can contain critical information related to the base release, as well as information about code changes since the base release.

To more quickly address critical corrections to Oracle Retail documentation content, Oracle Retail documentation may be republished whenever a critical correction is needed. For critical corrections, the republication of an Oracle Retail document may at times not be attached to a numbered software release; instead, the Oracle Retail document will simply be replaced on the Oracle Technology Network Web site, or, in the case of Data Models, to the applicable My Oracle Support Documentation container where they reside.

This process will prevent delays in making critical corrections available to customers. For the customer, it means that before you begin installation, you must verify that you have the most recent version of the Oracle Retail documentation set. Oracle Retail documentation is available on the Oracle Technology Network at the following URL:

http://www.oracle.com/technetwork/documentation/oracle-retail-100266.html

An updated version of the applicable Oracle Retail document is indicated by Oracle part number, as well as print date (month and year). An updated version uses the same part number, with a higher-numbered suffix. For example, part number E123456-02 is an updated version of a document with part number E123456-01.

If a more recent version of a document is available, that version supersedes all previous versions.

Oracle Retail product documentation is available on the following web site:

http://www.oracle.com/technetwork/documentation/oracle-retail-100266.html

(Data Model documents are not available through Oracle Technology Network. You can obtain them through My Oracle Support.)

Navigate: This is a navigate statement. It tells you how to get to the start of the procedure and ends with a screen shot of the starting point and the statement “the Window Name window opens”.

This is a code sample
It is used to display examples of code

2 Pro*C Restart and Recovery

RMS has implemented a restart/recovery process in most of its batch architecture. The general purpose of restart/recovery is to:
• Recover a halted process from the point of failure.
• Prevent system halts due to large numbers of transactions.
• Allow multiple instances of a given process to be active at the same time.

Further, RMS restart/recovery tracks batch execution statistics and does not require DBA authority to execute.

The restart capabilities revolve around a program’s logical unit of work (LUW). A batch program processes transactions, and commit points are enabled based on the LUW. An LUW consists of a relatively unique transaction key (such as sku/store) and a maximum commit counter. A commit event takes place after the number of processed transaction keys meets or exceeds the maximum commit counter. For example, a commit occurs after every 10,000 sku/store combinations. At the time of commit, the key data that is necessary for restart is stored in the restart tables. In the event of a handled or unhandled exception, transactions are rolled back to the last commit point, and upon restart the key information is retrieved from the tables so that processing can continue from the last commit point.
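The counter-driven commit described above can be sketched as follows. This is an illustrative model in Python, not the Pro*C implementation; the function name `process_with_commits` and the in-memory list of keys are assumptions made for the example.

```python
def process_with_commits(keys, commit_max_ctr):
    """Return the keys at which a commit event would occur.

    Hypothetical model: each key stands for one transaction key
    (for example, one sku/store combination).
    """
    commit_points = []
    counter = 0
    for key in keys:
        # ... process the transaction for this key ...
        counter += 1
        if counter >= commit_max_ctr:
            # At commit time the key is what would be saved for restart.
            commit_points.append(key)
            counter = 0
    return commit_points


# With a maximum commit counter of 3, ten keys commit after keys 3, 6 and 9.
print(process_with_commits(list(range(1, 11)), 3))
```

If the run fails while processing key 8, the work for keys 7 and 8 is rolled back and a restart would resume after key 6, the last saved key.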

The RMS restart/recovery process is driven by a set of four tables, which are shown in the RMS Restart Recovery Entity Relationships Diagram. For detailed table descriptions, see RESTART_CONTROL, RESTART_PROGRAM_STATUS, RESTART_BOOKMARK, and RESTART_PROGRAM_HISTORY.

RMS Restart Recovery Entity Relationships Diagram

RESTART_CONTROL

The restart_control table is the master table in the restart/recovery table set. One record exists on this table for each batch program that is run with restart/recovery logic in place. The restart/recovery process uses this table to determine:
• Whether the restart/recovery is table-based or file-based.
• The total number of threads used for each batch program.
• The maximum number of records processed before a commit event takes place.
• The driver for the threading (multi-processing) logic.
• The time to wait for a lock and the number of lock retries.

restart_control

| Column | Type | Length | Description |
|---|---|---|---|
| (PK) program_name | varchar2 | 25 | Batch program name. |
| program_desc | varchar2 | 120 | A brief description of the program function. |
| driver_name | varchar2 | 25 | Driver on query, for example, department (non-updatable). |
| num_threads | num | 10 | Number of threads used for current process. |
| update_allowed | varchar2 | 2 | Indicates whether the user can update thread numbers or whether this is done programmatically. |
| process_flag | varchar2 | 1 | Indicates whether the process is table-based (T) or file-based (F). |
| commit_max_ctr | num | 6 | Numeric maximum value for counter before commit occurs. |
| LOCK_WAIT_TIME | num | 6 | Contains the number of seconds between the last and the next lock retry. |
| RETRY_MAX_CTR | num | 10 | Contains the maximum number of lock retries. |

RESTART_PROGRAM_STATUS

The restart_program_status table holds record keeping information about current program processes. The number of rows for a program on the status table is equal to its num_threads value on the restart_control table. The status table is modified during restart/recovery initialization and close logic. For table-based processing, the restart/recovery initialization logic assigns the next available thread to a program based on the program status and restart flag. For file-based processing, the thread value is passed in from the input file name. When a thread is assigned, the program_status is updated to prevent the assignment of that thread to another process. Information is logged on the current status of a given thread, as well as record keeping information such as operator and process timing information.
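A minimal sketch of table-based thread assignment follows, written in Python for illustration only. The source states that assignment depends on the program status and restart flag; the exact rule used here (a thread is available if it is ‘ready for start’, or if it is aborted and its restart_flag is ‘Y’) is an assumption, as is the function name `assign_thread`.

```python
def assign_thread(status_rows):
    """Claim the first available thread and return its thread_val, or None.

    status_rows is a list of dicts standing in for the rows of
    restart_program_status for one program (hypothetical model).
    """
    for row in sorted(status_rows, key=lambda r: r["thread_val"]):
        fresh = row["program_status"] == "ready for start"
        restartable = (row["program_status"].startswith("aborted")
                       and row["restart_flag"] == "Y")
        if fresh or restartable:
            # Marking the row as started prevents a second process
            # from being assigned the same thread.
            row["program_status"] = "started"
            return row["thread_val"]
    return None


rows = [
    {"thread_val": 1, "program_status": "started", "restart_flag": "N"},
    {"thread_val": 2, "program_status": "aborted in process", "restart_flag": "N"},
    {"thread_val": 3, "program_status": "aborted in process", "restart_flag": "Y"},
    {"thread_val": 4, "program_status": "ready for start", "restart_flag": "N"},
]
first = assign_thread(rows)   # thread 3: aborted, but flagged for restart
second = assign_thread(rows)  # thread 4: ready for start
third = assign_thread(rows)   # no thread left to assign
```

In the real tables the status update would be made under a row lock so that two processes cannot claim the same thread; the sketch leaves locking out.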

restart_program_status

| Column | Type | Length | Description |
|---|---|---|---|
| (PK) restart_name | varchar2 | 50 | Program name. |
| (PK) thread_val | num | 10 | Thread counter. |
| start_time | date | | dd-mon-yy hh:mi:ss. |
| program_name | varchar2 | 25 | Program name. |
| program_status | varchar2 | 25 | Started, aborted, aborted in init, aborted in process, aborted in final, completed, ready for start. |
| restart_flag | varchar2 | 1 | Automatically set to ‘N’ after abnormal end, must be manually set to ‘Y’ for program to restart. |
| restart_time | date | | dd-mon-yy hh:mi:ss. |
| finish_time | date | | dd-mon-yy hh:mi:ss. |
| current_pid | num | 15 | Starting program ID. |
| current_operator_id | varchar2 | 20 | Operator that started the program. |
| err_message | varchar2 | 255 | Record that caused program abort and associated error message. |
| current_oracle_sid | num | 15 | Oracle SID for the session associated with the current process. |
| current_shadow_pid | num | 15 | O/S process ID for the shadow process associated with the current process. It is used to locate the session trace file when a process is not finished successfully. |

RESTART_PROGRAM_HISTORY

The restart_program_history table contains one record for every successfully completed program thread with restart/recovery logic. Upon the successful completion of a program thread, its record on the restart_program_status table is inserted into the history table. Purging of this table is at the user's discretion.

restart_program_history

| Column | Type | Length | Description |
|---|---|---|---|
| restart_name | varchar2 | 50 | Program name. |
| thread_val | num | 10 | Thread counter. |
| start_time | date | | dd-mon-yy hh:mi:ss. |
| program_name | varchar2 | 25 | Program name. |
| num_threads | num | 10 | Number of threads. |
| commit_max_ctr | num | 6 | Numeric maximum value for counter before commit occurs. |
| restart_time | date | | dd-mon-yy hh:mi:ss. |
| finish_time | date | | dd-mon-yy hh:mi:ss. |
| shadow_pid | num | 15 | O/S process ID for the shadow process associated with the process. It is used to locate the session trace file. |
| success_flag | varchar2 | 1 | Indicates whether the process finished successfully (reserved for future use). |
| non_fatal_err_flag | varchar2 | 1 | Indicates whether non-fatal errors have occurred for the process. |
| num_commits | num | 12 | Total number of commits for the process. The possible last commit when restart/recovery is closed is not counted. |
| avg_time_btwn_commits | num | 12 | Accumulated average time between commits for the process. The possible last commit when restart/recovery is closed is not counted. |
| LREAD | num | | Session logical reads. The sum of "db block gets" plus "consistent gets". This includes logical reads of database blocks from either the buffer cache or process private memory. |
| LWRITE | num | | Session logical writes. The sum of "db block changes" plus "consistent changes". |
| PREAD | num | | Physical reads. Total number of data blocks read from disk. |
| UGA_MAX | num | | Peak UGA (user global area) size for a session. |
| PGA_MAX | num | | Peak PGA (program global area) size for the session. |
| SQLNET_BYTES_FROM_CLIENT | num | | Total number of bytes received from the client over Oracle Net Services. |
| SQLNET_BYTES_TO_CLIENT | num | | Total number of bytes sent to the client from the foreground processes. |
| SQLNET_ROUNDTRIPS | num | | Total number of Oracle Net Services messages sent to and received from the client. |
| COMMITS | num | | Number of user commits. When a user commits a transaction, the redo generated that reflects the changes made to database blocks must be written to disk. Commits often represent the closest thing to a user transaction rate. |

RESTART_BOOKMARK

When a restart/recovery program thread is active, its state is started or aborted, and a record for it exists on the restart_bookmark table. Restart/recovery initialization logic inserts the record into the table for a program thread. The restart/recovery commit process updates the record with the following restart information:
• A concatenated string of key values for table processing
• A file pointer value for file processing
• Application context information such as counters and accumulators

The restart/recovery closing process deletes the program thread record if the program finishes successfully. In the event of a restart, the program thread information on this table will allow the process to begin from the last commit point.

restart_bookmark

| Column | Type | Length | Description |
|---|---|---|---|
| (PK) restart_name | varchar2 | 50 | Program name. |
| (PK) thread_val | num | 10 | Thread counter. |
| bookmark_string | varchar2 | 255 | Character string of key of last committed record. |
| application_image | varchar2 | 1000 | Application parameters from the last save point. |
| out_file_string | varchar2 | 255 | Concatenated file pointers (UNIX sometimes refers to these as stream positions) of all the output files from the last commit point of the current process. It is used to return to the right restart point for all the output files during the restart process. |
| non_fatal_err_flag | varchar2 | 1 | Indicates whether non-fatal errors have occurred for the current process. |
| num_commits | num | 12 | Number of commits for the current process. The possible last commit when restart/recovery is closed is not counted. |
| avg_time_btwn_commits | num | 12 | Average time between commits for the current process. The possible last commit when restart/recovery is closed is not counted. |

Restart views are used for query-based programs that require multi-threading. Separate views (for example, v_restart_dept and v_restart_store) are created for each threading driver, such as department or store. A join is made to a view based on the threading driver to force the separation of discrete data into particular threads. See the threading discussion for more details.

v_restart_<x>

| Column | Type | Description |
|---|---|---|
| driver_name | varchar2 | Example dept, store, region, and others. |
| num_threads | number | Total number of threads in set (defined on restart control). |
| driver_value | number | Numeric value of the driver_name. |
| thread_val | number | Thread value defined for driver_value and num_threads combination. |
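The shape of the rows that a restart view exposes can be sketched as follows, in Python for illustration. The source does not state how thread_val is derived from driver_value and num_threads; the modulus rule below is purely an assumption chosen to show one way of spreading driver values across threads, and `restart_view_rows` is a hypothetical name.

```python
def restart_view_rows(driver_name, driver_values, num_threads):
    """Build rows shaped like v_restart_<x> for the given driver values.

    Assumption: thread_val = (driver_value mod num_threads) + 1.
    The actual view definition may use a different rule.
    """
    return [
        {
            "driver_name": driver_name,
            "num_threads": num_threads,
            "driver_value": value,
            "thread_val": (value % num_threads) + 1,
        }
        for value in driver_values
    ]


rows = restart_view_rows("dept", [10, 11, 12, 13], 3)
for row in rows:
    print(row["driver_value"], "->", row["thread_val"])
```

A driving query joined to such a view and filtered on its own thread_val would then see only the departments assigned to that thread.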

The restart_program_status and restart_bookmark tables are kept separate. The initialization process needs to fetch all of the rows associated with a restart_name/schema, but only updates one row. The commit process continually locks a row with a specific restart_name and thread_val. The data involved in these two processes is separated into two tables to reduce the number of hangs that could occur due to locked rows. Even if ‘dirty reads’ are allowed on locked rows, a process still hangs if it attempts to update a locked row. The commit process is only interested in a unique row, so moving the commit process data to a separate table with row-level (not page-level) locking avoids contention during the commit. With the separate tables, the initialization process also sees fewer contention problems, because rows are locked only twice, at the beginning and end of the process.

The restart/recovery process needs to be as robust as possible in the event of database-related failure. The costs of placing the restart/recovery tables in a separate database outweigh the benefits. The tables should, however, be set up in a separate, mirrored tablespace with a separate rollback segment.

The restart/recovery process works by storing all the data necessary to resume processing from the last commit point. Therefore, the necessary information is updated on the restart_bookmark table before the processed data is committed. Query-based and file-based modules store different information on the restart tables, and therefore call different functions within the restart/recovery API to perform their tasks.

When a program’s process is query-based (that is, the module is driven by a driving query that processes the retrieved rows), the information stored on the restart_bookmark table relates to the data retrieved by the driving query. If the program fails while processing, the information stored on the restart tables can be used in the WHERE clause of the driving query to retrieve only the data that has not yet been processed since the last commit event.
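The use of a bookmark in the driving query can be sketched with Python's built-in sqlite3 module. This is an illustration only: the `item_loc` table, its columns, and the `fetch_unprocessed` helper are hypothetical, and a real Pro*C module would declare a cursor against the RMS schema instead.

```python
import sqlite3


def fetch_unprocessed(conn, bookmark):
    """Run the driving query, skipping keys at or before the bookmark.

    bookmark is None on an initial start, or a (sku, store) tuple holding
    the key of the last committed record on a restart.
    """
    sql = "SELECT sku, store FROM item_loc"
    params = ()
    if bookmark is not None:
        # Only keys that sort after the bookmark remain to be processed.
        sql += " WHERE (sku > ?) OR (sku = ? AND store > ?)"
        params = (bookmark[0], bookmark[0], bookmark[1])
    sql += " ORDER BY sku, store"
    return conn.execute(sql, params).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE item_loc (sku INTEGER, store INTEGER)")
conn.executemany("INSERT INTO item_loc VALUES (?, ?)",
                 [(100, 1), (100, 2), (200, 1), (200, 2)])

initial_run = fetch_unprocessed(conn, None)
restarted_run = fetch_unprocessed(conn, (100, 2))
```

The ORDER BY matters: the bookmark only identifies unprocessed rows if the driving query always returns keys in the same order.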

File-based processing needs to store the file location at the time of the last commit point. This byte location within the file is stored on the restart_bookmark table and is retrieved at the time of a restart. The location is then used to reopen the file at the point where the data was last committed.

Because different information is saved to and retrieved from the restart_bookmark table for each type of processing, different functions need to be called to perform the restart/recovery logic. Query-based processing calls the restart_init (or retek_init) and restart_commit (or retek_commit) functions, while file-based processing calls the restart_file_init and restart_file_commit functions.
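The file-based bookmark can be sketched as a byte offset that is saved at commit time and passed to a seek on restart. The Python below is an illustration under that assumption; `read_lines_from` and the sample file are hypothetical and do not reproduce the restart_file_init or restart_file_commit signatures.

```python
import os
import tempfile


def read_lines_from(path, byte_offset, max_lines):
    """Read up to max_lines starting at byte_offset.

    Returns the lines read and the byte offset just past them, which is
    the value a commit would store as the file bookmark.
    """
    lines = []
    with open(path, "rb") as f:
        f.seek(byte_offset)
        for _ in range(max_lines):
            line = f.readline()
            if not line:
                break
            lines.append(line.decode().rstrip("\n"))
        return lines, f.tell()


# Build a small input file for the demonstration.
with tempfile.NamedTemporaryFile("wb", delete=False) as tmp:
    tmp.write(b"rec1\nrec2\nrec3\nrec4\n")

first_batch, bookmark = read_lines_from(tmp.name, 0, 2)
# If the program failed here, a restart would resume from `bookmark`.
second_batch, end_offset = read_lines_from(tmp.name, bookmark, 2)
os.remove(tmp.name)
```

The file is opened in binary mode so that the stored offset is a true byte position rather than a text-mode cookie.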

In addition to the differences in API function calls, the batch processing flow of restart/recovery differs between the two types of processing. Table-based restart/recovery needs to use a priming fetch logical flow, while file-based processing usually reads lines in a batch. Table-based processing requires this structure to ensure that the LUW key has changed before a commit event is allowed to occur. File-based processing does not need to evaluate the LUW, which can typically be thought of as the type of transaction being processed by the input file.
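The priming fetch flow can be sketched as follows: the next record is fetched before the commit decision is made, so that a commit only fires once the LUW key has changed. This Python model is illustrative; `commit_points` is a hypothetical name and the list of keys stands in for the driving cursor.

```python
def commit_points(keys, commit_max_ctr):
    """Return the indexes of the records after which a commit fires.

    keys is the ordered sequence of LUW keys returned by the driving
    query; several consecutive rows may share the same key.
    """
    points = []
    counter = 0
    rows = iter(keys)
    current = next(rows, None)      # priming fetch
    index = 0
    while current is not None:
        # ... process the current record ...
        counter += 1
        upcoming = next(rows, None)  # fetch before deciding on a commit
        # Commit only when the counter is reached AND the key has changed,
        # so that one logical unit of work is never split across commits.
        if counter >= commit_max_ctr and upcoming != current:
            points.append(index)
            counter = 0
        current = upcoming
        index += 1
    return points


# Key "A" spans three rows, so the commit due after two rows is deferred
# until the key changes to "B".
print(commit_points(["A", "A", "A", "B", "B", "C"], 2))
```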

The following diagram depicts the table-based restart/recovery program flow:

Table-Based Restart/Recovery Program Flow

The following diagram depicts the file-based restart/recovery program flow:

File-Based Restart/Recovery Program Flow

Initialization logic:
• Variable declarations
• File initialization
• Call restart_init() or restart_file_init() function - determines start or restart logic
• First fetch on driving query

Start logic: initialize counters/accumulators to start values

Restart logic:
• Parse application_image field on bookmark table into counters/accumulators
• Initialize counters/accumulators to values of parsed fields

Process/commit loop:
• Process updates and manipulations
• Fetch new record
• Create varchar from counters/accumulators to pass into application_image field on restart_bookmark table
• Call restart_commit() or restart_file_commit()

Close logic:
• Reset pointers
• Close files/cursors
• Call restart_close()
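The handling of counters and accumulators in the start, restart, and commit steps above can be sketched as a round trip through a single string. This Python sketch is illustrative only: the semicolon delimiter, the integer-only counters, and the function names are assumptions, not the format RMS actually writes to application_image.

```python
def build_image(counters, delimiter=";"):
    """Create the string passed to application_image at commit time."""
    return delimiter.join(str(value) for value in counters)


def parse_image(image, delimiter=";"):
    """Parse an application_image string back into integer counters."""
    return [int(field) for field in image.split(delimiter)]


def init_counters(application_image, n_counters):
    """Start logic when no image exists, restart logic otherwise."""
    if not application_image:
        # Initial start: counters begin at their start values.
        return [0] * n_counters
    # Restart: counters resume from the values saved at the last commit.
    return parse_image(application_image)


saved = build_image([12, 3400])          # written during a commit
fresh = init_counters(None, 2)           # initial start
resumed = init_counters(saved, 2)        # restart after a failure
```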

The restart_init function is an initialization function for table-based batch processing.

The batch process gathers the following information from the restart control tables:
• Total number of threads for a program and thread value assigned to current process.
• Number of records to loop through in driving cursor before commit (LUW).
• Start string: bookmark of last commit to be used for restart, or a null string if the current process is an initial start.
• Lock wait time and maximum number of retries for locking.

The process initializes the restart record-keeping (restart_program_status):
• Program status is changed to ‘started’ for the first available thread.
• Operational information is updated: operator, process, start_time.

The process initializes the restart bookmarking (restart_bookmark) tables:
• On an initial start, a record is inserted.
• On restart, the start string and application context information from the last commit is retrieved.

The restart_file_init function is an initialization function for file-based batch processing. It is called from program modules.

The batch process gathers the following information from the restart control tables:
• Number of records to read from file for array processing and for commit cycle.
• File start point: bookmark of last commit to be used for restart, or 0 for an initial start.

The process initializes the restart record-keeping (restart_program_status):
• Program status is changed to ‘started’ for the current thread.
• Operational information is updated: operator, process, start_time.

The process initializes the restart bookmarking (restart_bookmark) tables:
• On an initial start, a record is inserted.
• On restart, the file starting point information and application context information from the last commit is retrieved.

The restart_commit function commits the processed transactions for a given number of driving query fetches. It is called from program modules.

The process updates the restart_bookmark start string and application image information when a commit event takes place. A commit event takes place when:
• The current number of driving query fetches is greater than or equal to the maximum set in the restart_control table (and fetched in the restart_init function).
• The bookmark string of the most recently fetched record differs from that of the last processed record, that is, the LUW key has changed.

As part of this processing, the function increments the counter and sets the current string to the most recently fetched key string.

The restart_file_commit function commits processed transactions after reading a number of lines from a flat file. It is called from program modules.

The process updates the restart_bookmark table:
• Start_string is set to the file pointer location in the current read of the flat file.
• Application image is updated with context information.

The restart_close function updates the restart tables after program completion.

The process determines whether the program was successful. If the program finished successfully:
• The restart_program_status table is updated with finish information and the status is reset.
• The corresponding record in the restart_bookmark table is deleted.
• A copy of the restart_program_status record is inserted into the restart_program_history table.
• The restart_program_status record is re-initialized.

If the program ends with errors:
• The transactions are rolled back.
• The program_status column on the restart_program_status table is set to ‘aborted in *’, where * is one of the three main functions in batch: init, process, or final.
• The changes are committed.
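The two outcomes of the close logic can be sketched with plain dictionaries standing in for the restart tables. This Python model is illustrative: the parameter names, the ‘ready for start’ reset value, and the `stage` argument are assumptions, and the rollback and commit of business data are left to the caller.

```python
def restart_close(status, bookmarks, history, finish_time,
                  err_message=None, stage="process"):
    """Update the modelled restart tables when a program thread ends.

    status    -- dict for one restart_program_status row
    bookmarks -- dict keyed by (restart_name, thread_val)
    history   -- list standing in for restart_program_history
    """
    key = (status["restart_name"], status["thread_val"])
    if err_message is None:
        status["finish_time"] = finish_time
        history.append(dict(status))     # copy of the finished record
        bookmarks.pop(key, None)         # bookmark is no longer needed
        # Re-initialize the status row for the next run (assumed values).
        status.update(program_status="ready for start", finish_time=None)
    else:
        # The bookmark is kept so a restart can resume from it.
        status.update(program_status="aborted in " + stage,
                      err_message=err_message)
    return status


ok_status = {"restart_name": "demo", "thread_val": 1,
             "program_status": "started"}
ok_bookmarks = {("demo", 1): "100;2"}
ok_history = []
restart_close(ok_status, ok_bookmarks, ok_history, "01-jun-16 10:00:00")

bad_status = {"restart_name": "demo", "thread_val": 2,
              "program_status": "started"}
bad_bookmarks = {("demo", 2): "200;1"}
bad_history = []
restart_close(bad_status, bad_bookmarks, bad_history, None,
              err_message="bad record", stage="init")
```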

This function parses a string into components and places the results into a multidimensional array. It is only called within API functions and is never called in program modules.

The process is passed a string to parse and a pointer to an array of characters. The first character of the passed string is the delimiter.
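The delimiter-first convention can be sketched in a few lines of Python. This is an illustration of the parsing rule only; `parse_delimited` is a hypothetical name, and the Pro*C function writes into a caller-supplied character array rather than returning a list.

```python
def parse_delimited(text):
    """Split text on its own first character, which acts as the delimiter."""
    if not text:
        return []
    delimiter, body = text[0], text[1:]
    return body.split(delimiter)


fields = parse_delimited(";100;2;abc")
piped = parse_delimited("|a|b")
```

Carrying the delimiter inside the string lets each caller pick a character that cannot occur in its own key values.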

This function appends output in temporary files to final output files when a commit point is reached. It is called from program modules.

Another function contains the logic that appends one file to another. It is only called within the restart/recovery API functions and is never called directly in program modules.
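Appending temporary output to the final output file at a commit point can be sketched as follows. The Python below is illustrative; `append_file` is a hypothetical name, and emptying the temporary file after the append is an assumption about how the next commit cycle starts clean.

```python
import os
import shutil
import tempfile


def append_file(src_path, dst_path):
    """Append the contents of src_path to dst_path, then empty src_path."""
    with open(src_path, "rb") as src, open(dst_path, "ab") as dst:
        shutil.copyfileobj(src, dst)
    # Assumed behaviour: truncate the temporary file for the next cycle.
    open(src_path, "wb").close()


workdir = tempfile.mkdtemp()
final_path = os.path.join(workdir, "final.out")
temp_path = os.path.join(workdir, "temp.out")
with open(final_path, "wb") as f:
    f.write(b"committed-1\n")   # output from an earlier commit
with open(temp_path, "wb") as f:
    f.write(b"pending-2\n")     # output written since that commit

append_file(temp_path, final_path)

with open(final_path, "rb") as f:
    final_contents = f.read()
temp_size = os.path.getsize(temp_path)
shutil.rmtree(workdir)
```

Writing to a temporary file between commits means that output produced by rolled-back work never reaches the final file.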

The restart.h and the std_err.h header files are included in retek.h to utilize the restart/recovery functionality.

This library header file contains constants, macro substitutions,
and external global variable definitions, as well as restart/recovery function prototypes.

The global variables that are defined include:

• The thread number assigned to the current process.
• The value of the current process’s thread maximum counter:
  – For table-based processing, it is equal to the number of iterations of the driving query before a commit can take place.
  – For file-based processing, it is equal to the number of lines that are read from a flat file and processed using a structured array before a commit can take place.
• The current count of driving query iterations used for table-based processing or the current array index used in file-based processing.
• The name assigned to the program/logical unit of work by the programmer. It is the same as the restart_name column on the restart_program_status, restart_program_history, and RESTART_BOOKMARK tables.

This library header file contains standard restart variable

declarations that are used in the program modules.

The variable definitions that are included are:

• The concatenated string value of the fetched driving query key that is currently being processed.
• The concatenated string value of the fetched driving query key that will be processed next.
• The error message passed to the restart_close function and updated to restart_program_status.
• Concatenated string of application context information, for example, counters and accumulators.
• The name of the threading driver, for example, department, store, warehouse and others.
• The total number of threads used by this program.
• The pointer to pass to the initialization function to return the number of threads value.

Restart/recovery performs the following, among other capabilities:

• Organizes global variables associated with restart recovery.
• Allows the batch developer full control of restart recovery variables parameter passing during initialization.
• Removes temporary write files to speed up the commit process.
• Moves more information and processing from the batch code into the library code.
• Adds more information into the restart recovery tables for tuning purposes.

retek_2.h

This library header file is included by all C code within Retail

and serves to centralize system includes, macro defines, globals, function

prototypes, and, especially, structs for use in the new restart/recovery

library.

The globals used by the old restart/recovery library are all

discarded. Instead, each batch program declares variables needed and calls

retek_init() to get them populated from restart/recovery tables. Therefore, only

the following variables are declared:

• gi_no_commit: flag for NO_COMMIT command line option (used for tuning purposes).
• gi_error_flag: fatal error flag.
• gi_non_fatal_err_flag: non-fatal error flag.

In addition, a rtk_file struct is defined to handle all file

interfaces associated with restart/recovery. Operation functions on the file

struct are also defined.

#define NOT_PAD 1000          /* Flag not to pad thread_val */
#define PAD 1001              /* Flag to pad thread_val at the end */
#define TEMPLATE 1002         /* Flag to pad thread_val using filename template */
#define MAX_FILENAME_LEN 50

typedef struct
{
    FILE* fp;                             /* File pointer */
    char filename[MAX_FILENAME_LEN + 1];  /* Filename */
    int pad_flag;                         /* Flag whether to pad thread_val to filename */
} rtk_file;

int set_filename(rtk_file* file_struct, char* file_name, int pad_flag);
FILE* get_FILE(rtk_file* file_struct);
int rtk_print(rtk_file* file_struct, char* format, ...);
int rtk_seek(rtk_file* file_struct, long offset, int whence);

The parameters that retek_init() needs to populate must be passed using a
format known to retek_init(). A struct is defined here for this purpose. An
array of parameters of this struct type is needed in each batch program. Other requirements
have to be initialized at each batch program.

• The lengths of name, type and sub_type should not exceed the definitions here.
• Type can only be: "int", "uint", "long", "string", or "rtk_file".
• For type "int", "uint" or "long", use "" as sub_type.
• For type "string", sub_type can only be "S" (start string) or "I" (image string), unless the string is the thread value or number of threads, in which case use "" as sub_type.
• For type "rtk_file", sub_type can only be "I" (input) or "O" (output).

#define NULL_PARA_NAME 51

#define NULL_PARA_TYPE 21

#define NULL_PARA_SUB_TYPE 2

typedef struct

{

char name[NULL_PARA_NAME];

char type[NULL_PARA_TYPE];

char sub_type[NULL_PARA_SUB_TYPE];

} init_parameter;
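The type and sub_type rules above can be checked mechanically before the array is handed to retek_init(). The following is a minimal sketch, not part of the restart/recovery library: the function init_parameter_is_valid and the parameter names in example_params are hypothetical, and the rule for "string" follows the reading that "S", "I", and "" are the only permitted sub_type values. The struct is repeated so that the sketch compiles on its own.

```c
#include <assert.h>
#include <string.h>

#define NULL_PARA_NAME 51
#define NULL_PARA_TYPE 21
#define NULL_PARA_SUB_TYPE 2

typedef struct
{
    char name[NULL_PARA_NAME];
    char type[NULL_PARA_TYPE];
    char sub_type[NULL_PARA_SUB_TYPE];
} init_parameter;

/* Hypothetical helper: returns 1 if p follows the type/sub_type rules,
 * 0 otherwise. */
int init_parameter_is_valid(const init_parameter *p)
{
    assert(p != NULL);
    if (!strcmp(p->type, "int") || !strcmp(p->type, "uint") ||
        !strcmp(p->type, "long"))
        return p->sub_type[0] == '\0';          /* numeric: sub_type "" */
    if (!strcmp(p->type, "string"))
        return !strcmp(p->sub_type, "S") ||     /* start string */
               !strcmp(p->sub_type, "I") ||     /* image string */
               p->sub_type[0] == '\0';          /* thread value or count */
    if (!strcmp(p->type, "rtk_file"))
        return !strcmp(p->sub_type, "I") ||     /* input file */
               !strcmp(p->sub_type, "O");       /* output file */
    return 0;                                   /* unknown type */
}

/* Illustrative parameter array; the names are made up for this sketch. */
static const init_parameter example_params[] = {
    { "commit_max_ctr", "uint",     ""  },
    { "thread_val",     "string",   ""  },
    { "dept",           "string",   "S" },
    { "input_file",     "rtk_file", "I" }
};
```

A batch program could run such a check over its array once at startup, so that a mistyped sub_type is reported before any restart tables are touched.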

int retek_init(int num_args, init_parameter *parameter, ...)

retek_init initializes restart/recovery (for both table and file-based):

Pass in num_args as the number of elements in the init_parameter array, and then the init_parameter array, then variables a batch program needs to initialize in the order and types defined in the init_parameter array. Note that all int, uint and long variables need to be passed by reference.

Get all global and module level values from databases.

Initialize records for RESTART_PROGRAM_STATUS and RESTART_BOOKMARK.

Parse out user-specified initialization variables (variable arg list).

Return NO_THREAD_AVAILABLE if no qualified record in RESTART_CONTROL or RESTART_PROGRAM_STATUS.

Commit work.

int retek_commit(int num_args, ...)

retek_commit checks and commits if needed (for both table and file-based):

Pass in num_args, then variables for start_string first, and those for image string (if needed) second. The num_args is the total number of these two groups. All are string variables and are passed in the same order as in retek_init().

Concatenate start_string either from passed in variables (table-based) or from ftell of input file pointers (file-based).

Check if commit point is reached (counter check and, if table-based, start string comparison).

If reached, concatenate image_string from passed in variables (if needed) and call internal_commit() to get out_file_string and update RESTART_BOOKMARK.

If table-based, increment pl_current_count and update ps_cur_string.

int commit_point_reached(int num_args, ...)

commit_point_reached checks if the commit point is reached (for both table- and file-based). The difference between this function and the check in retek_commit() is that here the pl_current_count and ps_cur_string are not updated. This checking function is designed to be used with retek_force_commit(), and the logic to ensure integrity of LUW exists in user batch program. It can also be used together with retek_commit() for extra processing at the time of commit.

Pass in num_args, then all string variables for start_string in the same order as in retek_init(). The num_args is the number of variables for start_string. If no start_string (as in file-based), pass in NULL.

For table-based, if pl_current_count reaches pl_max_counter and if newly concatenated bookmark string is different from ps_cur_string, return 1; otherwise return 0.

For file-based, if pl_current_count reaches pl_max_counter return 1; otherwise return 0.
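The decision rule described for commit_point_reached can be sketched as follows. This is an illustration of the stated logic only, not the library source: the restart_state struct and the function name commit_point_reached_sketch are hypothetical, the bookmark is assumed to be already concatenated, and "reaches" is interpreted as "greater than or equal to".

```c
#include <assert.h>
#include <string.h>

/* Hypothetical stand-in for the library state named in the text. */
typedef struct
{
    long pl_current_count;      /* units processed since the last commit */
    long pl_max_counter;        /* commit max counter */
    const char *ps_cur_string;  /* bookmark string of the current key */
} restart_state;

/* Returns 1 if the commit point is reached, 0 otherwise. new_bookmark is
 * the newly concatenated start string; pass NULL for file-based input.
 * Nothing in *st is modified, matching the description of the check. */
int commit_point_reached_sketch(const restart_state *st,
                                const char *new_bookmark)
{
    assert(st != NULL);
    if (st->pl_current_count < st->pl_max_counter)
        return 0;                   /* counter not reached yet */
    if (new_bookmark == NULL)
        return 1;                   /* file-based: counter alone decides */
    /* table-based: the key must also have changed, so that one LUW is
     * never split across two commits */
    return st->ps_cur_string == NULL ||
           strcmp(new_bookmark, st->ps_cur_string) != 0;
}
```

The string comparison is what keeps all rows that share one transaction key inside the same commit, even when the counter has already reached its maximum.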

int retek_force_commit(int num_args, ...)

retek_force_commit always commits (for both table and file-based):

Pass in num_args, then variables for start_string first, and those for image string (if needed) second. The num_args is the total number of these two groups. All are string variables and are passed in the same order as in retek_init().

Concatenate start_string either from passed in variables (table-based) or from ftell of input file pointers (file-based).

Concatenate image_string from passed in variables (if needed) and call internal_commit() to get out_file_string and update RESTART_BOOKMARK.

If table-based, increment pl_current_count and update ps_cur_string.

int retek_close(void)

retek_close closes restart/recovery (for both table and file-based):

If gi_error_flag or NO_COMMIT command line option is TRUE, rollback all database changes.

Update RESTART_PROGRAM_STATUS according to gi_error_flag.

If no gi_error_flag, insert record into RESTART_PROGRAM_HISTORY with information fetched from RESTART_CONTROL, RESTART_BOOKMARK and RESTART_PROGRAM_STATUS tables.

If no gi_error_flag, delete RESTART_BOOKMARK record.

Commit work.

Close all opened file streams.

int retek_refresh_thread(void)

You must refresh a program’s thread, so that it can be run again.

Updates the RESTART_PROGRAM_STATUS record for the current program’s PROGRAM_STATUS to be ‘ready for start’.

Deletes any RESTART_BOOKMARK records for the current program.

Commits work.

void increment_current_count(void)

increment_current_count increases pl_current_count by 1.

Note: This is called from get_record() of intrface.pc for file-based input/output.

int parse_name_for_thread_val(char* name)

parse_name_for_thread_val parses thread value from the extension of the specified file name.
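To illustrate the idea of carrying the thread value in the file extension, the sketch below extracts a numeric extension from a file name. It is a hypothetical re-implementation for illustration only: the name thread_val_from_name, the return type, and the use of -1 for "no usable extension" are assumptions and do not describe the actual behavior of parse_name_for_thread_val.

```c
#include <assert.h>
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Returns the thread value encoded in the file name's extension, or -1
 * if there is no extension or the extension is not purely numeric. */
long thread_val_from_name(const char *name)
{
    const char *dot;
    const char *p;

    assert(name != NULL);
    dot = strrchr(name, '.');           /* last '.' starts the extension */
    if (dot == NULL || dot[1] == '\0')
        return -1;
    for (p = dot + 1; *p != '\0'; p++)
        if (!isdigit((unsigned char)*p))
            return -1;
    return strtol(dot + 1, NULL, 10);
}
```

With this convention, an input file named input_file.3 (a made-up name) would be tied to the restart_program_status record whose thread value is 3.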

int is_new_start(void)

is_new_start checks if the current run is a new start; it returns 1 if so, and 0 otherwise.

The restart capabilities revolve around a program’s logical unit

of work (LUW). A batch program processes transactions and enables commit points

based on the LUW. An LUW is comprised of a transaction key (such as item-store)

and a maximum commit counter. Commit events occur after a given number of

transaction keys are processed. At the time of the commit, key data information

that is necessary for restart is stored in the restart table. In the event of a

handled or un-handled exception, transactions will be rolled back to the last

commit point. Upon restart the restart key information will be retrieved from

the tables so that processing can resume with the unprocessed data.

3

Processing multiple instances of a given program can be

accomplished through “threading”. This requires driving cursors to be separated

into discrete segments of data to be processed by different threads. This is
accomplished through a stored procedure that assigns each value of a threading
mechanism (for example, departments or stores) to a particular thread, given
the value (for example, department 1001) and the total number of threads for
a given process.

File-based processing will not “thread” its processing. The same

data file will never be acted upon by multiple processes. Multi-threading is

accomplished by dividing the data into separate files using a separate process

for each file. The thread value is related to the input file. This is necessary

to ensure that the appropriate information can be tied back to the relevant

file in the event of a restart.

The store length in RMS is ten digits. Therefore, a thread value
that is based on the store number would need to allow ten digits. However,
because the thread value is declared as a C variable of type integer (long),
the system restricts thread values to nine digits.

This does not mean that you cannot use ten digit store numbers.

It means that if you do use ten digit store numbers you cannot use them as

thread values.
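A guard of the following kind can make the nine-digit restriction explicit in custom code. This is a sketch under stated assumptions: the function usable_as_thread_val and the macro MAX_THREAD_VAL are hypothetical, and the comment about 32-bit integers is offered as a plausible reason for the limit rather than a statement from the library source.

```c
#include <assert.h>
#include <stdint.h>

#define MAX_THREAD_VAL 999999999  /* largest nine-digit value */

/* Returns 1 if a store number can also serve as a thread value,
 * 0 if it needs the tenth digit (or is negative). */
int usable_as_thread_val(int64_t store_number)
{
    /* Assumption: on platforms where long is 32 bits, every nine-digit
     * value fits, but not every ten-digit value does. */
    assert(MAX_THREAD_VAL <= INT32_MAX);
    return store_number >= 0 && store_number <= MAX_THREAD_VAL;
}
```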

The use of multiple threads or processes in Oracle Retail batch

processing increases efficiency and decreases processing time. The design of the

threading process allows maximum flexibility to the end user in defining the

number of processes over which a program is divided.

Originally, the threading function was used directly in the

driving queries. This was a slow process. Instead of using the function call

directly in the driving queries, the designs call for joining driving query

tables to a view (for example, v_restart_store) that includes the function.

A stored procedure has been created to determine thread values.

Restart_thread_return returns a thread value derived from a numeric driver

value, such as department number, and the total number of threads in a given

process. Retailers should be able to determine the best algorithm for their

design, and if a different means of segmenting data is required, then either

the restart_thread_return function can be altered, or a different function can

be used in any of the views in which the function is contained.

Currently the restart_thread_return function is a very simple

modulus routine:

CREATE OR REPLACE FUNCTION RESTART_THREAD_RETURN(in_unit_value NUMBER,
                                                 in_total_threads NUMBER)
RETURN NUMBER IS
   ret_val NUMBER;
BEGIN
   ret_val := MOD(ABS(in_unit_value),in_total_threads) + 1;
   RETURN ret_val;
END;
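For readers who want to predict thread assignments outside the database, the same arithmetic can be mirrored in C. The function name thread_for_driver is hypothetical; the formula is the one shown in the stored function. One difference is worth noting as an assumption of this sketch: a total thread count of zero is rejected here, because the C remainder operator is undefined for a zero divisor.

```c
#include <assert.h>
#include <stdlib.h>

/* C mirror of MOD(ABS(in_unit_value), in_total_threads) + 1.
 * Returns a thread value in the range 1..total_threads. */
long thread_for_driver(long unit_value, long total_threads)
{
    assert(total_threads > 0);  /* zero divisor is undefined in C */
    return labs(unit_value) % total_threads + 1;
}
```

For example, department 101 with 3 total threads gives 101 mod 3 = 2, plus 1, so thread 3; store 10 with 5 total threads gives 10 mod 5 = 0, plus 1, so thread 1.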

Each restart view will have four elements:

• The name of the threading mechanism, driver_name.
• The total number of threads in a grouping, num_threads.
• The value of the driving mechanism, driver_value.
• The thread value for that given combination of driver_name, num_threads, and driver value, thread_val.

The view will be based on the restart_control table and an

information table such as DEPS or STORES. A row will exist in the view for

every driver value and every total number of threads value. Therefore, if a

retailer were to always use the same number of threads for a given driver

(dept, store, and others), then the view would be relatively small. As an

example, if all of a retailer’s programs threaded by department have a total of

5 threads, then the view will contain only one value for each department. For

example, if there are 10 total departments, 10 rows will exist in

v_restart_dept. However, if the retailer wants one of the programs to
have ten threads, then there will be 2 rows for every department: one for five
total threads and one for ten total threads (for example, if 10 total
departments, 20 rows will exist in v_restart_dept). Retailers should therefore
keep the number of distinct total thread values for a thread driver to a
minimum to reduce the scope of the table joins of the driving cursor with the
view.

Below is an example of how the same driver value can result in

differing thread values. This example uses the restart_thread_return function

as it currently is written to derive thread values.

| Driver_name | num_threads | driver_val | thread_val |
|---|---|---|---|
| DEPT | 1 | 101 | 1 |
| DEPT | 2 | 101 | 2 |
| DEPT | 3 | 101 | 3 |
| DEPT | 4 | 101 | 2 |
| DEPT | 5 | 101 | 2 |
| DEPT | 6 | 101 | 6 |
| DEPT | 7 | 101 | 4 |

Below is an example of what a distribution of stores might look

like given 10 stores and 5 total threads:

| Driver_name | num_threads | driver_val | thread_val |
|---|---|---|---|
| STORE | 5 | 1 | 2 |
| STORE | 5 | 2 | 3 |
| STORE | 5 | 3 | 4 |
| STORE | 5 | 4 | 5 |
| STORE | 5 | 5 | 1 |
| STORE | 5 | 6 | 2 |
| STORE | 5 | 7 | 3 |
| STORE | 5 | 8 | 4 |
| STORE | 5 | 9 | 5 |
| STORE | 5 | 10 | 1 |

View syntax:

The following is an example of the syntax needed to create the view for the multi-threading join, created with script (see threading discussion for details on restart_thread_return function):

create or replace view v_restart_store
as
select rc.driver_name driver_name,
       rc.num_threads num_threads,
       s.store driver_value,
       restart_thread_return(s.store, rc.num_threads) thread_val
  from restart_control rc, store s
 where rc.driver_name = 'STORE'

There is a different threading scheme used within Oracle Retail

Sales Audit (ReSA). Because ReSA needs to run 24 hours a day and seven days a

week, there is no batch window. This means that there may be batch programs

running at the same time that there are online users. ReSA solved this

concurrency problem by creating a locking mechanism for data that is organized

by store days. These locks provide a natural threading scheme. Programs that

cycle through all of the store day data attempt to lock the store day first. If

the lock fails, the program simply goes on to the next store day. This has the

effect of automatically balancing the workload between all of the programs

executing.

All program names will be stored on the restart_control table

along with a functional description, the query driver (department, store,

class, and others) and the user-defined number of threads associated with them.

Users should be able to scroll through all programs to view the name,
description, and query driver and, if the update_allowed flag is set to true,
to modify the number of threads.

File-based processing does not truly “multi-thread” and therefore

the number of threads defined on restart_control will always be one. However, a

restart_program_status record needs to be created for each input file that is

to be processed for the program module. Further, the thread value that is

assigned must be part of the input file name. The restart_parse_name function

included in the program module will parse the thread value from the input file
name and use that to determine the availability and restart requirements on the

restart_program_status table.

Refer to the beginning of this multi-threading section for a

discussion of limits on using large (greater than nine digits) thread values.

When the number of threads is modified in the restart_control

table, the form must first validate that no records for that program are

currently being processed in the restart_program_status table (that is, all

records = ‘Completed’). The program must insert or delete rows depending on

whether the new thread number is greater than or less than the old thread

number. In the event that the new number is less than the previous number, all

records for that program_name with a thread number greater than the new thread

number will be deleted. If the new number is greater than the old number, new

rows will be inserted. A new record is inserted for each restart_name/thread_val combination.

For example, if the batch program SALDLY has its number of

processes changed from 2 to 3, then an additional row (3) will be added to the

restart_program_status table. In the same way, if the number of threads was

reduced to 1 in this example, rows 2 and 3 would be deleted.

| Row | RESTART_NAME | THREAD_VAL | program_name |
|---|---|---|---|
| 1 | SALDLY | 1 | SALDLY |
| 2 | SALDLY | 2 | SALDLY |

restart_program_status table after insert:

| Row | RESTART_NAME | THREAD_VAL | program_name |
|---|---|---|---|
| 1 | SALDLY | 1 | SALDLY |
| 2 | SALDLY | 2 | SALDLY |
| 3 | SALDLY | 3 | SALDLY |

restart_program_status table after delete:

| Row | RESTART_NAME | THREAD_VAL | program_name |
|---|---|---|---|
| 1 | SALDLY | 1 | SALDLY |

Users should also be able to modify the commit_max_ctr column in the
restart_program_status table. This controls the number of iterations in the driving
query or the number of lines read from a flat file that determine the logical
unit of work (LUW).

Users must be able to view the status of all records in the
restart_program_status table. This is where the user views error
messages from aborted programs, and statistics and histories of batch runs. The
only fields that can be modified are program_status and restart_flag. The user
should be able to reset the restart_flag from ‘N’ to ‘Y’ on records with a
status of aborted, to set started records to aborted in the event of an abend
(abnormal termination), and to reset all records in the event of a restore from
tape or a re-run of all batch.

Before any batch with restart/recovery logic is run, an

initialization program must be run to update the status in the

restart_program_status table. This program must update the program_status to

‘ready for start’ wherever a record’s program_status is ‘completed.’ This leaves

unchanged all programs that ended unsuccessfully in the last batch run.

Due to the nature of the threading algorithm, individual programs
may need a pre- or post-program run to initialize variables or files before any
of the threads have run or to update final data when all the threads have run.

The decision was made to create pre-programs and post-programs in these cases

rather than let the restart/recovery logic decide whether the currently

processed thread is the first thread to start or the last thread to end for a

given program.

For ksh-driven batch programs that call PL/SQL for their main
processing logic, multi-threading is also supported. An example of this type of
batch job is the ksh script stockcountupload.ksh calling the PL/SQL package
CORESVC_STOCK_UPLOAD_SQL. The threading configuration for each program is
defined in the table RMS_PLSQL_BATCH_CONFIG (instead of RESTART_CONTROL for the
ProC programs). Column MAX_CONCURRENT_THREAD holds the maximum number of
concurrent threads. MAX_CHUNK_SIZE defines the commit size within each thread,
similar to the RESTART_CONTROL.COMMIT_MAX_CTR column.

4

Oracle Retail batch architecture uses array processing to improve

performance. Instead of processing SQL statements using scalar data, data is

grouped into arrays and used as bind variables in SQL statements. This improves

performance by reducing the server/client and network traffic.

Array processing is used in selecting, inserting, deleting, and

updating statements. Oracle Retail typically does not statically define the

array sizes, but uses the restart maximum commit variable as a sizing multiple.

The user must remember this when defining the system's maximum commit counters.

Note that Oracle does not allow a single array operation to be
performed on more than 32000 records in one step. The Oracle Retail
restart/recovery libraries have been updated to define a macro for this value:
MAX_ORACLE_ARRAY_SIZE.

All batch programs that use array processing need to limit the

size of their array operations to MAX_ORACLE_ARRAY_SIZE.

If the commit max counter is used for array processing size,

check it after the call to restart_init() and, if necessary, reset it to the

maximum value if greater. If retek_init() is used to initialize, check the

returned commit max counter and reset it to the maximum size if it is greater.

In case of retek_init(), reset the library’s internal commit max counter by

calling extern int limit_commit_max_ctr(unsigned int new_max_ctr).

If some other variable is used for sizing the array processing,

the actual array-processing step must be encapsulated in a calling loop that

performs the array operation in sub segments of the total array size where each

sub-segment is at most MAX_ORACLE_ARRAY_SIZE large. Currently all Oracle Retail

batch programs are implemented in a similar way.
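Both rules can be sketched in a few lines of C. This is an illustration under stated assumptions, not library code: cap_commit_max_ctr, for_each_segment, the array_op_fn callback type, and count_rows are hypothetical names, and MAX_ORACLE_ARRAY_SIZE is defined locally as 32000 only as a stand-in for the macro that the restart/recovery library provides.

```c
#include <assert.h>
#include <stddef.h>

#ifndef MAX_ORACLE_ARRAY_SIZE
#define MAX_ORACLE_ARRAY_SIZE 32000  /* stand-in for the library macro */
#endif

/* Cap a commit max counter that is also used as an array size. */
unsigned int cap_commit_max_ctr(unsigned int commit_max_ctr)
{
    return commit_max_ctr > MAX_ORACLE_ARRAY_SIZE
               ? MAX_ORACLE_ARRAY_SIZE
               : commit_max_ctr;
}

/* Hypothetical callback: performs one array operation on the rows
 * [offset, offset + count). Returns 0 on success. */
typedef int (*array_op_fn)(size_t offset, size_t count, void *ctx);

/* Runs op over total_rows in sub-segments of at most
 * MAX_ORACLE_ARRAY_SIZE rows. Returns the number of sub-segments
 * executed, or -1 as soon as op reports a failure. */
int for_each_segment(size_t total_rows, array_op_fn op, void *ctx)
{
    size_t offset = 0;
    int segments = 0;

    assert(op != NULL);
    while (offset < total_rows) {
        size_t count = total_rows - offset;

        if (count > MAX_ORACLE_ARRAY_SIZE)
            count = MAX_ORACLE_ARRAY_SIZE;
        if (op(offset, count, ctx) != 0)
            return -1;
        offset += count;
        segments++;
    }
    return segments;
}

/* Example callback that only adds up the rows it is handed; a real
 * program would execute its array SQL statement here instead. */
int count_rows(size_t offset, size_t count, void *ctx)
{
    (void)offset;
    *(size_t *)ctx += count;
    return 0;
}
```

With 70000 rows, the loop issues three operations of 32000, 32000, and 6000 rows, so no single step exceeds the limit.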

5 Pro*C Input and Output Formats

Oracle Retail batch processing utilizes input from both tables

and flat files. Further, the outcome of processing can both modify data

structures and write output data. Interfacing Oracle Retail with external

systems is the main use of file based I/O.

To simplify the interface requirements, Oracle Retail requires

that all in-bound and out-bound file-based transactions adhere to standard file

layouts. There are two types of file layouts, detail-only and master-detail,

which are described in the sections below.

An interfacing API exists within Oracle Retail to simplify the

coding and the maintenance of input files. The API provides functionality to

read input from files, ensure file layout integrity, and write and maintain

files for rejected transactions.

The RMS interface library supports two standard file layouts; one

for master/detail processing, and one for processing detail records only. True

sub-details are not supported within the RMS base package interface library

functions.

A 5-character identification code or record type identifies all

records within an I/O file, regardless of file type. The following includes the

valid record type values:

• FHEAD: File Header
• FDETL: File Detail
• FTAIL: File Tail
• THEAD: Transaction Header
• TDETL: Transaction Detail
• TTAIL: Transaction Tail

Each line of the file must begin with the record type code

followed by a 10-character record ID.

File layouts have a standard file header record, a detail record

for each transaction to be processed, and a file trailer record. Valid record

types are FHEAD, FDETL, and FTAIL.

Example:

FHEAD0000000001STKU1996010100000019960929

FDETL0000000002SKU100000040000011011

FDETL0000000003SKU100000050003002001

FDETL0000000004SKU100000050003002001

FTAIL00000000050000000003
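The fixed-width prefix makes a basic integrity check straightforward. The sketch below is not the RMS interface library: parse_record_prefix and check_detail_only are hypothetical helpers, and two details are assumptions read off the example above rather than stated rules, namely that record IDs run sequentially from 1 and that the FTAIL record carries the FDETL count immediately after its record ID.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

#define REC_TYPE_LEN 5   /* record type code, for example FHEAD */
#define REC_ID_LEN   10  /* record ID that follows the type code */

/* Copies the record type into type_out (at least REC_TYPE_LEN + 1
 * bytes) and returns the numeric record ID, or -1 if the line is too
 * short to hold both fields. */
long parse_record_prefix(const char *line, char *type_out)
{
    char id_buf[REC_ID_LEN + 1];

    assert(line != NULL && type_out != NULL);
    if (strlen(line) < (size_t)(REC_TYPE_LEN + REC_ID_LEN))
        return -1;
    memcpy(type_out, line, REC_TYPE_LEN);
    type_out[REC_TYPE_LEN] = '\0';
    memcpy(id_buf, line + REC_TYPE_LEN, REC_ID_LEN);
    id_buf[REC_ID_LEN] = '\0';
    return strtol(id_buf, NULL, 10);
}

/* Checks a detail-only file held in memory: FHEAD first, FTAIL last,
 * FDETL in between, record IDs numbered 1..n, and the FTAIL count
 * equal to the number of FDETL lines. Returns 1 if consistent. */
int check_detail_only(const char *const lines[], int n)
{
    char type[REC_TYPE_LEN + 1];
    long details = 0;
    int i;

    if (n < 2)
        return 0;
    for (i = 0; i < n; i++) {
        const char *expected =
            (i == 0) ? "FHEAD" : (i == n - 1) ? "FTAIL" : "FDETL";

        if (parse_record_prefix(lines[i], type) != i + 1)
            return 0;
        if (strcmp(type, expected) != 0)
            return 0;
        if (i > 0 && i < n - 1)
            details++;
    }
    /* Assumed layout: the count follows the FTAIL record ID directly. */
    return strtol(lines[n - 1] + REC_TYPE_LEN + REC_ID_LEN, NULL, 10)
           == details;
}
```

Applied to the example file, the five record IDs are 1 through 5 and the FTAIL count of 3 matches the three FDETL records.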

File layouts consist of:

• Standard file header record
• Set of records for each transaction to be processed
• File trailer record

The transaction set consists of:

• Transaction set header record
• Transaction set detail for detail within the transaction
• Transaction trailer record

Valid record types are FHEAD, THEAD, TDETL, TTAIL, and FTAIL.

Example:

FHEAD0000000001RTV 19960908172000

THEAD000000000200000000000001199609091202000000000003R

TDETL000000000300000000000001000001SKU10000012

TTAIL0000000004000001

THEAD000000000500000000000002199609091202001215720131R

TDETL000000000600000000000002000001UPC400100002667

TDETL0000000007000000000000020000021UPC400100002643 0

TTAIL0000000008000002

FTAIL00000000090000000007

| Record Name | Field Name | Field Type | Default Value | Description |
|---|---|---|---|---|
| File Header | File Type Record Descriptor | Char(5) | FHEAD | Identifies file record type. |
| | File Line Identifier | Number(10) | Specified by external system | Line number of the current file. |
| | File Type Definition | Char(4) | n/a | Identifies transaction type. |
| | File Create Date | Date | Create date | Date file was written by external system. |
| Transaction Header | File Type Record Descriptor | Char(5) | THEAD | Identifies file record type. |
| | File Line Identifier | Number(10) | Specified by external system | Line number of the current file. |
| | Transaction Set Control Number | Char(14) | Specified by external system | Used to force unique transaction check. |
| | Transaction Date | Char(14) | Specified by external system | Date the transaction was created in external system. |
| Transaction Detail | File Type Record Descriptor | Char(5) | TDETL | Identifies file record type. |
| | File Line Identifier | Number(10) | Specified by external system | Line number of the current file. |
| | Transaction Set Control Number | Char(14) | Specified by external system | Used to force unique transaction check. |
| | Detail Sequence Number | Char(6) | Specified by external system | Sequential number assigned to detail records within a transaction. |
| Transaction Trailer | File Type Record Descriptor | Char(5) | TTAIL | Identifies file record type. |
| | File Line Identifier | Number(10) | Specified by external system | Line number of the current file. |
| | Transaction Detail Line Count | Number(6) | Sum of detail lines | Sum of the detail lines within a transaction. |
| File Trailer | File Type Record Descriptor | Char(5) | FTAIL | Identifies file record type. |
| | File Line Identifier | Number(10) | Specified by external system | Line number of the current file. |
| | Total Transaction Line Count | Number(10) | Sum of all transaction lines | All lines in file less the file header and trailer records. |

6 RETL Program Overview for the RMS-RPAS Interface

This chapter gives an overview of the Oracle Retail Extract,
Transform and Load (RETL) programs for the RMS-RPAS interface, along with
the RETL architecture.

RMS works with the Oracle Retail Extract Transform and Load

(RETL) framework. This architecture utilizes a high performance data processing

tool that allows database batch processes to take advantage of parallel

processing capabilities.

The RETL framework runs and parses through the valid operators

composed in XML scripts. For more information on RETL tool, see RETL

Programmer’s Guide.

The figure, The two stages of RETL processing, illustrates the extraction processing architecture. Instead of managing the change captures as they occur in the source system during the day, the process involves extracting the current data from the source system. The extracted data is output to flat files. These flat files are then available for consumption by products such as RPAS.

The target system has its own way of completing the transformations and loading the necessary data into the system, where it can be used for further processing. For more information on transformation and loading, see RPAS documentation.

The architecture relies upon two distinct stages, shown in the figure, The two stages of RETL processing.

• Stage 1: Extraction from the RMS database using well-defined flows specific to the RMS database. The resulting output is comprised of data files written in a well-defined schema file format. This stage includes no destination-specific code.
• Stage 2: Introduces a flow specific to the destination. In this case, flows for RPAS are designed to transform the data so that RPAS can import the data properly.

The two stages of RETL processing

This section summarizes the RETL program features utilized in the

RMS extractions and loads. Installation information about the RETL tool is

available in the latest RETL Programmer’s Guide.

Before trying to configure and run RMS RETL, install RETL version
13.0 or later, which is required to run RMS RETL. For a thorough installation
procedure, see the RETL Programmer’s Guide.

Permissions must be set up as described in the RETL Programmer’s Guide.
RMS RETL reads and writes data files, and it can also create, delete, update,
and insert into tables. If permissions are not set up properly, the extraction fails.

See the RETL Programmer’s Guide for RETL environment variables

that must be set up for your version of RETL. You will need to set RDF_HOME to

your base directory for RMS RETL. This is the top level directory that you

select during the installation process. Add export RDF_HOME=<base
directory for RMS RETL> in .profile.

There are several constants that must be set in

rmse_rpas_config.env depending upon a retailer’s preferences and the local

environment. This is summarized in the table below.

| Constant Name | Default Value | Alternate Value | Description |
|---|---|---|---|
| DATE_TYPE | vdate | current_date | Determines whether the date used in naming the error, log, and status files is the current date or the VDATE value found in the PERIOD table. |
| DBNAME | rtkdev01 | Depends on installation | The database schema name. |
| RMS_OWNER | RPASINT | Depends on installation | The username of the RMS database schema owner. |
| BA_OWNER | | Depends on installation | The username of the RMS batch user (not currently used by RMS-RPAS). |
| CONN_TYPE | thin | oci | The way in which RMS connects to the database. |
| DBHOST | mspdev17 | Depends on installation | The computer hardware node name. |
| DBPORT | 1524 | Depends on installation | The port on which the database listener resides. |
| LOC_ATTRIBUTES_ACTIVE | False | True | Determines whether rmse_rpas_attributes.ksh is run or not. |
| PROD_ATTRIBUTES_ACTIVE | False | True | Determines whether rmse_rpas_attributes.ksh is run or not. |
| DIFFS_ACTIVE | True | False | Determines whether rmse_rpas_merchhier.ksh generates data files that contain diff allocation information. |
| ISSUES_ACTIVE | True | False | If set to ‘True’, rmse_rpas_stock_on_hand also extracts stock at the warehouse level. If set to ‘False’, rmse_rpas_stock_on_hand extracts stock at the store level only. |
| LOAD_TYPE | CONVENTIONAL | DIRECT | Data loading method to be used by SQL*Loader (Direct may be faster than conventional). |
| DB_ENV | ORA | DB2, TERA | Database type (Additional changes to the software may be needed if a database other than Oracle is selected). |
| NO_OF_CPUS | 4 | Depends on installation | Used in parallel database query hints to improve performance. |
| LANGUAGE | en | Various | En = English |
| RFX_OPTIONS | -c $RDF_HOME/rfx/etc/rfx.conf -s SCHEMAFILE | -c $RDF_HOME/rfx/etc/rfx.conf | Processing speed may be increased for some extractions if the -s SCHEMAFILE option is omitted. |
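As an illustration only, the default values listed in the table might appear in rmse_rpas_config.env roughly as follows. This sketch assumes the file is sourced by ksh and uses plain export statements; the layout of the shipped file may differ, and the fallback path for RDF_HOME is hypothetical.

```shell
# Sketch of the table defaults as ksh-style exports (assumed syntax;
# check the shipped rmse_rpas_config.env for the actual layout).

# RDF_HOME is normally exported from .profile. The fallback path below is
# hypothetical and is only here so the fragment is self-contained.
RDF_HOME=${RDF_HOME:-/u01/app/rms/retl}
export RDF_HOME

export DATE_TYPE=vdate              # or current_date
export DBNAME=rtkdev01              # depends on installation
export RMS_OWNER=RPASINT            # depends on installation
export BA_OWNER=""                  # no default; not currently used by RMS-RPAS
export CONN_TYPE=thin               # or oci
export DBHOST=mspdev17              # depends on installation
export DBPORT=1524                  # depends on installation
export LOC_ATTRIBUTES_ACTIVE=False
export PROD_ATTRIBUTES_ACTIVE=False
export DIFFS_ACTIVE=True
export ISSUES_ACTIVE=True
export LOAD_TYPE=CONVENTIONAL       # or DIRECT
export DB_ENV=ORA
export NO_OF_CPUS=4                 # depends on installation
export LANGUAGE=en
export RFX_OPTIONS="-c $RDF_HOME/rfx/etc/rfx.conf -s SCHEMAFILE"
```

Values marked "depends on installation" are the documentation defaults and would normally be replaced with site-specific values.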

You must set up the wallet alias in rmse_aip_config.env. Creating the wallet and wallet alias is required to run programs in secured mode. The following variables must be set up: RETL_WALLET_ALIAS, ORACLE_WALLET_ALIAS, and SQLPLUS_LOGON.

Be sure to review the environmental parameters in the rmse_rpas_config.env file before executing batch modules.

Steps to Configure RETL

1. Log in to the UNIX server with a UNIX account that will run the RETL scripts.

2. Change directories to <base_directory>/rfx/etc.

3. Modify the constants from the table above in the rmse_rpas_config.env script as needed.

RETL programs use a return code to indicate successful completion. If the program runs successfully, a zero (0) is returned. If the program fails, a non-zero value is returned.
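A calling script or scheduler can act on this return code. The following is a minimal sketch, assuming a POSIX-compatible shell such as ksh, where the exit status of the last command is available in $?. The function name run_module is hypothetical and is not part of RMS or RETL; anything other than zero is treated as a failure.

```shell
# run_module: run one module (any command line) and report on its exit status.
# Zero is treated as success; any other status is treated as failure.
run_module() {
    "$@"
    rc=$?
    if [ "$rc" -eq 0 ]; then
        echo "SUCCESS: $1"
    else
        echo "FAILED: $1 (return code $rc)" >&2
    fi
    # Pass the module's status back to the caller unchanged.
    return "$rc"
}
```

For example, a wrapper might call run_module with the path to rmse_rpas_daily_sales.ksh and stop the batch schedule when the function returns a non-zero status.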

To prevent a program from running while the same program is already running against the same set of data, the code uses a program status control file. At the beginning of each module, rmse_rpas_config.env is run. This script checks for the existence of the program status control file. If the file exists, the message ‘${PROGRAM_NAME} has already started’ is logged and the module exits. If the file does not exist, a program status control file is created and the module executes.

If the module fails at any point, the program status control file is not removed, and the user must remove the control file before running the module again.
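The guard described above can be sketched as follows. This is an illustration under stated assumptions, not the code in rmse_rpas_config.env: the function names start_module and finish_module are hypothetical, and removal of the control file after a successful run is inferred from the statement that the file is left in place on failure.

```shell
# start_module: refuse to start if the status control file already exists,
# otherwise create it. Arguments: <status file path> <program name>.
start_module() {
    status_file=$1
    program_name=$2
    if [ -f "$status_file" ]; then
        # Same message text as described in the guide.
        echo "${program_name} has already started"
        return 1
    fi
    : > "$status_file"    # create an empty control file
}

# finish_module: remove the control file. Intended to be called only after
# the module completes successfully, so that a failed run leaves the file
# behind for the operator to investigate and remove manually.
finish_module() {
    rm -f "$1"
}
```

Note that the existence check and the file creation are two separate steps in this sketch, so it does not protect against two modules starting at exactly the same moment.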

File Naming Conventions

The name and directory of the program status control file are set in the configuration script (rmse_rpas_config.env). The directory defaults to $RDF_HOME/error.

The naming convention for the program status control file itself defaults to the following dot-separated file name:

• The program name.

• The word ‘status’.

• The business virtual date for which the module was run.

For example, a program status control file for the rmse_rpas_daily_sales.ksh program is named as follows for a batch run on the business virtual date of January 5, 2001:

$RDF_HOME/error/rmse_rpas_daily_sales.status.20010105
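The default naming convention can be reproduced with a short helper. This is a sketch only: the function name status_file_name is hypothetical, the date is assumed to be supplied in YYYYMMDD form, and the configuration script may build the name differently.

```shell
# status_file_name: build <dir>/<program>.status.<YYYYMMDD>
# Arguments: <program script> <business virtual date> [directory]
status_file_name() {
    program=$(basename "$1" .ksh)    # strip any directory and the .ksh suffix
    vdate=$2                         # business virtual date, YYYYMMDD
    dir=${3:-${RDF_HOME}/error}      # documented default directory
    echo "${dir}/${program}.status.${vdate}"
}
```

Calling status_file_name rmse_rpas_daily_sales.ksh 20010105 with RDF_HOME set yields the path shown in the example above.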

Because RETL processes all records as a set, as opposed to one record at a time, the method for restart and recovery must be different from the method that is used for Pro*C. The restart and recovery process serves the following two purposes:

• It prevents the loss of data due to program or database failure.

• It increases performance when restarting after a program or database failure by limiting the amount of reprocessing that needs to occur.

The RMS extract (RMSE) modules extract from a source transaction database or text file and write to a text file. The RMS load modules import data from flat files, perform necessary transformations, and then load the data into the applicable RMS table.