Oracle Solaris Cluster Concepts Guide

All multihost devices must be under the control of the Oracle Solaris Cluster software. You first create volume manager disk groups, either Solaris Volume Manager disk sets or Veritas Volume Manager disk groups, on the multihost disks. Then, you register the volume manager disk groups as device groups. A device group is a type of global device. In addition, the Oracle Solaris Cluster software automatically creates a raw device group for each disk and tape device in the cluster. However, these cluster device groups remain in an offline state until you access them as global devices.

Registration provides the Oracle Solaris Cluster software with information about which Oracle Solaris hosts have a path to specific volume manager disk groups. At this point, the volume manager disk groups become globally accessible within the cluster. If more than one host can write to (master) a device group, the data stored in that device group becomes highly available. The highly available device group can be used to contain cluster file systems.

Note - Device groups are independent of resource groups. One node can master a resource group (representing a group of data service processes) while another node masters the disk groups that the data services access. However, the best practice is to keep the device group that stores a particular application's data on the same node as the resource group that contains the application's resources (the application daemon). Refer to Relationship Between Resource Groups and Device Groups in Oracle Solaris Cluster Data Services Planning and Administration Guide for more information about the association between device groups and resource groups.

When a node uses a device group, the volume manager disk group becomes “global” because it provides multipath support to the underlying disks. Each cluster host that is physically attached to the multihost disks provides a path to the device group.

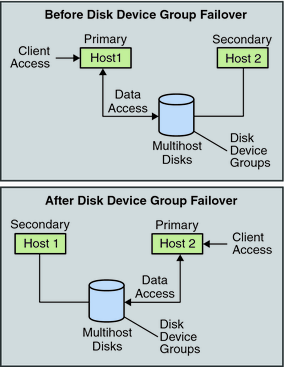

Because a disk enclosure is connected to more than one Oracle Solaris host, all device groups in that enclosure are accessible through an alternate path if the host currently mastering the device group fails. The failure of the host that is mastering the device group does not affect access to the device group except for the time it takes to perform the recovery and consistency checks. During this time, all requests are blocked (transparently to the application) until the system makes the device group available.

Figure 3-1 Device Group Before and After Failover

This section describes device group properties that enable you to balance performance and availability in a multiported disk configuration. Oracle Solaris Cluster software provides two properties for a multiported disk configuration: preferenced and numsecondaries. Use the preferenced property to control the order in which nodes attempt to assume control if a failover occurs. Use the numsecondaries property to set the desired number of secondary nodes for a device group.

A highly available service is considered down when the primary node fails and no eligible secondary node can be promoted to primary. If service failover occurs and the preferenced property is true, then the nodes follow the order in the node list to select a secondary node. The node list defines the order in which nodes attempt to assume primary control or transition from spare to secondary. You can dynamically change the preference of a device service by using the clsetup command. The preference that is associated with dependent service providers, for example a global file system, is identical to the preference of the device service.

Secondary nodes are checkpointed by the primary node during normal operation. In a multiported disk configuration, checkpointing each secondary node causes cluster performance degradation and memory overhead. Spare node support was implemented to minimize this performance degradation and memory overhead. By default, your device group has one primary and one secondary. The remaining available provider nodes become spares. If failover occurs, the secondary becomes primary and the node that is highest in priority on the node list becomes secondary.
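The role transitions described above can be illustrated with a small model. The following is a minimal sketch, not Oracle Solaris Cluster code: the `DeviceGroup` class, its field names, and the host names are hypothetical, and it assumes that the preferenced property is true, that the initial roles follow the node list order, and that a failed primary does not rejoin as a spare.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DeviceGroup:
    """Toy model of provider roles in a device group (hypothetical, not a cluster API)."""
    node_list: List[str]                 # provider nodes, highest priority first
    num_secondaries: int = 1             # desired number of secondaries (default is 1)
    primary: Optional[str] = None
    secondaries: List[str] = field(default_factory=list)
    spares: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Assumption: initial roles are assigned in node list order.
        self.primary = self.node_list[0]
        rest = self.node_list[1:]
        self.secondaries = rest[:self.num_secondaries]
        self.spares = rest[self.num_secondaries:]

    def fail_primary(self) -> None:
        """Simulate failure of the current primary node."""
        if not self.secondaries:
            # No eligible secondary can be promoted: the service is down.
            self.primary = None
            return
        # The secondary becomes the new primary.
        self.primary = self.secondaries.pop(0)
        # The highest-priority spare transitions to secondary until the
        # desired number of secondaries is reached or no spares remain.
        while self.spares and len(self.secondaries) < self.num_secondaries:
            self.secondaries.append(self.spares.pop(0))


dg = DeviceGroup(["phys-host-1", "phys-host-2", "phys-host-3", "phys-host-4"])
dg.fail_primary()
print(dg.primary, dg.secondaries, dg.spares)
```

In this model only the secondaries would need to be checkpointed, which is the cost that spare nodes are meant to reduce.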

You can set the desired number of secondary nodes to any integer between one and the number of operational nonprimary provider nodes in the device group.

Note - If you are using Solaris Volume Manager, you must create the device group before you can set the numsecondaries property to a number other than the default.

The default number of secondaries for device services is 1. The replica framework maintains the desired number of secondary providers, unless the number of operational nonprimary providers is less than the desired number. In that case, it maintains only as many secondaries as there are operational nonprimary providers. If you add nodes to or remove nodes from your configuration, you must alter the numsecondaries property and double-check the node list. Maintaining the node list and number of secondaries prevents conflict between the configured number of secondaries and the actual number that is allowed by the framework.
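The relationship between the configured and the actual number of secondaries can be written as a one-line calculation. This is an illustrative sketch only: the function name and parameters are hypothetical and are not part of any Oracle Solaris Cluster interface.

```python
def effective_secondaries(desired: int, operational_nonprimary: int) -> int:
    """Return the number of secondaries the framework could actually maintain.

    desired: the configured numsecondaries value (at least 1).
    operational_nonprimary: operational provider nodes other than the primary.
    """
    if desired < 1:
        raise ValueError("desired number of secondaries must be at least 1")
    if operational_nonprimary < 0:
        raise ValueError("operational_nonprimary cannot be negative")
    # The desired number is honored unless too few nonprimary providers are up.
    return min(desired, operational_nonprimary)


# Two secondaries requested, but only one nonprimary provider is operational.
print(effective_secondaries(2, 1))
```

Keeping numsecondaries within the number of nonprimary nodes in the node list avoids the case where the configured value can never be met.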

(Solaris Volume Manager) Use the metaset command for Solaris Volume Manager device groups, in conjunction with the preferenced and numsecondaries property settings, to manage the addition of nodes to and the removal of nodes from your configuration.

(Veritas Volume Manager) Use the cldevicegroup command for VxVM device groups, in conjunction with the preferenced and numsecondaries property settings, to manage the addition of nodes to and the removal of nodes from your configuration.

Refer to Overview of Administering Cluster File Systems in Oracle Solaris Cluster System Administration Guide for procedural information about changing device group properties.