Oracle Java CAPS Master Data Management Suite Primer (Java CAPS Documentation)
Oracle Java CAPS Master Data Management Suite Primer


The Oracle Java CAPS Master Data Management Suite (MDM Suite) is a unified product offering that provides a comprehensive MDM solution. The MDM Suite creates an integrated and consistent view of master data. It addresses the full MDM lifecycle, from extracting, cleansing, and matching data in source systems to loading the modified data into the master index database, and finally to managing and maintaining the reference data. The final step includes deduplication, merging, unmerging, auditing, and so on.

The Java CAPS MDM Suite is based on the solid foundation of the Oracle Java Composite Application Platform Suite (Java CAPS), which provides an integration platform for building and managing composite applications based on a service oriented architecture (SOA). The Java CAPS MDM Suite includes products for creating a cross-reference of records stored throughout an organization and for extracting, transforming, and loading bulk data. It also includes data quality tools, a portal and presentation layer, and a security and identity management framework. Java CAPS MDM Suite operations can be exposed as web services for complete integration with external systems.

The Java CAPS MDM Suite leverages existing applications and systems and consolidates existing information to provide a single best view of the information and improve the quality, accuracy, and availability of data across an organization. The single best view is the source of consistent, reliable, accurate, and complete data for the entire organization and, in some cases, business partners. The suite is able to create the single best view by using advanced standardization and matching methodologies along with configurable business logic to uniquely identify common records and determine whether two records represent the same entity.

The following topics provide additional information about the Java CAPS MDM Suite.

The Java CAPS MDM Suite establishes the data model, data storage, and data quality for an MDM solution, and also includes the ongoing management lifecycle to maintain the most accurate and current data and make that information available to diverse customers. Features of the Java CAPS MDM Suite include the following:

Consolidates, cleanses, deduplicates, matches, publishes, and protects the reference data integrated from fragmented data sets.

Improves the accuracy, visibility, and availability of an organization's data.

Allows rapid development of new functionality and extensions to existing functionality with a flexible and extensible framework that can handle future applications and protocols.

Creates an integrated and consistent view of master data.

Includes a rich and intuitive web-based user interface for data stewards to review and manage master data.

Synchronizes with external source systems, leveraging a rich integration platform.

Provides a unified development and monitoring environment.

Extends the single best view to partners using federated identity.

Allows for layered levels of access for privacy and security.

Leverages current applications, data, and systems. Changes to existing systems are minimal.

Delivers real-time access to master data based on defined restrictions.

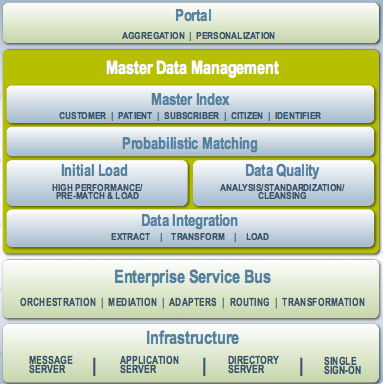

The Java CAPS MDM Suite is a subset of the Java Composite Application Platform Suite (Java CAPS). It includes an infrastructure layer, Enterprise Service Bus (ESB) layer, MDM layer, and portal layer. The infrastructure layer is the foundation for deploying the MDM applications, access and security, and database connectivity. The ESB layer provides connectivity to external systems through adapters and business process orchestration. It also performs data transformation, mapping, and routing.

The MDM layer includes the core MDM products. Java CAPS Master Index defines the data structure for the reference data, and stores and maintains the reference data on an ongoing basis. Java CAPS Data Integrator extracts legacy data from existing systems, transforms that data if necessary, and loads it into a master index database. The Java CAPS Data Quality and Load Tools profile, cleanse, standardize, match, and load the reference data. The load tool uses Java CAPS Data Integrator for its high-performance loading capabilities.

The Portal layer defines personalized content delivery for MDM data, providing access to the reference data based on the specific needs of each user or group of users.

Certain components of the Java CAPS MDM Suite are geared specifically to the needs of an MDM solution. These include Java CAPS Master Index, Java CAPS Data Integrator, and the Data Quality tools. These components provide data cleansing, profiling, loading, standardization, matching, deduplication, and stewardship to the MDM Suite.

Java CAPS Master Index provides a flexible framework for you to design and create custom single-view applications, or master indexes. A master index cleanses, matches, and cross-references business objects across an enterprise. A master index that contains the most current and accurate data about each business object is at the center of the MDM solution. Java CAPS Master Index provides a wizard that takes you through all the steps of creating a master index application. Using the wizard, you can define a custom master index with a data structure, processing logic, and matching and standardization logic that are completely geared to the type of data you are indexing. Java CAPS Master Index also provides a graphical editor so you can further customize the business logic, including matching, standardization, queries, match weight thresholds, and so on.

Java CAPS Master Index addresses the issues of dispersed data and poor quality data by uniquely identifying common records, using data cleansing and matching technology to automatically build a cross-index of the many different local identifiers that an entity might have. Applications can then use the information stored by the master index to obtain a comprehensive and current view of an entity since master index operations can be exposed as services. Java CAPS Master Index also provides the ability to monitor and maintain reference data through a customized web-based user interface called the Master Index Data Manager (MIDM).

Java CAPS Data Integrator is an extract, transform, and load (ETL) tool designed for high-performance ETL processing of bulk data between files and databases. It manages and orchestrates high-volume data transfer and transformation between a wide range of diverse data sources, including relational and non-relational data sources. Java CAPS Data Integrator is designed to process very large data sets, making it the ideal tool to use to load data from multiple systems across an organization into the master index database.

Java CAPS Data Integrator provides a wizard to guide you through the steps of creating basic and advanced ETL mappings and collaborations. It also provides options for generating a staging database and bulk loader for the legacy data that will be loaded into a master index database. These options are based on the object structure defined for the master index. The ETL Collaboration Editor allows you to easily and quickly customize the required mappings and transformations, and supports a comprehensive set of data operators. Java CAPS Data Integrator works within the MDM Suite to dramatically shorten the length of time it takes to match and load large data sets into the master index database.

By default, Java CAPS Master Index uses the Master Index Match Engine and Master Index Standardization Engine to standardize and match incoming data. Additional tools are generated directly from the master index application and use the object structure defined for the master index. These tools include the Data Profiler, Data Cleanser, and the Initial Bulk Match and Load (IBML) tool.

Master Index Standardization Engine - The standardization engine is built on a highly configurable and extensible framework to enable standardization of multiple types of data originating in various languages and countries. It performs parsing, normalization, and phonetic encoding of the data being sent to the master index or being loaded in bulk to the master index database. Parsing is the process of separating a field into individual components, such as separating a street address into a street name, house number, street type, and street direction. Normalization changes a field value to its common form, such as changing a nickname like Bob to its standard version, Robert. Phonetic encoding allows queries to account for spelling and input errors. The standardization process cleanses the data prior to matching, providing data to the match engine in a common form to help provide a more accurate match weight.
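The three standardization steps described above (parsing, normalization, and phonetic encoding) can be illustrated with a minimal sketch. This is not the Master Index Standardization Engine API: the class name, the nickname table, and the naive address rules are all hypothetical, and Soundex is used only as one well-known phonetic code, since this primer does not say which encoders the engine provides.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

/** Illustrative sketch only; this is not the Master Index Standardization Engine API. */
final class StandardizationSketch {

    // Hypothetical nickname table; real rules would come from engine configuration.
    private static final Map<String, String> NICKNAMES =
            Map.of("BOB", "ROBERT", "BILL", "WILLIAM", "LIZ", "ELIZABETH");

    // Soundex digit for each letter A..Z; '0' marks letters that are not coded.
    private static final String SOUNDEX_CODES = "01230120022455012623010202";

    /** Parsing: split a street address into components using deliberately naive rules. */
    static Map<String, String> parseStreetAddress(String address) {
        String[] tokens = address.trim().split("\\s+");
        Map<String, String> parts = new LinkedHashMap<>();
        int start = 0;
        int end = tokens.length;
        if (tokens[0].matches("\\d+")) {
            parts.put("houseNumber", tokens[start++]);
        }
        if (start < end && tokens[start].toUpperCase(Locale.ROOT).matches("N|S|E|W")) {
            parts.put("streetDirection", tokens[start++].toUpperCase(Locale.ROOT));
        }
        // Assumption: when more than one token remains, the last one is the street type.
        if (end - start > 1) {
            parts.put("streetType", tokens[--end]);
        }
        parts.put("streetName", String.join(" ", Arrays.copyOfRange(tokens, start, end)));
        return parts;
    }

    /** Normalization: map a nickname to its common form, or return the upper-cased input. */
    static String normalizeFirstName(String name) {
        String key = name.trim().toUpperCase(Locale.ROOT);
        return NICKNAMES.getOrDefault(key, key);
    }

    /** Phonetic encoding: American Soundex, so similar-sounding names share a code. */
    static String soundex(String value) {
        String letters = value.toUpperCase(Locale.ROOT).replaceAll("[^A-Z]", "");
        if (letters.isEmpty()) {
            return "";
        }
        StringBuilder code = new StringBuilder().append(letters.charAt(0));
        char previous = SOUNDEX_CODES.charAt(letters.charAt(0) - 'A');
        for (int i = 1; i < letters.length() && code.length() < 4; i++) {
            char c = letters.charAt(i);
            if (c == 'H' || c == 'W') {
                continue; // H and W do not separate letters that share a code
            }
            char digit = SOUNDEX_CODES.charAt(c - 'A');
            if (digit != '0' && digit != previous) {
                code.append(digit);
            }
            previous = digit;
        }
        while (code.length() < 4) {
            code.append('0');
        }
        return code.toString();
    }

    public static void main(String[] args) {
        System.out.println(parseStreetAddress("123 N Main St"));
        System.out.println(normalizeFirstName("Bob"));
        System.out.println(soundex("Robert") + " " + soundex("Rupert"));
    }
}
```

Because the phonetic code is computed on the normalized value, two spellings of the same name can land in the same candidate pool even when the raw input differs.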

Master Index Match Engine - The match engine provides the basis for deduplication with its record matching capabilities. The match engine compares the match fields in two records and calculates a match weight for each match field. It then totals the weights for all match fields to provide a composite match weight between records. This weight indicates how likely it is that two records represent the same entity. The Master Index Match Engine is a high-performance engine, using proven algorithms and methodologies based on research at the U.S. Census Bureau. The engine is built on an extensible and configurable framework, allowing you to customize existing comparison functions and to create and plug in custom functions.
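The idea of per-field weights that are summed into a composite weight can be sketched as follows. This primer does not give the weight formula, so the sketch assumes the classic probabilistic record-linkage formulation: each field has an m-probability (the fields agree given a true match) and a u-probability (the fields agree by chance), with an agreement weight of log2(m/u) and a disagreement weight of log2((1-m)/(1-u)). The class names, the exact-equality comparison, and the probability values are hypothetical.

```java
import java.util.List;

/** Illustrative sketch only; the formula and all values here are assumptions. */
final class MatchWeightSketch {

    /** One match field with hypothetical m- and u-probabilities. */
    static final class FieldRule {
        final String field;
        final double m; // probability the field agrees when the records truly match
        final double u; // probability the field agrees when the records do not match

        FieldRule(String field, double m, double u) {
            this.field = field;
            this.m = m;
            this.u = u;
        }

        double agreementWeight() {
            return log2(m / u);
        }

        double disagreementWeight() {
            return log2((1 - m) / (1 - u));
        }
    }

    static double log2(double x) {
        return Math.log(x) / Math.log(2.0);
    }

    /** Totals the per-field weights; fields are compared by case-insensitive equality. */
    static double compositeWeight(List<FieldRule> rules, List<String> left, List<String> right) {
        double total = 0.0;
        for (int i = 0; i < rules.size(); i++) {
            boolean agree = left.get(i).equalsIgnoreCase(right.get(i));
            FieldRule rule = rules.get(i);
            total += agree ? rule.agreementWeight() : rule.disagreementWeight();
        }
        return total;
    }

    public static void main(String[] args) {
        List<FieldRule> rules = List.of(
                new FieldRule("lastName", 0.95, 0.01),
                new FieldRule("firstName", 0.90, 0.05));
        System.out.println(compositeWeight(rules,
                List.of("SMITH", "ROBERT"), List.of("SMITH", "ROBERT")));
        System.out.println(compositeWeight(rules,
                List.of("SMITH", "ROBERT"), List.of("SMITH", "ALICE")));
    }
}
```

A real comparison function would typically award partial agreement for near-matches rather than the all-or-nothing comparison used here; that is the kind of behavior the customizable comparison functions mentioned above would supply.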

Data Profiler - When gathering data from various sources, the quality of the data sets is unknown. You need a tool to analyze, or profile, legacy data in order to determine how it needs to be cleansed prior to being loaded into the master index database. The Data Profiler uses a subset of the Data Cleanser rules to analyze the frequency of data values and patterns in bulk data, and performs a variety of frequency analyses. You can profile data prior to cleansing in order to determine how to define cleansing rules, and you can profile data after cleansing in order to fine-tune query blocking definitions, standardization rules, and matching rules.
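A minimal sketch of the two kinds of frequency analysis mentioned above, counting values and counting patterns, is shown here. It does not use the Data Profiler's rule format; the class name and the pattern notation (letters become `A`, digits become `9`) are assumptions made for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Illustrative sketch only; this is not the Data Profiler's rule syntax. */
final class ProfilerSketch {

    /** Counts how often each distinct value occurs. */
    static Map<String, Integer> valueFrequencies(List<String> values) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String value : values) {
            counts.merge(value, 1, Integer::sum);
        }
        return counts;
    }

    /** Reduces a value to its shape: letters become 'A', digits become '9', others are kept. */
    static String pattern(String value) {
        StringBuilder shape = new StringBuilder();
        for (char c : value.toCharArray()) {
            shape.append(Character.isLetter(c) ? 'A' : Character.isDigit(c) ? '9' : c);
        }
        return shape.toString();
    }

    /** Counts how often each pattern occurs, which exposes inconsistent formats. */
    static Map<String, Integer> patternFrequencies(List<String> values) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String value : values) {
            counts.merge(pattern(value), 1, Integer::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> phones = List.of("555-1234", "5551234", "555-9876", "n/a");
        System.out.println(valueFrequencies(List.of("CA", "NY", "CA")));
        System.out.println(patternFrequencies(phones));
    }
}
```

A pattern count like this makes it easy to see, for example, that most phone numbers share one format while a few use another or hold placeholder text, which in turn suggests which cleansing rules to define.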

Data Cleanser - Once you know the quality of the data to be loaded to the master index database, you can clean up data anomalies and errors as well as standardize and validate the data. The Data Cleanser validates, standardizes, and transforms bulk data prior to loading the initial data set into a master index database. The rules for the cleansing process are highly customizable and can easily be configured for specific data requirements. Any records that fail validation or are rejected can be fixed and put through the cleanser again. The output of the Data Cleanser is a file that can be used by the Data Profiler for analysis and by the IBML tool. Standardizing data using the Data Cleanser aids the matching process.
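The validate, standardize, and reject flow described above might look like the following sketch. The record layout (last name plus postal code) and both rules are hypothetical, and the real Data Cleanser reads and writes files rather than in-memory lists.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

/** Illustrative sketch only; the record layout and rules are assumptions. */
final class CleanserSketch {

    final List<String[]> accepted = new ArrayList<>();
    final List<String[]> rejected = new ArrayList<>();

    /** Each record is {lastName, postalCode}. */
    void cleanse(List<String[]> records) {
        for (String[] record : records) {
            // Standardize: trim and upper-case the name, keep only digits in the postal code.
            String lastName = record[0] == null ? "" : record[0].trim().toUpperCase(Locale.ROOT);
            String postalCode = record[1] == null ? "" : record[1].replaceAll("[^0-9]", "");

            // Validate: a name is required and the postal code must have five digits.
            if (lastName.isEmpty() || postalCode.length() != 5) {
                rejected.add(record); // kept unchanged so it can be fixed and resubmitted
            } else {
                accepted.add(new String[] {lastName, postalCode});
            }
        }
    }

    public static void main(String[] args) {
        CleanserSketch cleanser = new CleanserSketch();
        cleanser.cleanse(List.of(
                new String[] {" smith ", "90210"},
                new String[] {"", "123"}));
        System.out.println("accepted=" + cleanser.accepted.size()
                + " rejected=" + cleanser.rejected.size());
    }
}
```

Keeping rejected records in their original form mirrors the point above that failed records can be corrected and put through the cleanser again.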

Initial Bulk Match and Load Tool - Before your MDM solution can begin to cleanse data in real time, you need to seed the master index database with the data that currently exists in the systems that will share information with the master index. The IBML tool can match bulk data outside of the master index environment and then load the matched data into the master index database, greatly reducing the amount of time it would normally take to match and load bulk data. This tool is highly scalable and can handle very large volumes of data when used in a distributed computing environment. The IBML loads a complete image of processed data, including potential duplicate flags, assumed matches, and transaction information.

The Java CAPS MDM Suite is built on a platform of integration and infrastructure applications that provide connectivity, define the flow of data, handle access and security, and route and transform data.

The Java CAPS MDM Suite can be used with the Oracle applications listed below:

The Enterprise Service Bus is an integration platform based on Java technology and web services. It is a pluggable platform that incorporates the Java Business Integration (JBI) standard to allow loosely coupled components to communicate with each other through standards-based messaging. It provides core integration capabilities to the MDM Suite, including comprehensive application connectivity, guaranteed messaging, and transformation capabilities along with a unified environment for integration development, deployment, monitoring, and management.

The Business Process Manager enables long-lived, process-driven integration. It allows you to model, test, implement, monitor, manage, and optimize business processes that orchestrate the flow of activities across any number of web services, systems, people, and partners. It delivers an open, graphical modeling environment for the industry-standard business process execution language (BPEL). Java CAPS Master Index services can be called from a business process in order to share data with external systems.

Oracle Java System Access Manager is based on open standards and delivers authentication and policy-based authorization within a single, unified framework to support composite application integration. It secures the delivery of essential identity and application information on top of the Oracle Directory Server Enterprise Edition and scales with growing business needs by offering single sign-on as well as enabling federation across trusted networks of partners, suppliers, and customers.

The Oracle Directory Server Enterprise Edition (DSEE) builds a solid foundation for identity management by providing a central repository for storing and managing identity profiles, access privileges, and application and network resource information. The Oracle DSEE enables enterprise applications and large-scale extranet applications to access consistent, accurate, and reliable identity data.

The Oracle Java System Portal Server provides a user portal for collaboration with business processes and composite applications layered on top of legacy and packaged applications that are integrated using business integration components within the Java CAPS MDM Suite.

Java CAPS Adapters provide extensive support for integration with legacy applications, packaged applications, and data stores through a combination of traditional adapter technology and modern JBI and Java Connector Architecture (JCA) standards-based approach.

The GlassFish Enterprise Server is an application server that is compatible with Java Platform, Enterprise Edition (Java EE), for developing and delivering server-side applications. Once you create and configure the MDM applications, they can be deployed to the GlassFish server.

NetBeans provides a unified interface for building, testing, and deploying reusable, secure web services. The Java CAPS MDM Suite applications are created within the NetBeans project structure and include wizards and editors that are fully integrated into the NetBeans IDE.

An effective MDM implementation involves more than just creating and running the required applications. Once the applications are in place, the MDM Suite continues to cleanse and deduplicate data and makes the updated information available to external sources. The Oracle Java CAPS MDM Suite organizes the MDM lifecycle into three phases: Creation, Synchronization, and Syndication.

Creation - This phase begins with analyzing the structure of the reference data and then designing and building the master index application based on that analysis. Once the master index application is configured, the data quality tools can be generated in order to profile, cleanse, match, and load the legacy data from external systems that are part of the MDM system. This phase is iterative; the results of the profiling and match analysis steps provide you with key information to fine-tune the query, blocking, standardization, and match logic for the application. This phase also includes creating the components that will integrate the flow of data between the MDM applications and external systems. When this step is complete, the master index application is running and its operations can be exposed as web services.

Synchronization - The MDM application can propagate any reference data updates to external systems that are configured to accept such information. There are a number of methods to make this information available to external systems, including web services, Java clients, JMS Topics, business processes, and so on. Once MDM services are implemented as either passive or active services, the project can be configured to actively deliver MDM services to external systems. Synchronization keeps data in all systems current, and is an ongoing process.

Syndication - Once the MDM application is running, you can create and manage virtual views on the reference data, defining who in your organization can see what information and how that information is presented. All access to information is available as services implemented by the MDM Suite in different views. For example, your accounting department might need a different set of data than the sales department requires. Syndication removes the complexity of obtaining information from multiple sources and provides a single point of access.

In addition to the above three phases of the MDM lifecycle, the Java CAPS MDM Suite applies three operational layers to control and monitor each phase: Governance, Federation, and Analytics.

Governance - This layer provides policy enforcement, reporting, and compliance to all phases of the MDM lifecycle. The standardization and matching operations form the basis of a compliance strategy, ensuring that the reference data has been strictly verified. During runtime, the MDM application controls, executes, and audits the notifications and repair of incomplete information, revealing problems at their sources. The MDM application can also govern access to master data, and you can govern the use of MDM services at a business level rather than governing technical services.

Federation - This layer provides provisioning, authentication, and authorization to all phases of the MDM lifecycle. You can allow trusted business partners to view certain portions of your reference data using secure standards. This is done in a secure and compliant manner with federated identity and access management.

Analytics - The Java CAPS MDM Suite offers reporting, alerts, and analysis tools at all three phases to provide information about business data, including sources of quality issues, histories of deduplication, audit logs, searches, and statistics about the number and types of master data errors encountered. This is particularly important in the Creation phase, where identifying problems early can help ensure that quality issues are addressed.

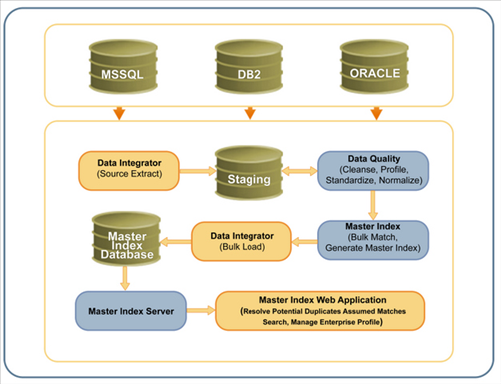

The following steps describe the general workflow for implementing the Oracle Java CAPS MDM Suite solution once you create the master index application and generate the data quality tools. These steps correspond to the diagram below.

Extract data from existing systems (Data Integrator).

Configure standardization, cleansing, and analysis rules, and then cleanse and profile the extracted data (Data Quality).

Match and load standardized data (Master Index and Data Integrator).

Deploy the MDM application to perform ongoing cleansing and deduplication (Master Index Server).

Monitor and maintain data using the data stewardship application (Master Index Web Application).

Figure 1 MDM Workflow Diagram

Below is a more detailed outline of the development steps required to create an MDM solution using the MDM Suite.

Perform a preliminary analysis of the data you plan to store in the master index application to determine the fields to include in the object structure and their attributes.

Create and configure the Oracle Java CAPS Master Index application, defining the object structure, standardization and match logic, queries, runtime characteristics, and any custom processing logic.

Create the database that will store the reference data.

Define security for the MIDM and any web services you will expose.

Generate the profiling, cleansing, and bulk match and load tools.

Extract the data from external systems that will be profiled, cleansed, and loaded into the master index database.

Analyze and cleanse the extracted data. Adjust the application configuration based on the results.

Perform a match analysis using the IBML tool. Adjust the matching logic based on the results.

Load the matched records to the master index database.

Build and deploy the MDM project.

Define connectivity to external systems using a combination of adapters, business processes, web services, Java, and JMS Topics.

Create any necessary presentation layer views.

The foundation of the Java CAPS MDM Suite lies in data standardization and matching capabilities. During runtime, both matching and standardization occur when two records are analyzed for the probability of a match. In an MDM application, the standardization and matching process includes the following steps:

The master index application receives an incoming record.

The Master Index Standardization Engine standardizes the fields specified for parsing, normalization, and phonetic encoding based on customizable rules.

The master index application queries the database for a candidate selection pool (records that are possible matches) using the customizable blocking query.

For each possible match, the master index application creates a match string based on the fields specified for matching. It sends the string to the Master Index Match Engine.

The Master Index Match Engine checks the incoming record against each possible match, producing a matching weight for each. Matching is performed using the weighting rules defined in the match configuration file.

The master index application determines how to handle the incoming record based on the match weight, matching parameters, and configurable business logic. One of the following occurs:

A new record is added with no potential duplicates.

A new record is added, but is flagged as a potential duplicate of other records.

An existing record is updated.
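The final decision step above can be sketched as a comparison of the best candidate weight against two thresholds. The threshold names, their values, and the rule that a weight at or above the upper threshold updates the existing record are assumptions; in the product this behavior is governed by matching parameters and configurable business logic.

```java
/** Illustrative sketch only; threshold names and decision rules are assumptions. */
final class MatchDecisionSketch {

    enum Outcome { ADD_NEW, ADD_NEW_FLAG_POTENTIAL_DUPLICATE, UPDATE_EXISTING }

    /**
     * Decides how to handle an incoming record from the match weights of the
     * candidates returned by the blocking query.
     */
    static Outcome decide(double[] candidateWeights, double duplicateThreshold, double matchThreshold) {
        double best = Double.NEGATIVE_INFINITY; // stays at this value when there are no candidates
        for (double weight : candidateWeights) {
            best = Math.max(best, weight);
        }
        if (best >= matchThreshold) {
            return Outcome.UPDATE_EXISTING;
        }
        if (best >= duplicateThreshold) {
            return Outcome.ADD_NEW_FLAG_POTENTIAL_DUPLICATE;
        }
        return Outcome.ADD_NEW;
    }

    public static void main(String[] args) {
        System.out.println(decide(new double[] {}, 7.0, 25.0));
        System.out.println(decide(new double[] {10.0, 3.0}, 7.0, 25.0));
        System.out.println(decide(new double[] {30.0, 12.0}, 7.0, 25.0));
    }
}
```

Two thresholds give three bands, which line up with the three outcomes listed above: weights below the lower threshold are treated as non-matches, weights between the thresholds are flagged for review by a data steward, and weights at or above the upper threshold are treated as the same entity.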