3 Hadoop Jobs: Processors and Locations

Hadoop Jobs consists of locations that store site visitor data and processors that selectively handle the portion of the data they are programmed to handle. A given processor reads one location, processes the data, and writes the results to the next location for pickup by the next processor.

This chapter contains a summary of Hadoop Jobs, guidelines for monitoring Hadoop Jobs, and descriptions of the processors and locations.

3.1 Hadoop Jobs Process Flow

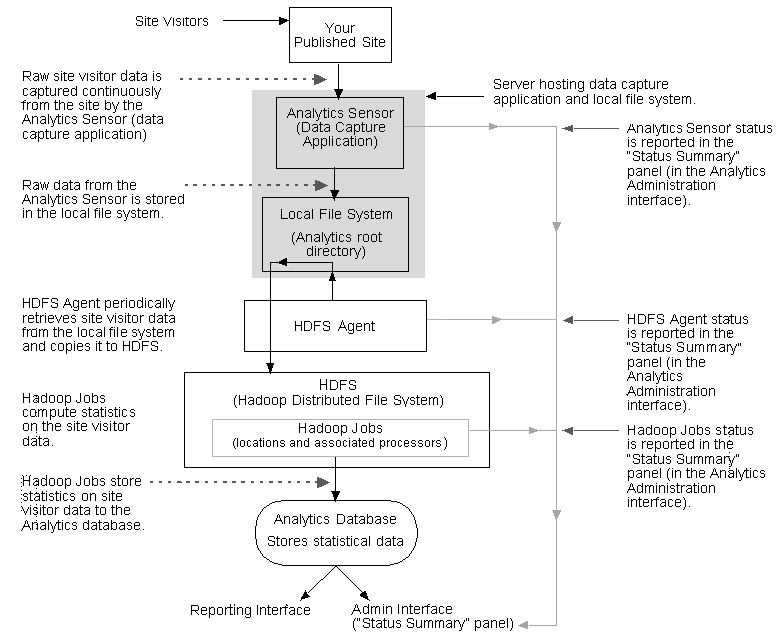

Hadoop Jobs is an Oracle application that statistically processes Analytics data and stores the results in the Analytics database.

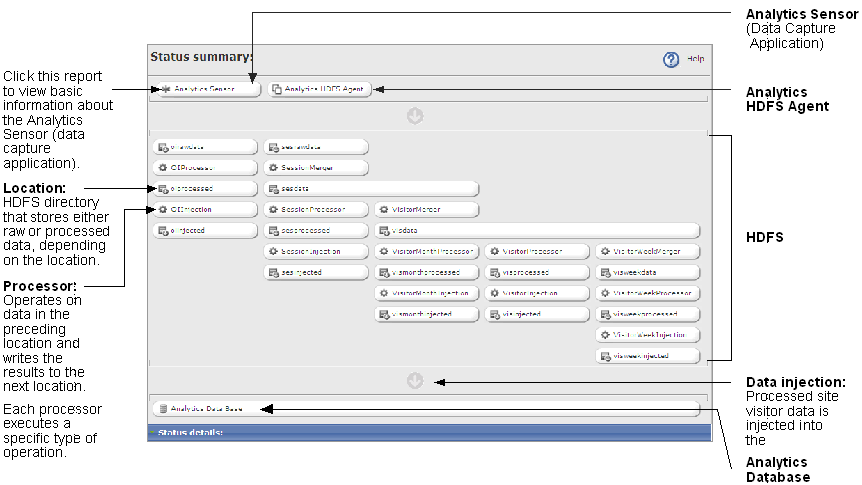

In a functional Analytics installation, raw site visitor data is continuously captured by the Analytics Sensor (Data Capture Application), which then stores the data in the local file system. The Hadoop Distributed File System (HDFS) Agent periodically picks up the raw data from the local file system and copies it to the Hadoop Distributed File System, where Hadoop jobs process the data.

Hadoop jobs consist of locations and Oracle-specific processors that read site visitor data in one location, statistically process that data, and write the results to another location for pickup by the next processor. When processing is complete, the results (statistics on the raw data) are injected into the Analytics database.

Hadoop jobs can be monitored from the Status Summary panel of the Analytics Administration interface (see Section 3.2, "Monitoring Hadoop Jobs"). Figure 3-1 depicts Hadoop Jobs process flow.

3.2 Monitoring Hadoop Jobs

Hadoop jobs can be monitored from the Status Summary panel of the Analytics Administration interface. The Status Summary panel renders an interactive flow chart which displays Hadoop job components—locations that store site visitor data captured by the Analytics Sensor (data capture application) and processors that calculate daily, weekly, and monthly sums for the stored site visitor data.

Accessing the Status Summary Panel

When working in the Analytics Administration interface, you can access the Status Summary panel by clicking the Components tab and selecting the Overview option (Figure 3-2).

Figure 3-2 Status Summary Panel (In the Components tab, the Overview option)


Each location stores different types of site visitor data. The type of site visitor data that is stored in a given location is determined by how that data is aggregated by the location's associated processor. For example, the oiprocessed location is associated with the OIProcessor (it stores the results of the OIProcessor's computation) and therefore stores data such as the number of times specific assets have been rendered during a given time interval on a given date.

- Clicking a location enables you to view the status of the location and its data.
- Clicking a processor enables you to view the status of the data processing job.
- Clicking the Analytics Sensor and HDFS Agent buttons provides a status summary of those components. For more information about monitoring the Analytics Sensor, see Section 2.1, "Sensor Overload Alerts."

3.3 Processors and Locations

This section describes the different locations that are involved in storing site visitor data, and the processors that read the data from their locations, map/reduce the data, and write the results to another location.
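As an orientation aid, the read/write relationships described in Section 3.5 can be collected into a small lookup table. The following is a sketch for reasoning about the flow, not part of the product: the `PIPELINE` dictionary and the `readers_of` helper are hypothetical names, and only the processor and location names come from this chapter.

```python
# Processor -> (input location, output location), as described in Section 3.5.
# Illustrative only: Analytics does not expose such a table. The injection
# processors additionally write their sums to the Analytics database.
PIPELINE = {
    "OIProcessor": ("oirawdata", "oiprocessed"),
    "OIInjection": ("oiprocessed", "oiinjected"),
    "SessionMerger": ("sesrawdata", "sesdata"),
    "SessionProcessor": ("sesdata", "sesprocessed"),
    "SessionInjection": ("sesprocessed", "sesinjected"),
    "VisitorMerger": ("sesdata", "visdata"),
    "VisitorProcessor": ("visdata", "visprocessed"),
    "VisitorInjection": ("visprocessed", "visinjected"),
    "VisitorMonthProcessor": ("visdata", "vismonthprocessed"),
    "VisitorMonthInjection": ("vismonthprocessed", "vismonthinjected"),
    "VisitorWeekMerger": ("visdata", "visweekdata"),
}


def readers_of(location):
    """Return the processors that read from the given location, sorted by name."""
    return sorted(p for p, (src, _dst) in PIPELINE.items() if src == location)


if __name__ == "__main__":
    print(readers_of("visdata"))
```

A lookup like this makes it easy to see that a single location (for example, visdata) can feed several processors.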

- Processors – HDFS includes several processors developed by Oracle to process Analytics data. A processor consists of two parts: a mapper and a reducer. The mapper starts with a set of object impressions (collection of raw data) and creates intermediate data (n Java beans). The intermediate data is processed by the reducer in a way that aggregates the n Java beans into one Java bean containing x occurrences of a given data type, a second Java bean containing y occurrences of a different data type, and so on. As the reducer runs, it writes the aggregated data to the next location. The output of a processor is called a work package (for more information, see Section 3.4.2, "Work Packages").

Each execution of a processor is called a job, and each job is a map/reduce job. As each job is scheduled, it is assigned a unique job-identifier.

-

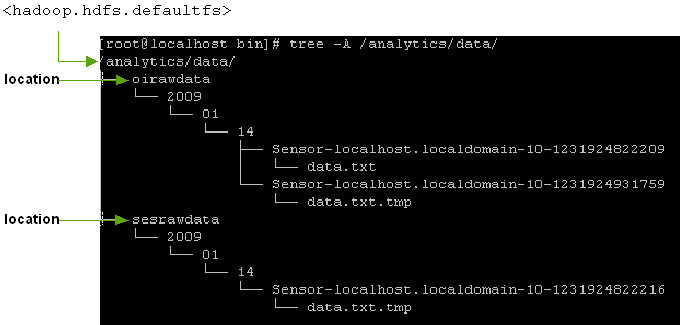

Locations – HDFS stores the site visitor data (both raw and processed) in different folders known as locations. A location is a specific folder in HDFS, which can be monitored through the Status Summary panel in the Administration interface (Figure 3-2).

A location has sub-folders that represent year, month, day, and time. These sub-folders are arranged hierarchically, as shown in Figure 3-3.

For an example, see Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data."

Each location stores site visitor data in one of the following formats, depending on the location:

- Raw data – Site visitor data in every object impression that is captured by the Analytics Sensor (data capture application).
- Processed data – Site visitor data that is processed from its raw form. Fully processed site visitor data is injected into the Analytics database for reporting purposes.
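The mapper/reducer split described for processors can be illustrated with a minimal, in-memory sketch. It is not the Oracle implementation (which runs as Hadoop map/reduce jobs on Java beans); the field names used for the object impressions, and the functions `mapper`, `reducer`, and `run_job`, are hypothetical.

```python
from collections import defaultdict


def mapper(impression):
    """Emit one ((data type, value), 1) pair per field of an object impression."""
    for field, value in impression.items():
        yield (field, value), 1


def reducer(key, counts):
    """Aggregate all counts emitted for one key into a single total."""
    return key, sum(counts)


def run_job(impressions):
    """Run the map step, group the intermediate pairs by key, then reduce."""
    grouped = defaultdict(list)
    for impression in impressions:
        for key, count in mapper(impression):
            grouped[key].append(count)
    return dict(reducer(key, counts) for key, counts in grouped.items())


if __name__ == "__main__":
    # Hypothetical object impressions; real impressions carry many more fields.
    sample = [
        {"browser": "Firefox", "os": "Linux"},
        {"browser": "Firefox", "os": "Windows"},
    ]
    print(run_job(sample))
```

The grouping step in `run_job` stands in for the shuffle that Hadoop performs between the map and reduce phases.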

The different processors that can be monitored from the Analytics Status Summary panel are listed alphabetically as follows:

The different locations that can be monitored from the Analytics Status Summary panel are listed alphabetically as follows:

3.4 Object Impressions and Work Packages

Object impressions and work packages are the main constructs of Hadoop Jobs. Object impressions are raw site visitor data that is captured as visitors browse and then processed by Hadoop Jobs in units called work packages. Results of the processing are stored to the Analytics database, where they are available on demand for the reports users generate.

This section contains the following topics:

3.4.1 Object Impressions

An object impression is a single invocation of the sensor servlet. An object impression can also be thought of as a snapshot of raw site visitor data that is captured for analysis.

An object impression contains many types of raw data on the site visitor at the moment of capture. It contains session data and visitor data including object types, object IDs, sessions, session IDs, IP addresses, operating systems used, browsers used, referrers, and so on. If Engage is installed, the raw data also includes segments and recommendations.

When site visitors browse, object impressions are collected. They are collected during a 24-hour period as work packages in the oirawdata and sesrawdata locations.

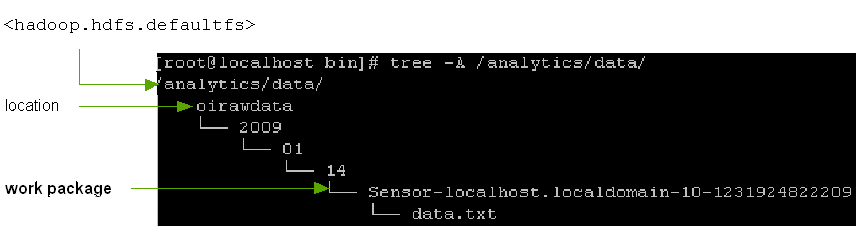

3.4.2 Work Packages

A work package is a directory within a location, as shown in Figure 3-4.

A work package stores:

- A data file containing either object impressions (raw data) or intermediate data (Java beans). The contents of the data file are statistically analyzed by a series of processors. When analysis is complete, the final processor injects results into the Analytics database for report generation.
- A metadata file. The metadata file reports the data processing status.

At least one work package exists in each location in the Hadoop Distributed File System (the number of work packages depends on the location; for an example, see Section 3.4.2.1, "Data Collection"). Each work package is positioned hierarchically in the location's directory structure, which is organized like a calendar.

The data file in a given work package is input for the processor that is associated with the location containing the work package. When the processor completes its analysis of the data file, it writes the results, as a work package, to the next location for pickup by the next processor.

Note:

The initial work package, containing newly captured object impressions, is created by the Analytics Sensor. All other work packages are created by the processors.

During data processing, neither work packages nor their contents are moved from one location to another. Instead, the appropriate processor reads and analyzes each work package's data file, and then writes the results as a work package to the next location.
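The read-then-write behavior described above can be sketched on a local file system. This is an illustration under stated assumptions, not the product's code: the function name `process_work_package`, the `metadata.json` file name and its contents, and the line-counting "analysis" are all hypothetical; only `data.txt` and the `part-<xxxxx>` naming come from this chapter.

```python
import json
import tempfile
from pathlib import Path


def process_work_package(input_package: Path, next_location: Path, package_id: str) -> Path:
    """Read data.txt from one work package and write results as a new package.

    The input package is only read; nothing is moved or deleted.
    """
    lines = (input_package / "data.txt").read_text().splitlines()
    output_package = next_location / package_id
    output_package.mkdir(parents=True)
    # Placeholder analysis: count the lines in the input data file.
    (output_package / "part-00000").write_text(f"lines={len(lines)}\n")
    # Hypothetical metadata file name and format; the real ones are not given here.
    (output_package / "metadata.json").write_text(json.dumps({"status": "done"}))
    return output_package


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "oirawdata" / "package-1"
        source.mkdir(parents=True)
        (source / "data.txt").write_text("impression 1\nimpression 2\n")
        result = process_work_package(source, Path(tmp) / "oiprocessed", "package-out")
        print(sorted(p.name for p in result.iterdir()))
```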

This section contains the following topics:

- Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data"
- Section 3.4.2.4, "Directory Structure for Daily Work Packages"
- Section 3.4.2.5, "Directory Structure for Weekly Work Packages"
- Section 3.4.2.6, "Directory Structure for Monthly Work Packages"

3.4.2.1 Data Collection

Object impressions are collected as work packages for a 24-hour period into two locations, simultaneously—oirawdata and sesrawdata. All work packages in the two locations contain a data file named data.txt. The locations (and their work packages) differ as follows:

- The oirawdata location collects object impressions at fixed intervals during a 24-hour period; each interval has its own work package. The interval is specified by the sensor.thresholdtime property in the sensor's global.xml file. For example, if sensor.thresholdtime is set to 4 hours, then six work packages will have been collected in the oirawdata location at the end of 24 hours. All six packages are stamped with the creation time, and they all contain a data.txt file.
- The sesrawdata location collects object impressions continuously as a single work package during a 24-hour period. The work package is stamped with its creation time and contains a data.txt file.

Any one of the work packages in the oirawdata location contains only a portion of the day's raw data. A work package in the sesrawdata location contains the complete set of the day's raw data. In both locations, each work package is analyzed as soon as it is complete and computational resources are available.
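The relationship between the collection interval and the number of oirawdata work packages is simple arithmetic, sketched below. The function name is hypothetical, and the handling of intervals that do not divide 24 evenly (one extra, shorter package) is an assumption that this chapter does not confirm.

```python
def packages_per_day(threshold_hours: float) -> int:
    """Estimate how many oirawdata work packages are collected in 24 hours."""
    if threshold_hours <= 0:
        raise ValueError("threshold must be positive")
    full, remainder = divmod(24, threshold_hours)
    # Assumption: a leftover partial interval still produces one work package.
    return int(full) + (1 if remainder else 0)


if __name__ == "__main__":
    # A 4-hour interval yields six work packages, as in the example above.
    print(packages_per_day(4))
```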

3.4.2.2 Processed Data

All work packages are collected for a 24-hour period (see Section 3.4.2.1, "Data Collection"). The work packages are processed on a daily basis. For visitor data, additional work packages are created to represent weekly and monthly statistics. The work package directory structure for weekly and monthly processing differs from the directory structures for daily processing and data collection.

Note:

Analytics administrators can obtain the directory structures of locations and paths to work packages from the HDFS file browser:

http://<hostname_MasterNode>:50070/

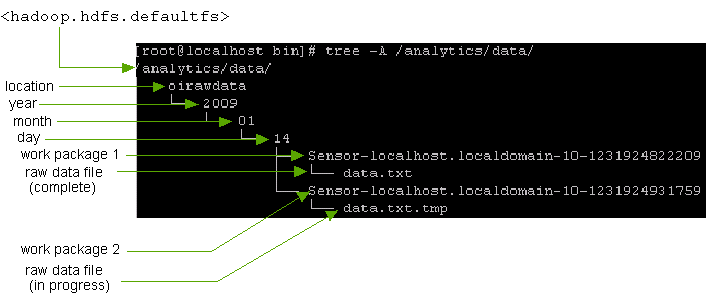

3.4.2.3 Directory Structure for Raw Data and oiprocessed Data

Work packages that contain raw data and oiprocessed data are stored in directories with a structure that identifies the day and time that the work packages were created. The following locations use a day-time directory structure: oirawdata, sesrawdata, and oiprocessed (an exception, as this location contains processed data).

The path to a raw data or oiprocessed work package is the following:

/<hadoop.hdfs.defaultfs>/<location>/<yyyy>/<mm>/<dd>/<workpackageDir>-<n>-<time>/data.txt (or part-<xxxxx>)

For example:

/analytics/data/oirawdata/2009/01/14/Sensor-localhost.localdomain-10-1231924822209/data.txt

The variables are defined as follows:

- <hadoop.hdfs.defaultfs> is the location of the root directory under which raw data, output, and cache files are stored on the Hadoop file system.
- <location> is the name of the location that stores the raw data work package(s). Valid values for <location> are the following:
  - oirawdata collects data into multiple work packages during a 24-hour period
  - sesrawdata collects data into a single work package during a 24-hour period
  - oiprocessed (although this location contains processed data)

  (For a list of locations and descriptions, see Section 3.3, "Processors and Locations.")
- <yyyy> is the year in which the work package was created.
- <mm> is the month in which the work package was created.
- <dd> is the day on which the work package was created. The day is determined from the site's time zone.
- <workpackageDir> is the sensor name (the IP address or host name of the data capture server).
- <n> is a system-generated number.
- <time> is the creation time of the work package, computed in milliseconds elapsed since January 1, 1970.
- data.txt is the file that contains object impressions. Raw data in the object impressions will be statistically analyzed by the processor that reads the file. Data.txt files are stored in the oirawdata location and in the sesrawdata location, as explained in Section 3.4.2.1, "Data Collection."

  Note that all raw data files are named data.txt. The <time> stamp in the work package directory containing the data file uniquely identifies the data file.
- <part-xxxxx> is the name of the work package in the oiprocessed location.

Figure 3-5 illustrates the directory structure of the oirawdata location. The first data.txt file that was created in the oirawdata location on January 14, 2009 is stored as shown in Figure 3-5:

/analytics/data/oirawdata/2009/01/14/Sensor-localhost.localdomain-10-1231924822209/data.txt

The second data file, data.txt.temp, is in progress. (At the end of the collection interval, the file will be complete and will take the name data.txt.) The file is stored as shown in Figure 3-5.
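To make the path variables concrete, the following sketch splits the example path into its documented components and decodes the <time> stamp. The function name `parse_raw_path` and the returned dictionary keys are hypothetical. The timestamp is converted to UTC purely for display; the <dd> directory itself is determined from the site's time zone, so the two can differ near midnight.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def parse_raw_path(path: str) -> dict:
    """Split a raw-data work package path into its documented components."""
    parts = path.strip("/").split("/")
    # Everything before <location> is treated as the HDFS root directory.
    *root, location, yyyy, mm, dd, package, filename = parts
    # The sensor name may itself contain hyphens, so split from the right.
    sensor, n, millis = package.rsplit("-", 2)
    return {
        "root": "/" + "/".join(root),
        "location": location,
        "day": (int(yyyy), int(mm), int(dd)),
        "sensor": sensor,
        "n": int(n),
        # <time> is milliseconds elapsed since January 1, 1970.
        "created_utc": EPOCH + timedelta(milliseconds=int(millis)),
        "file": filename,
    }


if __name__ == "__main__":
    example = ("/analytics/data/oirawdata/2009/01/14/"
               "Sensor-localhost.localdomain-10-1231924822209/data.txt")
    for key, value in parse_raw_path(example).items():
        print(key, "=", value)
```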

3.4.2.4 Directory Structure for Daily Work Packages

When a raw data work package is complete, the associated processor statistically analyzes the work package's data.txt file and writes the results to the work package in the next location for pickup by the next processor.

Work packages that contain daily statistics are stored in directories with a structure that identifies the day on which the work package was created. The following locations use a day-based directory structure: oiinjected, sesdata, sesprocessed, sesinjected, visdata, visprocessed, and visinjected.

The path to a daily work package is the following:

/<hadoop.hdfs.defaultfs>/<location>/<yyyy>/<mm>/<dd>/<workpackageID>/part-<xxxxx>

For example:

/analytics/sesprocessed/2009/06/25/181bd6cd-c040-46a2-abb4/part-00000

The variables are defined as follows:

- <location> is the name of the location that stores the daily work package(s). Valid values for <location> are the following: oiinjected, sesdata, sesprocessed, sesinjected, visdata, visprocessed, and visinjected.
- <workpackageID> is a system-generated number used to identify the work package.
- The remaining variables are defined in Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data."
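The daily path pattern can be assembled mechanically, as the following sketch shows. The helper name `daily_package_path` is hypothetical, and the five-digit zero padding of the part number is inferred from the `part-00000` example rather than stated in this chapter.

```python
from datetime import date

# Locations that use the day-based directory structure (from this section).
DAILY_LOCATIONS = {
    "oiinjected", "sesdata", "sesprocessed", "sesinjected",
    "visdata", "visprocessed", "visinjected",
}


def daily_package_path(root: str, location: str, day: date,
                       package_id: str, part: int = 0) -> str:
    """Build the path to a data file in a daily work package."""
    if location not in DAILY_LOCATIONS:
        raise ValueError(f"{location} does not use the daily layout")
    # Assumption: part numbers are zero-padded to five digits, as in part-00000.
    return (f"{root.rstrip('/')}/{location}/{day:%Y/%m/%d}/"
            f"{package_id}/part-{part:05d}")


if __name__ == "__main__":
    print(daily_package_path("/analytics", "sesprocessed",
                             date(2009, 6, 25), "181bd6cd-c040-46a2-abb4"))
```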

3.4.2.5 Directory Structure for Weekly Work Packages

Work packages that are processed for weekly statistics are stored in directories with a structure that identifies the ISO week in which the work package was stored. The following locations use a week-based directory structure: visweekdata, visweekprocessed, visweekinjected.

The path to a weekly work package is the following:

- visweekdata

  /<hadoop.hdfs.defaultfs>/visweekdata/<yyyy>/W<no.>/<yyyy>/<mm>/<dd>/<workpackageID>/part-<xxxxx>

  For example:

  /analytics/visweekdata/2009/W26/2009/06/25/1db1039-0b10-417d-9895/part-00000

  The variables are defined as follows:

  - W<no.> represents the number of the week in the given year.
  - <workpackageID> is a system-generated number.
  - The remaining variables are defined in Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data."

- visweekprocessed and visweekinjected

  /<hadoop.hdfs.defaultfs>/<location>/<yyyy>/W<no.>/<workpackageID>/part-<xxxxx>

  For example:

  /analytics/visweekprocessed/2009/W26/9fe7607b-31b1-417d-9895/part-00000

  The variables are defined as for visweekdata.
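The ISO week segment of a weekly path can be derived from a calendar date with the standard library, as sketched below. The function names are hypothetical. Two details are assumptions not confirmed by this chapter: that the first <yyyy> is the ISO week-numbering year (which can differ from the calendar year around January 1), and that week numbers are zero-padded to two digits.

```python
from datetime import date


def week_segment(day: date) -> str:
    """Return the '<yyyy>/W<no.>' segment for the ISO week containing day."""
    iso_year, iso_week, _weekday = day.isocalendar()
    # Assumptions: ISO year is used, and the week number is two digits wide.
    return f"{iso_year}/W{iso_week:02d}"


def visweekdata_path(root: str, day: date, package_id: str, part: int = 0) -> str:
    """Path to a data file in visweekdata (week folder, then day folders)."""
    return (f"{root.rstrip('/')}/visweekdata/{week_segment(day)}/"
            f"{day:%Y/%m/%d}/{package_id}/part-{part:05d}")


def weekly_result_path(root: str, location: str, day: date,
                       package_id: str, part: int = 0) -> str:
    """Path to a data file in visweekprocessed or visweekinjected."""
    return (f"{root.rstrip('/')}/{location}/{week_segment(day)}/"
            f"{package_id}/part-{part:05d}")


if __name__ == "__main__":
    print(visweekdata_path("/analytics", date(2009, 6, 25), "1db1039-0b10-417d-9895"))
```

June 25, 2009 falls in ISO week 26, which matches the W26 folder in the examples above.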

3.4.2.6 Directory Structure for Monthly Work Packages

Work packages that are processed for monthly statistics are stored in directories with a structure that identifies the month in which the work package was stored. The following locations use a month-based directory structure: vismonthprocessed and vismonthinjected.

The path to a monthly work package is the following:

/<hadoop.hdfs.defaultfs>/<location>/<yyyy>/<mm>/<workpackageID>/part-<xxxxx>

For example:

/analytics/vismonthprocessed/2009/06/c3b9ex84-0417-4b6f-9e38/part-00000

The variables are defined as follows:

- <workpackageID> is a system-generated number used to identify the work package.
- The remaining variables are defined in Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data."
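Sections 3.4.2.3 through 3.4.2.6 assign each location to one of four directory layouts. The following sketch collects those assignments in one place; the layout labels and the `layout_of` helper are hypothetical names chosen for illustration, while the location names are taken from those sections.

```python
# Layout label -> locations that use it, per Sections 3.4.2.3 to 3.4.2.6.
LAYOUTS = {
    "day-time": {"oirawdata", "sesrawdata", "oiprocessed"},
    "daily": {"oiinjected", "sesdata", "sesprocessed", "sesinjected",
              "visdata", "visprocessed", "visinjected"},
    "weekly": {"visweekdata", "visweekprocessed", "visweekinjected"},
    "monthly": {"vismonthprocessed", "vismonthinjected"},
}


def layout_of(location: str) -> str:
    """Return the directory layout label used by a location."""
    for layout, locations in LAYOUTS.items():
        if location in locations:
            return layout
    raise KeyError(f"unknown location: {location}")


if __name__ == "__main__":
    for name in ("oiprocessed", "visdata", "visweekdata", "vismonthinjected"):
        print(name, "->", layout_of(name))
```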

3.5 Processor Descriptions

Analytics supports three types of processors. They analyze the same object impressions, collected within a 24-hour period, but they perform their computations differently.

This section contains the following topics:

3.5.1 Object Impression Processors

Object Impression Processors analyze object impressions directly, by computing the frequency of occurrence of each type of data within the object impressions.

This section contains the following topics:

3.5.1.1 OIProcessor

Output: Intermediate daily sums. This processor reads each work package that is created in the oirawdata location and computes an intermediate daily sum (that is, frequency of occurrence) for all types of data within the object impression.

Daily sums are called intermediate when they are computed for a work package containing less than 24 hours of data. Work packages are collected into the oirawdata location throughout the day, at the interval specified by the sensor.thresholdtime property in the sensor's global.xml file (for example, every 4 hours). Each work package then holds data that was collected for the specified interval—4 hours, in our example. At the end of 24 hours, six work packages will have been collected in the oirawdata location.

Details of the computation process are described below:

- When a work package collected in the oirawdata location is complete, OIProcessor reads the data file in the work package and counts (that is, sums, aggregates) the number of occurrences of each selected type of raw data in the work package. Thus, a work package has a set of intermediate daily sums, one for each selected type of raw data in the package. (If six work packages are collected and processed over a 24-hour period, then each work package has its own set of intermediate daily sums.)
- OIProcessor writes the intermediate daily sums for each work package to the oiprocessed location for pickup by the OIInjection processor.

  Intermediate daily sums written to the oiprocessed location are counted (that is, summed, aggregated) by the OIInjection processor and injected into the Analytics database. The sum of intermediate daily sums for a given type of raw data is the grand total for the day for that type of raw data; it is called the complete daily sum, or aggregated daily sum.

Note:

The WebCenter Sites database and Analytics database are not synchronized. Therefore, Analytics creates an L2ObjectBean object for each unique object impression. The L2ObjectBean saves the object impression's name (title) and object (asset) id in the L2_Object table of the Analytics database.
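The distinction between intermediate and complete daily sums can be shown with a small worked example. This is a sketch only: the function names and the sample keys (such as "asset:1") are hypothetical, and plain in-memory counters stand in for the Java beans that the processors actually exchange.

```python
from collections import Counter


def intermediate_sums(work_package_lines):
    """Count occurrences per data item in one work package (OIProcessor's role)."""
    return Counter(work_package_lines)


def complete_daily_sums(all_intermediate):
    """Add up the intermediate sums of all packages (OIInjection's role)."""
    total = Counter()
    for sums in all_intermediate:
        total.update(sums)
    return total


if __name__ == "__main__":
    # Two hypothetical work packages collected on the same day.
    packages = [
        ["asset:1", "asset:2", "asset:1"],
        ["asset:1"],
    ]
    per_package = [intermediate_sums(lines) for lines in packages]
    print(per_package)
    print(complete_daily_sums(per_package))
```

Each work package gets its own set of counts, and the complete daily sum for an item is the total of that item's counts across all packages for the day.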

3.5.1.1.1 Input Location for OIProcessor

oirawdata – Stores the current day's data.txt file (and a metadata file). More information about data.txt can be found in Section 3.4.2.1, "Data Collection."

Table 3-1 Input Location for OIProcessor

| oirawdata | Description |
|---|---|
| Directory structure | See Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data." |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | The Every 10 minutes (or the time interval that is explicitly set for the Every object impression captured by the Analytics Sensor results in one line of data in the work package. The |
| Work package used by | This processor. |

3.5.1.1.2 Output Location for OIProcessor

oiprocessed – Stores the work packages of this processor. Each work package contains a data file with intermediate daily sums for the work package, that is, the frequency of occurrence of each type of data that was collected into the work package. (Each work package also contains a metadata file.)

Table 3-2 Output Location for OIProcessor

| oiprocessed | Description |
|---|---|
| Directory structure | See Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data." |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | OIInjection processor. |

3.5.1.2 OIInjection

Output: Complete daily sums for specific types of data (that is, the frequency of occurrence of each type of data that was collected during the last 24 hours). Injection status report.

- This processor reads the intermediate daily sums in the data files of work packages in the oiprocessed location, and counts (that is, sums, aggregates) the intermediate daily sums. The result is a grand total: a complete daily sum for each type of data that was collected during the last 24-hour period.
- This processor injects the complete daily sums into various tables in the Analytics database, and creates a status report in the oiinjected location. (For information about intermediate daily sums, see OIProcessor.)

Data injected into the database is retrieved into the reports that Analytics users generate.

3.5.1.2.2 Output Locations for OIInjection Processor

Analytics database – Stores the output of this processor. The output is complete daily sums (that is, the frequency of occurrence of each type of data that was collected during the last 24 hours).

oiinjected – Stores the work package created by this processor. Note that the work package does not contain a data file. It contains only the metadata file indicating the status of the injection process.

Table 3-3 Output Locations for OIInjection Processor

| oiinjected | Description |
|---|---|
| Directory structure | See Section 3.4.2.4, "Directory Structure for Daily Work Packages." |
| Work package | |
| Work package data file | No data file is created in this work package. The metadata file |
| Data source | This processor. |
| Work package used by | None of the processors. Administrators can open the HDFS file browser to view the metadata file (injection status report). |

3.5.2 Session Data Processors

Session Data Processors analyze session objects derived from the object impressions.

This section contains the following topics:

3.5.2.1 SessionMerger

Output: Session objects for the last 24 hours of session data (that is, aggregated object impressions grouped by their respective sessions and stored in the sesdata location).

This processor reads the object impressions in the data file of the work package in the sesrawdata location. It takes session data from the object impressions and combines the data to create a session object for each entire session. The session object contains all the information that relates to the specific session. In this manner, SessionMerger aggregates all object impressions collected during a 24-hour period into their respective sessions. This processor writes the aggregated data (as a work package) to the sesdata location (for pickup by the SessionProcessor).
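The grouping that SessionMerger performs can be illustrated with a minimal sketch. It is not the product's implementation: the `session_id` and `asset` keys and the `merge_sessions` function are hypothetical, and a plain dictionary stands in for the session object.

```python
from collections import defaultdict


def merge_sessions(impressions):
    """Group object impressions by session, preserving their original order."""
    sessions = defaultdict(list)
    for impression in impressions:
        sessions[impression["session_id"]].append(impression)
    return dict(sessions)


if __name__ == "__main__":
    # Hypothetical object impressions collected during one day.
    day = [
        {"session_id": "s1", "asset": "home"},
        {"session_id": "s2", "asset": "home"},
        {"session_id": "s1", "asset": "article-7"},
    ]
    for session_id, items in merge_sessions(day).items():
        print(session_id, [item["asset"] for item in items])
```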

3.5.2.1.1 Input Location for SessionMerger Processor

sesrawdata – Stores the current day's data.txt file (and a metadata file). More information about data.txt can be found in Section 3.4.2.1, "Data Collection."

Table 3-4 Input Location for SessionMerger Processor

| sesrawdata | Description |
|---|---|
| Directory structure | See Section 3.4.2.3, "Directory Structure for Raw Data and oiprocessed Data." (Contains one work package for each calendar day.) |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | Analytics Sensor. The Analytics Sensor creates a new work package every 24 hours. (A work package contains the 24-hour interval of collected raw data, which is the input for the |
| Work package used by | |

3.5.2.1.2 Output Location for SessionMerger Processor

sesdata – Stores the work package of the SessionMerger processor. The work package's data file contains session objects for the last 24 hours of session data (that is, aggregated object impressions grouped by their respective sessions). (The work package's metadata file contains the data processing status report.)

Table 3-5 Output Location for SessionMerger Processor

| sesdata | Description |
|---|---|
| Directory structure | See Section 3.4.2.4, "Directory Structure for Daily Work Packages." (Contains one work package for each calendar day.) |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | |

3.5.2.2 SessionProcessor

Output: Complete daily sums of session data (that is, frequency of occurrence of each type of data across sessions that ran during the last 24 hours).

This processor reads the session objects in the data file of the work package in the sesdata location, computes complete daily sums, and writes the results (as a work package) to the sesprocessed location for pickup by the SessionInjection processor.

3.5.2.2.1 Input Location for SessionProcessor

See Section 3.5.2.1.2, "Output Location for SessionMerger Processor."

3.5.2.2.2 Output Location for SessionProcessor

sesprocessed – Stores the work package created by this processor. The work package's data file contains complete daily sums of session data (that is, the frequency of occurrence of each type of data across sessions that ran in the last 24 hours). (The work package's metadata file contains the data processing status report.)

Table 3-6 Output Location for SessionProcessor

| sesprocessed | Description |
|---|---|
| Directory structure | See Section 3.4.2.4, "Directory Structure for Daily Work Packages." (Contains one work package for each calendar day.) |
| Work package | |
| Work package data file | Contains beans of all |
| Data source | This processor. |
| Work package used by | SessionInjection processor. |

3.5.2.3 SessionInjection

Output: Injection status report.

This processor reads the complete daily sums in the data file of the work package in the sesprocessed location, injects the complete daily sums into various tables in the Analytics database, and creates a status report in the sesinjected location. Data injected into the database is retrieved into the reports that Analytics users generate.

3.5.2.3.1 Input Location for SessionInjection Processor.

See Section 3.5.2.2.2, "Output Location for SessionProcessor."

3.5.2.3.2 Output Locations for SessionInjection Processor

Analytics database – Stores complete daily sums of session data (that is, frequency of occurrence of each type of data across sessions that ran in the last 24 hours).

sesinjected – Stores the work package created by this processor. Note that the work package does not contain a data file. It contains only the metadata file indicating the status of the injection process.

Table 3-7 Output Locations for SessionInjection Processor

| sesinjected | Description |
|---|---|
| Directory structure | See Section 3.4.2.4, "Directory Structure for Daily Work Packages." (Contains one work package for each calendar day.) |
| Work package | |
| Work package data file | No data file is created in this work package. The metadata file |
| Data source | This processor. |
| Work package used by | None of the processors. Administrators can open the HDFS file browser to view the metadata file (injection status report). |

3.5.3 Visitor Data Processors

Visitor data identifies site visitors (for example, by their IP addresses). Visitor data includes the segments visitors belong to and the recommendations associated with those segments.

This section contains the following topics:

3.5.3.1 VisitorMerger

Output: Raw site visitor data.

This processor reads the visitor-specific data (such as segments and recommendations) from the data file in the work package of the sesdata location. It writes that visitor data (as a work package) to the visdata location, in raw format (not aggregated) in order to save all visitor IDs. The visitor data is not aggregated by this processor because it must be used in its raw form by other visitor data processors to compute daily, weekly, and monthly sums.

3.5.3.1.2 Output Location for VisitorMerger Processor

visdata – Stores the work package created by this processor. The work package contains a data file with site visitor data in raw format. (The work package's metadata file contains the data processing status report.)

Table 3-8 Output Location for VisitorMerger Processor

| visdata | Description |
|---|---|
| Directory structure | |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | VisitorWeekMerger processor |

3.5.3.2 VisitorMonthProcessor

Output: Complete monthly sums for visitor data (that is, the frequency of occurrence of each type of visitor data that was collected during the last month).

This processor reads the raw visitor data in the data file of the work package in the visdata location and computes monthly sums. It writes the monthly sums (as a work package) to the vismonthprocessed location for pickup by the VisitorMonthInjection processor.

3.5.3.2.2 Output Location for VisitorMonthProcessor

vismonthprocessed – Stores the work package created by this processor. The work package's data file contains complete monthly sums for visitor data. (The work package's metadata file contains the data processing status report.)

Table 3-9 Output Location for VisitorMonthProcessor

| vismonthprocessed | Description |
|---|---|
| Directory structure | See Section 3.4.2.6, "Directory Structure for Monthly Work Packages." |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | VisitorMonthInjection processor. |

3.5.3.3 VisitorMonthInjection

Output: Injection status report.

This processor reads the complete monthly sums in the data file of the work package in the vismonthprocessed location, injects the complete monthly sums into the Analytics database, and creates a status report in the vismonthinjected location. Data injected into the database is retrieved into the reports that Analytics users generate.

3.5.3.3.1 Input Location for VisitorMonthInjection Processor.

See vismonthprocessed (Output Location for VisitorMonthProcessor).

3.5.3.3.2 Output Locations for VisitorMonthInjection Processor

Analytics database – Stores the data from this processor's input location.

vismonthinjected – Stores the work package created by this processor. Note that the work package does not contain a data file. It contains only the metadata file indicating the status of the injection process.

Table 3-10 Output Locations for VisitorMonthInjection Processor

| vismonthinjected | Description |
|---|---|
| Directory structure | See Section 3.4.2.6, "Directory Structure for Monthly Work Packages." |
| Work package | |
| Work package data file | No data file is created in this work package. The metadata file |
| Data source | This processor. |
| Work package used by | None of the processors. Administrators can open the HDFS file browser to view the metadata file (injection status report). |

3.5.3.4 VisitorProcessor

Output: Complete daily sums for visitor data (that is, the frequency of occurrence of each type of visitor data that was collected during the last 24 hours).

This processor reads the raw visitor data in the data file of the work package in the visdata location. It then computes complete daily sums and writes the complete daily sums (as a work package) to the visprocessed location for pickup by the VisitorInjection processor.

3.5.3.4.2 Output Location for VisitorProcessor

visprocessed – Stores the work package created by this processor. The work package's data file contains complete daily sums for visitor data. (The work package's metadata file contains the data processing status report.)

Table 3-11 Output Location for VisitorProcessor

| visprocessed | Description |
|---|---|
| Directory structure | See Section 3.4.2.4, "Directory Structure for Daily Work Packages." |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | VisitorInjection processor. |

3.5.3.5 VisitorInjection

Output: Injection status report.

This processor reads the complete daily sums in the data file of the work package in the visprocessed location, injects the complete daily sums into various tables in the Analytics database, and creates a status report in the visinjected location. Data injected into the database is retrieved into the reports that Analytics users generate.
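The injection step described above could be sketched as follows. This is a rough illustration and not the Analytics implementation: the table name `visitor_daily_sums`, its columns, the in-memory SQLite database, and the shape of the status report are all assumptions made for the example.

```python
import sqlite3


def inject_daily_sums(conn, day, sums):
    """Insert sums into a hypothetical table and return a status report dict."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS visitor_daily_sums "
        "(day TEXT, kind TEXT, value TEXT, total INTEGER)"
    )
    rows = [(day, kind, value, total) for (kind, value), total in sums.items()]
    try:
        with conn:  # commits on success, rolls back if an insert fails
            conn.executemany(
                "INSERT INTO visitor_daily_sums VALUES (?, ?, ?, ?)", rows
            )
        return {"day": day, "status": "injected", "rows": len(rows)}
    except sqlite3.Error as exc:
        return {"day": day, "status": "failed", "error": str(exc)}


# An in-memory database stands in for the Analytics database.
conn = sqlite3.connect(":memory:")
report = inject_daily_sums(
    conn, "2011-03-14", {("browser", "Firefox"): 2, ("os", "Linux"): 1}
)
```

Note that the function returns only a status report and no data, mirroring the way the injected location holds a metadata file but no data file.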

3.5.3.5.2 Output Locations for VisitorInjection Processor

Analytics database: Stores the data from this processor's input location.

visinjected: Stores the work package created by this processor. Note that the work package does not contain a data file. It contains only the metadata file indicating the status of the injection process.

Table 3-12 Output Locations for VisitorInjection Processor

| visinjected | Description |
|---|---|
| Directory structure | See Section 3.4.2.4, "Directory Structure for Daily Work Packages." |
| Work package | |
| Work package data file | No data file is created in this work package. The metadata file |
| Data source | This processor. |
| Work package used by | None of the processors. Administrators can open the HDFS file browser to view the metadata file (injection status report). |

3.5.3.6 VisitorWeekMerger

Output: Raw site visitor data from the visdata location merged into a weekly folder.

This processor reads the raw visitor data in the data file of the work package in the visdata location. It merges the raw site visitor data into the appropriate ISO-week directory (in the processor's work package). This processor does not modify the data. This processor then writes its work package to the visweekdata location.
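A minimal sketch of grouping daily data by ISO week, assuming each daily work package can be represented as a date plus a list of records. The key format `2011-W11` and the function names are hypothetical; the actual directory layout is the one defined in Section 3.4.2.5.

```python
from collections import defaultdict
from datetime import date


def iso_week_key(day):
    """Return a hypothetical week label such as '2011-W11' for a date."""
    iso_year, iso_week, _ = day.isocalendar()
    return f"{iso_year}-W{iso_week:02d}"


def merge_by_week(daily_packages):
    """Group daily record lists under ISO-week keys; records are not modified."""
    weekly = defaultdict(list)
    for day, records in sorted(daily_packages.items()):
        weekly[iso_week_key(day)].extend(records)
    return dict(weekly)


daily = {
    date(2011, 3, 14): ["visit-a", "visit-b"],  # Monday
    date(2011, 3, 20): ["visit-c"],             # Sunday of the same ISO week
    date(2011, 3, 21): ["visit-d"],             # Monday of the next ISO week
}
weekly = merge_by_week(daily)
```

The records pass through unchanged; only their grouping differs, which matches the statement that the merger does not modify the data.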

3.5.3.6.2 Output Location for VisitorWeekMerger Processor

visweekdata: Stores the work package created by this processor. The work package's data file contains raw site visitor data (from the visdata location) merged into a weekly directory. (The work package's metadata file contains the data processing status report.)

Table 3-13 Output Location for VisitorWeekMerger Processor

| visweekdata | Description |
|---|---|
| Directory structure | See Section 3.4.2.5, "Directory Structure for Weekly Work Packages." |
| Work package | |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | |

3.5.3.7 VisitorWeekProcessor

Output: Complete weekly sums for site visitor data (that is, the frequency of occurrence of each type of visitor data that was captured in the last week).

This processor reads the weekly raw data in the data file of the work package in the visweekdata location. It computes weekly sums, and writes the weekly sums (as a work package) to the visweekprocessed location.

3.5.3.7.1 Input Location for VisitorWeekProcessor

See visweekdata (Output Location for VisitorWeekMerger Processor).

3.5.3.7.2 Output Location for VisitorWeekProcessor

visweekprocessed: Stores the work package created by this processor. The work package's data file contains weekly sums for site visitor data (that is, the frequency of occurrence of each type of site visitor data that was captured during the last week). (The work package's metadata file contains the data processing status report.)

Table 3-14 Output Location for VisitorWeekProcessor

| visweekprocessed | Description |
|---|---|
| Directory structure | See Section 3.4.2.5, "Directory Structure for Weekly Work Packages." |
| Work package | Note: If the work package contains visitor data for the last week of the year, it will also contain visitor data for the new year if the week runs over to the new year. |
| Work package data file | Contains beans of type |
| Data source | This processor. |
| Work package used by | VisitorWeekInjection processor. |
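The note in Table 3-14 about the last week of the year can be checked with a short calculation, assuming the weekly work packages follow ISO-8601 week numbering (as the ISO-week directories of the VisitorWeekMerger suggest). The helper name `days_in_iso_week` is hypothetical, and `date.fromisocalendar` requires Python 3.8 or later.

```python
from datetime import date


def days_in_iso_week(iso_year, iso_week):
    """Return the seven calendar dates (Monday to Sunday) of an ISO week."""
    return [date.fromisocalendar(iso_year, iso_week, d) for d in range(1, 8)]


# ISO week 53 of 2020 is the last week of that ISO year.
week = days_in_iso_week(2020, 53)
years = sorted({d.year for d in week})
```

In this case the week runs from 2020-12-28 to 2021-01-03, so a single weekly work package would hold data from both calendar years.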

3.5.3.8 VisitorWeekInjection

Output: Injection status report.

This processor reads the weekly sums in the data file of the work package in the visweekprocessed location, injects the weekly sums into the Analytics database, and creates a status report in the visweekinjected location. Data injected into the database is retrieved into the reports that Analytics users generate.

3.5.3.8.1 Input Location for VisitorWeekInjection Processor

See Section 3.5.3.7.2, "Output Location for VisitorWeekProcessor."

3.5.3.8.2 Output Locations for VisitorWeekInjection Processor

Analytics database: Stores the data from this processor's input location.

visweekinjected: Stores the work package created by this processor. Note that the work package does not contain a data file. It contains only the metadata file indicating the status of the injection process.

Table 3-15 Output Locations for VisitorWeekInjection Processor

| visweekinjected | Description |
|---|---|
| Directory structure | See Section 3.4.2.5, "Directory Structure for Weekly Work Packages." |
| Work package | <workpackageID>/part-<xxxxx> |
| Work package data file | No data file is created in this work package. The metadata file |
| Data source | This processor. |
| Work package used by | None of the processors. Administrators can open the HDFS file browser to view the metadata file (injection status report). |