| Oracle® Enterprise Data Quality for Product Data Endeca Connector Installation and User's Guide Release 11g R1 (11.1.1.6) E29135-03 |


This appendix describes performance aspects of interest.

This section explains ways to improve performance on your Oracle DataLens Server when using the Endeca Connector.

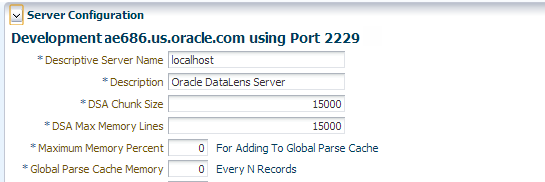

The Oracle DataLens Servers should be tuned to match the parameters used in the Endeca Connector Adapter.

By default, the Endeca Connector is set to send records from the Endeca Forge process to the Oracle DataLens Server in chunks of 15,000 records. Generally, the larger the chunk size, the greater the performance gain on record processing. The limit is the amount of memory allocated to the Forge process when running a baseline update on the Endeca ITL machine.
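To make the chunking idea concrete, here is a minimal Python sketch of splitting a record stream into chunks of a fixed size. It is only an illustration of the concept, not the Endeca Connector's implementation; the `chunked` function and the use of a plain integer range as stand-in records are assumptions made for this example.

```python
from itertools import islice
from typing import Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def chunked(records: Iterable[T], chunk_size: int = 15_000) -> Iterator[List[T]]:
    """Yield successive lists holding at most chunk_size records each."""
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    iterator = iter(records)
    while True:
        # Pull the next chunk_size records; the last chunk may be shorter.
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk


# 40,000 stand-in records at the default chunk size of 15,000.
sizes = [len(chunk) for chunk in chunked(range(40_000))]
print(sizes)  # [15000, 15000, 10000]
```

Only one chunk is held in memory at a time, so in this sketch the chunk size, not the total record count, determines peak memory use.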

Assuming that you will leave the chunk size at 15,000 for the Endeca Connector Adapter, you do not need to change the default values of your Oracle DataLens Server.

To view the default values for your server:

Ensure that your Oracle DataLens Server is running.

Open one of the following supported Web browsers for your environment:

Internet Explorer 8.0 or later and Internet Explorer 9.0 or later

Mozilla Firefox 4.0 or later and Firefox 5.0 or later

Google Chrome 12.0 or later

Safari 5.0 or later

Enter the following URL:

http://hostname:port/datalens

where hostname is the DNS name or IP address of the Administration Server and port is the listen port on which the Administration Server is listening for requests (port 2229 by default).

If you configured the Administration Server to use Secure Socket Layer (SSL), you must add s after http as follows:

https://hostname:port/datalens

When the login page appears, enter your user name and password. Typically, these are the user name and password you specified during the installation process.

The Oracle DataLens Server Web pages are displayed and default to the Welcome tab.

Select the Administration tab.

From the Server panel, select Server Group.

Click the link for your server.

The values for the selected server are displayed.

For additional information on these parameters, see the Oracle Enterprise Data Quality for Product Data Oracle DataLens Server Administration Guide.
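The URL used in the steps above follows a fixed pattern, which the following Python sketch assembles. The `datalens_url` helper and the host name `dlserver.example.com` are hypothetical and exist only for illustration; the default port 2229 and the `/datalens` path come from the steps above.

```python
def datalens_url(hostname: str, port: int = 2229, use_ssl: bool = False) -> str:
    """Build the Oracle DataLens Server URL described in the steps above."""
    # Use https only when the Administration Server is configured for SSL.
    scheme = "https" if use_ssl else "http"
    return f"{scheme}://{hostname}:{port}/datalens"


# dlserver.example.com is a placeholder host name, not a real server.
print(datalens_url("dlserver.example.com"))
print(datalens_url("dlserver.example.com", use_ssl=True))
```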

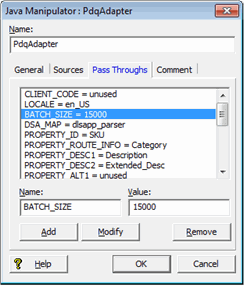

If you need to change the BATCH_SIZE parameter in the PdqAdapter (Java Manipulator) to a larger value, then the value on the Oracle DataLens Server should be increased to match this size.

After changing any parameter values, you must restart your Oracle DataLens Server for the new values to take effect.

To speed up the processing of the data during the Forge processing by the PdqAdapter, multiple production servers can be put into a single Production server group. The Oracle DataLens Server will load balance the work of the data lens processing among all the Oracle DataLens Servers in the Production server group.

The main DSA that performs the processing by the PdqAdapter will use the Oracle DataLens Server's load-balancing and multi-threading capability to increase throughput when processing data on multiple servers with no additional configuration changes needed by the Endeca Connector administrator.

Note that for the Endeca Connector "Discover/Delete" Transform Maps, the individual steps in a DSA are processed single-threaded, but the steps within a single Transform Map are multi-threaded. Therefore, when creating the Endeca Connector Discover Maps, each step should run only a single Endeca Connector Add-In, to prevent simultaneous job steps from updating the same Endeca project files concurrently.

Running the pipeline during Forge processing adds overhead because the Oracle DataLens Server is called to extract attributes for each line of input data. This processing is fast: speeds of over 100 lines per second are typical, depending on the input data and the quality of the Route Information. Processing the input during the Forge step can therefore be expected to add about two to seven minutes for 40,000 lines of input data.
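The two-to-seven-minute range can be checked with simple arithmetic, as in the following Python sketch. The 100 lines per second figure comes from the paragraph above; the 333 lines per second figure is not stated in this guide and is only the rate implied by the two-minute lower bound. The `added_minutes` function is a hypothetical helper.

```python
def added_minutes(lines: int, lines_per_second: float) -> float:
    """Estimate the extra Forge time, in minutes, for a given throughput."""
    if lines_per_second <= 0:
        raise ValueError("lines_per_second must be positive")
    return lines / lines_per_second / 60


# 100 lines/second is the typical rate quoted above.
print(round(added_minutes(40_000, 100), 1))  # 6.7
# 333 lines/second is an assumed rate that corresponds to about two minutes.
print(round(added_minutes(40_000, 333), 1))  # 2.0
```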

You can further speed the processing of this data by:

Using Ultra-high priority DSA jobs.

The smaller the chunk size, the more impact this will have on performance.

Setting the Endeca Connector Adapter chunking levels higher for faster throughput.

Processing time for 40,000 records has been observed to drop from over 20 minutes to under 2 minutes simply by changing the chunk size (BATCH_SIZE) from 100 to 21,000.

Ensuring that the Route Information is accurate for the input data.

This is important to minimize the number of data lenses that need to process each line of data.

Running the processing on a fast Oracle DataLens Production server.

Typically, the Production server is a more powerful machine than the Oracle DataLens Administration server, although the processing can take place on either type of server.
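As a rough illustration of the chunk-size observation above, the following Python sketch counts how many chunks are needed for 40,000 records at different BATCH_SIZE values. This guide does not state why larger chunks are faster; the idea that each chunk carries some fixed per-call cost is an assumption made here, and `chunk_count` is a hypothetical helper.

```python
def chunk_count(records: int, batch_size: int) -> int:
    """Return how many chunks are needed to send all records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    # Integer ceiling division: a partial final chunk still counts as one.
    return (records + batch_size - 1) // batch_size


for batch_size in (100, 15_000, 21_000):
    print(batch_size, chunk_count(40_000, batch_size))
# 100 -> 400 chunks, 15,000 -> 3 chunks, 21,000 -> 2 chunks
```

Under that assumption, moving from 100 to 21,000 reduces the number of chunks from 400 to 2 for the same 40,000 records.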

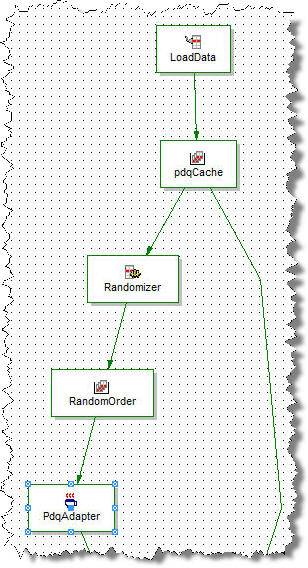

Randomizing the order of the input data is useful if you are using multiple parallel Oracle DataLens Servers to process the Forge data. Large performance gains are possible, especially if the input data is originally grouped by product.

Here is a simple way to randomize the data in the pipeline. If you create a flow similar to the following diagram, you can randomize the data just before it goes through the PdqAdapter.

In the Perl manipulator, add a "next record" method with the following code:

    # Add a random field
    my $rec = $this->record_source(0)->next_record;

    # Careful: $rec will be undefined if there are no more records.
    if ($rec) {
        my $pval = new EDF::PVal("random", sprintf("%07d",int(rand(9999999))));
        $rec->add_pvals($pval);
    }

    return $rec;

In the RandomOrder cache, use 'random' as your record index.
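The effect of this technique can be sketched outside the pipeline in Python: tag each record with a zero-padded random key, then order the records by that key. This is an illustration only. It assumes that a cache indexed on 'random' hands records on in key order, which this guide does not state explicitly, and the dictionary records and function names are hypothetical.

```python
import random
from typing import Dict, List, Optional


def add_random_key(record: Dict[str, str], rng: random.Random) -> Dict[str, str]:
    """Return a copy of the record with a 7-digit, zero-padded 'random' field."""
    tagged = dict(record)
    # randrange(9_999_999) yields 0..9999998, the same range as int(rand(9999999)).
    tagged["random"] = f"{rng.randrange(9_999_999):07d}"
    return tagged


def randomize(records: List[Dict[str, str]], seed: Optional[int] = None) -> List[Dict[str, str]]:
    """Tag every record with a random key and return them ordered by that key."""
    rng = random.Random(seed)
    tagged = [add_random_key(record, rng) for record in records]
    # Zero padding makes string order match numeric order.
    return sorted(tagged, key=lambda record: record["random"])


# Input grouped by product; the output order no longer follows the grouping.
grouped = [{"product": p, "sku": f"{p}-{i}"} for p in ("A", "B") for i in range(3)]
for record in randomize(grouped, seed=1):
    print(record["random"], record["sku"])
```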

The Dgidx processing can also be expected to slow down. Although no data is processed by the Oracle DataLens Server in this step, the slowdown is due to the additional Dimensions that are added to the input data and must be indexed for guided navigation and search.

If you double the number of Dimensions that are used by Endeca, then you would expect a corresponding increase in the processing time to index these additional attributes.

You can further speed up the Dgidx step by spreading the Dgidx processing out over several Endeca servers.