Converting Incremental Load Jobs to Destructive Load Jobs

As part of the ETL configuration process, you can convert incremental load jobs to destructive load jobs. However, converting a server job that uses CRC logic would require modifying at least 80% of its design, so for those jobs it is better to leave the existing job unchanged and create a new destructive load job from scratch.

This topic discusses how to convert incremental load jobs that use the DateTime stamp.

Converting Jobs that Use the DateTime Stamp

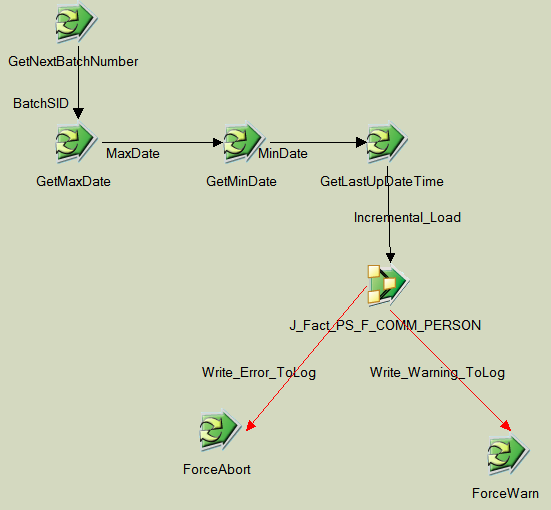

The changes required to convert an incremental load job (that uses the DateTime stamp) to a destructive load job can be demonstrated using the J_Fact_PS_F_COMM_PERSON job as an example.

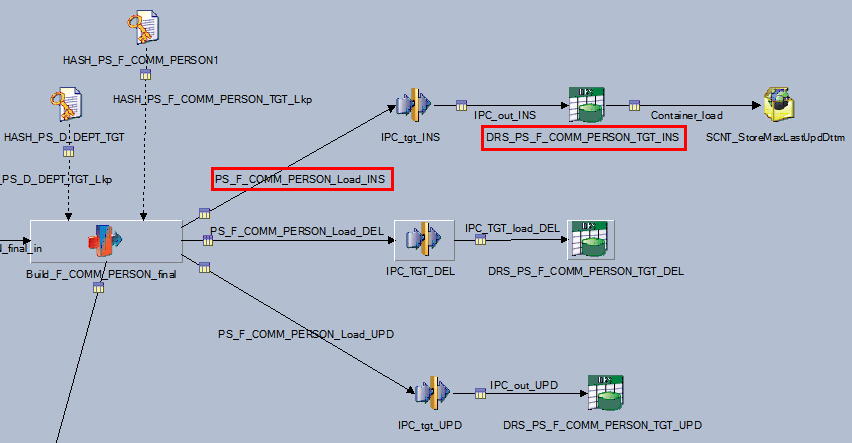

Image: Example of the incremental load in the J_Fact_PS_F_COMM_PERSON job


To convert an incremental load job (that uses the DateTime stamp) to a destructive load job:

In DataStage Designer, open the server job you want to convert.

Open the source DRS stage and select the Output tab.

In the Selection sub-tab, locate the WHERE clause and delete the condition that filters on the last update date and time (highlighted below).

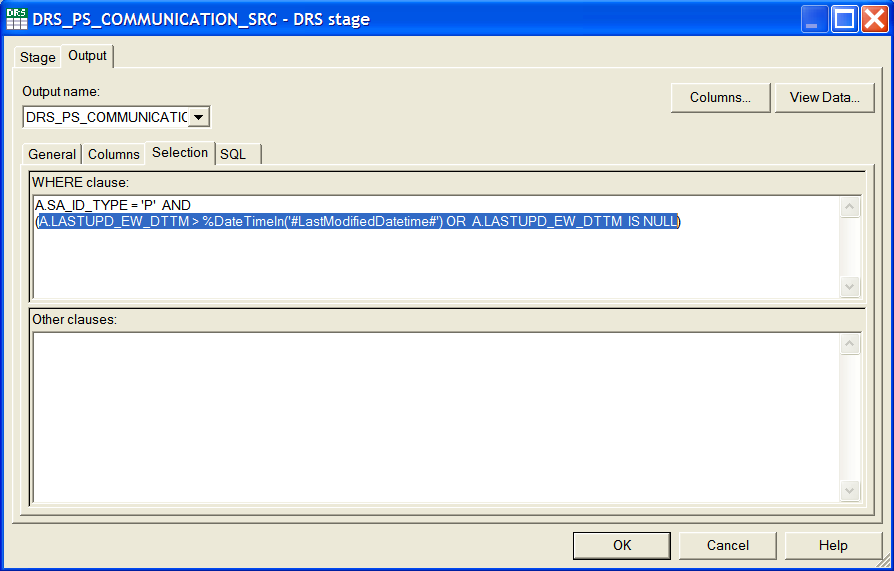

Image: Deleting the WHERE clause


Click OK to save your changes.
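The effect of deleting the DateTime condition can be modeled outside DataStage. The following Python sketch uses an in-memory SQLite table. The table name `SRC_PERSON`, the column `LASTUPDDTTM`, and the cutoff value are hypothetical stand-ins, not the actual WHERE clause of the J_Fact_PS_F_COMM_PERSON job.

```python
import sqlite3

# Hypothetical source table standing in for the data read by the source DRS stage.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE SRC_PERSON (PERSON_ID TEXT PRIMARY KEY, LASTUPDDTTM TEXT)")
conn.executemany(
    "INSERT INTO SRC_PERSON VALUES (?, ?)",
    [
        ("P1", "2023-01-10 08:00:00"),
        ("P2", "2023-03-05 12:30:00"),
        ("P3", "2023-06-20 17:45:00"),
    ],
)

# Incremental extract: the WHERE clause keeps only rows changed after the
# last load (the bound cutoff plays the role of the last update DateTime).
INCREMENTAL_SQL = (
    "SELECT PERSON_ID FROM SRC_PERSON WHERE LASTUPDDTTM > ? ORDER BY PERSON_ID"
)

# Destructive extract: the DateTime condition is deleted, so every row is read.
DESTRUCTIVE_SQL = "SELECT PERSON_ID FROM SRC_PERSON ORDER BY PERSON_ID"


def extract(sql, params=()):
    """Run an extract query and return the selected keys."""
    return [row[0] for row in conn.execute(sql, params)]


incremental_rows = extract(INCREMENTAL_SQL, ("2023-02-01 00:00:00",))
destructive_rows = extract(DESTRUCTIVE_SQL)
print(incremental_rows)  # ['P2', 'P3']
print(destructive_rows)  # ['P1', 'P2', 'P3']
```

The timestamps are stored as ISO-formatted strings, so the string comparison in the incremental query orders them chronologically.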

Open the insert (*_INS) target DRS stage and select the Input tab.

In the General sub-tab, set the Update Action field to Truncate table then insert rows.

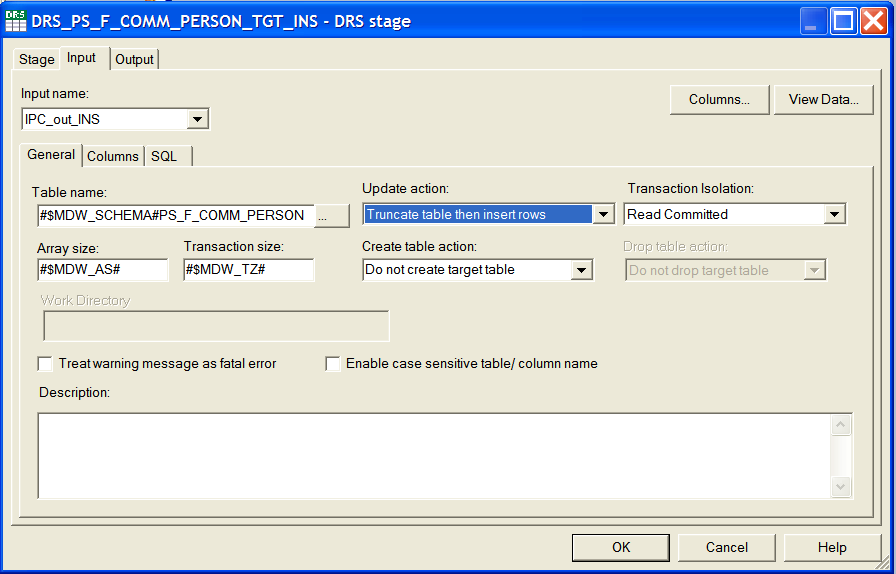

Image: Update Action field


Click OK to save your changes.
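Conceptually, Truncate table then insert rows empties the target before writing, so each run replaces the table contents with the current source rows instead of merging changes into them. The sketch below mimics that behavior in SQLite, which has no TRUNCATE statement, so an unqualified DELETE is used instead. The table `F_PERSON_TGT`, its columns, and the helper names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE F_PERSON_TGT (PERSON_ID TEXT PRIMARY KEY, NAME TEXT)")


def truncate_then_insert(conn, rows):
    """Replace the whole target table with `rows` in a single transaction."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.execute("DELETE FROM F_PERSON_TGT")  # SQLite stand-in for TRUNCATE
        conn.executemany("INSERT INTO F_PERSON_TGT VALUES (?, ?)", rows)


def target_rows(conn):
    """Return the current target contents in key order."""
    return conn.execute(
        "SELECT PERSON_ID, NAME FROM F_PERSON_TGT ORDER BY PERSON_ID"
    ).fetchall()


# First run loads two rows.
truncate_then_insert(conn, [("P1", "Ada"), ("P2", "Lin")])
# Second run: P2 is gone from the source and P1 was renamed. The reload
# leaves no stale P2 row and needs no separate update or delete path.
truncate_then_insert(conn, [("P1", "Ada L."), ("P3", "Sam")])
final_rows = target_rows(conn)
print(final_rows)  # [('P1', 'Ada L.'), ('P3', 'Sam')]
```

Because the reload alone produces the correct final contents, the delete (*_DEL) and update (*_UPD) target stages have nothing left to do in a destructive load.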

Delete the StoreMaxLastUpdDttm container and its link.

Delete the delete (*_DEL) target DRS stage and its link.

Delete the update (*_UPD) target DRS stage and its link.

Delete the target table hash lookup (the hash lookup performed against the target table data) and its link.

In the incremental job, this hash lookup identifies which records are new and which are updates. A destructive load truncates the target table and reinserts every row, so the lookup is no longer needed.

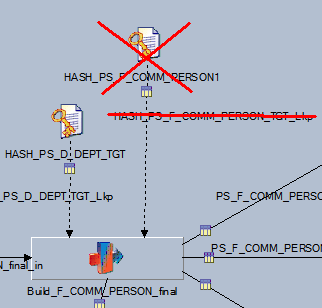

Image: Deleting the hash target table lookup

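The reasoning for removing the hash lookup can be shown with a small Python model. The set `target_keys` stands in for the hash file built from target table data, and the function name `classify` is hypothetical. The sketch only illustrates the new-versus-updated split, roughly as the incremental job routes rows, not how DataStage implements hashed files.

```python
def classify(source_rows, target_keys):
    """Split source rows into inserts and updates with a key lookup,
    approximating the target table hash lookup in the incremental job."""
    inserts, updates = [], []
    for row in source_rows:
        if row["PERSON_ID"] in target_keys:
            updates.append(row)  # key already in the target: an update
        else:
            inserts.append(row)  # key not in the target: a new row
    return inserts, updates


source = [{"PERSON_ID": "P1"}, {"PERSON_ID": "P2"}, {"PERSON_ID": "P3"}]

# Incremental load: the target already holds P1, so P1 is classified as an update.
inc_inserts, inc_updates = classify(source, target_keys={"P1"})

# Destructive load: the target is truncated first, so the lookup is empty and
# every row is an insert; the lookup no longer changes the outcome.
des_inserts, des_updates = classify(source, target_keys=set())
```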

Open the last transformation stage in the job; it should immediately precede the insert target DRS stage.

In the incremental job, this stage identifies new rows so that existing rows retain their original CREATED_EW_DTTM value.

In the example job above, the last stage is called Build_F_COMM_PERSON_final.

Delete the InsertFlag stage variable, and change the value of the CREATED_EW_DTTM target column to DSJobStartTimestamp, the DataStage macro that is also used for LASTUPD_EW_DTTM. Click OK to save your changes and exit the window.

Select Edit, Job Properties from the menu, delete the LastModifiedDateTime job parameter, and click OK to save your changes and exit the window.
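The change to the CREATED_EW_DTTM derivation can be summarized in Python. The function names and the `existing_created` argument are hypothetical; `job_start` stands in for the value of the DSJobStartTimestamp macro, and the incremental branch only approximates the role of the InsertFlag stage variable.

```python
from datetime import datetime


def incremental_audit_columns(job_start, existing_created=None):
    """Incremental load: a new row gets the job start time, while an
    existing row keeps its original CREATED_EW_DTTM."""
    is_new_row = existing_created is None  # plays the role of InsertFlag
    created = job_start if is_new_row else existing_created
    return {"CREATED_EW_DTTM": created, "LASTUPD_EW_DTTM": job_start}


def destructive_audit_columns(job_start):
    """Destructive load: every row is reinserted, so both audit columns
    take the job start timestamp."""
    return {"CREATED_EW_DTTM": job_start, "LASTUPD_EW_DTTM": job_start}


job_start = datetime(2024, 5, 1, 2, 0, 0)
original = datetime(2023, 1, 10, 8, 0, 0)

kept = incremental_audit_columns(job_start, existing_created=original)
reset = destructive_audit_columns(job_start)
print(kept["CREATED_EW_DTTM"])   # 2023-01-10 08:00:00
print(reset["CREATED_EW_DTTM"])  # 2024-05-01 02:00:00
```

One consequence of this design is that, after a destructive load, CREATED_EW_DTTM records the run that last rebuilt the table rather than when a row first entered the warehouse.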

Open the corresponding sequence job that calls the server job and delete the GetLastUpDatetime job activity stage (which calls the routine of the same name).

Image: Sequencer job


Select Edit, Job Properties from the menu and delete the LastUpdDateTime job parameter, if it is present.

Not every sequence job defines this parameter. Where it does exist, the sequence job no longer needs it.

Update the job annotations and descriptions to reflect that the job now performs a destructive load.

Save changes and exit.

Save and recompile the job.