Managing Aborted and Failed Jobs

This topic provides information on how you can manage aborted and failed jobs and discusses how to:

Review the job log to determine job errors.

Debug aborted and failed jobs.

Reviewing the Job Log to Determine Job Errors

The first step in managing aborted or failed jobs is to use DataStage Director to review the job log, which provides job run information.

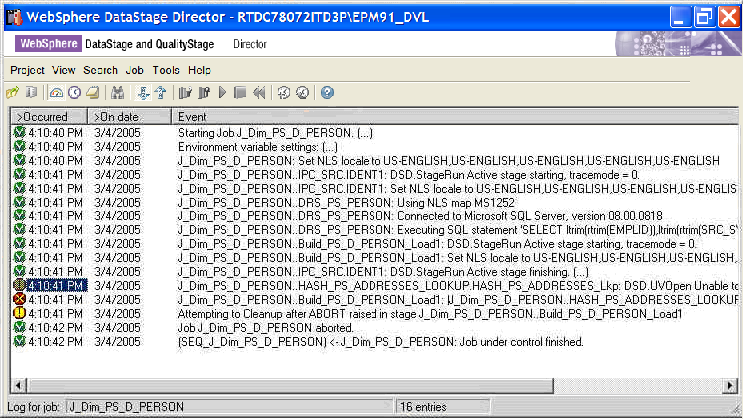

Image: Detailed Job Log View of Aborted and Failed Jobs

This example illustrates the Detailed Job Log View of Aborted and Failed Jobs.

The job log shows which jobs aborted or failed, so use it first to determine which jobs require your attention.

Job status is color-coded as follows:

Green (V): Informational. Success condition.

Yellow (I): Failed with warnings.

Red (X): Error messages.
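If you export log events to triage many jobs at once, you can apply the color coding above in code. The sketch below is a minimal illustration. It assumes each event has already been parsed into a job name and a type string such as INFO, WARNING or FATAL. The `LogEvent` class and the `jobs_needing_attention` function are hypothetical names and are not part of DataStage.

```python
from dataclasses import dataclass

# Assumed mapping from exported event types to the color codes above.
# The type strings are an assumption about the export format.
COLOR_BY_TYPE = {"INFO": "Green", "WARNING": "Yellow", "FATAL": "Red"}
RANK = {"Green": 0, "Yellow": 1, "Red": 2}


@dataclass
class LogEvent:
    job: str         # job name
    event_type: str  # e.g. "INFO", "WARNING", "FATAL"
    message: str


def jobs_needing_attention(events):
    """Return {job: worst color} for jobs that have Yellow or Red events."""
    worst = {}
    for event in events:
        # Types not in the mapping (e.g. control events) count as informational.
        color = COLOR_BY_TYPE.get(event.event_type.upper(), "Green")
        if RANK[color] > RANK[worst.get(event.job, "Green")]:
            worst[event.job] = color
    return dict(sorted(worst.items()))
```

Jobs that logged only informational events are left out of the result, so the returned dictionary lists just the jobs to open in the log view.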

You can double-click an aborted or failed job to view details about the job.

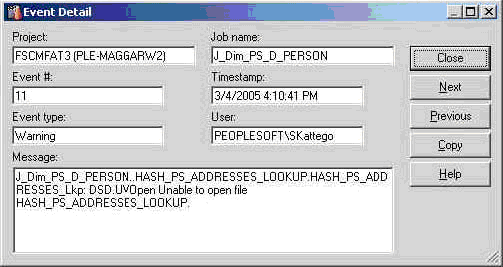

Image: Event Detail for Failed Job

This example illustrates the Event Detail for Failed Job.

A common cause of aborted jobs is a missing dependent hash file. This happens when a job performs a lookup on a hash file that has not been pre-created. To fix it, run the hash file load jobs that create the required hash files, and then rerun the job. In the event detail shown above, the message indicates that the job is missing the required hash file HASH_PS_ADDRESSES_LOOKUP.
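To resolve a missing hash file, run the load job that creates it before you rerun the job that performs the lookup. The following sketch works out which load jobs to run. It assumes you maintain two hypothetical mappings: one from each job to the hash files it looks up, and one from each hash file to the job that loads it. Only HASH_PS_ADDRESSES_LOOKUP comes from the example above. All job names are illustrative.

```python
def load_jobs_to_run(job, lookups, loaders, existing_hash_files):
    """Return the hash file load jobs to run, in lookup order, before rerunning `job`.

    lookups: {job name: [hash files the job looks up]}        (hypothetical metadata)
    loaders: {hash file name: job that creates the hash file} (hypothetical metadata)
    existing_hash_files: set of hash file names already pre-created
    """
    to_run = []
    for hash_file in lookups.get(job, []):
        if hash_file in existing_hash_files:
            continue  # already pre-created, so this lookup can succeed
        loader = loaders.get(hash_file)
        if loader is None:
            raise LookupError(f"no load job known for {hash_file}")
        if loader not in to_run:  # one load job may build several hash files
            to_run.append(loader)
    return to_run
```

For example, if `J_DIM_CUSTOMER` (hypothetical) looks up HASH_PS_ADDRESSES_LOOKUP and that file does not exist, the function returns the load job mapped to it.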

Debugging Aborted and Failed Jobs

Once you know which job has an issue, open the job in DataStage Designer and view it with performance statistics enabled. Successful links are shown in green and failed links in red, which helps you pinpoint the part of the job design that failed. The performance statistics also show the number of rows transmitted through each link, which is useful when debugging a job. DataStage Designer also provides advanced debugging features that let developers set breakpoints and watch variable values.
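You can apply the same reasoning to link statistics outside Designer. This sketch assumes a simple linear job. It also assumes you have noted each link's row count and status (red or green) in data-flow order. `LinkStat` and `first_failure` are hypothetical helpers. The function returns the first failed link together with the row count on the link that feeds it. That count indicates how much data reached the failing stage.

```python
from dataclasses import dataclass


@dataclass
class LinkStat:
    name: str
    rows: int     # rows transmitted through the link
    failed: bool  # True if the link is shown in red


def first_failure(links):
    """Return (failed link name, rows on the preceding link), or None.

    Assumes `links` is listed in data-flow order for a linear job design.
    """
    for i, link in enumerate(links):
        if link.failed:
            upstream_rows = links[i - 1].rows if i > 0 else None
            return link.name, upstream_rows
    return None
```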

Jobs that run with an array size or transaction size greater than 1 usually produce warning messages, and in some instances the job log displays a warning message for each row of data. For example, if a job encounters a right string truncation error when inserting into the target database, the log provides the specific row data that failed.
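A right string truncation error occurs when a string value is longer than the target column allows. Before you rerun the job, you can check suspect source rows against the target column lengths. This is a minimal sketch with hypothetical column names and lengths. It is not a DataStage feature.

```python
def find_truncated_rows(rows, column_lengths):
    """Return (row index, column, value) for each value longer than its target column.

    rows: list of dicts mapping column name to value
    column_lengths: {column name: maximum length in the target table} (hypothetical)
    Note: this compares character counts. A database that measures column
    length in bytes can truncate multibyte data earlier than this check reports.
    """
    problems = []
    for index, row in enumerate(rows):
        for column, max_len in column_lengths.items():
            value = row.get(column)
            if isinstance(value, str) and len(value) > max_len:
                problems.append((index, column, value))
    return problems
```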

To address per-row warnings such as these, configure the job to limit the number of rows it processes. Processing fewer rows shortens the job run time and keeps the log smaller and more manageable.
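If you run jobs from the command line, the dsjob client's `-run` action accepts a row limit and a warning limit. The sketch below assembles such a command. It assumes that dsjob is on the PATH and that your release supports the `-rows`, `-warn` and `-jobstatus` options; check the dsjob reference for your version. The project and job names, and the default limits, are placeholders.

```python
import subprocess


def dsjob_run_command(project, job, max_rows=None, max_warnings=None):
    """Build a dsjob -run argument list with optional row and warning limits."""
    argv = ["dsjob", "-run"]
    if max_rows is not None:
        if max_rows < 1:
            raise ValueError("max_rows must be a positive integer")
        argv += ["-rows", str(max_rows)]  # row limit for this run
    if max_warnings is not None:
        argv += ["-warn", str(max_warnings)]  # warning limit before the job aborts
    # -jobstatus waits for the job to finish and reflects its status in the exit code
    argv += ["-jobstatus", project, job]
    return argv


def run_limited(project, job, max_rows=1000, max_warnings=50):
    """Run the job with illustrative limits and return the dsjob exit code."""
    completed = subprocess.run(
        dsjob_run_command(project, job, max_rows, max_warnings), check=False
    )
    return completed.returncode
```

Building the argument list separately from running it lets you log or review the exact command before a limited debug run.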