Oracle® Communications Calendar Server System Administrator's Guide Release 7.0.5 E54935-01

Choices for making Oracle Communications Calendar Server highly available include Oracle GlassFish HA Cluster, MySQL Async Replication, and Oracle Data Guard. You can also configure the document store for high availability.

This section describes how to deploy and configure Calendar Server on a GlassFish Server cluster. The GlassFish Server cluster feature enables you to create highly available and scalable deployment architectures.

This example assumes that you are familiar with the following tasks:

Installing and configuring Calendar Server. See Calendar Server Installation and Configuration Guide for more information.

Setting up clusters using GlassFish Server. See GlassFish Server High Availability Administration Guide for more information.

The hardware and software requirements for configuring Calendar Server in a GlassFish Server cluster are the same as for a default Calendar Server deployment. In addition, you must install Oracle iPlanet Web Server (formerly known as Sun Java System Web Server 7.0). In this example, Web Server functions as the load balancer.

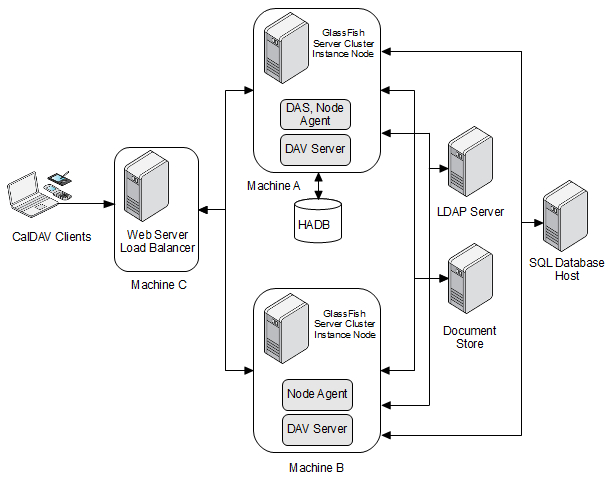

Figure 13-1 shows the deployment architecture.

Figure 13-1 Calendar Server Cluster Deployment Architecture

Also, ensure that the clocks on the HADB hosts are synchronized. See the topic on synchronizing system clocks in GlassFish Server High Availability Administration Guide for more information.

For the following steps, refer to Figure 13-1.

Prepare shared memory configuration for HADB by editing the /etc/system file and adding the following entries:

set shmsys:shminfo_shmmax=0x80000000
set semsys:seminfo_semmni=10
set semsys:seminfo_semmns=60
set semsys:seminfo_semmnu=600

Reboot the system:

reboot

Consult GlassFish Server High Availability Administration Guide for more shared memory configuration information.
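The following bash sketch applies the same /etc/system entries idempotently, so that rerunning it does not duplicate lines. The function name ensure_system_settings is hypothetical, and the sketch assumes a grep that supports the -q, -x, and -F options. It matches exact lines only, so it does not detect an existing entry for the same parameter with a different value; review the file by hand if that is possible.

```shell
#!/usr/bin/env bash
# Sketch only: append the HADB shared-memory settings to an
# /etc/system-style file, skipping lines that are already present.

# ensure_system_settings FILE
ensure_system_settings() {
  local file="$1" line
  while IFS= read -r line; do
    # Append the setting only if the exact line is not in the file yet.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done <<'EOF'
set shmsys:shminfo_shmmax=0x80000000
set semsys:seminfo_semmni=10
set semsys:seminfo_semmns=60
set semsys:seminfo_semmnu=600
EOF
}
```

For example, back up the file with cp -p /etc/system /etc/system.orig, run ensure_system_settings /etc/system as root, and then reboot.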

Run the GlassFish Server installer and select "High Availability Database Server" and "Domain Administration Server and Administration Tool" in the Component Selection screen.

Start the HADB agent.

GlassFish_home/hadb/4/bin/ma-initd start

Create an HADB Management Domain.

./hadbm createdomain --adminpassword=password machine-A-IP-address
export HADBM_AGENT=machineA:1862

Give the node supervisor processes root privileges.

chown root GlassFish_home/hadb/4/lib/server/clu_nsup_srv
chmod u+s GlassFish_home/hadb/4/lib/server/clu_nsup_srv
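The HADB preparation steps above can be collected into one bash function, sketched below. The function name prepare_hadb, the DRY_RUN switch (which prints each command instead of running it), and the variables GLASSFISH_HOME, MACHINE_A, MACHINE_A_IP, and HADB_ADMIN_PASSWORD are assumptions for illustration. The sketch runs chown before chmod u+s because on some platforms, Linux for example, changing a file's owner clears its set-user-ID bit.

```shell
#!/usr/bin/env bash
# Sketch only: prepares HADB on machine A. Set DRY_RUN=1 to print the
# commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

prepare_hadb() {
  local hadb="${GLASSFISH_HOME:?set GLASSFISH_HOME}/hadb/4"
  local nsup="$hadb/lib/server/clu_nsup_srv"

  # Start the HADB management agent.
  run "$hadb/bin/ma-initd" start || return
  # Create the HADB management domain on machine A.
  run "$hadb/bin/hadbm" createdomain \
    --adminpassword="${HADB_ADMIN_PASSWORD:?}" "${MACHINE_A_IP:?}" || return
  # Later hadbm commands talk to the agent on machine A.
  export HADBM_AGENT="${MACHINE_A:?}:1862"
  # Make the node supervisor setuid root: chown first, then chmod.
  run chown root "$nsup" || return
  run chmod u+s "$nsup"
}
```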

Create a node agent, the dav-cluster cluster, and its two instances, and set the cluster's JMS service type to EMBEDDED.

./asadmin create-node-agent --host machineA --port 4848 --user admin --agentproperties remoteclientaddress=machineA dav-agent-1
./asadmin create-cluster --user admin dav-cluster
./asadmin create-instance --user admin --nodeagent dav-agent-1 --cluster dav-cluster instance1
./asadmin create-instance --user admin --nodeagent dav-agent-2 --cluster dav-cluster instance2
./asadmin set dav-cluster-config.jms-service.type=EMBEDDED
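The commands in the preceding step can be expressed as a bash function that loops over the instance numbers, as in the following sketch. The function name create_dav_cluster, the DRY_RUN switch, and the DAS_HOST and ADMIN_USER variables are hypothetical; the asadmin options are the ones shown above, and asadmin is assumed to be run from its bin directory. As in the text, instance2 refers to dav-agent-2, which you create later on machine B.

```shell
#!/usr/bin/env bash
# Sketch only: creates dav-agent-1, dav-cluster, and its two instances
# from the DAS host. Set DRY_RUN=1 to print the commands instead.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

create_dav_cluster() {
  local das="${DAS_HOST:-machineA}" user="${ADMIN_USER:-admin}" n
  run ./asadmin create-node-agent --host "$das" --port 4848 --user "$user" \
    --agentproperties remoteclientaddress="$das" dav-agent-1 || return
  run ./asadmin create-cluster --user "$user" dav-cluster || return
  # instanceN is assigned to dav-agent-N; dav-agent-2 is created on machine B.
  for n in 1 2; do
    run ./asadmin create-instance --user "$user" --nodeagent "dav-agent-$n" \
      --cluster dav-cluster "instance$n" || return
  done
  run ./asadmin set dav-cluster-config.jms-service.type=EMBEDDED
}
```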

Go to "Configuring Machine B Where Node Agent Resides" and "Configuring Machine C Where Web Server 7 Is Used as the Load Balancer", then return and continue with the next step.

Create an HTTP load balancer.

./asadmin create-http-lb --user admin --devicehost machineC --deviceport machineC-SSL-Port --autoapplyenabled=true --lbenableallinstances=true --lbenableallapplications=true --target dav-cluster dav-lb

Log in to the GlassFish Server Administration Console on machine A, open the properties page of the dav-lb load balancer, and click Test Connection to verify that the SSL connection between the DAS and Web Server 7 succeeds.

Configure the cluster for high availability.

./asadmin configure-ha-cluster --user admin --hosts machineA,machineA --haadminpassword password dav-cluster
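The two asadmin calls in the preceding steps can be wrapped as follows. This is a sketch: the function names, DRY_RUN, LB_SSL_PORT, and HADB_ADMIN_PASSWORD are assumptions, and the Test Connection check in the Administration Console still happens manually between the two functions.

```shell
#!/usr/bin/env bash
# Sketch only: load balancer and HA setup for dav-cluster, run on machine A.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Create dav-lb, pointing at the Web Server 7 SSL listener on machine C.
create_dav_lb() {
  local port="${LB_SSL_PORT:?set LB_SSL_PORT to the machine C SSL port}"
  run ./asadmin create-http-lb --user admin --devicehost machineC \
    --deviceport "$port" --autoapplyenabled=true --lbenableallinstances=true \
    --lbenableallapplications=true --target dav-cluster dav-lb
}

# Run after Test Connection succeeds in the Administration Console.
# --hosts lists machine A twice, as in the example.
configure_dav_ha() {
  run ./asadmin configure-ha-cluster --user admin --hosts machineA,machineA \
    --haadminpassword "${HADB_ADMIN_PASSWORD:?}" dav-cluster
}
```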

Install and configure Calendar Server on machine A.

See Calendar Server Installation and Configuration Guide for instructions on installing and configuring Calendar Server. Make sure the web-app target is dav-cluster, and the MySQL host is the FQDN of the MySQL server, not the default localhost.

Enable HA on the deployed davserver web application: edit the GlassFish_home/domains/domain1/applications/j2ee-modules/davserver/WEB-INF/web.xml file to add <distributable/> under <web-app>, and create a sun-web.xml file in the same WEB-INF directory with the following content:

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE sun-web-app PUBLIC "-//Sun Microsystems, Inc.//DTD Application Server 9.0 Servlet 2.5//EN" "http://www.sun.com/software/appserver/dtds/sun-web-app_2_5-0.dtd">
<sun-web-app>
  <session-config>
    <session-manager persistence-type="ha">
      <manager-properties>
        <property name="persistenceFrequency" value="web-method"/>
      </manager-properties>
      <store-properties>
        <property name="persistenceScope" value="session"/>
      </store-properties>
    </session-manager>
    <session-properties/>
    <cookie-properties/>
  </session-config>
</sun-web-app>
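The following bash sketch performs both edits. The function name enable_dav_ha is hypothetical. It assumes that </web-app> appears on its own line in web.xml and inserts <distributable/> just before it, which is valid for a Servlet 2.4 or 2.5 deployment descriptor, where the order of the web-app child elements is not fixed. It skips the insertion if the element is already present, and it overwrites any existing sun-web.xml.

```shell
#!/usr/bin/env bash
# Sketch only: mark davserver as distributable and write sun-web.xml
# with HA session persistence.

# enable_dav_ha APP_DIR   (APP_DIR contains WEB-INF/web.xml)
enable_dav_ha() {
  local app_dir="${1:?usage: enable_dav_ha APP_DIR}"
  local web_xml="$app_dir/WEB-INF/web.xml" tmp
  tmp="$web_xml.tmp"

  # Insert <distributable/> before </web-app> unless it is already there.
  # cat > keeps the original file's permissions.
  if ! grep -q '<distributable */>' "$web_xml"; then
    awk '/<\/web-app>/ { print "  <distributable/>" } { print }' "$web_xml" > "$tmp" &&
      cat "$tmp" > "$web_xml" && rm -f "$tmp" || return
  fi

  cat > "$app_dir/WEB-INF/sun-web.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE sun-web-app PUBLIC "-//Sun Microsystems, Inc.//DTD Application Server 9.0 Servlet 2.5//EN" "http://www.sun.com/software/appserver/dtds/sun-web-app_2_5-0.dtd">
<sun-web-app>
  <session-config>
    <session-manager persistence-type="ha">
      <manager-properties>
        <property name="persistenceFrequency" value="web-method"/>
      </manager-properties>
      <store-properties>
        <property name="persistenceScope" value="session"/>
      </store-properties>
    </session-manager>
    <session-properties/>
    <cookie-properties/>
  </session-config>
</sun-web-app>
EOF
}
```

For example: enable_dav_ha GlassFish_home/domains/domain1/applications/j2ee-modules/davserver, with GlassFish_home replaced by your installation path.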

Copy the contents of /var/opt/sun/comms/davserver from machine A to machine B, so that both instances in dav-cluster (instance1 and instance2) have the same configuration.

Enable load balancing for the davserver web app, and start the cluster.

./asadmin enable-http-lb-application -u admin --name davserver dav-cluster
./asadmin start-cluster dav-cluster
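The configuration copy and the two asadmin commands can be scripted together, as in the following sketch. The function name sync_and_start_dav and DRY_RUN are hypothetical, and the sketch assumes SSH access from machine A to machine B with write permission on /var/opt/sun/comms. Note that scp -rp overwrites files on machine B but does not remove files that exist only there.

```shell
#!/usr/bin/env bash
# Sketch only: run on machine A. Set DRY_RUN=1 to print the commands.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

sync_and_start_dav() {
  local peer="${1:-machineB}"
  # Give instance2 the same Calendar Server configuration as instance1.
  run scp -rp /var/opt/sun/comms/davserver "$peer:/var/opt/sun/comms/" || return
  # Route davserver requests through the load balancer, then start.
  run ./asadmin enable-http-lb-application -u admin --name davserver dav-cluster || return
  run ./asadmin start-cluster dav-cluster
}
```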

Run the GlassFish Server installer and select Command Line Administration Tool Only in the Component Selection screen.

Create a node agent for the instance in the cluster and start the node agent.

./asadmin create-node-agent --host machineA --port 4848 --user admin --agentproperties remoteclientaddress=machineB dav-agent-2
./asadmin start-node-agent dav-agent-2

Install Web Server 7 and create a symlink in the WebServer_home directory to the server instance directory.

ln -s /var/opt/SUNWwbsvr7/https-machineC /opt/SUNWwbsvr7/https-machineC
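If you rerun the setup, a guarded version of the ln -s command avoids two problems: failing on an existing file, and creating a nested link inside the directory that an existing link points to. The bash function below is a sketch; its name is hypothetical, and it assumes that a readlink command is available.

```shell
#!/usr/bin/env bash
# Sketch only: create LINK -> TARGET unless LINK already exists.

# ensure_symlink TARGET LINK
ensure_symlink() {
  local target="$1" link="$2"
  if [ -L "$link" ]; then
    # Already a symlink: accept it only if it points at TARGET.
    [ "$(readlink "$link")" = "$target" ] && return 0
    printf 'error: %s points elsewhere\n' "$link" >&2
    return 1
  fi
  if [ -e "$link" ]; then
    printf 'error: %s exists and is not a symlink\n' "$link" >&2
    return 1
  fi
  ln -s "$target" "$link"
}
```

For example: ensure_symlink /var/opt/SUNWwbsvr7/https-machineC /opt/SUNWwbsvr7/https-machineC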

Start the Web Server 7 administrative server.

/var/opt/SUNWwbsvr7/admin-server/bin/startserv

Run the GlassFish Server installer, select only "Load Balancing Plugin," and specify /opt/SUNWwbsvr7/https-machineC as the Web Server location.

Synchronize the Web Server instance configuration changes back to the configuration store, and start the instance.

wadm pull-config --config=machineC machineC
wadm deploy-config machineC
wadm start-instance --config=machineC machineC

Create an SSL HTTP listener for synchronizing the load balancer configuration with GlassFish Server.

wadm create-selfsigned-cert --config=machineC --server-name=machineC --nickname=cert-machineC

wadm create-http-listener --listener-port=machineC-SSL-Port --config=machineC --server-name=machineC --default-virtual-server-name=machineC http-listener-ssl

wadm set-ssl-prop --config=machineC --http-listener=http-listener-ssl enabled=true server-cert-nickname=cert-machineC client-auth=optional

wadm deploy-config machineC

wadm restart-instance --config=machineC
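The listener setup above can be run as one bash function, sketched below. The function name setup_lb_ssl_listener and DRY_RUN are hypothetical. Like the example, it assumes that the configuration name and the server name are both the machine C host name, and it omits the wadm authentication options, which you supply as your environment requires.

```shell
#!/usr/bin/env bash
# Sketch only: run on machine C. Set DRY_RUN=1 to print the commands.

run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# setup_lb_ssl_listener CONFIG SSL_PORT
setup_lb_ssl_listener() {
  local cfg="${1:?usage: setup_lb_ssl_listener CONFIG SSL_PORT}"
  local port="${2:?usage: setup_lb_ssl_listener CONFIG SSL_PORT}"
  # Self-signed certificate for the SSL listener.
  run wadm create-selfsigned-cert --config="$cfg" --server-name="$cfg" \
    --nickname="cert-$cfg" || return
  run wadm create-http-listener --listener-port="$port" --config="$cfg" \
    --server-name="$cfg" --default-virtual-server-name="$cfg" \
    http-listener-ssl || return
  # Enable SSL on the new listener with the certificate created above.
  run wadm set-ssl-prop --config="$cfg" --http-listener=http-listener-ssl \
    enabled=true server-cert-nickname="cert-$cfg" client-auth=optional || return
  # Push the configuration to the instance and restart it.
  run wadm deploy-config "$cfg" || return
  run wadm restart-instance --config="$cfg"
}
```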

From machine A, export the server certificate and copy the s1as.rfc file to /var/tmp on machine C.

GlassFish_home/lib/certutil -L -a -n s1as -d GlassFish_home/domains/domain1/config > /var/tmp/s1as.rfc

On machine C, import the GlassFish Server certificate to Web Server.

/opt/SUNWwbsvr7/bin/certutil -A -a -n s1as -t "TC" -i /var/tmp/s1as.rfc -d /var/opt/SUNWwbsvr7/admin-server/config-store/machineC/config
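Before importing, it can help to confirm that the exported file really contains one certificate. The bash sketch below wraps both certutil calls with such a check; copy the file from machine A to /var/tmp on machine C in between, for example with scp. The function names and the GLASSFISH_HOME variable are hypothetical, and check_pem assumes that certutil -a writes PEM-style BEGIN CERTIFICATE and END CERTIFICATE marker lines.

```shell
#!/usr/bin/env bash
# Sketch only: export the s1as certificate on machine A and import it
# into Web Server on machine C.

# check_pem FILE: succeed only if FILE holds exactly one PEM certificate.
check_pem() {
  [ "$(grep -c -- '-----BEGIN CERTIFICATE-----' "$1")" = 1 ] &&
    [ "$(grep -c -- '-----END CERTIFICATE-----' "$1")" = 1 ]
}

# On machine A (GLASSFISH_HOME stands for GlassFish_home).
export_gf_cert() {
  local gf="${GLASSFISH_HOME:?set GLASSFISH_HOME}" out="${1:-/var/tmp/s1as.rfc}"
  "$gf/lib/certutil" -L -a -n s1as -d "$gf/domains/domain1/config" > "$out" || return
  check_pem "$out" || { printf 'unexpected certutil output in %s\n' "$out" >&2; return 1; }
}

# On machine C, after the file has been copied to /var/tmp.
import_gf_cert() {
  local rfc="${1:-/var/tmp/s1as.rfc}"
  check_pem "$rfc" || { printf 'not a PEM certificate: %s\n' "$rfc" >&2; return 1; }
  /opt/SUNWwbsvr7/bin/certutil -A -a -n s1as -t "TC" -i "$rfc" \
    -d /var/opt/SUNWwbsvr7/admin-server/config-store/machineC/config
}
```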

Add the following lines to the /var/opt/SUNWwbsvr7/admin-server/config-store/machineC/config/default.acl file. If your deployment uses a URI other than /davserver, change it accordingly.

acl "uri=/davserver";
allow (http_propfind,http_proppatch,http_mkcol,http_head,http_delete,http_put,http_copy,http_move,http_lock,http_unlock,http_mkcalendar,http_report) user = "anyone";

Add the following line to the /var/opt/SUNWwbsvr7/admin-server/config-store/machineC/config/magnus.conf file:

Init fn="register-http-method" methods="MKCALENDAR"
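Both file edits can be made idempotent so that rerunning the setup does not add duplicate entries. In the bash sketch below, the function names append_once and configure_lb_webdav are hypothetical; the lines are appended at the end of each file, and the duplicate check looks only for an exact acl line for the given URI. Afterward, deploy the configuration and restart the instance as described in the next step.

```shell
#!/usr/bin/env bash
# Sketch only: add the WebDAV ACL and MKCALENDAR method registration
# to the Web Server 7 configuration on machine C.

# append_once FILE MARKER LINE...: append the LINEs unless MARKER is
# already a complete line in FILE.
append_once() {
  local file="$1" marker="$2"
  shift 2
  grep -qxF -- "$marker" "$file" 2>/dev/null && return 0
  printf '%s\n' "$@" >> "$file"
}

# configure_lb_webdav [CONFIG_DIR] [URI]
configure_lb_webdav() {
  local dir="${1:-/var/opt/SUNWwbsvr7/admin-server/config-store/machineC/config}"
  local uri="${2:-/davserver}"
  local methods='http_propfind,http_proppatch,http_mkcol,http_head,http_delete,http_put,http_copy,http_move,http_lock,http_unlock,http_mkcalendar,http_report'
  local acl_line="acl \"uri=$uri\";"
  local allow_line="allow ($methods) user = \"anyone\";"
  local init_line='Init fn="register-http-method" methods="MKCALENDAR"'

  append_once "$dir/default.acl" "$acl_line" "$acl_line" "$allow_line" || return
  append_once "$dir/magnus.conf" "$init_line" "$init_line"
}
```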

Deploy the configuration to the instance and restart the server instance.

wadm deploy-config machineC
wadm restart-instance --config=machineC

The GlassFish Server load balancer used in this deployment example supports only cookie-based sticky routing, not IP-based routing. As a consequence, the session-based WCAP protocol does not work well with this load balancer: requests from the same client can be routed to an active instance other than the one that holds the client's authenticated session. Therefore, Convergence does not work against this HA deployment. To work around this limitation, an IP-based load balancer is required.

You can achieve a highly available, redundant MySQL back-end deployment by using asynchronous replication. You must configure GlassFish Server to use the replication driver, as explained in the MySQL Connector/J Developer Guide at:

http://dev.mysql.com/doc/connector-j/en/connector-j-reference-configuration-properties.html

See "Configuring a High-Availability Database" for instructions.
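With the Connector/J replication driver (com.mysql.jdbc.ReplicationDriver in Connector/J 5.1), the connection URL lists the master host first, followed by one or more slave hosts. The bash helper below only composes such a URL for use in the JDBC settings described in "Configuring a High-Availability Database." The function name and the default database name caldav are hypothetical, so substitute the database your deployment uses and check the URL syntax against the Connector/J version you install.

```shell
#!/usr/bin/env bash
# Sketch only: print a Connector/J replication URL. The first host is
# the master; the remaining hosts are read-only slaves. DB_NAME is a
# hypothetical override for the database name.

# replication_url MASTER[:PORT] SLAVE[:PORT]...
replication_url() {
  if [ "$#" -lt 2 ]; then
    printf 'usage: replication_url MASTER[:PORT] SLAVE[:PORT]...\n' >&2
    return 1
  fi
  local hosts
  # Join the host arguments with commas.
  hosts=$(IFS=,; printf '%s' "$*")
  printf 'jdbc:mysql:replication://%s/%s\n' "$hosts" "${DB_NAME:-caldav}"
}
```

For example, with DB_NAME unset, replication_url mysql-a.example.com:3306 mysql-b.example.com:3306 prints jdbc:mysql:replication://mysql-a.example.com:3306,mysql-b.example.com:3306/caldav.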

Note:

MySQL Cluster with Calendar Server does not work reliably under load. Additionally, MySQL Cluster's data recovery mechanism is not satisfactory with Calendar Server. For these reasons, use MySQL asynchronous replication to achieve a redundant, highly available Calendar Server deployment.

For information on maximizing Oracle Database availability by using Oracle Data Guard and Advanced Replication, see the Oracle Database High Availability documentation at:

You can deploy a highly available document store. See "Configuring the Calendar Server Document Store" in Calendar Server Installation and Configuration Guide for details.