BDD supports many different cluster configurations. You should determine the one that best suits your needs before installing.

The following sections describe three configurations suitable for demonstration, development, and production environments, and their possible variations.

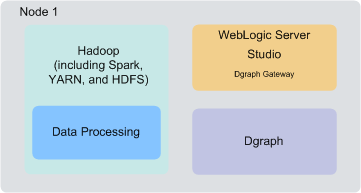

Single-node demo environment

You can install BDD in a demo environment running on a single physical or virtual machine. This configuration can only handle a limited amount of data, so it is recommended solely for demonstrating the product's functionality with a small sample database.

In a single-node deployment, all BDD and Hadoop components are hosted on the same node, and the Dgraph databases are stored on the local filesystem.

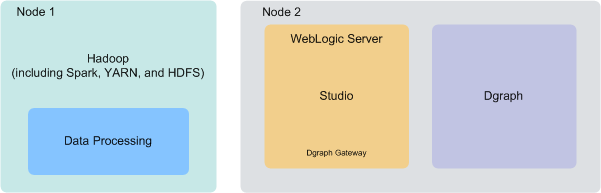

Two-node development environment

You can install BDD in a development environment running on two nodes. This configuration can handle a slightly larger database than a single-node deployment, but it still has limited processing capacity. Additionally, it doesn't provide high availability for the Dgraph or Studio.

In a two-node configuration, Hadoop and Data Processing are hosted on one node, and WebLogic Server (including Studio and the Dgraph Gateway) and the Dgraph are hosted on another. The Dgraph databases are stored on the local filesystem.

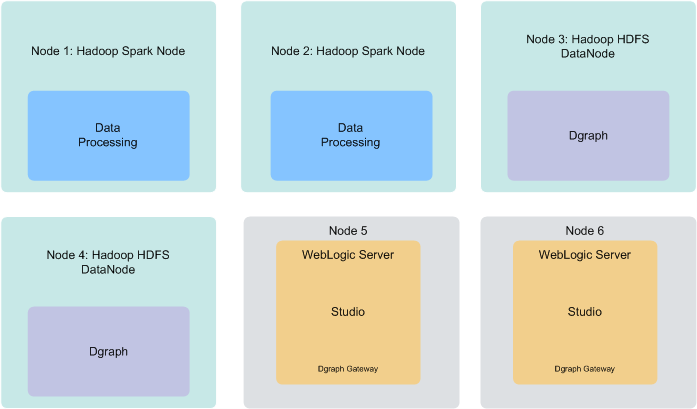

Six-node production environment

A production environment can consist of any number of nodes required for scale; however, a cluster of six nodes, with BDD deployed on at least four Hadoop nodes, provides maximum availability guarantees.

- Nodes 1 and 2 are running Spark on YARN (and other related services) and BDD Data Processing.

- Nodes 3 and 4 are running the HDFS DataNode service and the Dgraph, with the Dgraph databases stored on HDFS.

Note that this configuration is different from the two described above, in which the Dgraph is separate from Hadoop and its databases are stored on the local filesystem. Storing the databases on HDFS is a high availability option for the Dgraph and is recommended for large production environments.

- Nodes 5 and 6 are running WebLogic Server, Studio, and the Dgraph Gateway. Having two of these nodes ensures minimal redundancy of the Studio instances.

Remember that you aren't restricted to the above configuration: your cluster can contain as many Data Processing, WebLogic Server, and Dgraph nodes as necessary. You can also co-locate WebLogic Server and Hadoop on the same nodes, or host your databases on a shared NFS and run the Dgraph on its own node. Be aware that these decisions may impact your cluster's overall performance and depend on your site's resources and requirements.
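To make the availability trade-off between the layouts concrete, the following Python sketch models the two-node and six-node configurations described above as plain dictionaries and reports which components are hosted on only one node, and therefore have no redundancy. The component labels and the "hosted on at least two nodes" test are simplifying assumptions for illustration (for example, the "other related services" on nodes 1 and 2 are omitted); this is not a BDD tool or file format.

```python
from collections import Counter

# Hypothetical, simplified topologies: node name -> components on that node.
# The labels are illustrative only; they are not BDD configuration keys.
TWO_NODE = {
    "node1": {"Hadoop", "DataProcessing"},
    "node2": {"WebLogic", "Studio", "DgraphGateway", "Dgraph"},
}

SIX_NODE = {
    "node1": {"SparkOnYARN", "DataProcessing"},
    "node2": {"SparkOnYARN", "DataProcessing"},
    "node3": {"HDFSDataNode", "Dgraph"},
    "node4": {"HDFSDataNode", "Dgraph"},
    "node5": {"WebLogic", "Studio", "DgraphGateway"},
    "node6": {"WebLogic", "Studio", "DgraphGateway"},
}


def single_points_of_failure(topology):
    """Return, sorted, the components that are hosted on fewer than two nodes."""
    counts = Counter(c for components in topology.values() for c in components)
    return sorted(c for c, n in counts.items() if n < 2)


for name, topology in [("two-node", TWO_NODE), ("six-node", SIX_NODE)]:
    print(name, single_points_of_failure(topology))
```

With these inputs, the two-node layout reports every component, consistent with its lack of high availability for the Dgraph and Studio, while the six-node layout reports none.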

About the number of nodes

Although this document doesn't include sizing recommendations, you can use the following guidelines along with your site's specific requirements to determine an appropriate size for your cluster. You can also add more Dgraph and Data Processing nodes later on, if necessary; for more information, see the Administrator's Guide.

- Data Processing nodes: Your BDD cluster must include at least one Hadoop node running Data Processing. For high availability, Oracle recommends having at least three. (Note: Your pre-existing Hadoop cluster may have more than three nodes. The Hadoop nodes discussed here are those that BDD has also been installed on.) The BDD installer will automatically install Data Processing on all Hadoop nodes running Spark on YARN, YARN, and HDFS.

- WebLogic Server nodes: Your BDD cluster must include at least one WebLogic Server node running Studio and the Dgraph Gateway. There is no recommended number of Studio instances, but if you expect to have a large number of end users making queries at the same time, you might want two.

- Dgraph nodes: Your deployment must include at least one Dgraph instance. If there is more than one, they run as a cluster within the BDD cluster. Having a cluster of Dgraphs is desirable because it enhances high availability of query processing. Note that if your Dgraph databases are on HDFS, the Dgraph must be installed on HDFS DataNodes.
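The guidelines above can be expressed as a small planning checklist. The following Python sketch is hypothetical: the `Node` fields, service labels, and messages are assumptions, not part of the BDD installer. It derives the Data Processing nodes the way the installer is described as doing (every node running Spark on YARN, YARN, and HDFS, which the sketch treats as an HDFS DataNode), then checks the minimum counts, the three-node high-availability recommendation, and the rule that a Dgraph with HDFS-hosted databases must run on a DataNode.

```python
from dataclasses import dataclass, field

# Services that, per the guidelines, lead the installer to put Data Processing
# on a node. Treating "HDFS" as "runs an HDFS DataNode" is an assumption.
DP_SERVICES = {"spark_on_yarn", "yarn", "hdfs_datanode"}


@dataclass
class Node:
    """Hypothetical description of one planned cluster node."""
    name: str
    services: set = field(default_factory=set)    # Hadoop services on the node
    components: set = field(default_factory=set)  # BDD components: "weblogic", "dgraph"


def check_cluster(nodes, dgraph_db_on_hdfs):
    """Return (errors, warnings) for a planned BDD cluster."""
    errors, warnings = [], []
    dp_nodes = [n for n in nodes if DP_SERVICES <= n.services]
    wls_nodes = [n for n in nodes if "weblogic" in n.components]
    dgraph_nodes = [n for n in nodes if "dgraph" in n.components]

    if not dp_nodes:
        errors.append("at least one Data Processing node is required")
    elif len(dp_nodes) < 3:
        warnings.append("fewer than three Data Processing nodes: not highly available")
    if not wls_nodes:
        errors.append("at least one WebLogic Server node is required")
    if not dgraph_nodes:
        errors.append("at least one Dgraph node is required")
    if dgraph_db_on_hdfs:
        # A Dgraph whose databases live on HDFS must run on an HDFS DataNode.
        for n in dgraph_nodes:
            if "hdfs_datanode" not in n.services:
                errors.append(f"{n.name}: Dgraph with HDFS databases must run on a DataNode")
    return errors, warnings


# Example: three Hadoop nodes plus one node hosting WebLogic Server and the Dgraph.
cluster = [
    Node("hadoop1", {"spark_on_yarn", "yarn", "hdfs_datanode"}),
    Node("hadoop2", {"spark_on_yarn", "yarn", "hdfs_datanode"}),
    Node("hadoop3", {"spark_on_yarn", "yarn", "hdfs_datanode"}),
    Node("bdd1", set(), {"weblogic", "dgraph"}),
]
errors, warnings = check_cluster(cluster, dgraph_db_on_hdfs=True)
print(errors)    # ['bdd1: Dgraph with HDFS databases must run on a DataNode']
print(warnings)  # []
```

With the databases on the local filesystem instead (`dgraph_db_on_hdfs=False`), the same plan passes both checks, which matches the two-node layout described earlier, where the Dgraph runs apart from Hadoop and stores its databases locally.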

Co-locating Hadoop, WebLogic Server, and the Dgraph

One way to configure your cluster is to co-locate different components on the same nodes. This is a more efficient use of your hardware, since you don't have to devote an entire node to any specific BDD component.

Be aware, however, that the co-located components will compete for memory, which can have a negative impact on performance. The decision to host different components on the same nodes depends on your site's production requirements and your hardware's capacity.
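One way to reason about memory contention on a co-located node is a simple budget: the memory allotted to each component, plus some headroom reserved for the operating system, should fit within the node's physical RAM. The Python sketch below does that arithmetic. The function name, the component labels, and every figure in it (including the 4 GB OS reserve) are hypothetical placeholders, not Oracle sizing recommendations, which this document does not provide.

```python
def memory_headroom_gb(physical_gb, allocations_gb, os_reserve_gb=4.0):
    """Return the memory left after the OS reserve and every component's allotment.

    A negative result means the co-located components cannot all receive the
    memory planned for them on this node. os_reserve_gb is an assumed value.
    """
    return physical_gb - os_reserve_gb - sum(allocations_gb.values())


# Hypothetical plan for a node hosting both WebLogic Server and the Dgraph.
plan = {"weblogic_heap": 8.0, "dgraph": 40.0}
print(memory_headroom_gb(64.0, plan))  # 12.0
print(memory_headroom_gb(48.0, plan))  # -4.0
```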

Any combination of Hadoop and BDD components can run on a single node, including all three together. Possible combinations include:

- The Dgraph and Hadoop. The Dgraph can run on Hadoop DataNodes. This is required if you store your databases on HDFS, and is also an option if you store them on an NFS.

For best performance, you shouldn't host the Dgraph on a node running Spark on YARN, as both processes require a lot of memory. However, if you have to co-locate them, you can use cgroups to partition resources for the Dgraph. For more information, see Setting up cgroups.

- The Dgraph and WebLogic Server. The Dgraph and WebLogic Server can be hosted on the same node. If you do this, you should configure the WebLogic Server to consume a limited amount of memory to ensure the Dgraph has access to sufficient resources for its query processing.

- WebLogic Server and Hadoop. WebLogic Server can run on any of your Hadoop nodes. If you do this, you should configure WebLogic Server to consume a limited amount of memory to ensure that Hadoop has access to sufficient resources for processing.
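Each of the pairings above carries its own caution. As a hypothetical planning aid, the following Python sketch maps each pairing to a paraphrase of that caution and reports the ones that apply to a proposed node. The component labels (with "hadoop" standing for any Hadoop services and "spark_on_yarn" marking Spark on YARN specifically) and the advice strings are assumptions for illustration, not output from any BDD tool.

```python
from itertools import combinations

# Cautions paraphrased from this section, keyed by an unordered pair of components.
ADVISORIES = {
    frozenset({"dgraph", "spark_on_yarn"}):
        "Dgraph and Spark on YARN both need a lot of memory; consider cgroups for the Dgraph",
    frozenset({"dgraph", "weblogic"}):
        "limit WebLogic Server memory so the Dgraph has enough for query processing",
    frozenset({"weblogic", "hadoop"}):
        "limit WebLogic Server memory so Hadoop has enough for processing",
}


def advisories_for(components):
    """Return the cautions that apply to any pair of components on one node."""
    found = []
    for a, b in combinations(sorted(components), 2):
        advice = ADVISORIES.get(frozenset({a, b}))
        if advice:
            found.append(advice)
    return found


# All three together on one node: every caution applies.
for advice in advisories_for({"hadoop", "spark_on_yarn", "dgraph", "weblogic"}):
    print("-", advice)
```

A node that pairs the Dgraph with an HDFS DataNode but not Spark on YARN (for example `{"dgraph", "hadoop"}`) yields no cautions, reflecting that this combination is required when the databases are stored on HDFS.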