Procedure - Replace Drive Module Assembly

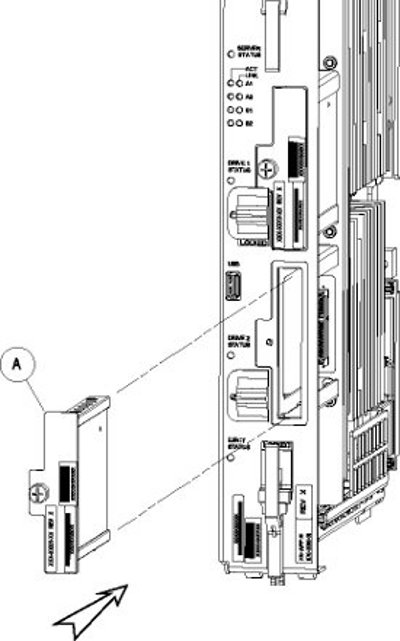

- Slide the new drive module into the drive slot on the card (see Figure 1).

Figure 1. Drive Module Replacement

- Gently and slowly push the drive (A) in until it is properly seated.

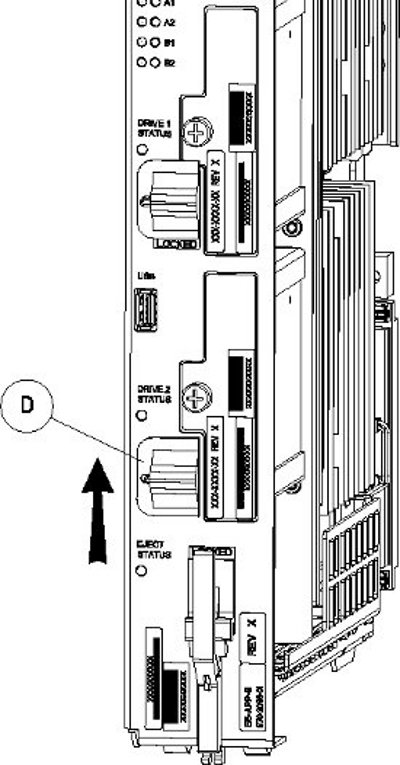

- Tighten the mounting screw until the Drive Status LED is steady red (see (B) in Figure 3).

- Move the drive module locking switch (D) from the unlocked to the LOCKED position.

When the drive module locking switch (D) is moved from unlocked to locked, the LED flashes red to indicate that the drive is locked and is coming online (see Figure 2).

Figure 2. Drive Module Locked

- When the LED turns off, log in as admusr (or as root) and run the cpDiskCfg command to copy the partition table from the good drive module to the new drive module.

$ sudo /usr/TKLC/plat/sbin/cpDiskCfg <source disk> <destination disk>

# /usr/TKLC/plat/sbin/cpDiskCfg <source disk> <destination disk>

For example:

$ sudo /usr/TKLC/plat/sbin/cpDiskCfg /dev/sdb /dev/sda

# /usr/TKLC/plat/sbin/cpDiskCfg /dev/sdb /dev/sda
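Because cpDiskCfg copies from the source disk to the destination disk, it can help to confirm which disk is still an active RAID member before running it. The following is a minimal sketch only, not part of the platform tooling: the function name `md_member_disks` is hypothetical, and it assumes sdX-style device names and the usual multi-line /proc/mdstat layout.

```shell
# Hypothetical helper: list the whole-disk names (for example, sda) that are
# active members of md arrays. Reads /proc/mdstat-formatted text on stdin.
# Assumes sdX-style names; members flagged (F) as failed are ignored.
md_member_disks() {
    grep -E '^md[0-9]+ :' \
        | sed -E 's/[a-z]+[0-9]+\[[0-9]+\]\(F\)//g' \
        | grep -oE '[a-z]+[0-9]+\[[0-9]+\]' \
        | sed -E 's/[0-9]+\[[0-9]+\]$//' \
        | sort -u
}
```

Running `sudo cat /proc/mdstat | md_member_disks` on a system with one drive module removed should print only the surviving disk, which is the source disk for cpDiskCfg.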

- After successfully copying the partition table, use the mdRepair command to replicate the data from the good drive module to the new drive module.

$ sudo /usr/TKLC/plat/sbin/mdRepair

# /usr/TKLC/plat/sbin/mdRepair

This step takes 45 to 90 minutes and runs in the background without impacting functionality.

Sample output of the command:

    [admusr@recife-b ~]$ sudo /usr/TKLC/plat/sbin/mdRepair
    SCSI device 'sdb' is not currently online
    probing for 'sdb' on SCSI 1:0:0:0
    giving SCSI subsystem some time to discover newly-found disks
    Adding device /dev/sdb1 to md group md1...
    md resync in progress, sleeping 30 seconds...
    md1 is 0.0% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    bgRe-installing master boot loader(s)
    Adding device /dev/sdb2 to md group md3...
    Adding device /dev/sdb9 to md group md5...
    Adding device /dev/sdb7 to md group md4...
    Adding device /dev/sdb6 to md group md7...
    Adding device /dev/sdb8 to md group md6...
    Adding device /dev/sdb3 to md group md2...
    Adding device /dev/sdb5 to md group md8...
    md resync in progress, sleeping 30 seconds...
    md3 is 3.6% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md5 is 27.8% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md4 is 8.9% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md4 is 62.5% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md7 is 14.7% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md7 is 68.3% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md8 is 0.3% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md8 is 1.1% percent done...
    This script MUST be allowed to run to completion. Do not exit.
    md resync in progress, sleeping 30 seconds...
    md8 is 2.0% percent done...

- Use the cat /proc/mdstat command to confirm that the RAID repair was successful.

After the RAID is repaired successfully, output showing both drive modules is displayed:

    Personalities : [raid1]
    md1 : active raid1 sdb2[1] sda2[0]
          262080 blocks super 1.0 [2/2] [UU]
    md2 : active raid1 sda1[0] sdb1[1]
          468447232 blocks super 1.1 [2/2] [UU]
          bitmap: 1/4 pages [4KB], 65536KB chunk
    unused devices: <none>

    Personalities : [raid1]
    md2 : active raid1 sda2[0] sdb2[1]
          26198016 blocks super 1.1 [2/2] [UU]
          bitmap: 1/1 pages [4KB], 65536KB chunk
    md1 : active raid1 sda3[0] sdb3[1]
          262080 blocks super 1.0 [2/2] [UU]
    md3 : active raid1 sdb1[1] sda1[0]
          442224640 blocks super 1.1 [2/2] [UU]
          bitmap: 1/4 pages [4KB], 65536KB chunk
    unused devices: <none>
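A repaired array shows `[UU]` in its status field, while an underscore (for example, `[U_]`) marks a missing member. As a rough illustration, the check can be scripted. This is a sketch under stated assumptions: the function name `degraded_arrays` is hypothetical, and it relies on the standard /proc/mdstat layout in which the array name and its status field appear on separate lines.

```shell
# Hypothetical helper: print the name of every md array whose status field
# contains an underscore (a missing member), such as [U_] or [_U].
# Reads /proc/mdstat-formatted text on stdin; prints nothing if all are healthy.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }    # remember the array being described
        /\[[U_]*_[U_]*\]/ && name != "" {
            print name                 # status field shows a missing member
            name = ""                  # report each array only once
        }
    '
}
```

For example, `sudo cat /proc/mdstat | degraded_arrays` prints nothing once every array has both drive modules active.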

Output of cat /proc/mdstat prior to re-mirroring:

    [admusr@recife-b ~]$ sudo cat /proc/mdstat
    Personalities : [raid1]
    md1 : active raid1 sda1[0]
          264960 blocks [2/1] [U_]
    md3 : active raid1 sda2[0]
          2048192 blocks [2/1] [U_]
    md8 : active raid1 sda5[0]
          270389888 blocks [2/1] [U_]
    md7 : active raid1 sda6[0]
          4192832 blocks [2/1] [U_]
    md4 : active raid1 sda7[0]
          4192832 blocks [2/1] [U_]
    md6 : active raid1 sda8[0]
          1052160 blocks [2/1] [U_]
    md5 : active raid1 sda9[0]
          1052160 blocks [2/1] [U_]
    md2 : active raid1 sda3[0]
          1052160 blocks [2/1] [U_]
    unused devices: <none>

Output of cat /proc/mdstat during re-mirroring process:

    [admusr@recife-b ~]$ sudo cat /proc/mdstat
    Personalities : [raid1]
    md1 : active raid1 sdb1[1] sda1[0]
          264960 blocks [2/2] [UU]
    md3 : active raid1 sdb2[1] sda2[0]
          2048192 blocks [2/2] [UU]
    md8 : active raid1 sdb5[2] sda5[0]
          270389888 blocks [2/1] [U_]
          [=====>...............] recovery = 26.9% (72955264/270389888) finish=43.8min speed=75000K/sec
    md7 : active raid1 sdb6[1] sda6[0]
          4192832 blocks [2/2] [UU]
    md4 : active raid1 sdb7[1] sda7[0]
          4192832 blocks [2/2] [UU]
    md6 : active raid1 sdb8[1] sda8[0]
          1052160 blocks [2/2] [UU]
    md5 : active raid1 sdb9[1] sda9[0]
          1052160 blocks [2/2] [UU]
    md2 : active raid1 sdb3[2] sda3[0]
          1052160 blocks [2/1] [U_]
          resync=DELAYED

Output of cat /proc/mdstat upon successful completion of re-mirror:

    [admusr@recife-b ~]$ sudo cat /proc/mdstat
    Personalities : [raid1]
    md1 : active raid1 sdb1[1] sda1[0]
          264960 blocks [2/2] [UU]
    md3 : active raid1 sdb2[1] sda2[0]
          2048192 blocks [2/2] [UU]
    md8 : active raid1 sdb5[1] sda5[0]
          270389888 blocks [2/2] [UU]
    md7 : active raid1 sdb6[1] sda6[0]
          4192832 blocks [2/2] [UU]
    md4 : active raid1 sdb7[1] sda7[0]
          4192832 blocks [2/2] [UU]
    md6 : active raid1 sdb8[1] sda8[0]
          1052160 blocks [2/2] [UU]
    md5 : active raid1 sdb9[1] sda9[0]
          1052160 blocks [2/2] [UU]
    md2 : active raid1 sdb3[1] sda3[0]
          1052160 blocks [2/2] [UU]
    unused devices: <none>
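While re-mirroring is under way, the array being rebuilt carries a progress line such as `recovery = 26.9%`. The sketch below pulls that figure out for each rebuilding array. It is illustrative only: the function name `recovery_progress` is hypothetical, and the pattern assumes the progress line format shown in the output above.

```shell
# Hypothetical helper: print "<array> <percent>" for every md array that has
# a recovery or resync percentage in /proc/mdstat-formatted text on stdin.
# Arrays waiting their turn (resync=DELAYED) have no percentage and are skipped.
recovery_progress() {
    awk '
        /^md[0-9]+ :/ { name = $1 }    # remember the array being described
        /(recovery|resync) *= *[0-9.]+%/ {
            match($0, /[0-9.]+%/)      # locate the percentage on the line
            print name, substr($0, RSTART, RLENGTH)
        }
    '
}
```

For example, `sudo cat /proc/mdstat | recovery_progress` prints one line per array that is currently rebuilding and nothing once the re-mirror is complete.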