This chapter provides information on how to extract, transform, and load (ETL) information in FCC Studio. As part of running ETL, you can configure new data source for a graph. Also you can perform additional configuration for a graph. For information on performing graph configurations, see Appendix - Performing Graph Configurations.

To configure a new data source for a graph, follow these steps:

1. Navigate to the fcc_studio_etl_queries table in the Studio Schema.

The FCDM related nodes and edges are available in the table.

2. If you want to add additional nodes or edges, you can add a new entry in the fcc_studio_etl_queries table.

3. Enter the following details in the fcc_studio_etl_queries table to add a new node or edge:

Column Name |

Description |

Applicable For |

|---|---|---|

Type |

Indicates the column name. Enter the value as NODE or EDGE. |

Applicable for node and edge queries. |

DF_NAME |

Indicates the name for the node or edge. |

Applicable for node and edge queries. |

SOURCE |

Indicates the source of the data. For example: FCDM or ICIJ |

Applicable for node and edge queries. |

DATAFRAME |

Indicates the properties of the node or edge. NOTE: Enter this value only if the data source is Hive and not .a .csv file. |

Applicable for node and edge queries. |

QUERY |

· If the source is Hive, provide the Hive query. · If the source is a .csv file, provide the query in the following format: spark.read.format("csv").option("header", "true").option("mode", "DROPMALFORMED").load("(##FILEPATH##)").select("node_1","node_2","rel_type","SourceID").withColumn("Label",lit("address of")).withColumnRenamed("node_1","from").withColumnRenamed("node_2","to").withColumnRenamed("rel_type","EDGE_TYPE").withColumnRenamed("SourceID","Source").filter(col("EDGE_TYPE")==="registered_address").withColumn("node_ID",concat(lit("#NUMBER#"),col("node_ID"))) For information on the Spark query parameters, see Appendix - Spark Query Parameters Appendix - Spark Query Parameters . NOTE: Ensure that the source .csv file is UTF-8 compatible. |

Applicable for node and edge queries. |

KEY_COLUMN_NAME |

Set the value to the column name of your unique identifier, if the query is for node. For example: 'node_id'. |

Applicable for node query. |

SOURCE_NODE |

Provide the DF_NAME of the node from which the edge starts from. |

Applicable for edge query. |

DESTINATION_NODE |

Provide the DF_NAME of the node from which the edge ends to. |

Applicable for edge query. |

SOURCE_KEY_COLUMN_NAME |

Set the value to the column name which has key_column values of the Source Node. For example: 'from_id' |

Applicable for edge query. |

DESTINATION_KEY_COLUMN_NAME |

Set the value to the column name which has key_column values of the Destination Node. For example: 'to_id' |

Applicable for edge query. |

ACTIVE |

Expected values: 'Y'/'N'. Set the value to Y to consider ETL and Graph loading. |

Applicable for node and edge queries. |

4. If the source is a .csv file, configure the file path in the fcc_studio_etl_files table.

NOTE |

Ensure that the source .csv file is UTF-8 compatible. |

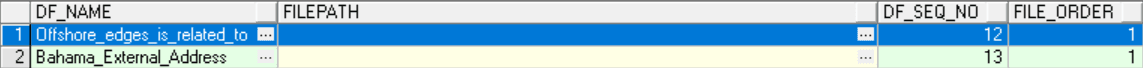

5. Enter the following details in the fcc_studio_etl_files table to add file path:

Column Name |

Description |

|---|---|

DF_NAME |

Indicates the name of the node or edge. |

DF_SEQ_NO |

Indicates the unique sequence ID for each file. |

FILEPATH |

Enter the path where the .csv files are stored. NOTE: If one data frame has multiple .csv files, then make separate entries for all the files. For example: see Figure fcc_studio_etl_files Table . |

FILEORDER |

If data must be imported from multiple files, specify the order in which the files must be read. |