As a data set flows through Big Data Discovery, it is useful to know what happens to it along the way.

- BDD does not update or delete source Hive tables. When BDD runs, it only creates new Hive tables to represent BDD data sets. This way, your source Hive tables remain intact if you want to work with them outside of Big Data Discovery.

- Most of the actions in the BDD data set lifecycle take place because you select them, so you control which actions run. The exception is indexing, which BDD runs automatically.

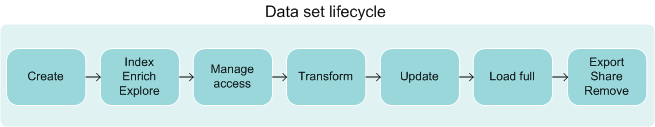

This diagram shows stages in the data set's lifecycle as it flows through BDD:

- Create the data set.

You create a data set in BDD in one of two ways:

- Uploading source data using Studio. You can upload delimited files or import data from a JDBC data source. When you upload source data, BDD creates a corresponding Hive source table based on that data.

- Running the Data Processing CLI to discover Hive tables and create data sets in Studio based on source Hive tables. Each source Hive table has a corresponding data set in Studio.

The Catalog of data sets in Studio displays both types of data sets: those that originate from personally loaded files or a JDBC source, and those that Data Processing loads from source Hive tables.

- Optionally, you can choose to enrich the data set. The data enrichment step in a data processing workflow samples the data set and runs the data enrichment modules against it. For example, it can run the following enrichment modules: Language Detection, Term Frequency/Inverse Document Frequency (TF/IDF), Geocoding Address, Geocoding IP, and Reverse Geotagger. The results of the data enrichment process are stored in the data set in Studio and not in the Hive tables.

Note: The Data Processing (DP) component of BDD optionally performs this step as part of creating the data set.

- Create an index of the data set. The Dgraph process creates binary files, called the Dgraph database, that represent the data set (and other configuration). The Dgraph accesses its database for each data set to respond to Studio queries. Once the data set is indexed, you can explore it.

- Manage access to a data set. If you uploaded a data set, you have private access to it. You can change it to give access to other users. Data sets that originated from Hive are public. Studio's administrators can change these settings.

- Transform the data set. To do this, you use various transformation options in Transform. In addition, you can create a new data set (this creates a new Hive table), and commit a transform script to modify an existing data set.

If you commit a transform script's changes, Studio writes the changes to the Dgraph, and stores the changes in the Dgraph's database for the data set. Studio does not create a new Hive table for the data set. You are modifying the data set in the Dgraph, but not the source Hive table itself.

- Update the data set.

To update the data set, you have several options. For example, if you loaded the data set from a personal data file or imported from a JDBC source, you can reload a newer version of this data set in Catalog. If the data set was loaded from Hive, you can use DP CLI to refresh data in the data set.

You can also use the Load Full Data Set option, which is useful for data sets that represent a sample. If a data set is in a project, you can also configure it for incremental updates with DP CLI.

- Export the data set. When your data set is in a project, you can export it. For example, you can export a data set to HDFS after you have applied transformations to it, so that you can continue working with it using other tools. You can also export a data set and create a new data set in Catalog. Although the diagram shows Export as the last step, you can export the data set at any stage in its lifecycle after you add it to a project.

- Share the data set. At any stage in the data set's lifecycle, you can share the data set with others.

- Remove the data set.

When you delete a data set from Studio, the data set is removed from the Catalog and is no longer accessible in Studio. However, deleting the data set does not remove the corresponding source Hive table that BDD created when it loaded this data set.

BDD does not update or delete original source Hive tables. BDD only creates new Hive tables to represent BDD data sets. You may need to ask your Hive database administrator to remove old tables as necessary to keep the Hive database clean. If the Hive database administrator deletes a Hive table from the database, the Hive Table Detector detects that the table was deleted and removes the corresponding data set from the Catalog in Studio. The Hive Table Detector is a utility in the Data Processing component of BDD.
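The removal rules above can be easier to follow as a small model. The following Python sketch is a toy, in-memory illustration only: it does not call BDD, Studio, Hive, or the DP CLI, and every name in it (`ToyBdd`, `upload`, `discover`, `delete_data_set`, `drop_hive_table`, `run_table_detector`, and the `bdd_` table prefix) is hypothetical. It shows only the relationship between Catalog entries and Hive tables described in this topic.

```python
from dataclasses import dataclass, field


@dataclass
class ToyBdd:
    """Toy model of the Catalog/Hive relationship. Not a BDD API."""

    hive_tables: set = field(default_factory=set)
    # Maps a data set name in the Catalog to its backing Hive table.
    catalog: dict = field(default_factory=dict)

    def upload(self, name: str) -> str:
        # Uploading source data creates a new Hive table plus a data set.
        # The "bdd_" prefix is an assumption made for this illustration.
        table = f"bdd_{name}"
        self.hive_tables.add(table)
        self.catalog[name] = table
        return table

    def discover(self, table: str) -> None:
        # Discovery creates a data set for an existing source Hive table.
        if table in self.hive_tables:
            self.catalog[table] = table

    def delete_data_set(self, name: str) -> None:
        # Deleting in Studio removes the Catalog entry only;
        # the Hive table is left untouched.
        self.catalog.pop(name, None)

    def drop_hive_table(self, table: str) -> None:
        # Performed by the Hive database administrator, outside BDD.
        self.hive_tables.discard(table)

    def run_table_detector(self) -> list:
        # Removes data sets whose backing Hive table no longer exists.
        orphaned = [n for n, t in self.catalog.items()
                    if t not in self.hive_tables]
        for name in orphaned:
            del self.catalog[name]
        return orphaned


bdd = ToyBdd(hive_tables={"sales"})
bdd.discover("sales")
claims_table = bdd.upload("claims")

bdd.delete_data_set("claims")        # Catalog entry goes away...
print(claims_table in bdd.hive_tables)  # ...but the Hive table remains

bdd.drop_hive_table("sales")         # administrator cleans up Hive
print(bdd.run_table_detector())      # the detector removes the data set
```

In this model, deleting a data set never touches `hive_tables`, which is why cleanup of old tables remains a task for the Hive database administrator.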