This chapter details the steps involved in executing the data loaders that are available within OFSAA. Data loaders move data from the staging layer to the processing layer.

Topics:

· Historical Rates Data Loader

· Stage Instrument Table Loader

· Transaction Summary Table Loader

· Pricing Management Transfer Rate Population Procedure

· Fact Ledger Stat Transformation

· Financial Element Dimension Population

· Material Currency Identifier

The Dimension Loader procedure populates dimension members, attributes and hierarchies from Staging dimension tables into dimension tables registered within OFSAAI AMHM framework. Users can view the members and hierarchies loaded by the dimension loader through AMHM screens.

NOTE |

The dimension loaders (drmDataLoader, STGDimDataLoader, and simpledimloader) load strings into one target language only. The target language is derived from the database login session using USERENV. If the Hierarchy Filter does not reflect changes made to the underlying Hierarchy, refer to Support Note 1586342.1. |

Topics:

· Enhancements to Support Alphanumeric Code in Dimensions

· Tables that are Part Of Staging

· Populating STG_<DIMENSION>_HIER_INTF Table

· Executing the Dimension Load Procedure

· Executing the Dimension Load Procedure using Master Table approach

· Updating DIM_<DIMENSION>_B <Dimension>_Code column with values from DIM_<DIMENSION>_ATTR table

· Truncate Stage Tables Procedure

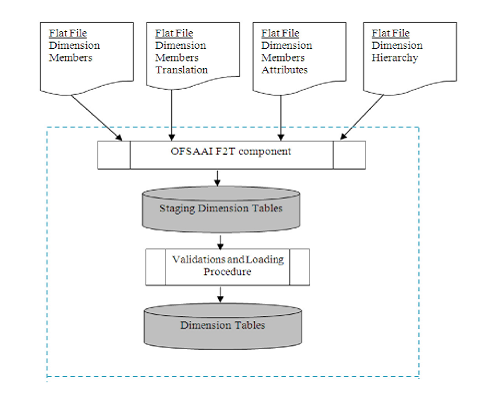

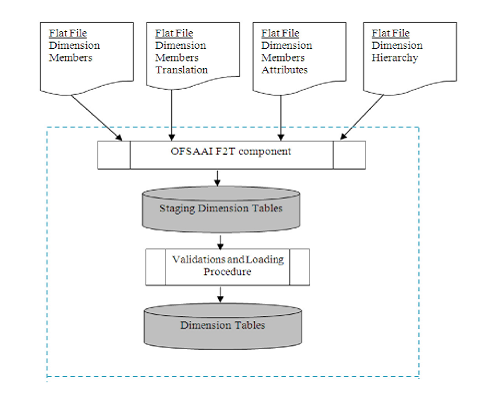

Figure: Dimension Loader Overview

The dimension loader is used to:

· Load dimension members and their attributes from the staging area into Dimension tables that are registered with the OFSAAI AMHM framework.

· Create hierarchies in AMHM.

· Load hierarchical relationships between members within hierarchies from the staging area into AMHM.

Some of the features of the dimension loader are:

· Multiple hierarchies can be loaded from staging tables.

· Members and hierarchies are validated in the same way as in the AMHM screens.

· Members can be loaded incrementally or fully synchronized with the staging tables.

NOTE |

Dimension Loaders and UIs support capturing an alphanumeric code in addition to the numeric code. |

The following Data Model components are required to support dimension member code storage; changes in {6.0/7.3.0/7.3.1} are as follows:

· Release 7.3.1: Dimension configuration through manual updates to the REV_DIMENSIONS_B columns MEMBER_DATA_TYPE_CODE and MEMBER_CODE_COLUMN. (See also: OFSAAI Installation & Configuration Guide 7.3 and AI Administration Guide.)

· Release 6.0 (7.3): Stage Dimension Interface Table alphanumeric member code column (v_<DIM>_code).

· Release 6.0 (7.3): The Stage Dimension Loader Program can directly load alphanumeric member codes.

· Release 6.1.1: Some new columns are added to Staging & Processor tables as a part of FSDF. These are not required by EPM applications and not part of the T2T or FSI_D tables.

For further details on display of member codes in the user interfaces, see the OFSAAI User Guide.

Dimension data is stored in the following set of tables:

· STG_<DIMENSION>_B_INTF: Stores leaf and node member codes within the dimension.

· STG_<DIMENSION>_TL_INTF: Stores names of leaf and node and their translations.

· STG_<DIMENSION>_ATTR_INTF: Stores attribute values for the attributes of the dimension.

· STG_<DIMENSION>_HIER_INTF: Stores parent-child relationship of members and nodes that are part of hierarchies.

· STG_ORG_UNIT_B_INTF: Stores leaf and node member codes within the organization unit dimension.

· STG_ORG_UNIT_TL_INTF: Stores names of leaf and node and their translations for the organization unit dimension.

· STG_ORG_UNIT_ATTR_INTF: Stores attribute values for the attributes of the organization unit dimension.

· STG_ORG_UNIT_HIER_INTF: Stores parent-child relationship of members and nodes that are part of hierarchies for the organization unit dimension.

· STG_HIERARCHIES_INTF: Stores master information related to hierarchies.

Data present in the above set of staging dimension tables is loaded into the following set of dimension tables:

· DIM_<DIMENSION>_B: Stores leaf and node member codes within the dimension.

· DIM_<DIMENSION>_TL: Stores names of leaf and node and their translations.

· DIM_<DIMENSION>_ATTR: Stores attribute values for the attributes of the dimension.

· DIM_<DIMENSION>_HIER: Stores parent-child relationship of members and nodes that are part of hierarchies.

· REV_HIERARCHIES: Stores hierarchy related information.

· REV_HIERARCHY_LEVELS: Stores levels of the hierarchy.

· REV_HIER_DEFINITIONS: Stores definitions of the hierarchies.

Staging tables are present for all key dimensions that are configured within the OFSAAI framework. For any custom key dimension that is added by the Client, the respective staging dimension tables, such as STG_<DIMENSION>_B_INTF, STG_<DIMENSION>_TL_INTF, STG_<DIMENSION>_ATTR_INTF, and STG_<DIMENSION>_HIER_INTF, have to be created in the ERwin model.

The STG_<DIMENSION>_HIER_INTF table is designed to hold hierarchy structure. The hierarchy structure is maintained by storing the parent child relationship in the table. In the following hierarchy there are 4 levels. The first level node is 100, which is the Total Rollup. The Total Rollup node will have the N_PARENT_DISPLAY_CODE and N_CHILD_DISPLAY_CODE as the same.

| Column Name | Column Description |
| --- | --- |
| V_HIERARCHY_OBJECT_NAME | Stores the name of the hierarchy. |
| N_PARENT_DISPLAY_CODE | Stores the parent Display Code. |
| N_CHILD_DISPLAY_CODE | Stores the child Display Code. |
| N_DISPLAY_ORDER_NUM | Determines the order in which the structure (nodes, leaves) of the hierarchy should be displayed. This is used by the UI while displaying the hierarchy. There is no validation to check if the values in the column are in proper sequence. |
| V_CREATED_BY | Stores the created by user. Hard coded as -1. |
| V_LAST_MODIFIED_BY | Stores the last modified by user. Hard coded as -1. |

Figure: Hierarchy Structure

Sample Data

| V_HIERARCHY_OBJECT_NAME | N_PARENT_DISPLAY_CODE | N_CHILD_DISPLAY_CODE | N_DISPLAY_ORDER_NUM | V_CREATED_BY | V_LAST_MODIFIED_BY |
| --- | --- | --- | --- | --- | --- |
| INCOME STMT | 100 | 100 | 1 | -1 | -1 |
| INCOME STMT | 100 | 12345678901247 | 2 | -1 | -1 |
| INCOME STMT | 12345678901247 | 12345678901255 | 1 | -1 | -1 |
| INCOME STMT | 12345678901255 | 10001 | 1 | -1 | -1 |
| INCOME STMT | 12345678901255 | 10002 | 2 | -1 | -1 |
| INCOME STMT | 12345678901247 | 12345678901257 | 2 | -1 | -1 |
| INCOME STMT | 12345678901257 | 10006 | 1 | -1 | -1 |
| INCOME STMT | 12345678901257 | 10007 | 2 | -1 | -1 |
| INCOME STMT | 100 | 12345678901250 | 3 | -1 | -1 |
| INCOME STMT | 12345678901250 | 12345678901262 | 2 | -1 | -1 |
| INCOME STMT | 12345678901262 | 30005 | 1 | -1 | -1 |
| INCOME STMT | 12345678901250 | 12345678901264 | 1 | -1 | -1 |
| INCOME STMT | 12345678901264 | 30006 | 1 | -1 | -1 |
| INCOME STMT | 12345678901264 | 30007 | 2 | -1 | -1 |
| INCOME STMT | 12345678901264 | 30008 | 3 | -1 | -1 |
| INCOME STMT | 12345678901264 | 30009 | 4 | -1 | -1 |
| INCOME STMT | 100 | 12345678901268 | 4 | -1 | -1 |
| INCOME STMT | 12345678901268 | 3912228 | 1 | -1 | -1 |
| INCOME STMT | 3912228 | 20020 | 1 | -1 | -1 |
| INCOME STMT | 3912228 | 20021 | 2 | -1 | -1 |
| INCOME STMT | 3912228 | 20022 | 3 | -1 | -1 |
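The parent-child rows of the INCOME STMT sample can be turned into a tree to check the two properties the text calls out: a single Total Rollup row whose parent and child codes are the same, and four levels. The following Python sketch is an illustration only and is not part of the loader. The function names are hypothetical, and the rows are the first five rows of the sample data.

```python
from collections import defaultdict

# (parent display code, child display code, display order), copied from the
# first five rows of the INCOME STMT sample data.
ROWS = [
    (100, 100, 1),
    (100, 12345678901247, 2),
    (12345678901247, 12345678901255, 1),
    (12345678901255, 10001, 1),
    (12345678901255, 10002, 2),
]


def find_roots(rows):
    """Return the codes of rows where parent == child (the Total Rollup convention)."""
    return [parent for parent, child, _ in rows if parent == child]


def children_by_parent(rows):
    """Map each parent code to its children, sorted by display order."""
    grouped = defaultdict(list)
    for parent, child, order in rows:
        if parent != child:  # the self-referencing root row is not an edge
            grouped[parent].append((order, child))
    return {p: [c for _, c in sorted(kids)] for p, kids in grouped.items()}


def depth(node, tree):
    """Count the levels from node down to its deepest leaf, including node."""
    return 1 + max((depth(c, tree) for c in tree.get(node, [])), default=0)


roots = find_roots(ROWS)
tree = children_by_parent(ROWS)
print(roots)                  # [100]
print(depth(roots[0], tree))  # 4
```

A hierarchy with more than one self-referencing row would show up here as `len(roots) > 1`.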

Column REV_DIMENSIONS_B.MEMBER_CODE_COLUMN

In release 7.3.1, with the introduction of alphanumeric support, the REV_DIMENSIONS_B.MEMBER_CODE_COLUMN column becomes important for successful execution of the dimension loader program and subsequent T2Ts. The value in this column should be a valid code column from the relevant DIM_<DIMENSION>_B (key dimension) or FSI_<DIM>_CD (simple dimension) table. The Leaf_registration procedure populates this column, so the value provided to that procedure should be the correct DIM_<DIM>_B.<DIM>_CODE or FSI_<DIM>_CD.<DIM>_DISPLAY_CD column. Setting this ensures that the values in that column are displayed as the Alphanumeric Code in the UI for both numeric and alphanumeric dimensions. Configuring an alphanumeric dimension also requires a manual update of the REV_DIMENSIONS_B.MEMBER_DATA_TYPE_CODE column.

For more information, see OFS AAI Installation and Configuration Guide.

This procedure performs the following functions:

· Gets the list of source and target dimension tables. The dimension tables for a given dimension are stored in REV_DIMENSIONS_B table. The stage tables for a given dimension are stored in FSI_DIM_LOADER_SETUP_DETAILS.

· The Synchronize Flag parameter can be used to completely synchronize data between the stage and the dimension tables. If the flag is 'Y', members in the dimension table that are not present in the staging table are deleted. If the flag is 'N', the program merges the data between the staging and dimension tables.

· The Loader program validates the members/attributes before loading them.

The program compares the number of records in the base members table (STG_<DIMENSION>_B_INTF) and the translation members table (STG_<DIMENSION>_TL_INTF). The program exits if the counts do not match.

If values for mandatory attributes are not provided in the staging tables, the loader program populates the default value (as specified in the attribute maintenance screens within AMHM of OFSAAI) in the dimension table.

The program validates the data type of each attribute value. For example, an attribute that is configured as 'NUMERIC' cannot have non-numeric values.

The Dimension Loader validates the attributes against their corresponding dimension table. If any of the attributes is not present, an error message is logged in the FSI_MESSAGE_LOG table.

The Dimension Loader checks the number of records in Dim_<Dim_Name>_B and Dim_<Dim_Name>_TL for the language. If any mismatch is found, an error is logged and loading is aborted.

· If all the member-level validations are successful, the loader program inserts the data from the staging tables into the dimension tables.

Note: In release 6.0 (7.3), the stage dimension loader program was modified to move alphanumeric code values from STG_<DIMENSION>_B_INTF.V_<DIM>_CODE to the DIM_<DIM>_B.<DIM>_CODE column. Previously, the DIM_<DIM>_B.<DIM>_CODE column was populated from the code attributes using the fn_updateDimensionCode procedure. With this enhancement, users can load alphanumeric values directly.

The fn_updateDimensionCode procedure is still available for users who do not want to make any changes to their ETL procedures for populating the dimension staging tables (for example, STG_<DIMENSION>_B_INTF and STG_<DIMENSION>_ATTR_INTF).

· After this, the loader program loads hierarchy data from staging into hierarchy tables.

· In case of hierarchy data the loader program validates if the members used in the hierarchy are present in the STG_<DIMENSION>_B_INTF table.

· The program validates if the hierarchy contains multiple root nodes and logs error messages accordingly, as multiple root nodes are not supported.

· The Dimension Loader checks hierarchy names for special characters. A hierarchy whose name contains special characters is not loaded.

· The following special characters are not allowed in a hierarchy name:

^&\'
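Hierarchy names can be screened for these four characters before the staging table is populated. The following Python sketch is only an illustration of such a pre-check; the function name is hypothetical, and the loader's own check may differ in detail.

```python
# Characters the text lists as not allowed in a hierarchy name: ^ & \ '
DISALLOWED = set("^&\\'")


def invalid_characters(hierarchy_name):
    """Return the disallowed characters found in the name, sorted."""
    return sorted(set(hierarchy_name) & DISALLOWED)


print(invalid_characters("INCOME STMT"))  # []
print(invalid_characters("P&L ^ 2010"))   # ['&', '^']
```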

After execution of the dimension loader, the user must execute the reverse population procedure to populate OFSA legacy dimension and hierarchy tables.

Dimension Leaf values can have a maximum of 14 digits.

Only 26 key (processing) dimensions are allowed in the database. Examples of seeded key leaf types are Common COA ID, Organizational Unit ID, GL Account ID, Product ID, Legal Entity ID.

The maximum number of columns that the Oracle database allows in a unique index is 32. This is the overriding constraint. After subtracting IDENTITY_CODE, YEAR_S, ACCUM_TYPE_CD, CONSOLIDATION_CD, and ISO_CURRENCY_CD, this leaves 27 columns available for Key Processing Dimensions (leaf dimensions). BALANCE_TYPE_CD is now part of the unique index so this brings the maximum number of leaf columns down to 26.
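The arithmetic behind the limit of 26 can be written out as a short Python check. The column names are the ones listed in the preceding paragraph; the variable names are illustrative only.

```python
MAX_UNIQUE_INDEX_COLUMNS = 32  # Oracle limit quoted in the text

# Columns the text subtracts first.
FIXED_COLUMNS = [
    "IDENTITY_CODE",
    "YEAR_S",
    "ACCUM_TYPE_CD",
    "CONSOLIDATION_CD",
    "ISO_CURRENCY_CD",
]
# Column that later became part of the unique index.
ADDED_COLUMNS = ["BALANCE_TYPE_CD"]

leaf_columns_before = MAX_UNIQUE_INDEX_COLUMNS - len(FIXED_COLUMNS)
leaf_columns_now = leaf_columns_before - len(ADDED_COLUMNS)

print(leaf_columns_before)  # 27
print(leaf_columns_now)     # 26
```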

An integrity check is performed during dimension data loading to confirm whether dimension members are included in a hierarchy definition. Members that are included should not be deleted from the dimension member pool. If such members are deleted or made inactive as part of the data load, the validation returns an error message stating that a member used as part of a hierarchy cannot be deleted.

To override this validation, pass the additional parameter force_member_delete to the Dimension Data Loader program (fn_drmDataLoader). The parameter can be set to Y or N. Y overrides the used-in-hierarchy dependency validation. N is the default behavior and performs the validation check to confirm whether members are used in a hierarchy.

Below is the function:

function fn_drmDataLoader(batch_run_id varchar2,

as_of_date varchar2,

pDimensionId varchar2,

pSynchFlag char default 'Y',

force_member_delete char default 'N')

The FSI_DIM_LOADER_SETUP_DETAILS table should have a record for each dimension that has to be loaded using the dimension loader. The table contains seeded entries for key dimensions that are seeded with the application.

The following are sample entries in the setup table:

| Column Name | Description | Sample Value |
| --- | --- | --- |
| n_dimension_id | Stores the Dimension ID. | 1 |
| v_intf_b_table_name | Stores the name of the Staging Base table. | Stg_org_unit_b_intf |
| v_intf_member_column | Stores the name of the Staging Member column. | V_org_unit_id |
| v_intf_tl_table_name | Stores the name of the Staging Translation table. | Stg_org_unit_tl_intf |
| v_intf_attr_table_name | Stores the name of the Staging Member Attribute table. | Stg_org_unit_attr_intf |
| v_intf_hier_table_name | Stores the name of the Staging Hierarchy table. | Stg_org_unit_hier_intf |
| d_start_time | Start time of loader - updated by the loader program. | |
| d_end_time | End time of loader - updated by the loader program. | |
| v_comments | Stores Comments. | Dimension loader for organization unit. |
| v_status | Status updated by the Loader program. | |
| v_intf_member_name_col | Stores the name of the Member. | V_org_unit_name |
| v_gen_skey_flag | Flag to indicate if a surrogate key needs to be generated for alphanumeric codes in the staging. Applicable only for loading dimension data from master tables. Not applicable for loading dimension data from interface tables. Note: Although the application UI may display an alphanumeric dimension member ID, the numeric member ID is the value stored in member-based assumption rules, processing results, and audit tables. Implications for Object Migration: Numeric dimension member IDs should be the same in both the Source and Target environments, to ensure the integrity of any member-based assumptions you wish to migrate. If you use the Master Table approach for loading dimension data and have set it up to generate surrogate keys for members, this can result in differing IDs between the Source and Target and is therefore a concern if you intend to migrate objects that depend on these IDs. | |
| v_stg_member_column | Name of the column that holds the member code in the staging table. Applicable for loading dimension data from both master tables and interface tables. The sample value, v_org_unit_code, appears to be the alphanumeric code. | v_org_unit_code |
| v_stg_member_name_col | Name of the column that holds the member name in the staging table. Applicable only for loading dimension data from master tables. Not applicable for loading dimension data from interface tables. | |
| v_stg_member_desc_col | Name of the column that holds the description in the staging table. Applicable only for loading dimension data from master tables. Not applicable for loading dimension data from interface tables. | |

NOTE |

Ensure that FSI_DIM_LOADER_SETUP_DETAILS.V_STG_MEMBER_COLUMN is set as shown in the following table for the Legal Entity and Customer dimensions. |

| Dimension Loader Approach | V_STG_MEMBER_COLUMN for Legal Entity | V_STG_MEMBER_COLUMN for Customer |
| --- | --- | --- |
| Using Interface Table (fn_drmDataLoader) | V_LV_CODE | V_CUST_REF_CODE |
| Using Master Table (fn_STGDimDataLoader) | V_ENTITY_CODE | V_PARTY_ID |

You can execute this procedure either from SQL*Plus or from within a PL/SQL block or from the Batch Maintenance window within OFSAAI framework.

To run the procedure from SQL*Plus, log in to SQL*Plus as the Schema Owner. The function takes five parameters: Batch Run Identifier, As of Date, Dimension Identifier, Synchronize Flag (optional), and Force Member Delete (optional). The syntax for calling the procedure is:

function fn_drmDataLoader(batch_run_id varchar2,

as_of_date varchar2,

pDimensionId varchar2,

pSynchFlag char default 'Y',

force_member_delete char default 'N')

where

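· BATCH_RUN_ID is any string that identifies the executed batch.

· AS_OF_DATE is the as-of date in the format YYYYMMDD.

· pDIMENSIONID is the dimension ID.

· pSynchFlag indicates whether a complete synchronization of data between the staging and dimension tables is required. The default value is 'Y'.

· force_member_delete indicates whether the used-in-hierarchy dependency validation is overridden ('Y') or performed ('N'). The default value is 'N'.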

NOTE |

With Synch flag N, data is moved from the Stage tables to the Dimension tables as an append. You can provide a combination of new Dimension records and records that have changed: new records are inserted and changed records are updated in the Dimension table. With Synch flag Y, the Stage table data completely replaces the Dimension table data. Checks are in place so that stage_dimension_loader applies validations similar to those that the UI provides. The Data Loader performs a dependency check before a member is deleted: it checks whether any members used in a Hierarchy are not present in the DIM_<DIM>_B table. This is similar to trying to delete, from the UI, a member that is used in a Hierarchy definition. You are expected to remove or delete such Hierarchies from the UI before deleting a member. |

For Example:

Declare

num number;

Begin

num := fn_drmDataLoader ('INFODOM_20100405','20100405' ,1,'Y','N');

End;
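The difference between the two Synchronize Flag values can be modelled on plain dictionaries. This Python sketch is an illustration under simplifying assumptions: members are reduced to code and name pairs, the hierarchy dependency check is left out, and the function name and sample members are hypothetical.

```python
def apply_load(dimension, stage, synch_flag="Y"):
    """Return the dimension members after a load, as a new dict of code -> name.

    'Y' models full synchronization: the result mirrors the stage data.
    'N' models the merge: new codes are inserted, existing codes are updated,
    and members missing from the stage data are left untouched.
    """
    if synch_flag == "Y":
        return dict(stage)
    if synch_flag == "N":
        merged = dict(dimension)
        merged.update(stage)
        return merged
    raise ValueError("synch_flag must be 'Y' or 'N'")


dimension = {1: "Retail", 2: "Corporate", 3: "Treasury"}
stage = {2: "Corporate Banking", 4: "Wealth"}

print(apply_load(dimension, stage, "N"))
print(apply_load(dimension, stage, "Y"))
```

With 'N', members 1 and 3 survive, member 2 is renamed, and member 4 is added. With 'Y', only members 2 and 4 remain.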

To execute the procedure from the OFSAAI Batch Maintenance, create a new Batch with the Task as TRANSFORM DATA and specify the following parameters for the task:

· Datastore Type: Select appropriate datastore from list

· Datastore Name: Select appropriate name from the list

· IP address: Select the IP address from the list

· Rule Name: fn_drmDataLoader

· Parameter List: Dimension ID, Synchronize Flag

The fn_drmdataloader function calls STG_DIMENSION_LOADER package which loads data from the stg_<dimension>_hier_intf to the dim_<dimension>_hier table.

From Release 8.0, RUNIT.sh utility is available to resave the UMM Hierarchy Objects. The data for AMHM hierarchies which is stored in dim_<dimension>_hier table is changed due to the fn_drmdataloader function, so the RUNIT.sh utility is executed to refresh the UMM hierarchies which have been implicitly created due to the AMHM hierarchies. This file resides under ficdb/bin area.

To run the utility directly from the console:

1. Navigate to $FIC_DB_HOME/bin on the OFSAAI FIC DB tier to execute the RUNIT.sh file.

The following parameters need to be provided:

§ INFODOM: Specify the information domain name whose hierarchies are to be refreshed. This is the first parameter and is mandatory.

§ USERID: Specify the AAI user ID of the user performing this activity. This is the second parameter and is mandatory.

§ HIERARCHY: Specify the hierarchy code to be refreshed. If multiple hierarchies need to be refreshed, provide the codes as tilde (~) separated values. This is the third parameter and is optional.

For example: ./RUNIT.sh,<INFODOM>,<USERID>,<CODE1~CODE2~CODE3>

NOTE |

In case the third parameter is not specified, then all the hierarchies present in the infodom will be refreshed. |

To run the utility through the Operations module:

1. Navigate to the Operations module and define a batch.

2. Add a task by selecting the component as RUN EXECUTABLE.

3. Under the Dynamic Parameter List panel, specify ./RUNIT.sh,<INFODOM>,<USERID>,<CODE1~CODE2~CODE3> in the Executable field.

After saving the Batch Definition, execute the batch to resave the UMM Hierarchy Objects.
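The value for the Executable field follows a fixed pattern: comma-separated parameters, with the optional hierarchy codes joined by tildes. The following Python sketch assembles such a string. The function name and the sample information domain, user ID, and hierarchy codes are hypothetical.

```python
def runit_executable(infodom, user_id, hierarchy_codes=None):
    """Build the Executable field value for RUNIT.sh.

    hierarchy_codes is optional; when omitted, the third parameter is left out,
    which the text says refreshes all hierarchies in the infodom.
    """
    parts = ["./RUNIT.sh", infodom, user_id]
    if hierarchy_codes:
        parts.append("~".join(hierarchy_codes))
    return ",".join(parts)


print(runit_executable("MYINFODOM", "SYSADMN", ["HIER1", "HIER2"]))
# ./RUNIT.sh,MYINFODOM,SYSADMN,HIER1~HIER2
print(runit_executable("MYINFODOM", "SYSADMN"))
# ./RUNIT.sh,MYINFODOM,SYSADMN
```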

The text and explanation for each of these exceptions follows. If you call the procedure from a PL/SQL block you may want to handle these exceptions appropriately so that your program can proceed without interruption.

· Exception 1: Error. errMandatoryAttributes

This exception occurs when the stage Loader program cannot find a default value for mandatory attributes.

· Exception 2: Error. errAttributeValidation

This exception occurs when there is a data type mismatch between the attribute value and the data type configured for the attribute.

· Exception 3: Error. errAttributeMemberMissing

This exception occurs when there is a mismatch in the record count between the member's base and translation tables.
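The three conditions can be modelled as small pre-load checks. The following Python sketch is an illustration only: the exception names come from the list above, while the class, the function names, and the sample attribute names are hypothetical and do not reflect how the loader package is implemented.

```python
class LoaderError(Exception):
    """Carries one of the exception names listed above as its message."""


def check_counts(base_rows, translation_rows):
    """Base and translation tables must hold the same number of records."""
    if len(base_rows) != len(translation_rows):
        raise LoaderError("errAttributeMemberMissing")


def resolve_mandatory(values, mandatory, defaults):
    """Fill missing mandatory attributes from defaults, or fail if none exists."""
    resolved = dict(values)
    for name in mandatory:
        if resolved.get(name) is None:
            if name not in defaults:
                raise LoaderError("errMandatoryAttributes")
            resolved[name] = defaults[name]
    return resolved


def check_numeric(values, numeric_names):
    """Attributes configured as NUMERIC must hold values that parse as numbers."""
    for name in numeric_names:
        value = values.get(name)
        if value is None:
            continue
        try:
            float(value)
        except ValueError:
            raise LoaderError("errAttributeValidation")


def first_error(check, *args):
    """Run a check and return the exception name it raised, or None."""
    try:
        check(*args)
    except LoaderError as exc:
        return str(exc)
    return None


print(first_error(check_counts, ["A", "B"], ["A"]))
print(first_error(resolve_mandatory, {}, ["REGION"], {}))
print(first_error(check_numeric, {"LIMIT": "12x"}, ["LIMIT"]))
```

A calling program could use the returned name to decide whether to stop or to continue with the next dimension.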

FSI_DIM_LOADER_SETUP_DETAILS table should have a record for each dimension that has to be loaded. The table contains entries for key dimensions that are seeded with the application.

The following columns must be populated for user-defined Dimensions.

v_stg_member_column

v_stg_member_name_col

v_stg_member_desc_col

NOTE |

Before running DRM_DIMENSION_LOADER for Legal Entity dimension, update the value of FSI_DIM_LOADER_SETUP_DETAILS.V_STG_MEMBER_COLUMN as V_LV_CODE (which is the column available in STG_LEGAL_ENTITY_B_INTF table). |

Additionally, the FSI_DIM_ATTRIBUTE_MAP table should be configured with column attribute mapping data. This table maps the columns from a given master table to attributes.

| Column Name | Description |
| --- | --- |
| N_DIMENSION_ID | Stores the Dimension ID. |
| V_STG_TABLE_NAME | Holds the source Stage Master table. |
| V_STG_COLUMN_NAME | Holds the column from the master table. |
| V_ATTRIBUTE_NAME | Holds the name of the attribute the column maps to. |
| V_UPDATE_B_CODE_FLAG | Indicates if the attribute value can be used to update the code column in the DIM_<Dimension>_B table. Note: fn_STGDimDataLoader does not use FSI_DIM_ATTRIBUTE_MAP.V_UPDATE_B_CODE_FLAG. |

You can execute this procedure from SQL*Plus, from within a PL/SQL block, or from the Batch Maintenance window within the OFSAAI framework. To run the procedure from SQL*Plus, log in to SQL*Plus as the Schema Owner. The function takes five parameters: Batch Run Identifier, As of Date, Dimension Identifier, MIS-Date Required Flag, and Synchronize Flag (optional). The syntax for calling the procedure is:

function fn_STGDimDataLoader(batch_run_id varchar2,

as_of_date varchar2,

pDimensionId varchar2,

pMisDateReqFlag char default 'Y',

pSynchFlag char default 'N')

where

· BATCH_RUN_ID is any string that identifies the executed batch.

· AS_OF_DATE is the as-of date in the format YYYYMMDD.

· pDIMENSIONID is the dimension ID.

· pMisDateReqFlag indicates whether AS_OF_DATE should be used in the WHERE clause to filter the data.

· pSynchFlag indicates whether a complete synchronization of data between the staging and fusion tables is required. The signature above declares a default value of 'N'.

For Example

Declare

num number;

Begin

num := fn_STGDimDataLoader ('INFODOM_20100405','20100405' ,1,'Y','Y' );

End;
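The loader functions take AS_OF_DATE as a string in YYYYMMDD format. A calling script can check the string before invoking a loader; the following Python sketch shows one way to do that. The function name is hypothetical, and the loader's own handling of malformed dates is not described in this chapter.

```python
from datetime import datetime


def is_valid_as_of_date(text):
    """Return True if text is exactly eight digits forming a real calendar date."""
    if len(text) != 8 or not text.isdigit():
        return False
    try:
        datetime.strptime(text, "%Y%m%d")
    except ValueError:
        return False
    return True


print(is_valid_as_of_date("20100405"))    # True
print(is_valid_as_of_date("2010-04-05"))  # False
print(is_valid_as_of_date("20100230"))    # False
```

The explicit length and digit check is there because `strptime` alone also accepts shorter strings such as single-digit months.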

To execute the procedure from OFSAAI Batch Maintenance, create a new Batch with the Task as TRANSFORM DATA and specify the following parameters for the task:

· Datastore Type: Select appropriate datastore from list

· Datastore Name: Select appropriate name from the list

· IP address: Select the IP address from the list

· Rule Name: fn_STGDimDataLoader

· Parameter List: Dimension ID, MIS Date Required Flag, Synchronize Flag

Clients may face a problem while loading the customer dimension into AMHM using the Master Table approach.

Configuring the setup table for the CUSTOMER dimension can be confusing when dealing with attributes such as FIRST_NAME, MIDDLE_NAME, and LAST_NAME.

Most clients would like FIRST_NAME, MIDDLE_NAME, and LAST_NAME together to form the name of the member within the customer dimension.

Currently, STG_DIMENSION_LOADER does not allow concatenation of columns.

Moreover, the concatenation might not produce unique values.

The following options address this problem:

1. Create a view on the STG_CUSTOMER_MASTER table with FIRST_NAME, MIDDLE_NAME, and LAST_NAME concatenated, and identify this column as NAME.

2. Configure the name column from the view in FSI_DIM_LOADER_SETUP_DETAILS.

3. Increase the size of the DIM_CUSTOMER_TL.NAME column.

4. Disable the unique index on DIM_CUSTOMER_TL.NAME, or append Customer_code to the NAME column.

5. The NAME column will be populated into the DIM_CUSTOMER_TL.NAME column.

Populate customer_code into the DIM_CUSTOMER_TL.NAME column.
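The effect of concatenating the name parts and appending the customer code can be illustrated outside the database. In this Python sketch the function name, the separator, and the parenthesised code suffix are assumptions made for the example; the view itself would express the same idea in SQL.

```python
def member_name(first_name, middle_name, last_name, customer_code):
    """Join the non-empty name parts and append the code to keep names unique."""
    parts = [p.strip() for p in (first_name, middle_name, last_name) if p and p.strip()]
    return " ".join(parts) + " (" + customer_code + ")"


print(member_name("Ann", "", "Lee", "C001"))   # Ann Lee (C001)
print(member_name("Ann", None, "Lee", "C002")) # Ann Lee (C002)
```

Two customers with identical names still receive distinct member names because the codes differ, which is the reason for appending the code when the unique index on the name column is kept.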

The stage dimension loader procedure does not insert or update the <Dimension>_Code column in the Dim_<Dimension>_B table. This section explains an alternate method for updating that column. The method is retained to accommodate implementations that predate the enhancement that loads the code directly into the dimension table instead of from the attribute table. It is not recommended for new installations.

Steps to be followed

1. A new attribute should be created in the REV_DIM_ATTRIBUTES_B / TL table.

NOTE |

You should use the existing CODE attribute for the seeded dimensions, for example, PRODUCT CODE, COMMON COA CODE, and so on. |

2. The fsi_dim_attribute_map table should be populated with values.

The following columns must be populated:

§ N_DIMENSION_ID (Dimension id)

§ V_ATTRIBUTE_NAME (The attribute name)

§ V_UPDATE_B_CODE_FLAG (This flag should be 'Y'). Any given dimension can have only one attribute with V_UPDATE_B_CODE_FLAG as 'Y'. This should only be specified for the CODE attribute for that dimension.

Example:

| Column Name | Sample Value |
| --- | --- |
| N_DIMENSION_ID | 4 |
| V_ATTRIBUTE_NAME | 'PRODUCT_CODE' |
| V_UPDATE_B_CODE_FLAG | 'Y' |
| V_STG_TABLE_NAME | 'stg_product_master' |
| V_STG_COLUMN_NAME | 'v_prod_code' |

NOTE |

The values in V_STG_TABLE_NAME and V_STG_COLUMN_NAME are not used by the fn_updateDimensionCode procedure. However, these fields are defined as NOT NULL and must be populated. |

3. Load STG_<DIMENSION>_ATTR_INTF table with data for the new ATTRIBUTE created.

NOTE |

The attribute values must first be loaded using the stage dimension loader procedure, fn_drmDataLoader, before running this procedure. This procedure will pull values from the DIM_<DIMENSION>_ATTR table. If these rows do not exist for these members prior to running this procedure, the DIM_<DIMENSION>_B.<DIMENSION>_CODE field will not be updated. |

4. Execute the fn_updateDimensionCode function. The function updates the code column with values from the DIM_<DIMENSION>_ATTR table.

You can execute this procedure either from SQL*Plus or from within a PL/SQL block or from the Batch Maintenance window within OFSAAI framework.

To run the procedure from SQL*Plus, log in to SQL*Plus as the Atomic Schema Owner. The function takes three parameters: Batch Run Identifier, As of Date, and Dimension Identifier. The syntax for calling the procedure is:

function fn_updateDimensionCode (batch_run_id varchar2,

as_of_date varchar2,

pDimensionId varchar2)

where

· BATCH_RUN_ID is any string that identifies the executed batch.

· AS_OF_DATE is the as-of date in the format YYYYMMDD.

· pDIMENSIONID is the dimension ID.

For Example

Declare

num number;

Begin

num := fn_updateDimensionCode ('INFODOM_20100405','20100405',1 );

End;

You need to populate a row in FSI_DIM_LOADER_SETUP_DETAILS.

For example, for FINANCIAL ELEM CODE, to insert a row into FSI_DIM_LOADER_SETUP_DETAILS, following is the syntax:

INSERT INTO FSI_DIM_LOADER_SETUP_DETAILS (N_DIMENSION_ID) VALUES ('0'); COMMIT;

To execute the procedure from OFSAAI Batch Maintenance, create a new Batch with the Task as TRANSFORM DATA and specify the following parameters for the task:

· Datastore Type: Select appropriate datastore from list

· Datastore Name: Select appropriate name from the list

· IP address: Select the IP address from the list

· Rule Name: Update_Dimension_Code

· Parameter List: Dimension ID

This procedure performs the following functions:

· The procedure queries the FSI_DIM_LOADER_SETUP_DETAILS table to get the names of the staging table used by the Dimension Loader program.

· The MIS Date option applies only to the Master Table approach (fn_STGDimDataLoader) dimension loader. It is not applicable to dimension data loaded using the standard Dimension Load Procedure (fn_drmDataLoader).

Executing the Truncate Stage Tables Procedure

You can execute this procedure either from SQL*Plus or from within a PL/SQL block or from the Batch Maintenance window within OFSAAI framework.

To run the procedure from SQL*Plus, log in to SQL*Plus as the Schema Owner. The function takes four parameters: Batch Run Identifier, As of Date, Dimension Identifier, and MIS-Date Required Flag. The syntax for calling the procedure is:

function fn_truncateStageTable(batch_run_id varchar2,

as_of_date varchar2,

pDimensionId varchar2,

pMisDateReqFlag char default 'Y')

where

· BATCH_RUN_ID is any string that identifies the executed batch.

· AS_OF_DATE is the as-of date in the format YYYYMMDD.

· pDIMENSIONID is the dimension ID.

· pMisDateReqFlag indicates whether the data is to be deleted for a given MIS Date. The default value is 'Y'.

For Example

Declare

num number;

Begin

num := fn_truncateStageTable ('INFODOM_20100405','20100405' ,1,'Y' );

End;

To execute the procedure from OFSAAI Batch Maintenance, create a new Batch with the Task as TRANSFORM DATA and specify the following parameters for the task:

· Datastore Type: Select appropriate datastore from list

· Datastore Name: Select appropriate name from the list

· IP address: Select the IP address from the list

· Rule Name: fn_truncateStageTable

· Parameter List: Dimension ID, MIS-Date required Flag

Currently the dimension loader program works only for key dimensions.

Simple Dimension Loader provides the ability to load data from stage tables to Simple dimension tables.

For example, the user can load data into FSI_ACCOUNT_OFFICER_CD and FSI_ACCOUNT_OFFICER_MLS using the Simple Dimension Loader program.

Simple dimensions of type 'writable and editable' can use this loading approach. They can be identified by querying for rev_dimensions_b.write_flag = 'Y', rev_dimensions_b.dimension_editable_flag = 'Y', and rev_dimensions_b.simple_dimension_flag = 'Y'.
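The three-flag condition can be expressed as a simple predicate. In this Python sketch each row of rev_dimensions_b is represented as a dictionary keyed by the column names given above; the function name and the sample rows are hypothetical.

```python
REQUIRED_FLAGS = ("write_flag", "dimension_editable_flag", "simple_dimension_flag")


def can_use_simple_loader(row):
    """Return True only when all three flags on the row are 'Y'."""
    return all(row.get(flag) == "Y" for flag in REQUIRED_FLAGS)


editable_simple = {"write_flag": "Y", "dimension_editable_flag": "Y", "simple_dimension_flag": "Y"}
read_only_simple = {"write_flag": "N", "dimension_editable_flag": "Y", "simple_dimension_flag": "Y"}

print(can_use_simple_loader(editable_simple))   # True
print(can_use_simple_loader(read_only_simple))  # False
```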

Topics:

· Creating Simple Dimension Stage Table

· Configuration of Setup Tables

· Executing the Simple Dimension Load Procedure

You can create stage tables for the required simple dimensions by using the following template:

STG_<DIM>_MASTER

| COLUMN_NAME | DATA TYPE | PRIMARY KEY | NULLABLE |
| --- | --- | --- | --- |
| v_<DIM>_display_code | Varchar2(10) | Y | N |
| d_Mis_date | Date | Y | N |
| v_Language | Varchar2(10) | Y | N |
| v_<DIM>_NAME | Varchar2(40) | | N |
| v_Description | Varchar2(255) | | N |
| v_Created_by | Varchar2(30) | | Y |
| v_Modified_by | Varchar2(30) | | Y |

Here is a sample structure:

STG_ACCOUNT_OFFICER_MASTER

| COLUMN_NAME | DATA TYPE | PRIMARY KEY | NULLABLE |
| --- | --- | --- | --- |
| v_acct_officer_display_code | Varchar2(10) | Y | N |
| d_Mis_date | Date | Y | N |
| v_Language | Varchar2(10) | Y | N |
| v_Name | Varchar2(40) | | N |
| v_Description | Varchar2(255) | | N |
| v_Created_by | Varchar2(30) | | Y |
| v_Modified_by | Varchar2(30) | | Y |

Here are some examples:

· Example for the FSI_<DIM>_CD table:

CREATE TABLE <XXXXX>_FSI_<DIM>_CD -- ACME_FSI_ACCT_STATUS_CD

(<DIM>_CD NUMBER(5) -- ACCT_STATUS_CD

,LEAF_ONLY_FLAG VARCHAR2(1)

,ENABLED_FLAG VARCHAR2(1)

,DEFINITION_LANGUAGE VARCHAR2(10)

,CREATED_BY VARCHAR2(30)

,CREATION_DATE DATE

,LAST_MODIFIED_BY VARCHAR2(30)

,LAST_MODIFIED_DATE DATE

,<DIM>_DISPLAY_CD VARCHAR2(10)

);

· Example for FSI_<DIM>_MLS table:

CREATE TABLE <XXXXX>_FSI_<DIM>_MLS -- ACME_FSI_ACCT_STATUS_MLS

(<DIM>_CD NUMBER(5) -- ACCT_STATUS_CD

,LANGUAGE VARCHAR2(10)

,<DIM> VARCHAR2(40) -- ACCT_STATUS

,DESCRIPTION VARCHAR2(255)

,CREATED_BY VARCHAR2(30)

,CREATION_DATE DATE

,LAST_MODIFIED_BY VARCHAR2(30)

,LAST_MODIFIED_DATE DATE

);

NOTE |

FSI_<DIM>_CD and FSI_<DIM>_MLS must follow the same standards as shown above; otherwise the Loader will not work as expected. |

The REV_DIMENSIONS_B table holds the target FSI_<DIM>_CD and FSI_<DIM>_MLS table information in the following columns:

· dimension_id: Holds the id of the simple dimension that needs to be loaded.

· member_b_table_name: Holds the name of the FSI_<DIM>_CD target table. For example, FSI_ACCOUNT_OFFICER_CD

· member_tl_table_name: Holds the name of the FSI_<DIM>_MLS table name. For example, FSI_ACCOUNT_OFFICER_MLS

· member_col: Holds the name of the column for which the surrogate key is generated. For example, ACCOUNT_OFFICER_CD

· member_code_column: Holds the name of the display code column in FSI_<DIM>_CD, which is used as the joining column. For example, ACCOUNT_OFFICER_DISPLAY_CD

· key_dimension_flag: N

· dimension_editable_flag: Y

· write_flag: Y

· simple_dimension_flag: Y

The FSI_DIM_LOADER_SETUP_DETAILS table stores the STG_<DIM>_MASTER table details as follows:

| FSI_DIM_LOADER_SETUP_DETAILS | STG_<DIM>_MASTER |
|---|---|
| N_DIMENSION_ID | <dimension_id>. For example, 617 |
| V_INTF_B_TABLE_NAME | Stage table name. For example, STG_ACCOUNT_OFFICER_MASTER |
| V_GEN_SKEY_FLAG | The default is 'Y', which generates a surrogate key. When 'N', the stage display code column is used as the surrogate key, so the display code must be numeric. For example, FSI_ACCOUNT_OFFICER_CD.ACCOUNT_OFFICER_DISPLAY_CD should be numeric. |
| V_STG_MEMBER_COLUMN | Stores the stage display code column. For example, STG_ACCOUNT_OFFICER_MASTER.v_acct_officer_display_code |
| V_STG_MEMBER_NAME_COL | Stores the stage name column. For example, STG_ACCOUNT_OFFICER_MASTER.v_Name |
| V_STG_MEMBER_DESC_COL | Stores the stage description column name. For example, STG_ACCOUNT_OFFICER_MASTER.v_description |

There are two ways to execute the simple dimension load procedure:

· Running Procedure Using SQL*Plus

To run the procedure from SQL*Plus, log in to SQL*Plus as the Schema Owner. The function signature and a sample call are:

function fn_simpledimloader(batch_run_id VARCHAR2, as_of_date VARCHAR2, pdimensionid VARCHAR2,

pMisDateReqFlag char default 'Y', psynchflag CHAR DEFAULT 'N')

SQLPLUS > declare

result number;

begin

result := fn_simpledimloader ('SimpleDIIM_BATCH1','20121212','730','N','Y');

end;

/

§ BATCH_RUN_ID is any string to identify the executed batch.

§ AS_OF_DATE is in the format YYYYMMDD.

§ pDIMENSIONID is the dimension ID.

§ pSynchFlag identifies whether a complete synchronization of data between the staging and dimension tables is required. The default value is 'N'.

§ pMisDateReqFlag determines whether a filter is placed on the input stage table so that only the records that fall on the given as_of_date are selected. The default value is 'Y'. To consider the complete stage table data, pass 'N'.

NOTE |

With Synch flag N, data is moved from Stage to Dimension tables. Here, an appending process happens. You can provide a combination of new Dimension records plus the data that has undergone change. New records are inserted and the changed data is updated into the Dimension table. With Synch flag Y, the Stage table data will completely replace the Dimension table data. |

· Simple Dimension Load Procedure Using OFSAAI Batch Maintenance.

To execute Simple Dimension Loader from OFSAAI Batch Maintenance, a seeded Batch is provided.

The batch parameters are:

§ Datastore Type: Select the appropriate datastore from list

§ Datastore Name: Select the appropriate name from the list

§ IP address: Select the IP address from the list

§ Rule Name: fn_simpledimloader

§ Parameter List: The dimension ID for which the DT needs to be executed, followed by pMisDateReqFlag and psynchflag.

For example, '730,N,Y'

NOTE |

To find the dimension ID, query REV_DIMENSIONS_B by target table name. For example, for FSI_ACCOUNT_OFFICER_CD: SELECT dimension_id FROM rev_dimensions_b WHERE member_b_table_name = 'FSI_ACCOUNT_OFFICER_CD'. Pass the resulting dimension_id. |

§ psynchflag: The default is 'N'. With Synch flag 'N', data is moved from Stage to Dimension tables as an append. You can provide a combination of new Dimension records plus the data that has undergone change. New records are inserted and the changed data is updated in the Dimension table. With Synch flag 'Y', the Stage table data completely replaces the Dimension table data.
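The two Synch flag behaviours can be modelled with plain dictionaries. This is a conceptual sketch only, not the loader's implementation: `apply_stage` is a hypothetical helper, and each table is reduced to a mapping from display code to member name.

```python
def apply_stage(dimension: dict, stage: dict, synch_flag: str = "N") -> dict:
    """Return the dimension contents after a load.

    Keys are display codes and values are member names (a simplification).
    """
    if synch_flag == "Y":
        # Full synchronization: stage data completely replaces dimension data.
        return dict(stage)
    if synch_flag == "N":
        # Append mode: new codes are inserted, changed codes are updated,
        # and members that are absent from stage are left untouched.
        merged = dict(dimension)
        merged.update(stage)
        return merged
    raise ValueError("synch_flag must be 'Y' or 'N'")


dim = {"A": "Alpha", "B": "Beta"}
stg = {"B": "Beta v2", "C": "Gamma"}
print(apply_stage(dim, stg, "N"))
print(apply_stage(dim, stg, "Y"))
```

With flag 'N' member A survives the load; with flag 'Y' it is dropped because it is not present in the stage data.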

The messages listed below can be viewed in View Log from the UI, or in the FSI_MESSAGE_LOG table from the back end by filtering on the given batch ID. On successful completion of each task, messages are written to the log table.

In the event of failure, the following errors may occur during execution:

· Exception 1: REV_DIMENSIONS_B does not have proper setup details.

Meaning: For a Simple Dimension, write_flag, simple_dimension_flag, and dimension_editable_flag should be 'Y' in REV_DIMENSIONS_B for the given dimension ID.

· Exception 2: The FSI_DIM_LOADER_SETUP_DETAILS table does not have proper setup details.

Meaning: Setup details are not found for the dimension ID.

· Exception 3: The display code column is non-numeric and is being used as a surrogate key.

Meaning: The display code column should be numeric when v_gen_skey_flag is 'N'.
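The three conditions behind these exceptions can be checked before a run. The sketch below is a hypothetical pre-flight helper, not part of the loader: `check_simple_dim_setup` and its message strings are illustrative, and the inputs are plain dictionaries standing in for the REV_DIMENSIONS_B and FSI_DIM_LOADER_SETUP_DETAILS rows.

```python
def check_simple_dim_setup(dim_row, setup_row, stage_codes):
    """Return a list of problems mirroring the three exceptions above.

    dim_row     -- dict of REV_DIMENSIONS_B flags for the dimension, or None
    setup_row   -- dict of FSI_DIM_LOADER_SETUP_DETAILS values, or None
    stage_codes -- iterable of display codes found in the stage table
    """
    problems = []
    flags = ("write_flag", "simple_dimension_flag", "dimension_editable_flag")
    if dim_row is None or any(dim_row.get(f) != "Y" for f in flags):
        problems.append("Exception 1: REV_DIMENSIONS_B flags must all be 'Y'")
    if setup_row is None:
        problems.append("Exception 2: setup details not found for the dimension")
    elif setup_row.get("V_GEN_SKEY_FLAG", "Y") == "N":
        # Without surrogate key generation, the display code is the key itself.
        if not all(str(code).isdigit() for code in stage_codes):
            problems.append("Exception 3: display codes must be numeric")
    return problems


good_dim = {"write_flag": "Y", "simple_dimension_flag": "Y", "dimension_editable_flag": "Y"}
print(check_simple_dim_setup(good_dim, {"V_GEN_SKEY_FLAG": "N"}, ["101", "A02"]))
```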

Historical data for currency exchange rates, interest rates and economic indicators can be loaded into the OFSAA historical rates tables through the common staging area. The T2T component within the OFSAAI framework is used to move data from the Stage historical rate tables into the relevant OFSAA processing tables. After loading the rates, users can view the historical rate data through the OFSAA Rate Management UIs.

Topics:

· Tables Related to Historical Rates

· Executing the Historical Rates Data Loader T2T

Historical rates are stored in the following staging area tables:

· STG_EXCHANGE_RATE_HIST: This staging table contains the historical exchange rates for Currencies used in the system.

· STG_IRC_RATE_HIST: This staging table contains the historical interest rates for the Interest Rate codes used in the system.

· STG_IRC_TS_PARAM_HIST: This staging table contains the historical interest rate term structure parameters, used by the Monte Carlo engine.

· STG_ECO_IND_HIST_RATES: This staging table stores the historical values for the Economic Indicators used in the system.

Historical rates in OFSAA Rate Management are stored in the following processing tables:

· FSI_EXCHANGE_RATE_HIST: This table contains the historical exchange rates for the Currencies used in the system.

· FSI_IRC_RATE_HIST: This table contains the historical interest rates for the Interest Rate codes used in the system.

· FSI_IRC_TS_PARAM_HIST: This table stores the historical interest rate term structure parameters, used by the Monte Carlo engine.

· FSI_ECO_IND_HIST_RATES: This table contains the historical values for the Economic Indicators used in the system.

Data for historical rates commonly comes from external systems. Such data must be converted into the format of the staging area tables. This data can be loaded into the staging area using the F2T component of the OFSAAI framework. Users can view the loaded data by querying the staging tables and various log files associated with the F2T component.

You can launch the Historical Rates Data Loader from the following:

· Interest Rates Summary page

· PL/SQL block

· Operations Batch

To launch from the Interest Rates Summary page:

1. Click the Data Loader icon on the Interest Rates Summary grid toolbar.

2. A warning message will appear: Upload all available Interest Rates and Parameters?

3. Click Yes. The process will load all valid data included in the staging table.

There are four pre-defined T2T mappings configured and seeded in OFSAA for the purpose of loading historical rates. These can be executed from the Batch Maintenance within OFSAAI.

To execute the Historical Exchange Rates Data Loader, create a new Batch and specify the following parameters:

· Datastore Type: Select appropriate datastore from the drop down list

· Datastore Name: Select appropriate name from the list. Generally it is the Infodom name.

· IP address: Select the IP address from the list

· Rule Name: T2T_EXCHANGE_RATE_HIST

· Parameter List: No Parameter is passed. The only parameter is the As of Date selection which is made when the process is executed.

To execute the Historical Interest Rates Data Loader, create a new Batch and specify the following parameters:

· Datastore Type: Select appropriate datastore from the drop down list

· Datastore Name: Select appropriate name from the drop down list

· IP address: Select the IP address from the list

· Rule Name: T2T_IRC_RATE_HIST

· Parameter List: No Parameter is passed. The only parameter is the As of Date selection which is made when the process is executed.

To execute the Historical Term Structure Parameter Data Loader, create a new Batch and specify the following parameters:

· Datastore Type: Select appropriate datastore from list

· Datastore Name: Select appropriate name from the list

· IP address: Select the IP address from the list

· Rule Name: T2T_IRC_TS_PARAM_HIST

· Parameter List: No Parameter is passed. The only parameter is the As of Date selection which is made when the process is executed.

To execute the Historical Economic Indicator Data Loader, create a new Batch and specify the following parameters:

· Datastore Type: Select appropriate datastore from the drop down list

· Datastore Name: Select appropriate name from the drop down list

· IP address: Select the IP address from the list

· Rule Name: T2T_ECO_IND_HIST_RATES

· Parameter List: No Parameter is passed. The only parameter is the As of Date selection which is made when the process is executed.

After executing any of the above batch processes, check the T2T component logs and batch messages to confirm the status of the data load.

The T2T component can fail under the following scenario:

· Unique constraint error: Target table may already contain data with the primary keys that the user is trying to load from the staging area.

The T2T component can only perform Insert operations. If the user needs to perform updates, previously loaded records should be deleted before loading the current records. The function fn_deleteFusionTables deletes the records in the target that are present in the source; that is, it removes rows from the target table if there are matching rows in the Stage table. This function requires entries in the FSI_DELETE_TABLES_SETUP table. Configure the following table for all columns that need to be part of the join between the Stage table and the equivalent target table.

Users can create new or use existing Data Transformations for deleting a Table. The parameters for the Data Transformation are:

· 'Table to be deleted'

· Batch run ID

· As of Date

| Column Name | Column Description | Sample Value |
|---|---|---|
| STAGE_TABLE_NAME | Stores the source table name for forming the join statement | STG_LOAN_CONTRACTS |
| STAGE_COLUMN_NAME | Stores the source column name for forming the join statement | V_ACCOUNT_NUMBER |
| FUSION_TABLE_NAME | Stores the target table name for forming the join statement | FSI_D_LOAN_CONTRACTS |
| FUSION_COLUMN_NAME | Stores the target column name for forming the join statement | ACCOUNT_NUMBER |

NOTE |

Insert rows in FSI_DELETE_TABLES_SETUP for all columns that can be used to join the stage table with the equivalent table. If the join requires other dimension or code tables, a view can be created joining the source table with the respective code tables, and this view can be part of the above setup table. |
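The delete-then-insert pattern can be illustrated with an in-memory SQLite database. This is a sketch of the idea only, not of fn_deleteFusionTables itself: the table and join column names come from the sample values above, while the balance columns, the data, and the way the join condition is assembled are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# n_balance and balance are hypothetical payload columns added for illustration.
conn.executescript("""
    CREATE TABLE stg_loan_contracts (v_account_number TEXT PRIMARY KEY, n_balance REAL);
    CREATE TABLE fsi_d_loan_contracts (account_number TEXT PRIMARY KEY, balance REAL);
    INSERT INTO fsi_d_loan_contracts VALUES ('A1', 100.0), ('A2', 200.0);
    INSERT INTO stg_loan_contracts VALUES ('A2', 250.0), ('A3', 300.0);
""")

INSERT_SQL = """
    INSERT INTO fsi_d_loan_contracts (account_number, balance)
    SELECT v_account_number, n_balance FROM stg_loan_contracts
"""

# An insert-only load fails because 'A2' already exists in the target.
try:
    with conn:
        conn.execute(INSERT_SQL)
    first_attempt_failed = False
except sqlite3.IntegrityError:
    first_attempt_failed = True

# (stage column, target column) pairs, as they would be configured in
# FSI_DELETE_TABLES_SETUP.
join_pairs = [("v_account_number", "account_number")]
join_cond = " AND ".join(
    f"s.{stg} = fsi_d_loan_contracts.{tgt}" for stg, tgt in join_pairs
)
DELETE_SQL = f"""
    DELETE FROM fsi_d_loan_contracts
     WHERE EXISTS (SELECT 1 FROM stg_loan_contracts s WHERE {join_cond})
"""

# Delete the target rows that match stage rows, then load.
with conn:
    conn.execute(DELETE_SQL)
    conn.execute(INSERT_SQL)

rows = conn.execute(
    "SELECT account_number, balance FROM fsi_d_loan_contracts ORDER BY account_number"
).fetchall()
print(first_attempt_failed, rows)
```

Row A1 is untouched because it has no match in the stage table, while A2 is replaced by the staged values.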

The Forecast Rate Data Loader procedure loads forecast rates into the OFSAA ALM Forecast rates processing area tables from staging tables. In ALM, Forecast Rate assumptions are defined within the Forecast Rate Assumptions UI. The Forecast Rates Data Loader supports the Direct Input and Structured Change methods only for exchange rates, interest rates and economic indicators. Data for all other forecast rate methods should be input through the User Interface. After executing the forecast rates data loader, users can view the information in the ALM - Forecast Rates Assumptions UI.

Topics:

· Forecast Rate Data Loader Tables

· Populating Forecast Rate Stage Tables

· Forecast Rate Loader Program

· Executing the Forecast Rate Data Load Procedure

Forecast rate assumption data is stored in the following staging area tables:

· STG_FCAST_XRATES: This table holds the forecasted exchange rate data for the current ALM modeling period.

NOTE |

For the Direct Input method, both the N_FROM_BUCKET and N_TO_BUCKET columns contain the same bucket number for the record in the STG_FCAST_XRATES table. |

· STG_FCAST_IRCS: This table holds the forecasted interest rate data for the current ALM modeling period.

· STG_FCAST_EI: This table holds the forecasted economic indicator data for the current ALM modeling period.

Rates present in the above staging tables are copied into the following ALM metadata tables.

· FSI_FCAST_IRC_DIRECT_INPUT, FSI_FCAST_IRC_STRCT_CHG_VAL.

· FSI_FCAST_XRATE_DIRECT_INPUT, FSI_FCAST_XRATE_STRCT_CHG.

· FSI_FCAST_EI_DIRECT_INPUT, FSI_FCAST_EI_STRCT_CHG_VAL

· STG_FCAST_EI

| Column Name | Column Description |
|---|---|
| v_forecast_name | The Name of the Forecast Rate assumption rule as defined. The Forecast name indicates the Short Description for the Forecast Rate Sys ID as stored in the FSI_M_OBJECT_DEFINITION_TL table. In case the forecast sys id is provided, then populate this field with -1. |
| v_scenario_name | This field indicates the Scenario Name for which the Forecast Rate data is applicable. |
| v_economic_indicator_name | This field indicates the Economic Indicator Name for which the Forecast data is applicable. |
| n_from_bucket | This field indicates the Start Bucket Number for the given scenario. |
| fic_mis_date | This field indicates the current period As of Date applicable to the data being loaded. |
| n_fcast_rates_sys_id | The System Identifier of the forecast rate assumption rule to which this data will be loaded. In case forecast name and folder are provided, then populate this field with -1. |
| v_folder_name | Name of the folder that holds the Forecast Rate assumption rule definition. In case the forecast sys id is provided, then populate this field with -1. |
| v_ei_method_cd | The Forecast method of economic indicator values include: Direct Input or Structured change. Use DI - For Direct Input or SC - For Structured Change |
| n_economic_indicator_value | This field indicates the value for the Economic Indicator for the given scenario and time bucket. |
| n_to_bucket | This field indicates the End Bucket Number for the assumption. |

· STG_FCAST_XRATES

| Column Name | Column Description |
|---|---|
| v_forecast_name | The Name of the Forecast Rate assumption rule as defined. The Forecast name indicates the Short Description for the Forecast Rate Sys ID as stored in the FSI_M_OBJECT_DEFINITION_TL table. In case the forecast sys id is provided, then populate this field with -1. |
| v_scenario_name | This field indicates the Scenario Name for which the Forecast Rate data is applicable. |
| v_iso_currency_cd | From ISO Currency Code (like USD, EUR, JPY, GBP) of the forecast rate. |
| n_from_bucket | This field indicates the Start Bucket Number for the given scenario. |
| fic_mis_date | This field indicates the As of Date for which the data being loaded is applicable. |
| n_fcast_rates_sys_id | The System Identifier of the assumption rule to which this data will be loaded. In case forecast name and folder are provided, then populate this field with -1. |
| v_folder_name | Name of the folder that holds the Forecast Rate assumption rule definition. In case the forecast sys id is provided, then populate this field with -1. |
| n_to_bucket | This field indicates the End Bucket Number for the given scenario. |
| v_xrate_method_cd | The Forecast method for exchange rate values include: Direct Input or Structured change. Use DI - For Direct Input or SC - For Structured Change |
| n_exchange_rate | This field indicates the Exchange rate for the Currency and given bucket Range. The value in N_EXCHANGE_RATE should be the rate used to convert 1 unit of the V_TO_CURRENCY_CD currency to the currency stored in V_FROM_CURRENCY_CD. For example, if V_TO_CURRENCY_CD = 'USD', then enter the exchange rate to convert 1 unit USD to another currency. |

· STG_FCAST_IRCS

| Column Name | Column Description |
|---|---|
| v_forecast_name | The Name of the Forecast Rate assumption rule as defined. The Forecast name indicates the Short Description for the Forecast Rate Sys ID as stored in the FSI_M_OBJECT_DEFINITION_TL table. In case the forecast sys id is provided, then populate this field with -1. |
| v_scenario_name | This field indicates the Scenario Name for which the Forecast Rate data is applicable. |
| v_irc_name | The IRC Name indicates the Name of the Interest Rate Code. |
| n_interest_rate_term | This field indicates the Interest Rate Term applicable for the row of data. |
| v_interest_rate_term_mult | This field indicates the Interest Rate Term Multiplier for the row of data being loaded. |
| n_from_bucket | This field indicates the Start Bucket Number for the given scenario. |
| fic_mis_date | This field indicates the As of Date for which the data being loaded is applicable. |
| n_fcast_rates_sys_id | The System Identifier of the interest rate code forecast rate definition. In case the forecast name and folder are provided, then populate this field with -1. |
| v_folder_name | Name of the folder that holds the Forecast Rate assumption rule definition. In case the forecast sys id is provided, then populate this field with -1. |
| n_interest_rate | This field indicates the Interest Rate Change for the specified Term and for the given scenario. |
| n_to_bucket | This field indicates the End Bucket Number for the given scenario. |
| v_irc_method_cd | The Forecast method of interest rate code values include: Direct Input or Structured change. Use DI - For Direct Input or SC - For Structured Change |
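The population rules shared by the three forecast staging tables can be checked row by row before the loader is run. The sketch below is a hypothetical validator, not part of OFSAA: `validate_fcast_row` and its messages are illustrative, rows are plain dictionaries keyed by the column names above, and the equal-bucket rule is applied only to STG_FCAST_XRATES because that is the only table for which it is stated.

```python
def validate_fcast_row(row: dict, method_column: str) -> list:
    """Return a list of rule violations for one forecast staging row."""
    errors = []
    sys_id = row.get("n_fcast_rates_sys_id", -1)
    name = str(row.get("v_forecast_name", "-1"))
    folder = str(row.get("v_folder_name", "-1"))
    if sys_id != -1:
        # Identified by sys id: name and folder must both hold -1.
        if name != "-1" or folder != "-1":
            errors.append("name and folder must be -1 when the sys id is provided")
    elif name == "-1" or folder == "-1":
        errors.append("provide either the sys id or both the name and the folder")
    method = row.get(method_column)
    if method not in ("DI", "SC"):
        errors.append("method code must be DI or SC")
    elif (method == "DI" and method_column == "v_xrate_method_cd"
          and row.get("n_from_bucket") != row.get("n_to_bucket")):
        errors.append("Direct Input rows need n_from_bucket = n_to_bucket")
    return errors


by_id = {"n_fcast_rates_sys_id": 10005, "v_forecast_name": "-1", "v_folder_name": "-1",
         "v_xrate_method_cd": "DI", "n_from_bucket": 1, "n_to_bucket": 1}
by_name = {"n_fcast_rates_sys_id": -1, "v_forecast_name": "LOADER_TEST",
           "v_folder_name": "RTSEG", "v_xrate_method_cd": "DI",
           "n_from_bucket": 1, "n_to_bucket": 3}
print(validate_fcast_row(by_id, "v_xrate_method_cd"))
print(validate_fcast_row(by_name, "v_xrate_method_cd"))
```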

The Forecast Rate Loader program updates the existing forecast rates to new forecast rates in the ALM Forecast Rate tables for Direct Input and Structured Change forecasting methods.

NOTE |

The Forecast Rate Loader can only update existing forecast rate assumption rule definitions. The initial Forecast Rate assumption rule definition and initial methods must be created through the Forecast Rates user interface within Oracle ALM. |

The Forecast Rates Data Loader performs the following functions:

The User can load forecast rate assumptions for either a specific Forecast Rate assumption rule or multiple forecast rates assumption rules.

1. To Load a specific Forecast Rate assumption rule, the user should provide either the Forecast Rate name and a folder name as defined in Oracle ALM or the Forecast Rate System Identifier.

2. When the load parameter is to load a specific Forecast Rate assumption rule for a given As of Date, the loader checks for Forecast Name/Forecast Rate System Identifier's presence in the Object Definition Registration Table. If it's present, then the combination of Forecast Name/Forecast Rate system Identifier and As of Date is checked in each of the Forecast Rate Staging Tables one by one.

3. The data loading is done from each of the staging tables for the Direct Input and Structured change methods where the Forecast Name and As of Date combination is present.

4. When the load parameter is the Load All Option (Y), the Distinct Forecast Name from the 3 staging tables is verified for its presence in Object Definition Registration table and the loading is done for each of the Forecast Names.

5. Messages for each of the steps are written into the FSI_MESSAGE_LOG table.

After the Forecast rate loader processing is completed, the user should query the ALM Forecast Rate tables to look for the new forecast rates. Also, the user can verify the data just loaded using the Forecast Rate Assumption UI.

You can launch the Forecast Data Loader from the following:

· Forecast Rates Summary page

· PL/SQL block

· Operations Batch

To launch from the Forecast Rates Summary page:

1. Click the Data Loader icon on the Forecast Rates Summary page. A warning message will appear: Upload all available Forecast Rates?

2. Click Yes. The process will load all valid data included in the staging table.

To run the Forecast Rate Loader from SQL*Plus, log in to SQL*Plus as the Schema Owner. The function takes the following parameters:

1. Batch Execution Identifier (batch_run_id)

2. As of Date (mis_date)

3. Forecast Rate System Identifier (pObject_Definition_ID)

4. Option for loading all or any specific Forecast Rate assumption rule (pLoad_all). If the Load All option is 'N', then either the Forecast Rate assumption rule Name with the Folder Name, or the Forecast Rate Sys ID, should be provided; otherwise the loader raises an error.

5. Forecast Rate assumption rule Name (pForecast_name)

6. Folder name (pFolder_Name)

7. User ID (p_user_id)

8. Application ID (p_appid)

The syntax for calling the procedure is:

fn_stg_forecast_rate_loader(batch_run_id varchar2,

mis_date varchar2,

pObject_Definition_ID number,

pLoad_all char default 'N',

pForecast_name varchar2,

pFolder_Name varchar2,

p_user_id varchar2,

p_appid varchar2

)

where

§ BATCH_RUN_ID is any string to identify the executed batch.

§ mis_date is the As of Date in the format YYYYMMDD.

§ pObject_Definition_ID is the Forecast Rate System Identifier in ALM.

§ pLoad_all indicates the option for loading all forecast rates.

§ pForecast_Name is the Forecast Rate assumption rule name. This can be null (that is, '') when pLoad_all is 'Y'; otherwise, provide a valid Forecast Rate assumption rule name.

§ pFolder_Name indicates the name of the Folder where the forecast rate assumption rule was defined.

§ p_user_id indicates the user mapped with the application in rev_app_user_preferences. This is used to fetch the As of Date from rev_app_user_preferences. This is a mandatory parameter.

§ p_appid is the application name. This is a mandatory parameter.
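The parameter rules above can be captured in a small pre-check run before calling the function. This is a hypothetical helper for illustration, not part of OFSAA: `check_loader_args` and its messages are assumptions, and Python's None stands in for a PL/SQL null.

```python
from datetime import datetime


def check_loader_args(mis_date, object_definition_id, load_all,
                      forecast_name, folder_name, user_id, app_id):
    """Raise ValueError if the argument combination contradicts the rules above."""
    if len(mis_date) != 8:
        raise ValueError("mis_date must be in YYYYMMDD format")
    datetime.strptime(mis_date, "%Y%m%d")  # raises ValueError on an invalid date
    if load_all not in ("Y", "N"):
        raise ValueError("pLoad_all must be 'Y' or 'N'")
    if not user_id or not app_id:
        raise ValueError("p_user_id and p_appid are mandatory")
    if load_all == "N":
        has_id = object_definition_id is not None
        has_name = bool(forecast_name) and bool(folder_name)
        if not (has_id or has_name):
            raise ValueError(
                "with pLoad_all = 'N', provide the sys id or the rule name with its folder"
            )
    return True


# Load by Forecast Rate System Identifier.
print(check_loader_args("20100419", 10005, "N", None, None, "ALMUSER1", "ALM"))
```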

For example, to load all forecast rate assumption rules defined within a folder, say RTSEG:

Declare

num number;

Begin

Num:= fn_stg_forecast_rate_loader('INFODOM_FORECAST_RATE_LOADER',

'20100419',

null,

'Y',

Null,

'RTSEG',

'ALMUSER1',

'ALM');

End;

The loading is done for all forecast rates under folder 'RTSEG' for as of Date 20100419.

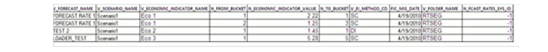

Sample Data for STG_FCAST_IRCS to Load all forecast rates defined within a folder

Sample Data for STG_FCAST_XRATES to Load all forecast rates defined within a folder

Sample Data for STG_FCAST_EI to Load all forecast rates defined within a folder

To load a specific forecast rate assumption rule, provide the unique Forecast Rate System Identifier:

Declare

num number;

Begin

Num:= fn_stg_forecast_rate_loader('INFODOM_FORECAST_RATE_LOADER',

'20100419',

10005,

'N',

Null,

Null,

'ALMUSER1',

'ALM');

End;

Sample Data for STG_FCAST_IRCS to load data for specific Forecast Rate providing the Forecast Rate System Identifier

Sample Data for STG_FCAST_XRATES to load data for specific Forecast Rate providing the Forecast Rate System Identifier

Sample Data for STG_FCAST_EI to load data for specific Forecast Rate providing the Forecast Rate System Identifier

NOTE |

To Load data for specific Forecast Rate providing the Forecast Rate System Identifier, the value of Forecast rate Name and Folder Name in the staging tables should be -1. |

To load a specific forecast rate assumption rule within a Folder, provide the name of the Forecast Rate as defined in ALM:

Declare

num number;

Begin

Num:= fn_stg_forecast_rate_loader('INFODOM_FORECAST_RATE_LOADER',

'20100419',

Null,

'N',

'LOADER_TEST',

'RTSEG',

'ALMUSER1',

'ALM');

End;

Sample Data for STG_FCAST_XRATES to Load a specific forecast rate within the Folder providing the name of Forecast Rate as defined in ALM

Sample Data for STG_FCAST_EI to Load a specific forecast rate within the Folder providing the name of Forecast Rate as defined in ALM

If the NUM value is 1, the load completed successfully. Check the FSI_MESSAGE_LOG table for more details.

To execute Forecast Rate Loader from OFSAAI Batch Maintenance, a seeded Batch is provided.

<INFODOM>_FORECAST_RATE_LOADER is the Batch ID and Forecast Rate Loader is the description of the batch.

1. The batch has a single task. Edit the task.

2. If the user intends to load data for all Forecast Rates under a Folder, then provide the batch parameters as shown.

§ Datastore Type: Select the appropriate datastore from list

§ Datastore Name: Select the appropriate name from the list

§ IP address: Select the IP address from the list

§ Rule Name: Forecast_Rate_loader

Sample Data for STG_FCAST_IRCS to Load all forecast rates defined within a folder

Sample Data for STG_FCAST_XRATES to Load all forecast rates defined within a folder

Sample Data for STG_FCAST_EI to Load all forecast rates defined within a folder

3. To load data for a specific Forecast Rate assumption rule by providing the Forecast Rate System Identifier, define the batch parameters as shown.

§ Datastore Type: Select the appropriate datastore from list

§ Datastore Name: Select the appropriate name from the list

§ IP address: Select the IP address from the list

§ Rule Name: Forecast_Rate_loader

Sample Data for STG_FCAST_IRCS to load data for a specific Forecast Rate assumption rule, with the Forecast Rate System Identifier already provided

Sample Data for STG_FCAST_XRATES to load data for a specific Forecast Rate assumption rule with the Forecast Rate System Identifier already provided

Sample Data for STG_FCAST_EI to load data for a specific Forecast Rate assumption rule with the Forecast Rate System Identifier already provided

NOTE |

To load data for specific Forecast Rate assumption rules by providing the Forecast Rate System Identifier, the value of Forecast Rate Name and Folder Name in the staging tables should be -1. |

4. To load data for specific Forecast Rate assumption rules by providing the Forecast Rate Name as defined in ALM, define the batch parameters as shown.

§ Datastore Type: Select an appropriate datastore from list

§ Datastore Name: Select an appropriate name from the list

§ IP address: Select the IP address from the list

§ Rule Name: Forecast_Rate_loader

Sample Data for STG_FCAST_IRCS to load a specific forecast rate assumption rule within the Folder, providing the name of the Forecast Rate rule as defined in ALM

Sample Data for STG_FCAST_XRATES to load a specific forecast rate assumption rule within the Folder, providing the name of the Forecast Rate rule as defined in ALM

Sample Data for STG_FCAST_EI to load a specific forecast rate assumption rule within the Folder, providing the name of the Forecast Rate rule as defined in ALM

5. Save the Batch.

6. Execute the Batch for the required As of Date.

The Forecast Rate Data Loader can have the following exceptions:

· Exception 1: Error. While fetching the Object Definition ID from Object Registration Table

This exception occurs if the forecast rate assumption rule name is not present in the short_desc column of the FSI_M_OBJECT_DEFINITION_TL table.

· Exception 2: Error. More than one Forecast Sys ID is present.

This exception occurs when there is more than one Forecast Sys ID present for the given forecast rate assumption rule name.

· Exception 3: Error. Forecast Rate assumption rule Name and As of Date combination do not exist in the Staging Table.

This exception occurs when the Forecast Rate assumption rule Name and as of date combination do not exist in the Staging Table.

Prepayment Model assumptions are defined within the Prepayment Model rule User Interfaces in OFSAA ALM, HM and FTP applications. You can input prepayment rates directly through the UI, or import the rates from Excel into the UI. You can also use the Prepayment Rate Data Loader procedure to populate Prepayment Model rates into the OFSAA metadata table from the corresponding staging table. This data loader program can be used to update the Prepayment Model rates on a periodic basis. After loading the prepayment rates, you can view the latest data in the Prepayment Model assumptions UI.

Topics:

· Prepayment Rate Loader Tables

· Executing the Prepayment Model Data Loader

The Loader uses the following staging and target tables:

· STG_PPMT_MODEL_HYPERCUBE: This staging table contains prepayment rates for the selected prepayment dimensions.

· FSI_PPMT_MODEL_HYPERCUBE: The loader copies rates into this target table for the associated Prepayment Dimension combinations present in the FSI_M_PPMT_MODEL table.

The Prepayment Rate Data Loader program populates the target OFSAA Prepayment Model table with the values from the staging table. The procedure will load prepayment rate data for a specified Prepayment Model rule or all Prepayment models that are present in the staging table. The program assumes that the Prepayment Model assumptions have already been defined using OFSAA Prepayment Model rule UIs before loading Prepayment Model rates.

The program performs the following functions:

1. The Data Loader accepts the AS_OF_DATE as a parameter, that is, the date for which all prepayment rates are loaded from the Staging table into the OFSAA metadata table.

2. The program performs certain checks to determine if:

§ The Prepayment Model dimensions present in staging are the same as those present in the OFSAA Prepayment Model metadata tables.

§ The bucket members of each of the dimensions present in staging are the same as those present in the metadata tables.

§ The number of records present in the STG_PPMT_MODEL_HYPERCUBE table for a Prepayment Model is less than or equal to the maximum number of records allowed, which is determined by multiplying together the number of buckets of each dimension of the Prepayment Model.

For example, if the FSI_M_PPMT_MODEL table holds the following data for Prepayment Model System ID 20100405:

| PPMT_MDL_SYS_ID | DIMENSION_ID | NUMBER_OF_BUCKETS |
|---|---|---|
| 20100405 | 8 | 2 |
| 20100405 | 4 | 3 |

Then the maximum number of records = number of buckets of dimension 8 * number of buckets of dimension 4

That is, maximum number of records = 2 * 3

Therefore, maximum number of records = 6 records

The Prepayment Rate Data Loader checks that the number of records present in the STG_PPMT_MODEL_HYPERCUBE table for Prepayment Model 20100405 is less than or equal to 6.

3. If the above quality checks are satisfied, then the rates present in the Staging table are updated to the OFSAA prepayment model metadata table.

4. Any error messages are logged in the FSI_MESSAGE_LOG table and can be viewed in OFSAAI Log Viewer UI.
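The record-count check in step 2 is a simple product of bucket counts. The sketch below reproduces the arithmetic of the worked example; the function names are hypothetical and the bucket counts are passed in as a dictionary keyed by dimension ID rather than read from FSI_M_PPMT_MODEL.

```python
from math import prod


def max_hypercube_records(buckets_per_dimension: dict) -> int:
    """Multiply the number of buckets of every dimension of the model."""
    return prod(buckets_per_dimension.values())


def stage_count_ok(stage_record_count: int, buckets_per_dimension: dict) -> bool:
    """True when the staged record count does not exceed the allowed maximum."""
    return stage_record_count <= max_hypercube_records(buckets_per_dimension)


# Prepayment Model 20100405: dimension 8 has 2 buckets, dimension 4 has 3.
model_buckets = {8: 2, 4: 3}
print(max_hypercube_records(model_buckets))
print(stage_count_ok(6, model_buckets), stage_count_ok(7, model_buckets))
```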

After the Prepayment Rate loader is completed, you should query the FSI_PPMT_MODEL_HYPERCUBE table to look for the new rates. Also, you can verify the data using the Prepayment Model Assumption UI.

Populating the data into STG_PPMT_MODEL_HYPERCUBE

· V_PPMT_MDL: The Name of the Prepayment Model as stored in FSI_M_OBJECT_DEFINITION_TL table. If Prepayment Model name is given, also provide the Folder name. If the Prepayment Model System ID is provided, then populate this field with -1.

· N_ORIG_TERM: Original term of the contract

· N_REPRICING_FREQ: The number of months between instrument repricing

· N_REM_TENOR: Remaining term of the contract (in Months)

· N_EXPIRED_TERM: Expired term of the contract (in Months)

· N_TERM_TO_REPRICE: Repricing term of the contract (in Months)

· N_COUPON_RATE: The current gross rate on the instrument

· N_MARKET_RATE: Forecast rate representing alternate funding

· N_RATE_DIFFERENCE: Spread between the current gross rate and the market rate

· N_RATE_RATIO: Ratio of the current gross rate to the market rate

· N_PPMT_RATE: User defined prepayment rate for the associated dimension value combination

· FIC_MIS_DATE: The As of Date for which the data being loaded is applicable

· V_FOLDER_NAME: Name of the Folder which holds the Prepayment Model. If the Prepayment Model System ID is provided, then populate this field with -1. If Folder name is provided, then provide Prepayment Model name as well.

· N_PPMT_MDL_SYS_ID: The System Identifier (Object Definition ID) of the Prepayment Model to which this data will be loaded. If Prepayment Model name and Folder are provided, then populate this field with -1.
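The three identifying columns follow an either/or convention: a row identifies its Prepayment Model either by System ID (with name and folder set to -1) or by name and folder (with System ID set to -1). A pre-load sanity check for this convention might look like the sketch below. The function name is hypothetical, the inputs are assumed to be non-null, and treating a mixed row as an error is an assumption of this example rather than documented loader behavior.

```python
def identification_mode(v_ppmt_mdl, v_folder_name, n_ppmt_mdl_sys_id):
    """Classify a staging row as identified 'by_id' or 'by_name'.

    Raises ValueError when the row mixes the two conventions.
    """
    name_unset = str(v_ppmt_mdl) == "-1"
    folder_unset = str(v_folder_name) == "-1"
    id_unset = int(n_ppmt_mdl_sys_id) == -1

    if not id_unset and name_unset and folder_unset:
        return "by_id"
    if id_unset and not name_unset and not folder_unset:
        return "by_name"
    raise ValueError(
        "provide either N_PPMT_MDL_SYS_ID, or both V_PPMT_MDL and "
        "V_FOLDER_NAME, and set the unused field(s) to -1"
    )


# Identified by System ID: name and folder are -1.
print(identification_mode("-1", "-1", 20100405))
# Identified by name and folder (hypothetical values): System ID is -1.
print(identification_mode("Sample Model", "SAMPLE_FOLDER", -1))
```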

Column mapping from source to target

Source STG_PPMT_MODEL_HYPERCUBE to Target FSI_PPMT_MODEL_HYPERCUBE mapping:

N_ORIG_TERM -> ORIGINAL_TERM

N_REPRICING_FREQ -> REPRICING_FREQ

N_REM_TENOR -> REMAINING_TERM

N_EXPIRED_TERM -> EXPIRED_TERM

N_TERM_TO_REPRICE -> TERM_TO_REPRICE

N_COUPON_RATE -> COUPON_RATE

N_MARKET_RATE -> MARKET_RATE

N_RATE_DIFFERENCE -> RATE_DIFFERENCE

N_RATE_RATIO -> RATE_RATIO

N_PPMT_RATE -> PPMT_RATE

N_PPMT_MDL_SYS_ID -> PPMT_MDL_SYS_ID when N_PPMT_MDL_SYS_ID <> -1; otherwise, the loader performs a lookup in FSI_M_OBJECT_DEFINITION_TL based on the Name and Folder provided in the staging table.
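The mapping above can be expressed as a simple lookup table. The following Python sketch is illustrative only: `to_target_row` and the `lookup_sys_id` callable are hypothetical names, and `lookup_sys_id` merely stands in for the lookup in FSI_M_OBJECT_DEFINITION_TL, whose actual query is not shown in this guide.

```python
# Source column in STG_PPMT_MODEL_HYPERCUBE -> target column in
# FSI_PPMT_MODEL_HYPERCUBE, as listed in the mapping above.
COLUMN_MAP = {
    "N_ORIG_TERM": "ORIGINAL_TERM",
    "N_REPRICING_FREQ": "REPRICING_FREQ",
    "N_REM_TENOR": "REMAINING_TERM",
    "N_EXPIRED_TERM": "EXPIRED_TERM",
    "N_TERM_TO_REPRICE": "TERM_TO_REPRICE",
    "N_COUPON_RATE": "COUPON_RATE",
    "N_MARKET_RATE": "MARKET_RATE",
    "N_RATE_DIFFERENCE": "RATE_DIFFERENCE",
    "N_RATE_RATIO": "RATE_RATIO",
    "N_PPMT_RATE": "PPMT_RATE",
}


def to_target_row(stg_row, lookup_sys_id):
    """Rename staging columns and resolve the model System ID.

    lookup_sys_id(name, folder) is a hypothetical stand-in for the
    lookup by Name and Folder; it is called only when the staging
    row carries -1 as its System ID.
    """
    target = {tgt: stg_row.get(src) for src, tgt in COLUMN_MAP.items()}
    sys_id = stg_row["N_PPMT_MDL_SYS_ID"]
    if sys_id == -1:
        sys_id = lookup_sys_id(stg_row["V_PPMT_MDL"], stg_row["V_FOLDER_NAME"])
    target["PPMT_MDL_SYS_ID"] = sys_id
    return target


# Illustrative row; the model and folder names are made up.
row = {
    "V_PPMT_MDL": "Sample Model",
    "V_FOLDER_NAME": "SAMPLE_FOLDER",
    "N_PPMT_MDL_SYS_ID": -1,
    "N_ORIG_TERM": 12,
    "N_COUPON_RATE": 5.0,
    "N_PPMT_RATE": 1.5,
}
target = to_target_row(row, lambda name, folder: 20100405)
print(target["PPMT_MDL_SYS_ID"], target["ORIGINAL_TERM"])
```

Staging columns that are absent from the input row come through as `None` in the target row.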

Example

Based on the FSI_M_PPMT_MODEL table, for data in the staging table with Prepayment Model System ID 20100405:

PPMT_MDL_SYS_ID DIMENSION_ID NUMBER_OF_BUCKETS

20100405 8 2

20100405 4 3

The maximum number of records = (number of buckets of dimension 8) * (number of buckets of dimension 4).

That is, maximum number of records = 2 * 3

Therefore, maximum number of records = 6 records.

The Prepayment Rate Data Loader checks whether the number of records present in STG_PPMT_MODEL_HYPERCUBE table for Prepayment Model 20100405 is less than or equal to 6.

If the above quality checks are satisfied, then the rates present in the Staging table are updated to the OFSAA Prepayment Model metadata table.

Any error messages are logged in the FSI_MESSAGE_LOG table.

You can launch the Data Loader from the following:

· Prepayment Models summary page

· PL/SQL block

· Operations Batch

· Prepayment Models summary page: To launch from the Prepayment Models summary page:

a. Click the Data Loader icon on the Prepayment Models summary grid toolbar. A warning message will appear: Upload all available Prepayment Rates?

b. Click Yes. The process will load all valid data included in the staging table.

· PL/SQL block: To execute the Loader within a PL/SQL block:

To run the function from SQL*Plus, log in to SQL*Plus as the Schema Owner. The loader requires two parameters:

§ Batch Execution Name

§ As Of Date

Syntax:

fn_PPMT_RATE_LOADER(batch_run_id IN VARCHAR2, as_of_date IN VARCHAR2)

Where:

§ batch_run_id is any string to identify the executed batch.

§ as_of_date is the execution date in the format YYYYMMDD.

For Example:

DECLARE
  num NUMBER;
BEGIN
  num := fn_PPMT_RATE_LOADER('INFODOM_20100405', '20100405');
END;
/

The loader is executed for the given as of date. If the return value (NUM) is 1, this indicates the load completed successfully. Check the FSI_MESSAGE_LOG for more details.

· Operations Batch: To run from Operations Batch framework:

You can create a new Batch with the Component = 'TRANSFORM DATA' and specify the following parameters for the task:

§ Datastore Type: Select appropriate datastore from list

§ Datastore Name: Select appropriate name from the list

§ IP address: Select the IP address from the list

§ Rule Name: ppmt_rate_loader

§ Parameter List: None

Viewing the results:

Any error messages are logged in the FSI_MESSAGE_LOG table. If you launch the Loader from the Prepayment Models summary page or Operations Batch, you can view processing messages in OFSAAI: navigate to Operations and select View Log, where the Component Type = Data Transformation and the Batch Run ID = the ID for your run.

You can also spot check results of the load as follows:

· Query the FSI_PPMT_MODEL_HYPERCUBE table to confirm existence of the new rates.

· Use the Prepayment Model rule UI to select your rule and View your rates.

The Prepayment Model Rate Loader can have the following exceptions:

· Exception 1: Error while fetching the Object Definition ID from Object Definition Table.

This exception occurs if the prepayment model name is not present in the FSI_M_OBJECT_DEFINITION_TL table.

· Exception 2: Error. More than one prepayment model sys ID is present for the given definition.

This exception occurs when there is more than one Prepayment Model System ID present for the Prepayment Model name in staging.

· Exception 3: Error. Data is present in additional dimension ID column than those defined in FSI_M_PPMT_MODEL.

This exception occurs if rates are specified in staging for the dimensions that are not part of the Prepayment Model definition.

· Exception 4: The value in the Dimension ID column is not matching with the value present in the corresponding column in metadata table.

This exception occurs if rates are specified in staging for the dimension members that are not part of the Prepayment Model definition.

· Exception 5: The number of records for the staging table for a given Prepayment Model Name is more than those calculated by multiplying the number of buckets in FSI_M_PPMT_MODEL table for the given model name.

This exception occurs if there are excess records in staging compared to OFSAA metadata tables for the given Prepayment Model.
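The conditions behind Exceptions 3, 4, and 5 can be illustrated with the following Python sketch. It is not the loader's code. Several points are assumptions made for the example: the model definition is represented as a dictionary keyed by staging column name (the guide does not list how dimension IDs map to columns), an unused dimension column is assumed to be empty (`None`) in staging, and the function name and error tuples are hypothetical.

```python
from math import prod

# Dimension columns of STG_PPMT_MODEL_HYPERCUBE described earlier.
DIMENSION_COLUMNS = [
    "N_ORIG_TERM", "N_REPRICING_FREQ", "N_REM_TENOR", "N_EXPIRED_TERM",
    "N_TERM_TO_REPRICE", "N_COUPON_RATE", "N_MARKET_RATE",
    "N_RATE_DIFFERENCE", "N_RATE_RATIO",
]


def check_rows(rows, model_buckets):
    """Return (row_index, exception_number, column) tuples.

    model_buckets maps each dimension column used by the model to the
    set of bucket members defined for it (assumed representation).
    """
    errors = []
    for i, row in enumerate(rows):
        for col in DIMENSION_COLUMNS:
            value = row.get(col)
            if value is None:
                continue  # dimension not populated in this row
            if col not in model_buckets:
                errors.append((i, 3, col))  # extra dimension populated
            elif value not in model_buckets[col]:
                errors.append((i, 4, col))  # member not in the model
    # Exception 5: more rows than the product of the bucket counts.
    if len(rows) > prod(len(b) for b in model_buckets.values()):
        errors.append((None, 5, None))
    return errors


model_buckets = {"N_ORIG_TERM": {12, 24}, "N_COUPON_RATE": {5.0, 6.0, 7.0}}
rows = [
    {"N_ORIG_TERM": 12, "N_COUPON_RATE": 5.0, "N_PPMT_RATE": 1.5},
    {"N_ORIG_TERM": 36, "N_COUPON_RATE": 5.0, "N_PPMT_RATE": 1.5},
    {"N_ORIG_TERM": 12, "N_COUPON_RATE": 6.0, "N_MARKET_RATE": 4.0},
]
print(check_rows(rows, model_buckets))
```

In this sample, the second row uses a term of 36 that is not a bucket member of the model (Exception 4), and the third row populates N_MARKET_RATE, which is not a dimension of the model (Exception 3).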

Data in staging instrument tables is moved into the respective OFSAA processing instrument tables using the OFSAAI T2T component. After loading the data, users can view the loaded data by querying the processing instrument tables.

Topics:

· Mapping To OFSAA Processing Tables

· Populating Accounts Dimension

· Executing T2T Data Movement Tasks

Following are examples of some of the various application staging instrument tables:

· STG_LOAN_CONTRACTS: holds contract information related to various loan products including mortgages.

· STG_TD_CONTRACTS: holds contract information related to term deposit products.

· STG_CASA: holds information related to Checking and Savings Accounts.

· STG_OD_ACCOUNTS: holds information related to over-draft accounts.

· STG_CARDS: holds information related to credit card accounts.

· STG_LEASES: holds contract information related to leasing products.

· STG_ANNUITY_CONTRACTS: holds contract information related to annuity contracts.

· STG_INVESTMENTS: holds information related to investment products like bond, equities, and so on.

· STG_MM_CONTRACTS: holds contract information related to short term investments in money market securities.

· STG_BORROWINGS: holds contract information related to various inter-bank borrowings.

· STG_FX_CONTRACTS: holds contract information related to FX products like FX Spot, FX Forward, and so on. Leg level details, if any, are stored in various leg-specific columns within the table.

· STG_SWAPS_CONTRACTS: holds contract information related to various types of swaps. Leg level details, if any, are stored in various leg-specific columns within the table.

· STG_OPTION_CONTRACTS: holds contract information related to various types of options. Leg level details, if any, are stored in various leg-specific columns within the table.

· STG_FUTURES: holds contract information related to interest rate forwards and all types of futures. Leg level details, if any, are stored in various leg-specific columns within the table.

· STG_LOAN_COMMITMENTS: contains all existing columns from STG_LOAN_CONTRACTS

NOTE |

You can modify any existing instrument table to include the columns by adding the COMMITMENT_CONTRACTS super type. If you want to execute Forward Rate transfer pricing against tables in addition to FSI_D_LOAN_COMMITMENTS, then add the required columns by adding the COMMITMENT_CONTRACTS super type via ERWIN. |

Data can be loaded into staging tables through the F2T component of OFSAAI. After data is loaded, check for data quality within the staging tables before moving the data into OFSAA processing tables. Data quality checks can include:

· Matching record counts between the external system and the staging instrument tables.

· Valid list of values in code columns of staging.

· Valid list of values in dimension columns like product, organization unit, general ledger, and so on. These members should be present in the respective dimension tables.

· Valid values for other significant columns of staging tables.
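Two of these checks, record-count reconciliation and validation of dimension values, can be sketched in Python as shown below. The function names, the column name `V_PROD_CODE`, and the sample values are all hypothetical and serve only to illustrate the idea. In practice, such checks would typically run as queries against the staging and dimension tables.

```python
def counts_match(source_count, staging_count):
    """True when the external system and staging hold the same row count."""
    return source_count == staging_count


def invalid_members(staging_rows, column, valid_members):
    """Return the distinct values of `column` that are missing from
    the dimension's list of valid members, in sorted order."""
    found = {row[column] for row in staging_rows}
    return sorted(found - set(valid_members))


# Illustrative staging rows and product dimension members.
staging_rows = [
    {"V_PROD_CODE": "LOAN01"},
    {"V_PROD_CODE": "LOAN02"},
    {"V_PROD_CODE": "XXXX"},
]
valid_products = ["LOAN01", "LOAN02", "MORT01"]

print(counts_match(3, len(staging_rows)))
print(invalid_members(staging_rows, "V_PROD_CODE", valid_products))
```

Any value returned by `invalid_members` points to a dimension member that should be loaded into the respective dimension table, or to a staging record that needs correction, before the T2T is run.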

Following are examples of some of the pre-defined application T2T mappings between the above staging tables and processing tables:

· T2T_LOAN_CONTRACTS: for loading data from STG_LOAN_CONTRACTS to FSI_D_LOAN_CONTRACTS.

· T2T_MORTGAGES: for loading data from STG_LOAN_CONTRACTS to FSI_D_MORTGAGES.

· T2T_CASA: for loading data from STG_CASA to FSI_D_CASA.

· T2T_CARDS: for loading data from STG_CARDS to FSI_D_CREDIT_CARDS.

· T2T_TD_CONTRACTS: for loading data from STG_TD_CONTRACTS to FSI_D_TERM_DEPOSITS.

· T2T_ANNUITY_CONTRACTS: for loading data from STG_ANNUITY_CONTRACTS to FSI_D_ANNUITY_CONTRACTS.

· T2T_BORROWINGS: for loading data from STG_BORROWINGS to FSI_D_BORROWINGS.

· T2T_FORWARD_CONTRACTS: for loading data from STG_FUTURES to FSI_D_FORWARD_RATE_AGMTS.

· T2T_FUTURE_CONTRACTS: for loading data from STG_FUTURES to FSI_D_FUTURES.

· T2T_FX_CONTRACTS: for loading data from STG_FX_CONTRACTS to FSI_D_FX_CONTRACTS.

· T2T_INVESTMENTS: for loading data from STG_INVESTMENTS to FSI_D_INVESTMENTS.

· T2T_LEASES_CONTRACTS: for loading data from STG_LEASES_CONTRACTS to FSI_D_LEASES.

· T2T_MM_CONTRACTS: for loading data from STG_MM_CONTRACTS to FSI_D_MM_CONTRACTS.

· T2T_OPTION_CONTRACTS: for loading data from STG_OPTION_CONTRACTS to FSI_D_OPTION_CONTRACTS.