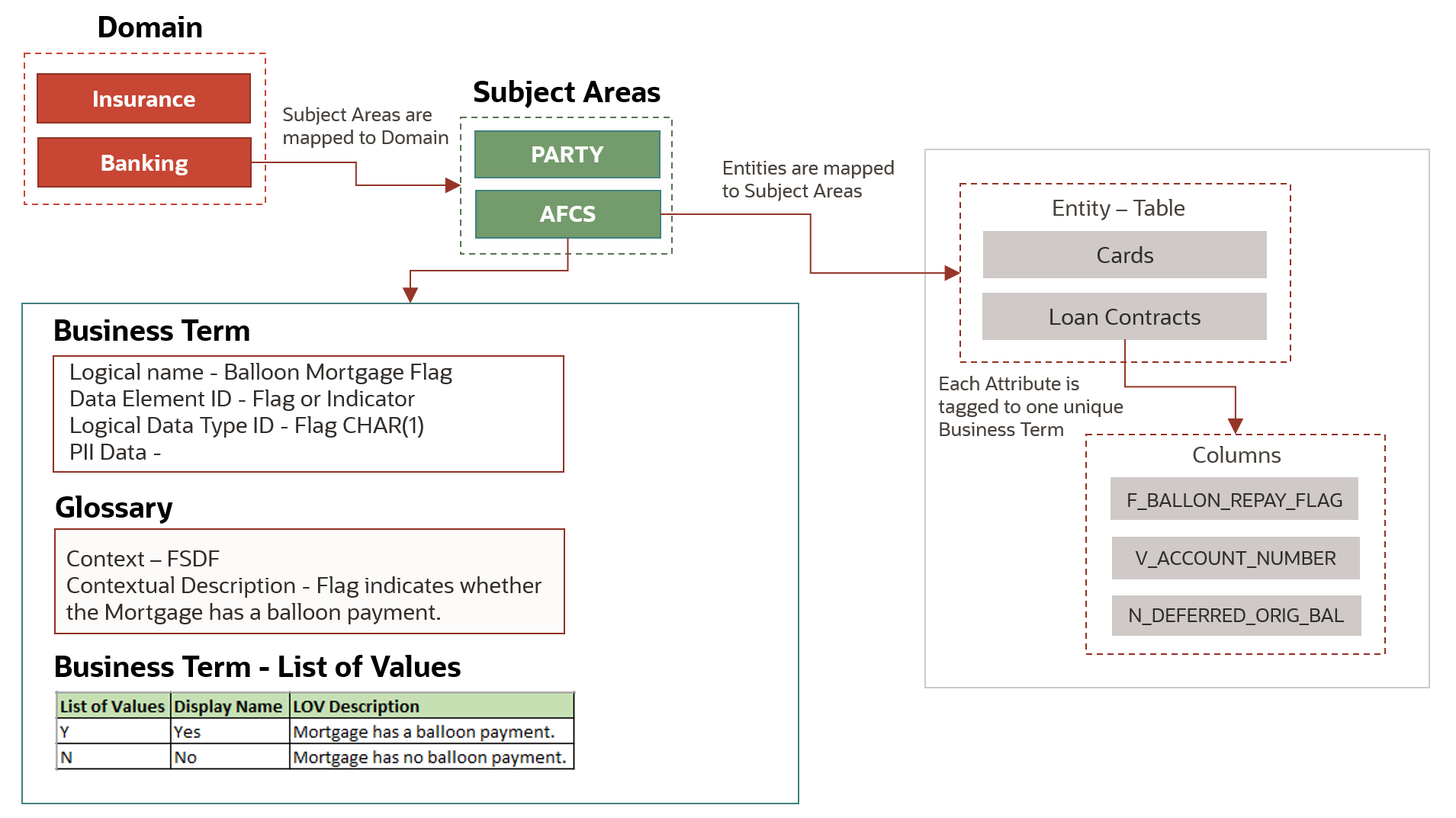

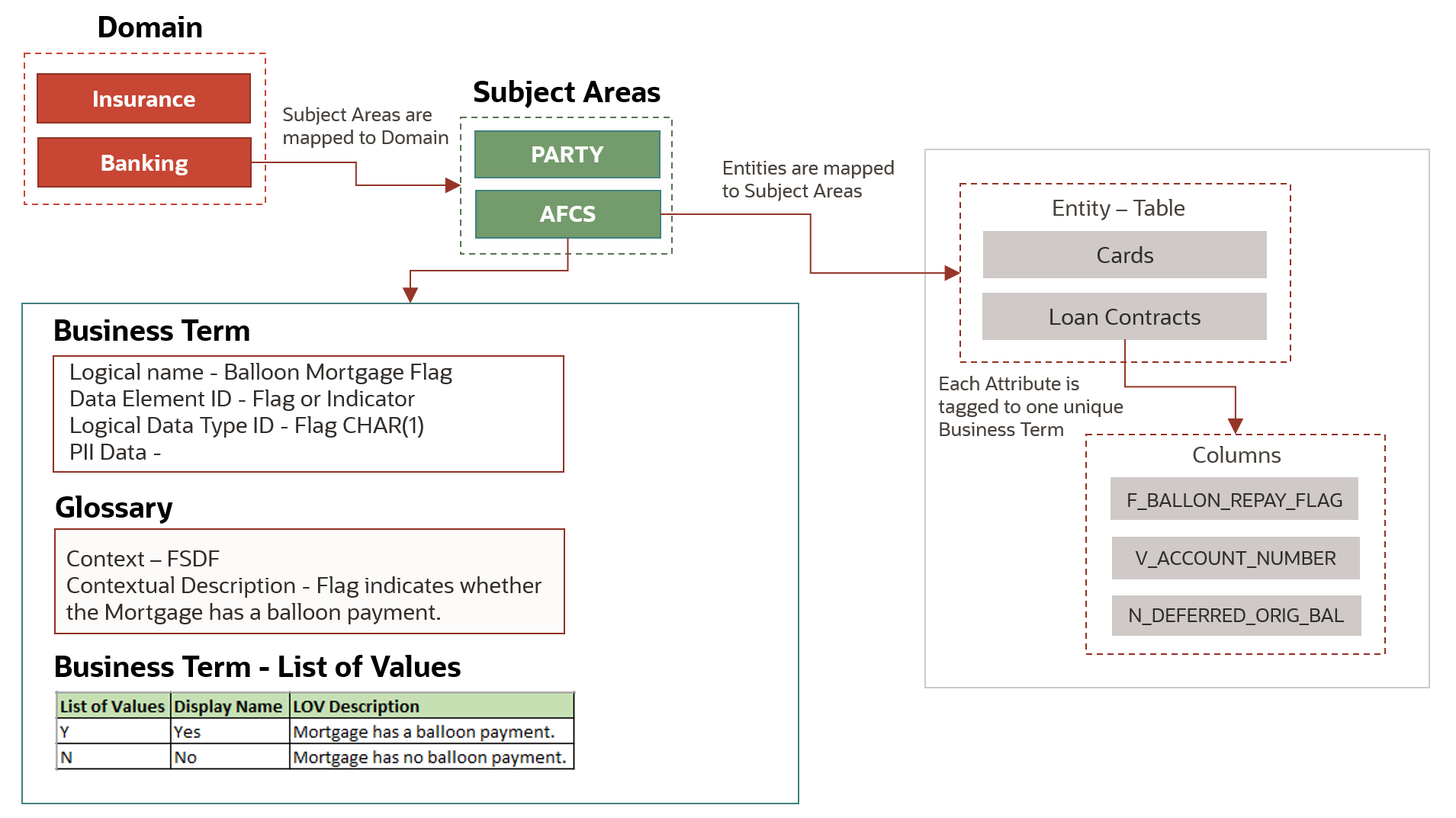

A well defined structure is followed to seed and organize the Data Catalog Contents for efficient discovery and management. The structure starts with a Domain, which for example, in the OFSAA context can be either Banking or Insurance, and for this Domain, the Contents are created. Each Domain can have multiple Subject Areas, and a collection of Entities and Business Terms associated with those Subject Areas.

Figure: Data Catalog Components

The Data Catalog consists of the following Components:

· Domain

· Subject Area

· Entity

· Business Term

· Glossary

· Data Quality Check Rules

A Domain represents the category of the Business in the Finance Industry.

Data Catalog consists of the Data Catalog Framework and Seeded with Contents related to a specific Line of Business or Industry, which is referred to as a Domain.

In OFSAA, you can select the required Domain. For example, Banking, Insurance, and so on.

By selecting the Domain Name, you can restrict viewing the Catalog Components only to the intended Domains and deploy the Data Model also selectively.

A Subject Area represents a high-level data organization, which belongs to a group of related areas within a specific functional area of a Finance Organization.

Entities are grouped together into distinct Subject Areas based on the business functions or processing supported for a better identification and usage.

The relationship type between Domain and Subject Area is Many-to-Many.

The following are a few examples of Subject Areas in the Data Catalog:

· Accounting Foundation Cloud Service

· Party

· Product Processor

· Transaction

· Product

Data Catalog has a provision to define the Parent-Child Relationship between the Subject Areas. Therefore, you can define the Parent-Child Relationship as follows if there is a business need:

· Domain to Subject Area Relationship

Each Business Term is related to an Entity of the Data Catalog. The Entities consist of Attributes. The Data Quality Rules are built for the Entities, so that the System Input Data can be verified and validated.

The Entities support data movements to ensure that when the Input Data is staged, standardized, and processed, there is a mechanism to move that data into the Cells for analytical consumption.

Entity consists of a set of Attributes. Each Attribute is mapped to a unique Business Term. Each Attribute derives its characteristics such as Data Type, Constraints, and so on from the Business Definitions.

Data Catalog defines granularity of each of the Entity it holds.

V_GRAIN_ID |

V_GRAIN_NAME |

|---|---|

DATE |

Date |

ACCT |

Customer Account |

ACCT_TXN |

Customer Account Transactions |

ACCT_ENT |

Accounting Entries |

GL_DATA |

General Ledger Data |

EXCHANGE_RATE |

Exchange Rates |

PARTY_CONSENT |

Party Consent |

For example, as the Loan Contracts Entity contains information at the Account Level, the grains of Loan Entity is defined as ACCT.

A Business Term is a Functional Keyword that represents a unique functional aspect of the Financial Artefacts (Entities and Attributes). There is a business meaning to each Financial Artefact, and therefore, a business name is created in the form of a Business Term. Then the Business Term is used to find and fit into a purpose of different contexts. Therefore, Business Terms are expressions of the Participants/Actors, their Activities, and requirements conveyed in common business.

The approach to arrive at the Logical View of the Physical Model has inverted in the Data Catalog. Contrary to the previous practices, first the Functional Keyword or Artefacts are identified, collated, and then expressed in common business language, and then the corresponding Physical Model is developed.

Multiple Business Terms are a part of each Subject Area. A Business Term is a singular term irrespective of multiple places it appears in and the Business Term will have a recognizable naming pattern.

Metadata is attached to the Business Terms.

The Content Structure allows you to define relationship between related Business Terms for better discovery and usage in the aspect of sourcing.

Business Term |

Related Business Term |

Relationship Type |

|---|---|---|

Current Write Off Amount |

Write Off General Ledger Code |

MEASURE_GL |

To create or update a Business term, see the Manage Business Terms Section.

In the process of creating a Business Term, certain characteristics are also defined that serves as input when arriving to a Physical Model Structure. These characteristics include origin, classification, logical data type, PII term indicator, list of accepted values, and data quality check applicable for a Business Term.

To create or update a Business term, see the Manage Business Terms Section.

The Business Term Properties are as follows:

· Term ID

· Logical name

· Origin

· Data Element ID

· Language

· Logical Data Type ID

· PII Flag

· LOV Code

· Display Name

· LOV Description

NOTE:

The Business Terms for which PII Flag is set and for those Business Terms that are used in Entities, the underlying attributes of those Entities will be redacted by applying the appropriate redaction policies. A normal user cannot query or view the redacted data. Only users with exempt redaction policy role can view or query the data.

For each Business Term, there is a corresponding Glossary. The Glossary explains the meaning and purpose of each Business Term, which is the context. The Glossary consists of the information such as description, usage, sample values, and usage examples of the Business Terms.

Data Catalog defines and seeds the List of Values for all the Business Terms that are Flags. The List of Values help you with data sourcing and creating Data Quality Checks.

List of Values |

Display Name |

List of Values Description |

|---|---|---|

Y |

Yes |

Mortgae has a balloon payment. |

N |

No |

Mortgae has no balloon payment. |

Data Catalog Contents include Data Quality Check Rules. These Rules are defined at the Business Term and Entity Level, and seeded as a part of the Data Catalog Content.

The following are the types of Data Quality Checks and their definitions:

Data Quality Check |

Definition |

|---|---|

Blank Value Check |

Identifies if the base column is empty considering the blank space. |

Column Reference/Specific Value Check |

Compares the base column data with another column of the base table or with a specified direct value by using a list of pre-defined operators. |

Data Length Check |

Checks for the length of the base column data by using a minimum and maximum value, and identifies if it falls outside the specified range. |

Duplicate Check |

Is used when a combination of the column is unique and identifies all duplicate data of a base table in terms of the columns selected for the duplicate check. |

List of Value Check |

It can be used to verify values where a dimension/master table is not present. This check identifies if the base column data does not match with a value or specified code in a list of values. |

NULL Value Check |

Identifies if NULL is specified in the base column. |

Referential Integrity Check |

Identifies all the base column data that has not been referenced by the selected column of the referenced table. Here, the user specifies the reference table and columns. |

Range Check |

Identifies if the base column data falls outside a specified range of a Minimum and Maximum value. Value Needs to be between 0 and 100. |

The controls are specific to reports.

Use this Run Pipeline (Process) to perform the Data Quality Checks on source records for various data elements.

To use and execute the Source Data Quality Check Process in the Process Orchestration, do the following:

1. To access the Source Data Quality Check Process Pipeline, on the Home Page, select the Process Orchestration. The Process Modeller Page is displayed.

2. On the Process Modeller Page, search and select the Source Data Quality Check Process. The Process Flow Page is displayed. This Process Flow is designed on the Drawing Canvas using the Transition, Activity, and Widgets Components available in the floating toolbar. RUN DQ RULE Widgets representing DQ Groups are set up in parallel to each other. A Data Service Widget called as DQReportingEngine is added at the end meant for reporting Data Quality Checks.

3. To view the details of any Widget, double-click on the Widget and the details related to its Activity, Transition, and Notification are displayed. On the drawing canvas, you can select and see the Definition, Data Fields, and Application Rule details.

4. To execute the Run, you can select the Run Parameter Values using the Execution Button on the Process Flow Page or on the Process Modeller Page.

Go to the Process Modeller Page to execute the Run. Click the Menu Button corresponding to the Source Data Quality Check Process that needs to be executed. Click Execute Run. The Execution Page is displayed.

5. On the Execution Page, to execute the Run with parameters, select With Parameters in the Execution Type List. Select the required As of Date for which the Data Quality Checks need to be processed. Click the Apply Button to initiate the Run Pipeline execution.

NOTE:

The execution of the Run Pipeline is triggered using the selected Extraction Date.

See the Process Orchestration Section for more details about the Process Orchestration Framework.

6. To verify the Run Execution of the Source Data Quality Check Process, do the following:

a. To open the Process Monitor Page, on the Process Modeller Page, click the Process Monitor Button or select Process Flow Monitor on the Process Modeller Menu.

b. The Process Monitor Page is displayed, which lists all the Run Instances corresponding to the Source Data Quality Check Process. On the Process Monitor Page, search by the Process ID, or by the Process Name Source Data Quality Check Process, and select the Process Instance for the required Run Pipeline (Process) that was executed.

7. The Process Flow Page is displayed with the Run Execution Status on each Node of the Source Data Quality Check Process.

8. To verify the Run Execution Logs, do the following:

a. On the Process Monitor Page, click the required Process Instance for which you need to verify the Execution Logs. The Process Flow Page is displayed with the Run Execution Status on each Node.

b. To see the Execution Status details of a Node, double-click on that Node. The Execution Status details Page is displayed. Click Execution Logs. The Log Viewer Page is displayed, which lists all the Logs related to the Process Instance. To see the details of a log entry, click the Show More Button. Click outside the Log Viewer Page to close it.

A Slowly Changing Dimension (SCD) is a dimension that stores and manages both current and historical data over time in a Data Warehouse.

The three types of SCDs are as follows:

· Type 1 SCDs - Overwriting: In a Type 1 SCD, the new data overwrites the existing data. Therefore, the existing data is lost as it is not stored anywhere else. No additional information needs to be specified to create a Type 1 SCD.

· Type 2 SCDs - Creating another dimension record: A Type 2 SCD retains the full history of values. When the value of a chosen attribute changes, the current record is closed. A new record is created with the changed data values and this new record becomes the current record. Each record contains the effective time and expiration time to identify the time period between which the record was active.

· Type 3 SCDs - Creating a current value field: A Type 3 SCD stores two versions of values for certain selected level attributes. Each record stores the previous value and the current value of the selected attribute. When the value of any of the selected attributes changes, the current value is stored as the old value and the new value becomes the current value.

Data Catalog supports Type1 and Type 2 types of SCD.

The Dimension Population Process in the Process Orchestration displays the available SCDs with details such as Map Reference Number and Entity Name.

A Business Hierarchy refers to organizing data into logical tree structure to represent the groups and relations among various levels at which measure can be viewed. A measure can be viewed at different levels depending upon the hierarchy breakdown of the dimension category.

Data Catalog supports data loading using the following Hierarchies:

· Account Hierarchy Population

· Cash Flow Type Hierarchy Population

· Employee Hierarchy Population

· GL Account Hierarchy Population

· Legal Entity Hierarchy Population

· Line Of Business Hierarchy Population

· Organization Unit Hierarchy Population

· Party Hierarchy Population

· Product Hierarchy Population

NOTE:

You must provide the snapshot for the Hierarchy code that has been corrected or modified when you reload the Hierarchy data.

Hierarchy data loading is a part of the Dimension Population Process.

Use this Run Pipeline (Process) to manage past and historical data for various Dimensions.

To use and execute the Dimension Population Process in the Process Orchestration, do the following:

1. To access the Dimension Population Process Pipeline, on the Home Page, select the Process Orchestration. The Process Modeller Page is displayed.

2. On the Process Modeller Page, search and select the Dimension Population Process. The Process Flow Page is displayed. This Process Flow is designed on the Drawing Canvas using the Transition, Activity, and Widgets Components available in the floating tool bar. SCD Widgets representing individual SCDs are set up in parallel to each other. At the end of this process, the Connectors representing Hierarchies are set in parallel.

3. To view the details of any Node, double-click on the Node and the details related to its Activity, Transition, and Notification are displayed. On the drawing canvas, you can select and see the Definition, Data Fields, and Application Rule details.

4. To execute the Run, you can select the Run Parameter Values using the Execution Button on the Process Flow Page or on the Process Modeller Page.

Go to the Process Modeller Page to execute the Run. Click the Menu Button corresponding to the Dimension Population Process that needs to be executed. Click Execute Run. The Execution Page is displayed.

5. On the Execution Page, to execute the Run with parameters, select With Parameters in the Execution Type List. Select the required As of Date for which the SCDs need to be processed. Click the Apply Button to initiate the Run Pipeline execution.

NOTE:

The execution of the Run Pipeline is triggered using the selected Extraction Date.

See the Process Orchestration Section for more details about the Process Orchestration.

6. To verify the Run Execution of the Dimension Population Process, do the following:

a. To open the Process Monitor Page, on the Process Modeller Page, click the Process Monitor Button or select Process Flow Monitor on the Process Modeller Menu.

b. The Process Monitor Page is displayed, which lists all the Run Instances corresponding to the Dimension Population Process. On the Process Monitor Page, search by the Process ID, or by the Process Name Dimension Population Process, and select the Process Instance for the required Run Pipeline (Process) that was executed.

7. The Process Flow Page is displayed with the Run Execution Status on each Node of the Dimension Population Process.

8. To verify the Run Execution Logs, do the following:

a. On the Process Monitor Page, click the required Process Instance for which you need to verify the Execution Logs. The Process Flow Page is displayed with the Run Execution Status on each Node.

b. To see the Execution Status details of a Node, double-click on that Node. The Execution Status details Page is displayed. Click Execution Logs. The Log Viewer Page is displayed, which lists all the Logs related to the Process Instance. To see the details of a log entry, click the Show More Button. Click outside the Log Viewer Page to close it.