Archiving Shipping and Pegging History

Use the Data Archive Manager in PeopleTools to move shipped demand lines and peg chains from the transaction tables to the history tables.

| Page Name | Definition Name | Usage |
|---|---|---|
| Archive Data To History Page | PSARCHRUNCNTL | Use this page to submit batch Application Engine jobs that move shipping data and peg chains between the transactional tables and the history tables. |
| Define Archive Query Binds Page | PSARCHRUNQRYBND | Enter the bind variables for shipping and pegging history. |

Use the Data Archive Manager in PeopleTools to remove, from a business unit, shipped and canceled demand lines whose ship dates fall within a specified date range.

Rules Used to Determine Eligibility for Archiving Shipping History

The following rules identify which demand lines are eligible for archiving:

- The demand line must have been depleted by the Deplete On Hand Qty (Depletion) process (IN_FUL_DPL) or previously canceled.
- Demand lines for sales orders, intercompany transfers, and non-inventory items must have been picked up by PeopleSoft Billing.
- If the demand line requires Intrastat reporting, the Intrastat reports must have been generated.
- The demand line must have a ship date that falls within the specified date range.
- All demand lines of an order must be eligible for archiving before any demand line of that order can be archived.
- All order lines must be archived before the order header records (ISSUE_HDR_INV and MSR_HDR_INV) can be archived.
- All peg chains must be archived before their related pegged demand lines can be archived.
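The eligibility rules above can be expressed as a filter over demand lines. The following Python sketch is illustrative only: the `DemandLine` class and its fields (such as `needs_billing` and `has_unarchived_pegs`) are hypothetical stand-ins for status information that the INDEMAND archive template derives from the actual tables, and the sketch does not reproduce the delivered archive query. It applies the line-level rules first, then keeps an order only if every one of its demand lines qualifies.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, Iterable, List, Optional, Set


@dataclass(frozen=True)
class DemandLine:
    # Hypothetical, simplified view of a demand line's archiving status.
    order_no: str
    line_no: int
    ship_date: Optional[date]
    depleted: bool = False             # processed by Depletion (IN_FUL_DPL)
    canceled: bool = False
    needs_billing: bool = False        # sales order, intercompany transfer, or non-inventory item
    billed: bool = False               # picked up by PeopleSoft Billing
    needs_intrastat: bool = False
    intrastat_done: bool = False
    has_unarchived_pegs: bool = False  # related peg chains not yet archived


def line_is_eligible(line: DemandLine, start: date, end: date) -> bool:
    """Apply the line-level rules to a single demand line."""
    if not (line.depleted or line.canceled):
        return False
    if line.ship_date is None or not (start <= line.ship_date <= end):
        return False
    if line.needs_billing and not line.billed:
        return False
    if line.needs_intrastat and not line.intrastat_done:
        return False
    # Peg chains must be archived before their pegged demand lines.
    return not line.has_unarchived_pegs


def eligible_orders(lines: Iterable[DemandLine], start: date, end: date) -> Set[str]:
    """Return the orders whose demand lines are all eligible (all-or-nothing per order)."""
    by_order: Dict[str, List[DemandLine]] = {}
    for line in lines:
        by_order.setdefault(line.order_no, []).append(line)
    return {
        order
        for order, group in by_order.items()
        if all(line_is_eligible(ln, start, end) for ln in group)
    }


if __name__ == "__main__":
    lines = [
        DemandLine("SO1", 1, date(2024, 1, 10), depleted=True),
        DemandLine("SO1", 2, date(2024, 1, 12), canceled=True),
        DemandLine("SO2", 1, date(2024, 1, 15), depleted=True),
        # Not yet picked up by Billing, so the whole order SO2 is held back.
        DemandLine("SO2", 2, date(2024, 1, 16), depleted=True, needs_billing=True),
    ]
    print(eligible_orders(lines, date(2024, 1, 1), date(2024, 1, 31)))  # {'SO1'}
```

The order-level check is what enforces the rule that no line of an order is archived until all of its lines qualify: SO2 line 1 passes on its own, but SO2 is excluded because line 2 has not been billed.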

Tables Accessed and Updated for Shipping History

The Data Archive Manager archives and then purges the following tables:

- IN_DEMAND
- IN_DEMAND_ADDR
- IN_DEMAND_BI
- IN_DEMAND_CMNT
- IN_DEMAND_TRPC
- IN_DEMAND_HASH
- SHIP_HDR_INV
- SHIP_SERIAL_INV
- SHIP_CMNT_INV
- IN_DELIVERY_ORD
- DEMAND_PHYS_INV
- ISSUE_HDR_INV
- MSR_HDR_INV
- LOT_ALLOC_INV
- EST_SHIP_INV
- EST_SHIP_IN_TMP
- SHIP_CNTR_INV
- SHIP_CNTRLS_INV

The Data Archive Manager purges the historical data in the IN_SHIP_DOC_TRK table without archiving it. Data from this table cannot be recovered once it is purged.
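The archive-then-purge behavior, and the purge-only treatment of IN_SHIP_DOC_TRK, can be sketched with an in-memory SQLite database. This is a minimal illustration under stated assumptions: the `_HIST` table names, the `ORDER_NO` column, and the `archive_and_purge` function are hypothetical, and only two of the archived tables are modeled. Demand lines are processed before order headers to respect the ordering rule listed among the eligibility rules.

```python
import sqlite3
from typing import Sequence

# Archived tables, ordered so demand lines are moved before order headers.
# Only two of the listed tables are modeled; the "_HIST" names are hypothetical.
ARCHIVED_TABLES = ["IN_DEMAND", "ISSUE_HDR_INV"]
# Purged without being copied to history: this data cannot be recovered.
PURGE_ONLY_TABLES = ["IN_SHIP_DOC_TRK"]


def archive_and_purge(conn: sqlite3.Connection, order_nos: Sequence[str]) -> None:
    """Copy eligible rows to history, then delete them, all in one transaction."""
    if not order_nos:
        return
    params = list(order_nos)
    marks = ",".join("?" * len(params))
    # Table names come from the constant lists above, never from user input.
    with conn:  # commits on success, rolls back if any statement fails
        for table in ARCHIVED_TABLES:
            conn.execute(
                f"INSERT INTO {table}_HIST SELECT * FROM {table} WHERE ORDER_NO IN ({marks})",
                params,
            )
            conn.execute(f"DELETE FROM {table} WHERE ORDER_NO IN ({marks})", params)
        for table in PURGE_ONLY_TABLES:
            conn.execute(f"DELETE FROM {table} WHERE ORDER_NO IN ({marks})", params)


def make_shipping_demo_db() -> sqlite3.Connection:
    """Build a toy database with two orders in every modeled table."""
    conn = sqlite3.connect(":memory:")
    for table in ARCHIVED_TABLES + PURGE_ONLY_TABLES:
        conn.execute(f"CREATE TABLE {table} (ORDER_NO TEXT, INFO TEXT)")
        conn.executemany(f"INSERT INTO {table} VALUES (?, ?)", [("SO1", "a"), ("SO2", "b")])
    for table in ARCHIVED_TABLES:
        conn.execute(f"CREATE TABLE {table}_HIST (ORDER_NO TEXT, INFO TEXT)")
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = make_shipping_demo_db()
    archive_and_purge(conn, ["SO1"])
    for name in ("IN_DEMAND", "IN_DEMAND_HIST", "IN_SHIP_DOC_TRK"):
        print(name, conn.execute(f"SELECT COUNT(*) FROM {name}").fetchone()[0])
```

After archiving order SO1, its rows exist only in the history tables, except for IN_SHIP_DOC_TRK, where the SO1 row is simply gone.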

Use the Data Archive Manager in PeopleTools to move peg chains in the IN_PEGGING table to its history table, IN_PEG_HIST. This routine removes completed and canceled peg chains within a specified date range.

Table Accessed and Updated for Pegging History

The IN_PEGGING table is accessed and updated by the Data Archive Manager.
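A pegging archive run amounts to selecting completed and canceled peg chains in a date range, copying them to IN_PEG_HIST, and removing them from IN_PEGGING. The sketch below assumes a simplified one-row-per-peg-chain layout; the column names (`PEG_ID`, `PEG_STATUS`, `STATUS_DATE`), the status values, and the `:from_date`/`:to_date` bind names are hypothetical and do not reflect the query delivered with the INPEG template. The named binds play the role of the bind variables entered on the Define Archive Query Binds page.

```python
import sqlite3

# Hypothetical archive query with named bind variables for the date range.
ELIGIBLE_PEGS = """
    SELECT PEG_ID FROM IN_PEGGING
    WHERE PEG_STATUS IN ('COMPLETE', 'CANCELED')
      AND STATUS_DATE BETWEEN :from_date AND :to_date
"""


def archive_peg_chains(conn: sqlite3.Connection, from_date: str, to_date: str) -> int:
    """Move completed/canceled peg chains in the range to IN_PEG_HIST; return the count."""
    binds = {"from_date": from_date, "to_date": to_date}  # ISO dates compare correctly as text
    with conn:  # copy and delete succeed or fail together
        conn.execute(
            f"INSERT INTO IN_PEG_HIST SELECT * FROM IN_PEGGING WHERE PEG_ID IN ({ELIGIBLE_PEGS})",
            binds,
        )
        cur = conn.execute(f"DELETE FROM IN_PEGGING WHERE PEG_ID IN ({ELIGIBLE_PEGS})", binds)
    return cur.rowcount


def make_pegging_demo_db() -> sqlite3.Connection:
    """Build a toy IN_PEGGING table with one row per peg chain."""
    conn = sqlite3.connect(":memory:")
    for table in ("IN_PEGGING", "IN_PEG_HIST"):
        conn.execute(f"CREATE TABLE {table} (PEG_ID INTEGER, PEG_STATUS TEXT, STATUS_DATE TEXT)")
    conn.executemany(
        "INSERT INTO IN_PEGGING VALUES (?, ?, ?)",
        [
            (1, "COMPLETE", "2024-01-05"),
            (2, "CANCELED", "2024-01-20"),
            (3, "OPEN", "2024-01-10"),      # still active: not eligible
            (4, "COMPLETE", "2024-03-01"),  # outside the date range
        ],
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = make_pegging_demo_db()
    print(archive_peg_chains(conn, "2024-01-01", "2024-01-31"))  # 2
```

Only peg chains 1 and 2 are moved: chain 3 is still active and chain 4 falls outside the date range.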

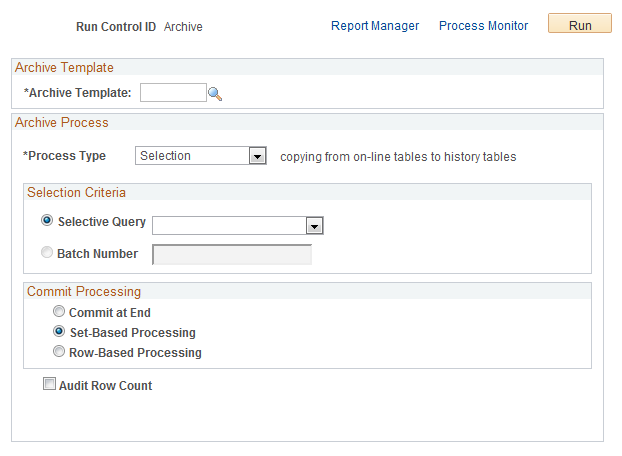

Use the Archive Data To History page (PSARCHRUNCNTL) to submit batch Application Engine jobs that move shipping data and peg chains between the transactional tables and the history tables.

Navigation:

This example illustrates the fields and controls on the Archive Data to History process page. You can find definitions for the fields and controls later on this page.

PeopleSoft Data Archive Manager provides an integrated and consistent framework for archiving data from PeopleSoft applications. Using the Archive Data To History page, you can define a job that moves shipping data and peg chains from transactional tables to history tables.

| Field or Control | Description |
|---|---|
| Archive Template | Select the archive template INDEMAND to move shipping data from transactional tables to history tables, or select INPEG to move peg chain data to the history table. |
| Process Type | Select the type of action to perform. The options are: |
| Selective Query | Specify the archive query, defined within the archive template, to use at runtime. Once you enter the archive query, the Define Binds link is displayed. If the query has bind variables, you are prompted to enter them when you click the Define Binds link. |
| Define Binds | Select this link to access the Define Archive Query Binds page (PSARCHRUNQRYBND), where you can enter the bind variables for shipping and pegging history. |
| Batch Number | For archiving processes that are based on data in the history tables (such as deleting data from transactional tables, copying data from history tables to transactional tables, and deleting data from history tables), you are prompted to enter an Archive Batch Number. |
| Commit at End | Data Archive Manager processes data using set-based processing but doesn't issue any commits to the database server until the entire process has completed. For example, if your Archive Template is defined with pre- and post-Application Engine programs, Data Archive Manager first executes the pre-Application Engine program, then processes all of the tables in the Archive Template, and finally executes the post-Application Engine program. A commit is issued to the database only after all of these steps complete successfully. When you select this option, the set-based processing option is selected automatically as well. |
| Set-Based Processing | Data is processed by passing a single SQL statement per record to be archived to the database server. A commit is issued to the database server after each SQL statement completes successfully. |
| Row-Based Processing | Data Archive Manager processes data one row at a time using PeopleCode fetches. This method of archiving is more memory intensive and takes longer than set-based processing. However, for archiving processes that involve significant amounts of data, row-based processing can reduce adverse effects on the database server. Row-based processing is appropriate when you are archiving large amounts of data from transactional tables and want to issue commits more frequently. If you select this option, you must enter a commit frequency. |
| Commit Frequency | Specify the number of rows to process before issuing a commit to the database. |
| Audit Row Count | Select to audit the number of rows in the record that meet the criteria. This number is displayed in the Number of Rows field on the Audit Archiving page. |
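The three commit options differ mainly in when commits are issued. The following SQLite sketch models each option as a hypothetical function that copies matching rows into a `_HIST` table and returns the number of commits it issued. The table names, the `where` predicate, and the optional `pre`/`post` callables (standing in for pre- and post-Application Engine programs) are assumptions for illustration, not Data Archive Manager internals.

```python
import sqlite3
from typing import Callable, Optional, Sequence

Step = Optional[Callable[[sqlite3.Connection], None]]


def _copy_sql(table: str, where: str) -> str:
    # `where` is a trusted constant in this sketch; real bind values belong in parameters.
    return f"INSERT INTO {table}_HIST SELECT * FROM {table} WHERE {where}"


def commit_at_end(conn: sqlite3.Connection, tables: Sequence[str], where: str,
                  pre: Step = None, post: Step = None) -> int:
    """Set-based statements, but a single commit after pre, all tables, and post succeed."""
    try:
        if pre:
            pre(conn)
        for table in tables:
            conn.execute(_copy_sql(table, where))
        if post:
            post(conn)
    except Exception:
        conn.rollback()  # nothing from this run is kept
        raise
    conn.commit()
    return 1


def set_based(conn: sqlite3.Connection, tables: Sequence[str], where: str) -> int:
    """One SQL statement per record (table), with a commit after each statement."""
    commits = 0
    for table in tables:
        conn.execute(_copy_sql(table, where))
        conn.commit()
        commits += 1
    return commits


def row_based(conn: sqlite3.Connection, table: str, where: str, commit_frequency: int) -> int:
    """Copy one row at a time, committing every `commit_frequency` rows."""
    if commit_frequency < 1:
        raise ValueError("commit_frequency must be at least 1")
    # Rows are fetched up front for simplicity; a real engine would stream them.
    rows = conn.execute(f"SELECT * FROM {table} WHERE {where}").fetchall()
    commits = 0
    for i, row in enumerate(rows, start=1):
        marks = ",".join("?" * len(row))
        conn.execute(f"INSERT INTO {table}_HIST VALUES ({marks})", row)
        if i % commit_frequency == 0:
            conn.commit()
            commits += 1
    if len(rows) % commit_frequency:  # commit the final partial batch
        conn.commit()
        commits += 1
    return commits


def make_commit_demo_db(tables: Sequence[str], n_rows: int = 5) -> sqlite3.Connection:
    """Create each table and its _HIST copy, filling the table with IDs 1..n_rows."""
    conn = sqlite3.connect(":memory:")
    for table in tables:
        conn.execute(f"CREATE TABLE {table} (ID INTEGER)")
        conn.execute(f"CREATE TABLE {table}_HIST (ID INTEGER)")
        conn.executemany(f"INSERT INTO {table} VALUES (?)", [(i,) for i in range(1, n_rows + 1)])
    conn.commit()
    return conn


if __name__ == "__main__":
    print(commit_at_end(make_commit_demo_db(["A", "B"]), ["A", "B"], "ID <= 3"))
    print(set_based(make_commit_demo_db(["A", "B"]), ["A", "B"], "ID <= 3"))
    print(row_based(make_commit_demo_db(["A"]), "A", "ID <= 5", 2))
```

With five matching rows and a commit frequency of 2, the row-based sketch issues three commits (after rows 2 and 4, plus a final commit for the remaining row), while the commit-at-end sketch issues exactly one commit regardless of how many tables it processes and keeps nothing if any step fails.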

See the product documentation for PeopleTools: Lifecycle Management Guide Migrating Data with Data Mover