2 DSR Features and Functions

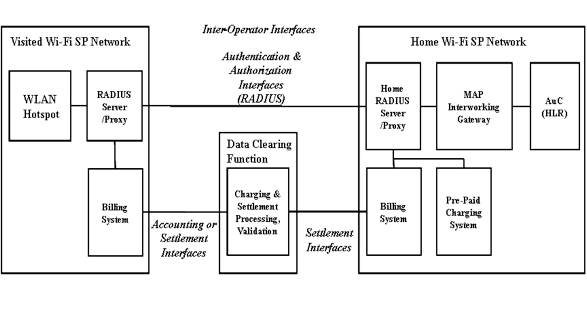


2.1 Overview

One primary function of the DSR is to act as a Diameter relay, as defined in RFC 6733, that routes Diameter traffic based on provisioned routing data. As a result, the DSR reduces the complexity and cost of maintaining a large number of SCTP connections in LTE, IMS, and 3G networks, simplifies the Diameter network, and streamlines the provisioning of Diameter interfaces. The DSR supports flexible traffic load-sharing and redundancy schemes and relieves Diameter clients and servers of many of these tasks, thereby reducing cost and time to market and freeing up valuable resources in the endpoints. For a full list of supported Diameter interfaces, see Appendix A: Supported Diameter Interfaces.

DSR network elements are deployed in geographically diverse mated pairs, with each NE servicing signaling traffic to and from a collection of Diameter clients, servers, and agents. The DSR Message Processor (MP) provides the Diameter message handling function, and each DSR MP supports connections to all Diameter peers (defined as elements to which the DSR has a direct transport connection).
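To make "routing based on provisioned routing data" concrete, the following is a minimal sketch of how a relay might match a request's Destination-Realm and Application-Id against provisioned rules and pick a route list. The rule fields, the assumption that a lower priority value wins, and the realm and route list names are hypothetical simplifications, not the DSR's actual configuration schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PeerRoutingRule:
    """Hypothetical, simplified routing rule; None means "match any value"."""
    name: str
    priority: int                 # assumption: lower value = higher precedence
    dest_realm: Optional[str]     # Destination-Realm AVP to match
    app_id: Optional[int]         # Application-Id from the Diameter header
    route_list: str               # route list to use when the rule matches


def select_route_list(rules: list[PeerRoutingRule],
                      dest_realm: str, app_id: int) -> Optional[str]:
    """Return the route list of the highest-precedence matching rule, or None."""
    matches = [
        r for r in rules
        if (r.dest_realm is None or r.dest_realm == dest_realm)
        and (r.app_id is None or r.app_id == app_id)
    ]
    if not matches:
        # A Diameter relay would typically answer with DIAMETER_UNABLE_TO_DELIVER (3002).
        return None
    return min(matches, key=lambda r: r.priority).route_list


rules = [
    PeerRoutingRule("gx-home", 10, "home.example.com", 16777238, "PCRF_RL"),  # Gx
    PeerRoutingRule("home-default", 50, "home.example.com", None, "HSS_RL"),
]
print(select_route_list(rules, "home.example.com", 16777238))  # PCRF_RL
print(select_route_list(rules, "home.example.com", 16777251))  # HSS_RL
```

Keeping the rules as provisioned data, rather than logic in each client, is what lets the relay change routing centrally without touching the endpoints.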

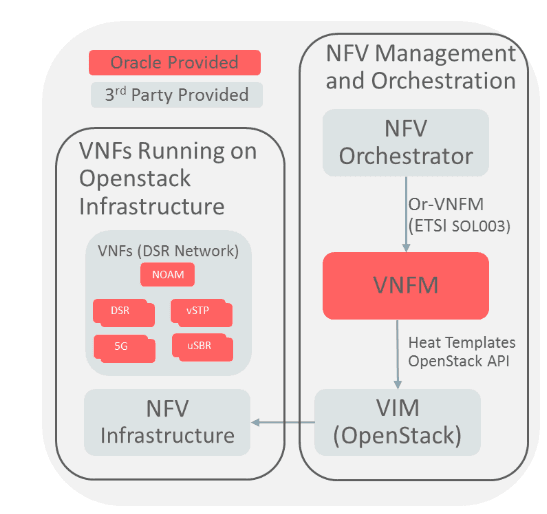

2.2 DSR system architecture

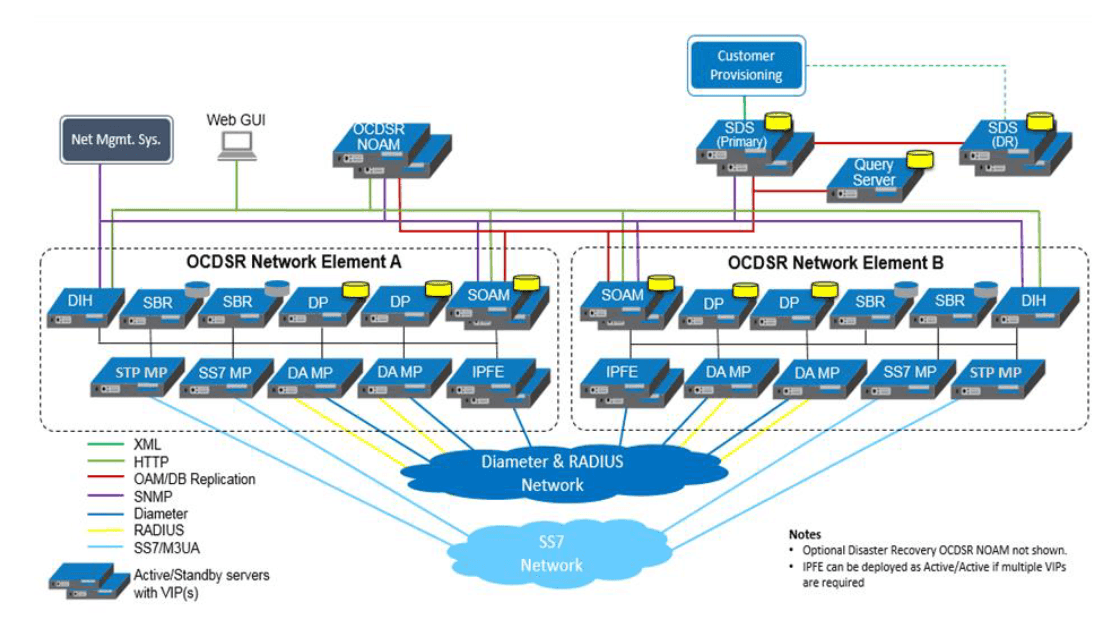

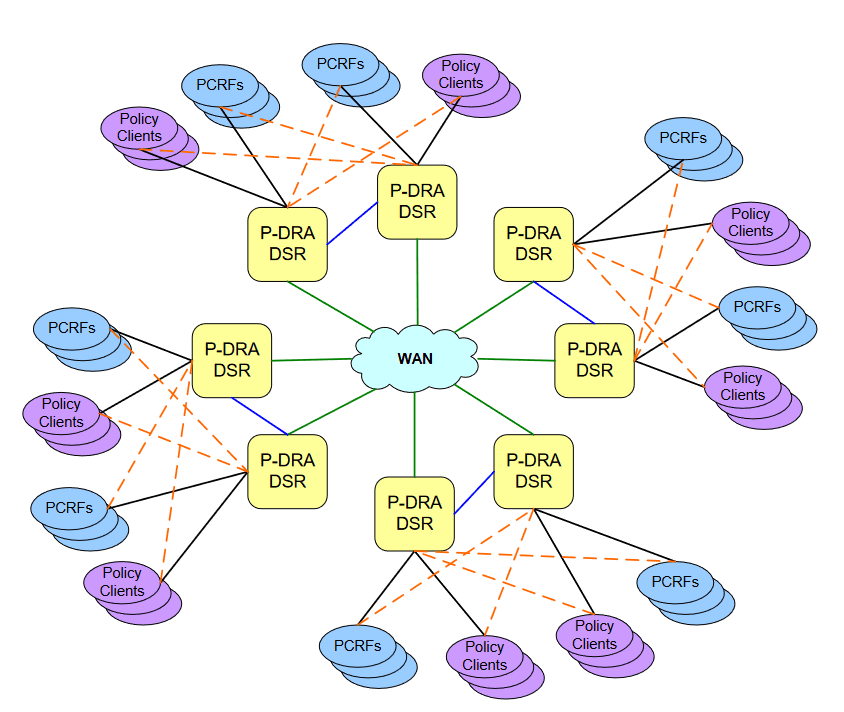

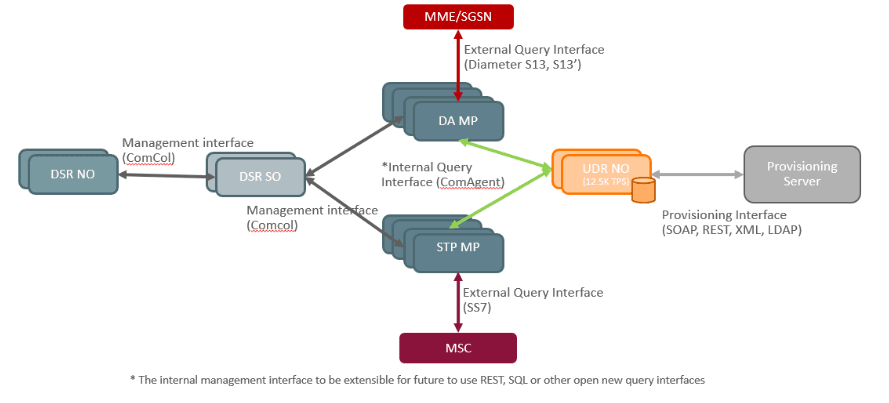

Figure 2-1 DSR 7.x Architecture

The key components of the solution are:

- Operations, Administration, Maintenance, and Provisioning (OAMP)

- System OAM per signaling node

- Network OAMP

- Diameter Agent Message Processor (DA MP) (handles Diameter and RADIUS)

- SS7 Message Processor

- STP Message Processor

- IP Front End (IPFE)

- Session Binding Repository (SBR)

- Database Processor (DP) / Subscriber Data Server (SDS)

- Query Server (QS)

- Integrated Diameter Intelligence Hub (IDIH)

- User Data Repository (UDR NO)–Optional

- VNF Manager (VNFM)–Optional

- Service Proxy Function (SPF) and NRF (Network Repository Function)–Optional

The following features are not compatible with DSR 8.3:

- Diameter Intelligence Hub (DIH)

- Gateway Location Application (GLA)

- RADIUS

In the rest of this document, references to these non-compatible features should not be considered for feature enabling.

These components are described at a high level in the following subsections. Although each component plays a key role, only the OAM and DA MP components are mandatory.

For details on licensing of the various DSR features, see the DSR Licensing Information User Manual, available on the Oracle Help Center (OHC).

2.2.1 Operations, Administration and Maintenance

The Operations, Administration, Maintenance and Provisioning components of the DSR include the System OAM (SOAM) located at each signaling node and the Network OAMP (NOAMP).

Network OAMP:

- Centralized OAMP for the DSR network.

- Central location for network-wide data configuration, such as topology hiding.

- Supports an SNMP northbound interface to operations support systems for fault management.

- Runs on a pair of servers in an active/standby configuration, or can be virtualized on the System OAM blades at one signaling site (for small systems with only two DSR signaling nodes).

- Optionally supports a disaster recovery site for geographic redundancy.

- Provides configuration and management of topology data.

- Maintains event and security logs.

- Centralizes collection of, and access to, measurements and reports.

- Provides a centralized view of key operational metrics to help identify potential operational issues.

- Provides a centralized architecture for the configuration and management of geo-redundant state databases for the policy and charging proxy.

System OAM:

- Centralized OAM interface for the node.

- Provides a mechanism to configure the Diameter data (routing tables, mediation, and so on).

- Maintains a local copy of the configuration database.

- Supports an SNMP northbound interface to operations support systems for fault management.

- Provides a mechanism to create user groups with various access levels.

- Maintains event and security logs.

- Centralizes collection of, and access to, measurements and reports.

- Provides a centralized view of key operational metrics to help identify potential operational issues.

2.2.2 Diameter Agent Message Processor (DA MP)

The DA MP hosts DSR applications such as address resolution, policy and charging, and charging proxy, and scales by adding blades or instances.

Key characteristics of a DA MP are as follows:

- Provides application specific handling of real-time Diameter and/or RADIUS messages.

- Accesses DPs for the real-time version of the subscriber database, as needed.

- Accesses session and subscriber bindings from SBRs, as needed.

- Interfaces with System OAM or IDIH.

2.2.3 STP Message Processor

The STP Message Processor provides the functionality of Signaling Transfer Point (STP).

Key characteristics of an STP MP are as follows:

- Supports M3UA in signaling gateway mode.

- Supports M2PA in client and server mode.

- Supports SCCP routing with enhanced GTT capabilities.

- Provides flow control at the SCTP layer to manage traffic rates on each link.

- Interfaces with System OAM and supports configurations through RESTful MMI.

2.2.4 IP Front End

The DSR IP Front End (IPFE) provides TCP/SCTP connection-based load balancing that hides the internal DSR hardware architecture and IP addresses from the customer network. The IPFE is typically deployed in sets of active-active pairs and distributes incoming connections across DA MPs. Connections to the Target Set Addresses (TSAs) are active/active and provide TCP and SCTP connectivity.

Key characteristics of an IPFE are as follows:

- Optional component of the DSR.

- Supports up to two active/standby pairs, with 3.2 Gbps of bandwidth per active/standby pair.

- Supports SCTP multihoming.
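The IPFE's internal distribution algorithm is not described in this section. Purely to illustrate connection-based (rather than per-message) load balancing, the sketch below assigns each new client connection to a DA MP using rendezvous (highest-random-weight) hashing of the client address and port. Rendezvous hashing, SHA-256, and the MP names are assumptions chosen for the example, not the IPFE's actual method.

```python
import hashlib


def _score(mp: str, client_ip: str, client_port: int) -> int:
    # Deterministic pseudo-random score for the (MP, connection) pair.
    digest = hashlib.sha256(f"{mp}|{client_ip}|{client_port}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_da_mp(da_mps: list[str], client_ip: str, client_port: int) -> str:
    """Rendezvous hashing: choose the DA MP with the highest score for this connection."""
    if not da_mps:
        raise ValueError("no DA MPs available")
    return max(da_mps, key=lambda mp: _score(mp, client_ip, client_port))


mps = ["da-mp-1", "da-mp-2", "da-mp-3"]  # hypothetical MP names
print(pick_da_mp(mps, "192.0.2.10", 3868))
```

A property of this scheme is that removing one MP only moves the connections that were assigned to it; every other connection keeps its MP, which limits disruption to established Diameter peers.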

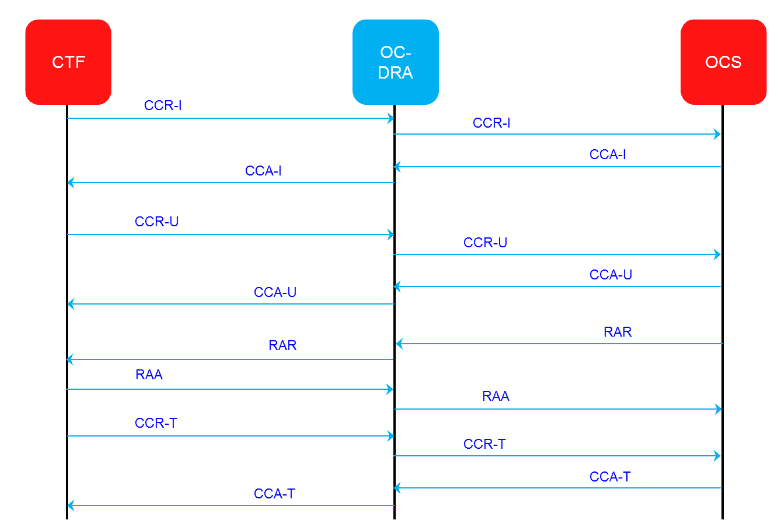

2.2.5 Session or Subscriber Binding Repository

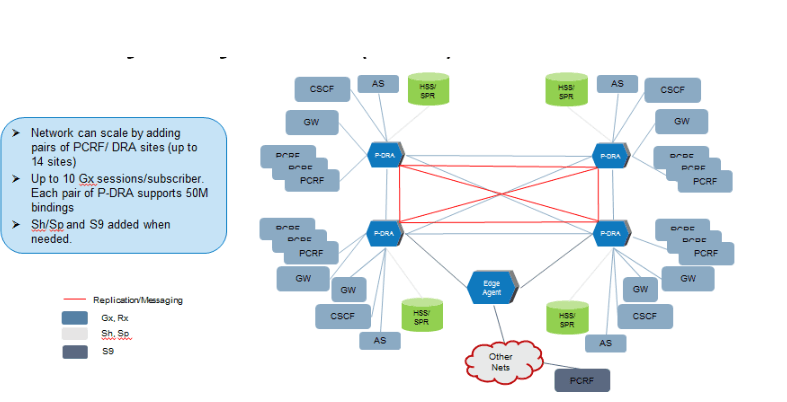

The SBR stores Diameter sessions and subscriber bindings for stateful applications. The Policy and Charging Application (PCA) supports Policy DRA (P-DRA) and Online Charging DRA (OC-DRA) functionality. OC-DRA uses session database SBRs (SBR(s)), and P-DRA uses both session database SBRs (SBR(s)) and subscriber binding database SBRs (SBR(b)). Throughout this document, the SBR types are referred to individually when significant differences are discussed, and simply as SBR when an attribute applies to all types.

Key characteristics of an SBR are as follows:

- Optional component of the DSR.

- Provides repository for subscriber and session state data.

- Provides DSRs with network-wide access to bindings.

- Provides procedures for in-service augmentation of the DSR signaling node-to-session SBR database relationships.

Several capabilities allow the SBR to be reconfigured after deployment, including:

- Binding SBR Capacity Growth/Degrowth: Allows in-service growth and degrowth of the Binding SBR database capacity in an existing P-DRA deployment, including augmenting the physical location of the Binding SBR servers.

- Session SBR Capacity Growth/Degrowth: Allows in-service growth and degrowth of the Session SBR database capacity in an existing P-DRA / OC-DRA deployment, including augmenting the physical location of the Session SBR servers.

- SBR Data Migration of a Session SBR Database: Allows reconfiguring an SBR database topology by moving data from one database to another, for example mating, unmating, or re-mating. An SBR data migration plan is used to move from an initial SBR database to a target SBR database without affecting traffic.

- Per Mated Pair Sizing of Session SBR: Supports independent sizing of the Session SBR databases in a P-DRA / OC-DRA network managed by a common DSR NOAM.

- P-DRA Support for 2.1M Network-Wide MPS: Scales policy network traffic to up to 2.1 million network-wide MPS of P-DRA traffic, including network-wide stateful Gx/Rx correlation to support VoLTE.
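As a simplified illustration of what a binding SBR provides to P-DRA, the sketch below records which PCRF a subscriber's Gx session was routed to, under several identities, so that a later Rx request carrying one of those identities can be routed to the same PCRF (Gx/Rx correlation). The class, the key prefixes, and the PCRF names are hypothetical; the real SBR is a distributed, replicated database, not an in-process dictionary.

```python
from typing import Optional


class BindingRepository:
    """In-memory stand-in for a binding SBR (hypothetical, illustration only)."""

    def __init__(self) -> None:
        self._bindings: dict[str, str] = {}  # binding key -> PCRF peer

    def create_binding(self, keys: list[str], pcrf: str) -> None:
        # A Gx session setup can carry several identities (e.g. IMSI, UE IP);
        # each one is bound to the PCRF selected for the subscriber.
        for key in keys:
            self._bindings[key] = pcrf

    def lookup(self, key: str) -> Optional[str]:
        """Return the PCRF bound to this identity, or None if no binding exists."""
        return self._bindings.get(key)

    def remove_binding(self, keys: list[str]) -> None:
        # Called when the subscriber's Gx session ends.
        for key in keys:
            self._bindings.pop(key, None)


repo = BindingRepository()
repo.create_binding(["imsi:001010123456789", "ipv4:10.1.2.3"], "pcrf-a")
print(repo.lookup("ipv4:10.1.2.3"))  # pcrf-a: an Rx request follows the Gx binding
```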

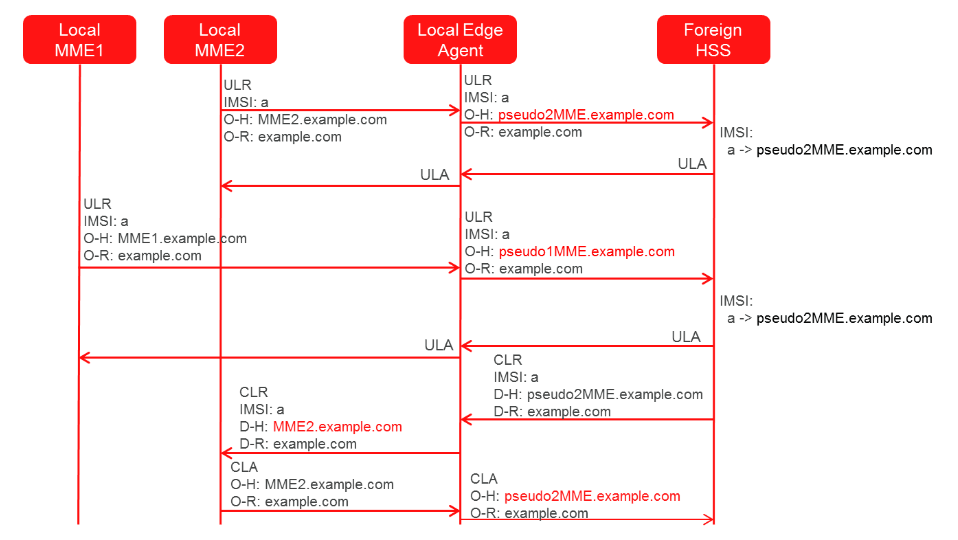

2.2.6 Subscriber Data Server

The SDS provides a centralized provisioning system for the distributed subscriber data repository. The SDS is a highly scalable database with a flexible schema.

Key characteristics of the SDS are as follows:

- Interfaces with provisioning systems to provision subscriber related data.

- Interfaces with DPs at each DSR network element.

- Replicates data to multiple sites.

- Stores and maintains the master copy of the subscriber database.

- Supports bulk import of subscriber data.

- Correlates records belonging to a single subscriber.

- Provides a web-based GUI for provisioning, configuration, and administration of the data.

- Supports an SNMP v2c northbound interface to operations support systems for fault management.

- Provides a mechanism to create user groups with various access levels.

- Provides continuous automated audit to maintain integrity of the database.

- Supports backup and restore of the subscriber database.

- Runs on a pair of servers in an active/hot-standby configuration, and can provide geographic redundancy by deploying two SDS pairs at diverse locations.

- Provides disaster recovery site capabilities.

2.2.7 Database Processor

The database processor is the repository of subscriber data on the individual DSR network elements. The database processor hosts the full address resolution database and scales by adding blades.

Key characteristics of a DP are as follows:

- Provides high-capacity, real-time database query capability to DA MPs.

- Interfaces with DP-SOAM (an application hosted on the same blades as the DSR SOAM) for provisioning of subscriber data and for measurements reporting across all DPs.

- Maintains synchronization of data across all database processors.

- Hosts other Oracle SDS-based applications.

2.2.8 Query Server

The Query Server contains a replicated copy of the local SDS database and supports a northbound MySQL interface for free-form verification queries of the SDS Provisioning Database. The query server’s northbound MySQL interface is accessible via its local server IP.

Key characteristics of the QS are as follows:

- Optional component that contains a real-time, replicated instance of the subscriber database.

- Provides LDAP, XML, and SQL access.

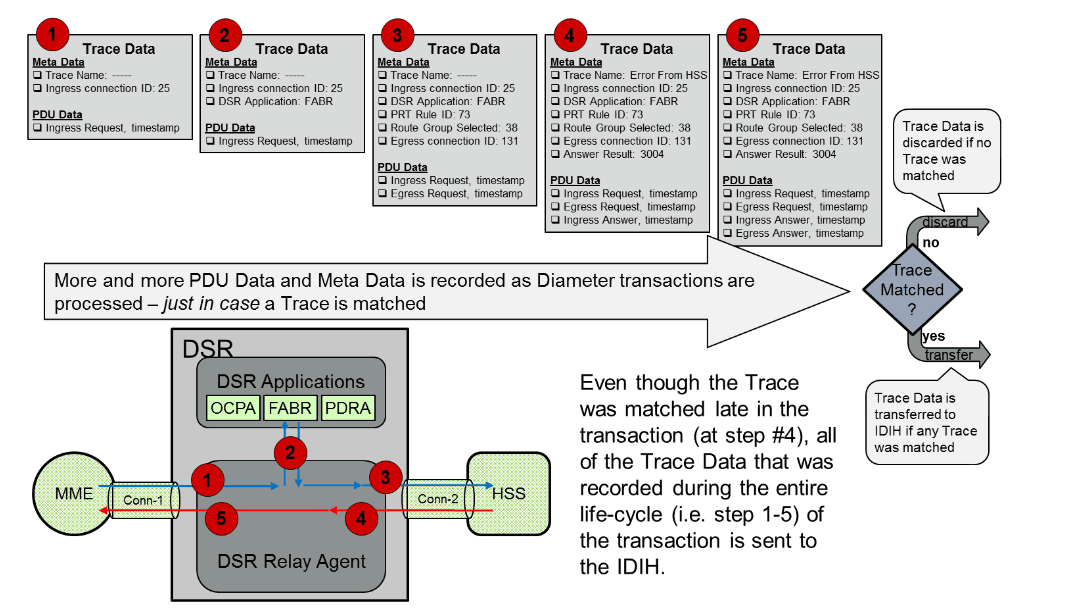

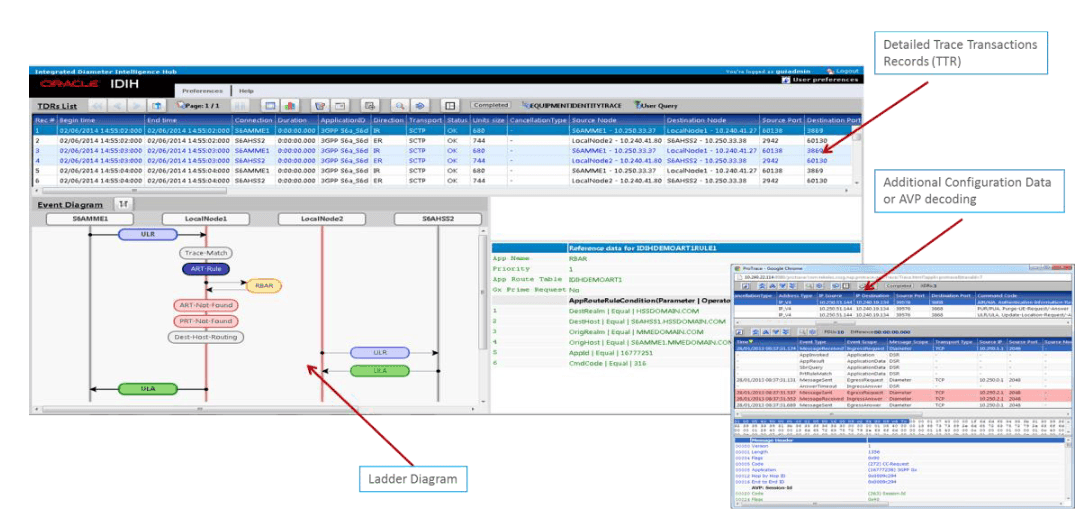

2.2.9 Integrated Diameter Intelligence Hub

The Integrated Diameter Intelligence Hub supports advanced troubleshooting for Diameter traffic handled by the DSR. The IDIH is an optional feature of the DSR that enables the selective collection and storage of Diameter traffic and provides nodal Diameter troubleshooting.

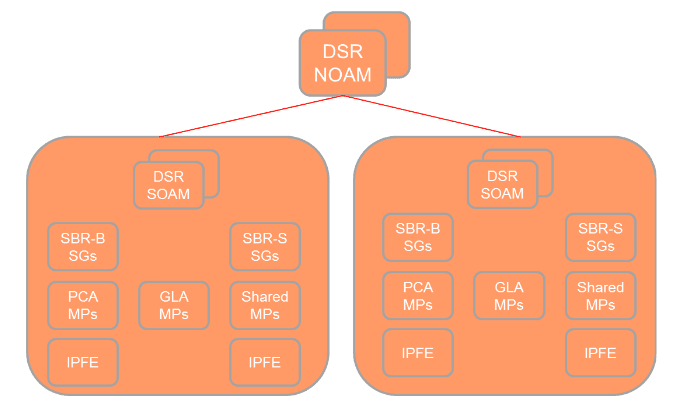

2.3 DSR OAMP

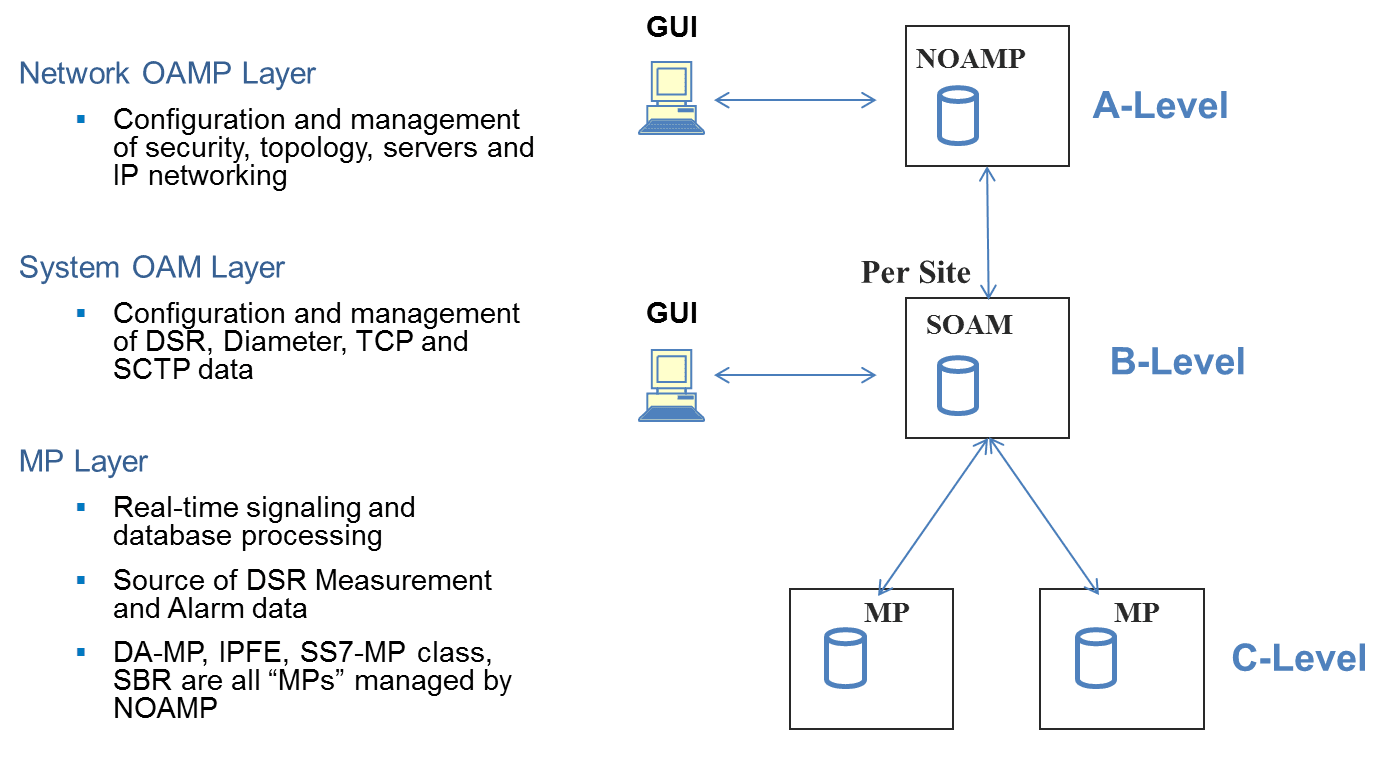

Figure 2-2 DSR 3-tiered Topology Architecture

Key services provided by the OAM components include:

- Centralized operational interface.

- Distribution of provisioned and configuration data to all message processors in all sites.

- Event collection and administration from all message processors.

- User and access administration.

- Northbound SNMP interface towards an operator EMS/NMS.

- Web-based GUI for configuration.

The DSR MPs host the Diameter and RADIUS Signaling Router applications and process Diameter and RADIUS messages.

2.3.1 Network Interfaces

Three types of network interfaces are used in the DSR:

- XMI – External Management Interface: Interface to the operator’s management network. XMI can be found on the OAM servers. All OAM&P functions are available to the user through the XMI.

- IMI – Internal Management Interface: The DSR's internal management network interface. All DSR nodes have this interface and use the IMI for the exchange of crucial internal data. The user does not have access to the internal management network.

- XSI – Signaling Interface: Interface to the operator’s signaling network. Only the Message Processors (MPs) have this interface. The XSI is used exclusively by the application and is not used by OAM&P for any purpose.

2.3.2 Web-Based GUI

The DSR provides a web-based graphical user interface as the primary interface that administrators and operators use to configure and maintain the network. GUI access is protected by user ID and password.

2.3.3 Operations and Provisioning

Operations and provisioning of the DSR are performed through one of the ten GUI sessions made available to the user by the internal web server(s). Through the GUI, the user can make all operations and provisioning changes to the DSR, including:

- Network Information (does not include switch configuration)

- Network Element

- Servers

- Routing and Configuration Databases

- Status and Manage for:

  - Network Elements

  - Servers

  - Replication

  - Collection

  - High Availability

  - Database

  - KPIs

  - Processes

  - Files

Network Information

The network information defines the network name and the layout of the network elements and their components, including how the components are interlinked and communicate with each other. The network information represents all server relationships within the application. These server relationships are then used to control data replication and data collection, and to define high availability relationships. Switch configuration is not defined by the network information.

Network Elements

The DSR application is a collection of servers linked by standardized interfaces. Network Elements (NEs) are containers that group and create relationships among servers in the network. A network element can contain multiple servers, but a single server is part of only one network element. The DSR solution consists of a Network OAMP network element, at least one signaling node, and an optional database provisioning node (SDS).
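The containment rule above (an NE can hold many servers, but each server belongs to exactly one NE) can be modeled as a small data structure that rejects a second assignment. This is a hypothetical sketch of the constraint only; the class, NE names, and server names are illustrative and do not reflect how the DSR stores its topology.

```python
class Topology:
    """Hypothetical model of Network Element (NE) containment."""

    def __init__(self) -> None:
        self._ne_of_server: dict[str, str] = {}       # server -> owning NE
        self._servers_in_ne: dict[str, set[str]] = {}  # NE -> its servers

    def add_ne(self, ne: str) -> None:
        self._servers_in_ne.setdefault(ne, set())

    def assign(self, server: str, ne: str) -> None:
        if ne not in self._servers_in_ne:
            raise KeyError(f"unknown network element: {ne}")
        owner = self._ne_of_server.get(server)
        if owner is not None and owner != ne:
            # A single server can be part of only one network element.
            raise ValueError(f"{server} already belongs to {owner}")
        self._ne_of_server[server] = ne
        self._servers_in_ne[ne].add(server)

    def servers(self, ne: str) -> set[str]:
        """Return a copy of the set of servers contained in the NE."""
        return set(self._servers_in_ne[ne])


topo = Topology()
topo.add_ne("NOAMP_NE")
topo.add_ne("SITE1_NE")
topo.assign("noam-a", "NOAMP_NE")
topo.assign("mp-1", "SITE1_NE")
topo.assign("mp-2", "SITE1_NE")
print(sorted(topo.servers("SITE1_NE")))  # ['mp-1', 'mp-2']
```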

2.3.4 Maintenance

The DSR provides the following maintenance capabilities:

- Alarms and Events

- Measurements

- Key Performance Indicators

- Bulk Import/Export

Alarms and Events

The platform and DSR software raise minor, major, and critical alarms, as well as events, for a wide variety of conditions. These are sent immediately to the OAM system and also to the operator’s network management system using SNMP. Alarm and event logs are stored at the OAM for up to seven days. The OAM provides a dashboard view of all alarms on the downstream MPs; this information is maintained locally for up to three days.

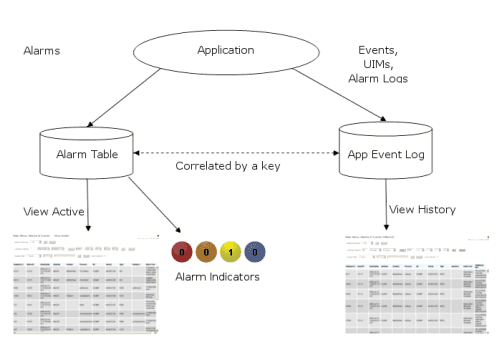

Figure 2-3 Flow of Alarms

Following are some of the alarms and events supported by DSR:

- Connection to peer failed/restored

- Peer unavailable/available

- Connection to peer congested/not congested

- Route list available/unavailable

- OAM server failed/restored

- MP failed/restored

- MP entered/exited/changed local congestion

A detailed list of all alarms supported in DSR can be found in the Platform Feature Guide.

Key Performance Indicators

Key Performance Indicators (KPIs) allow the user to monitor system performance data, including CPU, memory, swap space, and uptime per server. This performance data is collected from all servers within the defined topology. Key Performance Indicators supported by the platform and DSR software are in the following tables.

Table 2-1 DSR KPI Summary

| KPI Category | KPI Examples |
|---|---|
| Server Element KPIs | A group of KPIs that appear regardless of server role such as CPU and Network Element. |
| CAPM KPIs | Counters related to computer-aided policy making such as active templates and test templates. |
| Charging Proxy Application KPIs | KPIs related to the CPA feature such as CPA Answer Message Rate, CPA Ingress Message Rate, and cSBR Query Error Rate. |
| Communications Agent KPIs | KPIs related to the communication agent such as user data ingress message rate. |
| Connection Maintenance KPIs | KPIs pertaining to connection maintenance such as RxConnAvgMPS. |
| DIAM KPIs | Basic Diameter KPIs such as Avg Rsp time and ingress trans success rate. |
| IPFE KPIs | KPIs associated with IPFE such as CPU % and IPFE Mbytes/Sec. |
| MP KPIs | KPIs relating to the message processor such as Avg Diameter Process CPU Util and average routing message rate. |
| FABR KPIs | KPIs related to the full address based resolution feature such as Ingress Message Rate and DP Response Time Average. |
| RBAR KPIs | KPIs related to the Range Based Address Resolution feature such as Average Resolved Message Rate and Ingress Message Rate. |
| SBR KPIs | KPIs related to Session Binding Repository such as Current Session Bindings and Request Rate. |

Table 2-2 Platform KPI Summary

| KPI Name | KPI Description |
|---|---|
| System.CPU_UtilPct | Reflects current CPU usage, from 0-100%. (100% means all CPU cores are completely busy.) |
| System.RAM_UtilPct | Reflects the current committed RAM usage as a percentage of total physical RAM. Based on the Committed_AS measurement from Linux /proc/meminfo. This metric can exceed 100% if the kernel has committed more resources than provided by physical RAM, in which case swapping will occur. |
| System.Swap_UtilPct | Reflects the current usage of swap space as a percentage of total configured swap space. This metric will be 0-100%. |
| System.Uptime_Srv | Length of time since the last server reboot. |
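Table 2-2 states that System.RAM_UtilPct is based on Committed_AS from Linux /proc/meminfo and can exceed 100%. A minimal sketch of deriving that value and a swap percentage from the file's contents follows. It assumes the RAM denominator is MemTotal and that used swap is SwapTotal minus SwapFree; this is a reading of the table text, not the platform's exact implementation.

```python
def parse_meminfo(text: str) -> dict[str, int]:
    """Parse /proc/meminfo content into {field name: value} (values are in kB)."""
    fields: dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        name, rest = line.split(":", 1)
        parts = rest.split()  # e.g. ["16000000", "kB"] or ["0"]
        if parts:
            fields[name.strip()] = int(parts[0])
    return fields


def ram_util_pct(mem: dict[str, int]) -> float:
    # Committed RAM as a percentage of physical RAM; may exceed 100%.
    return 100.0 * mem["Committed_AS"] / mem["MemTotal"]


def swap_util_pct(mem: dict[str, int]) -> float:
    # Used swap as a percentage of configured swap; always 0-100%.
    total = mem["SwapTotal"]
    if total == 0:
        return 0.0
    return 100.0 * (total - mem["SwapFree"]) / total
```

On a Linux server the input would be the text read from /proc/meminfo, for example `parse_meminfo(open("/proc/meminfo").read())`.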

A detailed list of all KPIs supported in DSR can be found in the Platform Feature Guide found on the Oracle Help Center (OHC).

Measurements

All components of the DSR solution measure the amount and type of messages sent and received. Measurement data collected from all components of the solution can be used for multiple purposes, including discerning traffic patterns and user behavior, traffic modeling, sizing traffic-sensitive resources, and troubleshooting.

The measurements framework allows applications to define, update, and produce reports for various measurements:

- Measurements are ordinary counters that count occurrences of different events within the system, for example, the number of messages received. Measurement counters are also called pegs.

- Applications simply peg (increment) measurements upon the occurrence of the event that needs to be measured.

- Measurements are collected and merged at the OAM servers.

- The GUI allows reports to be generated from measurements.
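The peg-and-merge model described above can be sketched with per-MP counters that are summed at the OAM. The measurement name and the plain summation are assumptions for illustration; the actual collection intervals and merge rules are defined by the platform.

```python
from collections import Counter


class MeasurementPegs:
    """Per-MP measurement counters (hypothetical measurement names)."""

    def __init__(self) -> None:
        self.counters: Counter[str] = Counter()

    def peg(self, measurement: str, count: int = 1) -> None:
        # Applications simply increment the counter when the event occurs.
        self.counters[measurement] += count


def merge_at_oam(per_mp: dict[str, MeasurementPegs]) -> Counter[str]:
    """Merge the counters collected from every MP into one set of totals."""
    total: Counter[str] = Counter()
    for pegs in per_mp.values():
        total.update(pegs.counters)  # Counter.update adds counts
    return total


mp1 = MeasurementPegs()
mp1.peg("RxDiameterMsgs")
mp1.peg("RxDiameterMsgs")
mp2 = MeasurementPegs()
mp2.peg("RxDiameterMsgs")
print(merge_at_oam({"mp1": mp1, "mp2": mp2})["RxDiameterMsgs"])  # 3
```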

A subset of the measurements supported in DSR is listed in the following table. A detailed list of all measurements supported in DSR can be found in the Platform Feature Guide found on the Oracle Help Center (OHC).

Table 2-3 DSR Measurements

| Measurement Category | Description |
|---|---|
| Application Routing Rules | A set of measurements associated with the usage of application routing rules. These allow the user to determine which application routing rules are most commonly used and the percentage of times that messages were successfully or unsuccessfully routed. |
| Charging Proxy Application (CPA) Performance | This group contains measurements that provide performance information that is specific to the CPA application. |
| Charging Proxy Application Exception | These measurements provide information about exceptions and unexpected messages and events that are specific to the CPA application. |
| Charging Proxy Application Session DB | These measurements provide information about events that occur when the CPA queries the SBR. |
| Computer Aided Policy Making (CAPM) | A set of measurements containing usage-based measurements related to the Diameter Mediation feature. |
| Communication Agent Performance | This group is a set of measurements that provide performance information that is specific to the ComAgent protocol. They allow the user to determine how many messages are successfully forwarded and received to and from each DSR application. |
| Communication Agent Exception | This group is a set of measurements that provide information about exceptions and unexpected messages and events that are specific to the ComAgent protocol. |
| Connection Congestion | These measurements contain per-connection measurements related to Diameter connection congestion states. |
| Connection Exception | These measurements provide information about exceptions and unexpected messages and events for individual SCTP/TCP connections that are not specific to the Diameter protocol. |
| Connection Performance | This group contains measurements that provide performance information for individual SCTP/TCP connections that are not specific to the Diameter protocol. |
| DSR Application Exception | A set of measurements that provide information about exceptions and unexpected messages and events that are specific to the DSR protocol. |
| DSR Application Performance | A set of measurements that provide performance information that is specific to the DSR protocol. These allow the user to determine how many messages are successfully forwarded and received to and from each DSR application. |
| Diameter Egress Transaction | These are measurements providing information about Diameter peer-to-peer transactions forwarded to upstream peers. |
| Diameter Exception | A set of measurements that provide information about exceptions and unexpected messages and events that are specific to the Diameter protocol. |
| Diameter Ingress Transaction Exception | These measurements provide information about exceptions associated with the routing of Diameter transactions received from downstream peers. |
| Diameter Ingress Transaction Performance | A set of measurements providing information about the outcome of Diameter transactions received from downstream peers. |
| Diameter Performance | Measurements that provide performance information that is specific to the Diameter protocol. |
| Diameter Rerouting | These measurements allow the user to evaluate the number of message rerouting attempts that are occurring, the reasons why message rerouting is occurring, and the success rate of message rerouting attempts. |
| Full Address Based Resolution (FABR) Application Performance | A set of measurements that provide performance information that is specific to the FABR feature. They allow the user to determine how many messages are successfully forwarded and received to and from the FABR application. |
| Full Address Based Resolution (FABR) Application Exception | A set of measurements that provide information about exceptions and unexpected messages and events that are specific to the FABR feature. |
| IP Front End (IPFE) Exception | This group is a set of measurements that provide information about exceptions and unexpected messages and events specific to the IPFE application. |
| IP Front End (IPFE) Performance | This group contains measurements that provide performance information that is specific to the IPFE application. Counts for various expected/normal messages and events are included in this group. |
| Message Copy | These measurements from the Diameter Application Server reflect the message copy performance. They allow the user to monitor the amount of traffic being copied and the percentage of times that messages were successfully or unsuccessfully copied. |
| Message Priority | This group contains measurements that provide information on message priority assigned to ingress Diameter messages. |
| Message Processor (MP) Performance | These measurements provide performance information for an MP server. |
| OAM Alarm | General measurements about the alarm system, such as the number of critical, major, and minor alarms. |
| OAM System | General measurements about the overall OAM system. |
| Peer Node Performance | Measurements that provide performance information that is specific to a Peer Node. These measurements allow users to determine how many messages are successfully forwarded and received to/from each peer node. |
| Peer Routing Rules | These are measurements associated with the usage of peer routing rules. They allow the user to determine which peer routing rules are most commonly used and the percentage of times that messages were successfully or unsuccessfully routed using the route list. |
| Range Based Address Resolution (RBAR) Application Performance | A set of measurements that provide performance information that is specific to the RBAR application. They allow the user to determine how many messages are successfully forwarded and received to/from each RBAR application. |
| Range Based Address Resolution (RBAR) Exception | A set of measurements that provide information about exceptions and unexpected messages and events that are specific to the RBAR feature. |
| Route List | A set of measurements associated with the usage of route lists. They allow the user to determine which route lists are most commonly used and the percentage of times that messages were successfully or unsuccessfully routed using the route list. |
| Routing Usage | This report allows the user to evaluate how ingress request messages are being routed internally within the relay agent. |
| Session Binding Repository (SBR) Exception | A set of measurements that provide information about exceptions and unexpected messages and events specific to the SBR application. |
| Session Binding Repository (SBR) Performance | This group contains measurements that provide performance information that is specific to the SBR application. Counts for various expected/normal messages and events are included in this group. |

Bulk Import/Export

DSR supports bulk import and export of provisioning and configuration data using the comma-separated values (CSV) file format. The import and export operations can be initiated from the DSR GUI. The import operation supports insertion, updating, and deletion of provisioned data. Both the import and export operations generate log files.
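The exact CSV layout used by DSR Bulk Import is defined in the DSR documentation and is not reproduced here. Purely to illustrate an import that supports insertion, updating, and deletion and produces a log, the sketch below applies rows in a hypothetical `operation,key,value` format to an in-memory table. Logging rejected rows instead of aborting the whole import is also an assumption made for the example.

```python
import csv
import io


def bulk_import(csv_text: str, table: dict[str, str]) -> list[str]:
    """Apply insert/update/delete rows to `table` and return log lines.

    Hypothetical row format: operation,key,value (value is ignored for delete).
    """
    log: list[str] = []
    for n, row in enumerate(csv.reader(io.StringIO(csv_text)), start=1):
        if not row:
            continue  # skip blank rows
        op = row[0].strip().lower()
        key = row[1].strip() if len(row) > 1 else ""
        value = row[2].strip() if len(row) > 2 else ""
        if key and op == "insert" and key not in table:
            table[key] = value
            log.append(f"row {n}: inserted {key}")
        elif key and op == "update" and key in table:
            table[key] = value
            log.append(f"row {n}: updated {key}")
        elif key and op == "delete" and key in table:
            del table[key]
            log.append(f"row {n}: deleted {key}")
        else:
            # Rejected rows are logged rather than aborting the whole import.
            log.append(f"row {n}: ERROR cannot {op} {key!r}")
    return log


data = {"realm-a": "10"}
for entry in bulk_import("insert,realm-b,20\nupdate,realm-a,15\ndelete,realm-x,\n", data):
    print(entry)
```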

2.3.5 DSR Dashboard

This GUI display is an operational tool that allows customers to easily identify a potential or existing DSR node or Diameter network outage. The dashboard is accessible from the SOAM or NOAM GUI and provides the following high-level capabilities:

- Centralized view: Allows operators to view a high level summary of key operational metrics.

- Identifies potential operational issues: Assists operators in identifying problems through visual enhancements such as colorization and highlighting.

- Centralized Launch-Point: Allows operators to drill-down to the next level of status information to assist in pinpointing the source of a potential problem.

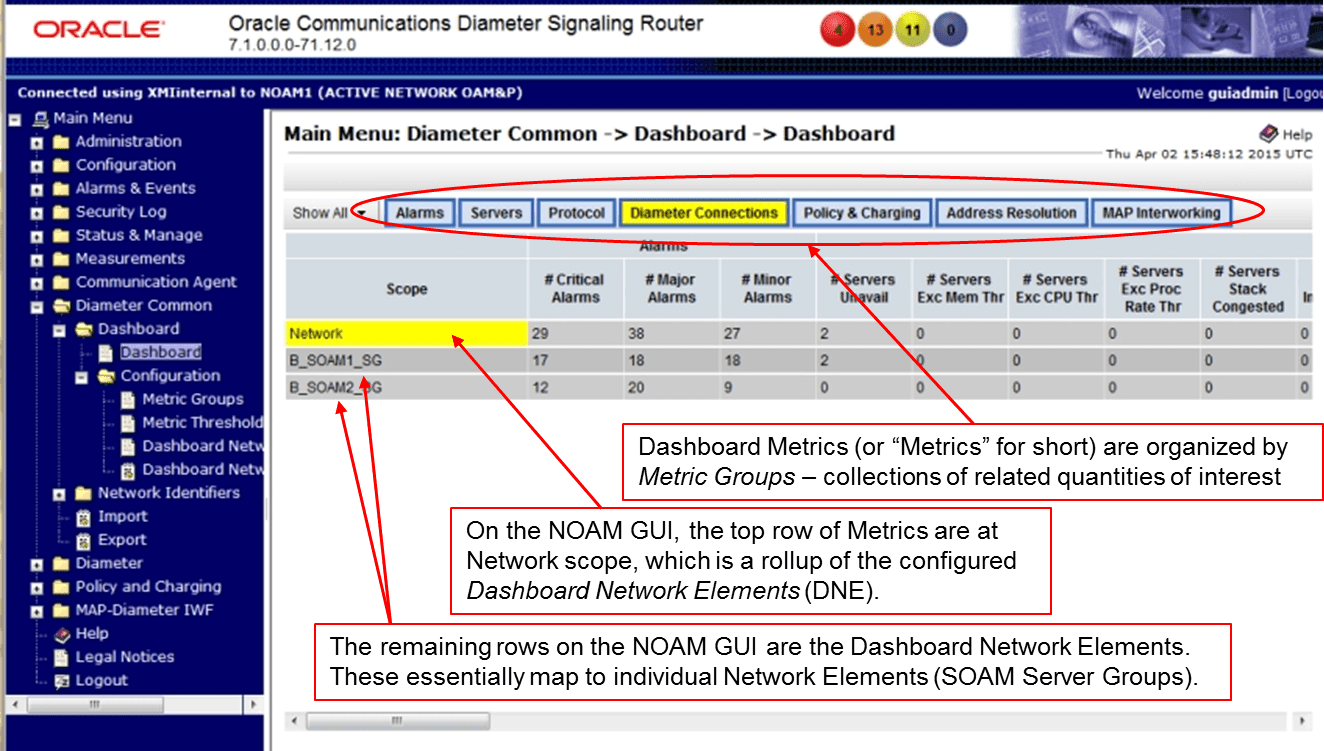

Figure 2-4 DSR Dashboard on the NOAM

The Dashboard consists of the following concepts and components:

Dashboard Metrics:

- Metrics are the core component of the DSR Dashboard. The operator can determine which Metrics can be viewed on their Dashboard display through configuration.

- Server metrics are maintained by each MP. Per-server metric values are periodically pushed to the local SOAM and can be displayed on the SOAM Dashboard display.

- “Server Type” metrics allow the operator to see a roll-up of Server metrics by Server type. The formula for calculating a Server Type metric value is identical to that for calculating the per-NE metric for that metric.

- Network Element (NE) metrics are derived from per-server metrics. A “Network Element” is the set of servers managed by a SOAM. The formula for calculating a per-NE metric value is metric-specific although, in general, most NE metrics are the sum of the per-Server metrics.

- Per Network metrics are derived from per-NE summary metrics. A “Network” is the set of DSR NEs managed by a NOAM. The formula for calculating a Network metric value is identical to that for calculating the per-NE metric for that metric.
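As a rough illustration of the roll-up rules above, the sketch below sums per-server values into per-NE and per-Server-Type values, and per-NE values into a Network value. Summation matches the "in general" case described above; metric-specific formulas used by some metrics are not modeled, and the NE, server type, and server names are hypothetical.

```python
from collections import defaultdict

# Hypothetical per-server sample key: (NE name, server type, server name).
Sample = tuple[str, str, str]


def ne_rollup(samples: dict[Sample, float]) -> dict[str, float]:
    """Per-NE metric: sum of the per-server values in each NE (the common case)."""
    totals: dict[str, float] = defaultdict(float)
    for (ne, _stype, _server), value in samples.items():
        totals[ne] += value
    return dict(totals)


def server_type_rollup(samples: dict[Sample, float], ne: str) -> dict[str, float]:
    """Per-Server-Type summary within one NE, using the same formula as the NE metric."""
    totals: dict[str, float] = defaultdict(float)
    for (sample_ne, stype, _server), value in samples.items():
        if sample_ne == ne:
            totals[stype] += value
    return dict(totals)


def network_rollup(ne_values: dict[str, float]) -> float:
    """Network metric: derived from the per-NE summaries, again by summation."""
    return sum(ne_values.values())


samples = {
    ("SO-1", "DA-MP", "mp1"): 1000.0,
    ("SO-1", "DA-MP", "mp2"): 1500.0,
    ("SO-2", "DA-MP", "mp3"): 800.0,
}
per_ne = ne_rollup(samples)
print(per_ne, network_rollup(per_ne))
```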

Metric Groups:

- A Metric Group allows the operator to physically group Metrics on the Dashboard display and to create an aggregated status for a group of metrics.

- The “status” of a Metric Group is the worst-case status of the metrics within that group.

Server Type:

- A Server Type physically groups Metrics associated with a particular type of Server (e.g., DA-MP) on the Dashboard display and is used to create summary metrics for Servers of a similar type.

- The following Server Types are supported: DA-MP, SS7-MP, IPFE, SBR, cSBR, SOAM.

Network Element (NE):

- A “Network Element” is a set of Servers which are managed by a SOAM.

- The set of servers which are managed by a SOAM is determined through standard NOAM configuration and cannot be modified via Dashboard configuration.

- A NOAM can manage up to 32 NEs.

Dashboard Network Element (NE):

- A “Dashboard Network Element” is a logical representation of a Network Element which can be assigned a set of Metrics, NE Metric Thresholds and Server Metric Thresholds via configuration that defines the content and thresholds of a SOAM Dashboard display.

- Up to 32 Dashboard NEs are supported.

Dashboard Network:

- A “Dashboard Network” is a set of Dashboard Network Elements, Metrics and associated Network Metric Thresholds that is created by configuration that defines the content and thresholds of a NOAM Dashboard display.

- The set of Dashboard Network Elements assigned to a Dashboard Network is determined from configuration.

- One Dashboard Network is supported.

Visualization Enhancements:

- Visualization enhancements such as coloring are used on the Dashboard to attract the operator’s attention to a potential problem.

- Visualization enhancements are enabled through metric thresholds.

- Visualization enhancements can be applied independently to Server Type, NE and Network summary metrics and Server metrics.

- Visualization enhancements are applied to Dashboard row and column headers so that any metric value that has exceeded a threshold but does not fit on a single physical monitor is not completely hidden from the operator’s view.

Metric Thresholds:

- Metric thresholds allow the operator to enable visualization enhancements on the Dashboard.

- Up to three separate threshold values (e.g., thresh-1, thresh-2, thresh-3) can be assigned to each metric.

- Dashboard Network summary, Dashboard NE summary and Server metric thresholds are supported.

- Dashboard Network summary and Dashboard NE summary metric threshold values can be assigned by the operator.

- Metric thresholds are used for Dashboard visualization enhancements.

- Most (but not necessarily all) metrics have thresholds.

- Whether a Metric can be assigned thresholds is determined from configuration.
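The threshold rules above, combined with the rule that a Metric Group's status is the worst-case status of its metrics, can be sketched as follows. Each value is compared against up to three thresholds to give a level from 0 (no threshold exceeded) to 3. Treating "exceeded" as strictly greater than, and assuming higher values are always worse, are assumptions; metrics where low values indicate a problem are not modeled.

```python
from typing import Optional


def threshold_level(value: float, thresholds: Optional[tuple[float, ...]]) -> int:
    """Return 0 if no threshold is exceeded, else the highest exceeded threshold (1-3)."""
    if not thresholds:
        return 0  # the metric has no thresholds configured
    if len(thresholds) > 3:
        raise ValueError("at most three thresholds per metric")
    level = 0
    for i, limit in enumerate(sorted(thresholds), start=1):
        if value > limit:
            level = i
    return level


def group_status(levels: list[int]) -> int:
    """A Metric Group's status is the worst-case status of its metrics."""
    return max(levels, default=0)


cpu_thresholds = (60.0, 80.0, 95.0)  # hypothetical thresh-1, thresh-2, thresh-3
levels = [threshold_level(v, cpu_thresholds) for v in (50.0, 85.0, 70.0)]
print(levels, group_status(levels))  # [0, 2, 1] 2
```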

Dashboard GUI Display:

- The Dashboard GUI display allows an operator to view a set of metric values used for monitoring the status of a Network or NE.

- The NOAM Dashboard allows the operator to view both Network summary and NE summary metrics.

- The SOAM Dashboard allows the operator to view the NE’s summary metrics, its per-Server Type summary metrics and its per-Server metrics.

- Metric values are displayed as text.

- Sets of Metrics associated with network components are displayed vertically on the Dashboard in network hierarchical order. For example, on the NOAM Dashboard, Network metrics are displayed first followed by per-NE metrics.

- Each column on the Dashboard contains the set of values for a particular Metric.

- The operator can control which metrics are displayed on the Dashboard via configuration.

- The order that Metric Groups are displayed on the Dashboard is determined from configuration.

- The order that Metrics are displayed within a Metric Group on the Dashboard display is determined from configuration.

- Metrics selected for display on the Dashboard via configuration are hidden/viewed via a Dashboard GUI control based on “threshold level” filters (for example, only display metrics having at least one value exceeding its threshold-3 value).
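The threshold levels and the "threshold level" display filter described above can be illustrated with a minimal Python sketch. It assumes that each metric has up to three ascending thresholds and that a higher value is worse; the metric names, the `Metric` type, and both functions are hypothetical and are not part of the DSR GUI or its configuration.

```python
from dataclasses import dataclass, field


@dataclass
class Metric:
    name: str
    values: list[float]  # one value per Dashboard row (Network, NE, Server, ...)
    # [thresh-1, thresh-2, thresh-3] in ascending order; empty if the metric has no thresholds
    thresholds: list[float] = field(default_factory=list)


def threshold_level(value: float, thresholds: list[float]) -> int:
    """Return 0 if no threshold is exceeded, else the highest threshold level exceeded."""
    level = 0
    for i, limit in enumerate(thresholds, start=1):
        if value > limit:  # assumption: exceeding means strictly greater than
            level = i
    return level


def visible_metrics(metrics: list[Metric], min_level: int) -> list[str]:
    """Names of metrics with at least one value at or above `min_level`."""
    return [
        m.name
        for m in metrics
        if any(threshold_level(v, m.thresholds) >= min_level for v in m.values)
    ]


# Example: display only metrics having a value that exceeds its thresh-3 value.
dashboard = [
    Metric("CPU Utilization (%)", [45.0, 92.0], [70.0, 80.0, 90.0]),
    Metric("Ingress Message Rate", [1000.0]),  # no thresholds configured
]
print(visible_metrics(dashboard, 3))
```

The same level value could also drive the coloring of a cell and of its row and column headers.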

Drill-down via hyperlinks:

- A Dashboard presents high-level metrics that give an overall view of the health of one or more Network Elements of the customer’s network.

- When a user-defined threshold is exceeded and a visualization enhancement is applied on the Dashboard, the operator may want to investigate the potential problem by inspecting additional information.

- The Dashboard facilitates operator troubleshooting through context-sensitive hyperlinks that lead to more detailed information on existing DSR status and maintenance screens.

- The linkage between content on the Dashboard and DSR status and maintenance screens is determined from configuration.

2.3.6 Automatic Performance Data Export

The Automatic Performance Data Export feature provides the following capabilities:

- Periodic generation and remote copy of filtered performance data.

- Proper management of the file space associated with the exported data.

Specifically, Automatic PDE provides the ability to create custom queries of performance data and to schedule periodic remote copy operations to export the performance data to remote export systems.

2.3.7 Administration

Administration functions are tasks that are supported at the system level. Administration functions of the DSR include:

- User Administration

- Passwords

- Group Administration

- User’s Session Administration

- Authorized IPs

- System Level Options

- SNMP Administration

- ISO Administration

- Upgrade Administration

- Software Versions

For more details on platform-related features, see the Platform Feature Guide.

2.3.7.1 Database Management

Database Management for DSR provides 4 major functions:

- Database Status - maintains status information on each database image in the DSR network and makes the information accessible through the OAM server GUI.

- Backup and Restore - The Backup function captures and preserves snapshot images of Configuration and Provisioning database tables. The Restore function allows the user to restore the preserved database images. The DSR supports interfacing to and/or integration with 3rd party backup systems (that is, Symantec NetBackup).

- Replication Control - allows the User to selectively enable and disable replication of Configuration and Provisioning data to servers.

Note:

This function is provided for use during an upgrade and should be used by Oracle Personnel only.

- Provisioning Control - provides the User the ability to lock out Provisioning and Configuration updates to the database.

Note:

This function is provided for use during an upgrade and should be used by Oracle Personnel only.

2.3.7.2 File Management

The File Management function includes a File Management Area, which is a designated storage area for any file the user requests the system to generate. The list of possible files includes, but is not limited to, database backups, alarm logs, measurement reports, and security logs. The File Management function also provides secure access for file transfer on and off the servers. Easy-to-use web pages give the user the ability to export any file in the File Management Area to an external element for long-term storage. They also allow the user to import a file from an external element, such as an archived database backup image.

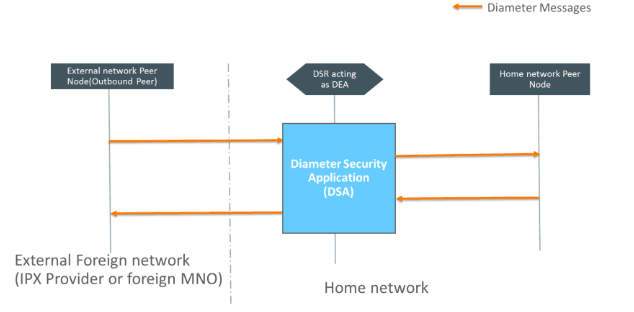

2.3.8 Security

Oracle addresses Product Security with a comprehensive strategy that covers the design, deployment, and support phases of the product life-cycle. Drawing from industry standards and security references, Oracle hardens the platform and application to minimize security risks. Security hardening includes minimizing the attack surface by removing or disabling unnecessary software modules and processes, restricting port usage, consistent use of secure protocols, and enforcement of strong authentication policies. Vulnerability management ensures that new application releases include recent security updates. In addition, a continuous tracking and assessment process identifies emerging vulnerabilities that may impact fielded systems. Security updates are delivered to the field as fully tested Maintenance Releases.

Networking topologies provide separation of signaling and administrative traffic to provide additional security. Firewalls can be established at each server with IP Table rules to establish White List and/or Black List access control. The DSR supports transporting Diameter messages over IPSec thereby ensuring data confidentiality and data integrity of Diameter messages traversing the DSR.

Oracle realizes the importance of having distinct interfaces at the Network-Network Interface layer. To maintain the separation of traffic between internal and external Diameter elements, the DSR supports separate network interfaces towards internal and external traffic. The routing tables in the DSR support the implementation of a Diameter Access Control List, which makes it possible to reject requests arriving from certain Origin-Hosts or Origin-Realms or for certain command codes.

Oracle recommends that Layer 2 and Layer 3 ACLs be implemented at the Border Gateway. However, Professional Services available from the Oracle Consulting team can implement Layer 2 and Layer 3 ACLs at the aggregation switch which serves as the demarcation point or at the individual MPs that serve the Diameter traffic.

In addition to supporting security at the transport and network layers, Oracle’s solution provides Access Control Lists based on IP addresses to restrict user access to the database on IP interfaces used for querying the database. These interfaces support SSL.

DSR maintains a record of all system users’ interactions in its Security Logs. Security Logs are maintained on OAM servers. Each OAM server is capable of storing up to seven days’ worth of Security Logs. Log files can be exported to an external network device for long term storage. The security logs include:

- Successful logins

- Failed login attempts

- User actions (for example, configure a new OAM, initiate a backup, view alarm log).

Please see the Diameter Signaling Router (DSR) 8.6.0.0.0 Security Guide, available with the DSR documentation at Oracle.com.

IPSec

The DSR optionally supports IPSec encryption per Diameter connection or association. Use of IPSec reduces MPS throughput by up to 40%. IPSec is supported for SCTP over IPv6 connections. The DSR IPSec implementation is based on 3GPP TS 33.210 version 9.0.0 and supports the following:

- Encapsulating Security Payload (ESP).

- Internet Key Exchange (IKE) v1 and v2.

- Tunnel Mode (entire IP packet is encrypted and/or authenticated).

- Up to 100 tunnels.

- Encryption transforms/ciphers supported: ESP_3DES (default) and AES-CBC (128 bit key length).

- Authentication transform supported: ESP_HMAC_SHA-1.

- Configurable Security Policy Database with backup and restore capability.

2.3.9 Machine/Machine Interface

The DSR REST MMIs provide an Application Programming Interface that allows EMS, OSS, or NMS systems in the customer’s network to interface directly with the DSR in order to access, store, change, and delete OAM&P data. Use of the MMI allows real-time changes in the DSR to be initiated by a configuration change in north-bound customer management systems.

Benefits of REST MMIs include:

- Supports the industry move towards automation of network operations.

- Provides a consistent interface for all OAM&P data.

- Advancement toward automated installations.

Administration, Configuration, Alarms & Events, Status & Manage, Measurements, Diameter, and IPFE managed objects of DSR can be managed using REST MMIs.

2.4 DSR Nodes

Each DSR message processor (MP) can host up to 48 Diameter Nodes (also called Diameter Identities). Hosting more than one node/identity allows a DSR deployment at the Network Edge where DSR acts as the single point of contact for all Diameter elements external to the operator network and similarly all internal Diameter elements use it as the point of contact when reaching Diameter servers external to the operator network. Another use case for hosting multiple Diameter nodes on each MP is to support multiple connections from an external Diameter element to the DSR.

Each Diameter Node has the following attributes:

- Diameter Realm that may be unique or shared across the nodes.

- Up to 128 local IP addresses - IPv4 or IPv6 addresses or a combination of IPv4 and IPv6 addresses. (Each DA-MP supports up to 8 local IP addresses and 32 DA-MPs are supported).

- A unique Fully Qualified Domain Name (FQDN).

DSR allows an IP address to be shared across nodes provided the combination of IP address, port and transport are unique across nodes.
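The sharing rule above can be expressed as a uniqueness check on the (IP address, port, transport) combination. The following Python sketch is a hypothetical configuration validator, not the DSR's own OAM validation; the `LocalEndpoint` type, the function name, and the example FQDNs are assumptions. Port 3868 is the IANA-registered Diameter port.

```python
from typing import NamedTuple


class LocalEndpoint(NamedTuple):
    node_fqdn: str   # Diameter Node (identity) that owns the endpoint
    ip: str
    port: int
    transport: str   # "TCP" or "SCTP"


def conflicting_endpoints(endpoints: list[LocalEndpoint]) -> set[tuple[str, int, str]]:
    """Return (ip, port, transport) combinations claimed by more than one node."""
    owners: dict[tuple[str, int, str], set[str]] = {}
    for ep in endpoints:
        key = (ep.ip, ep.port, ep.transport.upper())
        owners.setdefault(key, set()).add(ep.node_fqdn)
    return {key for key, nodes in owners.items() if len(nodes) > 1}


# Three nodes share 10.0.0.1 legally: each uses a different port/transport pair.
config = [
    LocalEndpoint("dsr1.example.com", "10.0.0.1", 3868, "SCTP"),
    LocalEndpoint("dsr2.example.com", "10.0.0.1", 3869, "SCTP"),
    LocalEndpoint("dsr3.example.com", "10.0.0.1", 3868, "TCP"),
]
print(conflicting_endpoints(config))  # set()
```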

See the following figure for a sample configuration:

Figure 2-5 Multiple Nodes per Message Processor

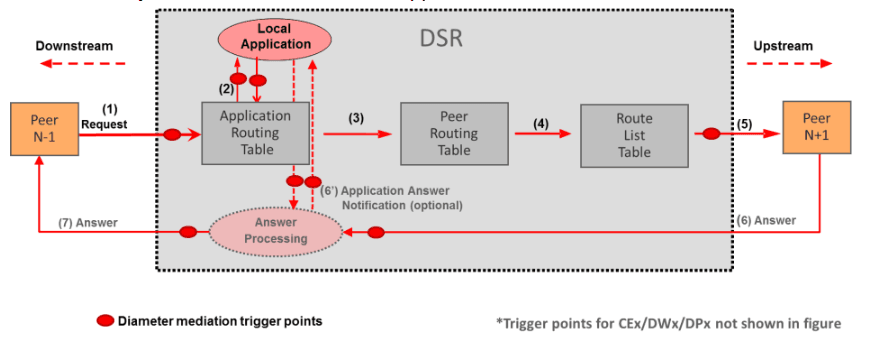

2.5 Diameter Core Routing

The DSR application provides a Diameter Routing Agent to forward messages to the appropriate destination based on information contained within the message including header information and applicable Attribute Value Pairs (AVP). As per the core Diameter specification, the DSR provides the capability to route Diameter messages based on any combination, or presence/absence, of Destination-Host, Destination-Realm, and Application-ID. In addition DSR optionally provides the capability to look at Command-Code and origination information, namely Origin-Realm and Origin-Host for advanced routing functionality. The average diameter message size supported is 2K bytes with a maximum message size of 60K bytes.

DSR high level message processing and routing is shown below. The numbers show the message flow through the system.

Figure 2-6 High Level Message Processing and Routing in DSR

DSR supports the following routing functions:

- Message routing to Diameter peers based upon user-defined message content rules.

- Message routing to Diameter peers based upon user-defined priorities and weights.

- Message routing to Diameter peers with multiple transport connections.

- Alternate routing on connection failures.

- Alternate routing on Answer timeouts.

- Alternate routing on user-defined Answer responses.

- Route management based on peer transport connection status changes.

- Route management based on OAM configuration changes.

Routing rules and rule actions are used to implement the routing behavior required by the operator. Routing rules are defined using combinations of the following data elements:

- Destination-Realm (leading, trailing characters, exact match, contains, not equal or always true).

- Destination-Host (leading, trailing characters, exact match, contains, always true, present and not equal, or presence/absence).

- Application-ID (exact match, not equal, or always true).

- Command-Code (exact match, not equal or always true).

- Origin-Realm (leading, trailing characters, exact match, contains, not equal or always true).

- Origin-Host (leading, trailing characters, exact match, contains, not equal or always true).
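The condition types listed above can be modeled as per-AVP matchers combined with a logical AND. This Python sketch is illustrative only: the operator names, the representation of a missing AVP as `None`, and the choice that "not equal" is satisfied when the AVP is absent are assumptions, not the DSR's documented semantics.

```python
from typing import Callable, Optional

# A matcher receives the AVP value from the message (None if absent) and the rule operand.
Matcher = Callable[[Optional[str], str], bool]

MATCHERS: dict[str, Matcher] = {
    "exact": lambda value, operand: value == operand,
    "leading": lambda value, operand: value is not None and value.startswith(operand),
    "trailing": lambda value, operand: value is not None and value.endswith(operand),
    "contains": lambda value, operand: value is not None and operand in value,
    "not_equal": lambda value, operand: value != operand,  # assumption: true if absent
    "present_not_equal": lambda value, operand: value is not None and value != operand,
    "present": lambda value, operand: value is not None,
    "absent": lambda value, operand: value is None,
    "always_true": lambda value, operand: True,
}


def rule_matches(rule: dict[str, tuple[str, str]], message: dict[str, str]) -> bool:
    """A rule matches when every condition it lists is satisfied (logical AND)."""
    return all(
        MATCHERS[op](message.get(avp), operand)
        for avp, (op, operand) in rule.items()
    )


rule = {
    "Destination-Realm": ("trailing", "example.net"),
    "Application-Id": ("exact", "16777238"),
    "Destination-Host": ("absent", ""),
}
request = {"Destination-Realm": "epc.example.net", "Application-Id": "16777238"}
print(rule_matches(rule, request))  # True
```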

A set of configurable timers (100 – 180,000 milliseconds) control the length of time the DSR waits to receive an answer to an outstanding request. The maximum number of times a request can be rerouted upon connection failure or timeout is configurable from 0 – 4 retries.

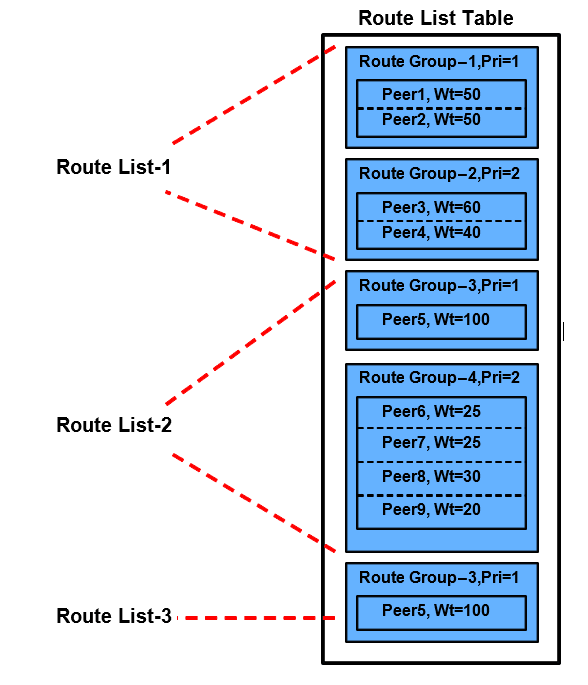

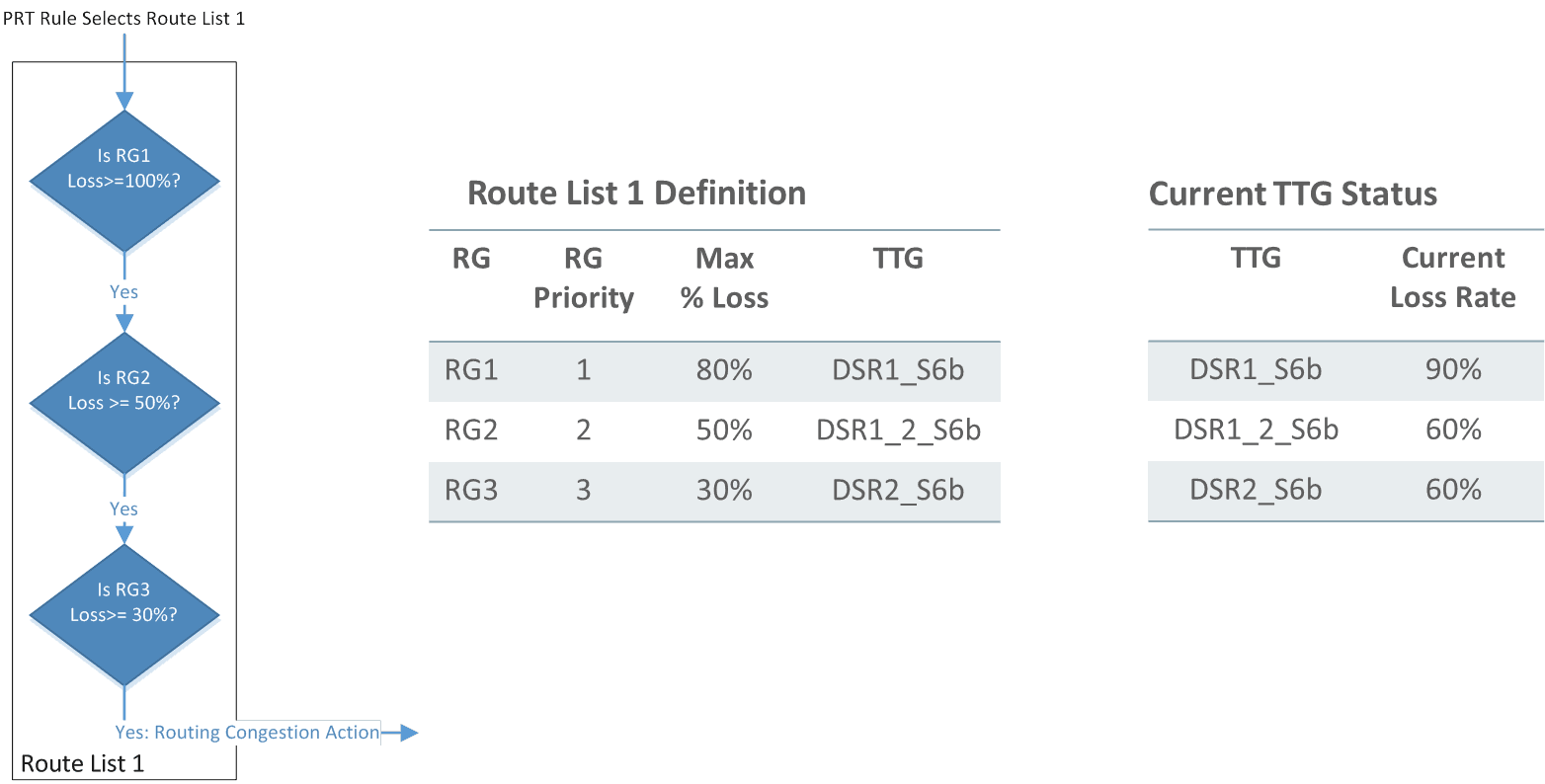

DSR supports the concepts of routes, peer route tables, peer route groups, connection route groups, route lists, and peer node groups to provide a very powerful and flexible load balancing solution. A Route Group is comprised of a prioritized list of peers or connections used for routing messages. A route list is comprised of multiple route groups – only one of which is designated as active at any one time. Each route list supports the following configurable information:

- Route List ID.

- Up to five Route Groups with associated Route Group Priority level (1-5).

- Minimum Route Group Availability Weight to control which Route Group in the Route List is actively used for routing requests.

- 0-10 optional Traffic Throttle Groups with associated Max Loss % Threshold for use with IETF Diameter Overload Indicator Conveyance (DOIC) feature.

Each Route Group supports the following configurable information:

- Route Group ID.

- Up to 160 Peer IDs -OR- 512 Connection IDs.

- Weight (1-64K) for each Peer ID or Connection ID.

When peers or connections have the same priority level, a weight is assigned to each peer/connection that defines the weighted distribution of messages among them. For example, if two peers with equal priority have weights 100 and 150 respectively, then 40% of the messages are forwarded to peer-1 (100/(100+150)) and 60% of the messages are forwarded to peer-2 (150/(100+150)).
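The 40%/60% split above follows directly from weight / (sum of weights). The Python sketch below reproduces that arithmetic and shows one way to pick a peer in proportion to its weight. The weighted random choice is an assumption made for illustration; the DSR's actual peer selection algorithm is not described here.

```python
import random
from collections import Counter


def weighted_shares(weights: dict[str, int]) -> dict[str, float]:
    """Expected fraction of messages per peer: weight / sum of all weights."""
    total = sum(weights.values())
    return {peer: w / total for peer, w in weights.items()}


def pick_peer(weights: dict[str, int], rng: random.Random) -> str:
    """Pick one peer with probability proportional to its weight (illustrative only)."""
    peers = list(weights)
    return rng.choices(peers, weights=[weights[p] for p in peers], k=1)[0]


weights = {"peer-1": 100, "peer-2": 150}
print(weighted_shares(weights))  # {'peer-1': 0.4, 'peer-2': 0.6}

rng = random.Random(7)
print(Counter(pick_peer(weights, rng) for _ in range(10_000)))  # roughly 4000 / 6000
```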

Peer Route Tables can be assigned to Peer Nodes or Application IDs. Each Peer Route Table has its own set of Peer Route Rules.

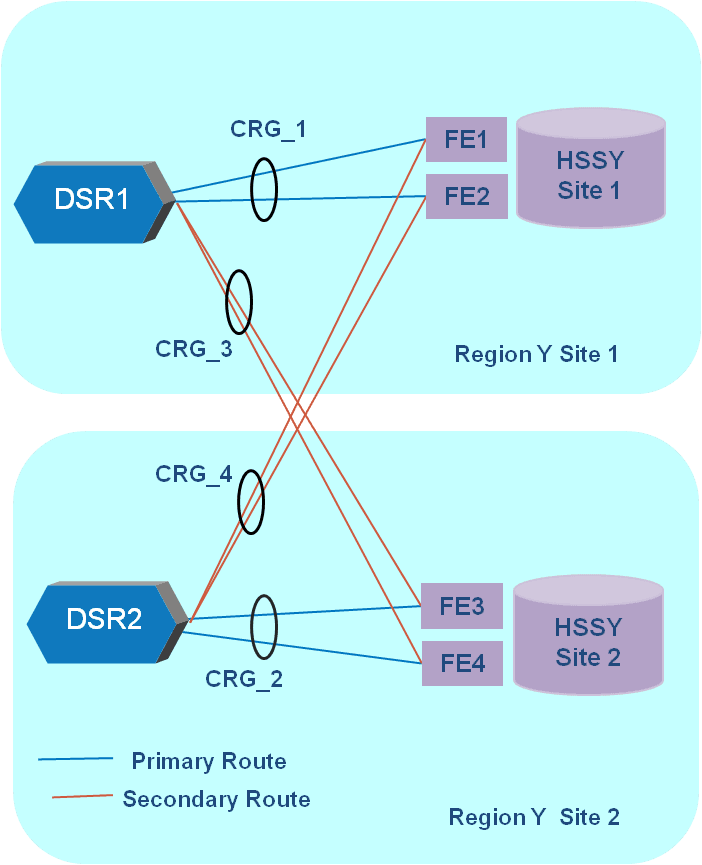

A set of peers with equal priority within a Route List is called a “Peer Route Group”. Multiple connections to the same peer can be assigned to a Connection Route Group (CRG). The use of CRGs allows for prioritized routing between connections to the same peer. An example use case would be connecting to Peers across different sites which share the same hostname. The peer within the site would be contacted for any traffic originated within the site and the remote peer should be contacted only if the local peer is unavailable.

Figure 2-7 Connection Route Group

When multiple Route Groups are assigned to a Route List, only one of the Route Groups is designated as the "Active Route Group" for routing messages for that Route List. The remaining Route Groups within the Route List are referred to as "Standby Route Groups". DSR designates the "Active Route Group" within each Route List based on the Route Group's priority and available capacity relative to the provisioned minimum capacity (described below) of the Route List. When the "Operational Status" of peers change or the configuration of either the Route List or Route Groups within the Route List change, then DSR may need to change the designated "Active Route Group" for the Route List. An example of Route List and Route Group relationships is shown below.

Figure 2-8 Route List, Route Group, Peer Relationship Example

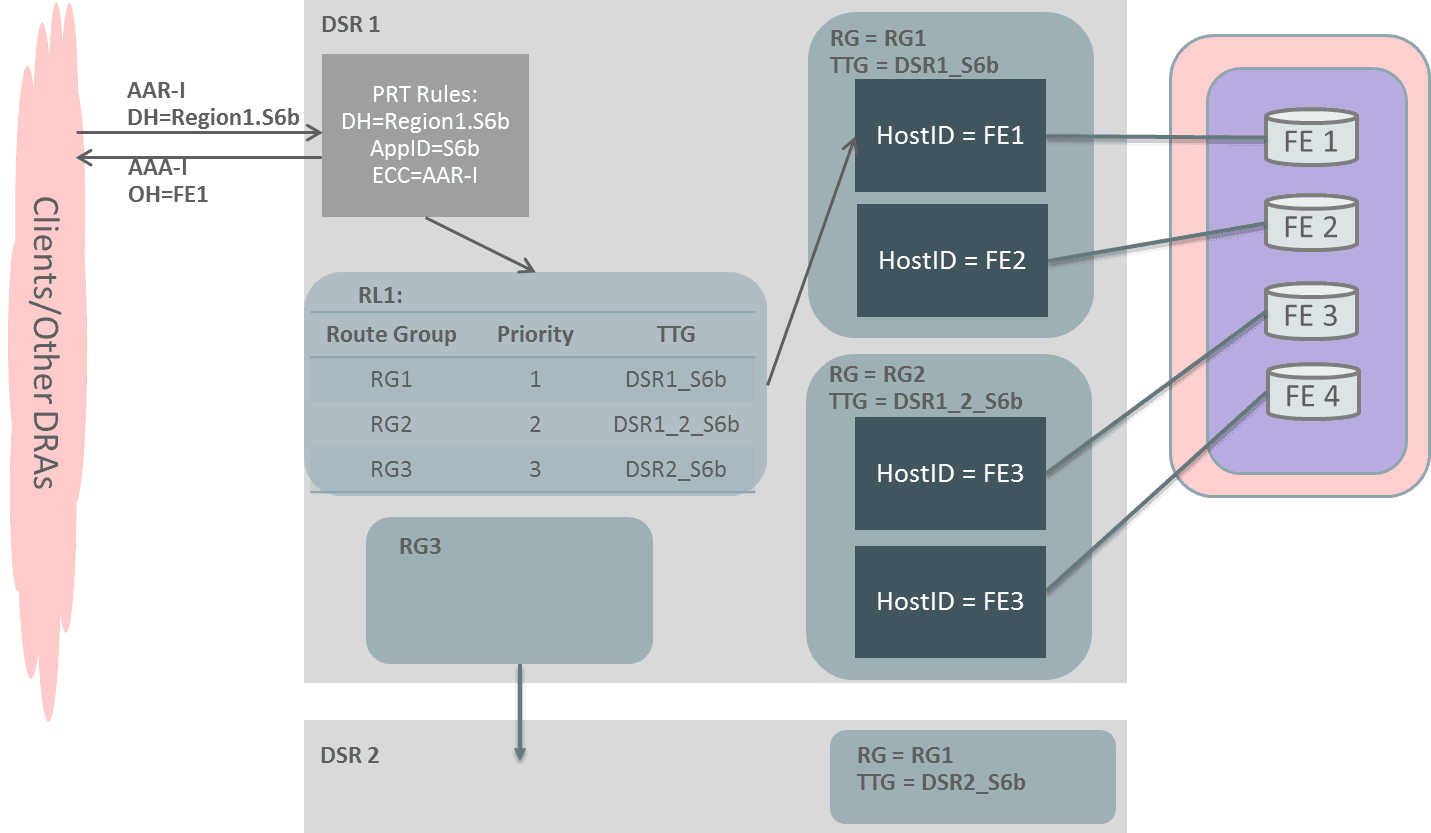

Showing a different set of route lists and route groups, an example of peer routing based on route groups with a route list is shown in the figure below.

Figure 2-9 Load Balancing Based on Route Groups and Peer Weights

DSR supports provisioning up to 160 routes in a route group (same priority) and allows for provisioning of 3 route groups per route list.

To further enhance the load balancing scheme, the DSR allows the operator to provision a “minimum route list capacity” threshold for each route list. This provisioned “minimum route list capacity” is compared against the route group capacity. The route group capacity is computed dynamically from the availability status of each route within the route group and is the sum of the weights of all “available” routes in the route group. If the route group capacity is higher than the threshold, the route group is considered “available” for routing messages. If the route group capacity is lower (due to one or more failures on certain routes in the route group), the route group is not considered “available” for routing messages. The DSR uses the highest priority (lowest value) “available” route group within a route list when routing messages over the route list. If none of the route groups in the route list is “available”, the DSR uses the route group with the most “available” capacity, also honoring route group priority, when routing messages over the route list.
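The Active Route Group selection described above can be sketched in Python as follows. The class and function names are hypothetical. Two details are assumptions: a capacity exactly equal to the minimum is treated as available, and when no group reaches the minimum, ties on capacity are broken in favor of the higher-priority group.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Route:
    peer: str
    weight: int
    available: bool


@dataclass
class RouteGroup:
    name: str
    priority: int  # 1 is the highest priority
    routes: list[Route]

    def capacity(self) -> int:
        # Route group capacity: sum of the weights of currently available routes.
        return sum(r.weight for r in self.routes if r.available)


def active_route_group(groups: list[RouteGroup], min_capacity: int) -> Optional[RouteGroup]:
    """Pick the Active Route Group of a Route List (hypothetical sketch)."""
    candidates = [g for g in groups if g.capacity() > 0]
    if not candidates:
        return None  # nothing is routable
    available = [g for g in candidates if g.capacity() >= min_capacity]
    if available:
        # Highest priority (lowest value) route group that meets the minimum capacity.
        return min(available, key=lambda g: g.priority)
    # No group meets the minimum: most available capacity wins, priority breaks ties.
    return max(candidates, key=lambda g: (g.capacity(), -g.priority))


rg1 = RouteGroup("RG-1", 1, [Route("a", 100, False), Route("b", 100, True)])  # capacity 100
rg2 = RouteGroup("RG-2", 2, [Route("c", 150, True), Route("d", 150, True)])   # capacity 300
print(active_route_group([rg1, rg2], 150).name)  # RG-2, because RG-1 is below the minimum
```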

A peer node group is a configuration managed object that provides a container for a collection of DSR peer nodes with like attributes (for example, the same network element or the same capacity requirement). The user configures DSR peer nodes with their IP addresses in the peer node group container. Applications can use this IP address grouping for various functions, such as a distribution algorithm in IPFE.

2.5.1 Extended Command Codes

Extended command codes (ECCs) broaden the definition of a Diameter command code to include additional application-specific content from a single Diameter or 3GPP AVP per command code. ECCs are used for advanced routing selection and are comprised of the following attributes:

- ECC Name

- CC value

- AVP code value

- AVP data value

For example, there are four types of Credit-Control-Request (CCR) transactions which are uniquely identified by the content of the CCR’s “CC-Request-Type” AVP: (For a complete list of ECCs please see the DSR Documentation set available at Oracle.com on the Oracle Technology Network (OTN).)

- Initial_Request (typically called CCR-I).

- Update_Request (typically called CCR-U).

- Termination_Request (typically called CCR-T).

- Event_Request (typically called CCR-E).

Extended command codes can be used in Routing Option Sets (ROS), Pending Answer Timer (PAT), and Message Priority Configuration Set (MPCS) (see Message).
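The CCR example above can be expressed as ECC definitions that pair a command code with an AVP code and value. In this Python sketch, the codes come from RFC 4006 (Credit-Control command code 272, CC-Request-Type AVP code 416, with values 1 to 4). The `ExtendedCommandCode` type, the `classify` function, and the decoding of AVPs into a code-to-value dictionary are simplifying assumptions for illustration.

```python
from typing import NamedTuple, Optional

CCR_COMMAND_CODE = 272     # Credit-Control command code (RFC 4006)
CC_REQUEST_TYPE_AVP = 416  # CC-Request-Type AVP code (RFC 4006)


class ExtendedCommandCode(NamedTuple):
    name: str          # ECC Name
    command_code: int  # CC value
    avp_code: int      # AVP code value
    avp_value: int     # AVP data value


ECCS = [
    ExtendedCommandCode("CCR-I", CCR_COMMAND_CODE, CC_REQUEST_TYPE_AVP, 1),  # INITIAL_REQUEST
    ExtendedCommandCode("CCR-U", CCR_COMMAND_CODE, CC_REQUEST_TYPE_AVP, 2),  # UPDATE_REQUEST
    ExtendedCommandCode("CCR-T", CCR_COMMAND_CODE, CC_REQUEST_TYPE_AVP, 3),  # TERMINATION_REQUEST
    ExtendedCommandCode("CCR-E", CCR_COMMAND_CODE, CC_REQUEST_TYPE_AVP, 4),  # EVENT_REQUEST
]


def classify(command_code: int, avps: dict[int, int]) -> Optional[str]:
    """Return the name of the ECC matching the command code and AVP content, if any."""
    for ecc in ECCS:
        if ecc.command_code == command_code and avps.get(ecc.avp_code) == ecc.avp_value:
            return ecc.name
    return None


print(classify(272, {416: 3}))  # CCR-T
```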

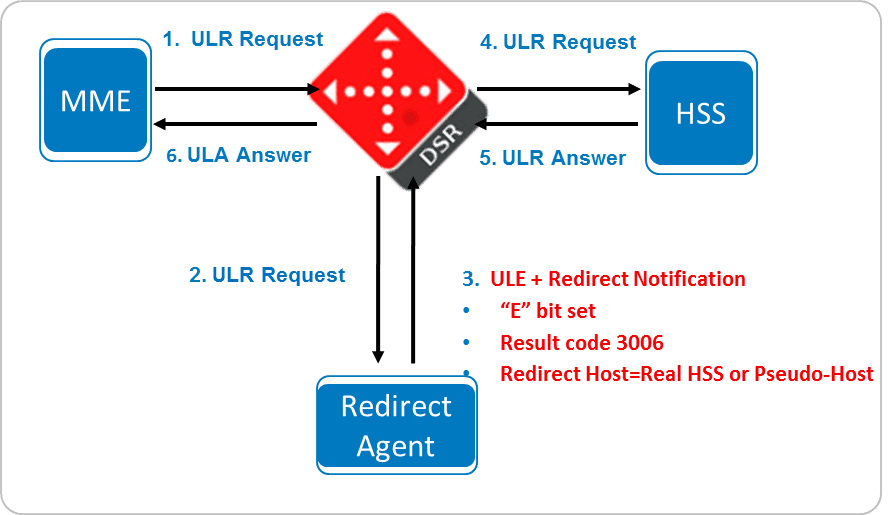

2.5.2 Redirect Agent Support

The DSR supports the processing of notifications sent by a Redirect Agent. The DSR processes the redirect notification (DIAMETER_REDIRECT_INDICATION response) and continues routing the original request upstream using the Redirect-Host in the response (RFC 6733). In addition, the DSR processes realm redirect notifications and continues routing the original request upstream using the Redirect-Realm in the response (RFC 7075). Finally, an optional re-evaluation of the application routing table and peer routing table is supported for routing the redirected request.

Figure 2-10 Redirect Agent

2.6 Routing and Transaction Related Parameters in the DSR

The DSR has a hierarchical configuration and selection criteria for routing and transaction related parameters (ART, PRT, ROS, and PAT). Customers can configure the DSR to apply additional, transaction-specific granularity, scoped per ingress peer node, in the routing and transaction parameter selection process.

Customers can create Transaction Configuration Groups, which are composed of Transaction Configuration Sets. The Transaction Configuration Sets are composed of individual Diameter Transactions (represented by Appl-id + Extended Command Codes), with each transaction optionally specifying an ART, PRT, ROS, and PAT. Once a Transaction Configuration Group is associated with an ingress peer, any Requests from the peer that match a Transaction Configuration Set within the assigned Transaction Configuration Group use the associated ART, PRT, ROS, and PAT, if specified. The following table provides the precedence order for routing and transaction related parameter selection.

Table 2-4 Modified Routing and Transaction Parameter Selection Precedence Order

| DSR Configuration Element (Parameter Selection Criteria) | ROS (Note 3) | PAT | ART (Note 1) | PRT (Note 2) |
|---|---|---|---|---|
| Ingress Peer Node Selected Transaction Configuration Group | 1 | 1 | 1 | 1 |
| Ingress Peer Node | 2 | 2 | 2 | 2 |
| Egress Peer Node | NA | 3 | NA | NA |
| Default Transaction Configuration Group | 3 | 4 | 3 | 3 |
| System Default | 4 | 5 | 4 | 4 |

Note:

1. When multiple DSR applications are invoked on the same message, the applications can select a different ART and override the core routing ART precedence.

2. Local DSR applications can select a different PRT and override the core routing PRT precedence.

3. Existing OAM configuration rule: A Routing Option Set with a configured Pending Answer Timer cannot be associated with an application-ID.

The DSR supports configuring up to 100 Transaction Configuration Groups, where each group instance can contain up to 1000 transaction configuration set entries. The total number of transaction configuration set entries per DSR system cannot exceed 1000.
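The precedence table above amounts to "take the value from the first configuration element that specifies the parameter". This Python sketch encodes the orders from Table 2-4, with NA elements omitted. The source keys and the `select_parameter` function are hypothetical, and the application overrides described in Notes 1 and 2 are not modeled.

```python
from typing import Optional

# Precedence order per parameter from Table 2-4 (NA configuration elements omitted).
PRECEDENCE = {
    "ROS": ["ingress_tcg", "ingress_peer", "default_tcg", "system_default"],
    "PAT": ["ingress_tcg", "ingress_peer", "egress_peer", "default_tcg", "system_default"],
    "ART": ["ingress_tcg", "ingress_peer", "default_tcg", "system_default"],
    "PRT": ["ingress_tcg", "ingress_peer", "default_tcg", "system_default"],
}


def select_parameter(param: str, sources: dict[str, dict[str, str]]) -> Optional[str]:
    """Return the value from the highest-precedence element that specifies `param`.

    `ingress_tcg` stands for the Transaction Configuration Set matched in the TCG
    selected on the ingress peer node (hypothetical naming).
    """
    for source in PRECEDENCE[param]:
        value = sources.get(source, {}).get(param)
        if value is not None:
            return value
    return None


config = {
    "ingress_tcg": {"PRT": "PRT-IMS"},
    "ingress_peer": {"PRT": "PRT-LTE", "PAT": "PAT-2s"},
    "egress_peer": {"PAT": "PAT-5s"},
    "system_default": {"ROS": "Default", "PAT": "Default", "ART": "Default", "PRT": "Default"},
}
print(select_parameter("PRT", config), select_parameter("PAT", config))  # PRT-IMS PAT-2s
```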

2.6.1 Peer Routing Table

A peer route table is a set of prioritized peer routing rules that define routing to peer nodes based on message content. Peer routing rules are prioritized lists of user-configured rules that define where to route a message to upstream peer nodes. Routing is based on message content matching a peer routing rule’s conditions. There are six peer routing rule parameters:

- Destination-Realm

- Destination-Host

- Application-ID

- Command-Code

- Origin-Realm

- Origin-Host

When a Diameter message matches the conditions of a peer routing rule, the action specified for the rule occurs. If you choose to route the Diameter message to a peer node, the message is sent to a peer node in the selected route list based on the route group priority and the peer node configured capacity settings. If you choose to send an answer, the message is not routed and the specified Diameter answer code is returned to the sender.

Peer routing rules are assigned a priority in relation to other peer routing rules. A message is handled based on the highest priority routing rule that it matches. The lower the number a peer routing rule is assigned the higher priority it has. (1 is the highest priority and 1000 is the lowest priority.)

If a message does not match any of the peer routing rules and the destination-host parameter contains a Fully Qualified Domain Name (FQDN) matching a peer node, then the message is directly routed to that peer node if it has an available connection. If there is not an available connection, the message is routed using the alternate implicit route configured for the peer node.
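The rule-priority and implicit-routing behavior described above can be sketched in Python. The `PeerRoutingRule` type, the action strings, and `route_request` are hypothetical. Rules with equal priority are evaluated in definition order (an assumption), and returning `None` for an unroutable request stands in for whatever error handling the DSR actually applies.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class PeerRoutingRule:
    name: str
    priority: int                              # 1 (highest) .. 1000 (lowest)
    condition: Callable[[dict[str, str]], bool]  # message-content match
    action: str                                # e.g. "route:RL-IMS" or "answer:3002"


def route_request(rules: list[PeerRoutingRule], message: dict[str, str],
                  peer_connected: dict[str, bool]) -> Optional[str]:
    """Apply the highest-priority matching rule, else fall back to implicit routing.

    `peer_connected` maps configured peer node FQDNs to whether they currently
    have an available connection.
    """
    for rule in sorted(rules, key=lambda r: r.priority):  # stable sort keeps definition order
        if rule.condition(message):
            return rule.action
    dest_host = message.get("Destination-Host")
    if dest_host is not None and dest_host in peer_connected:
        if peer_connected[dest_host]:
            return f"direct:{dest_host}"
        return f"alternate-implicit-route:{dest_host}"
    return None  # no rule and no implicit route (handling not modeled here)


rules = [
    PeerRoutingRule("catch-all", 500, lambda m: True, "route:RL-DEFAULT"),
    PeerRoutingRule("ims", 10, lambda m: m.get("Destination-Realm", "").endswith("ims.example.net"), "route:RL-IMS"),
]
print(route_request(rules, {"Destination-Realm": "ims.example.net"}, {}))  # route:RL-IMS
```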

PRT Partitioning

Routing rules can be prioritized (1 – 1000) for cases where an inbound Diameter request may match multiple user-defined routing rules. The DSR supports up to 500 PRTs. Any one of the PRTs can optionally be associated with the (ingress) peer, the Ingress Peer Node selected Transaction Configuration Group, or the Default Transaction Configuration Group. A local application can also specify the PRT to be used for routing a request. Each PRT can contain up to 1000 rules, and the total number of rules across all PRTs cannot exceed 50,000. A system-wide PRT is also present by default and is used if no PRT has been assigned.

Associating a PRT with the ingress peer node can be useful for maintaining separate routing tables, for example, for the LTE domain, the IMS domain, or routing partners.

Rule Action defines the action to perform when a routing rule is invoked. Actions supported are:

- Route to Peer - use Route List Table.

- Send Answer Response - an Answer response is sent with a configurable Result-Code and no further message processing occurs.

- Abandon With No Answer - discard the message and no Answer is sent to the originating Peer Node.

- Forward to Peer Route Table - forward the message to the specified Peer Route Table.

The table below is used to determine the PRT instance to be used:

Table 2-5 PRT Precedence

| PRT Used | PRT specified by local app (if supported) | PRT associated with Ingress Peer Node Selected Transaction Configuration Group | PRT associated with an Ingress Peer | PRT associated with Default Transaction Configuration Group | Default PRT |
|---|---|---|---|---|---|
| Default PRT | No | No | No | No | Yes |
| Default Transaction Configuration Group PRT | No | No | No | Yes | Yes |
| Peer PRT | No | No | Yes | Don’t Care | Yes |
| PRT associated with Ingress Peer Node Selected Transaction Configuration Group | No | Yes | Don’t Care | Don’t Care | Yes |
| Local App PRT | Yes | Don’t Care | Don’t Care | Yes | Yes |

2.6.2 Application Routing Table

An Application Routing Table (ART) contains one or more application routing rules that can be used for routing request messages to DSR applications. Up to 400 application routing rules can be configured per Application Route Table, and up to 1,500 Application Route Tables can be configured per DSR network element. A total of up to 65,000 application routing rules can be configured across all ARTs per network element.

An application routing rule defines message routing to a DSR application based on message content matching the application routing rule's conditions. There are six application routing rule parameters:

- Destination-Realm

- Destination-Host

- Application-Id

- Command-Code

- Origin-Realm

- Origin-Host

When a diameter message matches the conditions of an application routing rule the message is routed to the DSR application specified in the rule.

Rule Action defines the action to perform when a routing rule is invoked. Actions supported are:

- Route to Application: Route the message to the local Application associated with this Rule.

- Forward to Egress Routing: ART search stops and moves on to PRT (Peer Route Table).

- Send Answer Response: ART generates an Answer. This Answer unwinds through any previously encountered DSR Applications that want to process the Answer. Normal controls for the Answer are provided (Result-Code vs. Experimental-Result-Code, Result-Code value, Vendor-ID, and Error-Message string).

- Forward to Application Route Table: ART forwards the request message to the specified Application Route Table.

- Forward to Peer Route Table: ART will forward the request message to the specified Peer Route Table.

- Abandon With No Answer: Discard the message; no Answer is sent to the originating Peer Node.

Routing rules are assigned a priority in relation to other Application Routing Rules. A message is handled based on the highest priority routing rule that it matches. The lower the number an application routing rule is assigned, the higher priority it has. (1 is the highest priority and 1000 is the lowest priority.)

One or more DSR applications must be activated before application routing rules can be configured.

2.6.3 Routing Option Sets

A Routing Option Set defines the request attempt timeout and/or the routing actions the DSR takes in response to a connection failure, no-peer-response or connection congestion conditions. These are assigned per App ID, or Ingress Peer Node. This feature allows for the creation of up to 50 routing option sets (ROS) (including default) which can then be optionally associated to a diameter transaction in several ways (in precedence order):

- If the Transaction Configuration Group is selected on the ingress peer node configuration object, then the Transaction Configuration Group is used and the longest/strongest match search criteria is applied.

- The Routing Option Set is assigned to the ingress peer node.

- The Routing Option Set is assigned to the default TCG.

- The system default ROS is used.

Some items included in the Routing Option Set are:

- Resource Exhausted Action

- No Peer Response Action

- Connection Failure

- Connection Congestion Action

- Maximum Forwarding

- Transaction LifeTime

- Pending Answer Timer (PAT)

Alternate routing is supported in cases of transport failure, message response timeout and upon receipt of user defined answer responses.

Alternate Routing on Answer

- User defines which Result Codes trigger alternate routing.

- User defines which Application IDs are associated with each Result Code.

Alternate routing on transport failure

- Connection failure occurs after message has been sent.

- T-bit set on re-routed message to warn of possible duplicate.

Alternate routing on timeout

- No response received for message.

- T-bit set on re-routed message to warn of possible duplicate.
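The transport-failure and timeout cases above can be sketched in Python as a retry loop that sets the T flag on every re-routed attempt. The flag value 0x10 is the Diameter header "T" (potentially retransmitted) bit defined in RFC 6733. The `send` callback, its outcome strings, and the peer ordering are hypothetical. The 0 to 4 limit mirrors the configurable reroute count. Alternate routing on user-defined Answer Result-Codes could be added as another re-route trigger but is not modeled here.

```python
from typing import Callable

T_FLAG = 0x10  # Diameter header flag "T" (potentially retransmitted message), RFC 6733


def send_with_reroute(flags: int, peers: list[str], max_reroutes: int,
                      send: Callable[[str, int], str]) -> tuple[str, str, int]:
    """Try peers in order and re-route on "timeout" or "connection_failure".

    `send(peer, flags)` is a hypothetical transport hook that returns "answer",
    "timeout", or "connection_failure". Returns (outcome, peer used, final flags).
    """
    if not 0 <= max_reroutes <= 4:
        raise ValueError("max_reroutes must be in the range 0-4")
    for attempt, peer in enumerate(peers[: max_reroutes + 1]):  # first try + re-routes
        if attempt > 0:
            flags |= T_FLAG  # warn the next peer that this may be a duplicate
        if send(peer, flags) == "answer":
            return "answer", peer, flags
    return "failed", "", flags
```

For example, with `max_reroutes=2` and peers that respond with a timeout, a connection failure, and an answer, the request reaches the third peer carrying header flags `0x90` (R and T bits set).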

2.6.4 Pending Answer Timer

Pending Answer Timers specify the amount of time the DSR waits for an Answer after sending a Request to a Peer Node. The DSR allows up to 16 pending answer timers to be specified and associated with transactions/peers. This accommodates peers that respond to requests with different response times.

This feature addresses the ability to configure the Pending Answer Timer in the DSR which can then be optionally associated to a diameter transaction in several ways (in precedence order):

- If a Transaction Configuration Group is selected on the ingress peer node configuration object, then that group is used: the longest/strongest match criteria are applied to the request message parameters and, if a match is found, the PAT assigned to the matching transaction set defined under this group is used.

- The PAT from the ROS assigned to the ingress peer node is used.

- The PAT assigned to the egress peer node is used.

- The PAT assigned to the default TCG is used.

- The System default PAT is used.

2.6.5 Transport

The DSR supports SCTP and TCP transport simultaneously, including support for both protocols to the same Diameter peer. The DSR supports UDP transport for RADIUS. The DSR supports up to 64 connections per single Diameter peer, which can either be uni-homed via TCP or SCTP or multi-homed via SCTP. The DSR maintains the availability status of each Diameter peer. Supported values are available, unavailable, and degraded.

The following are some of the configurable items for each connection:

- Peer Host FQDN, Realm ID and optionally IPv4 or IPv6 address.

- Local Host and Realm ID (defined as part of the Diameter node).

- Message Priority Configuration Set.

- Egress Throttling Configuration Set.

- Remote Busy Usage / Remote Busy Abatement Timer.

- Transport Congestion Abatement Time-out.

- DSR Local Node status as the connection initiator only, initiator & responder (default) or responder-only.

- Other connection characteristics such as timer values detailed below.

For SCTP connections:

- RTO.Initial

- RTO.Min

- RTO.Max

- RTO.Max.Init

- Association.Max.Retrans

- Path.Max.Retrans

- Max.Init.Retrans

- HB.Interval

- SACK Delay

- Maximum number of Inbound and Outbound Streams

- Partial Reliability Lifetime

- Socket Send/Rx Buffer

- Max Burst

- Datagram Bundling

- Maximum Segment Size

- Fragmentation Flag

- Data Chunk Delivery Flag

For TCP connections:

- Nagle Algorithm ON/OFF indicator.

- Socket Send/Rx Buffer.

- Maximum Segment Size (bytes).

- TCP Keep Alive.

- TCP Idle Time For Keep Alive.

- TCP Probe Interval For Keep Alive.

- TCP Keep Alive Max Count.

- Diameter Connect Timer (Tc as per RFC6733).

- Diameter Watchdog Timer Initial value (as per RFC3539).

- Diameter Capabilities Exchange Timer (Oracle extension to RFC6733).

- Diameter Disconnect Timer (Oracle extension to RFC6733).

- Diameter Proving Mode (Oracle extension to RFC3539).

- Diameter Proving Timer (Oracle extension to RFC3539).

- Diameter Proving Times (Oracle extension to RFC3539).

DSR supports multiple SCTP streams as follows:

- DSR negotiates the number of SCTP inbound and outbound streams with peers per RFC4960 during connection establishment using the number of streams configured for the connection.

- DSR sends CER, CEA, DPR, and DPA messages on outbound stream 0.

- If stream negotiation results in more than 1 outbound stream toward a peer, DSR evenly distributes DWR, DWA, Request, and Answer messages across non-zero outbound streams.

- DSR accepts and processes messages from the peer on any valid inbound stream.
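The stream rules above can be sketched in Python as a small per-connection selector. Limiting the usable outbound streams to the peer's maximum inbound streams follows RFC 4960 association setup. The round-robin cycle is one assumed way to distribute messages "evenly". The class and function names are hypothetical, and the fallback to stream 0 when only one stream is negotiated is also an assumption.

```python
import itertools

CONTROL_MESSAGES = {"CER", "CEA", "DPR", "DPA"}  # always sent on outbound stream 0


def negotiated_outbound(local_outbound: int, peer_max_inbound: int) -> int:
    # Per RFC 4960, usable outbound streams are limited by the peer's inbound limit.
    return min(local_outbound, peer_max_inbound)


class StreamSelector:
    """Choose an SCTP outbound stream for each Diameter message (sketch)."""

    def __init__(self, outbound_streams: int):
        if outbound_streams < 1:
            raise ValueError("at least one outbound stream is required")
        # Non-zero streams carry DWR/DWA, Requests, and Answers when available;
        # with a single stream, everything uses stream 0 (assumption).
        data_streams = range(1, outbound_streams) or range(0, 1)
        self._cycle = itertools.cycle(data_streams)

    def stream_for(self, message_type: str) -> int:
        if message_type in CONTROL_MESSAGES:
            return 0
        return next(self._cycle)


selector = StreamSelector(negotiated_outbound(8, 3))  # streams 0, 1, 2
print(selector.stream_for("CER"), [selector.stream_for("Request") for _ in range(4)])
# 0 [1, 2, 1, 2]
```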

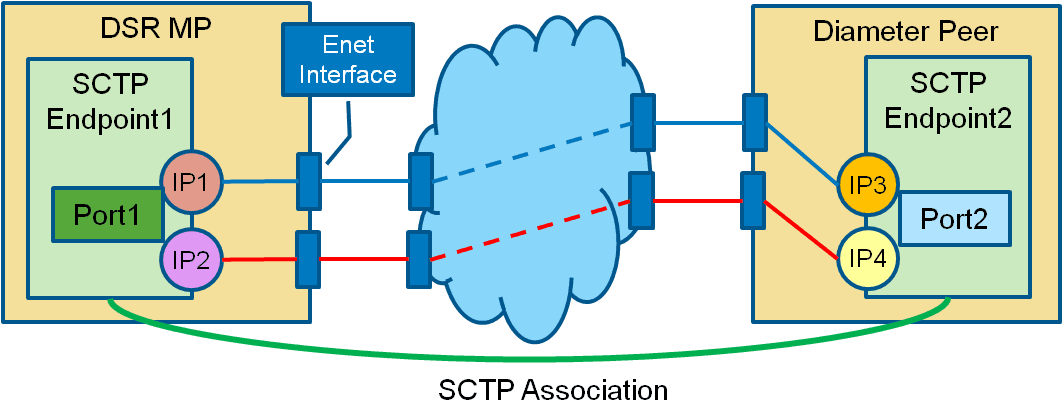

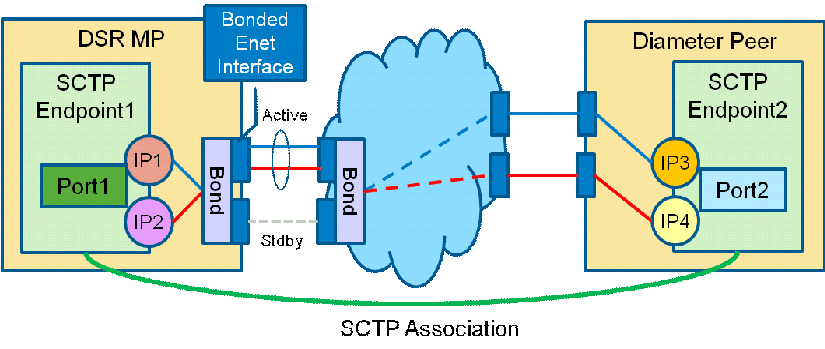

The DSR supports SCTP multi-homing as an option which provides a level of fault tolerance against IP network failures. By implementing multi-homing the DSR can establish an alternate path to the Diameter peers it connects to through the IP network using SCTP protocol. Failure of the primary network path will result in the DSR re-routing Diameter messages through the configured alternate IP path. Multi-homed associations can be created through multiple IP interfaces on a single MP blade. This is independent of any port bonding existing on the Ethernet interfaces. Multi-homing is supported for both IPv4 and IPv6 networks but IPv4 and IPv6 cannot co-exist on the same connection.

Figure 2-11 SCTP Multi-Homing

Figure 2-12 SCTP Multi-Homing via Port Bonding

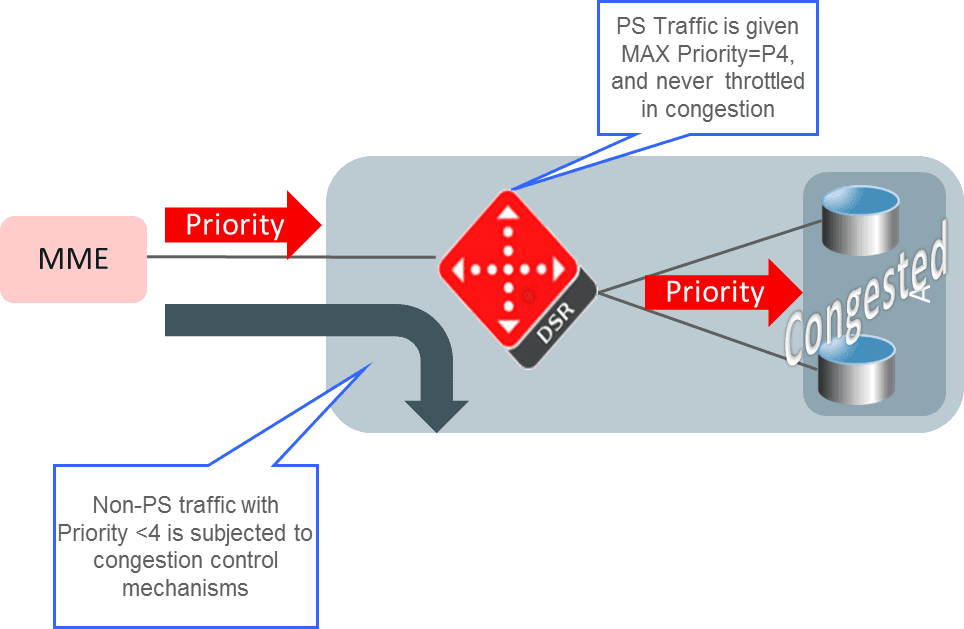

2.6.6 Message Prioritization

This feature allows DSR administrators to assign message priorities to incoming Diameter requests. The priority configuration can be associated with a connection, peer node, application routing rule, or peer routing rule. As messages arrive, they are marked with a message priority, which can then be used as input to load shedding and message throttling decisions.

The Message Priority Configuration Set (MPCS) table is used for this configuration. The following are some of the defined methods used for setting message priority:

- Based on the connection upon which a message arrives.

- Based on the peer from which a message is sent.

- Based on an Application Routing Rule.

- Based on a Peer Routing Rule.

Each MPCS contains the following information:

MPCS ID – The ID used when associating the configuration set with a connection.

Set of (Application-ID, Command-code, Priority) tuples, also called message priority rules:

- Application-ID – The Diameter Application-ID. The Application-ID can be a wildcard indicating that all Application-IDs match this message priority rule.

- Command-code – The Diameter command-code. The command-code can be a wildcard indicating that all command-codes within the specified application match this message priority rule.

- Priority – The priority applied to all request messages that match the Application-ID, Command-code combination.

Note:

If multiple command-codes with the same Application-ID are to get the same message priority, a separate message priority rule tuple is required for each command-code.
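The following sketch shows how an MPCS rule lookup with wildcards might work. It represents a wildcard as `None`. The section does not state how DSR resolves overlaps between exact and wildcard rules, so the "most specific rule wins" precedence here is an assumption. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class MessagePriorityRule:
    application_id: Optional[int]  # None stands for the wildcard
    command_code: Optional[int]    # None stands for the wildcard
    priority: int


def lookup_priority(rules: Sequence[MessagePriorityRule], application_id: int,
                    command_code: int, default: Optional[int] = None) -> Optional[int]:
    """Return the priority of the most specific matching rule, or `default`."""
    best: Optional[MessagePriorityRule] = None
    best_score = -1
    for rule in rules:
        if rule.application_id is not None and rule.application_id != application_id:
            continue
        if rule.command_code is not None and rule.command_code != command_code:
            continue
        # Assumed precedence: an exact Application-ID outranks a wildcard,
        # then an exact command-code outranks a wildcard.
        score = 2 * (rule.application_id is not None) + (rule.command_code is not None)
        if score > best_score:
            best, best_score = rule, score
    return best.priority if best is not None else default
```

A rule holds exactly one command-code. As the note says, giving two command-codes of the same application the same priority therefore takes two rules.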

2.6.7 Diameter Routing Message Priority

RFC 7944, Diameter Routing Message Priority (DRMP), is the IETF standard that defines a mechanism allowing Diameter endpoints to indicate the relative priority of Diameter transactions. With this information, Diameter nodes can factor priority into routing, resource allocation, and overload abatement decisions. Message priority is carried in the IETF-defined DRMP AVP of Diameter messages, with priority values ranging from 0 through 15, where 0 is the highest priority and 15 is the lowest. DRMP assigns priority per Diameter transaction; that is, the request and answer messages have the same message priority. DSR uses DRMP AVP based message priorities for message throttling decisions during congestion, in the same way as message priorities defined using the Message Priority Configuration Set (MPCS).

DSR provides a system configuration option to enable support for either 16 message priorities or the legacy 5 message priorities. The DRMP feature can be used only if support for 16 message priorities is enabled. DRMP can be enabled for individual Diameter Application IDs, which allows DSR to assign message priorities to ingress Diameter messages based on the DRMP AVP only for the configured Application IDs. If no DRMP AVP is present in an ingress Diameter message, the message priority is assigned based on the MPCS configuration at DSR. The operator can also set a System Options attribute called “Answer Priority Mode” to select how priority is assigned to Answer messages. The choices are the DSR legacy method of reserving the highest priorities for normal Answers (Highest Priority Mode) or the DRMP method of making the Answer priority the same as the Request priority (Request Priority Mode). When Highest Priority Mode is set, DSR ignores DRMP AVPs in Answer messages, because the operator has chosen not to use the DRMP method of assigning priority to Answers.
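The decision described above can be sketched as a function that reports which method assigns an ingress message's priority. It does not compute a priority value, because the section does not say how DRMP values map onto DSR's internal priority scale. The order of the checks is an interpretation of the paragraph. The names are hypothetical.

```python
from enum import Enum
from typing import Collection


class AnswerPriorityMode(Enum):
    HIGHEST_PRIORITY = "Highest Priority Mode"
    REQUEST_PRIORITY = "Request Priority Mode"


def priority_source(is_request: bool, application_id: int, has_drmp_avp: bool, *,
                    sixteen_priorities: bool, drmp_app_ids: Collection[int],
                    answer_mode: AnswerPriorityMode) -> str:
    """Hypothetical decision: which method assigns priority to an ingress message."""
    # Highest Priority Mode: normal Answers get the reserved highest priority,
    # and any DRMP AVP in the Answer is ignored.
    if not is_request and answer_mode is AnswerPriorityMode.HIGHEST_PRIORITY:
        return "highest-answer"
    # DRMP applies only with 16 priorities enabled, only for configured
    # Application IDs, and only when the message carries a DRMP AVP.
    if sixteen_priorities and application_id in drmp_app_ids and has_drmp_avp:
        return "drmp"
    # Request Priority Mode: an Answer inherits its Request's priority.
    if not is_request:
        return "request-priority"
    # Otherwise fall back to the Message Priority Configuration Set.
    return "mpcs"
```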

2.6.8 TLS / DTLS

The DSR optionally supports TLS for TCP connections and DTLS for SCTP associations. This provides RFC-compliant certificate and key exchange. TLS/DTLS can be enabled independently on each DSR Diameter connection. TLS/DTLS protects traffic on TCP connections and SCTP associations with three mechanisms: asymmetric cryptography for key exchange, symmetric encryption for privacy, and message authentication codes for message integrity. Keys are negotiated during the TLS/DTLS handshake. This feature uses the certificate management component from the platform. Please see DSR for more information on the certificate management feature.

Capability Exchanges

The Capability Exchanges on the DSR provide flexibility to inter-op with other Diameter nodes. These enhancements include:

- Support of any Application-Id.

- Configurable list of Application-Ids (up to 20 maximum) that can be advertised to the peer on a per connection basis.

- Authentication of minimum mandatory Application-Ids in the advertised list.

- Support for more than one Vendor specific Application-Id.
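The list above can be sketched as two checks. The first enforces the per-connection limit of 20 advertised Application-Ids. The second reports which mandatory Application-Ids a peer failed to advertise. The section does not define exactly what "authentication of minimum mandatory Application-Ids" checks, so applying it to the peer's advertised list is an assumption. The function names are hypothetical.

```python
from typing import Iterable, List

MAX_ADVERTISED_APP_IDS = 20  # per-connection limit stated above


def advertised_app_ids(configured: Iterable[int]) -> List[int]:
    """Deduplicate the configured list (keeping order) and enforce the 20-entry limit."""
    ids = list(dict.fromkeys(configured))
    if len(ids) > MAX_ADVERTISED_APP_IDS:
        raise ValueError(f"at most {MAX_ADVERTISED_APP_IDS} Application-Ids may be advertised")
    return ids


def missing_mandatory(peer_app_ids: Iterable[int], mandatory: Iterable[int]) -> List[int]:
    """Return mandatory Application-Ids the peer did not advertise (empty list means pass)."""
    return sorted(set(mandatory) - set(peer_app_ids))
```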

2.6.9 Configurable Disable of CEx Peer IP Validation

The DSR provides a mechanism to enable or disable the validation of Host-IP-Address AVPs in the CEx message against the actual peer connection IP address on a per connection configuration set basis.
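The following sketch models the per-connection-configuration-set switch described above. It assumes that validation passes when any Host-IP-Address AVP equals the connection's actual peer address. The function name is hypothetical. Addresses are compared after parsing, so equivalent textual forms of the same IPv6 address still match.

```python
import ipaddress
from typing import Iterable


def cex_peer_ip_valid(host_ip_addresses: Iterable[str], peer_ip: str,
                      validation_enabled: bool = True) -> bool:
    """Hypothetical check: does any Host-IP-Address AVP match the connection's peer IP?"""
    if not validation_enabled:
        # Validation disabled in the connection configuration set: accept as-is.
        return True
    peer = ipaddress.ip_address(peer_ip)
    # Compare parsed addresses so that "2001:db8::1" equals "2001:0db8:0:0::1".
    return any(ipaddress.ip_address(a) == peer for a in host_ip_addresses)
```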

2.6.10 Diameter Peer Discovery

The base Diameter protocol specification RFC6733 mandates that both dynamic Diameter agent discovery and manual configuration mechanisms be supported by all Diameter implementations; and either or both may be used in the network deployment.

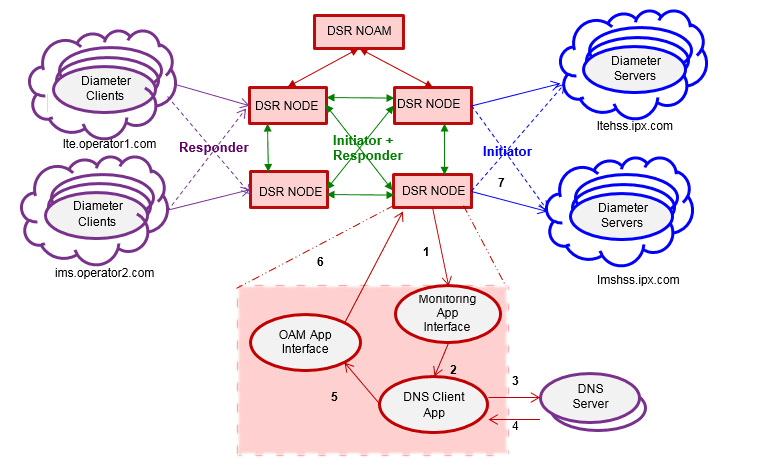

From the DSR signaling point of view there are three basic use-cases for dynamic Diameter peer discovery.

- initiator mode: DSR discovering the last-hop Diameter peers.

- initiator+responder mode: DSR discovering a Diameter (Edge) Agent for further handling of a Diameter operation. It is a combination of the above two use cases between two end-points.

The DSR supports the above listed deployment use-cases. The support for Dynamic Peer Discovery provides:

- The capability to configure realms that are dynamically discovered using RFC 6733 extended NAPTR methods.

- A DNS Client Application instance that performs dynamic discovery.

- OAM functions that update/create the managed objects that are used for Diameter signaling.

- The ability to accept connections from configured realms.

Figure 2-13 Dynamic Diameter Peer Discovery: Example

In the above example for ‘initiator’ mode, each DSR node does the following:

- Monitors configuration changes.

- Creates tags required for Diameter extended NAPTR (S-NAPTR) query.

- Invokes DNS Client Application Interface for query resolution towards configured DNS Servers.

- Provides the DNS Client Application Interface, processes the DNS responses, and resolves NAPTR, SRV, A, and AAAA lookups.

- Performs target server resolution mapping to Diameter peer attributes for specified realm.

- Invokes OAM interface to update discovered Diameter peer attributes in DSR configuration managed objects.

- Replicates DSR configuration managed objects to DA-MPs. The signaling functions become aware of the required peer attributes and initiate connection establishment and Diameter capabilities exchange.
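The NAPTR step above can be illustrated by filtering a realm's NAPTR records for Diameter use. The tag format follows the S-NAPTR conventions for Diameter in RFC 6408: an application service tag such as `aaa+ap16777251`, followed by protocol tags such as `diameter.sctp` or `diameter.tcp`. Records are taken in ORDER, then PREFERENCE. This is a simplified sketch with hypothetical names, not DSR's resolver. A full S-NAPTR client also handles fallback between ORDER groups and the follow-up SRV and A/AAAA lookups.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class NaptrRecord:
    order: int
    preference: int
    flags: str        # "s": next lookup is SRV, "a": next lookup is A/AAAA
    service: str      # e.g. "aaa+ap16777251:diameter.sctp"
    replacement: str  # domain name used for the next lookup


def diameter_candidates(records: Sequence[NaptrRecord], application_id: int,
                        protocols: Sequence[str] = ("diameter.sctp", "diameter.tcp")
                        ) -> List[Tuple[str, str, str]]:
    """Return (flag, protocol, replacement) tuples in ORDER/PREFERENCE order."""
    wanted_service = f"aaa+ap{application_id}"
    candidates = []
    for rec in sorted(records, key=lambda r: (r.order, r.preference)):
        # S-NAPTR service field: application service tag, then protocol tags.
        app_service, *app_protocols = rec.service.lower().split(":")
        if app_service != wanted_service:
            continue
        for proto in app_protocols:
            if proto in protocols:
                candidates.append((rec.flags.lower(), proto, rec.replacement))
                break  # one candidate per record
    return candidates
```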

In the above example, ‘initiator+responder’ mode peer discovery is possible using a single ‘initiator+responder’ connection.

2.6.11 Implicit Realm Routing

Implicit Realm Routing provides realm routing using DNS SRV load balancing information. The figure below illustrates the high-level flow of Diameter Request forwarding and routing decision points on DA-MP blades. Destination-Realm and Application-Id based implicit realm routing is applied after Destination-Host based implicit routing. Implicit realm routing is performed only for messages routed to dynamically discovered peers.
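"DNS SRV load balancing information" means the priority and weight fields of SRV records. The standard way to use them is the RFC 2782 selection procedure. Targets are tried in ascending priority. Within one priority, targets are ordered by weighted random choice, and zero-weight records are placed first so they keep a small chance of selection. The sketch below implements that RFC procedure. The section does not say whether DSR applies exactly this algorithm, so treat it as an illustration. `SrvRecord` and `order_srv_targets` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class SrvRecord:
    priority: int
    weight: int
    port: int
    target: str


def order_srv_targets(records: Sequence[SrvRecord], rng=random) -> List[SrvRecord]:
    """Order SRV targets per RFC 2782; `rng` needs randint(), e.g. random.Random(seed)."""
    result: List[SrvRecord] = []
    for prio in sorted({r.priority for r in records}):
        group = [r for r in records if r.priority == prio]
        # Zero-weight records first, as RFC 2782 describes.
        group.sort(key=lambda r: r.weight != 0)
        while group:
            total = sum(r.weight for r in group)
            pick = rng.randint(0, total)  # inclusive on both ends
            running = 0
            for i, rec in enumerate(group):
                running += rec.weight
                if running >= pick:  # first record whose running sum reaches pick
                    result.append(group.pop(i))
                    break
    return result
```

Passing a seeded `random.Random` makes the weighted order reproducible, which is useful when testing routing behavior.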

Figure 2-14 High Level DSR Routing Flow – Fall through to Dest-Realm Based Implicit Routing

2.6.12 DNS Support

The DSR supports DNS lookups for resolving peer host names to an IP address. The operator can configure up to two DNS server addresses, designated as primary and secondary servers. The wait time for DNS queries for connections initiated by the DSR is configurable from 100 to 5000 milliseconds, with a default of 500 milliseconds. This process is used for both dynamic peer discovery and A/AAAA lookups.

The DSR supports both A (IPv4) and AAAA (IPv6) DNS queries. If the configured local IP address of the connection is IPv4, the DSR performs an “A” lookup; if it is IPv6, the DSR performs an “AAAA” lookup. If the operator has not defined the IP address of the connection, the DSR resolves the host name using both A and AAAA DNS queries when initiating the connection. The DSR can use either the peer’s FQDN or an FQDN specified for the connection as the host name for the DNS lookup.
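The following sketch captures the wait-time range and the record-type choice described above. The 100, 5000, and 500 millisecond values come from the text. The function names are hypothetical. The sketch does not choose between the peer's FQDN and the connection's FQDN, because the section does not state a precedence.

```python
import ipaddress
from typing import List, Optional

DNS_WAIT_MIN_MS = 100
DNS_WAIT_MAX_MS = 5000
DNS_WAIT_DEFAULT_MS = 500


def dns_wait_ms(configured: Optional[int] = None) -> int:
    """Return the DNS query wait time, applying the default and the 100-5000 ms range."""
    if configured is None:
        return DNS_WAIT_DEFAULT_MS
    if not DNS_WAIT_MIN_MS <= configured <= DNS_WAIT_MAX_MS:
        raise ValueError(f"wait time must be {DNS_WAIT_MIN_MS}-{DNS_WAIT_MAX_MS} ms")
    return configured


def dns_query_types(local_ip: Optional[str]) -> List[str]:
    """Choose A and/or AAAA lookups from the connection's configured local IP address."""
    if local_ip is None:
        # Address not defined by the operator: query both record types.
        return ["A", "AAAA"]
    return ["A"] if ipaddress.ip_address(local_ip).version == 4 else ["AAAA"]
```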

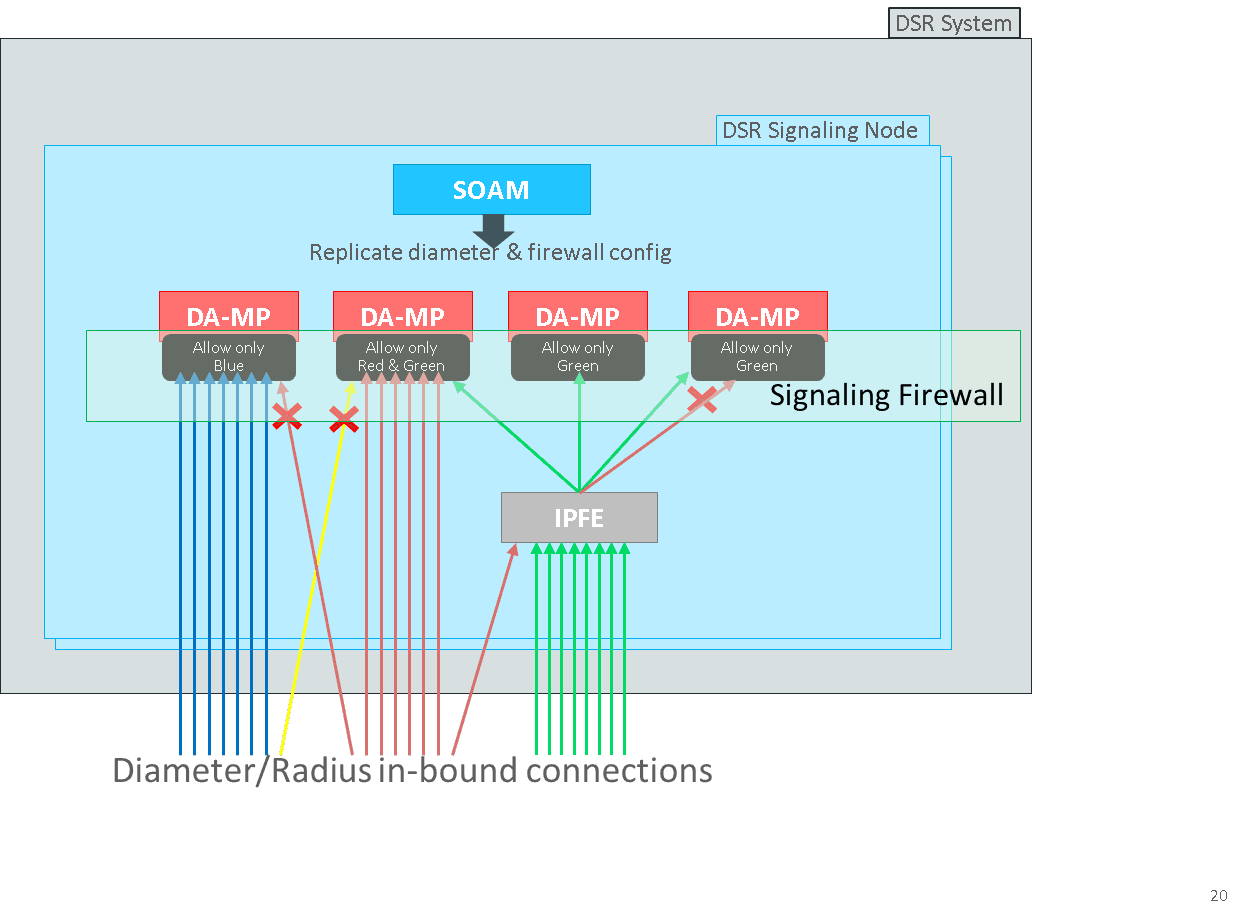

2.6.13 Signaling Firewall

The Signaling Firewall feature is a network security feature of DSR that configures native Linux iptables rules in the Linux firewall on each DA-MP server. These rules allow only essential network traffic pertaining to the active signaling configuration. The DSR application accepts inbound signaling traffic only over the administratively enabled Diameter and RADIUS connections configured at the DSR SOAM.

Signaling Firewall feature provides the following capabilities at DSR:

- Capability to automatically configure the Linux firewall to allow desired signaling network traffic on DA-MPs.

- Capability to dynamically update the Linux firewall configuration on DA-MPs to allow or disallow signaling traffic.

- Capability to administer (Enable and Disable) the DSR Signaling Firewall on the Signaling Node via System OAM configuration user interfaces.
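To make the idea concrete, the following sketch derives per-connection ACCEPT rules from the administratively enabled connections only. The rules are built as `iptables`/`ip6tables` argument lists and are never executed. The rule layout, the `SignalingConnection` fields, and the function name are hypothetical, not the rules DSR actually writes. A complete policy would also need default-drop and established-traffic rules, which are omitted here.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class SignalingConnection:
    peer_ip: str
    local_port: int
    transport: str      # "tcp" or "sctp" (Diameter), "udp" (RADIUS)
    admin_enabled: bool


def firewall_rules(connections: Sequence[SignalingConnection]) -> List[List[str]]:
    """Build ACCEPT rule argument lists only for administratively enabled connections."""
    rules = []
    for conn in connections:
        if not conn.admin_enabled:
            continue  # disabled connections get no opening in the firewall
        tool = "ip6tables" if ipaddress.ip_address(conn.peer_ip).version == 6 else "iptables"
        rules.append([tool, "-A", "INPUT", "-p", conn.transport,
                      "-s", conn.peer_ip, "--dport", str(conn.local_port),
                      "-j", "ACCEPT"])
    return rules
```

Rebuilding the rule list whenever a connection is enabled or disabled gives the dynamic behavior described in the second bullet.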

Figure 2-15 DSR Signaling Firewall

Note:

This feature does not apply to IPFE servers; hence there is no impact on the IPFE function.

2.6.14 Support Answer on Any Connection

DSR supports processing of answer messages received on connections different from the connection used to send the request to the upstream peer node. This feature can be enabled for individual peers configured at DSR. Upstream peer nodes can return answers to requests received from DSR on any connection, without following the same path as the received request.
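The following sketch shows one way a pending-request table could accept answers on any connection for peers that have the feature enabled. It assumes that answers are matched by Diameter Hop-by-Hop Identifier. It also assumes that identifiers are unique across all connections to a peer. RFC 6733 only requires uniqueness per connection, so matching across connections depends on that stronger assumption. The class and method names are hypothetical.

```python
from typing import Dict, Iterable, Tuple


class PendingRequests:
    """Hypothetical pending-transaction table keyed by Hop-by-Hop Identifier."""

    def __init__(self, any_connection_peers: Iterable[str]) -> None:
        self._pending: Dict[int, Tuple[str, str]] = {}  # hop-by-hop id -> (peer, connection)
        self._any_connection = set(any_connection_peers)

    def request_sent(self, hop_by_hop: int, peer: str, connection: str) -> None:
        self._pending[hop_by_hop] = (peer, connection)

    def answer_received(self, hop_by_hop: int, peer: str, connection: str) -> bool:
        """Return True and clear the entry if the answer matches a pending request."""
        entry = self._pending.get(hop_by_hop)
        if entry is None:
            return False
        req_peer, req_connection = entry
        if peer != req_peer:
            return False
        # Without the feature, the answer must arrive on the request's connection.
        if connection != req_connection and peer not in self._any_connection:
            return False
        del self._pending[hop_by_hop]
        return True
```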

Figure 2-16 Answer processing on connection different from the connection used to send Request message

2.6.15 Congestion Control

The DSR supports local and remote congestion control through congestion levels. For each congestion level, only a defined percentage of Request messages is processed during the congestion period. The DSR supports a method for limiting the volume of Diameter Request traffic that DSR is willing to receive from DSR peers. In addition, the DSR provides a method for partitioning the MPS capacity among DSR peer connections, providing some user-configurable prioritization of DSR traffic handling. Congestion levels correspond to minor, major, and critical alarms associated with resource utilization. The percentage of Request messages to be processed for each level is shown below. The DSR may return a user-configurable Answer message when a Request message is not successfully routed during congestion. Under severe congestion conditions, the DSR may not return an Answer message. Request messages that are not processed are discarded. An OAM event is raised upon entering and exiting congestion levels. If the Next Generation Network Priority Service (NGN-PS) feature is enabled, these DSR Congestion Control mechanisms do not affect processing of NGN-PS messages. Refer to Next Generation Network Priority Service (NGN-PS) for more information.
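The following sketch shows how "process only a percentage of Requests at each congestion level" can be applied deterministically. Each Request adds the level's percentage to an integer credit, and a Request is admitted whenever the credit reaches 100. NGN-PS messages bypass the check, as stated above. The class is hypothetical. The per-level percentages are supplied by the caller, and the values in the usage below are illustrative only, not DSR's actual percentages.

```python
from typing import Mapping


class CongestionAdmission:
    """Hypothetical sketch: admit a configured percentage of Requests per congestion level."""

    def __init__(self, percent_by_level: Mapping[int, int]) -> None:
        for pct in percent_by_level.values():
            if not 0 <= pct <= 100:
                raise ValueError("percentages must be between 0 and 100")
        self._percent = dict(percent_by_level)
        self._credit = 0  # integer credit avoids floating-point drift

    def admit(self, level: int, ngn_ps: bool = False) -> bool:
        if ngn_ps:
            return True  # NGN-PS messages are not subject to congestion control
        self._credit += self._percent[level]
        if self._credit >= 100:
            self._credit -= 100
            return True
        return False  # discarded, optionally with a configured Answer
```

For example, with an illustrative 75% for level 1, `admit(1)` returns False, True, True, True for four consecutive Requests. This spreads discards evenly instead of dropping a burst.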

Figure 2-17 Congestion Control

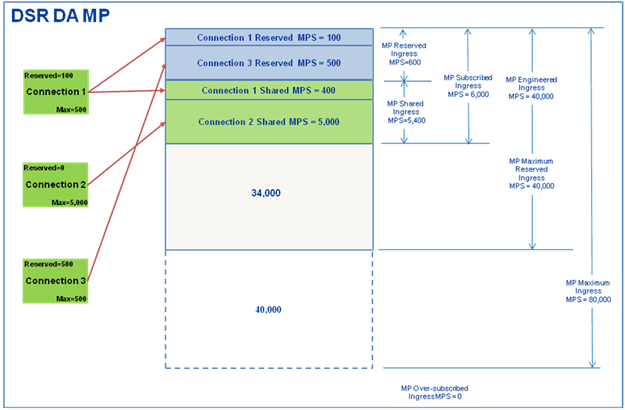

2.6.15.1 Per Connection Ingress MPS Control

The Per-Connection Ingress MPS Control feature provides the following:

- A method to reserve/guarantee a user-configured minimum ingress message capacity for each peer connection.

- A method for limiting the ingress message capacity for a peer connection to a user-configured maximum.

- A method for multiple peer connections to have a ‘shared’ ingress message capacity.

- A method to prevent the total reserved ingress message capacity of all active peer connections on a DA MP from exceeding the DA MP’s capacity.

- A method for limiting the overall rate at which a DA MP attempts to process messages from all peer connections.

- A method for coloring (Green or Yellow) messages ingressing a DSR.

There are six user-configurable capacity configuration set parameters for DSR Connections: Ingress MPS Minor Alarm Threshold, Ingress MPS Major Alarm Threshold, Abatement Time, Reserved Ingress MPS, Maximum Ingress MPS and Convergence Time. Additional details on some of these follow.
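The reserved/maximum split and the Green/Yellow coloring listed above can be sketched as a per-connection counter over a fixed one-second window. The mapping is an assumption. Messages within Reserved Ingress MPS are colored Green. Messages between Reserved and Maximum Ingress MPS are colored Yellow, drawing on shared capacity. Messages above Maximum Ingress MPS are marked for discard. Convergence Time, the alarm thresholds, the shared-capacity check, and the DA MP-wide limits are not modeled. The class name is hypothetical.

```python
class IngressMpsControl:
    """Hypothetical per-connection limiter using a fixed one-second window."""

    def __init__(self, reserved_mps: int, maximum_mps: int) -> None:
        if not 0 <= reserved_mps <= maximum_mps:
            raise ValueError("require 0 <= Reserved Ingress MPS <= Maximum Ingress MPS")
        self.reserved_mps = reserved_mps
        self.maximum_mps = maximum_mps
        self._window_start = 0.0
        self._count = 0

    def classify(self, now: float) -> str:
        """Color a message arriving at time `now` (seconds) or mark it for discard."""
        if now - self._window_start >= 1.0:
            self._window_start, self._count = now, 0  # start a new one-second window
        self._count += 1
        if self._count <= self.reserved_mps:
            return "green"    # within the reserved (guaranteed) capacity
        if self._count <= self.maximum_mps:
            return "yellow"   # above reserved, drawing on shared capacity
        return "discard"      # above the connection's Maximum Ingress MPS
```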

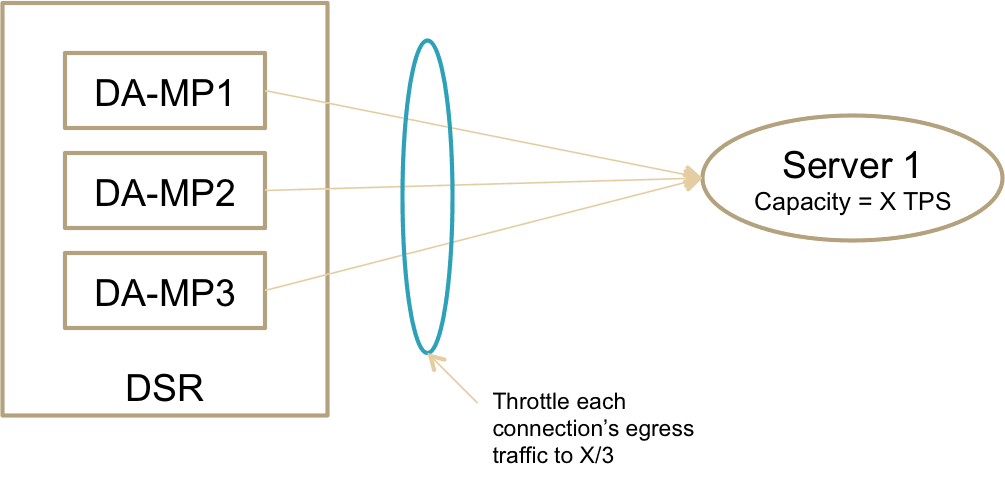

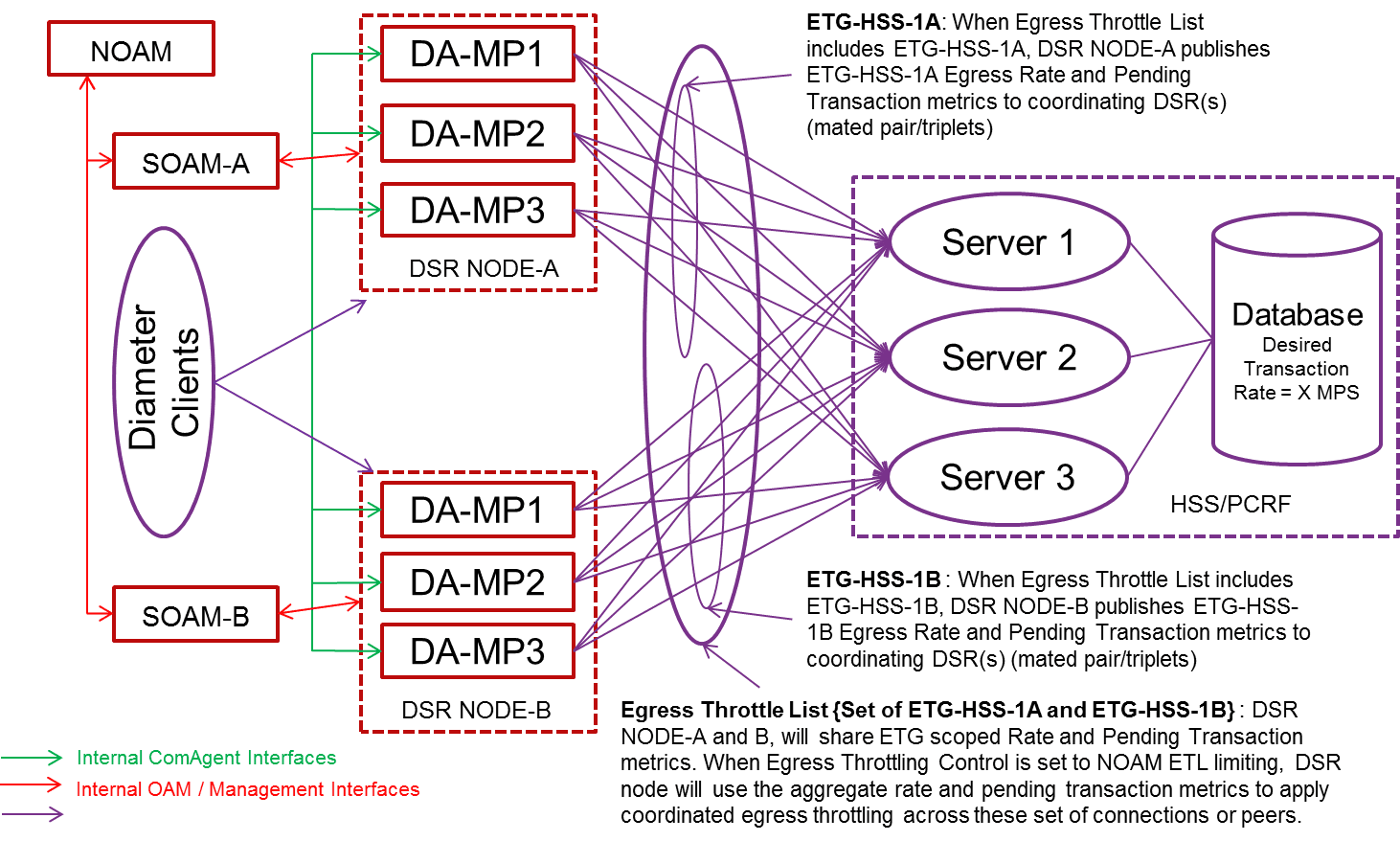

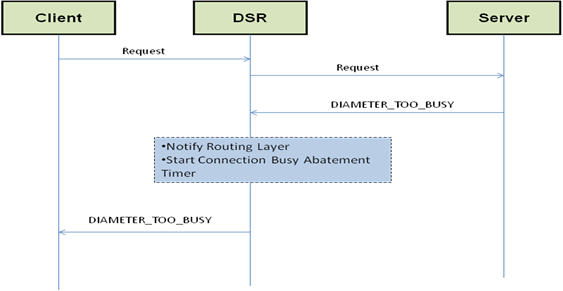

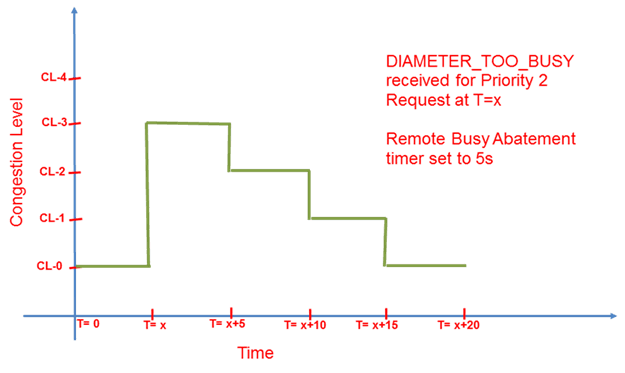

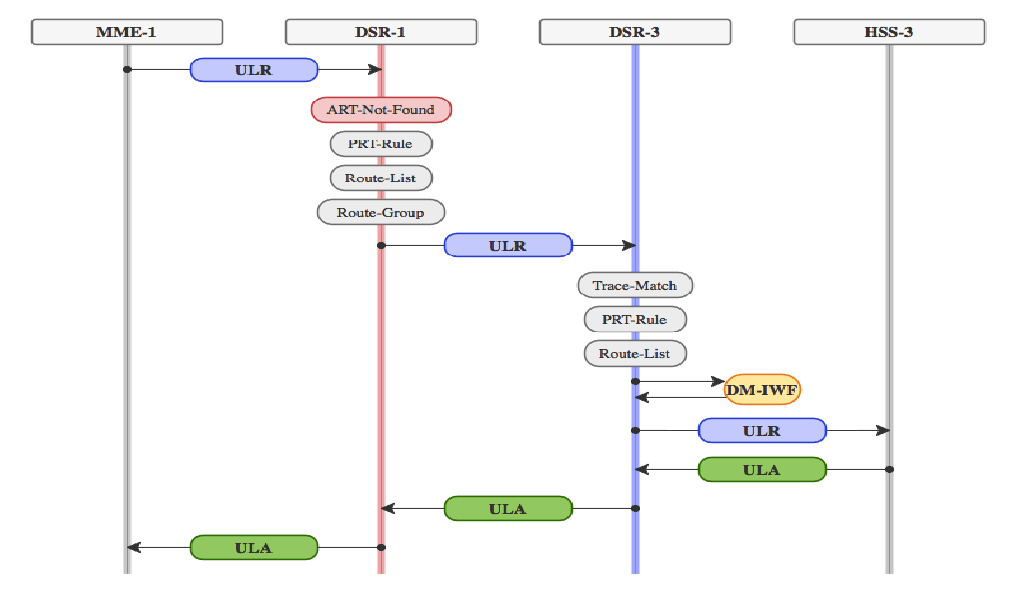

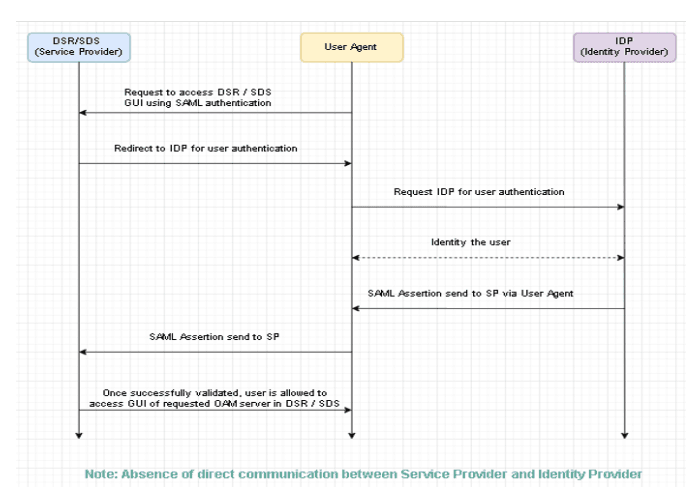

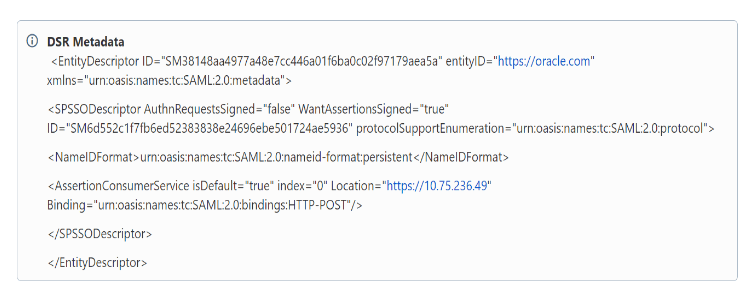

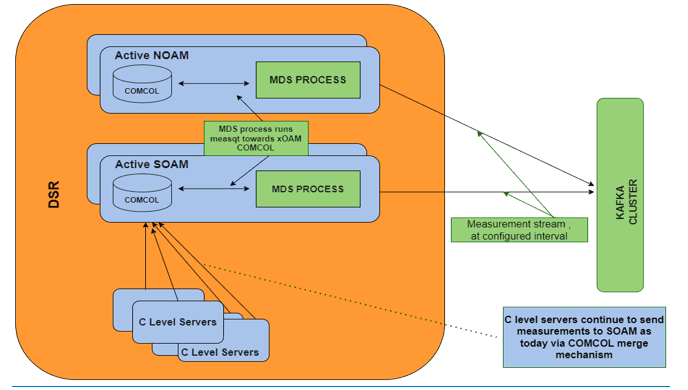

- Reserved Ingress MPS: