Topologies

Learn about network topologies for Oracle Database@Azure.

Oracle provides example network topologies based on different Oracle Database@Azure use cases, including:

- Local VNet Topology: Same availability zone connectivity

- VNet Peering Topology: Same availability zone with multiple VM clusters

- Hub-and-Spoke VNet Peering Topology: Cross-VNet connectivity in the same region with hub and spoke

- Global Connectivity Between Regions: Cross-region connectivity

- On-premises network with Hub-and-Spoke: On-premises (hybrid) connectivity with hub and spoke

Topology Components

The topologies use the following components:

- Azure Region: An Azure region is a geographical area in which one or more physical Azure data centers, called availability zones, reside. Regions are independent of other regions, and vast distances can separate them (across countries or even continents).

Azure and OCI regions are localized geographic areas. For Oracle Database@Azure, an Azure region is connected to an OCI region, with availability zones (AZs) in Azure connected to availability domains (ADs) in OCI. Azure and OCI region pairs are selected to minimize distance and latency.

- Azure VNet: Microsoft Azure Virtual Network (VNet) is the fundamental building block for your private network in Azure. VNet enables many types of Azure resources, such as Azure virtual machines (VM), to securely communicate with each other, the internet, and on-premises networks.

- Azure Delegated Subnet: Subnet delegation is Microsoft's ability to inject a managed service, specifically a platform-as-a-service (PaaS) service, directly into your virtual network. This allows you to designate or delegate a subnet to be a home for an external managed service inside of your virtual network, such that external service acts as a virtual network resource, even though it is an external PaaS service.

- Azure VNIC: The services in Azure data centers have physical network interface cards (NICs). Virtual machine instances communicate using virtual NICs (VNICs) associated with the physical NICs. Each instance has a primary VNIC that's automatically created and attached during launch and is available during the instance's lifetime.

- Azure Route table (User Defined Route – UDR): Virtual route tables contain rules to route traffic from subnets to destinations outside a VNet, typically through gateways. Route tables are associated with subnets in a VNet.

- Azure Virtual Network Gateway: Azure Virtual Network Gateway establishes secure, cross-premises connectivity between an Azure virtual network and an on-premises network. It allows you to create a hybrid network that spans your data center and Azure.
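As a rough illustration of how a route table resolves a destination, the sketch below applies longest-prefix matching, which is how Azure chooses among routes with different prefixes. The `Route` class, the sample prefixes, and the next-hop labels are hypothetical, and tie-breaking between route sources (user-defined, BGP, system) is deliberately left out.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str    # destination address range in CIDR notation
    next_hop: str  # hypothetical label for the next hop type


def select_route(routes, destination):
    """Return the matching route with the longest prefix, or None if nothing matches."""
    dest = ipaddress.ip_address(destination)
    matches = [r for r in routes if dest in ipaddress.ip_network(r.prefix)]
    if not matches:
        return None
    return max(matches, key=lambda r: ipaddress.ip_network(r.prefix).prefixlen)


# Hypothetical route table associated with an application subnet.
ROUTES = [
    Route("0.0.0.0/0", "Internet"),
    Route("10.0.0.0/16", "VnetLocal"),
    Route("10.1.0.0/16", "VirtualNetworkGateway"),
]

if __name__ == "__main__":
    for target in ("10.0.4.7", "10.1.2.3", "8.8.8.8"):
        print(target, "->", select_route(ROUTES, target).next_hop)
```

A destination inside `10.1.0.0/16` matches both the default route and the more specific gateway route; the more specific one wins.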

Example Topologies

Oracle Database@Azure and application connectivity can be configured in various ways to suit organizational needs. The following sections describe the key network topologies:

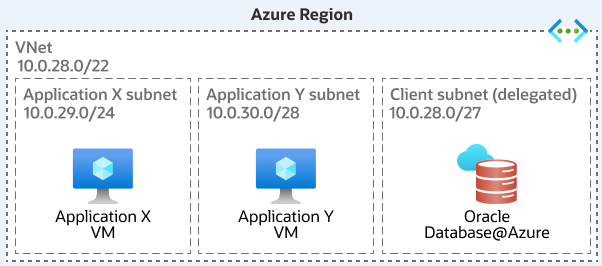

Local VNet Topology

The Local VNet Topology co-locates application resources and the Oracle Database@Azure database within the same Azure VNet, primarily to optimize performance. While it can be deployed within a single AZ, this topology can also span multiple availability zones if the VNet itself is designed to do so (for example, application subnets in AZ1 and AZ2, and the Oracle Database@Azure delegated subnet in AZ1). The core principle remains: applications and the database reside within the same VNet boundary.

The following architecture shows a local VNet topology:

Use Cases

This topology is the preferred choice when:

- Minimizing network latency between applications and the Oracle database is the paramount concern.

- Network simplicity and avoiding VNet peering costs are preferable.

- Multiple distinct applications or application tiers need to access the same Oracle Database@Azure cluster but require logical separation using different subnets within the shared VNet.

Component Breakdown

The components are as follows:

- Azure Components: VNet, Delegated Subnet, Application Subnet(s), Route Tables (system routes), Azure Private DNS Zone.

- Implicit OCI Components: Service VCN, Client Subnet, Backup Subnet, Control Plane Link, OCI Private DNS, OCI Security Lists/NSGs.
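The "same VNet boundary" principle can be checked mechanically when planning address space. The following is a minimal sketch, assuming IPv4 ranges; the function name, subnet names, and CIDR values are all hypothetical and not taken from Oracle or Azure tooling.

```python
import ipaddress


def validate_local_vnet(vnet_cidr, subnets):
    """Return a list of problems: subnets outside the VNet or overlapping each other."""
    vnet = ipaddress.ip_network(vnet_cidr)
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in subnets.items()}
    problems = []

    # Every application subnet and the delegated subnet must sit inside the VNet.
    for name, net in nets.items():
        if not net.subnet_of(vnet):
            problems.append(f"{name} ({net}) is outside the VNet {vnet}")

    # Subnets within one VNet must not overlap.
    names = sorted(nets)
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if nets[first].overlaps(nets[second]):
                problems.append(f"{first} overlaps {second}")
    return problems


if __name__ == "__main__":
    layout = {
        "app-az1": "10.0.1.0/24",
        "app-az2": "10.0.2.0/24",
        "odaa-delegated": "10.0.10.0/24",
    }
    print(validate_local_vnet("10.0.0.0/16", layout) or "layout is consistent")
```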

Key Considerations

- Latency: Provides best (lowest) network latency between co-located applications and the database.

- Cost: Most cost-effective network topology because of the absence of VNet peering charges.

- Security: Relies on subnet-level isolation using Azure NSGs (for apps) and OCI Security Lists/NSGs (for the database). Offers less network segmentation than designs that use separate VNets (such as Hub-Spoke). All applications share the same VNet address space, which might be a concern in highly segmented environments.

- Manageability: Relatively simple network configuration and management.

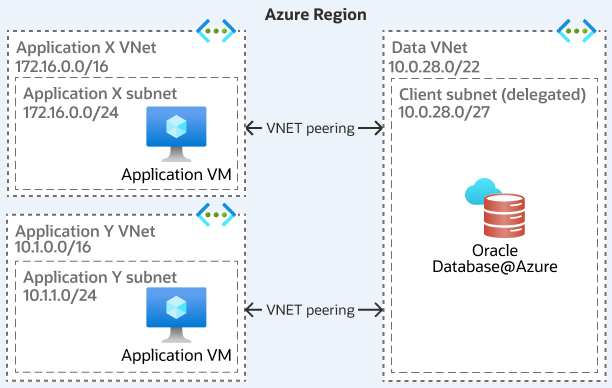

VNet Peering Topology

This topology establishes direct connections between Application VNets and the VNet hosting the Oracle Database@Azure delegated subnet using Azure VNet Peering. This setup doesn't necessarily rely on a central Hub VNet for transit between these specific VNets. The configuration can range from a simple peering between the Oracle Database@Azure VNet and a single Application VNet to a more complex mesh where multiple Application VNets are directly peered with the Oracle Database@Azure VNet.

The following architecture shows a local VNet peering topology:

Use Cases

This topology is the preferred choice in the following scenarios:

- Single Application Deployment: Direct peering between the application VNet and the Oracle Database@Azure VNet for low-latency communication.

- Multi-Tier Application Architecture: Direct peering between VNets of different application tiers and the Oracle Database@Azure VNet for efficient communication.

- Microservices Architecture: Direct peering between multiple microservice VNets and the Oracle Database@Azure VNet for scalable deployment.

- Development and Testing Environments: Direct peering between development, testing, and production VNets and the Oracle Database@Azure VNet for isolated access.

- Disaster Recovery Setup: Direct peering between the disaster recovery VNet and the Oracle Database@Azure VNet for efficient failover.

- Data Analytics and Reporting: Direct peering between the analytics VNet and the Oracle Database@Azure VNet for timely data insights.

- Compliance and Regulatory Requirements: Direct peering between compliant VNets and the Oracle Database@Azure VNet for secure and efficient access.

Component Breakdown

This topology uses the following components:

- Azure Components:

  - VNet (hosting Oracle Database@Azure)

  - Azure Subnet (Delegated)

  - VNet(s) (hosting Applications)

  - Azure Subnet(s) (Application)

  - VNet Peering connection(s) (Regional)

  - Azure Route Table(s) (Typically system routes suffice for direct peering unless specific overrides are needed)

  - Azure Private DNS Zone(s) (Linked across peered VNets for resolution)

  - (Optional for Global) VPN Gateway / Azure Virtual WAN

- Implicit OCI Components:

  - Service VCN, Client Subnet, Backup Subnet, Control Plane Link, OCI Private DNS, OCI Security Lists/NSGs.

Key Considerations

- Latency: Offers low latency for regional peering, comparable to the Local VNet topology as traffic flows directly over the Azure backbone between peered VNets. Latency for global peering (via VPN/vWAN) is much higher, dictated by the inter-region distance.

- Cost: VNet peering incurs ingress and egress data transfer charges between the peered VNets. In a mesh scenario with many VNets peered to the Oracle Database@Azure VNet, these costs can accumulate based on traffic volume.

- Security: Network security relies on Azure NSGs applied to the Application VNets/subnets and the OCI Security Lists/NSGs applied to the Oracle Database@Azure delegated subnet/VNICs. This model lacks the centralized inspection point offered by the Hub-Spoke topology. Security policy management is distributed across the peered VNets.

- Manageability: Configuration is simpler than Hub-Spoke for a small number of peerings. However, managing a large number of direct peering relationships (a "full mesh") can become complex in terms of tracking connections, managing potential IP overlaps (though peering requires non-overlapping IPs), and implementing consistent routing or security policies.

- Scalability: While peering itself is scalable, the operational complexity of managing numerous direct peerings can be a challenge compared to the structured approach of Hub-Spoke.

- Global Connectivity Limitation: The inability to use direct Global VNet Peering for Oracle Database@Azure traffic without extra tunneling (VPN) or services (vWAN) is a significant constraint for designing simple, direct cross-region application access.
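Two of the manageability points above lend themselves to a quick check: peered VNets need non-overlapping address spaces, and the number of peering relationships grows with the number of VNets. The sketch below is illustrative only; the function names and address ranges are hypothetical.

```python
import ipaddress


def peering_conflicts(db_vnet_cidr, app_vnets):
    """Return the names of application VNets whose address space overlaps the database VNet."""
    db = ipaddress.ip_network(db_vnet_cidr)
    return [
        name
        for name, cidr in app_vnets.items()
        if ipaddress.ip_network(cidr).overlaps(db)
    ]


def peering_count(app_vnet_count, full_mesh=False):
    """Count peering relationships to track.

    Direct peering to the database VNet needs one peering per application VNet.
    A full mesh among all VNets (applications plus database) needs n * (n - 1) / 2.
    """
    if not full_mesh:
        return app_vnet_count
    total_vnets = app_vnet_count + 1
    return total_vnets * (total_vnets - 1) // 2


if __name__ == "__main__":
    apps = {"app1": "10.20.0.0/16", "app2": "10.10.128.0/17"}
    print("conflicts:", peering_conflicts("10.10.0.0/16", apps))
    print("direct peerings:", peering_count(4))
    print("full-mesh peerings:", peering_count(4, full_mesh=True))
```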

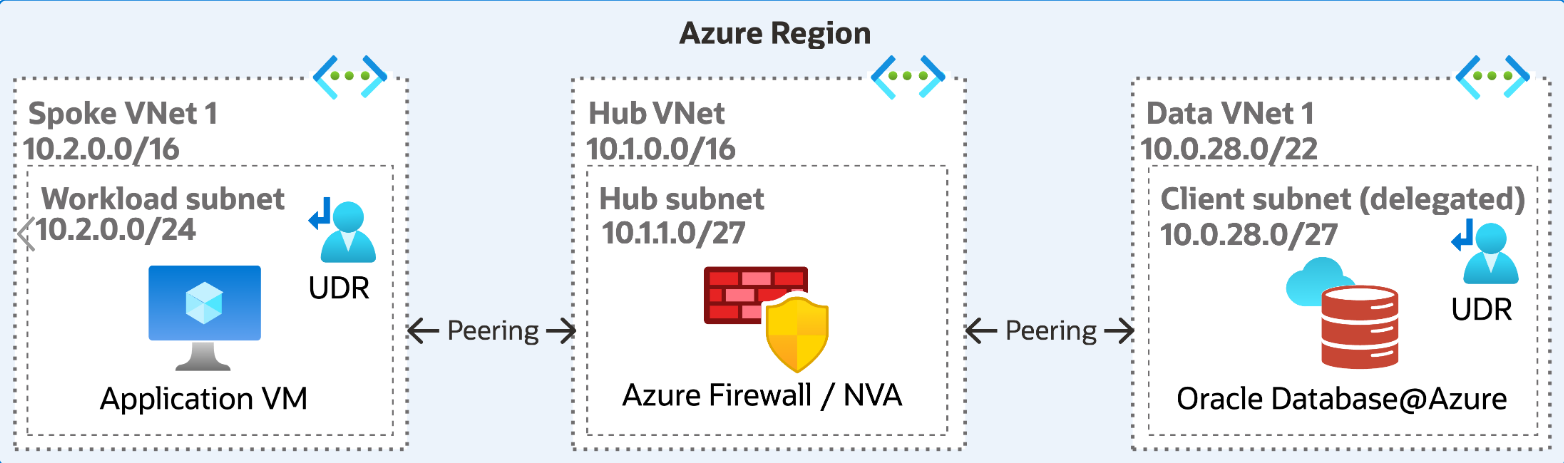

Hub-and-Spoke VNet Peering Topology

The Hub VNet functions as a centralized point of connectivity that facilitates communication between applications and databases. The spoke VNets connect to the Hub through VNet peering and require an Azure route table (UDR) to route traffic to the Hub NVA. Because traffic takes an additional hop, this topology is best suited to applications that aren't latency-sensitive. Also consider that VNet peering incurs ingress and egress costs.

The following architecture shows a hub-and-spoke VNet peering topology with Azure Firewall or NVA:

Use Cases

- Centralized Security Management: Use a hub VNet to centralize security services such as firewalls and VPN gateways for multiple spoke VNets.

- Shared Services Access: Enable shared services such as DNS and Active Directory in the hub VNet accessible by all spoke VNets.

- Simplified Network Management: Manage network policies and routing centrally in the hub VNet for consistent application across all spoke VNets.

- Hybrid Cloud Integration: Connect on-premises networks to the hub VNet for seamless integration with Azure resources in spoke VNets.

- Multi-Region Connectivity: Use the hub VNet to facilitate global connectivity between regional spoke VNets for high availability and resilience.

- Resource Isolation: Isolate different departments or projects in separate spoke VNets while maintaining centralized control in the hub VNet.

- Cost Optimization: Optimize operational costs by centralizing expensive network resources in the hub VNet shared by several spoke VNets.

Component Breakdown

- Azure Components:

  - Hub VNet (hosting shared services)

  - Spoke VNet(s) (for Applications)

  - Spoke VNet (for Oracle Database@Azure, containing Delegated Subnet)

  - Azure Subnet (Delegated)

  - Azure Subnet(s) (Application)

  - Azure Subnet (GatewaySubnet in Hub)

  - Azure Subnet (AzureFirewallSubnet or NVA subnet in Hub)

  - VNet Peering (Spokes to Hub)

  - Azure Route Table(s) with UDRs (on Spokes, Hub subnets)

  - Azure Firewall or third-party NVA (in Hub)

  - Azure VPN/ExpressRoute Gateway (Optional, in Hub)

  - Azure Private DNS Zone(s) (linked to VNets)

- Implicit OCI Components:

  - Service VCN, Client Subnet, Backup Subnet, Control Plane Link, OCI Private DNS, OCI Security Lists/NSGs.

Key Considerations

- Centralization & Control: Provides excellent centralization for security policy enforcement, connectivity management (on-prem, cross-region), and shared services deployment.

- Latency: Introduces more network latency compared to the Local VNet topology because of the extra hop required through the Hub VNet and potentially the Firewall/NVA. Each hop adds delay, which can be significant for chatty applications. Minimizing hops by using direct spoke-to-spoke peering (if feasible and secure) or avoiding unnecessary NVA traversal is recommended where performance is critical.

- Cost: Incurs VNet peering charges for all traffic transiting the Hub. Azure Firewall or NVA licensing and throughput add significant costs, as can any gateways deployed in the Hub.

- Security: Enhanced security posture because of centralized inspection and segmentation. However, the PMTUD complexities require careful configuration to avoid connectivity issues.

- Manageability: More complex to set up and manage because of the increased number of VNets, peering configurations, and intricate UDR management required across the topology.
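To see why the extra hop matters most for chatty applications, the sketch below multiplies the per-round-trip delay by the number of round trips in a transaction. All latency figures are hypothetical placeholders, not measurements of Oracle Database@Azure or Azure Firewall; substitute values from your own tests.

```python
def transaction_time_ms(round_trips, base_rtt_ms, extra_hop_ms=0.0):
    """Total network time for a transaction that needs a given number of round trips."""
    return round_trips * (base_rtt_ms + extra_hop_ms)


if __name__ == "__main__":
    ROUND_TRIPS = 500      # hypothetical: a chatty batch job
    BASE_RTT_MS = 1.0      # hypothetical: application to database in the same VNet
    EXTRA_HOP_MS = 0.5     # hypothetical: added by the Hub firewall or NVA

    local = transaction_time_ms(ROUND_TRIPS, BASE_RTT_MS)
    hub = transaction_time_ms(ROUND_TRIPS, BASE_RTT_MS, EXTRA_HOP_MS)
    print(f"local VNet: {local} ms, via hub: {hub} ms")
```

A small per-hop delay that is negligible for a single query becomes a noticeable share of total time once it is paid on every round trip.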

Routing and Security

Routing and security are defining characteristics of this topology:

- User-Defined Routes (UDRs): UDRs are essential to control traffic flow.

  - On Application Spokes: UDRs are needed to force traffic destined for the Oracle Database@Azure Spoke's address range (or potentially all non-local traffic) to the internal IP address of the Azure Firewall/NVA in the Hub VNet as the next hop.

  - On the Oracle Database@Azure Spoke (including delegated subnet): UDRs are required to route return traffic back toward the Application Spokes through the Hub Firewall/NVA. The UDR prefix specificity rule must be followed: the UDR prefix must be equal to or more specific than the delegated subnet CIDR.

  - On the Hub's Gateway Subnet (if applicable): UDRs might direct traffic arriving from on-premises toward the Firewall/NVA before it reaches the spokes.

- NVA/Azure Firewall: The central security appliance in the Hub VNet inspects and filters traffic flowing between Application Spokes and the Oracle Database@Azure Spoke, enforcing organizational security policies. It can also provide Network Address Translation (NAT) if required.

- Path MTU Discovery (PMTUD) Challenges: A significant complication arises when routing Oracle Database@Azure traffic through NVAs/Firewalls. The underlying Oracle Database@Azure network infrastructure often uses a smaller MTU. PMTUD relies on ICMP "Fragmentation Needed" messages (Type 3, Code 4) to discover the path MTU, from which the sender derives the correct Maximum Segment Size (MSS). However:

  - Azure's default NSG rules block ICMP between non-directly peered VNets (the Oracle Database@Azure service VNet isn't directly peered with Application Spokes).

  - Firewalls/NVAs often filter ICMP traffic by default.

  - Asymmetric routing in multi-node NVAs or Azure Firewall (which lacks session persistence configuration) can cause return ICMP packets to be dropped by a different firewall instance than the one that processed the original flow.

  - Mitigation: Requires explicitly allowing inbound ICMP from the Oracle Database@Azure router's source IP range (for example, 100.64.0.0/10) on the application VM's NSG, ensuring the firewall/NVA allows the necessary ICMP traffic bi-directionally, and configuring session persistence on NVAs where possible. If using Azure Firewall or NVAs without session persistence, manually lowering the MTU on the client VMs might be the only viable workaround. Deploying a simple NVA (Linux router) within the Oracle Database@Azure VNet itself has also been proposed as a solution for certain routing limitations.
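Two of the rules above reduce to small calculations. The sketch below reads the UDR specificity rule literally as a comparison of prefix lengths, and derives the TCP MSS from an MTU by subtracting the minimum IPv4 and TCP header sizes (20 bytes each, no options). The function names and sample values are hypothetical, and the lower MTU shown is a placeholder rather than a documented Oracle Database@Azure value.

```python
import ipaddress

IPV4_HEADER_BYTES = 20  # minimum IPv4 header, no options
TCP_HEADER_BYTES = 20   # minimum TCP header, no options


def udr_prefix_is_specific_enough(udr_prefix, delegated_subnet_cidr):
    """True when the UDR prefix is at least as long as the delegated subnet's prefix."""
    udr = ipaddress.ip_network(udr_prefix)
    delegated = ipaddress.ip_network(delegated_subnet_cidr)
    return udr.prefixlen >= delegated.prefixlen


def tcp_mss_for_mtu(mtu_bytes):
    """Largest TCP payload that fits in one IPv4 packet of the given MTU."""
    return mtu_bytes - IPV4_HEADER_BYTES - TCP_HEADER_BYTES


if __name__ == "__main__":
    delegated = "10.2.0.0/24"  # hypothetical delegated subnet
    for prefix in ("10.2.0.0/16", "10.2.0.0/24", "10.2.0.64/27"):
        print(prefix, "ok" if udr_prefix_is_specific_enough(prefix, delegated) else "too broad")

    # 1500 is the common Ethernet MTU; 1400 is a hypothetical lowered client MTU.
    for mtu in (1500, 1400):
        print(f"MTU {mtu} -> MSS {tcp_mss_for_mtu(mtu)}")
```

If PMTUD messages are dropped along the path, a sender that assumes the larger MSS keeps emitting segments that never arrive, which is why lowering the client MTU works as a fallback.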

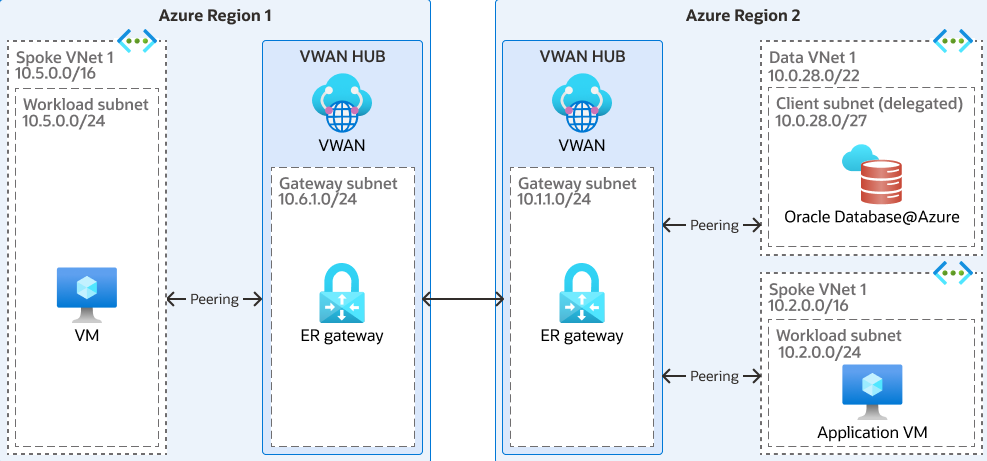

Global Connectivity Between Regions

To implement cross-region connectivity for Oracle Database@Azure, set up the Virtual WAN (VWAN) Hub in both regions to establish seamless global connectivity. Within the Hub, configure a firewall to inspect all traffic, ensuring the security of the Hub. The VWAN Hub centrally manages routing and streamlines network operations, providing a unified approach to network management. However, it's important to consider that using this topology incurs additional costs and might introduce latency.

The following architecture shows global connectivity between regions:

Use Cases

- Global Data Access: Enable seamless access to Oracle Database@Azure across multiple regions for global applications.

- Disaster Recovery: Implement cross-region connectivity to ensure robust disaster recovery and failover capabilities.

- High Availability: Achieve high availability by distributing Oracle Database@Azure instances across different regions.

- Performance Optimization: Optimize application performance by connecting regional resources to the nearest Oracle Database@Azure instance.

- Compliance and Data Sovereignty: Meet compliance and data sovereignty requirements by hosting data in specific regions while maintaining connectivity.

- Centralized Management: Use cross-region connectivity to centralize network management and streamline operations.

- Cost Efficiency: Balance operational costs by leveraging regional resources and connectivity for Oracle Database@Azure.

Component Breakdown

- Azure Components:

  - VNets (in Primary and Standby Regions)

  - Azure Subnets (Delegated in each VNet)

  - Connectivity Service: Azure Virtual WAN (Hubs, Connections) OR VPN Gateways OR VNet Peering (Regional, potentially Global with VPN overlay)

  - Azure Route Table(s) with UDRs (to direct traffic across regions via chosen path)

  - Azure Private DNS Zone(s) (requiring a strategy for cross-region name resolution, possibly via vWAN DNS features or conditional forwarding)

- Implicit OCI Components:

  - Service VCNs (in paired OCI Primary and Standby Regions)

  - Client Subnets, Backup Subnets

  - Control Plane Links

  - OCI Security List(s) / NSG(s)

  - OCI Private DNS Zone(s)

  - OCI DRGs, potentially OCI Remote Peering Gateways (RPGs) if using OCI network path for replication.

- Oracle Components:

  - Oracle Data Guard configuration (Primary/Standby roles, redo transport settings).

Key Considerations

- Latency: Inter-region network latency is the primary factor influencing Data Guard performance and the choice of replication mode (Asynchronous recommended). The specific connectivity mechanism (vWAN, VPN, OCI Path) will impact the actual latency achieved.

- Cost: Data egress charges between Azure regions can be substantial for Data Guard replication traffic flowing over the Azure network. Costs associated with vWAN, VPN Gateways, or potentially the OCI path (where the first 10 TB/month of OCI cross-region traffic is reportedly free) must be factored in.

- Bandwidth: The cross-region network link must have enough bandwidth to accommodate the peak redo generation rate of the primary database to avoid replication lag.

- RPO/RTO: The design must align with the organization's Recovery Point Objective (RPO) and Recovery Time Objective (RTO). Asynchronous replication implies RPO > 0. RTO depends on the failover mechanism (manual or automated with Fast-Start Failover) and the time taken to switch roles and redirect applications.

- Complexity: Implementing and managing cross-region networking and Data Guard adds significant complexity compared to single-region deployments. Failover and switchover procedures must be well-defined and regularly tested.

- Connectivity Path Choice: Deciding whether to route Data Guard traffic via the default Azure path or explicitly via the OCI network path is a critical design decision. The Azure path might be simpler to configure initially but is subject to standard Azure inter-region performance and costs. The OCI path, while potentially offering better performance or different cost structures, requires more intricate routing configurations involving both Azure UDRs and potentially OCI routing policies to override the default Azure path. This decision requires careful evaluation based on performance tests, cost analysis, and operational capabilities.
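The bandwidth consideration above can be turned into a back-of-the-envelope sizing check. This is a minimal sketch, not Oracle sizing guidance: it converts a peak redo rate in MB/s to link bandwidth in Mbit/s with a headroom factor, and estimates the redo backlog that builds up while generation exceeds what the link can carry. The function names, the 25% headroom default, and the sample figures are hypothetical.

```python
def required_bandwidth_mbps(peak_redo_mb_per_s, headroom=0.25):
    """Link bandwidth in Mbit/s needed to carry the peak redo rate plus headroom."""
    return peak_redo_mb_per_s * 8 * (1 + headroom)


def redo_backlog_mb(peak_redo_mb_per_s, link_mbps, seconds):
    """Redo (in MB) that accumulates unsent when redo generation outpaces the link."""
    link_mb_per_s = link_mbps / 8
    return max(0.0, (peak_redo_mb_per_s - link_mb_per_s) * seconds)


if __name__ == "__main__":
    PEAK_REDO_MB_PER_S = 25  # hypothetical peak redo generation rate
    print("required Mbit/s:", required_bandwidth_mbps(PEAK_REDO_MB_PER_S))
    print("backlog after 60 s on a 100 Mbit/s link (MB):",
          redo_backlog_mb(PEAK_REDO_MB_PER_S, 100, 60))
```

A growing backlog translates directly into replication lag, and with asynchronous transport into a larger effective RPO.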

Routing and Security

- Routing:

  - Centralized Routing Management: The VWAN Hub centrally manages routing, simplifying network operations and ensuring consistent routing policies across regions.

  - Optimized Path Selection: VWAN Hub selects the most efficient paths for data transmission between regions, reducing latency and improving performance.

  - Dynamic Routing Updates: Automatically updates routing tables to adapt to network changes and maintain optimal connectivity.

  - Inter-Region Connectivity: Facilitates seamless connectivity between VNets in different regions, enabling global access to Oracle Database@Azure.

  - Route Propagation: Propagates routes between connected VNets and on-premises networks, ensuring comprehensive network integration.

- Security:

  - Firewall Configuration: Configure firewalls within the VWAN Hub to inspect and filter all traffic, enhancing security.

  - Traffic Inspection: Implement deep packet inspection to monitor and control data flow between regions, preventing unauthorized access.

  - Network Segmentation: Use VWAN Hub to segment network traffic, isolating sensitive data and applications for improved security.

  - Secure Connectivity: Establish secure connections using VPN or ExpressRoute to protect data in transit between regions.

  - Access Control: Apply stringent access control policies to manage permissions and ensure only authorized entities can access Oracle Database@Azure.

  - Threat Detection: Integrate threat detection and response mechanisms within the VWAN Hub to identify and mitigate security threats in real time.

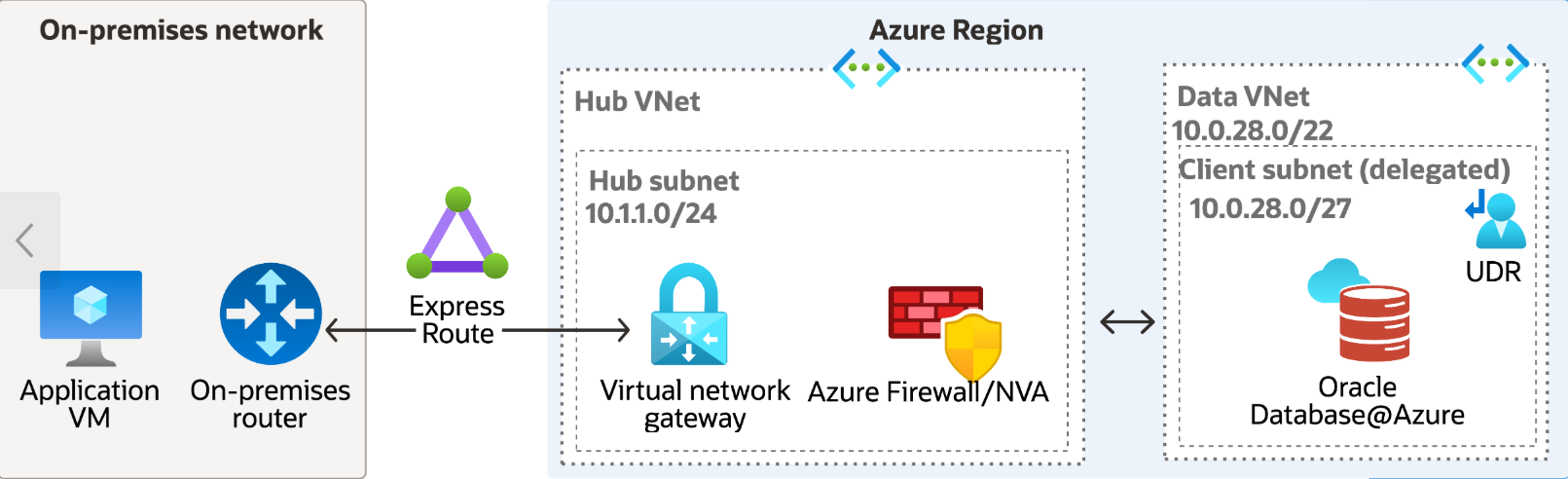

On-Premises Network with Hub-and-Spoke

Connecting on-premises data centers or user networks securely to applications in Azure that use Oracle Database@Azure is a common requirement. In this topology, the gateway learns the routes through transitivity, and the database spoke VNet learns the route through peering. This topology incurs additional cost and adds latency. Azure provides two primary mechanisms for establishing this hybrid connectivity:

- Azure VPN Gateway: This service enables the creation of secure, encrypted Site-to-Site (S2S) IPsec/IKE VPN tunnels over the public internet between an on-premises VPN device and an Azure VPN Gateway deployed in an Azure VNet (typically a Hub VNet in enterprise scenarios). Oracle Database@Azure supports connectivity through Active/Passive VPN gateways and Active/Active Zone-Redundant gateways, but notably not standard Active/Active VPN gateways.

- Azure ExpressRoute: This service provides a private, dedicated connection between the on-premises network and the Microsoft global network through a connectivity provider. An ExpressRoute circuit terminates at an ExpressRoute Gateway deployed in an Azure VNet (again, typically a Hub VNet). Oracle Database@Azure supports connectivity via both Local and Global ExpressRoute circuits. However, ExpressRoute FastPath, an optimization that bypasses the gateway for certain traffic, is not supported for Oracle Database@Azure connectivity.
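The support statements in the two bullets above can be captured as a small lookup for use in a design review checklist. This is only a sketch: the string labels are hypothetical names invented here, and the table reflects nothing beyond what the text above states.

```python
# Support matrix transcribed from the two bullets above.
# Keys are (service, option) pairs using hypothetical labels.
SUPPORT_MATRIX = {
    ("vpn", "active-passive"): True,
    ("vpn", "active-active-zone-redundant"): True,
    ("vpn", "active-active-standard"): False,
    ("expressroute", "local-circuit"): True,
    ("expressroute", "global-circuit"): True,
    ("expressroute", "fastpath"): False,
}


def is_supported(service, option):
    """Return True or False for listed combinations, or None when the text is silent."""
    return SUPPORT_MATRIX.get((service, option))


if __name__ == "__main__":
    for key in SUPPORT_MATRIX:
        print(key, "->", "supported" if is_supported(*key) else "not supported")
```

Returning `None` for unlisted combinations keeps the sketch from implying support status that the text does not state.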

The following architecture shows a hub-and-spoke on-premises topology:

Use Cases

- Hybrid Cloud Integration: Connect on-premises networks to the hub VNet for seamless integration with Azure resources in spoke VNets.

- Centralized Security Management: Use the hub VNet to centralize security services for both on-premises and Azure resources.

- Data Migration: Facilitate secure and efficient data migration from on-premises databases to Oracle Database@Azure via the hub VNet.

- Disaster Recovery: Implement disaster recovery solutions by connecting on-premises networks to the hub VNet for failover to Oracle Database@Azure.

- Compliance and Regulatory Requirements: Ensure compliance by securely connecting on-premises networks to the hub VNet for controlled access to Oracle Database@Azure.

- Performance Optimization: Optimize application performance by connecting on-premises networks to the nearest hub VNet for efficient access to Oracle Database@Azure.

- Resource Isolation: Isolate sensitive on-premises resources while maintaining secure connectivity to Oracle Database@Azure through the hub VNet.

Component Breakdown

- Azure Components:

  - Hub VNet (Common, hosting Gateway and optionally Firewall)

  - Oracle Database@Azure Spoke VNet

  - Azure Subnet (Delegated)

  - Azure Subnet (GatewaySubnet in Hub)

  - Azure Subnet (AzureFirewallSubnet or NVA subnet in Hub, optional)

  - Azure VPN Gateway or Azure ExpressRoute Gateway (in Hub)

  - VNet Peering (Hub to Oracle Database@Azure Spoke, with Gateway Transit enabled)

  - Azure Route Table(s) with UDRs (on Delegated Subnet, GatewaySubnet, potentially App Subnets)

  - Azure Firewall or NVA (Optional, in Hub)

- Implicit OCI Components:

  - Service VCN, Client Subnet, Backup Subnet, Control Plane Link, OCI Security Lists/NSGs.

- On-Premises Components:

  - Customer Gateway / VPN Device / Router

  - Firewall

  - ExpressRoute Circuit (if using ExpressRoute)

Key Considerations

- Bandwidth & Latency: ExpressRoute generally offers higher, more predictable bandwidth and lower latency compared to internet-based VPNs. The choice depends on application performance requirements and budget.

- Reliability & SLA: ExpressRoute typically provides higher availability SLAs compared to VPN Gateways. Redundancy is critical; use multiple VPN tunnels, zone-redundant gateways, or dual ExpressRoute circuits connected to different locations for high availability.

- Security: VPN provides IPsec encryption over the internet. ExpressRoute provides a private connection, bypassing the public internet; encryption (for example, MACsec or IPsec tunnels over the private peering) can be added if required. Firewalls on-premises and potentially in the Azure Hub provide essential traffic filtering.

- Routing Complexity: Requires careful configuration of routing protocols (BGP is common for ExpressRoute and dynamic VPNs) or static routes on-premises, Azure UDRs, and VNet Peering Gateway Transit settings. Misconfigurations can lead to connectivity failures or suboptimal routing.

- Cost: Includes costs for Azure VPN or ExpressRoute Gateways, ExpressRoute circuit charges (port speed, data plan, provider fees), and associated data transfer costs.
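When weighing VPN against ExpressRoute on bandwidth, a rough transfer-time estimate can help frame the decision, for example for an initial data load from on-premises. The sketch below is plain arithmetic using decimal units (1 GB = 8,000 megabits); the link speeds, data size, and the 80% utilization default are hypothetical and do not represent any Azure service tier.

```python
def transfer_seconds(data_gb, link_mbps, utilization=0.8):
    """Approximate time to move data_gb over a link, given usable utilization (0-1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in the range (0, 1]")
    megabits = data_gb * 8000  # decimal units: 1 GB = 8,000 megabits
    return megabits / (link_mbps * utilization)


if __name__ == "__main__":
    DATA_GB = 1000  # hypothetical data set size
    for label, mbps in (("hypothetical 200 Mbit/s VPN", 200),
                        ("hypothetical 1 Gbit/s circuit", 1000)):
        hours = transfer_seconds(DATA_GB, mbps) / 3600
        print(f"{label}: about {hours:.1f} hours")
```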

Routing and Security

- Routing:

  - Centralized Routing Management: The hub VNet centrally manages routing, ensuring consistent routing policies across both Azure regions and on-premises networks.

  - Optimized Path Selection: The hub VNet selects the most efficient paths for data transmission between regions and on-premises networks, reducing latency and improving performance.

  - Dynamic Routing Updates: Automatically updates routing tables to adapt to network changes, maintaining optimal connectivity between on-premises and Azure resources.

  - Inter-Region Connectivity: Facilitates seamless connectivity between VNets in different regions and on-premises networks, enabling global access to Oracle Database@Azure.

  - Route Propagation: Propagates routes between connected VNets, on-premises networks, and other regions, ensuring comprehensive network integration.

  - Hybrid Network Integration: Integrates on-premises networks with Azure VNets through the hub, enabling unified management and streamlined operations.

- Security:

  - Firewall Configuration: Configure firewalls within the hub VNet to inspect and filter all traffic, enhancing security for both Azure and on-premises networks.

  - Traffic Inspection: Implement deep packet inspection to monitor and control data flow between regions and on-premises networks, preventing unauthorized access.

  - Network Segmentation: Use the hub VNet to segment network traffic, isolating sensitive data and applications for improved security across regions and on-premises networks.

  - Secure Connectivity: Establish secure connections using VPN or ExpressRoute to protect data in transit between on-premises networks and Azure regions.

  - Access Control: Apply stringent access control policies to manage permissions and ensure only authorized entities can access Oracle Database@Azure from on-premises networks.

  - Threat Detection: Integrate threat detection and response mechanisms within the hub VNet to identify and mitigate security threats in real time across regions and on-premises networks.

  - Compliance and Regulatory Adherence: Ensure compliance with regulatory requirements by implementing robust security measures within the hub VNet for both Azure and on-premises connectivity.

Resources

To learn more about Azure network topologies, see Network planning for Oracle Database@Azure in the Microsoft documentation library.