Configuring Spark Structured Streaming using Workflows

You can configure a streaming task inside a workflow to process streaming data continuously.

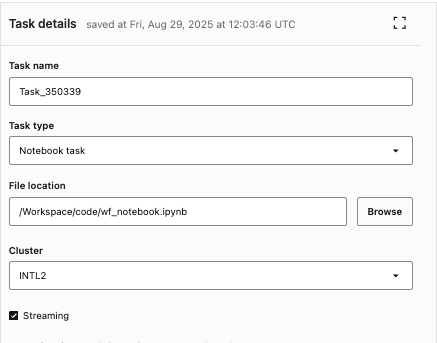

To use streaming with workflows in Oracle AI Data Platform Workbench, first create a job, and then add a Notebook or Python task to that job.

After a streaming task starts, it runs until you stop it manually. During regular monthly maintenance, the service stops and restarts the streaming task automatically; no action is required on your part.