About This Recipe

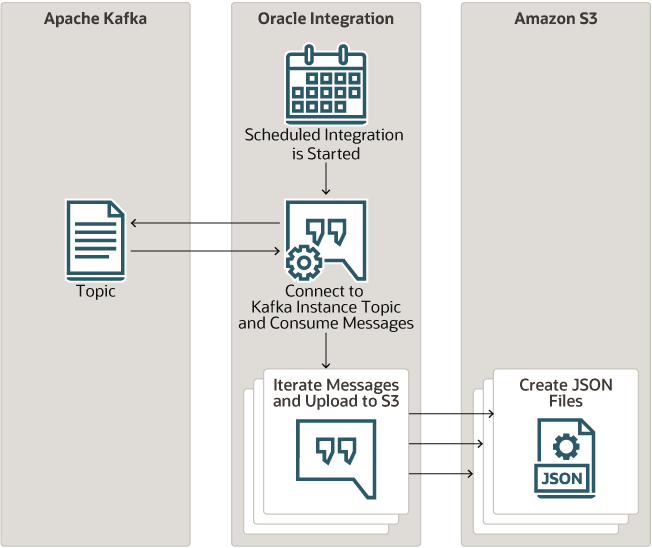

This recipe exports messages from an Apache Kafka topic to an Amazon S3 bucket as JSON files. Each Apache Kafka message is exported as a JSON file that contains the contents of the message.

To use the recipe, you must install it and configure the connections and other resources within it. You can then activate and run the integration flow manually or specify an execution schedule for it. When triggered, the integration flow queries the specified Apache Kafka topic and loads a predefined number of messages. The integration then iterates over the messages and exports each one as a JSON file to the specified Amazon S3 bucket.

System and Access Requirements

- Oracle Integration, Version 21.2.1 or higher
- Amazon Web Services (AWS) with Amazon S3
- Apache Kafka
- Confluent
- An account in AWS with Administrator role
- An account in Confluent with Administrator role

Recipe Schema

This section provides an architectural overview of the recipe.

Description of the illustration apachekafka-amazons3.png

When the integration flow of the recipe is triggered by an execution schedule or a manual submission, it queries the topic in the Apache Kafka instance for messages. If messages are present in the specified topic, the integration fetches a predefined number of them. It then iterates over the fetched messages and exports them to Amazon S3. For each exported message, a corresponding JSON file that contains the contents of the message is created in the specified Amazon S3 bucket.
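
The fetch-then-iterate logic described above can be sketched in a few lines of Python. This is only an illustration of the control flow, not the recipe's actual implementation, which is built from Oracle Integration adapters. The function name `export_messages`, the `put_object` callable, the `key_prefix` default, and the `message-<n>.json` naming scheme are all hypothetical, and a plain list and dictionary stand in for the Apache Kafka topic and the Amazon S3 bucket.

```python
import json
from typing import Callable, Dict, Iterable, List


def export_messages(
    messages: Iterable[Dict],
    max_messages: int,
    put_object: Callable[[str, bytes], None],
    key_prefix: str = "kafka-export",  # hypothetical prefix, not from the recipe
) -> List[str]:
    """Write up to max_messages messages, one JSON object per message.

    Returns the list of object keys that were written.
    """
    written: List[str] = []
    for index, message in enumerate(messages):
        if index >= max_messages:
            break  # stop once the predefined number of messages is reached
        # Hypothetical naming scheme; the recipe's real file names may differ.
        key = f"{key_prefix}/message-{index}.json"
        put_object(key, json.dumps(message).encode("utf-8"))
        written.append(key)
    return written


# In-memory stand-ins (assumptions) for the Kafka topic and the S3 bucket.
topic = [{"id": 1, "body": "a"}, {"id": 2, "body": "b"}, {"id": 3, "body": "c"}]
bucket: Dict[str, bytes] = {}

keys = export_messages(topic, max_messages=2, put_object=bucket.__setitem__)
```

With three messages in the stand-in topic and a limit of two, only the first two messages are written, each as its own JSON object. An empty topic results in no objects being written.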