Before You Begin

This 60-minute tutorial demonstrates some good practices for training and improving your intents.

Background

In this lab, you'll become familiar with tips and techniques for training and testing your skills. Specifically, you will add utterances to a skill to train it to understand several use cases. Then you'll work with a colleague to iteratively test your skill, adding more test data as you go along to fine-tune intent resolution.

What Do You Need?

- Access to an Oracle Digital Assistant instance.

- The tutorial's starter skill (Pizzeria_Starter-v3-2.zip).

- Optionally (but highly recommended!), a test partner.

Get the Pizzeria_Starter Skill

We'll start with a skill that has been pre-populated with a few intents. You will first import the skill and then clone it.

If you are doing this tutorial with a partner, just one of you needs to import the skill. (In fact, once one of you has imported it, it's not possible for the other to do the same.)

Import the Starter Skill

- If you haven't done so already, download Pizzeria_Starter-v3-2.zip.

- Log into Oracle Digital Assistant.

- Click the menu icon in the top left corner to open the side menu.

- Expand Development and then click Skills.

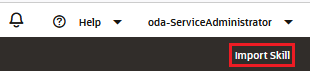

- Click Import Skill (located at the upper right of the page).

- Browse to, and then select, Pizzeria_Starter-v3-2.zip. Then click Open.

Note:

It's possible that the import will fail because someone has already imported the starter skill. In that case, you'll just use the copy of the skill that was already imported.

Clone the Starter Skill

To make sure that your work doesn't collide with others doing this tutorial, you will work with a clone of the skill.

To create a clone of the skill:

- On the Skills page, within the tile for the Pizzeria_Starter skill, click the menu icon and then select Clone.

- In the Display Name field, enter <YourInitials>_Pizzeria_Starter. For example: AB_Pizzeria_Starter.

- Select the Open cloned skill afterwards checkbox.

- Leave the other fields unchanged.

- Click Clone.

The clone of the skill should open on the Intents page.

Enable Insights

We'll later use the Insights Retrainer to retrain the skill to properly handle phrases that it misclassifies.

The Insights feature should already be enabled in your skill. To make sure:

- In the left navigation of your skill, click the Settings icon.

- Make sure that the General tab is selected.

- Scroll down to the Enable Insights switch and make sure that it is in the On position.

Create Utterances and Do a Round of Batch Testing

The pizza.reg.FileComplaint, pizza.reg.OpenFranchise, and pizza.reg.TrackOrder intents don't have any example utterances yet, so we'll need to create those. Along the way, we'll create some extra utterances and use those as testing data to help measure the success of the skill in understanding a conversation with a user.

Here's a rundown on the purpose of these intents:

- pizza.reg.FileComplaint: Used when the customer has an issue that needs to be resolved, probably by a live agent.

- pizza.reg.OpenFranchise: Allows the customer to inquire about opening up a franchise to sell pizzas online.

- pizza.reg.TrackOrder: Used when the customer wants to check the status of an order, cancel an order, or check the progress of an order.

Read Up on How to Write Good Utterances

To get off to the best possible start with your training model, you may want to spend a few minutes reading up on how to create a robust set of initial utterances. See this TechExchange article on best practices for writing utterances.

Create Utterances

- In Notepad or a similar text editor, write phrases that you think would be representative utterances for the pizza.reg.FileComplaint, pizza.reg.OpenFranchise, and pizza.reg.TrackOrder intents. Write 10 utterances for each of these intents.

Add Utterances to the Intents

For each intent, add seven of the utterances you have just written. Put the remaining three utterances for each intent (nine in total) aside.

- Select

in the left navbar.

in the left navbar. - Select the

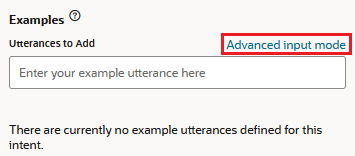

pizza.reg.FileComplaintintent. - In the Examples section, click Advanced input mode to expand the input field.

- In the Enter your example utterances here field, paste the seven utterances that you have written for

pizza.reg.FileComplaintand then click Create. - Repeat Steps 2 through 4 to add training utterances to the

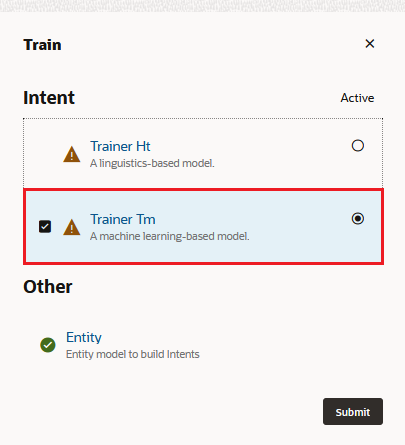

pizza.reg.OpenFranchiseandpizza.reg.TrackOrderintents. - When you've added all of the utterances, click Train.

- Leave Trainer Tm selected, and click Submit.

Create Test Cases

Now you'll create test cases using the nine utterances that you did not add to the skill's training set. Each test case has a test utterance and the intent that it's expected to resolve to. These test cases are compiled into a test suite. For this tutorial, the test suite is called IntentsTutorial.

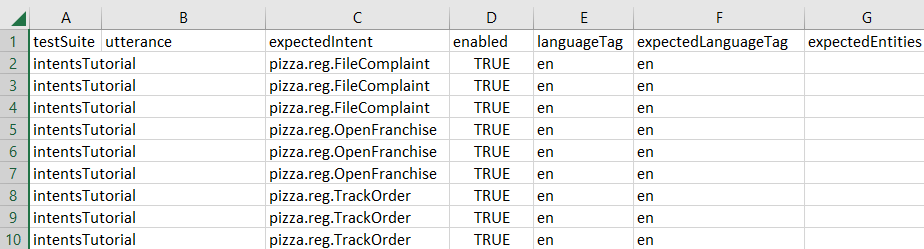

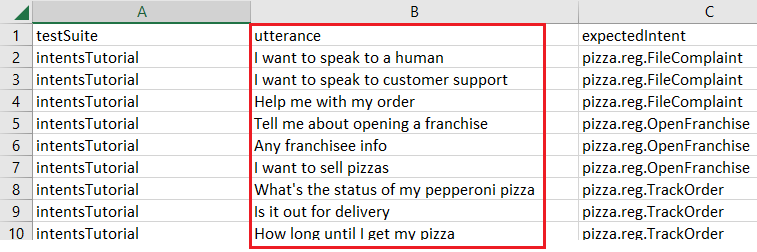

You add a batch of test cases using a comma-delimited CSV file. You'll create this file using batchtest-starter.txt. The header row of this file has the following fields:

testSuite,utterance,expectedIntent,enabled,languageTag,expectedLanguageTag,expectedEntities

- testSuite: The name of the test suite. All test cases must belong to a test suite. For this tutorial, the test suite is named intentsTutorial.

- utterance: The utterance that you are testing. This utterance must not belong to the training set.

- expectedIntent: The intent that you expect the utterance to match with.

- enabled: If true, the test case is included in the test run.

- languageTag: The two-letter language tag for the utterance.

- expectedLanguageTag: The two-letter language tag for the language the utterance should resolve to.

- expectedEntities: The entity values that should be matched in the test utterance, represented as an array.
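If you'd rather generate the batch test file than fill it in by hand, the following Python sketch writes rows under the header shown above using the standard csv module. The helper name build_batch_test_csv and the sample utterances are hypothetical, and writing enabled as lowercase true with the language and entity columns left empty is an assumption of this sketch; check the result against the starter file before importing it.

```python
import csv
import io

# Header row of the batch test file, as listed in this tutorial.
HEADER = [
    "testSuite", "utterance", "expectedIntent", "enabled",
    "languageTag", "expectedLanguageTag", "expectedEntities",
]

def build_batch_test_csv(suite, cases):
    """Return CSV text for a list of (utterance, expected_intent) pairs.

    Hypothetical helper: only testSuite, utterance, expectedIntent, and
    enabled are filled in; the language and entity columns stay empty.
    """
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(HEADER)
    for utterance, intent in cases:
        writer.writerow([suite, utterance, intent, "true", "", "", ""])
    return buf.getvalue()

csv_text = build_batch_test_csv("IntentsTutorial", [
    ("What's the status of my pepperoni pizza", "pizza.reg.TrackOrder"),
    # The comma in this utterance makes csv.writer quote the field.
    ("How do I open a franchise, and what does it cost?", "pizza.reg.OpenFranchise"),
])
print(csv_text)
```

Using csv.writer rather than joining strings by hand means that utterances containing commas are quoted correctly, which matters because the file is comma-delimited.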

Note:

For this tutorial, we'll focus just on adding content to the utterance field. You don't have to add entries for any of the other fields.

To create the batch test file:

- Save batchtest-starter.txt to your machine.

- Change the extension of the file from

.txtto.csv. - Open the file in either a spreadsheet program (to enable you to visualize the test data in columns) or in a plain text editor such as Notepad (where you see fields delimited by commas). This file has three entries for each intent.

Note:

For this tutorial, we're using a spreadsheet program. - Within the file, fill in an utterance for each entry.

For example, you might insert "What's the status of my pepperoni pizza" as the utterance for the first

pizza.reg.TrackOrdertest entry. - Save the file as

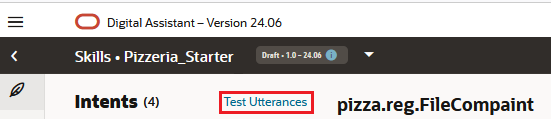

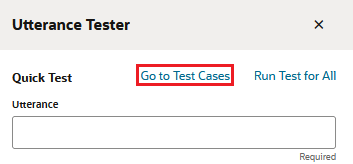

batchtest1.csv. - Back in Digital Assistant, click Test Utterances.

- In the Utterance Tester, click Go to Test Cases.

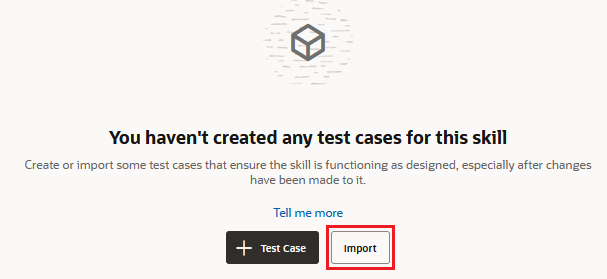

- Click Import.

- Browse to, and then select, batchtest1.csv in the file chooser. Then click Open.

- Click Upload. Wait a few seconds for the test cases to load.

- Once the test cases appear in the dialog, select the IntentsTutorial test suite.

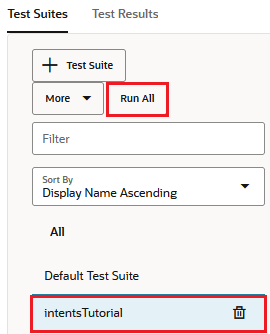

- Click Run All.

- Click Start in the New Test Run dialog. Wait for the test run to complete (this may take a few minutes).

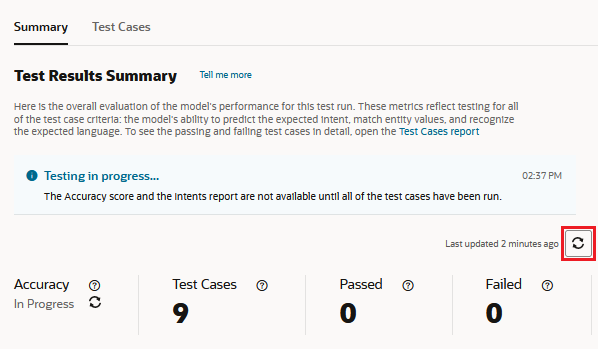

Tip:

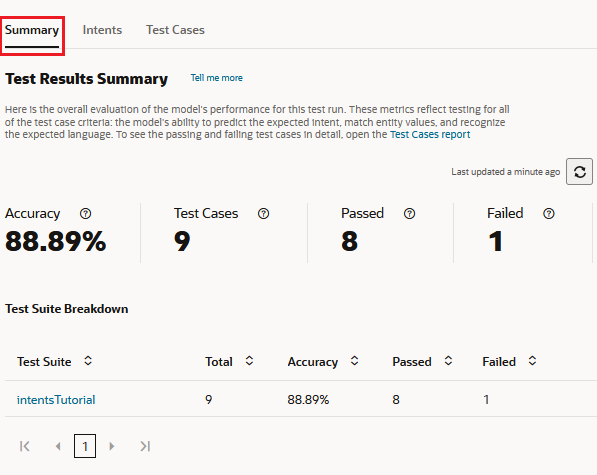

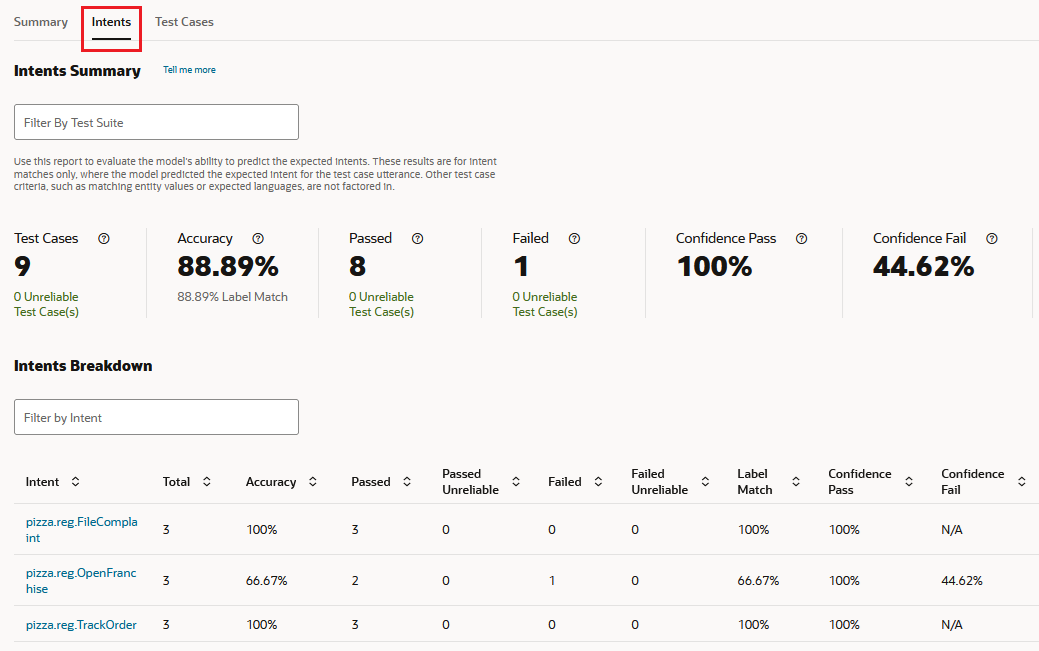

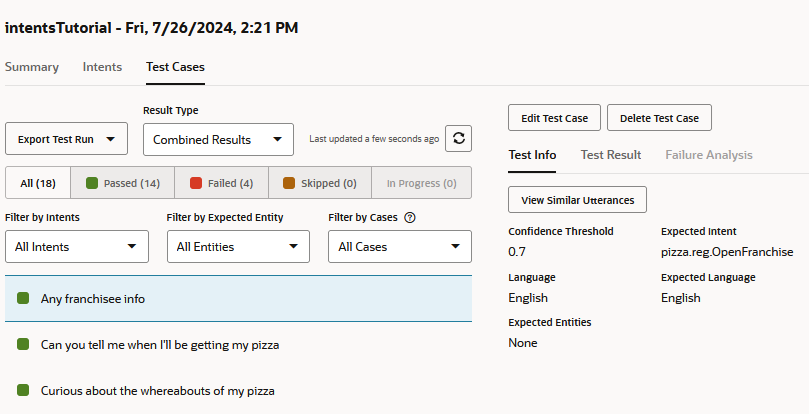

To check if the report has finished running, click Refresh. - Click Intents to evaluate how the test cases resolved to their expected intents. Note that the Accuracy metric here may not match the Accuracy metric in Summary report. In this report, Accuracy does not refer to the success rate of the test cases, but instead refers to the model's ability to match the test case utterances in the test run at, or above, the skill's Confidence Threshold setting.

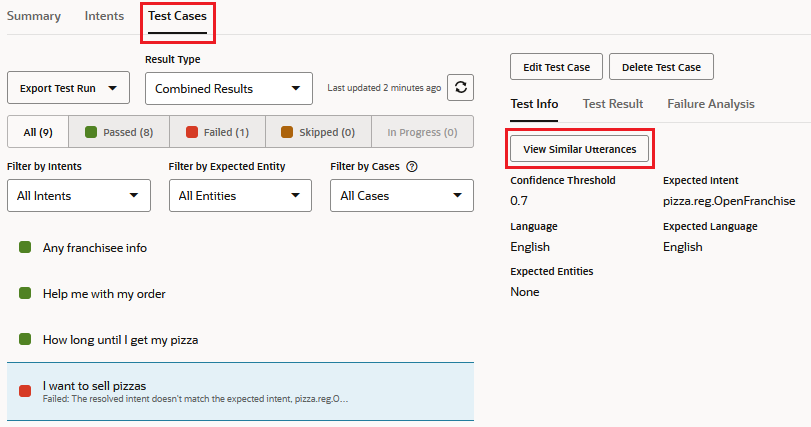

- To find out about the failing test cases, click the Test Cases tab.

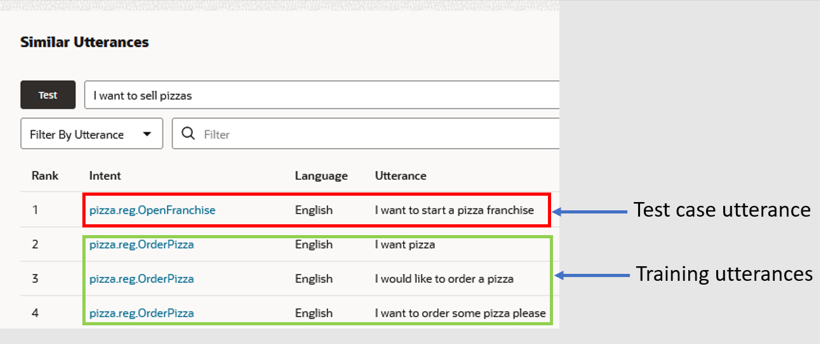

- For any test cases that have failed, click View Similar Utterances in the report's Test Info tab.

- Take a look at the Similar Utterances report to compare the test case utterance to its nearest match in the training set. Did the test case fail because it is too similar to the utterances used for another intent?

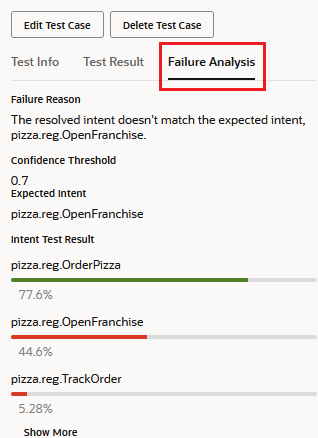

- To find out which intent a failed test case resolved to, open the Failure Analysis tab.

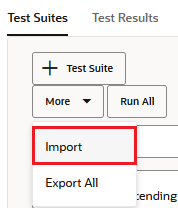

Note:

When you import test cases into a skill that has no test cases, the Import button appears at the bottom of the page. If the skill already has test cases (or has had test cases that were later deleted), choose Import from the More menu instead.

The completed Summary report shows you the number of passing and failing test cases in the test run. The Accuracy metric for the test run is the number of test cases that passed divided by the total number of test cases that were included in the test run.
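To make the Summary report's Accuracy calculation concrete, here is a minimal Python sketch. It assumes that a test case passes when its resolved intent equals its expected intent; the real report may also take language and entity expectations into account, and the run_accuracy name and sample results are hypothetical.

```python
def run_accuracy(cases):
    """Fraction of test cases in a run that passed.

    cases: hypothetical list of (expected_intent, resolved_intent) pairs,
    one per test case included in the run.
    """
    if not cases:
        raise ValueError("the test run contains no test cases")
    passed = sum(1 for expected, resolved in cases if expected == resolved)
    return passed / len(cases)

cases = [
    ("pizza.reg.TrackOrder", "pizza.reg.TrackOrder"),
    ("pizza.reg.FileComplaint", "pizza.reg.FileComplaint"),
    ("pizza.reg.OpenFranchise", "unresolvedIntent"),  # a failing test case
]
print(f"{run_accuracy(cases):.1%}")  # 66.7%
```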

Take a look at the results and take note of anything that you find surprising.

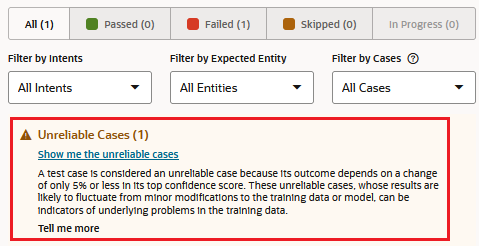

Note:

Some of your test cases might be classified as "unreliable", meaning that they may not consistently produce the same results across test runs because they resolve within 5% or less of the Confidence Threshold setting. This fragility may indicate that the intent's training data contains too few utterances like the ones these test cases represent, and that you may need to balance the training data by adding similar utterances.
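The "unreliable" rule can be sketched in Python as follows. This assumes that confidence scores and the Confidence Threshold are on a 0 to 1 scale and reads "within 5%" as an absolute margin of 0.05; Digital Assistant's exact rule may differ. The threshold value and the scores below are made up for illustration.

```python
def is_unreliable(confidence, threshold, margin=0.05):
    """True if a confidence score lies within `margin` of the threshold.

    Assumption: scores are on a 0-1 scale and "within 5%" means an
    absolute difference of at most 0.05.
    """
    return abs(confidence - threshold) <= margin

threshold = 0.70  # example Confidence Threshold, not a product default
for utterance, score in [("where is my order", 0.93),
                         ("my pizza never showed up", 0.73),
                         ("can I sell your pizzas", 0.66)]:
    label = "unreliable" if is_unreliable(score, threshold) else "stable"
    print(f"{utterance!r}: {score:.2f} -> {label}")
```

Both a score just above the threshold and one just below it are flagged, because a small change in the model could push either one across the line.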

Notes on What You Just Did

When developing a new skill, you likely don't have any existing training utterances, so you have to synthesize utterances to train the model. Here you followed the good practice of using some utterances to train the model while reserving others for batch testing.

By reserving some of the phrases for batch testing, you can always retest your intents and check whether these phrases resolve to the intents you expect. As more people use the skill and you find that you need to update the training model with further utterances, it is extremely important to continue reserving some of the new utterances for the batch tests. This helps ensure that your model resolves more and more phrases correctly over time.
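The split you used here (seven of each intent's ten utterances for training, the rest held out for batch testing) can be sketched in Python as shown below. Shuffling before splitting is an assumption of this sketch rather than something the tutorial requires; it simply avoids always holding out the last phrases you happened to write. The split_utterances name and the placeholder phrases are hypothetical.

```python
import random

def split_utterances(utterances_by_intent, n_train=7, seed=None):
    """Split each intent's utterances into training and held-out test sets.

    Returns (training, held_out), each a dict mapping intent -> utterances.
    Pass a seed to get a repeatable split.
    """
    rng = random.Random(seed)
    training, held_out = {}, {}
    for intent, utterances in utterances_by_intent.items():
        shuffled = list(utterances)
        rng.shuffle(shuffled)
        training[intent] = shuffled[:n_train]
        held_out[intent] = shuffled[n_train:]
    return training, held_out

# Placeholder phrases; substitute the ten utterances you wrote per intent.
intents = ["pizza.reg.FileComplaint", "pizza.reg.OpenFranchise", "pizza.reg.TrackOrder"]
written = {name: [f"{name} example {i}" for i in range(1, 11)] for name in intents}

training, held_out = split_utterances(written, seed=42)
print(sum(len(v) for v in held_out.values()), "utterances held out for batch testing")
```

The same function works for later rounds: when new real user utterances arrive, splitting them the same way keeps a share of them out of the training set for future batch tests.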

Iteratively Test Your Intents

At this point, you have done one round of testing. To make your training corpus more robust, you'll want to do several more rounds and make any necessary adjustments to your utterances as you go along. This iterative approach is a good practice for your skill development.

Have a Test Partner Test Your Skill

As part of this iterative testing, you'll want to get other people involved in training your skill, since phrases you think of to match an intent will probably vary from what other people come up with.

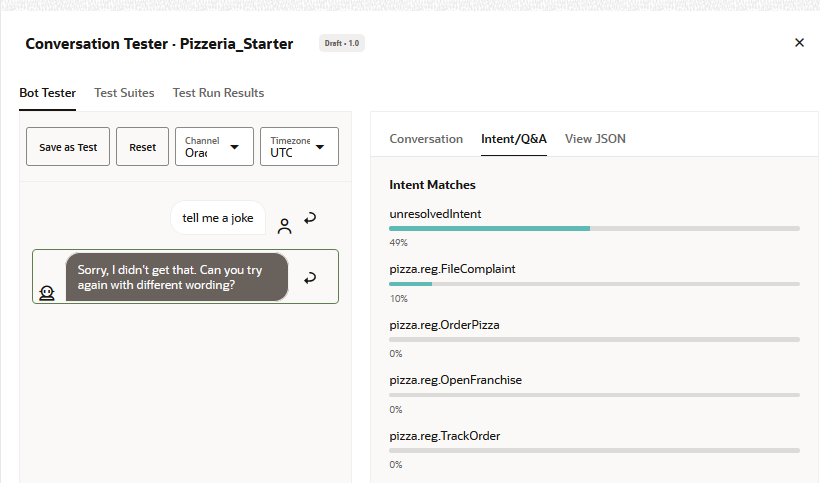

In this part of the tutorial, you'll use the Conversation Tester (not the Utterance Tester), because you need it to record the conversations in Insights.

- Find a test partner.

- Have your partner open your skill (while you open your partner's skill).

- In your partner's skill, click Preview to open the Conversation Tester.

- In the Conversation Tester, enter a phrase that you expect to resolve to a specific intent and then press Enter.

- Repeat the above step with two more utterances for the same intent.

- Enter three more utterances each for the remaining two intents.

- Once you have finished entering phrases, close the tester.

Use the Insights Retrainer to Improve the Model

The Insights feature enables you to see how your users are interacting with your skill. The Retrainer feature of Insights enables you to add real user utterances to your training model.

Now let's evaluate your test partner's utterances and use the Retrainer to update your training model to correctly classify the utterances that resolved incorrectly.

- Re-open your copy of the skill

- Open the skill's Insights by clicking

.

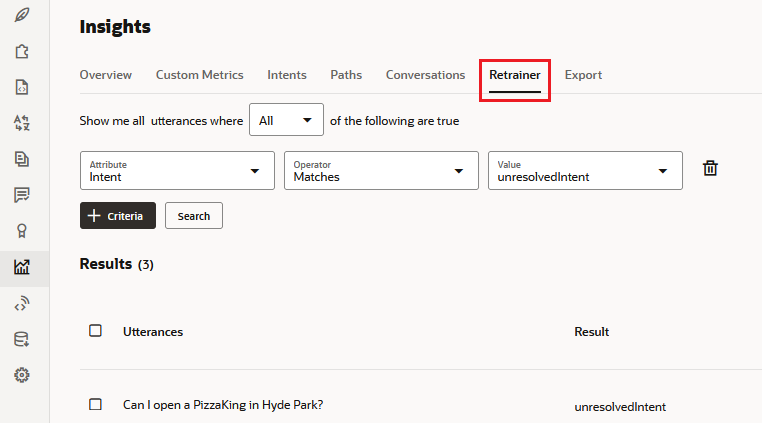

. - Click the Retrainer tab.

- By default, the Retrainer report is filtered to show the utterances that couldn't be resolved to any intent and were therefore resolved to unresolvedIntent instead. In this case, the report lists any of your lab partner's utterances that could not be resolved to any of the three intents that we have been working with.

Tip:

You can filter this report by intent to review the utterances that resolved to a specific intent and to make sure that they resolved appropriately.

- Before we evaluate the utterances that were successfully resolved to one of the intents, we're first going to evaluate, and if needed, reassign the unresolved utterances. To get started, select the checkbox for an unresolved utterance.

- Click the Select Intent drop-down for that entry and then select the intent that it should have resolved to.

- Click the Select Language drop-down for that entry and select English.

- Click Add Example.

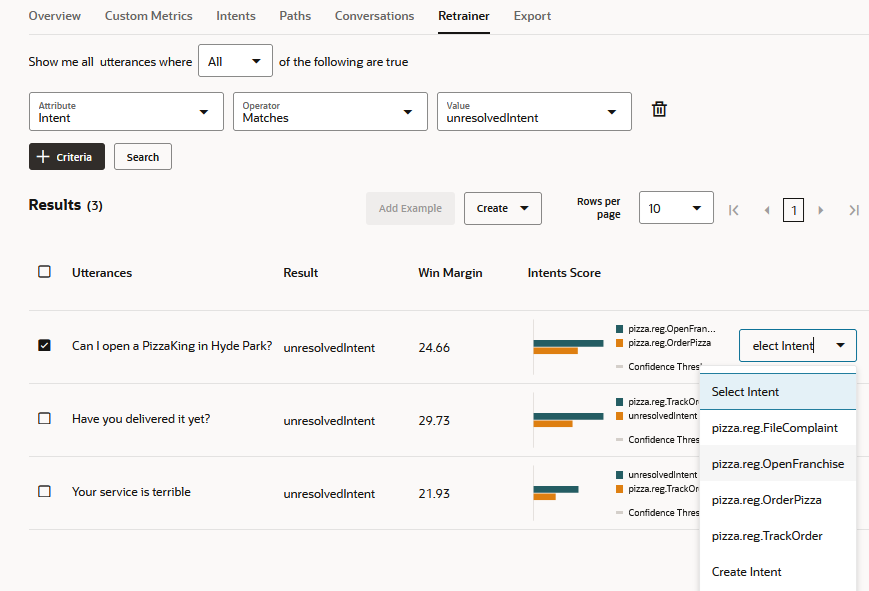

- From the Value drop-down list, select an intent and click Search. You should now see the entries for the selected intent.

- As before, evaluate whether each of those utterances has resolved correctly, and for any that haven't, add them as examples to the appropriate intent(s).

- Repeat these steps for the remaining intents.

- If you've added any utterances to the training set, then you'll need to update the model. Click the Train button.

- Leave Trainer Tm selected and click Submit.

Add More Utterances to the Batch Test

Based on what you learned from the phrases your testing partner added, you should also augment your test batch.

- Create a copy of batchtest1.csv and save it as batchtest2.csv.

- In batchtest2.csv, create one new entry for each intent by copying an existing entry for that intent.

- For each copied entry, replace the utterance with a new utterance.

You can construct these new utterances by varying the wording of utterances that were entered by your test partner.

- Click Intents in the left navbar.

- Click Test Utterances.

- In the Utterance Tester window, click Go to Test Cases.

- Click the More drop-down list, select Import, and import the batchtest2.csv file.

- In the left side of the Utterance Tester dialog, select the intentsTutorial test suite.

- Click Run All and then click Start.

- Click the Test Results tab.

You should see that around 18 tests have been run in this batch. This is because the tests in your batchtest2.csv file were added to the intentsTutorial test suite.

- Evaluate the results of the new test.

- Are you getting better results?

- Has the accuracy improved?

- Are you seeing obvious misclassifications?

- With your partner, or perhaps a new partner, repeat the exercises in this section with three more utterances for each intent.

- Close the Utterance Tester.
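If you maintain the batch test file with a script rather than a spreadsheet, the copy-and-edit step for batchtest2.csv can be sketched in Python as below. The CSV is handled as in-memory text so the example is self-contained; add_variants and the sample utterances are hypothetical, and reading and writing the actual files is left out.

```python
import csv
import io

def add_variants(batch_csv_text, new_utterances):
    """Return CSV text with one copied-and-edited row appended per intent.

    new_utterances: hypothetical dict mapping expectedIntent -> new utterance.
    Each new row copies the first existing row for that intent, so fields
    such as testSuite and enabled are carried over unchanged.
    """
    reader = csv.DictReader(io.StringIO(batch_csv_text))
    rows = list(reader)
    for intent, utterance in new_utterances.items():
        template = next((r for r in rows if r["expectedIntent"] == intent), None)
        if template is None:
            raise ValueError(f"no existing entry for {intent}")
        rows.append({**template, "utterance": utterance})
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()

batchtest1 = (
    "testSuite,utterance,expectedIntent,enabled,languageTag,expectedLanguageTag,expectedEntities\n"
    "IntentsTutorial,What's the status of my pepperoni pizza,pizza.reg.TrackOrder,true,,,\n"
)
batchtest2 = add_variants(batchtest1, {"pizza.reg.TrackOrder": "has my pizza left the store yet"})
print(batchtest2)
```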

Handling Spam

Now let's spend some time on the question of spam or other misuse of the skill. Up to 40% of a skill's workload may have nothing to do with the skill's intended use, and the skill needs to be able to gracefully handle this.

When you use Trainer Tm (as you are in this tutorial), your skill is trained to recognize when input is out of the domain of the skill. Such input is matched to unresolvedIntent, which is an intent that is implicitly defined for each skill.

To see this in action:

- In the top navigation of the skill, click Preview to open the Conversation Tester.

- In the Utterance field, enter "tell me a joke" and press Enter.

- In the right side of the tester, click the Intent/Q&A tab.

You should see a bar graph showing how well the phrase matched with the various intents, including unresolvedIntent.

Note:

If none of the intents match with a confidence score that exceeds the Confidence Threshold for the test, unresolvedIntent is considered to be the matching intent.

- In the Utterance field, enter "and she is buying the stairway to heaven" and then press Enter.

The results should be similar, with a relatively high score for unresolvedIntent.

- For fun, enter a few more random phrases and see how they resolve.
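The fallback described in the note above can be sketched in Python like this. The scores and the threshold value are invented for illustration (in practice you read them from the tester's Intent/Q&A tab). Treating a score exactly equal to the threshold as a match follows the tutorial's "at, or above" wording for the Intents report; the sketch also ignores that Trainer Tm scores unresolvedIntent directly, as the bar graph shows.

```python
def resolve_intent(scores, threshold):
    """Return the top-scoring intent, or unresolvedIntent if no score reaches the threshold.

    scores: hypothetical dict of intent name -> confidence on a 0-1 scale.
    """
    best_intent, best_score = max(scores.items(), key=lambda item: item[1])
    return best_intent if best_score >= threshold else "unresolvedIntent"

threshold = 0.70  # example value for the skill's Confidence Threshold
joke = {"pizza.reg.TrackOrder": 0.12, "pizza.reg.FileComplaint": 0.08,
        "pizza.reg.OpenFranchise": 0.05}
order = {"pizza.reg.TrackOrder": 0.91, "pizza.reg.FileComplaint": 0.04,
         "pizza.reg.OpenFranchise": 0.02}
print(resolve_intent(joke, threshold))   # unresolvedIntent
print(resolve_intent(order, threshold))  # pizza.reg.TrackOrder
```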

Note:

It's also possible to explicitly create an unresolvedIntent intent and add training utterances for it. This might be useful if you find that there are out-of-domain utterances that are resolving to your intents or you want to further reduce the chance that out-of-domain input will inappropriately resolve to one of your intents.

Other Tips for Optimizing Intent Resolution

For future reference, here are some other things you can do to improve the quality of intent resolution:

- Among your utterances, include key phrases that are specific to one intent, and add them as short phrases (not as full sentences).

For example, if "no claims protection" is relevant for only one intent in a skill, add utterances such as "no claims protection", "protected no claims", and "no claims bonus" for that intent.

- Repeat key utterances (those that you think will be representative of typical user input) with some slight variations.

- Check where you think utterances could apply to different intents. If there is significant overlap, consider combining those intents.

- After applying any changes, be sure to rerun your tests and evaluate their impact.
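As a rough, offline way to spot candidate overlaps between intents before you rerun your tests, the Python sketch below compares the word sets of utterances from different intents (Jaccard similarity) and flags pairs above a cutoff. This is a crude lexical proxy chosen for illustration; it is not how Trainer Tm or the Similar Utterances report measures similarity, and the 0.5 cutoff and sample utterances are arbitrary.

```python
from itertools import combinations

def jaccard(a, b):
    """Word-set overlap between two utterances (0 = none, 1 = same words)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    return len(wa & wb) / len(wa | wb) if wa | wb else 0.0

def cross_intent_overlaps(utterances_by_intent, min_similarity=0.5):
    """List utterance pairs from different intents whose word overlap is high."""
    flagged = []
    for (i1, u1s), (i2, u2s) in combinations(utterances_by_intent.items(), 2):
        for u1 in u1s:
            for u2 in u2s:
                score = jaccard(u1, u2)
                if score >= min_similarity:
                    flagged.append((round(score, 2), i1, u1, i2, u2))
    return sorted(flagged, reverse=True)

data = {
    "pizza.reg.TrackOrder": ["where is my order", "cancel my order"],
    "pizza.reg.FileComplaint": ["my order is wrong", "I want to speak to a manager"],
}
for score, i1, u1, i2, u2 in cross_intent_overlaps(data):
    print(f"{score:.2f}  {i1}: {u1!r}  <->  {i2}: {u2!r}")
```

A flagged pair is only a prompt to look more closely; if many pairs between two intents are flagged, that is the kind of significant overlap where combining the intents may be worth considering.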

Note:

Ultimately, the best data for training your skill will come from real user utterances. Furthermore, in most cases, Trainer Tm is better suited for resolving real-world phrases. However, it does require more sample utterances to give those better results.

Learn More

- Intent Training and Testing in Using Oracle Digital Assistant

- Insights in Using Oracle Digital Assistant

- Skill Quality Reports in Using Oracle Digital Assistant

Best Practices for Building and Training Intents

F17425-06

July 2024

Shows how to build up training utterances for intents in a skill and iteratively train and test them.

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable:

U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. The terms governing the U.S. Government's use of Oracle cloud services are defined by the applicable contract for such services. No other rights are granted to the U.S. Government.

This software or hardware is developed for general use in a variety of information management applications. It is not developed or intended for use in any inherently dangerous applications, including applications that may create a risk of personal injury. If you use this software or hardware in dangerous applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages caused by use of this software or hardware in dangerous applications.

Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Intel and Intel Inside are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Epyc, and the AMD logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group.

This software or hardware and documentation may provide access to or information about content, products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to third-party content, products, and services unless otherwise set forth in an applicable agreement between you and Oracle. Oracle Corporation and its affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of third-party content, products, or services, except as set forth in an applicable agreement between you and Oracle.