Scheduling a Data Rule, Integration, or Batch Definition to Run in the Job Scheduler

You can schedule the execution times of a data load rule or batch in Data Management or an integration in Data Integration.

To schedule a data load rule, integration, or batch:

-

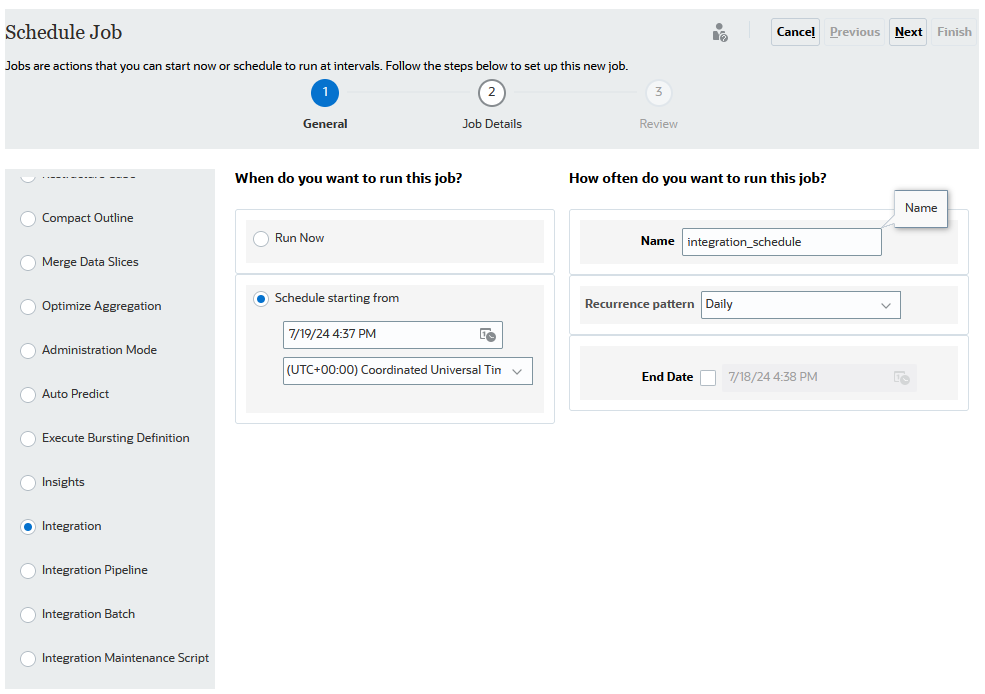

Click Application, then Jobs, and then Schedule Jobs.

- On the Schedule Jobs page, under What type of job is this?, select Integration.

- Click Next.

-

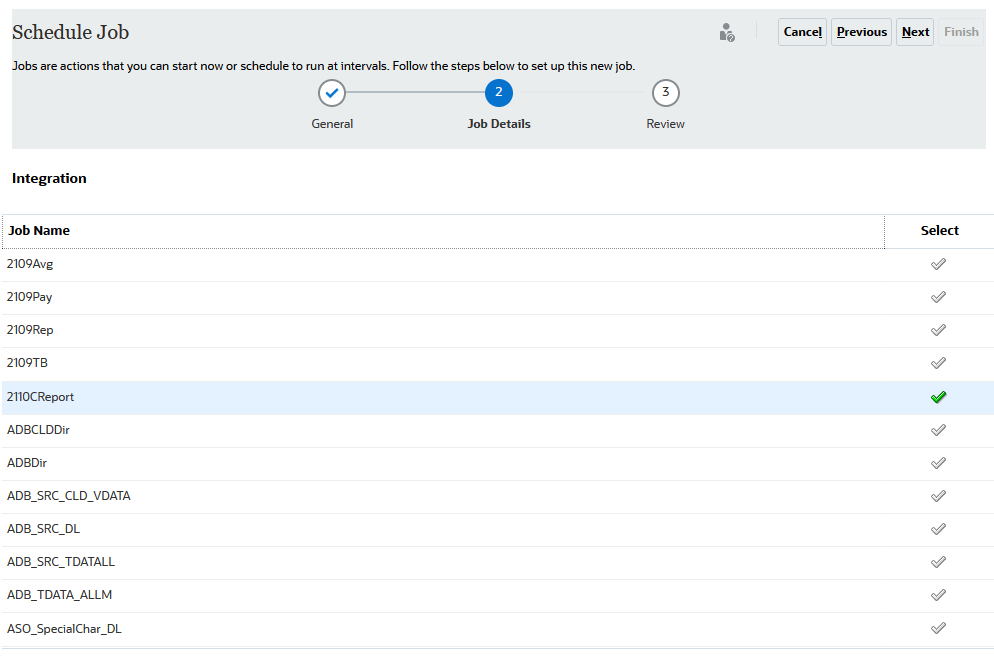

From Job Name, select the data rule or integration to schedule and click

.

.

- Click Next.

-

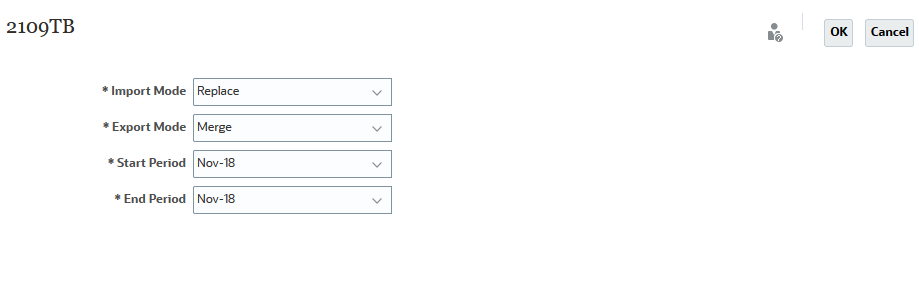

From Import Mode, select the method for importing data.

Options include:

- Append—Keep existing rows for the POV and append the new rows. For example, a first-time load has 100 rows and a second load has 50 rows. In this case, 50 rows are appended. After this load, the row total for the POV is 150.

- Replace—Clears all data for the POV in the target, and then loads from the source or file. For example, a first-time load has 100 rows, and a second load has 70 rows. In this case, 100 rows are removed, and 70 rows are loaded to TDATASSEG. After this load, the row total is 70.

For a Planning application, Replace clears data for Year, Period, Scenario, Version, and Entity that you are loading, and then loads the data from source or file. Note that when you have a year of data in the Planning application, but are only loading a single month, this option clears the entire year before performing the load.

Note:

When running an integration in Replace mode to an ASO cube, if the scenario member is a shared member, then only a Numeric data load is performed. Be sure to specify the member name as a fully qualified name that includes the complete hierarchy. The All Data Type load methods do not work when the scenario member is a shared member.

Note:

Replace mode is not supported for the load method "All data types with auto-increment of line item."

- Merge—(Account Reconciliation only) Merge changed balances with existing data for the same location.

Merge mode eliminates the need to load an entire data file when only a few balances have changed since the last time data was loaded into Account Reconciliation. If mappings change between two loads, customers must reload the full data set.

For example, a customer might have 100 rows of existing balances for 100 account IDs, each of which has an amount of $100.00. If the customer runs the integration in Merge mode and the source has one row for one account ID with an amount of $80.00, then after the integration runs there are still 100 rows of balances: 99 with a balance of $100.00 and 1 with a balance of $80.00.

- No Import—Skip the import of data entirely.

- Map and Validate—Skip importing the data but reprocess the data with updated mappings.
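The row counts in the Append, Replace, and Merge examples above can be checked with a small sketch. It is only an illustration of the described behavior, not the product's implementation: the function name `apply_import_mode`, the mode strings, and the `(account_id, amount)` row shape are all assumptions made for this example.

```python
def apply_import_mode(existing, incoming, mode):
    """Return the rows held for one POV after a load in the given import mode.

    Rows are (account_id, amount) tuples. This shape is hypothetical and is
    not the layout of the product's staging table.
    """
    if mode == "append":
        # Keep the existing rows and add the new rows after them.
        return existing + incoming
    if mode == "replace":
        # Clear everything for the POV, then load only the new rows.
        return list(incoming)
    if mode == "merge":
        # Overwrite balances for account IDs present in the new load,
        # and leave all other balances untouched.
        merged = dict(existing)
        merged.update(incoming)
        return list(merged.items())
    if mode == "no_import":
        # Skip the import entirely.
        return list(existing)
    raise ValueError(f"unknown import mode: {mode}")


first_load = [(f"ACCT{i:03d}", 100.00) for i in range(100)]
second_50 = [(f"NEW{i:03d}", 25.00) for i in range(50)]
second_70 = [(f"ACCT{i:03d}", 10.00) for i in range(70)]

appended = apply_import_mode(first_load, second_50, "append")
replaced = apply_import_mode(first_load, second_70, "replace")
merged = apply_import_mode(first_load, [("ACCT000", 80.00)], "merge")

print(len(appended))  # 150
print(len(replaced))  # 70
print(len(merged), sum(1 for _, amt in merged if amt == 80.00))  # 100 1
```

The Merge branch shows why a full reload is not needed when only a few balances change: rows that are absent from the new load keep their earlier values.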

- From Export Mode, select the method for exporting data to the target application.

Options include:

- Merge—Overwrite existing data with the new data from the load file. If data does not exist, create new data. (By default, all data loads are processed in Merge mode.)

- Replace—Clears all data for the POV in the target, and then loads from the source or file. For example, a first-time load has 100 rows, and a second load has 70 rows. In this case, 100 rows are removed, and 70 rows are loaded to the staging table. After this load, the row total is 70.

For a Planning application, Replace clears data for Year, Period, Scenario, Version, and Entity that you are loading, and then loads the data from source or file. Note that when you have a year of data in the Planning application, but are only loading a single month, this option clears the entire year before performing the load.

- Accumulate—Accumulate the data in the application with the data in the load file. For each unique point of view in the data file, the value from the load file is added to the value in the application.

- Subtract—Subtract the value in the source or file from the value in the target application. For example, when you have 300 in the target, and 100 in the source, then the result is 200.

- Dry Run—(Financial Consolidation and Close and Tax Reporting only) Scan a data load file for invalid records without loading it to the target application. The system validates the data load file and writes any invalid records to a log, which lists up to 100 errors. For each error, the log shows the record in error with its corresponding error message. Log details are available in Process Details.

Note:

Dry Run ignores the Enable Data Security for Admin Users target option and always uses the REST API for the administrator user.

- No Export—Skip the export of data entirely.

- Check—After exporting data to the target system, display the Check report for the current POV. If check report data does not exist for the current POV, a blank page is displayed.
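The arithmetic behind the Merge, Replace, Accumulate, and Subtract export modes can be sketched as follows. This is a simplified model under stated assumptions, not the target application's logic: `apply_export_mode` and the mode strings are hypothetical, the target is modeled as a dictionary of values for a single POV, and a cell missing from the target is treated as 0 for Accumulate and Subtract (the text above does not specify that case).

```python
def apply_export_mode(target, source, mode):
    """Return the target values for one POV after an export in the given mode.

    target and source map a hypothetical cell key (for example an account
    name) to a numeric value.
    """
    if mode == "merge":
        # Overwrite existing values; create values that do not exist yet.
        return {**target, **source}
    if mode == "replace":
        # Clear all data for the POV, then load the source values.
        return dict(source)
    if mode in ("accumulate", "subtract"):
        sign = 1 if mode == "accumulate" else -1
        result = dict(target)
        for key, value in source.items():
            # Assumption: a cell missing from the target counts as 0.
            result[key] = result.get(key, 0) + sign * value
        return result
    if mode == "no_export":
        # Skip the export entirely.
        return dict(target)
    raise ValueError(f"unknown export mode: {mode}")


target = {"Sales": 300, "Rent": 50}
source = {"Sales": 100}

print(apply_export_mode(target, source, "merge"))       # {'Sales': 100, 'Rent': 50}
print(apply_export_mode(target, source, "replace"))     # {'Sales': 100}
print(apply_export_mode(target, source, "accumulate"))  # {'Sales': 400, 'Rent': 50}
print(apply_export_mode(target, source, "subtract"))    # {'Sales': 200, 'Rent': 50}
```

Note the difference between Merge and Replace in this model: Merge leaves `Rent` in place because the source does not mention it, while Replace removes it along with everything else for the POV.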

- From Start Period, select the first period for which data is to be loaded. You can filter periods by typing the characters to filter by. For example, type J to filter by months beginning with J, such as June or July. You can also click the drop-down and specify additional filter criteria in the edit box shown below More results available, please filter further.

This period name must be defined in period mapping.

Note:

Use a Start Period and End Period that belong to a single fiscal year. If a period range crosses fiscal years, you run into the following issues:

- When loading data in Replace mode, the system clears data for both years.

- When exporting data, you get duplicate data.

This applies to all data load modes, including standard and quick mode.
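Before scheduling a job, it can help to confirm that the chosen period range stays inside one fiscal year. The following is a minimal sketch of such a check. The `PERIOD_MAPPING` table, its period names and year labels, and the function `in_single_fiscal_year` are all hypothetical; in practice the period-to-year assignment comes from your own period mapping.

```python
# Hypothetical period mapping: period name -> fiscal year.
PERIOD_MAPPING = {
    "Nov-24": "FY24",
    "Dec-24": "FY24",
    "Jan-25": "FY25",
    "Feb-25": "FY25",
}


def in_single_fiscal_year(start_period, end_period, mapping=PERIOD_MAPPING):
    """Return True when both periods are mapped to the same fiscal year.

    Raises ValueError when a period name is not defined in the mapping,
    mirroring the requirement that period names exist in period mapping.
    """
    for period in (start_period, end_period):
        if period not in mapping:
            raise ValueError(f"period not defined in period mapping: {period}")
    return mapping[start_period] == mapping[end_period]


print(in_single_fiscal_year("Nov-24", "Dec-24"))  # True
print(in_single_fiscal_year("Dec-24", "Jan-25"))  # False
```

A range that fails this check could be split into two scheduled jobs, one per fiscal year.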

- From End Period, select the last period for which data is to be loaded. This period name must be defined in period mapping.

- Click OK.