Step 1: Stage and Load File to the TDATASEG_T table

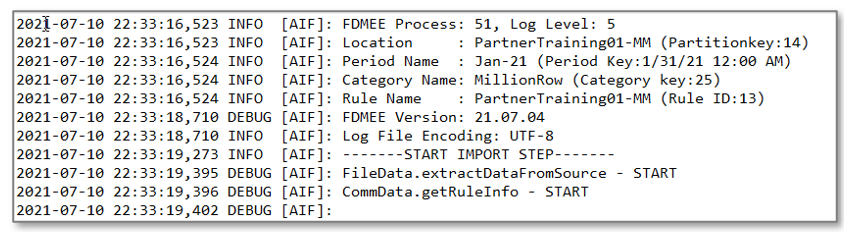

This step initializes the system for processing and loads the source data into the temporary table used for mapping. Sections of a sample log file for a million-row data file are provided below:

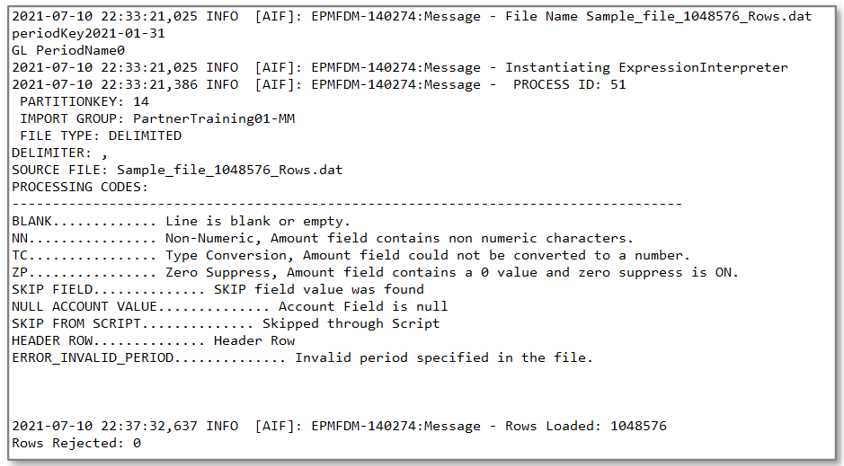

In this example, the process started at 22:33:16,523 with the log level set to 5. When tuning and debugging, use log level 5 to see full details of the processing run. The next figure shows the completion of the load to the TDATASEG_T table:

The log shows that 1,048,576 rows were loaded and 0 rows were rejected. Staging and file loading took approximately 4 minutes and 16 seconds before mapping began. With a file-based load, nothing in this step can be tuned, so it is a fixed component of the overall processing time. Note that users may specify business rules to run at selected events during the load process. If you have included business rules, make sure that they also perform as required. Data Integration does not control the performance of business rules, so tune them in the target application when they cause performance issues.
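To make the timing above easier to compare across runs, the following minimal Python sketch converts the quoted figures into a throughput number. It is illustrative only and not part of Data Integration: the helper names `elapsed` and `rows_per_second` are hypothetical, and the timestamp format is assumed from the `22:33:16,523` value quoted earlier.

```python
from datetime import datetime, timedelta

# Assumed time-of-day format, based on the "22:33:16,523" stamp quoted in the text.
LOG_TIME_FORMAT = "%H:%M:%S,%f"


def elapsed(start: str, end: str) -> timedelta:
    """Return end - start for two time-of-day stamps (hypothetical helper).

    If the end stamp is earlier than the start stamp, the run is assumed
    to have crossed midnight once.
    """
    t0 = datetime.strptime(start, LOG_TIME_FORMAT)
    t1 = datetime.strptime(end, LOG_TIME_FORMAT)
    if t1 < t0:
        t1 += timedelta(days=1)
    return t1 - t0


def rows_per_second(rows: int, duration: timedelta) -> float:
    """Average load rate over the given duration (hypothetical helper)."""
    return rows / duration.total_seconds()


# Figures quoted in the text: 1,048,576 rows in roughly 4 minutes 16 seconds.
stage_time = timedelta(minutes=4, seconds=16)
rate = rows_per_second(1_048_576, stage_time)
print(f"{rate:.0f} rows/s")
```

With the approximate duration from the text, this works out to about 4,096 rows per second for the stage-and-load step. Because this step is fixed for a file-based load, the figure is mainly useful as a baseline when comparing runs or different file sizes.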