9 Templates

By using Oracle Machine Learning Notebooks templates, you can collaborate with other users by sharing your work, publishing it as reports, and creating notebooks from templates. You can store your notebooks as templates, share notebooks, and provide sample templates to other users.

Note:

You can also collaborate with other Oracle Machine Learning Notebooks users by providing access to your workspace. The authenticated user can then access the projects and notebooks in your workspace. The access level depends on the permission type granted: Manager, Developer, or Viewer. For more information about collaboration among users, see How to collaborate in Oracle Machine Learning Notebooks.

- Use the Personal Templates

Personal Templates lists the notebook templates that you have created.

- Use the Shared Templates

In Shared Templates, you can share the notebook templates that you create from existing notebooks with all authenticated users.

- Use the Example Templates

The Example Templates page lists the pre-populated Oracle Machine Learning notebook templates. You can view and use these templates to create your notebooks.

9.1 Use the Personal Templates

Personal Templates lists the notebook templates that you have created. In Personal Templates, you can:

- View selected templates in read-only mode.

- Create new notebooks from selected templates.

- Edit selected templates.

- Share selected notebook templates in Shared Templates.

- Delete selected notebook templates.

- Create Notebooks from Templates

You can create new notebooks from an existing template, and store them in Personal Templates for later use.

- Share Notebook Templates

You can share templates from Personal Templates. You can also share templates for editing.

- Edit Notebook Templates Settings

You can modify the settings of an existing notebook template in Personal Templates.


9.1.1 Create Notebooks from Templates

You can create new notebooks from an existing template, and store them in Personal Templates for later use.


9.1.2 Share Notebook Templates

You can share templates from Personal Templates. You can also share templates for editing.


9.1.3 Edit Notebook Templates Settings

You can modify the settings of an existing notebook template in Personal Templates.


9.2 Use the Shared Templates

In Shared Templates, you can share the notebook templates that you create from existing notebooks with all authenticated users.

You can:

- Like templates

- Create notebooks from templates

- View templates

Each shared template shows its:

- Template name

- Description

- Number of likes

- Number of creations

- Number of static views

You can also:

- Create templates by clicking New Notebook

- Edit template settings by clicking Edit Settings

- Delete any selected template by clicking Delete

- Search templates by Name, Tag, or Author

- Sort templates by Name, Date, Author, Liked, Viewed, or Used

- Show only liked templates or only your own templates by clicking Show Liked Only or Show My Items Only

- Create Notebooks from Templates

You can create new notebooks from an existing template, and store them in Personal Templates for later use.

- Edit Notebook Templates Settings

You can modify the settings of an existing notebook template in Personal Templates.


9.2.1 Create Notebooks from Templates

You can create new notebooks from an existing template, and store them in Personal Templates for later use.


9.2.2 Edit Notebook Templates Settings

You can modify the settings of an existing notebook template in Personal Templates.


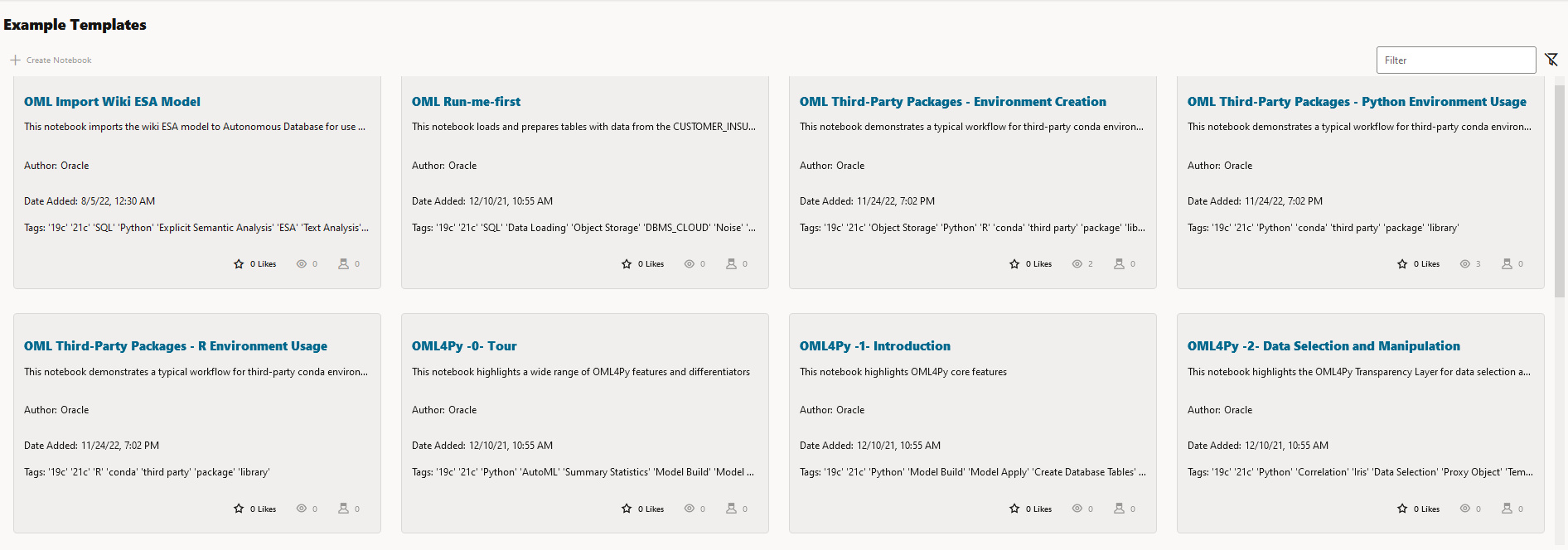

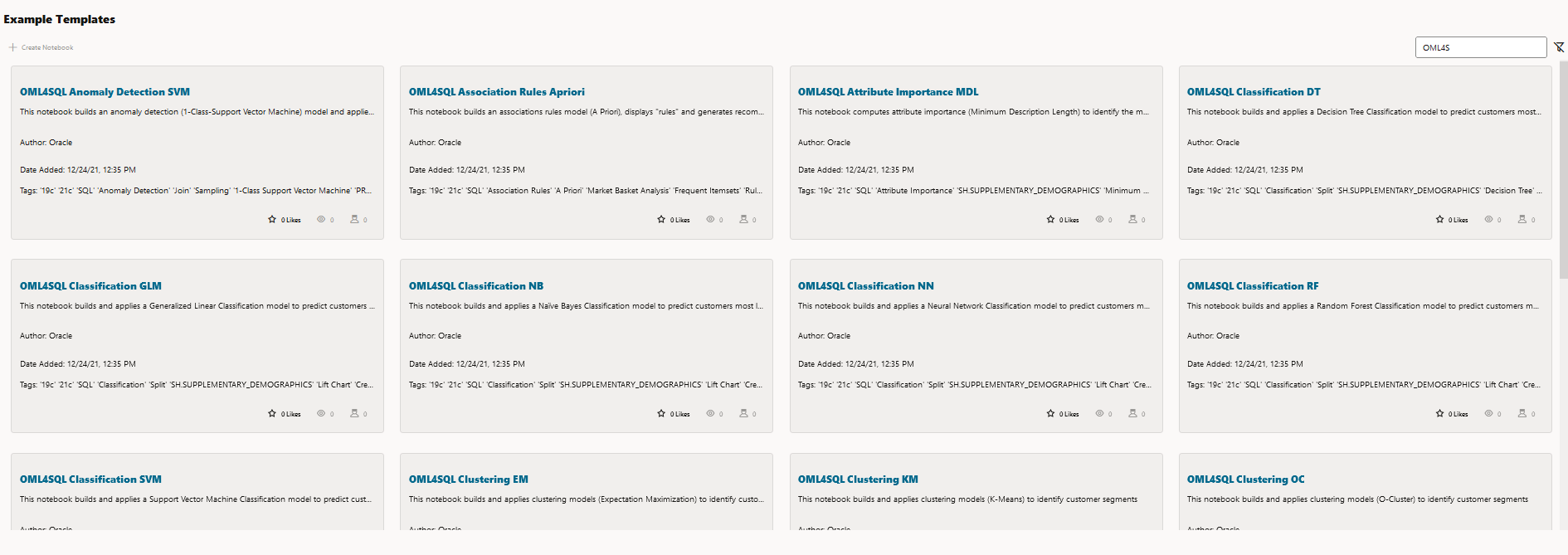

9.3 Use the Example Templates

The Example Templates page lists the pre-populated Oracle Machine Learning notebook templates. You can view and use these templates to create your notebooks.

Each example template shows its:

- Template name

- Description

- Number of likes. Click Likes to mark a template as liked.

- Number of static views

- Number of uses

You can also:

- Search templates by Name, Tag, or Author

- Sort templates by Name, Date, Author, Liked, Viewed, or Used

- Show only the templates that you liked by clicking Show Liked Only

- Create a Notebook from the Example Templates

Using the Oracle Machine Learning Example Templates, you can create a notebook from the available templates.

- Example Templates

Oracle Machine Learning Notebooks provides example notebook templates based on different machine learning algorithms and on languages such as Python, R, and SQL. The example templates are processed in Oracle Autonomous AI Database.


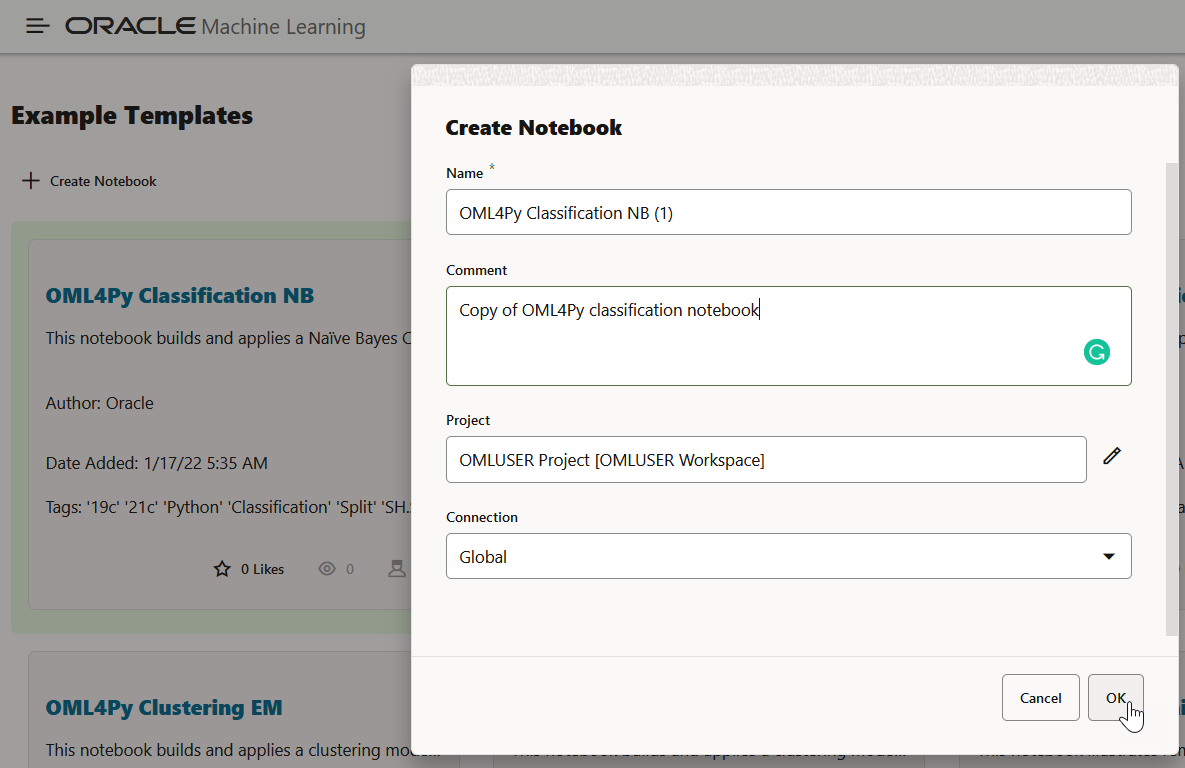

9.3.1 Create a Notebook from the Example Templates

Using the Oracle Machine Learning Example Templates, you can create a notebook from the available templates.


9.3.2 Example Templates

Oracle Machine Learning Notebooks provides example notebook templates based on different machine learning algorithms and on languages such as Python, R, and SQL. The example templates are processed in Oracle Autonomous AI Database.

- OML Export and Import Serialized Models: Use this notebook to export and import native serialized models using the DBMS_DATA_MINING.EXPORT_SERMODEL and DBMS_DATA_MINING.IMPORT_SERMODEL procedures. Oracle Machine Learning provides APIs to streamline the process of migrating models across databases and platforms.

- OML Wiki ESA Model: Use this notebook for text document categorization by calculating semantic relatedness (how similar in meaning two words or pieces of text are to each other) between the documents and a set of topics that are explicitly defined and described by humans. The Oracle Machine Learning for SQL function ESA, the Oracle Machine Learning for Python function oml.esa, and the Oracle Machine Learning for R function ore.odmESA extract text-based features from a corpus of documents and perform document similarity comparisons. In this notebook, the wiki ESA model is imported into Autonomous AI Database for use with the following OML template notebook examples:

- OML4SQL Feature Extraction ESA Wiki Model

- OML4Py Feature Extraction ESA Wiki Model

- OML4R Feature Extraction ESA Wiki Model

- OML Services Batch Scoring: Use this notebook to run batch scoring jobs through a REST interface in OML Services. OML Services supports batch scoring for regression, classification, clustering, and feature extraction. The notebook walks through these steps:

- Authenticate the database user and obtain a token

- Create a batch scoring job

- View the details and output of the batch scoring job

- Update, disable, and delete a batch scoring job

- OML Services Data Monitoring: Use this notebook to perform data monitoring. This notebook provides the data monitoring workflow steps through the REST interface, which include:

- Authenticate the database user and obtain a token

- Create a data monitoring job

- View the details and output of the data monitoring job

- Update, disable, and delete a data monitoring job

- OML Services Model Monitoring: Use this notebook to understand and perform model monitoring. This notebook provides the model monitoring workflow steps through the REST interface, which include:

- Authenticate the database user and obtain a token

- Create a model monitoring job

- View the details and output of the model monitoring job

- Update, disable, and delete a model monitoring job

- OML Third-Party Packages - Environment Creation: Use this notebook to download and activate the Conda environment, and to use the libraries in your notebook sessions. Oracle Machine Learning Notebooks provides a Conda interpreter to install third-party Python and R libraries in a Conda environment for use within Oracle Machine Learning Notebooks sessions, as well as within Oracle Machine Learning for Python and Oracle Machine Learning for R embedded execution calls.

The third-party libraries installed in Oracle Machine Learning Notebooks can be used in:

- Standard Python

- Standard R

- Oracle Machine Learning for Python embedded Python execution from the Python, SQL and REST APIs

- Oracle Machine Learning for R embedded R execution from the R, SQL, and REST APIs

Note:

The Conda environment is installed and managed by the ADMIN user with the OML_SYS_ADMIN role. The administrator can create a shared environment, and add or delete packages from it. The Conda environments are stored in the Object Storage bucket associated with the Autonomous AI Database.

Conda is an open source package and environment management system that enables the use of virtual environments containing third-party R and Python packages. With Conda environments, you can install and update packages and their dependencies, and switch between environments to use project-specific packages.

This template notebook OML Third-Party Packages - Environment Creation contains a typical workflow for third-party environment creation and package installation in Oracle Machine Learning Notebooks.

- Section 1 contains commands to create and test Conda environments.

- Section 2 contains commands to create a Conda environment, install packages, and upload the Conda environment to an Object Storage bucket associated with the Oracle Autonomous AI Database.

Figure 9-2 Conda Example Templates

- OML Third-Party Packages - Python Environment Usage: Use this template notebook to understand the typical workflow for third-party environment usage in Oracle Machine Learning Notebooks using Python and Oracle Machine Learning for Python. You download and use the libraries in Conda environments that were previously created and saved to an Object Storage bucket folder associated with the Autonomous AI Database.

This notebook contains commands to:

- List all environments stored in Object Storage

- List a named environment stored in Object Storage

- Download and activate the mypyenv environment

- List the packages available in the Conda environment

- Import Python libraries

- Load datasets

- Build models

- Score models

- Create Python user defined functions (UDFs)

- Run the user defined functions (UDFs) in Python

- Create and run user defined functions in the SQL and REST APIs for embedded Python execution

- Create and run Python user defined functions using the SQL API for embedded Python execution - asynchronous mode

- OML Third-Party Packages - R Environment Usage: Use this template notebook to understand the typical workflow for third-party environment usage in Oracle Machine Learning for R.

This notebook contains commands to:

- List all environments stored in Object Storage

- List a named environment stored in Object Storage

- Download and activate the myrenv environment

- Show the list of available OML4R Conda environments

- Import R libraries

- Load and prepare data

- Build models

- Score models

- Create R user defined functions (UDFs)

- Run user defined functions (UDFs) in R

- Save the user defined functions (UDFs) to the script repository

- Run the R user defined function in SQL and REST APIs for embedded R execution

- Add the OML user to the Access Control List

- Run the R user defined functions using the REST API for embedded R execution in synchronous mode

- OML4R-1: Introduction: Use this notebook to understand how to:

- Load the ORE Library

- Create database tables

- Use the transparency layer

- Rank attributes for predictive value using the in-database attribute importance algorithm

- Build predictive models

- Score data using these models

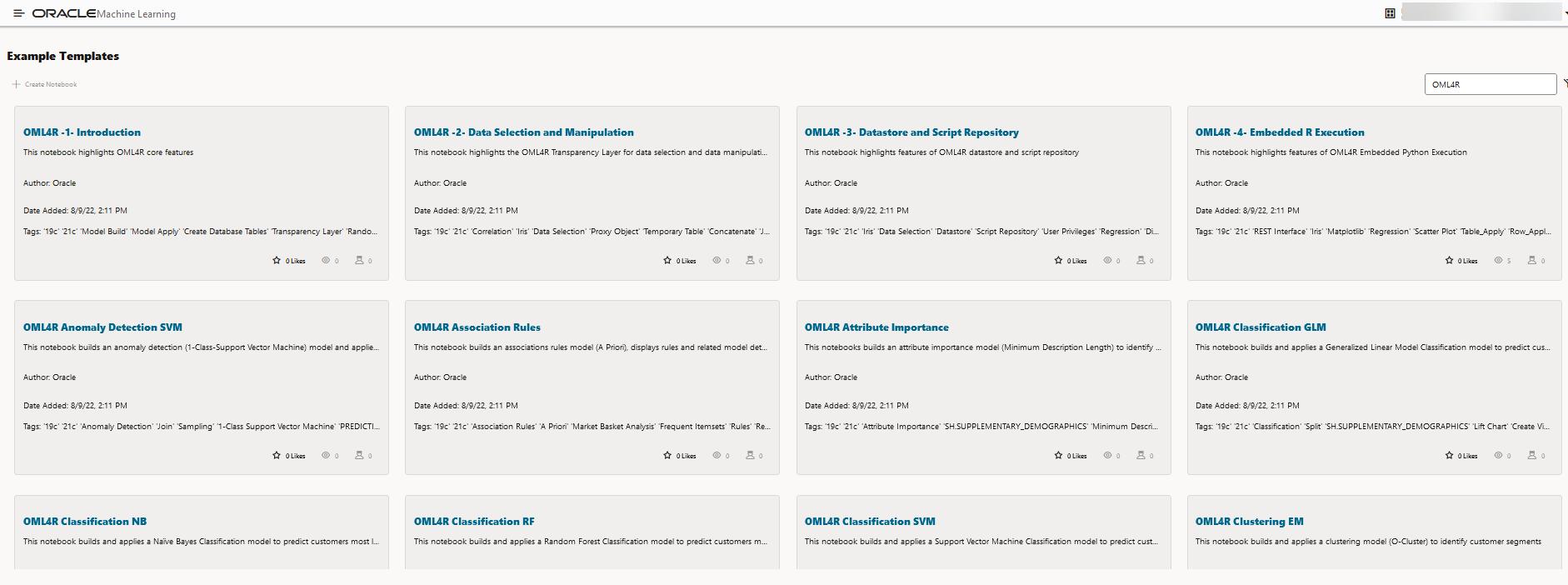

Figure 9-3 Oracle Machine Learning for R Example Templates

- OML4R-2: Data Selection and Manipulation: Use this notebook to understand the features of the transparency layer involving data selection and manipulation.

- OML4R-3: Datastore and Script Repository: Use this notebook to understand the datastore and script repository features of OML4R.

- OML4R-4: Embedded R Execution: Use this notebook to understand OML4R embedded R execution. First, a linear model is built directly in R. Then a user-defined R function is created to build the linear model, the function is saved to the script repository, and the data is scored in parallel using R engines spawned by the Oracle Autonomous AI Database environment. The notebook also demonstrates how to call these scripts using the SQL interface and the REST API for embedded R execution.
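The embedded execution flow described above (fit a model, wrap the work in a named user-defined function, save it to a script repository, then score data in parallel) can be pictured with a plain-Python analogy. This is a minimal sketch under stated assumptions, not OML4R code. The dictionary standing in for the script repository, the function names, and the thread pool standing in for the R engines that the database spawns are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

# Hypothetical stand-in for a script repository: name -> user-defined function.
script_repository = {}


def register(name, func):
    """Save a user-defined function under a name."""
    script_repository[name] = func


def fit_linear_model(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


def score_chunk(model, chunk):
    """Apply a fitted (intercept, slope) model to one chunk of x values."""
    intercept, slope = model
    return [intercept + slope * x for x in chunk]


def run_in_parallel(name, model, data, n_workers=4):
    """Look up a function by name and apply it to chunks of data concurrently.

    Threads stand in for the database-spawned R engines in this analogy.
    """
    func = script_repository[name]
    size = max(1, -(-len(data) // n_workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        # map() returns results in chunk order, so the output lines up with data.
        parts = list(pool.map(lambda chunk: func(model, chunk), chunks))
    return [y for part in parts for y in part]


register("score_lm", score_chunk)
model = fit_linear_model([1, 2, 3, 4], [3, 5, 7, 9])    # (1.0, 2.0)
scores = run_in_parallel("score_lm", model, [0, 1, 2, 3, 4, 5])
```

The threads only illustrate fanning the work out and recombining the results in order. In OML4R, the parallel scoring runs in R engines spawned by the Oracle Autonomous AI Database environment.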

Note:

To use the SQL API for embedded R execution, a user-defined R function must reside in the OML4R script repository, and an Oracle Machine Learning (OML) cloud account USERNAME, PASSWORD, and URL must be provided to obtain an authentication token.

- OML4R Anomaly Detection Support Vector Machine (SVM): Use this notebook to build a One-Class SVM model and then use it to flag unusual or suspicious records.

- OML4R Association Rules Apriori: Use this notebook to build association rules models using the A Priori algorithm with data from the SH schema (SH.SALES). All computation occurs inside Oracle Autonomous AI Database.

- OML4R Attribute Importance Minimum Description Length (MDL): Use this notebook to compute Attribute Importance, using the Minimum Description Length algorithm, on the SH schema data. All functionality runs inside Oracle Autonomous AI Database. Oracle Machine Learning supports Attribute Importance to identify key factors (attributes, predictors, or variables) that have the most influence on a target attribute.

- OML4R Classification Generalized Linear Model (GLM): Use this notebook to predict customers most likely to be positive responders to an Affinity Card loyalty program. This notebook builds and applies a classification generalized linear model using the Sales History (SH) schema data. All processing occurs inside Oracle Autonomous AI Database.

- OML4R Classification Naive Bayes (NB): Use this notebook to predict customers most likely to be positive responders to an Affinity Card loyalty program. This notebook builds and applies a classification Naive Bayes model using the Sales History (SH) schema data. All processing occurs inside Oracle Autonomous AI Database.

- OML4R Classification Random Forest (RF): Use this notebook to apply the Random Forest algorithm for classification in OML4R and predict customers most likely to be positive responders to an Affinity Card loyalty program.

- OML4R Classification Modeling to Predict Target Customers using Support Vector Machine (SVM): Use this notebook to build a classification model that predicts target customers using the Support Vector Machine algorithm.

- OML4R Clustering - Identifying Customer Segments using Expectation Maximization Clustering: Use this notebook to understand how to identify natural clusters of customers using the CUSTOMERS data set from the SH schema using the unsupervised learning Expectation Maximization (EM) algorithm. The data exploration, preparation, and machine learning run inside Oracle Autonomous AI Database.

- OML4R Clustering - Identifying Customer Segments using K-Means Clustering: Use this notebook to understand how to identify natural clusters of customers using the CUSTOMERS data set from the SH schema and the unsupervised learning K-Means (KM) algorithm. The data exploration, preparation, and machine learning run inside Oracle Autonomous AI Database.

- OML4R Clustering - Orthogonal Partitioning Clustering (OC): Use this notebook to understand how to identify natural clusters of customers using the CUSTOMERS data set from the SH schema and the unsupervised learning Orthogonal Partitioning Clustering (O-Cluster) algorithm. The data exploration, preparation, and machine learning run inside Oracle Autonomous AI Database.

- OML4R Data Cleaning Outlier: Use this notebook to understand how to exclude records with outliers using OML4R.

- OML4R Data Cleaning - Recode Synonymous Values: Use this notebook to recode synonymous values using OML4R.

- OML4R Data set Creation: Use this notebook to load the sample data sets MTCARS and IRIS and to import them into your Oracle Autonomous AI Database instance using the ore.create() function.
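The OML4R Data Cleaning Outlier and Data Cleaning - Recode Synonymous Values entries above apply simple row-level rules. The following plain-Python sketch shows those rules on in-memory rows rather than through the OML4R transparency layer. The 5% tail mirrors the one described for the OML4Py Data Cleaning Outlier Removal template. Trimming by rank with floor rounding, the synonym map, and the function names are assumptions for illustration.

```python
def remove_outliers(rows, column, tail_pct=5):
    """Drop rows whose `column` value falls in the bottom or top tail_pct
    percent of the sorted values. Rows tied with a boundary value are kept."""
    if not rows:
        return []
    values = sorted(row[column] for row in rows)
    k = len(values) * tail_pct // 100  # number of values trimmed from each end
    low, high = values[k], values[len(values) - 1 - k]
    return [row for row in rows if low <= row[column] <= high]


def recode_synonyms(rows, column, synonyms):
    """Return copies of rows with synonymous spellings in `column` replaced
    by one canonical value; unmapped values are left unchanged."""
    return [{**row, column: synonyms.get(row[column], row[column])} for row in rows]


# Hypothetical sample data: incomes 1..20 and inconsistent gender labels.
incomes = [{"id": i, "income": v} for i, v in enumerate(range(1, 21))]
trimmed = remove_outliers(incomes, "income")  # k = 1, so 1 and 20 are dropped

people = [{"gender": "M"}, {"gender": "Male"}, {"gender": "female"}]
recoded = recode_synonyms(people, "gender", {"Male": "M", "female": "F"})
```

Because k is rounded down, a data set with fewer than 20 rows loses nothing at a 5% tail. A production rule might use a different percentile definition.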

Note:

The following example template notebooks, prefixed with an asterisk (*), use the CUSTOMER_INSURANCE_LTV data set. This data set is generated by the OML Run-me-first notebook, so you must first run the OML Run-me-first notebook, which is available in Example Templates.

- * OML4R Data Cleaning Missing Data: Use this notebook to perform missing value replacement using OML4R.

- * OML4R Data Cleaning Duplicate Removal: Use this notebook to remove duplicate records using OML4R.

- * OML4R Data Transformation Binning: Use this notebook to bin numerical columns using OML4R.

- * OML4R Data Transformation Categorical Record: Use this notebook to recode a categorical string variable to a numeric variable and string-to-string recoding using OML4R.

- * OML4R Data Transformation: Normalization and Scaling: Use this notebook to normalize and scale data using OML4R.

- * OML4R Data Transformation: One-Hot Encoding: Use this notebook to perform one-hot encoding using OML4R.

- * OML4R Feature Selection - Supervised Algorithm: Use this notebook to perform feature selection using in-database supervised algorithms using OML4R. This notebook demonstrates how to build a Random Forest model to predict whether the customer would buy insurance or not, and then use Feature Importance to perform feature selection.

- * OML4R Feature Selection Using Summary Statistics: Use this notebook to perform feature selection using summary statistics with OML4R. This notebook demonstrates how to use OML4R to select features based on the number of distinct values, the number of null values, and the proportion of constant values.

- OML4R Feature Engineering Aggregation: Use this notebook to perform aggregation for min, max, mean, and count using OML4R. This template uses the SALES table in the SH schema, and shows how to create features by aggregating the amount sold for each customer and product pair.

- OML4R Feature Extraction Explicit Semantic Analysis (ESA) Wiki Model: This notebook uses the Wikipedia model as an example. Use this notebook to learn how to use the Oracle Machine Learning for R function ore.odmESA to extract text-based features from a corpus of documents and perform document similarity comparisons. All processing occurs inside Oracle Autonomous AI Database.

Note:

The pre-built Wikipedia model must be installed in your Autonomous AI Database instance to run this notebook.

- OML4R Data Transformation Date Data types: Use this notebook to perform various operations on date and time data in database table proxy objects with Oracle Machine Learning for R.

- OML4R Feature Extraction Singular Value Decomposition (SVD): Use this notebook to apply in-database SVD for feature extraction. This notebook uses the Oracle Machine Learning for R function ore.odmSVD to create a model that uses the Singular Value Decomposition (SVD) algorithm for feature extraction.

- OML4R Partitioned Model Support Vector Machine (SVM): Use this notebook to build an SVM model, partitioned on customer gender, that predicts the number of years a customer has lived at their current residence. The model is then used to predict the target, and then to predict the target with prediction details.

- OML4R Regression Generalized Linear Model (GLM): Use this notebook to understand how to predict numerical values using multiple regression. This notebook uses the Generalized Linear Model algorithm.

- OML4R Regression Neural Network (NN): Use this notebook to understand how to predict numerical values using multiple regression. This notebook uses the Neural Network algorithm.

- OML4R Regression Support Vector Machine (SVM): Use this notebook to understand how to predict numerical values using multiple regression. This notebook uses the Support Vector Machine algorithm.

- OML4R REST API: Use this notebook to understand how to use the OML4R REST API to call user-defined R functions, and to list those available in the R script repository.

Note:

To run a script, it must reside in the R script repository. An Oracle Machine Learning cloud service account user name and password must be provided for authentication.

- OML4R Statistical Function: Use this notebook to understand and use various statistical functions. The notebook uses data from the SH schema through the OML4R transparency layer.

- OML4R Text Mining Support Vector Machine (SVM): Use this notebook to understand how to use unstructured text data to build machine learning models, leverage Oracle Text, use Oracle Machine Learning in-database algorithms, and extract predictive features from text columns.

This notebook builds a Support Vector Machine (SVM) model to predict customers that are most likely to be positive responders to an Affinity Card loyalty program. The data includes a text column that contains user-generated comments.
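The asterisked Missing Data and Duplicate Removal entries in the OML4R list above cover two common clean-up steps. The following plain-Python sketch (not OML4R code) fills missing values with the column mean for numeric columns and the mode for categorical columns, which is the rule this chapter gives for automatic data preparation. It also drops exact duplicate rows while keeping the first occurrence. Representing missing values as None and the function names are assumptions.

```python
from collections import Counter
from statistics import mean


def drop_duplicates(rows):
    """Keep the first occurrence of each identical row, preserving order."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))  # order-independent, hashable row key
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def fill_missing(rows):
    """Replace None with the column mean (numeric columns) or the most
    frequent value (other columns)."""
    columns = {key for row in rows for key in row}
    fills = {}
    for col in columns:
        present = [row[col] for row in rows if row.get(col) is not None]
        if not present:
            continue  # nothing to impute from; the column stays None
        if all(isinstance(v, (int, float)) for v in present):
            fills[col] = mean(present)
        else:
            # most_common breaks ties by first occurrence
            fills[col] = Counter(present).most_common(1)[0][0]
    return [
        {col: (fills.get(col) if row.get(col) is None else row[col]) for col in columns}
        for row in rows
    ]


# Hypothetical sample rows; None marks a missing value.
rows = [
    {"age": 30, "state": "CA"},
    {"age": None, "state": "NY"},
    {"age": 50, "state": None},
    {"age": 30, "state": "CA"},
    {"age": 40, "state": "CA"},
]
deduped = drop_duplicates(rows)   # the repeated first row is dropped
filled = fill_missing(deduped)    # age mean of 30, 50, 40; state mode "CA"
```

Deduplicating before imputing keeps repeated rows from skewing the mean and mode.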

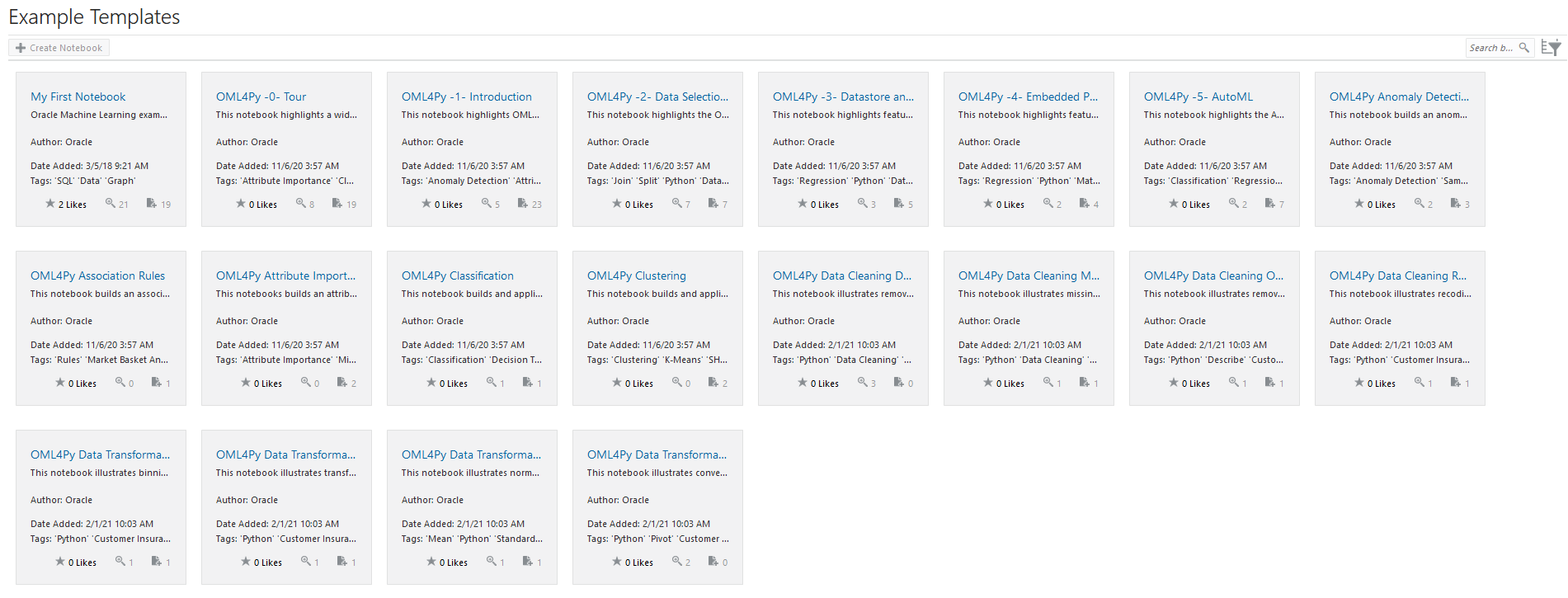

Oracle Machine Learning for Python Example Templates

Figure 9-4 Oracle Machine Learning for Python Example Templates

- My First Notebook: Use the My First Notebook notebook for basic machine learning functions, data selection, and data viewing. This template uses the SH schema data.

- OML4Py -0- Tour: This notebook is the first in a series (0 through 5) intended to introduce you to the range of OML4Py functionality through short examples.

- OML4Py -1- Introduction: This notebook provides an overview of how to load the OML library, create database tables, use the transparency layer, rank attributes for predictive value using the in-database attribute importance algorithm, build predictive models, and score data using these models.

- OML4Py -2- Data Selection and Manipulation: Use this notebook to learn how to use the transparency layer for data selection and manipulation.

- OML4Py -3- Datastores: Use this notebook to learn how to work with datastores: move objects between a datastore and a Python session, manage datastore privileges, save model objects and Python objects in a datastore, delete datastores, and so on.

- OML4Py -4- Embedded Python Execution: Use this notebook to understand embedded Python execution. In this notebook, a linear model is built directly in Python, and then a function is created that uses Python engines spawned by the Autonomous AI Database environment.

- OML4Py -5- AutoML: Use this notebook to understand the AutoML workflow in OML4Py. In this notebook, the WINE data set from scikit-learn is used. Here, AutoML is used for classification on the target column, and for regression on the alcohol column.

Note:

The following example template notebooks, prefixed with an asterisk (*), use the CUSTOMER_INSURANCE_LTV_PY data set. This data set contains duplicate values artificially generated by the OML4SQL Noise notebook, so you must run the OML4SQL Noise notebook before running these notebooks.

- * OML4Py Data Cleaning Duplicates Removal: Use this notebook to understand how to remove duplicate records using OML4Py. This notebook uses the customer insurance lifetime value data set which contains customer financial information, lifetime value, and whether or not the customer bought insurance.

- * OML4Py Data Cleaning Missing Data: Use this notebook to understand how to fill in missing values using OML4Py. This notebook uses the customer insurance lifetime value data set which contains customer financial information, lifetime value, and whether or not the customer bought insurance.

- * OML4Py Data Cleaning Recode Synonymous Values: Use this notebook to understand how to recode synonymous values using OML4Py. This notebook uses the customer insurance lifetime value data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance.

- OML4Py Data Cleaning Outlier Removal: Use this notebook to understand how to clean data to remove outliers. This notebook uses the CUSTOMER_INSURANCE_LTV data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance. In the CUSTOMER_INSURANCE_LTV data set, the focus is on numerical values and on removing records with values in the top and bottom 5%.

- OML4Py Data Transformation Binning: Use this notebook to understand how to bin a numerical column and visualize the distribution.

- OML4Py Data Transformation Categorical - Convert Categorical Variables to Numeric Variables: Use this notebook to understand how to convert categorical variables to numeric variables using OML4Py. The notebook demonstrates how to convert a categorical variable by coding each distinct level or value as an integer.

- OML4Py Data Transformation Normalization and Scaling: Use this notebook to understand how to normalize and scale data using z-score (mean and standard deviation), min-max scaling, and log scaling.
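The Binning entry and the three scaling methods named in the Normalization and Scaling entry (z-score, min-max, and log scaling) reduce to short formulas. This sketch works on a plain Python list, not an OML DataFrame. Using the population standard deviation, log(1 + x) for log scaling, and equal-width bins are assumptions, since the templates' exact choices are not stated here.

```python
import math
from statistics import mean, pstdev


def z_score(values):
    """(x - mean) / population standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Rescale linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def log_scale(values):
    """log(1 + x): compresses large values; requires x > -1."""
    return [math.log1p(v) for v in values]


def equal_width_bins(values, n_bins):
    """Bin index 0..n_bins-1 for each value, using equal-width bins over [min, max]."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    # The maximum value would land at index n_bins, so clamp it into the last bin.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


z = z_score([2, 4, 4, 4, 5, 5, 7, 9])   # mean 5, standard deviation 2
bins = equal_width_bins([0, 5, 10], 4)  # bin width 2.5
```

A constant column makes the standard deviation and the range zero, so z_score, min_max, and equal_width_bins would raise ZeroDivisionError. Real code should guard against that case.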

Note:

When building or applying a model using in-database Oracle Machine Learning algorithms, automatic data preparation normalizes data automatically as needed by specific algorithms.

- OML4Py Data Transformation One Hot Encoding: Use this notebook to understand how to perform one hot encoding using OML4Py. Many machine learning algorithms cannot work with categorical data directly, so categorical data must be converted to numbers. This notebook uses the customer insurance lifetime value data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance.
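The Convert Categorical Variables to Numeric Variables and One Hot Encoding entries above describe two standard encodings of a categorical column. A minimal plain-Python sketch of both follows. Assigning integer codes in sorted order and naming indicator columns prefix_value are assumptions, not the templates' conventions.

```python
def integer_codes(values):
    """Map each distinct category to an integer code, assigned in sorted order."""
    mapping = {cat: code for code, cat in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


def one_hot(values, prefix):
    """Expand one categorical column into 0/1 indicator columns named prefix_value."""
    categories = sorted(set(values))
    return [{f"{prefix}_{cat}": int(v == cat) for cat in categories} for v in values]


codes, mapping = integer_codes(["red", "blue", "red", "green"])
encoded = one_hot(["a", "b", "a"], "c")
```

Integer codes imply an ordering that most categories do not have. This is one reason one-hot indicators are often preferred for algorithms that treat inputs as numbers.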

Note:

If you plan to use the in-database algorithms, one hot encoding is automatically applied for those algorithms requiring it. The in-database algorithms automatically explode the categorical columns and fit the model on the prepared data internally.

- OML4Py Anomaly Detection: Use this notebook to detect anomalous records, customers, or transactions in your data. This template builds a model using the unsupervised learning algorithm 1-Class Support Vector Machine (SVM).

- OML4Py Association Rules: Use this notebook for market basket analysis of your data, or to detect co-occurring items, failures, or events in your data. This template uses the Apriori Association Rules model with the SH schema data (SH.SALES).

- OML4Py Attribute Importance: Use this notebook to identify key attributes that have maximum influence over the target attribute. The target attribute in the build data of a supervised model is the attribute that you want to predict. The template builds an Attribute Importance model using the SH schema data.

- OML4Py Classification: Use this notebook for predicting customer behavior and similar predictions. The template builds and applies a Decision Tree classification model based on the relationships between the predictor values and the target values. The template uses the SH schema data.

- OML4Py Clustering: Use this notebook to identify natural clusters in your data. The notebook template uses the unsupervised learning k-Means algorithm on the SH schema data.

- OML4Py Data Transformation: Use this notebook to convert categorical variables to numeric variables using OML4Py. This template shows how to convert a categorical variable by coding each distinct level or value as an integer.

- OML4Py Data Set Creation: Use this notebook to create an OML data frame from a scikit-learn (sklearn) data set using OML4Py.

- OML4Py Feature Engineering Aggregation: Use this notebook template to create features by aggregation using OML4Py. This notebook uses the SALES table in the SH schema, which contains transaction records for each customer and the products purchased. Features are created by aggregating the amount sold for each customer and product pair.

- OML4Py Feature Selection Supervised Algorithm Based: Use this notebook to perform feature selection using in-database supervised algorithms with OML4Py.

- OML4Py Feature Selection Summary Statistics: Use this notebook template to perform feature selection using summary statistics with OML4Py. The notebook shows how to use OML4Py to select features based on the number of distinct values, the number of null values, and the proportion of constant values. The data set used here, CUSTOMER_INSURANCE_LTV_PY, has null values artificially generated by the OML4SQL Noise notebook. You must first run the OML4SQL Noise notebook before running the OML4Py Feature Selection Summary Statistics notebook.

- OML4Py Partitioned Model: Use this notebook to build partitioned models. This notebook builds an SVM model, partitioned on customer gender, that predicts the number of years a customer has lived at their current residence. It uses the model to predict the target, and then to predict the target with prediction details.

Oracle Machine Learning can automatically build an ensemble model composed of multiple sub-models, one for each data partition. The sub-models exist and are used as one model, which simplifies scoring because you reference only the top-level model. The system chooses the appropriate sub-model based on the partition values in the row of data being scored. Partitioned models can achieve better accuracy through multiple targeted models.

- OML4Py REST API: Use this notebook to call embedded Python execution. OML4Py contains a REST API to run user-defined Python functions saved in the script repository. The REST API is useful when separation between the client and the database server is beneficial. Use the OML4Py REST API to build, train, deploy, and manage scripts.

Note:

To run a script, it must reside in the OML4Py script repository. An Oracle Machine Learning cloud service account user name and password must be provided for authentication.

- OML4Py Regression Modeling to Predict Numerical Values: Use this notebook to predict numerical values using multiple regression.

- OML4Py Statistical Functions: Use this notebook to work with various statistical functions. The statistical functions use data from the SH schema through the OML4Py transparency layer.

- OML4Py Text Mining: Use this notebook to build models using the Text Mining capability in Oracle Machine Learning.

In this notebook, an SVM model is built to predict customers most likely to be positive responders to an Affinity Card loyalty program. The data includes a text column that contains user-generated comments. With a few additional specifications, the algorithm automatically uses the text column and builds the model on both the structured data and unstructured text.
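The partitioned-model behavior described under OML4Py Partitioned Model can be pictured with a small routing sketch: one sub-model per partition, with the sub-model chosen from the partition value of each scored row. This is plain Python, not the OML4Py API. The class, its methods, the column names, and the use of a per-partition mean as a placeholder sub-model (where the template fits an SVM) are all hypothetical.

```python
from collections import defaultdict
from statistics import mean


class PartitionedModel:
    """Top-level model holding one sub-model per value of a partition column."""

    def __init__(self, partition_column, target_column):
        self.partition_column = partition_column
        self.target_column = target_column
        self.sub_models = {}

    def fit(self, rows):
        # Group the training rows by partition value.
        groups = defaultdict(list)
        for row in rows:
            groups[row[self.partition_column]].append(row[self.target_column])
        # Placeholder sub-model: predict the mean target value of its partition.
        self.sub_models = {key: mean(vals) for key, vals in groups.items()}
        return self

    def predict(self, rows):
        # Callers score against the top-level model only; each row is routed
        # to the sub-model for its own partition value (KeyError if unseen).
        return [self.sub_models[row[self.partition_column]] for row in rows]


# Hypothetical training rows: years at residence, partitioned on gender.
train = [
    {"gender": "F", "years": 4},
    {"gender": "F", "years": 6},
    {"gender": "M", "years": 2},
    {"gender": "M", "years": 4},
]
model = PartitionedModel("gender", "years").fit(train)
predictions = model.predict([{"gender": "M"}, {"gender": "F"}])
```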

Oracle Machine Learning for SQL Example Templates

Figure 9-5 Oracle Machine Learning for SQL Example Templates

- OML4SQL Anomaly Detection: Use this notebook to detect unusual or rare occurrences. Oracle Machine Learning supports anomaly detection to identify rare or unusual records (customers, transactions, and so on) in the data using the semi-supervised learning algorithm One-Class Support Vector Machine. This notebook builds a One-Class SVM model and then uses it to flag unusual or suspicious records. The entire machine learning methodology runs inside Oracle Autonomous AI Database.

- OML4SQL Association Rules: Use this notebook to apply the Association Rules machine learning technique, also known as Market Basket Analysis. It discovers co-occurring items, states that lead to failures, or non-obvious events. This notebook builds association rules models using the Apriori algorithm with the SH.SALES data from the SH schema. All computation occurs inside Oracle Autonomous AI Database.

- OML4SQL Attribute Importance - Identify Key Factors: Use this notebook to identify key factors, also known as attributes, predictors, or variables, that have the most influence on a target attribute. This notebook builds an Attribute Importance model, which uses the Minimum Description Length algorithm, on the SH schema data. All functionality runs inside Oracle Autonomous AI Database.

- OML4SQL Classification - Predicting Target Customers: Use this notebook to predict customers that are most likely to be positive responders to an Affinity Card loyalty program. This notebook builds and applies classification decision tree models using the SH schema data. All processing occurs inside Oracle Autonomous AI Database.

- OML4SQL Clustering - Identifying Customer Segments: Use this notebook to identify natural clusters of customers. Oracle Machine Learning supports clustering using several algorithms, including k-Means, O-Cluster, and Expectation Maximization. This notebook applies the unsupervised k-Means algorithm to the CUSTOMERS data set from the SH schema. The data exploration, preparation, and machine learning run inside Oracle Autonomous AI Database.

- OML4SQL Data Cleaning - Removing Duplicates: Use this notebook to remove duplicate records using Oracle SQL. The notebook uses the customer insurance lifetime value data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance. The data set CUSTOMER_INSURANCE_LTV_SQL has duplicate values generated by the OML4SQL Noise notebook.

Note:

You must first run the OML4SQL Noise notebook before running the OML4SQL Data Cleaning - Removing Duplicates notebook.

- OML4SQL Data Cleaning - Missing Data: Use this template to replace missing values using Oracle SQL and the DBMS_DATA_MINING_TRANSFORM package. The data set CUSTOMER_INSURANCE_LTV_SQL has missing values artificially generated by the OML4SQL Noise notebook. You must first run the OML4SQL Noise notebook before running the OML4SQL Data Cleaning - Missing Data notebook.

Note:

When building or applying a model using in-database Oracle Machine Learning algorithms, you may not need this operation as a separate step if automatic data preparation is enabled. Automatic data preparation replaces missing values of numerical attributes with the mean and missing values of categorical attributes with the mode.

- OML4SQL Data Cleaning Outlier Removal: Use this notebook to remove outliers using Oracle SQL and the DBMS_DATA_MINING_TRANSFORM package. The notebook uses the customer insurance lifetime value data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance. It focuses on the numeric values in the data set CUSTOMER_INSURANCE_LTV and removes records with values in the top and bottom 5%.

- OML4SQL Data Cleaning Recode Synonymous Values: Use this notebook to recode synonymous values of a column using Oracle SQL. The notebook uses the customer insurance lifetime value data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance. The data set CUSTOMER_INSURANCE_LTV_SQL has recoded values generated by the OML4SQL Noise notebook. You must first run the OML4SQL Noise notebook before running the OML4SQL Data Cleaning - Recode Synonymous Values notebook.

- OML4SQL Data Transformation Binning: Use this notebook to bin numeric columns using Oracle SQL and the DBMS_DATA_MINING_TRANSFORM package. This notebook shows how to bin a numerical column and visualize the distribution.

- OML4SQL Data Transformation Categorical: Use this notebook to convert a categorical variable to a numeric variable using Oracle SQL. The notebook shows two techniques. It codes each distinct level (value) of a categorical variable as an integer, and it creates an indicator variable based on a simple predicate.

- OML4SQL Data Transformation Normalization and Scale: Use this notebook to normalize and scale data using Oracle SQL and the DBMS_DATA_MINING_TRANSFORM package. The notebook shows how to normalize data using z-score (mean and standard deviation), min-max scaling, and log scaling. When building or applying a model using in-database Oracle Machine Learning algorithms, automatic data preparation normalizes data automatically when specific algorithms require it.

- OML4SQL Dimensionality Reduction - Non-negative Matrix Factorization: Use this notebook to perform dimensionality reduction using the in-database non-negative matrix factorization algorithm. This notebook shows how to convert a table with many columns to a reduced feature set. Non-negative Matrix Factorization produces non-negative coefficients.

- OML4SQL Dimensionality Reduction - Singular Value Decomposition: Use this notebook to perform dimensionality reduction using the in-database singular value decomposition (SVD) algorithm.

- OML4SQL: Exporting Serialized Models: Use this notebook to export serialized models to Oracle Cloud Object Storage. This notebook creates Oracle Machine Learning regression and classification models and exports them in a serialized format, so that they can be scored using the Oracle Machine Learning (OML) Services REST API. OML Services provides REST API endpoints hosted on Oracle Autonomous AI Database. These endpoints let you store Oracle Machine Learning models along with their metadata and create scoring endpoints for the models. The REST API for OML Services supports both Oracle Machine Learning models and ONNX format models, and enables cognitive text functionality.

- OML4SQL Feature Engineering Aggregation and Time: Use this notebook to generate aggregated features and to extract date and time features using Oracle SQL. The notebook shows how to extract date and time features from the TIME_ID field.

- OML4SQL Feature Selection Algorithm Based: Use this notebook to perform feature selection using in-database supervised algorithms. The notebook first builds a random forest model to predict whether the customer will buy insurance, and uses the resulting feature importance values for feature selection. It then builds a decision tree model for the same classification task and obtains its split nodes. For the top splitting nodes with the highest support, the features associated with those nodes are selected.

- OML4SQL Feature Selection Unsupervised Attribute Importance: Use this notebook to perform feature selection using the in-database unsupervised algorithm Expectation Maximization (EM). This notebook illustrates the CREATE_MODEL procedure, which uses a settings table. The other notebooks use the CREATE_MODEL2 procedure instead.

- OML4SQL Feature Selection Using Summary Statistics: Use this notebook to perform feature selection based on summary statistics using Oracle SQL. The data set CUSTOMER_INSURANCE_LTV_SQL has null values artificially generated by the OML4SQL Noise notebook. You must first run the OML4SQL Noise notebook before running the OML4SQL Feature Selection Using Summary Statistics notebook.

- OML4SQL Noise: Use this notebook to replace normal values with null values and to add duplicate rows. This notebook prepares the data set used by the Data Preparation notebooks, in particular those for data cleaning and feature selection. It uses the customer insurance lifetime value data set, which contains customer financial information, lifetime value, and whether or not the customer bought insurance.

Note:

Run the OML4SQL Noise notebook before the Data Preparation notebooks.

- OML4SQL Partitioned Model: Use this notebook to build partitioned models. Partitioned models can potentially achieve better accuracy through multiple targeted models. The notebook builds an SVM model to predict the number of years a customer has lived at their residence, partitioned on customer gender. The model is used first to predict the target, and then to predict the target with prediction details.

- OML4SQL Text Mining: Use this notebook to build models using text mining capability. Oracle Machine Learning handles both structured data and unstructured text data. By leveraging Oracle Text, Oracle Machine Learning in-database algorithms automatically extract predictive features from the text column.

This notebook builds an SVM model to predict customers most likely to be positive responders to an Affinity Card loyalty program. The data comes with a text column that contains user-generated comments. With a few additional specifications, the algorithm automatically uses the text column and builds the model on both the structured data and unstructured text.

- OML4SQL Regression: Use this notebook to predict numerical values. This template uses multiple regression algorithms such as Generalized Linear Models (GLM).

- OML4SQL Statistical Function: Use this notebook to work with descriptive and comparative statistical functions. The notebook template uses SH schema data.

- OML4SQL Time Series: Use this notebook to build time series models on your time series data for forecasting. This notebook is based on the Exponential Smoothing algorithm. The sales forecasting example in this notebook is based on the SH.SALES data. All computations are done inside Oracle Autonomous AI Database.
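The Normalization and Scale and the Outlier Removal templates do their work in Oracle SQL with the DBMS_DATA_MINING_TRANSFORM package. As a rough illustration of the arithmetic involved, the following Python sketch implements four operations:

- z-score normalization
- min-max scaling
- log scaling
- trimming values in the top and bottom 5%

It is not the DBMS_DATA_MINING_TRANSFORM API and not the notebooks' code. The function names are hypothetical. The sketch assumes a non-empty, non-constant numeric column, strictly positive values for log scaling, and the population standard deviation for z-scores. Whether the package uses the population or the sample standard deviation is not stated here.

```python
import math
from statistics import mean, pstdev


def z_score(values):
    """Center on the mean and divide by the population standard deviation.

    Assumes a non-constant column (standard deviation > 0).
    """
    mu = mean(values)
    sigma = pstdev(values)
    return [(v - mu) / sigma for v in values]


def min_max(values):
    """Rescale linearly so the minimum maps to 0 and the maximum maps to 1.

    Assumes a non-constant column (max > min).
    """
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def log_scale(values):
    """Natural-log transform; assumes every value is strictly positive."""
    return [math.log(v) for v in values]


def trim_outliers(values, fraction=0.05):
    """Drop values outside the cut-offs at the bottom and top `fraction` of the data.

    The cut-offs are the k-th smallest and k-th largest values, where
    k = floor(n * fraction). Values equal to a cut-off are kept.
    """
    if not values:
        return []
    ordered = sorted(values)
    n = len(ordered)
    k = int(n * fraction)  # number of positions cut from each tail
    lower, upper = ordered[k], ordered[n - 1 - k]
    return [v for v in values if lower <= v <= upper]


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(min_max(data))  # [0.0, 0.25, 0.5, 0.75, 1.0]
    kept = trim_outliers(list(range(1, 101)))
    print(len(kept), min(kept), max(kept))  # 90 6 95
```

In the database, DBMS_DATA_MINING_TRANSFORM performs these operations inside Oracle Autonomous AI Database. The Python version only makes the formulas explicit.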

Parent topic: Use the Example Templates