9.1 About Automated Machine Learning

Automated Machine Learning (AutoML) provides built-in data science expertise about data analytics and modeling that you can employ to build machine learning models.

Any modeling problem for a specified data set and prediction task involves a sequence of data cleansing and preprocessing, algorithm selection, and model tuning tasks. Each of these steps requires data science expertise to help guide the process to an efficient final model. Automated Machine Learning (AutoML) automates this process with its built-in data science expertise.

OML4Py has the following AutoML capabilities:

- Automated algorithm selection that selects the appropriate algorithm from the supported machine learning algorithms

- Automated feature selection that reduces the size of the original feature set to speed up model training and tuning, while possibly also increasing model quality

- Automated tuning of model hyperparameters, which selects the model with the highest score on the metric that the user chooses from among several supported metrics

AutoML performs those common modeling tasks automatically, with less effort and potentially better results. It also leverages in-database algorithm parallel processing and scalability to minimize runtime and produce high-quality results.

Note:

As the fit method of the machine learning classes does, the AutoML functions reduce, select, and tune provide a case_id parameter that you can use to achieve repeatable data sampling and data shuffling during model building.

The AutoML functionality is also available in a no-code user interface alongside OML Notebooks on Oracle Autonomous Database. For more information, see Oracle Machine Learning AutoML User Interface.

Automated Machine Learning Classes and Algorithms

The Automated Machine Learning classes are the following.

| Class | Description |
|---|---|
| oml.automl.AlgorithmSelection | Using only the characteristics of the data set and the task, automatically selects the best algorithms from the set of supported Oracle Machine Learning algorithms. Supports classification and regression functions. |
| oml.automl.FeatureSelection | Uses meta-learning to quickly identify the most relevant feature subsets given a training data set and an Oracle Machine Learning algorithm. Supports classification and regression functions. |
| oml.automl.ModelTuning | Uses a highly parallel, asynchronous gradient-based hyperparameter optimization algorithm to tune the algorithm hyperparameters. Supports classification and regression functions. |
| oml.automl.ModelSelection | Selects the best Oracle Machine Learning algorithm and then tunes that algorithm. Supports classification and regression functions. |
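The following is a minimal sketch of how these classes might be chained for a classification task. It assumes an active OML4Py database connection and that train_x and train_y are oml.DataFrame proxy objects. The function name run_automl_sketch, the chosen score metrics, and the parallel setting are illustrative assumptions; the constructor and method arguments reflect the OML4Py API as documented for each class, so verify them against your release.

```python
def run_automl_sketch(train_x, train_y):
    """Rank algorithms, reduce features, then tune the top-ranked algorithm.

    Hypothetical helper for illustration only. It must be called from a
    session that is already connected to the database with OML4Py.
    """
    # Imported lazily so that defining this function does not require OML4Py.
    from oml import automl

    # 1. Rank the supported algorithms and keep the top three.
    asel = automl.AlgorithmSelection(mining_function='classification',
                                     score_metric='f1_macro', parallel=4)
    ranking = asel.select(train_x, train_y, k=3)
    best_algo = ranking[0][0]  # abbreviation such as 'svm_gaussian'

    # 2. Reduce the feature set for the chosen algorithm.
    fs = automl.FeatureSelection(mining_function='classification',
                                 score_metric='accuracy', parallel=4)
    subset = fs.reduce(best_algo, train_x, train_y)
    reduced_x = train_x[:, subset]

    # 3. Tune the hyperparameters of the chosen algorithm.
    mt = automl.ModelTuning(mining_function='classification', parallel=4)
    results = mt.tune(best_algo, reduced_x, train_y)
    return results['best_model']
```

oml.automl.ModelSelection combines the first and third steps: under the same assumptions, automl.ModelSelection(mining_function='classification', parallel=4).select(train_x, train_y, k=1) returns a tuned model directly.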

The Oracle Machine Learning algorithms supported by AutoML are the following:

Table 9-1 Machine Learning Algorithms Supported by AutoML

| Algorithm Abbreviation | Algorithm Name |
|---|---|
| dt | Decision Tree |
| glm | Generalized Linear Model |
| glm_ridge | Generalized Linear Model with ridge regression |
| nb | Naive Bayes |
| nn | Neural Network |
| rf | Random Forest |
| svm_gaussian | Support Vector Machine with Gaussian kernel |
| svm_linear | Support Vector Machine with linear kernel |

Classification and Regression Metrics

The following tables list the scoring metrics supported by AutoML.

Table 9-2 Binary and Multiclass Classification Metrics

| Metric | Description |
|---|---|
| accuracy | Calculates the rate of correct classification of the target. |
| f1_macro | Calculates the f-score or f-measure, which is the harmonic mean of the precision and recall. The f1_macro takes the unweighted average of per-class scores. |
| f1_micro | Calculates the f-score or f-measure with micro-averaging in which true positives, false positives, and false negatives are counted globally. |
| f1_weighted | Calculates the f-score or f-measure with weighted averaging of per-class scores based on support (the fraction of true samples per class). Accounts for imbalanced classes. |
| precision_macro | Calculates the ability of the classifier to not label a sample incorrectly. The precision_macro takes the unweighted average of per-class scores. |
| precision_micro | Calculates the ability of the classifier to not label a sample incorrectly. Uses micro-averaging in which true positives, false positives, and false negatives are counted globally. |
| precision_weighted | Calculates the ability of the classifier to not label a sample incorrectly. Uses weighted averaging of per-class scores based on support (the fraction of true samples per class). Accounts for imbalanced classes. |
| recall_macro | Calculates the ability of the classifier to correctly label each class. The recall_macro takes the unweighted average of per-class scores. |
| recall_micro | Calculates the ability of the classifier to correctly label each class with micro-averaging in which the true positives, false positives, and false negatives are counted globally. |
| recall_weighted | Calculates the ability of the classifier to correctly label each class with weighted averaging of per-class scores based on support (the fraction of true samples per class). Accounts for imbalanced classes. |

See Also: Scikit-learn classification metrics
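To make the difference between macro, micro, and weighted averaging concrete, the following pure-Python sketch computes all three for precision on a small three-class example. The helper names and the sample labels are hypothetical, and the formulas follow the standard definitions used by scikit-learn rather than any OML4Py internals. Recall and f-score are averaged in the same way.

```python
from collections import Counter


def per_class_counts(y_true, y_pred):
    """Return {label: (tp, fp, fn)} for every label in y_true or y_pred."""
    pairs = list(zip(y_true, y_pred))
    counts = {}
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        counts[label] = (tp, fp, fn)
    return counts


def precision_averages(y_true, y_pred):
    """Return macro-, micro-, and weighted-averaged precision."""
    counts = per_class_counts(y_true, y_pred)
    support = Counter(y_true)  # number of true samples per class
    n = len(y_true)

    # Per-class precision; a class that is never predicted scores 0.
    per_class = {
        c: (tp / (tp + fp) if tp + fp else 0.0)
        for c, (tp, fp, _fn) in counts.items()
    }

    # Macro: unweighted mean of the per-class scores.
    macro = sum(per_class.values()) / len(per_class)

    # Micro: pool true and false positives over all classes first.
    total_tp = sum(tp for tp, _fp, _fn in counts.values())
    total_fp = sum(fp for _tp, fp, _fn in counts.values())
    micro = total_tp / (total_tp + total_fp) if total_tp + total_fp else 0.0

    # Weighted: per-class scores weighted by each class's share of samples.
    weighted = sum(per_class[c] * support[c] / n for c in per_class)

    return {"macro": macro, "micro": micro, "weighted": weighted}


# Imbalanced toy data: class "a" dominates.
y_true = ["a", "a", "a", "a", "b", "c"]
y_pred = ["a", "a", "b", "b", "b", "c"]
scores = precision_averages(y_true, y_pred)
print(scores)
```

On imbalanced data the three averages diverge, which is why the choice of score metric can change which model AutoML ranks highest.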

Table 9-3 Binary Classification Metrics Only

| Metric | Description |
|---|---|
| f1 | Calculates the f-score or f-measure, which is the harmonic mean of the precision and recall. This metric by default requires a positive target to be encoded as 1 to function as expected. |
| precision | Calculates the ability of the classifier to not label a sample positive (1) that is actually negative (0). |
| recall | Calculates the ability of the classifier to label all positive (1) samples correctly. |
| roc_auc | Calculates the Area Under the Receiver Operating Characteristic Curve (roc_auc) from prediction scores. See also the definition of receiver operating characteristic. |
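The binary metrics can be illustrated with a short pure-Python sketch that treats 1 as the positive class, matching the encoding noted for f1. The function names and sample data are hypothetical. The roc_auc helper uses the pairwise-ranking definition of the area under the curve (the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one), which is an equivalent formulation and not necessarily how OML4Py computes it.

```python
def binary_scores(y_true, y_pred, positive=1):
    """Return precision, recall, and f1 for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # f1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


def roc_auc(y_true, y_score, positive=1):
    """Area under the ROC curve via pairwise comparison of scores."""
    pos = [s for t, s in zip(y_true, y_score) if t == positive]
    neg = [s for t, s in zip(y_true, y_score) if t != positive]
    if not pos or not neg:
        raise ValueError("roc_auc needs at least one sample of each class")
    # A positive scored above a negative counts 1; a tie counts 0.5.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]               # hard class predictions
y_score = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]  # predicted probability of class 1

labels = binary_scores(y_true, y_pred)
auc = roc_auc(y_true, y_score)
print(labels, auc)
```

Note that precision, recall, and f1 are computed from class predictions, while roc_auc is computed from prediction scores.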

Table 9-4 Regression Metrics

| Metric | Description, Scikit-learn Equivalent, and Formula |

|---|---|

r2 |

Calculates the coefficient of determination (R squared). See also the definition of coefficient of determination. |

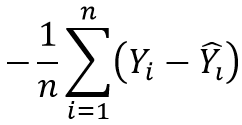

neg_mean_absolute_error |

Calculates the mean of the absolute difference of predicted and true targets (MAE). Formula:  Description of the illustration negmeanabserr.png |

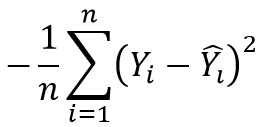

neg_mean_squared_error |

Calculates the mean of the squared difference of predicted and true targets. Formula:  Description of the illustration negmeansqerr.png |

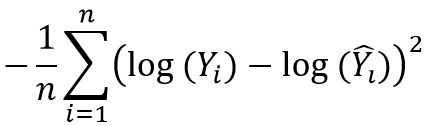

neg_mean_squared_log_error |

Calculates the mean of the difference in the natural log of predicted and true targets. Formula:  Description of the illustration negmeansqlogerr.png |

neg_median_absolute_error |

Calculates the median of the absolute difference between predicted and true targets. Formula: Description of the illustration negmedianabserr.png |

See Also: Scikit-learn regression metrics
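The regression metrics can be sketched in pure Python as follows. The function name and sample values are hypothetical, and the formulas follow the standard scikit-learn definitions. The neg_ prefix means that the error is negated so that a higher score is always better, which lets the same "highest score wins" rule apply to every metric.

```python
import math
import statistics


def regression_scores(y_true, y_pred):
    """Return r2 and the negated error metrics for two equal-length lists."""
    pairs = list(zip(y_true, y_pred))
    n = len(pairs)

    abs_err = [abs(p - t) for t, p in pairs]
    sq_err = [(p - t) ** 2 for t, p in pairs]
    # log1p(x) = ln(1 + x); targets must be greater than -1.
    sq_log_err = [(math.log1p(p) - math.log1p(t)) ** 2 for t, p in pairs]

    # r2 = 1 - (residual sum of squares / total sum of squares).
    # This sketch assumes y_true is not constant, so ss_tot is nonzero.
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)

    return {
        "r2": 1.0 - sum(sq_err) / ss_tot,
        "neg_mean_absolute_error": -sum(abs_err) / n,
        "neg_mean_squared_error": -sum(sq_err) / n,
        "neg_mean_squared_log_error": -sum(sq_log_err) / n,
        "neg_median_absolute_error": -statistics.median(abs_err),
    }


y_true = [3.0, 5.0, 2.0, 7.0]
y_pred = [2.0, 5.0, 4.0, 8.0]
reg = regression_scores(y_true, y_pred)
print(reg)
```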
