Shard-Level High Availability

Oracle Sharding is integrated with Oracle Database replication technologies for high availability and disaster recovery at the shard level. Learn how to use Oracle’s replication technologies to make your sharded databases highly available.

About Sharding and Replication

Oracle Sharding is tightly integrated with Oracle Data Guard and Oracle GoldenGate for replication and disaster recovery.

Note:

Oracle GoldenGate replication support for Oracle Sharding High Availability is deprecated in Oracle Database 21c.

Replication provides high availability, disaster recovery, and additional scalability for reads. A unit of replication can be a shard, a part of a shard, or a group of shards.

The replication topology in a sharded database is specified declaratively using GDSCTL command syntax. You can choose one of two technologies, Oracle Data Guard or Oracle GoldenGate, to replicate your data. Oracle Sharding automatically deploys the specified replication topology and enables data replication.

The availability of a sharded database is not affected by an outage or slowdown of one or more shards. Replication (Oracle Active Data Guard or Oracle GoldenGate) provides high availability for each individual shard, and it is automatically configured and deployed when the sharded database is created. Optionally, you can use Oracle RAC for shard-level high availability, complemented by replication, to maintain shard-level data availability in the event of a cluster outage. Oracle Sharding automatically fails over database connections from a shard to its replica in the event of an unplanned outage.

Using Oracle Data Guard with a Sharded Database

Oracle Data Guard replication maintains one or more synchronized copies (standbys) of a shard (the primary) for high availability and data protection. Standbys may be deployed locally or remotely, and when using Oracle Active Data Guard can also be open for read-only access.

Oracle Data Guard can be used as the replication technology for sharded databases using the system-managed, user-defined, or composite method of sharding.

Using Oracle Data Guard with a System-Managed Sharded Database

In system-managed and composite sharding, the logical unit of replication is a group of shards called a shardgroup. In system-managed sharding, a shardgroup contains all of the data stored in the sharded database. The data is sharded by consistent hash across shards that make up the shardgroup. Shards that belong to a shardgroup are usually located in the same data center. An entire shardgroup can be fully replicated to one or more shardgroups in the same or different data centers.

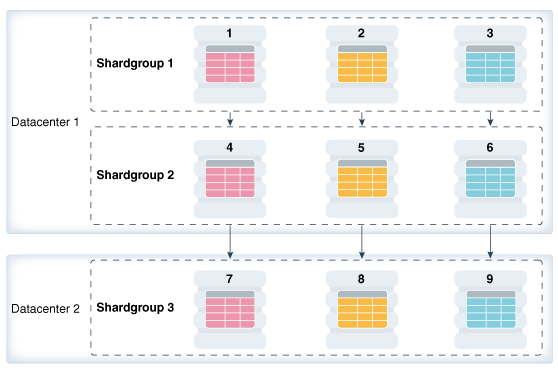

The following figure illustrates how Data Guard replication is used with system-managed sharding. The example in the figure has a primary shardgroup, Shardgroup 1, and two standby shardgroups, Shardgroup 2 and Shardgroup 3. Shardgroup 1 consists of Data Guard primary databases (shards 1-3). Shardgroup 2 consists of local standby databases (shards 4-6) that are located in the same data center and configured for synchronous replication. Shardgroup 3 consists of remote standbys (shards 7-9) located in a different data center and configured for asynchronous replication. Oracle Active Data Guard is enabled in this configuration, so each standby is open read-only.

Figure 2-11 System-Managed Sharding with Data Guard Replication

The concept of shardgroup as a logical unit of replication hides from the user the implementation details of replication. With Data Guard, replication is done at the shard (database) level. The sharded database in the figure above consists of three sets of replicated shards: {1, 4, 7}, {2, 5, 8} and {3, 6, 9}. Each set of replicated shards is managed as a Data Guard Broker configuration with fast-start failover (FSFO) enabled.
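
The relationship between shardgroups and replicated sets can be sketched in a few lines of Python. This is only an illustration of the grouping described above, not Oracle code: the dictionary layout and the `replica_sets` helper are assumptions, and the shard numbers are taken from the example figure.

```python
# Illustrative layout only: shard numbers follow the example figure.
shardgroups = {
    "shardgroup1": [1, 2, 3],  # Data Guard primaries
    "shardgroup2": [4, 5, 6],  # local standbys (synchronous)
    "shardgroup3": [7, 8, 9],  # remote standbys (asynchronous)
}


def replica_sets(groups):
    """Pair the i-th shard of every shardgroup into one replicated set.

    Each resulting tuple stands for one Data Guard Broker configuration:
    the first member is the primary, the rest are its standbys.
    """
    return list(zip(*groups.values()))


for primary, *standbys in replica_sets(shardgroups):
    print(f"primary shard {primary} -> standby shards {standbys}")
```

The user works with three shardgroups, while replication itself runs within each tuple of one primary and its standbys.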

To deploy replication, specify the properties of the shardgroups (region, role, and so on) and add shards to them. Oracle Sharding automatically configures Data Guard and starts an FSFO observer for each set of replicated shards. It also provides load balancing of the read-only workload, role-based global services, and routing based on replication lag and locality.
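
As a rough mental model of routing by role, replication lag, and locality, the following Python sketch picks a standby for a read-only request. It is a hypothetical policy written for illustration, not the algorithm used by the global service manager; the `Replica` class, the `pick_read_replica` function, and the lag values are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    region: str
    role: str           # "primary" or "standby"
    lag_seconds: float  # how far this copy trails its primary


def pick_read_replica(replicas, client_region, max_lag_seconds):
    """Choose a standby for a read-only request (hypothetical policy).

    Only standbys within the lag limit are eligible. Among those, a
    replica in the client's region wins, then the one with the least lag.
    """
    eligible = [
        r for r in replicas
        if r.role == "standby" and r.lag_seconds <= max_lag_seconds
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda r: (r.region != client_region, r.lag_seconds))


# One replicated set from the example: shard 1 with standbys 4 and 7.
replicas = [
    Replica("shard1", "dc1", "primary", 0.0),
    Replica("shard4", "dc1", "standby", 0.0),
    Replica("shard7", "dc2", "standby", 5.0),
]
choice = pick_read_replica(replicas, client_region="dc2", max_lag_seconds=10)
print(choice.name if choice else "no eligible standby")
```

In this sketch a client in dc2 is served locally while the remote standby stays within the lag limit, and falls back to a standby in dc1 when the limit is tightened.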

Run the following GDSCTL commands to deploy the example configuration shown in the figure above.

CREATE SHARDCATALOG -database host00:1521:shardcat -region dc1,dc2

ADD GSM -gsm gsm1 -listener 1571 -catalog host00:1521:shardcat -region dc1

ADD GSM -gsm gsm2 -listener 1571 -catalog host00:1521:shardcat -region dc2

START GSM -gsm gsm1

START GSM -gsm gsm2

ADD SHARDGROUP -shardgroup shardgroup1 -region dc1 -deploy_as primary

ADD SHARDGROUP -shardgroup shardgroup2 -region dc1 -deploy_as active_standby

ADD SHARDGROUP -shardgroup shardgroup3 -region dc2 -deploy_as active_standby

ADD CDB -connect cdb1

ADD CDB -connect cdb2

...

ADD CDB -connect cdb9

ADD SHARD -connect shard1 -CDB cdb1 -shardgroup shardgroup1

ADD SHARD -connect shard2 -CDB cdb2 -shardgroup shardgroup2

...

ADD SHARD -connect shard9 -CDB cdb9 -shardgroup shardgroup3

DEPLOY

Using Oracle Data Guard with a User-Defined Sharded Database

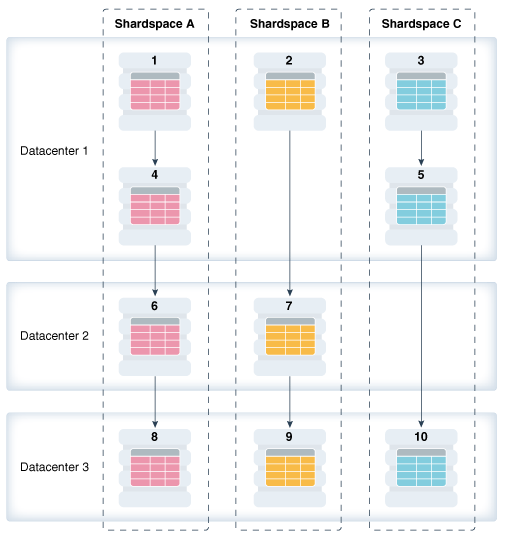

With user-defined sharding, the logical (and physical) unit of replication is a shard. Shards are not combined into shardgroups. Each shard and its replicas make up a shardspace, which corresponds to a single Data Guard Broker configuration. Replication can be configured individually for each shardspace. Shardspaces can have different numbers of standbys, which can be located in different data centers. An example of user-defined sharding with Data Guard replication is shown in the following figure.

Figure 2-12 User-Defined Sharding with Data Guard Replication

Run the following GDSCTL commands to deploy the example configuration shown in the figure above.

CREATE SHARDCATALOG -sharding user -database host00:1521:cat -region dc1,dc2,dc3

ADD GSM -gsm gsm1 -listener 1571 -catalog host00:1521:cat -region dc1

ADD GSM -gsm gsm2 -listener 1571 -catalog host00:1521:cat -region dc2

ADD GSM -gsm gsm3 -listener 1571 -catalog host00:1521:cat -region dc3

START GSM -gsm gsm1

START GSM -gsm gsm2

START GSM -gsm gsm3

ADD SHARDSPACE -shardspace shardspace_a

ADD SHARDSPACE -shardspace shardspace_b

ADD SHARDSPACE -shardspace shardspace_c

ADD CDB -connect cdb1

ADD CDB -connect cdb2

...

ADD CDB -connect cdb10

ADD SHARD -connect shard1 -CDB cdb1 -shardspace shardspace_a

ADD SHARD -connect shard2 -CDB cdb2 -shardspace shardspace_b

...

ADD SHARD -connect shard10 -CDB cdb10 -shardspace shardspace_c

DEPLOY

Using Oracle Data Guard with a Composite Sharded Database

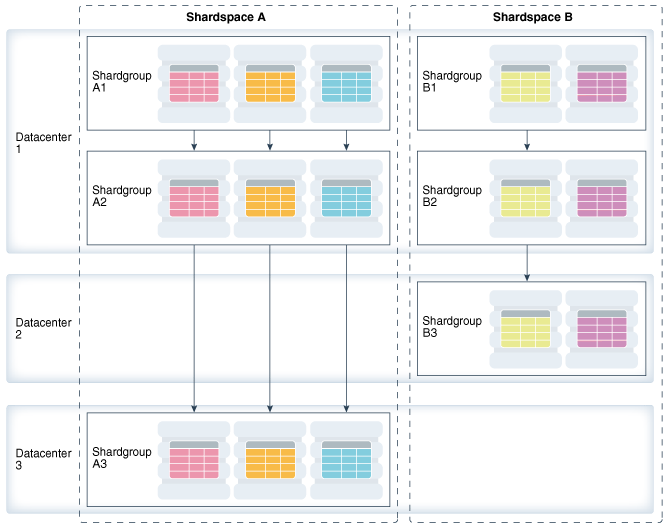

In composite sharding, as in user-defined sharding, a sharded database consists of multiple shardspaces. However, each shardspace contains replicated shardgroups instead of replicated shards.

Figure 2-13 Composite Sharding with Data Guard Replication

Run the following GDSCTL commands to deploy the example configuration shown in the figure above.

CREATE SHARDCATALOG -sharding composite -database host00:1521:cat -region dc1,dc2,dc3

ADD GSM -gsm gsm1 -listener 1571 -catalog host00:1521:cat -region dc1

ADD GSM -gsm gsm2 -listener 1571 -catalog host00:1521:cat -region dc2

ADD GSM -gsm gsm3 -listener 1571 -catalog host00:1521:cat -region dc3

START GSM -gsm gsm1

START GSM -gsm gsm2

START GSM -gsm gsm3

ADD SHARDSPACE -shardspace shardspace_a

ADD SHARDSPACE -shardspace shardspace_b

ADD SHARDGROUP -shardgroup shardgroup_a1 -shardspace shardspace_a -region dc1 -deploy_as primary

ADD SHARDGROUP -shardgroup shardgroup_a2 -shardspace shardspace_a -region dc1 -deploy_as active_standby

ADD SHARDGROUP -shardgroup shardgroup_a3 -shardspace shardspace_a -region dc3 -deploy_as active_standby

ADD SHARDGROUP -shardgroup shardgroup_b1 -shardspace shardspace_b -region dc1 -deploy_as primary

ADD SHARDGROUP -shardgroup shardgroup_b2 -shardspace shardspace_b -region dc1 -deploy_as active_standby

ADD SHARDGROUP -shardgroup shardgroup_b3 -shardspace shardspace_b -region dc2 -deploy_as active_standby

ADD CDB -connect cdb1

ADD CDB -connect cdb2

...

ADD SHARD -connect shard1 -cdb cdb1 -shardgroup shardgroup_a1

ADD SHARD -connect shard2 -cdb cdb2 -shardgroup shardgroup_a2

...

DEPLOY

Considerations

Unlike with Oracle RAC, if a single-instance primary fails over to its standby, you must intervene to reinstate the old primary by starting that database in the mount state. The broker then completes the reinstatement automatically.

Using Oracle GoldenGate with a Sharded Database

Oracle GoldenGate is used for fine-grained active-active replication where all shards are writable, and each shard can be partially replicated to other shards within a shardgroup.

Note:

Oracle GoldenGate replication support for Oracle Sharding High Availability is deprecated in Oracle Database 21c.

Note:

Oracle Database 21c supports only the multitenant architecture (CDB). Oracle GoldenGate versions 12.3 through 19.1 support Oracle Sharding only with single-instance Oracle databases (releases 11g through 19c).

Oracle GoldenGate does not support the user-defined sharding method.

For system-managed sharding with Oracle GoldenGate, a shard must have at least two chunks.

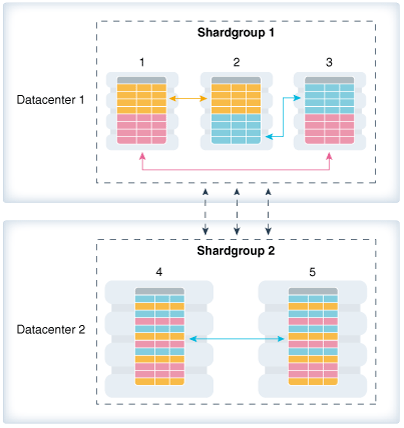

In Oracle GoldenGate, replication is handled at the chunk level. For example, in Shardgroup 1 in the following figure, half of the data stored in each shard is replicated to one shard, and the other half to another shard. If any shard becomes unavailable, its workload is split between two other shards in the shardgroup. The multiple failover destinations mitigate the impact of a shard failure because there is no single shard that has to handle all of the workload from the failed shard.

Figure 2-14 System-Managed Sharding with GoldenGate Replication

With Oracle GoldenGate replication, a shardgroup can contain multiple replicas of each row in a sharded table; therefore, high availability is provided within a shardgroup, and there is no need to have a local replica of the shardgroup, as there is in the case of Data Guard replication. The number of times each row is replicated within a shardgroup is called its replication factor and is a configurable parameter.

To provide disaster recovery, a shardgroup can be replicated to one or more data centers. Each replica of a shardgroup can have a different number of shards, replication factor, database versions, and hardware platforms. However, all shardgroup replicas must have the same number of chunks, because replication is done at the chunk level.

Shardgroup 2 in the figure above contains the same data as Shardgroup 1, but resides in a different data center. Shards in both data centers are writable. The default replication factor, 2, is used for both shardgroups.

Note that because Shardgroup 2 contains only two shards and the replication factor is 2, the shards are fully replicated, and each of them contains all of the data stored in the sharded database. This means that any query routed to these shards can be executed without going across shards. There is only one failover destination in this shardgroup; if a shard goes down, the load on the other shard doubles.
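
To make chunk-level replication and its effect on failover more concrete, here is a minimal Python sketch. The round-robin placement is an assumption chosen for illustration and is not Oracle's actual placement algorithm; `place_chunks`, `failover_targets`, and the shard and chunk counts are hypothetical.

```python
def place_chunks(num_shards, num_chunks, replication_factor=2):
    """Return {chunk: [shards]}; the first shard listed holds the master copy.

    Hypothetical round-robin placement, used only to illustrate the idea.
    """
    if not 1 <= replication_factor <= num_shards:
        raise ValueError("replication factor must be between 1 and num_shards")
    placement = {}
    for chunk in range(num_chunks):
        master = chunk % num_shards
        round_no = chunk // num_shards
        shards = [master]
        for k in range(replication_factor - 1):
            # Rotate the offset each round so a shard's chunks are
            # replicated to different neighbours.
            offset = 1 + (round_no + k) % (num_shards - 1)
            shards.append((master + offset) % num_shards)
        placement[chunk] = shards
    return placement


def failover_targets(placement, failed_shard):
    """Map each surviving shard to the chunks it takes over from failed_shard."""
    targets = {}
    for chunk, shards in placement.items():
        if shards[0] != failed_shard:
            continue
        survivor = next((s for s in shards[1:] if s != failed_shard), None)
        if survivor is not None:
            targets.setdefault(survivor, []).append(chunk)
    return targets


three_shards = place_chunks(num_shards=3, num_chunks=6)
two_shards = place_chunks(num_shards=2, num_chunks=4)
print(failover_targets(three_shards, failed_shard=0))
print(failover_targets(two_shards, failed_shard=0))
```

With three shards, the chunks mastered on the failed shard are taken over by two different survivors. With two shards and a replication factor of 2, every chunk lives on both shards, so the single survivor absorbs the whole workload.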

Oracle Sharding is designed to minimize the number of conflicting updates performed to the same row on different shards. This is achieved by designating a master chunk for each range of hash values and routing most requests for the corresponding data to this chunk.
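
The idea of routing by hash range to a master chunk can be sketched as follows. This is an illustrative model only: CRC32 stands in for the real hash function, which this section does not specify, and `PLACEMENT`, `chunk_for_hash`, and `route` are hypothetical names.

```python
import zlib

HASH_SPACE = 2 ** 32  # CRC32 yields values in [0, 2**32)

# Hypothetical placement: chunk -> shards holding it, master copy first.
PLACEMENT = {
    0: [0, 1], 1: [1, 2], 2: [2, 0],
    3: [0, 2], 4: [1, 0], 5: [2, 1],
}


def chunk_for_hash(hash_value, num_chunks):
    """Split the hash space into equal ranges, one per chunk."""
    return hash_value * num_chunks // HASH_SPACE


def route(sharding_key, placement):
    """Return (chunk, shard holding the master copy) for a sharding key."""
    # CRC32 is a stand-in hash, not the function Oracle Sharding uses.
    hash_value = zlib.crc32(str(sharding_key).encode("utf-8"))
    chunk = chunk_for_hash(hash_value, len(placement))
    return chunk, placement[chunk][0]


chunk, master_shard = route("customer-42", PLACEMENT)
print(f"key maps to chunk {chunk}, master copy on shard {master_shard}")
```

Because a given key always maps to the same chunk, writes for that key normally land on a single copy, and the other replicas only see those changes through replication.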

Sometimes it is impossible to avoid update conflicts because of state transitions, such as a chunk move or split, or a shard going up or down. The user may also intentionally allow conflicts in order to minimize transaction latency. For such cases, Oracle GoldenGate provides automatic conflict detection and resolution, which handles all kinds of conflicts, including insert-delete conflicts.

See Also:

Working with Oracle GoldenGate Sharding in the Fusion Middleware Step by Step Data Replication Using Oracle GoldenGate Microservices Architecture guide for more information about using Oracle GoldenGate with Oracle Sharding.