Network Infrastructure

For network connectivity, Private Cloud Appliance relies on a physical layer that provides the necessary high-availability, bandwidth and speed. On top of this, a distributed network fabric composed of software-defined switches, routers, gateways and tunnels enables secure and segregated data traffic – both internally between cloud resources, and externally to and from resources outside the appliance.

Device Management Network

The device management network provides internal access to the management interfaces of all appliance components. These have Ethernet connections to the 1Gbit management switch, and all receive an IP address from each of these address ranges:

- 100.96.0.0/23 – IP range for the Oracle Integrated Lights Out Manager (ILOM) service processors of all hardware components

- 100.96.2.0/23 – IP range for the management interfaces of all hardware components

To access the device management network, you connect a workstation to port 2 of the 1Gbit management switch and statically assign the IP address 100.96.3.254 to its connected interface. Alternatively, you can set up a permanent connection to the Private Cloud Appliance device management network from a data center administration machine, which is also referred to as a bastion host. From the bastion host, or from the (temporarily) connected workstation, you can reach the ILOMs and management interfaces of all connected rack components. For information about configuring the bastion host, see the "Optional Bastion Host Uplink" section of the Oracle Private Cloud Appliance Installation Guide.
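The address plan above can be checked with Python's standard `ipaddress` module. The sketch below uses only the two ranges listed in this section. It confirms that the workstation address 100.96.3.254 falls inside the management-interface range 100.96.2.0/23 (it is the last usable host in that block) and not in the ILOM range. The helper name `classify` is hypothetical and exists only for illustration.

```python
import ipaddress

# The two device management ranges described in this section.
ILOM_RANGE = ipaddress.ip_network("100.96.0.0/23")  # 100.96.0.0 - 100.96.1.255
MGMT_RANGE = ipaddress.ip_network("100.96.2.0/23")  # 100.96.2.0 - 100.96.3.255


def classify(address: str) -> str:
    """Return which device management range an IPv4 address belongs to (hypothetical helper)."""
    ip = ipaddress.ip_address(address)
    if ip in ILOM_RANGE:
        return "ilom"
    if ip in MGMT_RANGE:
        return "management"
    return "outside"


if __name__ == "__main__":
    # Static address for a workstation connected to port 2 of the management switch.
    print(classify("100.96.3.254"))      # management
    print(MGMT_RANGE.broadcast_address)  # 100.96.3.255
```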

Note that port 1 of the 1Gbit management switch is reserved for use by support personnel only.

Data Network

The appliance data connectivity is built on redundant 100Gbit switches in a two-layer design similar to a leaf-spine topology. The leaf switches interconnect the rack hardware components, while the spine switches form the backbone of the network and provide a path for external traffic. Each leaf switch is connected to all the spine switches, which are also interconnected. The main benefits of this topology are extensibility and path optimization. A Private Cloud Appliance rack contains two leaf and two spine switches.

The data switches offer a maximum throughput of 100Gbit per port. The spine switches use 5 interlinks (500Gbit); the leaf switches use 2 interlinks (200Gbit) and 2x2 crosslinks to each spine. Each server node is connected to both leaf switches in the rack, through the bond0 interface that consists of two 100Gbit Ethernet ports in link aggregation mode. The two storage controllers are connected to the spine switches using 4x100Gbit connections.

For external connectivity, 5 ports are reserved on each spine switch. Four ports are available to establish the uplinks between the appliance and the data center network; one port is reserved to optionally segregate the administration network from the data traffic.

Uplinks

The connections between the Private Cloud Appliance and the customer data center are called uplinks. They are physical cable connections between the two spine switches in the appliance rack and one or – preferably – two next-level network devices in the data center infrastructure. Besides the physical aspect, there is also a logical aspect to the uplinks: how traffic is routed between the appliance and the external network it is connected to.

Physical Connection

On each spine switch, ports 1-4 can be used for uplinks to the data center network. For speeds of 10Gbps or 25Gbps, the spine switch port must be split using an MPO-to-4xLC breakout cable. For speeds of 40Gbps or 100Gbps, each switch port uses a single MPO-to-MPO cable connection. The correct connection speed must be specified during initial setup so that the switch ports are configured with the appropriate breakout mode and transfer speed.

The uplinks are configured during system initialization, based on information you provide as part of the installation checklist. Unused spine switch uplink ports, including unused breakout ports, are disabled for security reasons. The table shows the supported uplink configurations by port count and speed, and the resulting total bandwidth.

| Uplink Speed | Number of Uplinks per Spine Switch | Total Bandwidth |
|---|---|---|
| 10 Gbps | 1, 2, 4, 8, or 16 | 20, 40, 80, 160, or 320 Gbps |
| 25 Gbps | 1, 2, 4, 8, or 16 | 50, 100, 200, 400, or 800 Gbps |
| 40 Gbps | 1, 2, or 4 | 80, 160, or 320 Gbps |
| 100 Gbps | 1, 2, or 4 | 200, 400, or 800 Gbps |
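Every row of the table follows the same arithmetic: total bandwidth = uplink speed × uplinks per spine switch × 2 spine switches. The Python sketch below encodes the supported combinations from the table and computes the total, rejecting combinations the table does not list. The names `SUPPORTED_UPLINKS` and `total_bandwidth_gbps` are hypothetical helpers, not part of any appliance tooling.

```python
# Supported uplink counts per spine switch, keyed by port speed in Gbps (from the table).
SUPPORTED_UPLINKS = {
    10: (1, 2, 4, 8, 16),  # breakout ports (MPO-to-4xLC)
    25: (1, 2, 4, 8, 16),  # breakout ports (MPO-to-4xLC)
    40: (1, 2, 4),         # full ports (MPO-to-MPO)
    100: (1, 2, 4),        # full ports (MPO-to-MPO)
}
SPINE_SWITCHES = 2


def total_bandwidth_gbps(speed_gbps: int, uplinks_per_spine: int) -> int:
    """Total uplink bandwidth across both spine switches (hypothetical helper)."""
    allowed = SUPPORTED_UPLINKS.get(speed_gbps)
    if allowed is None:
        raise ValueError(f"unsupported uplink speed: {speed_gbps} Gbps")
    if uplinks_per_spine not in allowed:
        raise ValueError(f"{uplinks_per_spine} uplinks per spine not supported at {speed_gbps} Gbps")
    # Both spine switches are cabled identically, so the per-spine bandwidth is doubled.
    return speed_gbps * uplinks_per_spine * SPINE_SWITCHES


if __name__ == "__main__":
    print(total_bandwidth_gbps(25, 8))   # 400
    print(total_bandwidth_gbps(100, 4))  # 800
```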

Regardless of the number of ports and port speeds configured, you also select a topology for the uplinks between the spine switches and the data center network. This information is critical for the network administrator to configure link aggregation (port channels) on the data center switches. The table shows the available options.

| Topology | Description |
|---|---|
| Triangle | In a triangle topology, all cables from both spine switches are connected to a single data center switch. |
| Square | In a square topology, two data center switches are used. All outbound cables from a given spine switch are connected to the same data center switch. |
| Mesh | In a mesh topology, two data center switches are used as well. Unlike in the square topology, uplinks are created in a cross pattern. Outbound cables from each spine switch are connected in pairs: one cable to each data center switch. |

Topology

The physical topology for the uplinks from the appliance to the data center network depends on bandwidth requirements and available data center switches and ports. Connecting to a single data center switch implies that you select a triangle topology. To increase redundancy, you distribute the uplinks across a pair of data center switches, selecting either a square or mesh topology. Each topology allows you to start with a minimum bandwidth, which you can scale up with increasing need. The maximum bandwidth is 800 Gbps, assuming the data center switches, transceivers and cables allow it.

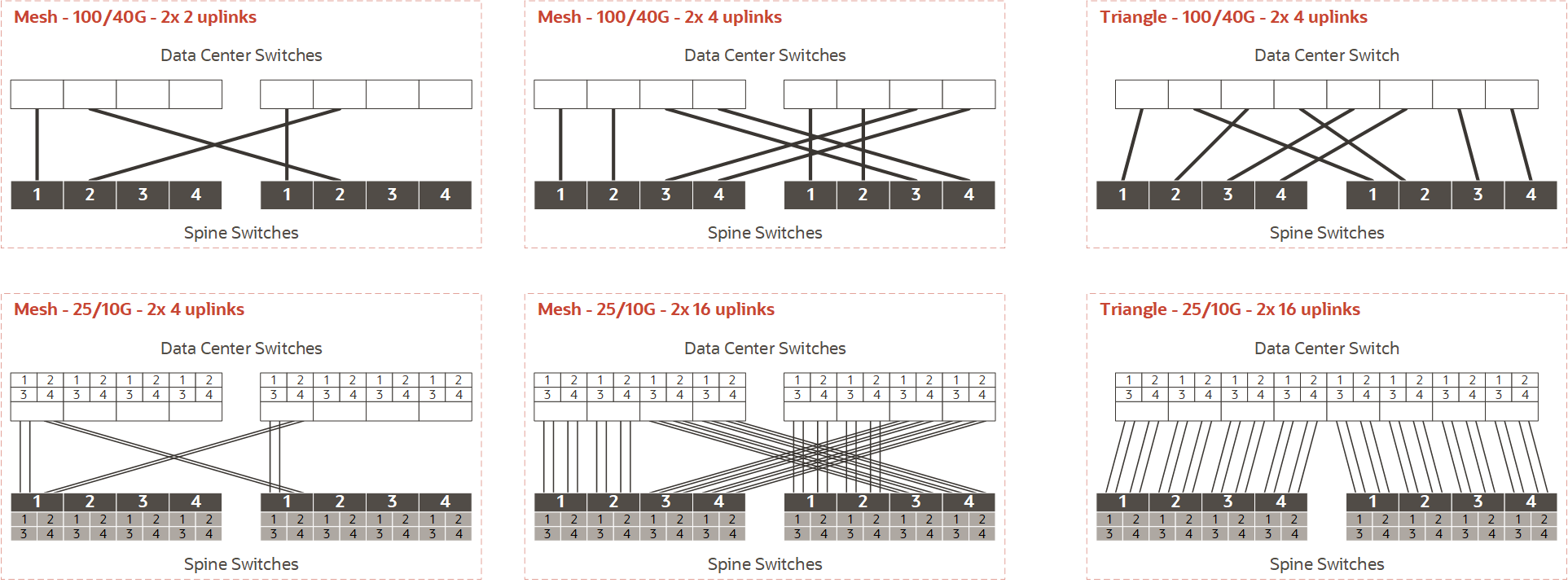

The following diagrams provide a simplified view of supported topologies, and can be used as initial guidance to integrate the rack into the data center network. Use the diagrams and the notes to determine the appropriate cabling and switch configuration for your installation. For more detailed configuration examples, refer to the chapter "Appliance Networking Reference Topologies" in the Oracle Private Cloud Appliance Installation Guide.

Diagram Notes

On the appliance side there are two spine switches that must be connected to the data center network. Both spine switches must have identical port and cable configurations. In each example, the spine switches are shown at the bottom, with all uplink ports identified by their port number. The lines represent outgoing cable connections to the data center switches, which are shown at the top of each example without port numbers.

Cabling Pattern and Port Speed

There are six examples in total, organized in two rows by three columns.

- The top row shows cabling options based on full-port 100Gbps or 40Gbps connections. The bottom row shows cabling options using breakout ports at 25Gbps or 10Gbps speeds; the smaller boxes numbered 1-4 represent the breakout connections for each of the four main uplink ports per spine switch.

- The third column shows a triangle topology with full-port connections and breakout connections. Unlike in column two, all uplinks are connected to a single data center switch. The total bandwidth is the same, but the triangle topology lacks data center switch redundancy.

- There are no diagrams for the square topology. The square cabling configuration is like the mesh examples, but without the crossing patterns. Visually, all connectors in the diagrams would be parallel. In a square topology, all outgoing cables from one spine switch are connected port for port to the same data center switch. Unlike mesh, square implies that each spine switch is peered with only one data center switch.

Link Count

When connecting the uplinks, you are required to follow the spine switch port numbering. Remember that both spine switches are cabled identically, so each uplink or connection corresponds to a pair of cables.

- With one cable per spine port, using 100 or 40 Gbps transceivers, the first uplink pair uses port 1 on both spine switches, the second uses port 2, and so on. In this configuration, the maximum number of uplinks is four per spine switch.

- When breakout cables are used, with 25 or 10Gbps port speeds, the first uplink pair uses port 1/1. With two or four uplinks per spine switch there is still only one full port in use. When you increase the uplink count to 8 per spine switch, ports 1/1-2/4 are in use. At 16 uplinks per spine switch, all breakout connections of all four reserved ports are in use.

- In a mesh topology, a particular cabling pattern must be followed: connect the first half of all uplinks to one data center switch, and the second half to the other data center switch. For example: if you have four uplinks, the first two go to the same switch; if you have eight uplinks (not shown in the diagrams), the first four go to the same switch; if you have 16 uplinks, the first eight go to the same switch.
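The port numbering and cabling rules above can be expressed as a small Python sketch that produces a cabling plan for a given topology, speed, and uplink count. It assumes the supported counts from the uplink table, labels breakout connections as `port/breakout` (for example `2/3`), and interprets the mesh rule as applying per uplink pair, so the same port on both spine switches goes to the same data center switch. The function names and the switch names `DC-A` and `DC-B` are hypothetical placeholders.

```python
def uplink_ports(speed_gbps: int, count: int) -> list:
    """Spine switch port labels used by `count` uplinks, following the port numbering."""
    if speed_gbps in (40, 100):
        if count not in (1, 2, 4):
            raise ValueError("full-port uplinks: 1, 2, or 4 per spine switch")
        # One cable per port: the first uplink uses port 1, the second port 2, and so on.
        return [str(i + 1) for i in range(count)]
    if speed_gbps in (10, 25):
        if count not in (1, 2, 4, 8, 16):
            raise ValueError("breakout uplinks: 1, 2, 4, 8, or 16 per spine switch")
        # Four breakout connections per port: 1/1, 1/2, 1/3, 1/4, 2/1, ...
        return [f"{i // 4 + 1}/{i % 4 + 1}" for i in range(count)]
    raise ValueError(f"unsupported uplink speed: {speed_gbps} Gbps")


def cabling_plan(topology: str, speed_gbps: int, count: int) -> dict:
    """Map (spine switch, port) to a data center switch; DC-A/DC-B are placeholder names."""
    if topology == "mesh" and count < 2:
        raise ValueError("a mesh topology needs at least 2 uplinks per spine switch")
    ports = uplink_ports(speed_gbps, count)
    plan = {}
    for spine in (1, 2):  # both spine switches are cabled identically
        for index, port in enumerate(ports):
            if topology == "triangle":
                target = "DC-A"  # every uplink goes to the single data center switch
            elif topology == "square":
                target = "DC-A" if spine == 1 else "DC-B"  # each spine peers with one DC switch
            elif topology == "mesh":
                # First half of the uplinks to one DC switch, second half to the other.
                target = "DC-A" if index < count // 2 else "DC-B"
            else:
                raise ValueError(f"unknown topology: {topology}")
            plan[(spine, port)] = target
    return plan


if __name__ == "__main__":
    for (spine, port), switch in sorted(cabling_plan("mesh", 25, 8).items()):
        print(f"spine {spine} port {port} -> {switch}")
```

With 8 breakout uplinks in a mesh, ports 1/1-1/4 on both spine switches land on one data center switch and ports 2/1-2/4 on the other, matching the rule stated above.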

Mesh Topology Implications

- In a mesh topology, the spine switch configuration expects that the first half of all uplinks is connected to one data center switch, and the second half to the other data center switch. When you initially connect the appliance to your data center network, it is straightforward to follow this pattern.

- However, if you increase the number of uplinks at a later time, the mesh cabling pattern has a significant implication for the existing uplinks. Compare the diagrams in the first two columns: when you double the uplink count, half of the existing connections must be moved to the other data center switch. For 100/40Gbit uplinks, re-cabling is only required when you increase the link count from 2 to 4. Due to the larger number of cables, 25/10Gbit uplinks require more re-cabling: when increasing uplink count from 2 to 4, from 4 to 8, and from 8 to 16.
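To see which existing cables must move when the uplink count doubles, you can compare the mesh assignment before and after the change. The Python sketch below does this for one spine switch, assuming the first-half/second-half rule and the `port/breakout` labeling for 10/25Gbps links; because both spine switches are cabled identically, the same ports move on each. The names `mesh_targets`, `ports_to_move`, `DC-A` and `DC-B` are hypothetical.

```python
def mesh_targets(speed_gbps: int, count: int) -> dict:
    """Port label -> DC switch for one spine switch in a mesh topology (both spines match)."""
    breakout = speed_gbps in (10, 25)  # 10/25 Gbps use breakout ports such as 1/1
    targets = {}
    for i in range(count):
        port = f"{i // 4 + 1}/{i % 4 + 1}" if breakout else str(i + 1)
        # First half of the uplinks to one DC switch, second half to the other.
        targets[port] = "DC-A" if i < count // 2 else "DC-B"
    return targets


def ports_to_move(speed_gbps: int, old_count: int, new_count: int) -> list:
    """Existing uplink ports that must be re-cabled to the other DC switch."""
    if new_count < old_count:
        raise ValueError("this sketch only handles increasing the uplink count")
    old = mesh_targets(speed_gbps, old_count)
    new = mesh_targets(speed_gbps, new_count)
    return [port for port, switch in old.items() if new[port] != switch]


if __name__ == "__main__":
    print(ports_to_move(100, 2, 4))  # ['2']
    print(ports_to_move(25, 4, 8))   # ['1/3', '1/4']
```

In each doubling step, half of the existing ports change data center switch, which is the re-cabling effort described above.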

Logical Connection

The logical connection between the appliance and the data center is implemented entirely in layer 3. In the OSI model (Open Systems Interconnection model), layer 3 is known as the network layer, which uses the source and destination IP address fields in its header to route traffic between connected devices.

Private Cloud Appliance supports two logical connection options: you must choose between static routing and dynamic routing. Both routing options are supported by all three physical topologies.

| Connection Type | Description |
|---|---|
| Static Routing | When static routing is selected, all egress traffic goes through a single default gateway IP address configured on data center network devices. This gateway IP address must be in the same subnet as the appliance uplink IP addresses, so it is reachable from the spine switches. The data center network devices can use SVIs (Switch Virtual Interfaces) with VLAN IDs in the range of 2-3899. All gateways configured within a virtual cloud network (VCN) automatically have a route rule that directs all traffic intended for external destinations to the IP address of the default gateway. |
| Dynamic Routing | When dynamic routing is selected, BGP (Border Gateway Protocol) is used to establish a TCP connection between two Autonomous Systems: the appliance network and the data center network. This configuration requires a registered or private ASN (Autonomous System Number) on each side of the connection. Private Cloud Appliance BGP configuration uses ASN 136025 by default; this can be changed during initial configuration. For BGP routing, two routing devices in the data center must be connected to the two spine switches in the appliance rack. Corresponding interfaces (port channels) between the spine switches and the data center network devices must be in the same subnet. It is considered good practice to use a dedicated /30 subnet for each point-to-point circuit, which is also known as a route hand-off network. This setup provides redundancy and multipathing. Dynamic routing is also supported in a triangle topology, where both spine switches are physically connected to the same data center network device. In this configuration, two BGP sessions are still established: one from each spine switch. However, this approach reduces the level of redundancy. |
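For static routing, the two checkable requirements in the table are that the default gateway shares a subnet with the appliance uplink addresses and that any SVI VLAN ID is in the range 2-3899. The Python sketch below validates a proposed plan against those two rules only. The uplink addresses (written with their prefix, such as `10.10.10.2/28`) and the function name `check_static_uplink` are illustrative assumptions.

```python
import ipaddress


def check_static_uplink(uplink_cidrs, gateway: str, vlan_id=None) -> list:
    """Return problems with a static-routing uplink plan (hypothetical helper)."""
    problems = []
    gw = ipaddress.ip_address(gateway)
    for cidr in uplink_cidrs:
        # ip_interface keeps both the address and its prefix, e.g. 10.10.10.2/28.
        iface = ipaddress.ip_interface(cidr)
        if gw not in iface.network:
            problems.append(f"gateway {gw} is not in uplink subnet {iface.network}")
    if vlan_id is not None and not 2 <= vlan_id <= 3899:
        problems.append(f"VLAN {vlan_id} is outside the customer range 2-3899")
    return problems


if __name__ == "__main__":
    # Illustrative addresses only.
    print(check_static_uplink(["10.10.10.2/28", "10.10.10.3/28"], "10.10.10.1", 100))  # []
    print(check_static_uplink(["10.10.10.2/28"], "10.10.20.1"))
```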

Supported Routing Designs

The table below shows which routing designs are supported depending on the physical topology in your data center and the logical connection you choose to implement.

Note that link aggregation across multiple devices (vPC or MLAG) is only supported with static routing. When dynamic routing is selected, link aggregation is restricted to ports of the same switch.

When the uplinks are cabled in a mesh topology, a minimum of 2 physical connections per spine switch applies. To establish BGP peering, 2 subnets are required. If the uplink count changes, the port channels are reconfigured but the dedicated subnets remain the same.

| Logical Connection | Physical Topology | Single Subnet | Dual Subnet | vPC/MLAG |
|---|---|---|---|---|
| Static Routing | Square | Yes | Yes | Yes |
| Static Routing | Mesh | Yes | Yes | Yes |
| Static Routing | Triangle | Yes | Yes | Yes |
| Dynamic Routing | Square | Yes | No | No |
| Dynamic Routing | Mesh | No | Yes | No |
| Dynamic Routing | Triangle | Yes | No | No |
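With dynamic routing in a mesh topology, BGP peering requires two subnets, and a dedicated /30 per point-to-point circuit is considered good practice. The Python sketch below carves /30 route hand-off networks out of an address block and checks whether an ASN falls in a private-use range defined by RFC 6996. The block 192.0.2.0/29 (a documentation range), the helper names, and the choice of giving the higher address in each /30 to the spine switch are illustrative assumptions.

```python
import ipaddress


def handoff_subnets(block: str, count: int) -> list:
    """Carve `count` /30 route hand-off networks from an address block (hypothetical plan)."""
    subnets = list(ipaddress.ip_network(block).subnets(new_prefix=30))
    if len(subnets) < count:
        raise ValueError(f"{block} holds only {len(subnets)} /30 subnets")
    plan = []
    for spine, net in enumerate(subnets[:count], start=1):
        first, second = net.hosts()  # a /30 has exactly two usable host addresses
        # Assumption: lower address for the data center router, higher for the spine switch.
        plan.append({"spine": spine, "subnet": str(net),
                     "dc_router": str(first), "spine_ip": str(second)})
    return plan


def is_private_asn(asn: int) -> bool:
    """True if the ASN is in a private-use range reserved by RFC 6996."""
    return 64512 <= asn <= 65534 or 4200000000 <= asn <= 4294967294


if __name__ == "__main__":
    for circuit in handoff_subnets("192.0.2.0/29", 2):
        print(circuit)
    print(is_private_asn(136025))  # False: the appliance default is not a private ASN
```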

Uplink Protocols

The uplinks to the data center run a variety of protocols to provide redundancy and reduce link failure detection and recovery times on these links. These protocols work with the triangle, square, or mesh topologies.

The suite of uplink protocols includes:

- Bidirectional Forwarding Detection (BFD)

- Virtual Router Redundancy Protocol (VRRP)

- Hot Standby Router Protocol (HSRP)

- Equal Cost Multi-Path (ECMP)

Each is briefly described in the following sections of this topic.

BFD

In most router networks, connection failures are detected by the loss of the “hello” packets sent by routing protocols. However, detection by this method often takes more than one second. During that time, a high-speed link keeps forwarding a large number of packets toward a destination they cannot reach, which burdens link buffers. Increasing the “hello” packet rate shortens detection but burdens the router CPU.

Bidirectional Forwarding Detection (BFD) is a built-in mechanism that alerts the routers at both ends of a failed link to the problem much more quickly than hello-based detection, reducing the load on buffers and CPUs. BFD works even in situations where there are switches or hubs between the routers.

BFD requires no configuration and has no user-settable parameters.
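The benefit of fast detection can be quantified. In BFD asynchronous mode, RFC 5880 (section 6.8.4) defines the detection time as the remote system's Detect Mult multiplied by the agreed interval, which is the greater of the local required minimum receive interval and the remote desired minimum transmit interval. The Python sketch below computes that value and the amount of data a fully loaded link sends during it. The timer values are purely illustrative; on the appliance, BFD has no user-settable parameters.

```python
def bfd_detection_time_ms(remote_detect_mult: int,
                          local_required_min_rx_ms: int,
                          remote_desired_min_tx_ms: int) -> int:
    """Asynchronous-mode BFD detection time, per RFC 5880 section 6.8.4."""
    # The agreed interval is the slower of what we accept and what the peer wants to send.
    agreed_interval_ms = max(local_required_min_rx_ms, remote_desired_min_tx_ms)
    return remote_detect_mult * agreed_interval_ms


def bytes_sent_during(link_gbps: int, duration_ms: int) -> int:
    """Bytes a fully loaded link transmits during the given time."""
    return link_gbps * 10**9 * duration_ms // (1000 * 8)


if __name__ == "__main__":
    detect_ms = bfd_detection_time_ms(3, 300, 250)  # illustrative timers, not appliance values
    print(detect_ms)                                # 900
    print(bytes_sent_during(100, detect_ms))        # 11250000000
```

Even a sub-second detection time on a 100Gbit link corresponds to gigabytes of traffic sent toward a failed path, which is why shortening detection matters.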

VRRPv3

The Virtual Router Redundancy Protocol version 3 (VRRPv3) is a networking protocol that uses the concept of a virtual router to group physical routers together and make them appear as one to participating hosts. This increases the availability and reliability of routing paths through automatic default gateway selections on an IP subnetwork.

With VRRPv3, the primary/active and secondary/standby routers act as one virtual router. This virtual router becomes the default gateway for any host on the subnet participating in VRRPv3. One physical router in the group becomes the primary/active router for packet forwarding. However, if this router fails, another physical router in the group takes over the forwarding role, adding redundancy to the router configuration. The VRRPv3 “network” is limited to the local subnet and does not advertise routes beyond the local subnet.
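The selection of the primary router can be sketched in a few lines. In VRRPv3 (RFC 5798), the router with the highest priority becomes master; priority 255 is reserved for the router that owns the virtual IP address, and a tie is broken in favor of the higher primary IP address. The Python sketch below applies only that election rule and deliberately ignores advertisement timers and preemption. The `Router` class and router names are hypothetical.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class Router:
    name: str
    priority: int  # 1-254 for backups; 255 means the router owns the virtual IP address
    primary_ip: str
    alive: bool = True


def elect_master(group):
    """Pick the VRRPv3 master: highest priority, ties broken by highest primary IP address."""
    candidates = [r for r in group if r.alive]
    if not candidates:
        return None
    return max(candidates, key=lambda r: (r.priority, ipaddress.ip_address(r.primary_ip)))


if __name__ == "__main__":
    group = [Router("dc-sw1", 110, "10.0.0.2"), Router("dc-sw2", 100, "10.0.0.3")]
    print(elect_master(group).name)  # dc-sw1
    group[0].alive = False           # the primary fails; the standby takes over
    print(elect_master(group).name)  # dc-sw2
```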

HSRP

Cisco routers often use a redundancy protocol called the Hot Standby Router Protocol (HSRP) to improve router availability. Similar to VRRP, HSRP groups physical routers into a single virtual router. When the physical default router fails, another router in the HSRP group takes over default packet forwarding, without requiring any change on the host devices.

ECMP

Equal Cost Multi-Path (ECMP) is a way to make better use of network bandwidth, especially in more complex router networks with many redundant links.

Normally, router networks with multiple paths to another destination network choose one active route to a gateway router as the “best” path and use the other paths as standbys in case of failure. Which path to a network gateway router is used is usually determined by its “cost” from the routing protocol perspective. When the cost over several links to reach network gateways is equal, the router simply chooses one based on some tie-breaking criteria. This makes routing decisions easy but wastes network bandwidth, because network links on paths that are not chosen sit idle.

ECMP is a way to send traffic on multiple path links with equal cost, making more efficient use of network bandwidth.
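A common way to spread traffic across equal-cost paths is per-flow hashing: the router hashes fields that identify a flow (typically the 5-tuple) and uses the result to pick a next hop. All packets of one flow take the same path and stay in order, while different flows spread across all paths. The Python sketch below illustrates the idea with SHA-256 as a stand-in; real switches use hardware hash functions and vendor-specific field selections, and the next-hop names are hypothetical.

```python
import hashlib


def pick_next_hop(flow, next_hops):
    """Choose an equal-cost next hop by hashing a flow's 5-tuple.

    flow: (source IP, destination IP, protocol number, source port, destination port)
    """
    if not next_hops:
        raise ValueError("no equal-cost next hops available")
    src_ip, dst_ip, proto, src_port, dst_port = flow
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash onto one of the equal-cost paths.
    index = int.from_bytes(digest[:8], "big") % len(next_hops)
    return next_hops[index]


if __name__ == "__main__":
    hops = ["uplink-1", "uplink-2", "uplink-3", "uplink-4"]
    flow = ("10.0.0.5", "192.0.2.10", 6, 40123, 443)
    print(pick_next_hop(flow, hops))  # the same flow always maps to the same uplink
```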

Administration Network

In an environment with elevated security requirements, you can optionally segregate administrative appliance access from the data traffic. The administration network physically separates configuration and management traffic from the operational activity on the data network by providing dedicated secured network paths for appliance administration operations. In this configuration, the entire Service Enclave can be accessed only over the administration network. This also includes the monitoring, metrics collection and alerting services, the API service, and all component management interfaces.

Setting up the administration network requires additional Ethernet connections from the next-level data center network devices to port 5 on each of the spine switches in the appliance. Inside the administration network, the spine switches must each have one IP address and a virtual IP shared between the two. A default gateway is required to route traffic, and NTP and DNS services must be enabled. The management nodes must be assigned host names and IP addresses in the administration network – one each individually and one shared between all three.

A separate administration network can be used with both static and dynamic routing. The use of a VLAN is supported, but when combined with static routing the VLAN ID must be different from the one configured for the data network.
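The administration network requirements translate into a short address plan: one IP per spine switch plus a shared virtual IP, one IP per management node plus a shared IP, and a default gateway, with a separate VLAN ID when static routing is combined with VLANs. The Python sketch below checks such a plan. It assumes that all these addresses, including the gateway, sit in a single administration subnet; the addresses, VLAN numbers, and the function name `check_admin_plan` are hypothetical.

```python
import ipaddress


def check_admin_plan(subnet, spine_ips, spine_vip, mgmt_node_ips, mgmt_vip, gateway,
                     admin_vlan=None, data_vlan=None, static_routing=True):
    """Return problems with a hypothetical administration network address plan."""
    net = ipaddress.ip_network(subnet)
    problems = []
    if len(spine_ips) != 2:
        problems.append("expected one IP address per spine switch (2 total)")
    if len(mgmt_node_ips) != 3:
        problems.append("expected one IP address per management node (3 total)")
    addresses = [*spine_ips, spine_vip, *mgmt_node_ips, mgmt_vip, gateway]
    for addr in addresses:
        if ipaddress.ip_address(addr) not in net:
            problems.append(f"{addr} is outside {net}")
    if len(set(addresses)) != len(addresses):
        problems.append("addresses must be unique")
    if static_routing and admin_vlan is not None and admin_vlan == data_vlan:
        problems.append("with static routing, the admin VLAN must differ from the data VLAN")
    return problems


if __name__ == "__main__":
    print(check_admin_plan("10.20.0.0/24", ["10.20.0.11", "10.20.0.12"], "10.20.0.10",
                           ["10.20.0.21", "10.20.0.22", "10.20.0.23"], "10.20.0.20",
                           "10.20.0.1", admin_vlan=200, data_vlan=100))  # []
```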

Reserved Network Resources

The network infrastructure and system components of Private Cloud Appliance need a large number of IP addresses and several VLANs for internal operation. It is critical to avoid conflicts with the addresses in use in the customer data center as well as the CIDR ranges configured in the virtual cloud networks (VCNs).

These IP address ranges are reserved for internal use by Private Cloud Appliance:

| Reserved IP Addresses | Description |
|---|---|
| CIDR blocks in Shared Address Space | The Shared Address Space, with IP range 100.64.0.0/10, was implemented to connect customer-premises equipment to the core routers of Internet service providers. To allocate IP addresses to the management interfaces and ILOMs (Oracle Integrated Lights Out Manager) of hardware components, two CIDR blocks are reserved for internal use: 100.96.0.0/23 and 100.96.2.0/23. |
| CIDR blocks in Class E address range | Under the classful network addressing architecture, Class E is the part of the 32-bit IPv4 address space ranging from 240.0.0.0 to 255.255.255.255. It was reserved for future use and cannot be used on the public Internet. To accommodate the addressing requirements of all infrastructure networking over the physical 100Gbit connections, the entire 253.255.0.0/16 subnet is reserved. It is further subdivided into multiple CIDR blocks in order to group IP addresses by network function or type. The various CIDR blocks within the 253.255.0.0/16 range are used to allocate IP addresses for the Kubernetes containers running the microservices, the virtual switches, routers and gateways enabling the VCN data network, the hypervisors, the appliance chassis components, and so on. |
| Link Local CIDR block | A link-local address belongs to the 169.254.0.0/16 IP range, and is valid only for connectivity within a host's network segment, because the address is not guaranteed to be unique outside that network segment. Packets with link-local source or destination addresses are not forwarded by routers. The link-local CIDR block 169.254.239.0/24, as well as the IP address 169.254.169.254, are reserved for functions such as DNS requests, compute instance metadata transfer, and cloud service endpoints. |

All VCN traffic – from one VCN to another, as well as between a VCN and external resources – flows across the 100Gbit connections and is carried by VLAN 3900. Traffic related to server management is carried by VLAN 3901. All VLANs with higher IDs are also reserved for internal use, and VLAN 1 is the default for untagged traffic. The remaining VLAN range of 2-3899 is available for customer use.
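Before assigning data center subnets or VCN CIDR ranges, you can screen them against the reserved resources listed above. The Python sketch below reports which reserved blocks a proposed CIDR overlaps and whether a VLAN ID is in the customer range 2-3899. The function names are hypothetical; the ranges and VLAN limits come from this section.

```python
import ipaddress

# Address ranges reserved for internal use by Private Cloud Appliance.
RESERVED_NETWORKS = [
    ipaddress.ip_network("100.96.0.0/23"),       # ILOM service processors
    ipaddress.ip_network("100.96.2.0/23"),       # hardware management interfaces
    ipaddress.ip_network("253.255.0.0/16"),      # infrastructure networking (Class E)
    ipaddress.ip_network("169.254.239.0/24"),    # link-local services
    ipaddress.ip_network("169.254.169.254/32"),  # link-local address for appliance functions
]
# VLAN 1 is the untagged default; VLAN 3900 and higher are reserved for internal use.
CUSTOMER_VLANS = range(2, 3900)


def reserved_conflicts(cidr: str) -> list:
    """Return the reserved blocks that a proposed CIDR range overlaps."""
    net = ipaddress.ip_network(cidr)
    return [str(r) for r in RESERVED_NETWORKS if net.overlaps(r)]


def vlan_available(vlan_id: int) -> bool:
    """True if the VLAN ID is in the range available for customer use."""
    return vlan_id in CUSTOMER_VLANS


if __name__ == "__main__":
    print(reserved_conflicts("10.0.0.0/16"))    # []
    print(reserved_conflicts("100.96.0.0/22"))  # ['100.96.0.0/23', '100.96.2.0/23']
    print(vlan_available(3900))                 # False
```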

Flex Network Integration

Optionally, Private Cloud Appliance can be integrated with Oracle Exadata, or other external systems, for a high-performance combination of compute capacity and database or storage optimization. In this configuration, external nodes are directly connected to reserved ports on the spine switches of the Private Cloud Appliance. Four 100Gbit ports per spine switch are reserved and split into 4x25Gbit breakout ports, providing a maximum of 32 total cable connections. Each database node is cabled directly to both spine switches, meaning up to 16 external nodes can be connected to the appliance. External nodes from different external racks can be connected. This feature was formerly called Exadata Networks.

Once the cable connections are in place, the administrator configures a Flex network, which enables traffic between the connected database nodes and a set of compute instances. These prerequisites apply:

- The Flex network must not overlap with any subnets in the on-premises network.

- The VCNs containing compute instances that connect to the external nodes must have a dynamic routing gateway (DRG) configured.

- The relevant subnet route tables must contain rules to allow traffic to and from the Flex network.

The Flex network configuration determines which external nodes are exposed and which subnets have access to those nodes. Access can be enabled or disabled per Exadata cluster and per compute subnet. In addition, the Flex network can be exposed through the appliance's external network, allowing other resources within the on-premises network to connect to the nodes through the spine switches of the appliance. The Flex network configuration is created and managed through the Service CLI.
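The Flex network prerequisites can be screened before configuration. The Python sketch below checks that a proposed Flex network CIDR does not overlap on-premises subnets, that each relevant VCN has a DRG, and that each VCN has a route rule whose destination covers the Flex network. It is a simplification: it models route rules per VCN rather than per subnet route table, and `VcnSummary` is a hypothetical data structure, not a Service CLI or API object. All addresses are IPv4 examples.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import List


@dataclass
class VcnSummary:
    """Hypothetical summary of a VCN; not a Service CLI or API data structure."""
    name: str
    has_drg: bool
    route_destinations: List[str] = field(default_factory=list)


def flex_prerequisite_problems(flex_cidr: str, on_prem_cidrs: List[str],
                               vcns: List[VcnSummary]) -> List[str]:
    """Return unmet Flex network prerequisites for a proposed configuration."""
    flex = ipaddress.ip_network(flex_cidr)
    problems = []
    for cidr in on_prem_cidrs:
        if flex.overlaps(ipaddress.ip_network(cidr)):
            problems.append(f"Flex network {flex} overlaps on-premises subnet {cidr}")
    for vcn in vcns:
        if not vcn.has_drg:
            problems.append(f"VCN {vcn.name} has no dynamic routing gateway (DRG)")
        # A route rule covers the Flex network if its destination contains the whole Flex CIDR.
        if not any(flex.subnet_of(ipaddress.ip_network(d)) for d in vcn.route_destinations):
            problems.append(f"VCN {vcn.name} has no route rule for {flex}")
    return problems


if __name__ == "__main__":
    vcns = [VcnSummary("app", True, ["172.16.0.0/16"]), VcnSummary("web", False)]
    for problem in flex_prerequisite_problems("172.16.10.0/24", ["10.0.0.0/8"], vcns):
        print(problem)
```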