11 Archiving Backups to Cloud

This archive-to-cloud procedure builds on the techniques used for copy-to-tape. The difference is that it sends backups to cloud repositories for longer-term storage.

This procedure includes steps for configuring a credential wallet to store TDE master keys, because backups are encrypted before they are archived to a cloud repository. The initial configuration tasks are performed in the Oracle Key Vault to prepare the wallet. RACLI commands assist in configuring the Recovery Appliance for archive-to-cloud and in using the wallet. At the end, a job template for archive-to-cloud is created and run.

Grouping Backup Pieces

The performance of copy-to-tape and archive-to-cloud is improved by grouping archived logs from protected databases' real-time redo into fewer backup sets.

Protected databases can achieve real-time protection by enabling real-time redo transport to the Recovery Appliance. Each redo log received on the appliance is compressed and written to the storage location as an individual archived log backup. These log backups can be archived to tape or cloud, to support full and incremental backups that are archived for long-term retention needs.

- To tape: use Oracle Secure Backup (OSB) module or a third-party backup software module installed on the Recovery Appliance.

- To cloud: use the Cloud Backup SBT module.

Inter-job latency can occur between writing each backup piece during copy-to-tape operations. When the number of backup pieces is high, these pauses account for a large percentage of the time during which the tape drive is unavailable. This means that five (5) 10 GB pieces go to tape more quickly than fifty (50) 1 GB pieces.
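The effect of inter-job latency can be illustrated with a small calculation. The following is a minimal sketch, not a measurement: the throughput and pause values are hypothetical, and the function name is chosen only for this illustration.

```python
def copy_time_seconds(piece_count, piece_size_gb, throughput_gb_per_s, latency_s):
    """Total copy time = data transfer time + one inter-job pause per piece."""
    transfer = piece_count * piece_size_gb / throughput_gb_per_s
    pauses = piece_count * latency_s
    return transfer + pauses


# Hypothetical figures: 0.25 GB/s drive throughput, 20 s pause per backup piece.
few_large = copy_time_seconds(5, 10, 0.25, 20)    # 200 s transfer + 100 s pauses
many_small = copy_time_seconds(50, 1, 0.25, 20)   # 200 s transfer + 1000 s pauses

print(few_large, many_small)  # 300.0 1200.0
```

Both cases move the same 50 GB, so the transfer time is identical. Only the number of pauses differs.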

Recovery Appliance addresses inter-job latency by grouping archived log backup pieces together and copying them as a single backup piece. This results in larger backup pieces on tape storage than in previous releases. This feature is enabled by default. DBMS_RA.CONFIG has the parameter group_log_max_count, which sets the maximum number of archived logs per backup piece that is copied to tape; its default is 1. The group_log_backup_size_gb parameter limits the size of these larger backup pieces; its default is 256 GB.

Prerequisites for Archive-to-Cloud
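How a count limit and a size limit interact can be sketched as follows. This is an illustration only: the text does not describe the grouping algorithm that the Recovery Appliance uses, so the arrival-order policy and the function name group_logs are assumptions. The two arguments merely mirror the roles of group_log_max_count and group_log_backup_size_gb.

```python
def group_logs(log_sizes_gb, max_count, max_size_gb):
    """Group log sizes, in arrival order, into pieces bounded by count and size.

    Assumed policy: start a new piece when adding the next log would exceed
    either limit. A single log larger than max_size_gb still gets its own piece.
    """
    if max_count < 1:
        raise ValueError("max_count must be at least 1")
    pieces, current, current_size = [], [], 0.0
    for size in log_sizes_gb:
        too_many = len(current) >= max_count
        too_big = bool(current) and current_size + size > max_size_gb
        if too_many or too_big:
            pieces.append(current)
            current, current_size = [], 0.0
        current.append(size)
        current_size += size
    if current:
        pieces.append(current)
    return pieces


# Count limit reached first: five 1 GB logs, at most 2 logs per piece.
print(group_logs([1, 1, 1, 1, 1], max_count=2, max_size_gb=256))
# [[1, 1], [1, 1], [1]]

# Size limit reached first: three 100 GB logs, at most 256 GB per piece.
print(group_logs([100, 100, 100], max_count=10, max_size_gb=256))
# [[100, 100], [100]]
```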

The following prerequisites must be met before starting to use cloud storage with the Recovery Appliance.

- Protected databases must already be enrolled, with backups taken to the Recovery Appliance.

  This is covered in Configuring Recovery Appliance for Protected Database Access. Brief review:

  - Create a virtual private catalog user.

  - Enroll the protected database.

  - Update the properties for the protected database.

- The Recovery Appliance has been registered and enrolled at an Oracle Key Vault.

- Archive-to-cloud features are supported only on little-endian databases. Only Linux and Windows are supported.

  Big-endian databases that attempt archive-to-cloud receive error ORA-64800: unable to create encrypted backup for big endian platform.

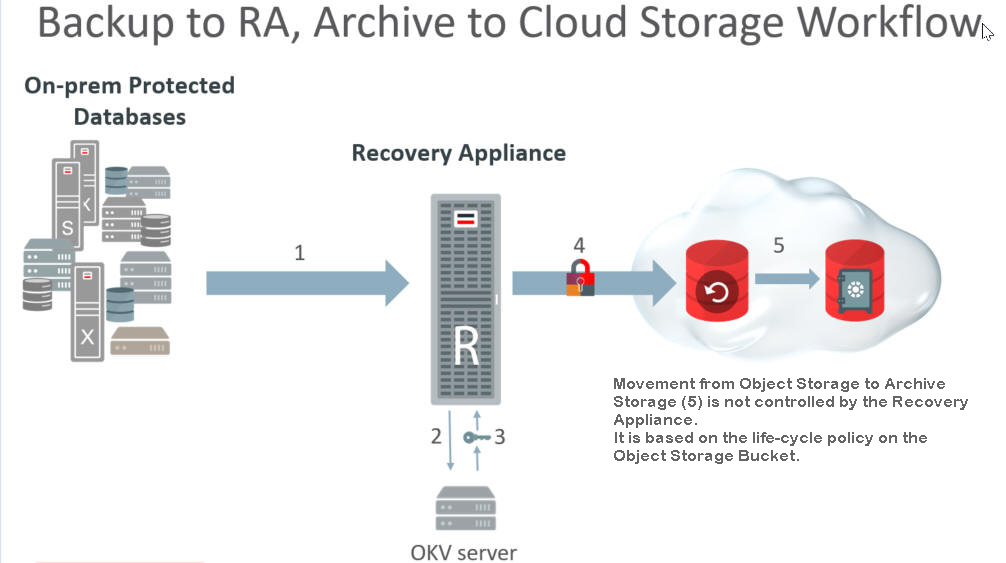

Flow for Archive-to-Cloud Storage

All backup objects archived to cloud storage are encrypted using a random Data Encryption Key (DEK). A Transparent Data Encryption (TDE) master key for each protected database is used to encrypt the DEK; the encrypted DEK is stored in the backup piece. The Oracle Key Vault (OKV) contains the TDE master keys; it does not contain the individual DEKs used to encrypt backups written to tape or cloud. A protected database may acquire many TDE master keys over time, so restoring an individual archived object requires the master key that the protected database was using at the time of the backup.

The following image shows the flow for backing up to a Recovery Appliance that archives to cloud storage. The restore operations are predicated on this backup and archive flow.

Figure 11-1 Flow for Backups to Cloud Storage

- Incremental backups of the database are performed regularly to the Recovery Appliance. This happens at a different interval than the following archive operations.

- When the scheduled archive-to-cloud operation starts, the Recovery Appliance requests a master key for the protected database from the OKV Server.

- The OKV returns the protected database's master key. If one doesn't exist for the protected database, a new master key is generated. (A new master key can be generated whenever desired.)

- A DEK is generated for the backup object(s).

- The backup objects are encrypted using the DEK.

- Using the master key, the Recovery Appliance encrypts the DEK and stores this with the backup object.

- The life-cycle policy for a given database determines if and when its backup objects are written to tape or cloud storage.

- The life-cycle policy of the object storage bucket determines if and when a backup object in cloud storage moves from object storage to archive storage. The Recovery Appliance does not control this.
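The key handling in this flow follows an envelope pattern: the DEK encrypts the data, and the master key encrypts the DEK. The following is a minimal sketch of that pattern under loudly stated assumptions. The KeyVault class, the archive and restore functions, and the key_id field are hypothetical names for illustration, and toy_cipher is a toy XOR keystream that stands in for a real cipher. It is not the encryption that the Recovery Appliance or OKV uses and must not be used to protect data.

```python
import hashlib
import secrets


def toy_cipher(key: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256 counter keystream. Toy stand-in for a real
    cipher; applying it twice with the same key returns the original data."""
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


class KeyVault:
    """Hypothetical key vault model: holds master keys only, never DEKs."""

    def __init__(self):
        self._keys = {}     # key_id -> master key (old keys are kept)
        self._current = {}  # database name -> key_id used for new backups

    def new_master_key(self, db_name):
        key_id = f"{db_name}-mk{len(self._keys) + 1}"
        self._keys[key_id] = secrets.token_bytes(32)
        self._current[db_name] = key_id
        return key_id

    def current_key(self, db_name):
        if db_name not in self._current:  # no key yet: generate one
            self.new_master_key(db_name)
        key_id = self._current[db_name]
        return key_id, self._keys[key_id]

    def key_by_id(self, key_id):
        return self._keys[key_id]


def archive(vault, db_name, backup: bytes) -> dict:
    key_id, master_key = vault.current_key(db_name)  # request the master key
    dek = secrets.token_bytes(32)                    # generate a random DEK
    return {
        "key_id": key_id,                            # assumption: piece records which key wrapped it
        "ciphertext": toy_cipher(dek, backup),       # encrypt the backup with the DEK
        "wrapped_dek": toy_cipher(master_key, dek),  # encrypt the DEK with the master key
    }


def restore(vault, piece: dict) -> bytes:
    master_key = vault.key_by_id(piece["key_id"])
    dek = toy_cipher(master_key, piece["wrapped_dek"])
    return toy_cipher(dek, piece["ciphertext"])


vault = KeyVault()
old_piece = archive(vault, "orcl", b"backup one")
vault.new_master_key("orcl")                      # a later master key for the same database
new_piece = archive(vault, "orcl", b"backup two")

print(restore(vault, old_piece))  # b'backup one'
print(restore(vault, new_piece))  # b'backup two'
```

The older piece remains restorable only because the vault still holds the master key that wrapped its DEK, which is why the master key in use at the time of the backup is required.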

Oracle Key Vault and Recovery Appliance

The Oracle Key Vault (OKV) stores the TDE master keys and also keeps track of all enrolled endpoints.

Endpoints are the database servers, application servers, and computer systems where actual cryptographic operations such as encryption or decryption are performed. Endpoints request OKV to store and retrieve security objects.

A brief overview of the Oracle Key Vault (OKV) configurations:

-

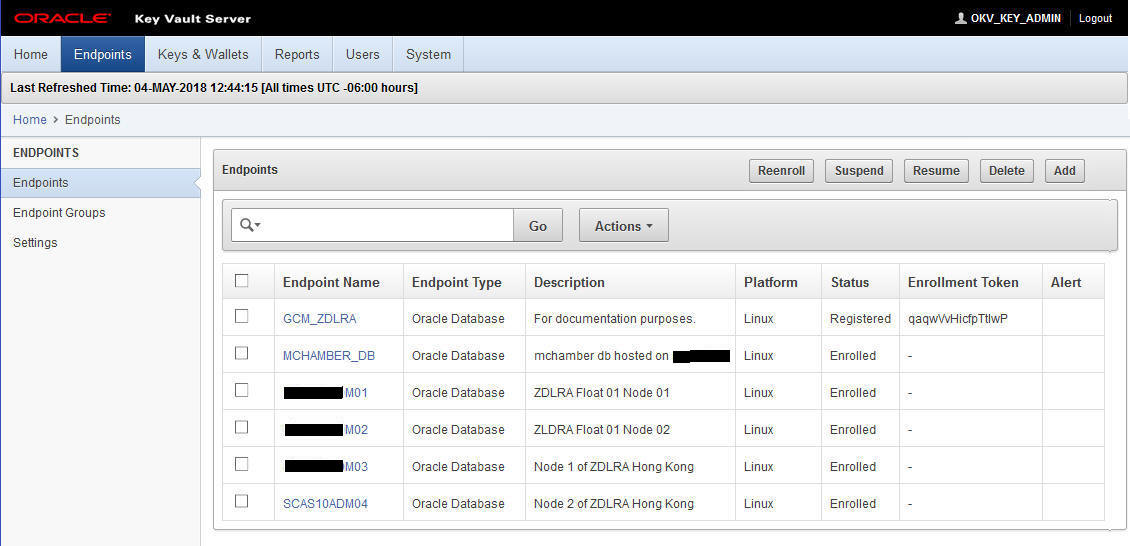

All compute nodes of the Recovery Appliance are registered and enrolled as OKV endpoints.

-

A single OKV endpoint group contains all the endpoints corresponding to all of the compute nodes of the Recovery Appliance.

-

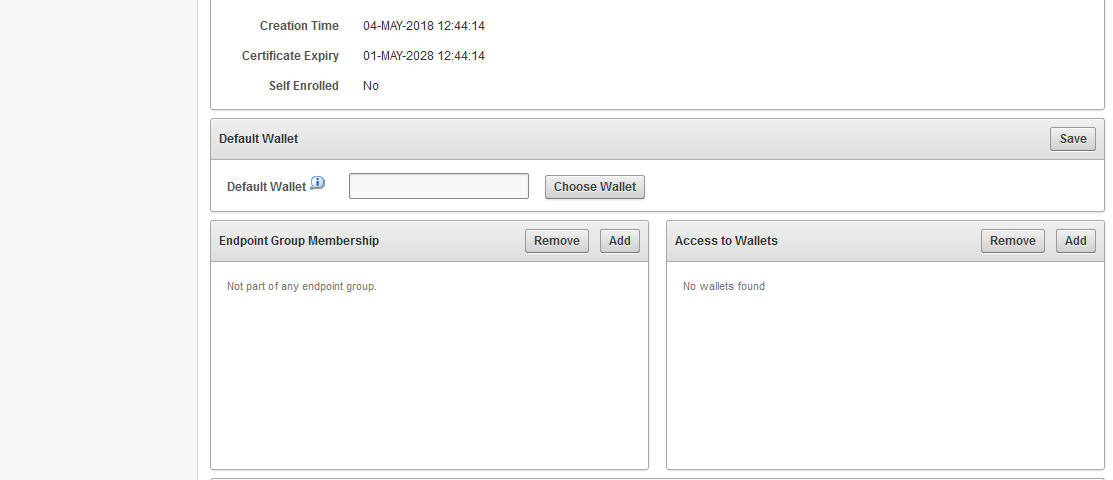

A single wallet is shared and configured as

'Default Wallet'for all endpoints corresponding to all of the compute nodes of the Recovery Appliance. -

The OKV endpoint group is configured with read/write/manage access to the shared virtual wallet.

- If more than one Recovery Appliance is involved, each Recovery Appliance has its own end point group and wallet.

-

The host-specific

okvclient.jaris created and saved during the enrollment process of each endpoint to the staging path on its respective node. If the root user is performing the operation, the/radumpis the staging path. If a named user (such asraadmin) is performing the operation, then the staging has to be in/tmp. The staged file has to be named either as-isokvclient.jaror<myHost>-okvclient.jar, where<myHost>matches whathostnamereturns.
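The staging rule for okvclient.jar can be summarized in a few lines. This is a sketch of the naming rule only: the function staged_jar_candidates is a hypothetical helper, not part of RACLI or OKV, and socket.gethostname() is assumed to return the same value as the hostname command.

```python
import socket
from pathlib import PurePosixPath


def staged_jar_candidates(user, hostname=None):
    """Return the two accepted staged file paths for the given user.

    root stages in /radump; any named user (such as raadmin) stages in /tmp.
    """
    host = hostname or socket.gethostname()
    staging_dir = PurePosixPath("/radump" if user == "root" else "/tmp")
    return [
        str(staging_dir / "okvclient.jar"),          # as-is name
        str(staging_dir / f"{host}-okvclient.jar"),  # host-specific name
    ]


# "ra01" is a made-up host name for the example.
print(staged_jar_candidates("raadmin", "ra01"))
# ['/tmp/okvclient.jar', '/tmp/ra01-okvclient.jar']
print(staged_jar_candidates("root", "ra01"))
# ['/radump/okvclient.jar', '/radump/ra01-okvclient.jar']
```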

Note:

Refer to Oracle Key Vault Administrator's Guide for more information.

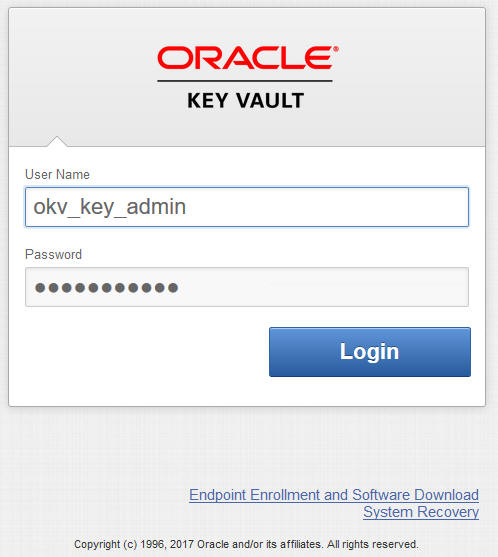

Review: Oracle Key Vault

This reference section uses concepts from the Oracle Key Vault (OKV) Administrator's Guide.

The OKV administrator performs these tasks, which are a prerequisite for the operations performed by the Recovery Appliance administrator. The OKV administrator configures the OKV endpoints.

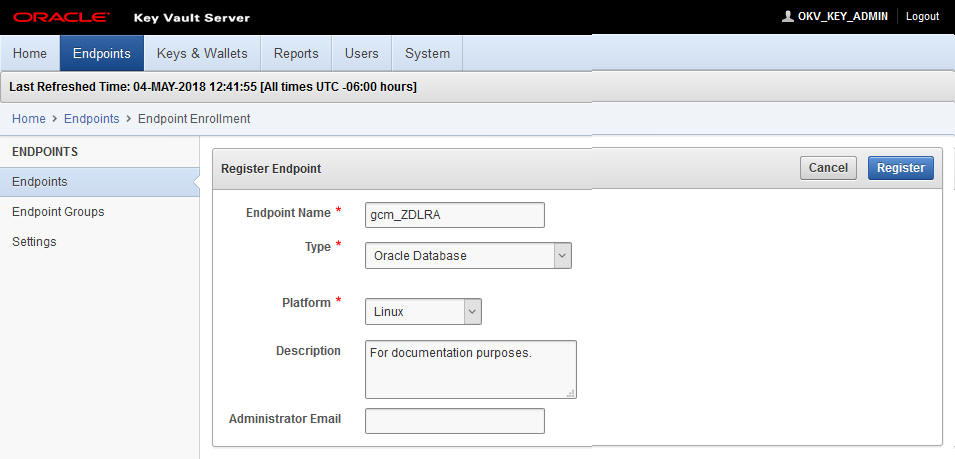

Creating the Endpoints

These operations for creating an Endpoint are performed from the Key Vault Server Web Console.

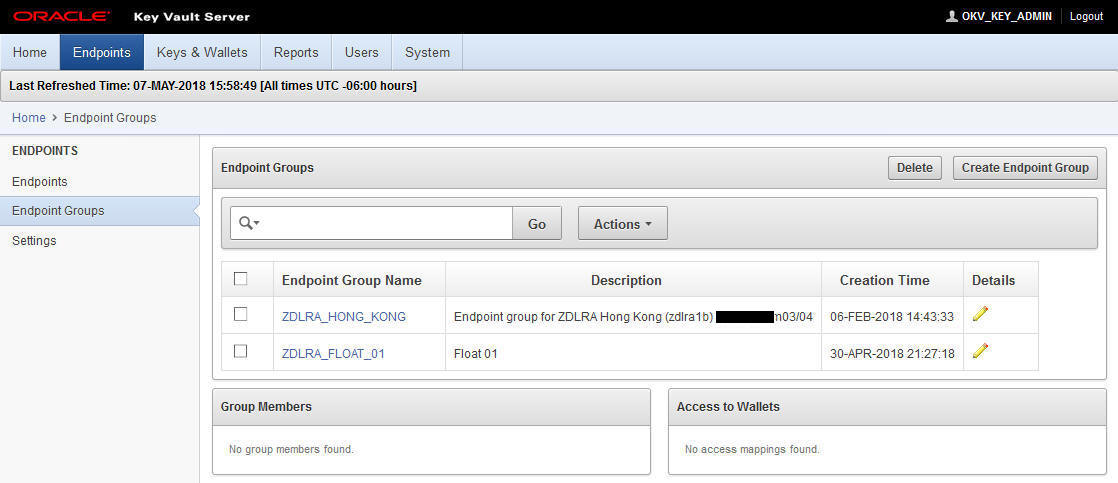

Creating the Endpoint Group

These operations for creating an Endpoint Group are performed from the Key Vault Server Web Console.

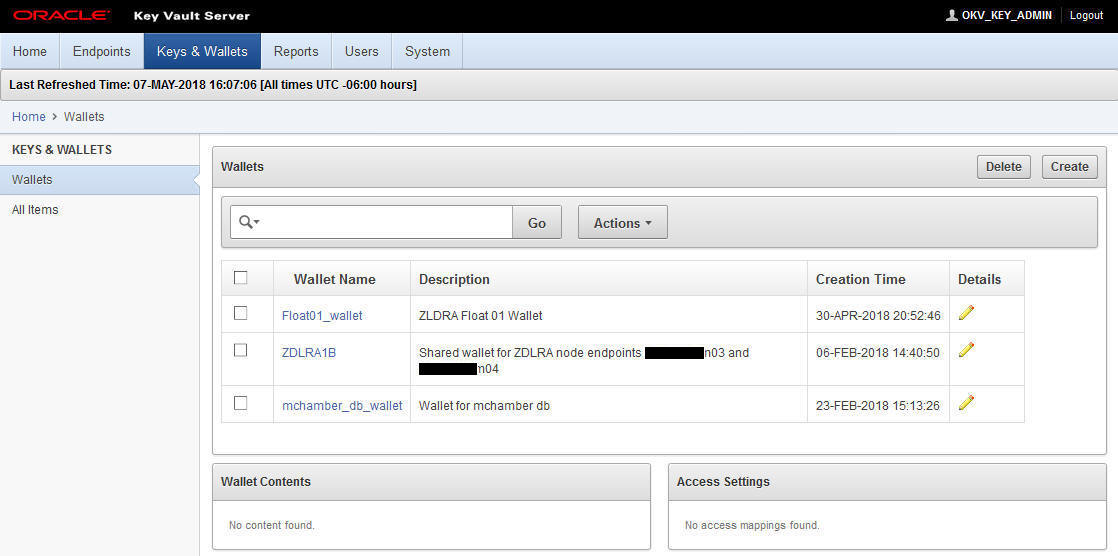

Creating a Wallet

These operations for creating a Wallet are performed from the Key Vault Server Web Console.

Associating Default Wallet with Endpoints

These operations for associating the virtual wallet with an Endpoint are performed from the Key Vault Server Web Console.

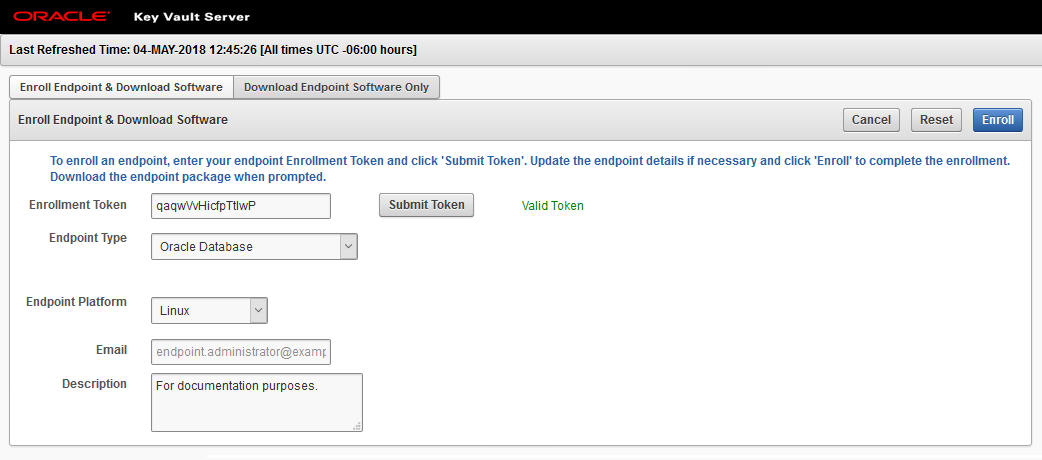

Acquiring the Enrollment Tokens

These operations for acquiring the enrollment tokens are performed from the Key Vault Server Web Console.

Downloading the OKV Client Software

These operations for downloading the OKV client software are performed from the Key Vault Server Web Console.

The following steps are repeated for each node of the Recovery Appliance. These steps download JAR files that are specific to the Recovery Appliance node.

Note:

Do not install the JAR files at this point in time. Installation happens after other Recovery Appliance configuration steps.

The JAR files are valid only until enrollment of the OKV endpoints is complete.

Recovery Appliance Cloud Archive Configuration

This section configures the Recovery Appliance to be able to use wallets and cloud objects as required for archive-to-cloud.

Configuring the Credential Wallet and Encryption Keystore

All database backup pieces are DEK encrypted before any copy-to-tape or archive-to-cloud operation.

These steps create a shared wallet to be used by all nodes of the Recovery Appliance. The wallet stores TDE master keys that encrypt the individual DEK keys.

Installing the OKV Client Software

Each node of the Recovery Appliance needs to have the appropriate client software for the Oracle Key Vault (OKV). This is accomplished using RACLI in one step.

admin_user or root user who have access to /radump, then stage the okvclient.jar file in /tmp on both nodes.

Enabling the Encryption Keystore and Creating a TDE Master Key

This task enables a keystore and creates the first TDE master key.

The OKV endpoint keystore is also known as the "OKV shared wallet." Once a keystore has been created, it must be enabled for use and the first TDE master key created for it.

Creating Cloud Objects for Archive-to-Cloud

This task creates the OCI objects Cloud_Key and Cloud_User for use with archive-to-cloud.

Adding Cloud Location

This task configures a cloud bucket location for archive-to-cloud.

Creating a cloud_location requires that a cloud_user object has already been created. Each cloud_location is tied to a single, specified cloud_user. The resulting object name translates to the cloud sbt_library name, such as bucket_cloud_user. In this model, the relationship between cloud_user and cloud_location is one-to-one.

The options given to RACLI are passed to the installer, which handles setting lifecycle management for the bucket.

When completed, Object Storage is authorized to move backups to Archive Storage, as per Configuring Automatic Archival to Oracle Cloud Infrastructure.

In later steps, you need the name of the attribute set to create an sbt_job_template. This name can be derived from the "racli list cloud_location --long" output. The SBT library and attribute sets created by racli can be displayed using dbms_ra, but should not be modified.

Adding an Immutable Cloud Location

This task configures an immutable cloud bucket location for archive-to-cloud.

An immutable bucket is one that retains backups in cloud storage for a period specified by the KEEP UNTIL attribute of the backup. An immutable cloud location requires two buckets that must be created in advance using the OCI Console, the ZFS console, or the OCI command line interface. The cloud buckets are:

- A Regulatory Compliance Bucket that has a retention rule set and locked.

- A Temporary Metadata Bucket without retention rules.

The retention rules apply to the whole bucket. Therefore, the bucket should not use automatic lifecycle rules that trigger Delete. The recommendation is one database per immutable cloud location.

Creating a Job Template

This task creates a job template for archive-to-cloud.

The options given to RACLI are passed to the installer, which handles setting lifecycle management for the bucket.

Creating or Re-Creating Protected Database TDE Master Keys

This step creates or recreates the TDE master keys used from that point forward for encrypting the DEK keys used on protected databases.

The following re-key options are available.

- Re-key all protected databases.

  SQL> exec dbms_ra.key_rekey;

- Re-key a specific protected database.

  SQL> exec dbms_ra.key_rekey (db_unique_name=>'< DB UNIQUE NAME >');

- Re-key all protected databases for a specific protection policy.

  SQL> exec dbms_ra.key_rekey (protection_policy_name=>'< PROTECTION POLICY >');

Re-keying creates new TDE master keys that are used from that point in time forward. Re-keying does not affect the availability of older master keys in the keystore.
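The three re-key scopes and the retention of older keys can be modeled conceptually as follows. This is a sketch under assumptions, not the DBMS_RA implementation: the Keystore class, its rekey method, and the database and policy names are all hypothetical. Only the parameter names db_unique_name and protection_policy_name are taken from the commands above.

```python
from itertools import count


class Keystore:
    """Hypothetical model: every re-key adds a key; no key is ever removed."""

    def __init__(self, databases):
        self.databases = dict(databases)  # db_unique_name -> protection policy
        self.keys = {}                    # key_id -> db_unique_name
        self.current = {}                 # db_unique_name -> key_id for new backups
        self._ids = count(1)

    def rekey(self, db_unique_name=None, protection_policy_name=None):
        if db_unique_name is not None:
            targets = [db_unique_name]            # one specific database
        elif protection_policy_name is not None:
            targets = [db for db, policy in self.databases.items()
                       if policy == protection_policy_name]
        else:
            targets = list(self.databases)        # all protected databases
        for db in targets:
            key_id = next(self._ids)
            self.keys[key_id] = db                # older keys stay available
            self.current[db] = key_id             # used from this point forward
        return targets


store = Keystore({"db1": "gold", "db2": "gold", "db3": "silver"})
store.rekey()                                # all databases: keys 1, 2, 3
store.rekey(protection_policy_name="gold")   # db1 and db2: keys 4, 5
store.rekey(db_unique_name="db3")            # db3 only: key 6

print(store.current)       # {'db1': 4, 'db2': 5, 'db3': 6}
print(sorted(store.keys))  # [1, 2, 3, 4, 5, 6]
```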