3 Upgrade Procedures

Overview

The upgrade procedures in this document explain how to set up and perform an upgrade of the Oracle Communications Signaling, Network Function Cloud Native Environment (OCCNE). The upgrade includes the OL7 base image, Kubernetes, and the common services.

Pre-upgrade Procedures

Perform the following pre-upgrade procedures before upgrading OCCNE:

- SSH to the Bastion Host and start the Jenkins container with the following command, after setting the required parameters:

$ docker run -u root -d --restart=always -p 8080:8080 -p 50000:50000 -v jenkins-data:/var/jenkins_home -v /var/occne/cluster/${OCCNE_CLUSTER}:/var/occne/cluster/${OCCNE_CLUSTER} -v /var/run/docker.sock:/var/run/docker.sock ${CENTRAL_REPO}:${CENTRAL_REPO_DOCKER_PORT}/jenkinsci/blueocean:1.19.0

- Get the administrator password from the Jenkins container so that you can log in to the Jenkins user interface running on the Bastion Host:

  - SSH to the Bastion Host and run the following command to get the Jenkins Docker container ID:

$ docker ps | grep 'jenkins' | awk '{print $1}'

Example output: 19f6e8d5639d

  - Open a bash shell in the Jenkins container to read the admin password:

$ docker exec -it <container id from above command> bash

  - Run the following command inside the Jenkins container's bash shell and capture the password. You will use it later, with the user name admin, to log in to the Jenkins GUI.

$ cat /var/jenkins_home/secrets/initialAdminPassword

Example output: e1b3bd78a88946f9a0a4c5bfb0e74015

  - From the same bash shell in the Jenkins container, run the following SSH command, substituting the Bastion Host IP address.

Note: The Bare Metal user is admusr and the vCNE user is cloud-user.

ssh -t -t -i /var/occne/cluster/<cluster_name>/.ssh/occne_id_rsa <user>@<bastion_host_ip_address>

  - After the SSH command runs, the following prompt appears: 'The authenticity of host can't be established. Are you sure you want to continue connecting (yes/no)'. Enter yes.

  - Exit the bash shell of the Jenkins container (that is, enter exit at the command line).
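The Jenkins start and password steps above can also be kept in a small shell file on the Bastion Host. The sketch below is an assumption-based convenience rather than part of the OCCNE tooling: the function names are hypothetical, while the image tag, volume mounts, and password path are copied from the procedure. It reads the password with a non-interactive docker exec instead of opening a bash shell.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the Jenkins pre-upgrade steps. Image tag, volumes
# and the password path are taken from the procedure; names are illustrative.

# Build the Jenkins image reference from the central repository host and port.
jenkins_image_ref() {
  local repo="$1" port="$2"
  printf '%s:%s/jenkinsci/blueocean:1.19.0\n' "$repo" "$port"
}

# Print the ID of the first `docker ps` line that mentions jenkins
# (same filter as grep 'jenkins' | awk '{print $1}'). Reads stdin.
jenkins_container_id() {
  awk '/jenkins/ { print $1; exit }'
}

# Start Jenkins with the same options as the docker run command above.
start_jenkins() {
  : "${OCCNE_CLUSTER:?set OCCNE_CLUSTER}" "${CENTRAL_REPO:?set CENTRAL_REPO}" \
    "${CENTRAL_REPO_DOCKER_PORT:?set CENTRAL_REPO_DOCKER_PORT}"
  docker run -u root -d --restart=always -p 8080:8080 -p 50000:50000 \
    -v jenkins-data:/var/jenkins_home \
    -v "/var/occne/cluster/${OCCNE_CLUSTER}:/var/occne/cluster/${OCCNE_CLUSTER}" \
    -v /var/run/docker.sock:/var/run/docker.sock \
    "$(jenkins_image_ref "$CENTRAL_REPO" "$CENTRAL_REPO_DOCKER_PORT")"
}

# Read the initial admin password without an interactive shell.
jenkins_admin_password() {
  local id
  id="$(docker ps | jenkins_container_id)"
  [ -n "$id" ] || { echo "no jenkins container running" >&2; return 1; }
  docker exec "$id" cat /var/jenkins_home/secrets/initialAdminPassword
}
```

For example, after exporting OCCNE_CLUSTER, CENTRAL_REPO, and CENTRAL_REPO_DOCKER_PORT, source the file and run start_jenkins, then jenkins_admin_password. Accepting the Bastion Host key over SSH from inside the container still has to be done interactively, as described above.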


- Open the Jenkins GUI in a browser at <bastion-host-ip>:8080 and log in with the user name admin and the initial administrator password captured earlier.

- Create a job with an appropriate name:

  - Select New Item on the Jenkins home page.

  - Enter a name and select the Pipeline option to create the job.

  - Once the job is created and visible on the Jenkins home page, select the job, and then select Configure.

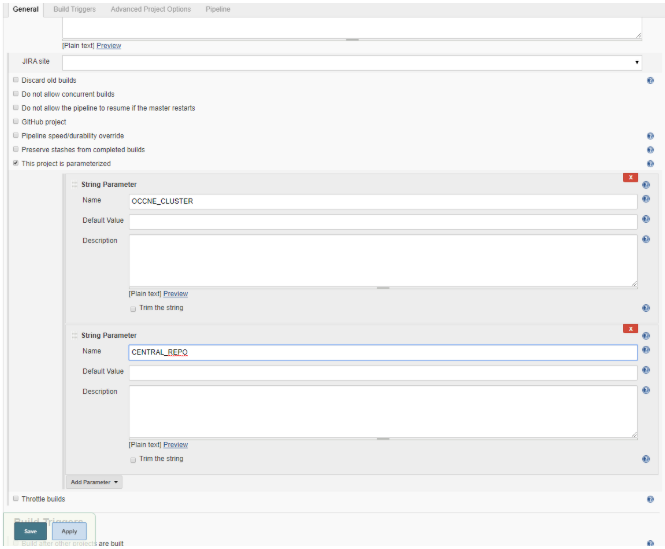

- From the Configure screen, add the parameters OCCNE_CLUSTER and CENTRAL_REPO. Two String Parameter dialogs appear, one for OCCNE_CLUSTER and one for CENTRAL_REPO. Enter a value in the Default Value field of each dialog.

Figure 3-1 Jenkins UI

- Copy the following configuration into the Pipeline script section of the job's Configure page, substituting values for <upgrade-image-version>, OCCNE_CLUSTER (including <cluster-name> in the load path), CENTRAL_REPO, and CENTRAL_REPO_DOCKER_PORT. You should already know all of these values.

node ('master') {
    // Copy the upgrade JenkinsFile from the provision image into the cluster artifacts directory
    sh "docker run -i --rm -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -e ANSIBLE_NOCOLOR=1 ${CENTRAL_REPO}:${CENTRAL_REPO_DOCKER_PORT}/occne/provision:<upgrade-image-version> cp deploy_upgrade/JenkinsFile /host/artifacts"
    // Copy the common-services upgrade script into the same directory
    sh "docker run -i --rm -v /var/occne/cluster/${OCCNE_CLUSTER}:/host -e ANSIBLE_NOCOLOR=1 ${CENTRAL_REPO}:${CENTRAL_REPO_DOCKER_PORT}/occne/provision:<upgrade-image-version> cp deploy_upgrade/upgrade_services.py /host/artifacts"
    // Load the upgrade pipeline defined in the copied JenkinsFile
    load '/var/occne/cluster/<cluster-name>/artifacts/JenkinsFile'
}

- Select Apply, and then select Save.

- Go back to the job page and select Build with Parameters so that the new pipeline script is enabled for the job. This first build will be aborted.

- Select Build with Parameters again to see the latest JenkinsFile parameters in the GUI.
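Because the pipeline script above contains several placeholders, it can help to generate it instead of editing it by hand. This sketch uses a hypothetical render_pipeline function that fills every placeholder with a literal value; its output should match the script above with your values substituted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Jenkins pipeline script with all
# placeholders (cluster, registry host, registry port, image version)
# replaced by literal values, ready to paste into the Configure page.
render_pipeline() {
  local cluster="$1" repo="$2" port="$3" version="$4"
  local image="${repo}:${port}/occne/provision:${version}"
  # Common docker run prefix shared by both copy steps
  local run="docker run -i --rm -v /var/occne/cluster/${cluster}:/host -e ANSIBLE_NOCOLOR=1 ${image}"
  cat <<EOF
node ('master') {
    sh "${run} cp deploy_upgrade/JenkinsFile /host/artifacts"
    sh "${run} cp deploy_upgrade/upgrade_services.py /host/artifacts"
    load '/var/occne/cluster/${cluster}/artifacts/JenkinsFile'
}
EOF
}
```

For example, render_pipeline delta winterfell 5000 1.4.0 prints the script for the example cluster delta and the winterfell:5000 registry used elsewhere in this document.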

Setup admin.conf

Execute the pre-upgrade admin.conf setup:

Note: These steps are required only for the upgrade from 1.3.2 to 1.4.0.

- Add the following entries to the /etc/hosts file on the Bastion Host and on all master and worker nodes, mapping every master internal IP address to the name lb-apiserver.kubernetes.local:

<master-internal-ip-1> lb-apiserver.kubernetes.local
<master-internal-ip-2> lb-apiserver.kubernetes.local
<master-internal-ip-3> lb-apiserver.kubernetes.local

Example for 3 master nodes:

172.16.5.6 lb-apiserver.kubernetes.local
172.16.5.7 lb-apiserver.kubernetes.local
172.16.5.8 lb-apiserver.kubernetes.local

- Log in to the Bastion Host and get the dependencies for the latest 1.4.0 pipeline:

$ docker run -it --rm -v /var/occne/cluster/<cluster-name>:/host -e ANSIBLE_NOCOLOR=1 -e 'OCCNEARGS= ' <central_repo>:<central_repo_docker_port>/occne/provision:<upgrade_image_version> /getdeps/getdeps

Example:

$ docker run -it --rm -v /var/occne/cluster/delta:/host -e ANSIBLE_NOCOLOR=1 -e 'OCCNEARGS= ' winterfell:5000/occne/provision:1.4.0 /getdeps/getdeps

- On a Bare Metal cluster, run the following command:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/<cluster-name>:/host -v /var/occne:/var/occne:rw -e 'OCCNEARGS= --tags=pre_upgrade ' <central_repo>:<central_repo_docker_port>/occne/provision:1.4.0

Example:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/delta:/host -v /var/occne:/var/occne:rw -e 'OCCNEARGS= --tags=pre_upgrade ' winterfell:5000/occne/provision:1.4.0

- On a vCNE cluster, run the following command:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/<cluster-name>:/host -v /var/occne:/var/occne:rw -e OCCNEINV=/host/terraform/hosts -e 'OCCNEARGS= --tags=pre_upgrade --extra-vars={"occne_vcne":"1","occne_cluster_name":"<occne_cluster_name>","occne_repo_host":"<occne_repo_host_name>","occne_repo_host_address":"<occne_repo_host_address>"} ' <central_repo>:<central_repo_docker_port>/occne/provision:<upgrade_image_version>

Example:

$ docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/delta:/host -v /var/occne:/var/occne:rw -e OCCNEINV=/host/terraform/hosts -e 'OCCNEARGS= --tags=pre_upgrade --extra-vars={"occne_vcne":"1","occne_cluster_name":"ankit-upgrade-3","occne_repo_host":"ankit-upgrade-3-bastion-1","occne_repo_host_address":"192.168.200.9"} ' winterfell:5000/occne/provision:1.4.0

Run kubectl get nodes to verify that the changes above were applied correctly.

- Run the following command on all master and worker nodes:

sudo yum clean all
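The /etc/hosts step and the vCNE --extra-vars value above are easy to mistype. The following sketch, with hypothetical function names, prints the lb-apiserver.kubernetes.local entries, appends them to a hosts file only when they are missing (so re-running it is harmless), and assembles the extra-vars JSON from the three values the vCNE command needs.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the admin.conf pre-upgrade steps. The host name
# and the extra-vars keys come from the procedure; function names do not.

# Print one "<ip> lb-apiserver.kubernetes.local" line per master internal IP.
lb_apiserver_entries() {
  local ip
  for ip in "$@"; do
    printf '%s lb-apiserver.kubernetes.local\n' "$ip"
  done
}

# Append only entries not already present (exact line match) in the hosts
# file, so the step can be repeated safely. Run as root on every node.
add_lb_apiserver_entries() {
  local hosts_file="$1"; shift
  local line
  while IFS= read -r line; do
    grep -qxF "$line" "$hosts_file" || printf '%s\n' "$line" >> "$hosts_file"
  done < <(lb_apiserver_entries "$@")
}

# Build the --extra-vars JSON for the vCNE pre_upgrade command:
#   $1 = cluster name, $2 = repo host name, $3 = repo host address
vcne_extra_vars() {
  printf '{"occne_vcne":"1","occne_cluster_name":"%s","occne_repo_host":"%s","occne_repo_host_address":"%s"}\n' \
    "$1" "$2" "$3"
}
```

For example, as root on each node: add_lb_apiserver_entries /etc/hosts 172.16.5.6 172.16.5.7 172.16.5.8. The output of vcne_extra_vars ankit-upgrade-3 ankit-upgrade-3-bastion-1 192.168.200.9 matches the --extra-vars value in the vCNE example. Existing entries written with tabs instead of a single space are not recognized as duplicates.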


Upgrading K8s container engine from Docker to Containerd

This section explains how to switch the Kubernetes container engine from Docker to containerd.

Note: This step applies only to the upgrade from 1.3.2 to 1.4.0, where the Kubernetes version stays the same but the cluster's container engine changes. Omit this step from future upgrade procedures.

- Get the Kubernetes dependencies for the 1.4.0 containerd upgrade on the Bastion Host. Example:

ANSIBLE_NOCOLOR=1 OCCNE_VERSION= K8S_IMAGE=winterfell:5000/occne/k8s_install:1.4.0 CENTRAL_REPO=winterfell K8S_ARGS="" K8S_SKIP_TEST=1 K8S_SKIP_DEPLOY=1 /var/occne/cluster/<cluster-name>/artifacts/pipeline.sh

- Create upgrade_container.yml in the /var/occne/cluster/<cluster_name> directory with the following contents:

- hosts: k8s-cluster
  tasks:
    - name: Switch Docker container runtime to containerd
      shell: "{{ item }}"
      with_items:
        - "sudo cp /etc/cni/net.d/calico.conflist.template 10-containerd-net.conflist"
        - "systemctl daemon-reload"
        - "systemctl enable containerd"
        - "systemctl restart containerd"
        - "systemctl stop docker"
        - "systemctl daemon-reload"
        - "systemctl restart kubelet"
        - "sudo yum remove -y docker-ce"
      ignore_errors: yes

- Run the k8s install container in bash mode to switch the container engine from Docker to containerd.

Bare Metal clusters:

docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/<cluster-name>:/host -v /var/occne:/var/occne:rw -e ANSIBLE_NOCOLOR=1 -e 'OCCNEARGS= ' winterfell:5000/occne/k8s_install:1.4.0 bash

vCNE clusters (take the OpenStack values from the cloud config). Example:

docker run -it --rm --cap-add=NET_ADMIN --network host -v /var/occne/cluster/<cluster-name>:/host -v /var/occne:/var/occne:rw -e OCCNEINV=/host/terraform/hosts -e 'OCCNEARGS=--extra-vars={"occne_vcne":"1","occne_cluster_name":"ankit-upgrade-3","occne_repo_host":"ankit-upgrade-3-bastion-1","occne_repo_host_address":"192.168.200.9"} --extra-vars={"openstack_username":"ankit.misra","openstack_password":"{Cloud-Password}","openstack_auth_url":"http://thundercloud.us.oracle.com:5000/v3","openstack_region":"RegionOne","openstack_tenant_id":"811ef89b5f154ab0847be2f7e41117c0","openstack_domain_name":"LDAP","openstack_lbaas_subnet_id":"2787146b-56fe-4c58-bd87-086856de24a9","openstack_lbaas_floating_network_id":"e4351e3e-81e3-4a83-bdc1-dde1296690e3","openstack_lbaas_use_octavia":"true","openstack_lbaas_method":"ROUND_ROBIN","openstack_lbaas_enabled":true} ' winterfell:5000/occne/k8s_install:<image_tag> bash

Once you are in the container's bash shell, the following steps are common to vCNE and Bare Metal clusters. Wait until all pods are ready (1/1) with status Running; check this with kubectl get pods. Continue only after confirming that all pods are ready and running.

sed -i /kubespray/roles/bootstrap-os/tasks/bootstrap-oracle.yml -re '2, 16d'
sed -i /kubespray/roles/kubernetes-apps/ingress_controller/cert_manager/tasks/main.yml -re '3, 58d'

# Run the playbook that adds the configuration files for containerd
/copyHosts.sh ${OCCNEINV} && ansible-playbook -i /kubespray/inventory/occne/hosts \
  --become \
  --become-user=root \
  --private-key /host/.ssh/occne_id_rsa \
  /kubespray/cluster.yml ${OCCNEARGS}

# When it completes, run upgrade_container.yml from the same bash shell.
# Depending on how quickly this command follows the previous one, some services may time out for around 2 to 3 minutes.
/copyHosts.sh ${OCCNEINV} && ansible-playbook -i /kubespray/inventory/occne/hosts \
  --become \
  --become-user=root \
  --private-key /host/.ssh/occne_id_rsa \
  /host/upgrade_container.yml

Note: On vCNE, this task reports that calico.conflist.template does not exist, because vCNE uses Flannel rather than Calico. The error is ignored (the task sets ignore_errors: yes), so the run continues.

- Verify that all containers are managed by containerd. Log in to any node of the cluster and list the containers with crictl:

sudo /usr/local/bin/crictl ps
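crictl ps shows the containers on one node only. To check every node at once, kubectl can report each node's container runtime (the CONTAINER-RUNTIME column of kubectl get nodes -o wide, or the .status.nodeInfo.containerRuntimeVersion field). The sketch below, with a hypothetical function name, flags any node that does not report a containerd:// runtime.

```shell
#!/usr/bin/env bash
# Hypothetical check: reads "<node-name> <runtime-version>" lines on stdin,
# for example the output of:
#   kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.nodeInfo.containerRuntimeVersion}{"\n"}{end}'
# Prints every node not running containerd; returns 1 if any are found.
nodes_not_on_containerd() {
  local bad=0 name runtime
  while read -r name runtime; do
    [ -z "$name" ] && continue          # skip blank lines
    case "$runtime" in
      containerd://*) ;;                # node already on containerd
      *) echo "$name $runtime"; bad=1 ;;
    esac
  done
  return "$bad"
}
```

For example, pipe the kubectl jsonpath command shown in the comment into nodes_not_on_containerd && echo "all nodes use containerd"; any node still reporting docker:// is printed.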

Upgrading OCCNE

Use the following procedure to upgrade OCCNE.

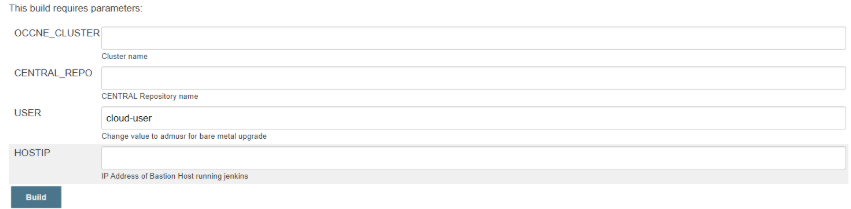

- Click the name of the job created during the pre-upgrade procedures, and then select Build with Parameters in the top-left panel of the Jenkins GUI, as shown in the figure.

- Selecting Build with Parameters displays a list of parameters, each with a description of the values to use for a Bare Metal upgrade versus a vCNE upgrade.

Note: Set USER to admusr before upgrading a Bare Metal cluster.

Figure 3-2 Jenkins UI: Build with Parameters

- After entering the correct parameter values, select Build to start the upgrade.

- Once the build starts, go to the job home page to view the live console for the upgrade, either by selecting Console Output or by selecting Open Blue Ocean. Blue Ocean is recommended because it shows each stage of the upgrade.

- Check the job progress from the Blue Ocean link in the job to see each stage as it runs. When the upgrade is complete, all stages are green.

Before running the next step, make sure that the Docker registry name and the host name are the same. If they differ, open the script:

vi /var/occne/cluster/<cluster-name>/artifacts/upgrade_services.py

and change hostname= os.environ['HOSTNAME'] in the script to hostname= '<bastion-registry-name>'.

- After a successful upgrade, run the following commands to upgrade the major version of the common services:

cd /var/occne/cluster/<cluster-name>/artifacts
python -c "execfile('upgrade_services.py'); patch_random_named_pods_image()"
# Wait for all pods to be patched; run kubectl get pods to verify that all pods are Running with READY 1/1
python -c "execfile('upgrade_services.py'); patch_pods_image()"
# Wait for all pods to be patched; run kubectl get pods to verify that all pods are Running with READY 1/1

- To check whether all the Elasticsearch data pods have restarted, run the command below. A single pod can take some time to restart.

kubectl get pods -n occne-infra | grep data

Sample output:

NAME                                 READY   STATUS    RESTARTS   AGE
occne-elastic-elasticsearch-data-0   1/1     Running   0          2d1h

- Make sure that the RESTARTS value has changed from 0 to 1 after running the python commands above.
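The registry-hostname edit and the repeated kubectl get pods checks above can be scripted. In this sketch the function names are hypothetical: set_registry_hostname makes the same one-line change as the vi edit, and pods_not_ready lists pods that are not Running or whose READY column is not n/n (for example 1/1). It assumes kubectl get pods output without -A, so the first column is the pod name.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the common-services upgrade step.

# Replace hostname= os.environ['HOSTNAME'] with a fixed registry name in
# upgrade_services.py (same effect as the manual vi edit). GNU sed -i.
# Registry names containing "/" or "&" would need escaping first.
set_registry_hostname() {
  local script="$1" registry="$2"
  sed -i "s/hostname= os\.environ\['HOSTNAME']/hostname= '${registry}'/" "$script"
}

# Read `kubectl get pods` output (with header) on stdin and print pods whose
# READY counts differ (e.g. 0/1) or whose STATUS is not Running.
# Exit status is 1 if any such pod is found.
pods_not_ready() {
  awk 'NR > 1 {
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") { print $1; bad = 1 }
       }
       END { exit bad }'
}
```

For example, run set_registry_hostname /var/occne/cluster/<cluster-name>/artifacts/upgrade_services.py <bastion-registry-name> before the python commands, and wait between them with until kubectl get pods -n occne-infra | pods_not_ready; do sleep 30; done, adjusting the namespace to the one you are verifying.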

- Wait until the data pods have restarted. If a data pod does not restart, find the node running it and stop its Elasticsearch data container on that node:

$ sudo /usr/local/bin/crictl stop <container-id>

Verify that all elasticsearch-data-<count> pods show READY 1/1, STATUS Running, and RESTARTS 1.

- Once all the Elasticsearch data pods have restarted, run the following command:

$ python -c "execfile('upgrade_services.py'); patch_deployment_image_chart()"
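The Elasticsearch data-pod check above can be reduced to one command. This sketch, with a hypothetical function name, reads kubectl get pods -n occne-infra output and prints every elasticsearch-data pod that is not yet 1/1 and Running with at least one restart (the procedure expects exactly 1 when the count was 0 before patching). It fails if no data pods are found at all.

```shell
#!/usr/bin/env bash
# Hypothetical check: reads `kubectl get pods -n occne-infra` output on stdin.
# Prints each *elasticsearch-data-* pod that is not 1/1, Running, and
# restarted at least once; exits 1 if any are printed or none are found.
es_data_pods_restarted() {
  awk '$1 ~ /elasticsearch-data-/ {
         seen = 1
         if ($2 != "1/1" || $3 != "Running" || $4 + 0 < 1) { print $1; bad = 1 }
       }
       END { exit (bad || !seen) }'
}
```

For example, kubectl get pods -n occne-infra | es_data_pods_restarted && echo "all data pods restarted". Adding -o wide to the kubectl command shows a NODE column, which identifies the node to log in to if a container has to be stopped with crictl.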