8 Alerts

This section provides information on Policy alerts and their configuration.

Note:

The performance and capacity of the system can vary based on the call model and configuration, including but not limited to the deployed policies and corresponding data, for example, policy tables.

8.1 Configuring Alerts

This section describes how to configure alerts in Policy. The Alert Manager uses the Prometheus measurement values reported by the microservices, evaluated against the conditions in the alert rules, to trigger alerts.

Note:

- Sample alert files are packaged with Policy Custom Templates. The Policy Custom Templates.zip file can be downloaded from MOS. Unzip the file to access the following alert files:
  - Common_Alertrules_cne1.5+.yaml
  - Common_Alertrules_cne1.9+.yaml
  - PCF_Alertrules_cne1.5+.yaml
  - PCF_Alertrules_cne1.9+.yaml
  - PCRF_Alertrules_cne1.5+.yaml
  - PCRF_Alertrules_cne1.9+.yaml

- The name in the metadata section must be unique when more than one alert file is applied. For example:

      apiVersion: monitoring.coreos.com/v1
      kind: PrometheusRule
      metadata:
        creationTimestamp: null
        labels:
          role: cnc-alerting-rules
        name: occnp-pcf-alerting-rules

- If required, edit the threshold values of various alerts in the alert files before configuring the alerts.

- The Alert Manager and Prometheus tools should run in the CNE namespace, for example, occne-infra.

- Use the following table to select the appropriate files based on the deployment mode and CNE version:

Table 8-1 Alert Configuration

| Deployment Mode | CNE 1.5.0 to 1.8.x | CNE 1.9.0 and later |
| --- | --- | --- |
| Converged Mode | Common_Alertrules_cne1.5+.yaml, PCF_Alertrules_cne1.5+.yaml, PCRF_Alertrules_cne1.5+.yaml | Common_Alertrules_cne1.9+.yaml, PCF_Alertrules_cne1.9+.yaml, PCRF_Alertrules_cne1.9+.yaml |
| PCF only | Common_Alertrules_cne1.5+.yaml, PCF_Alertrules_cne1.5+.yaml | Common_Alertrules_cne1.9+.yaml, PCF_Alertrules_cne1.9+.yaml |
| PCRF only | Common_Alertrules_cne1.5+.yaml, PCRF_Alertrules_cne1.5+.yaml | Common_Alertrules_cne1.9+.yaml, PCRF_Alertrules_cne1.9+.yaml |
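The file selection in Table 8-1 can be expressed as a small lookup. The following Python sketch is illustrative only: the function name select_alert_files and the mode keys "converged", "pcf", and "pcrf" are hypothetical, and it assumes, as the procedures below describe, that CNE 1.5.0 to 1.8.x use the cne1.5+ files and CNE 1.9.0 and later use the cne1.9+ files.

```python
# Hypothetical mode keys mapped to the alert file prefixes listed in Table 8-1.
ALERT_FILE_PREFIXES = {
    "converged": ("Common", "PCF", "PCRF"),
    "pcf": ("Common", "PCF"),
    "pcrf": ("Common", "PCRF"),
}


def select_alert_files(mode: str, cne_version: str) -> list:
    """Return the sample alert files to apply for a deployment mode and CNE version."""
    major, minor = (int(part) for part in cne_version.split(".")[:2])
    if (major, minor) < (1, 5):
        raise ValueError("sample alert files cover CNE 1.5.0 and later only")
    # CNE 1.5.0 to 1.8.x use the cne1.5+ files; 1.9.0 and later use the cne1.9+ files.
    suffix = "cne1.9+" if (major, minor) >= (1, 9) else "cne1.5+"
    return [f"{prefix}_Alertrules_{suffix}.yaml" for prefix in ALERT_FILE_PREFIXES[mode]]


print(select_alert_files("pcf", "1.8.2"))
# ['Common_Alertrules_cne1.5+.yaml', 'PCF_Alertrules_cne1.5+.yaml']
```

Comparing the version as a tuple of integers, rather than as a string, keeps a release such as 1.10.0 on the cne1.9+ files.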

Configuring Alerts in Prometheus for CNE Versions 1.5.0 to 1.8.x

- Copy the required files to the Bastion Host. Place the files in the /var/occne/cluster/<cluster-name>/artifacts/alerts directory on the OCCNE Bastion Host. For example:

    $ pwd
    /var/occne/cluster/stark/artifacts/alerts
    $ ls
    occne_alerts.yaml
    $ vi PCF_Alertrules.yaml
    $ ls
    PCF_Alertrules.yaml  occne_alerts.yaml

- To set the correct file permissions, run the following command:

    $ chmod 644 PCF_Alertrules.yaml

- To load the updated rules from the files on the Bastion Host into the existing occne-prometheus-alerts ConfigMap, run the following commands:

    $ kubectl create configmap occne-prometheus-alerts --from-file=/var/occne/cluster/<cluster-name>/artifacts/alerts -o yaml --dry-run -n occne-infra | kubectl replace -f -
    $ kubectl get configmap -n occne-infra

- Verify the alerts in the Prometheus GUI. To do so, select the Alerts tab, and view alert details by selecting any individual rule from the list.

Configuring Alerts in Prometheus for CNE 1.9.0 and later versions

- Copy the required files to the Bastion Host.

- To create or replace the PrometheusRule custom resources, run the following commands:

    $ kubectl apply -f Common_Alertrules_cne1.9+.yaml -n <namespace>
    $ kubectl apply -f PCF_Alertrules_cne1.9+.yaml -n <namespace>
    $ kubectl apply -f PCRF_Alertrules_cne1.9+.yaml -n <namespace>

  Note: These are sample commands for the Converged mode of deployment.

  To verify that the PrometheusRule resources are created, run the following command:

    $ kubectl get prometheusrule -n <namespace>

  Example:

    $ kubectl get prometheusrule -n occnp

- Verify the alerts in the Prometheus GUI. To do so, select the Alerts tab, and view alert details by selecting any individual rule from the list.

Validating Alerts

- Open the Prometheus server in your browser using <IP>:<Port>.

- Navigate to Status and then Rules.

- Search for Policy. The list of Policy alerts is displayed.

If you are unable to see the alerts, verify that the alert file is correct and then try again.

Adding worker node name in metrics

- Edit the occne-prometheus-server ConfigMap in the occne-infra namespace.

- Locate the following job:

    - job_name: kubernetes-pods
      kubernetes_sd_configs:
      - role: pod

- Add the following entry under relabel_configs for that job:

    - action: replace
      source_labels:
      - __meta_kubernetes_pod_node_name
      target_label: kubernetes_pod_node_name
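The replace action above copies the value of the __meta_kubernetes_pod_node_name discovery label into a kubernetes_pod_node_name label on each scraped series. The following Python sketch mimics that behavior for illustration only; relabel_replace is a hypothetical helper, not part of Prometheus, and it models only the replace action with the Prometheus defaults (separator ";", regex "(.*)" anchored at both ends, replacement "$1").

```python
import re


def relabel_replace(labels, source_labels, target_label,
                    regex="(.*)", replacement="$1", separator=";"):
    """Sketch of a Prometheus 'replace' relabel action on a dict of labels."""
    # Missing source labels contribute empty strings, as in Prometheus.
    value = separator.join(labels.get(name, "") for name in source_labels)
    # Prometheus anchors relabel regexes at both ends, hence fullmatch.
    match = re.fullmatch(regex, value)
    result = dict(labels)
    if match is None:
        return result  # no match: labels are left unchanged
    # Translate $1-style references into Python's \g<1> template syntax.
    template = re.sub(r"\$(\d+)", r"\\g<\1>", replacement)
    new_value = match.expand(template)
    if new_value:
        result[target_label] = new_value
    else:
        result.pop(target_label, None)  # an empty result removes the target label
    return result


pod_labels = {"__meta_kubernetes_pod_node_name": "worker-1", "job": "kubernetes-pods"}
print(relabel_replace(pod_labels, ["__meta_kubernetes_pod_node_name"],
                      "kubernetes_pod_node_name"))
```

Because the default regex matches any value, the node name is copied unchanged; if the source label is empty, the result is empty and the target label is not set.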

8.2 Configuring SNMP Notifier

This section describes the procedure to configure SNMP Notifier.

- Run the following command to edit the deployment:

    $ kubectl edit deploy <snmp_notifier_deployment_name> -n <namespace>

  Example:

    $ kubectl edit deploy occne-snmp-notifier -n occne-infra

  The SNMP Notifier deployment YAML file is displayed.

- Edit the SNMP destination in the deployment YAML file as follows:

    --snmp.destination=<destination_ip>:<destination_port>

  Example:

    --snmp.destination=10.75.203.94:162

- Save the file.

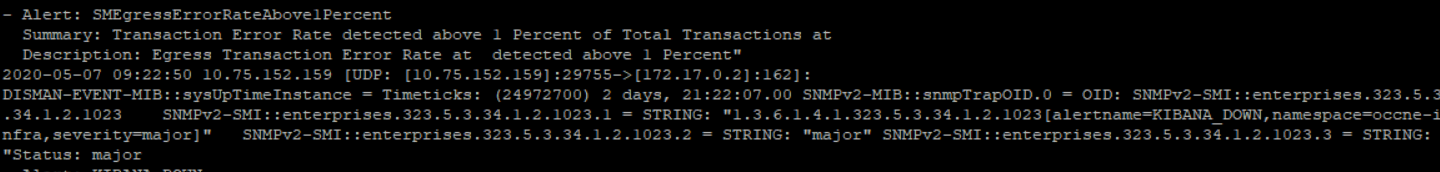

$ docker logs <trapd_container_id>Figure 8-1 Sample output for SNMP Trap

Two MIB files are used to generate the traps. Update these files along with the alert file to fetch the traps in your environment.

- toplevel.mib: This is the top-level MIB file, where the objects and their data types are defined.

- policy-alarm-mib.mib: This file fetches objects from the top-level MIB file, and these objects can be selected for display.

Note:

MIB files are packaged along with CNC Policy Custom Templates. Download the file from MOS. For more information on downloading custom templates, see Oracle Communications Cloud Native Core Policy Installation and Upgrade Guide.

8.3 List of Alerts

- Common Alerts - This category of alerts is common and required for all three modes of deployment.

- PCF Alerts - This category of alerts is specific to PCF microservices and required for Converged and PCF only modes of deployment.

- PCRF Alerts - This category of alerts is specific to PCRF microservices and required for Converged and PCRF only modes of deployment.

8.3.1 Common Alerts

This section provides information about alerts that are common for PCF and PCRF.

8.3.1.1 PodMemoryDoC

- Description

- Pod Resource Congestion status of {{$labels.service}} service is DoC for Memory type

- Summary

- Pod Resource Congestion status of {{$labels.service}} service is DoC for Memory type

- Severity

- Major

- Condition

- occnp_pod_resource_congestion_state{type="memory"} == 1

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.31

- Metric Used

- occnp_pod_resource_congestion_state

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file.

The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.2 PodMemoryCongested

- Description

- Pod Resource Congestion status of {{$labels.service}} service is congested for Memory type

- Summary

- Pod Resource Congestion status of {{$labels.service}} service is congested for Memory type

- Severity

- Critical

- Condition

- occnp_pod_resource_congestion_state{type="memory"} == 2

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.32

- Metric Used

- occnp_pod_resource_congestion_state

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.3 POD_DANGER_OF_CONGESTION

- Description

- Pod Congestion status of {{$labels.service}} service is DoC

- Summary

- Pod Congestion status of {{$labels.service}} service is DoC

- Severity

- Major

- Condition

- occnp_pod_congestion_state == 1

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.25

- Metric Used

- occnp_pod_congestion_state

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.
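The three congestion alerts above compare state gauges against fixed values: occnp_pod_resource_congestion_state{type="memory"} equal to 1 (DoC) or 2 (congested), and occnp_pod_congestion_state equal to 1. The following Python sketch evaluates those three conditions over a list of samples for illustration; the Sample type, the congestion_alerts function, and the service names in the example are hypothetical, and any state value the rules do not test for is treated as not alerting.

```python
from typing import NamedTuple


class Sample(NamedTuple):
    metric: str   # metric name, for example occnp_pod_congestion_state
    labels: dict  # label name -> label value
    value: float  # gauge value at evaluation time


def congestion_alerts(samples):
    """Return (alert, severity, service) for each sample that meets a congestion rule."""
    fired = []
    for sample in samples:
        service = sample.labels.get("service", "")
        if (sample.metric == "occnp_pod_resource_congestion_state"
                and sample.labels.get("type") == "memory"):
            if sample.value == 1:
                fired.append(("PodMemoryDoC", "Major", service))
            elif sample.value == 2:
                fired.append(("PodMemoryCongested", "Critical", service))
        elif sample.metric == "occnp_pod_congestion_state" and sample.value == 1:
            fired.append(("POD_DANGER_OF_CONGESTION", "Major", service))
    return fired


samples = [
    Sample("occnp_pod_resource_congestion_state", {"service": "pcf-sm", "type": "memory"}, 2),
    Sample("occnp_pod_congestion_state", {"service": "pcf-sm"}, 1),
    Sample("occnp_pod_resource_congestion_state", {"service": "pcf-am", "type": "cpu"}, 2),
]
print(congestion_alerts(samples))
```

The CPU-type sample produces no alert here because the memory alerts filter on type="memory".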

8.3.1.4 RAA_RX_FAIL_COUNT_EXCEEDS_CRITICAL_THRESHOLD

- Description

- RAA Rx fail count exceeds the critical threshold limit.

- Summary

- RAA Rx fail count exceeds the critical threshold limit.

- Severity

- CRITICAL

- Condition

- sum(rate(occnp_diam_response_local_total{msgType="RAA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="RAA", appId="16777236"}[5m])) * 100 > 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.35

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- For any additional guidance, contact My Oracle Support.

8.3.1.5 RAA_RX_FAIL_COUNT_EXCEEDS_MAJOR_THRESHOLD

- Description

- RAA Rx fail count exceeds the major threshold limit.

- Summary

- RAA Rx fail count exceeds the major threshold limit.

- Severity

- MAJOR

- Condition

- sum(rate(occnp_diam_response_local_total{msgType="RAA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="RAA", appId="16777236"}[5m])) * 100 > 80 and sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="RAA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="RAA"}[5m])) * 100 <= 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.35

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- For any additional guidance, contact My Oracle Support.

8.3.1.6 RAA_RX_FAIL_COUNT_EXCEEDS_MINOR_THRESHOLD

- Description

- RAA Rx fail count exceeds the minor threshold limit.

- Summary

- RAA Rx fail count exceeds the minor threshold limit.

- Severity

- MINOR

- Condition

- sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="RAA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="RAA"}[5m])) * 100 > 60 and sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="RAA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="RAA"}[5m])) * 100 <= 80

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.35

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- For any additional guidance, contact My Oracle Support.
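The three RAA Rx alerts divide the failure percentage into non-overlapping bands: above 60 and up to 80 is MINOR, above 80 and up to 90 is MAJOR, and above 90 is CRITICAL. The following Python sketch reproduces that arithmetic for one evaluation; the input dictionary of per-Result-Code request rates and the function names are hypothetical, and the same bands apply to the ASA alerts that follow.

```python
import re


def failure_percentage(rate_by_result_code):
    """Percentage of responses whose result code does not match "2.*"."""
    total = sum(rate_by_result_code.values())
    if total == 0:
        return None  # 0/0 is NaN in PromQL, and NaN never satisfies a ">" comparison
    # PromQL regex matchers are fully anchored, so !~"2.*" selects codes not starting with 2.
    failed = sum(rate for code, rate in rate_by_result_code.items()
                 if not re.fullmatch(r"2.*", code))
    return 100.0 * failed / total


def rx_fail_severity(percentage):
    """Map a failure percentage to the severity band used by the RAA/ASA Rx alerts."""
    if percentage is None:
        return None
    if percentage > 90:
        return "CRITICAL"
    if percentage > 80:
        return "MAJOR"
    if percentage > 60:
        return "MINOR"
    return None


print(rx_fail_severity(failure_percentage({"2001": 10.0, "5002": 90.0})))  # MAJOR
```

A failure rate of exactly 90% is reported as MAJOR, not CRITICAL, because the critical rule uses a strict > 90 comparison while the major rule includes the <= 90 upper bound.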

8.3.1.7 ASA_RX_FAIL_COUNT_EXCEEDS_CRITICAL_THRESHOLD

- Description

- ASA Rx fail count exceeds the critical threshold limit.

- Summary

- ASA Rx fail count exceeds the critical threshold limit.

- Severity

- CRITICAL

- Condition

- sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA"}[5m])) * 100 > 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.66

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- For any additional guidance, contact My Oracle Support.

8.3.1.8 ASA_RX_FAIL_COUNT_EXCEEDS_MAJOR_THRESHOLD

- Description

- ASA Rx fail count exceeds the major threshold limit.

- Summary

- ASA Rx fail count exceeds the major threshold limit.

- Severity

- MAJOR

- Condition

- sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA"}[5m])) * 100 > 80 and sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA"}[5m])) * 100 <= 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.66

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- For any additional guidance, contact My Oracle Support.

8.3.1.9 ASA_RX_FAIL_COUNT_EXCEEDS_MINOR_THRESHOLD

- Description

- ASA Rx fail count exceeds the minor threshold limit.

- Summary

- ASA Rx fail count exceeds the minor threshold limit.

- Severity

- MINOR

- Condition

- sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA"}[5m])) * 100 > 60 and sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA",responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{appId="16777236",msgType="ASA"}[5m])) * 100 <= 80

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.66

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- For any additional guidance, contact My Oracle Support.

8.3.1.10 SCP_PEER_UNAVAILABLE

- Description

- Configured SCP peer is unavailable.

- Summary

- SCP peer [ {{$labels.peer}} ] is unavailable.

- Severity

- Major

- Condition

- occnp_oc_egressgateway_peer_health_status != 0

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.60

- Metric Used

- occnp_oc_egressgateway_peer_health_status

- Recommended Actions

- This alert gets cleared when the unavailable SCPs become available.

For any additional guidance, contact My Oracle Support.

8.3.1.11 SCP_PEER_SET_UNAVAILABLE

- Description

- None of the SCP peers are available for the configured peer set.

- Summary

- (occnp_oc_egressgateway_peer_count - occnp_oc_egressgateway_peer_available_count) !=0 and (occnp_oc_egressgateway_peer_count) > 0.

- Severity

- Critical

- Condition

- One of the SCPs has been marked unhealthy.

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.61

- Metric Used

- oc_egressgateway_peer_count and oc_egressgateway_peer_available_count

- Recommended Actions

- The NF clears the critical alarm when at least one SCP peer in a peer set becomes available, even if all other SCP peers in that peer set are still unavailable.

For any additional guidance, contact My Oracle Support.

8.3.1.12 STALE_CONFIGURATION

- Description

- In the last 10 minutes, the current service config_level does not match the config_level from the config-server.

- Summary

- In the last 10 minutes, the current service config_level does not match the config_level from the config-server.

- Severity

- Major

- Condition

- (sum by(namespace) (topic_version{app_kubernetes_io_name="config-server",topicName="config.level"})) / (count by(namespace) (topic_version{app_kubernetes_io_name="config-server",topicName="config.level"})) != (sum by(namespace) (topic_version{app_kubernetes_io_name!="config-server",topicName="config.level"})) / (count by(namespace) (topic_version{app_kubernetes_io_name!="config-server",topicName="config.level"}))

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.62

- Metric Used

- topic_version

- Recommended Actions

- For any additional guidance, contact My Oracle Support.
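The STALE_CONFIGURATION condition compares, per namespace, the average topic_version that config-server reports for the config.level topic with the average reported by all other services. The following Python sketch shows that comparison at a single instant; the tuple input format (with app_name standing in for the app_kubernetes_io_name label) and the stale_namespaces name are hypothetical, and the 10-minute window mentioned in the description is not modeled.

```python
from collections import defaultdict


def stale_namespaces(samples):
    """samples: iterable of (namespace, app_name, version) for topicName="config.level"."""
    server_versions = defaultdict(list)
    service_versions = defaultdict(list)
    for namespace, app_name, version in samples:
        target = server_versions if app_name == "config-server" else service_versions
        target[namespace].append(version)
    stale = set()
    # PromQL vector matching only compares namespaces present on both sides.
    for namespace in server_versions.keys() & service_versions.keys():
        server_avg = sum(server_versions[namespace]) / len(server_versions[namespace])
        service_avg = sum(service_versions[namespace]) / len(service_versions[namespace])
        if server_avg != service_avg:
            stale.add(namespace)
    return stale


print(stale_namespaces([("occnp", "config-server", 5), ("occnp", "pcf-sm", 5),
                        ("occnp", "pcf-am", 4)]))  # {'occnp'}
```

Because the rule compares averages, it can miss some mismatches: two services at versions 4 and 6 average to 5, so they would not trigger the alert when config-server is at version 5.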

8.3.1.13 POLICY_SERVICES_DOWN

- Name in Alert Yaml File

- PCF_SERVICES_DOWN

- Description

- {{$labels.service}} service is not running!

- Summary

- {{$labels.service}} is not running!

- Severity

- Critical

- Condition

- sum by(service, namespace, category)(appinfo_service_running{application="occnp",service!~".*altsvc-cache",vendor="Oracle"}) < 1

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.1

- Metric Used

- appinfo_service_running

- Recommended Actions

- The alert is triggered when a PCF service is down.

For any additional guidance, contact My Oracle Support.
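The POLICY_SERVICES_DOWN condition sums appinfo_service_running per service, namespace, and category, after excluding alternate-service cache series, and fires when a sum is below 1. The following Python sketch applies the same filter and grouping to a list of samples; the (labels, value) input format, the services_down name, and the label values in the test data are hypothetical.

```python
import re
from collections import defaultdict


def services_down(samples):
    """samples: iterable of (labels, value) pairs for appinfo_service_running."""
    totals = defaultdict(float)
    for labels, value in samples:
        if labels.get("application") != "occnp" or labels.get("vendor") != "Oracle":
            continue
        # service!~".*altsvc-cache" is fully anchored, so it drops names ending in altsvc-cache.
        if re.fullmatch(r".*altsvc-cache", labels.get("service", "")):
            continue
        group = (labels.get("service", ""), labels.get("namespace", ""),
                 labels.get("category", ""))
        totals[group] += value  # sum by(service, namespace, category)
    return sorted(group for group, total in totals.items() if total < 1)


base = {"application": "occnp", "vendor": "Oracle", "namespace": "occnp", "category": "core"}
print(services_down([(dict(base, service="pcf-sm"), 1), (dict(base, service="pcf-am"), 0)]))
```

Assuming each instance reports 1 while running, summing across pods means a service is reported as down only when none of its instances is running. As with the PromQL expression, a service that stops exporting the metric altogether produces no group and therefore no alert.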

8.3.1.14 DIAM_TRAFFIC_RATE_ABOVE_THRESHOLD

- Name in Alert Yaml File

- DiamTrafficRateAboveThreshold

- Description

- Diameter Connector Ingress traffic Rate is above threshold of Max MPS (current value is: {{ $value }})

- Summary

- Traffic Rate is above 90 Percent of Max requests per second.

- Severity

- Major

- Condition

- The total Ingress traffic rate for the Diameter Connector has crossed the configured threshold of 900 TPS.

The default trigger point for this alert in the Common_Alertrules.yaml file is when the Diameter Connector Ingress rate crosses 90% of the maximum ingress requests per second.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.6

- Metric Used

- ocpm_ingress_request_total

- Recommended Actions

- The alert gets cleared when the Ingress traffic rate falls below the threshold.

Note:

Threshold levels can be configured using the Common_Alertrules.yaml file.

It is recommended to assess the reason for additional traffic. Perform the following steps to analyze the cause of increased traffic:

- Refer to the Ingress Gateway section in Grafana to determine any increase in 4xx and 5xx error response codes.

- Check the Ingress Gateway logs on Kibana to determine the reason for the errors.

For any additional guidance, contact My Oracle Support.
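The traffic-rate alerts compare a per-second request rate, derived from a counter such as ocpm_ingress_request_total, with 90% of a maximum rate; a 900 TPS threshold corresponds to a maximum of 1000 requests per second. The following Python sketch shows that arithmetic. The exact expression in Common_Alertrules.yaml is not reproduced here, per_second_rate is a simplified stand-in for PromQL rate() (it handles counter resets but does not extrapolate to the window edges), and the 1000 requests per second default is an assumption derived from the 900 TPS figure.

```python
def per_second_rate(samples):
    """samples: chronologically ordered (timestamp_seconds, counter_value) pairs."""
    increase = 0.0
    for (_, previous), (_, current) in zip(samples, samples[1:]):
        # A decrease means the counter was reset, so count the new value from zero.
        increase += current if current < previous else current - previous
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed if elapsed > 0 else 0.0


def rate_above_threshold(rate, max_rps=1000.0, fraction=0.9):
    """True when the rate exceeds the given fraction of the maximum rate."""
    return rate > max_rps * fraction


window = [(0, 0.0), (60, 57000.0)]
print(per_second_rate(window), rate_above_threshold(per_second_rate(window)))  # 950.0 True
```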

8.3.1.15 DIAM_INGRESS_ERROR_RATE_ABOVE_10_PERCENT

- Name in Alert Yaml File

- DiamIngressErrorRateAbove10Percent

- Description

- Transaction Error Rate detected above 10 Percent of Total on Diameter Connector (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 10 Percent of Total Transactions.

- Severity

- Critical

- Condition

- The number of failed transactions is above 10 percent of the total transactions on Diameter Connector.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.7

- Metric Used

- ocpm_ingress_response_total

- Recommended Actions

- The alert gets cleared when the number of failed transactions is below 10% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors. For instance:

    ocpm_ingress_response_total{servicename_3gpp="rx",response_code!~"2.*"}

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.

8.3.1.16 DIAM_EGRESS_ERROR_RATE_ABOVE_1_PERCENT

- Name in Alert Yaml File

- DiamEgressErrorRateAbove1Percent

- Description

- Egress Transaction Error Rate detected above 1 Percent of Total on Diameter Connector (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 1 Percent of Total Transactions

- Severity

- Minor

- Condition

- The number of failed transactions is above 1 percent of the total Egress Gateway transactions on Diameter Connector.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.8

- Metric Used

- ocpm_egress_response_total

- Recommended Actions

- The alert gets cleared when the number of failed transactions is below 1% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the errors. For instance:

    ocpm_egress_response_total{servicename_3gpp="rx",response_code!~"2.*"}

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.

8.3.1.17 UDR_INGRESS_TRAFFIC_RATE_ABOVE_THRESHOLD

- Name in Alert Yaml File

- PcfUdrIngressTrafficRateAboveThreshold

- Description

- User service Ingress traffic Rate from UDR is above threshold of Max MPS (current value is: {{ $value }})

- Summary

- Traffic Rate is above 90 Percent of Max requests per second

- Severity

- Major

- Condition

- The total User Service Ingress traffic rate from UDR has crossed the configured threshold of 900 TPS.

The default trigger point for this alert in the Common_Alertrules.yaml file is when the User Service Ingress rate from UDR crosses 90% of the maximum ingress requests per second.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.9

- Metric Used

- ocpm_userservice_inbound_count_total{service_resource="udr-service"}

- Recommended Actions

- The alert gets cleared when the Ingress traffic rate falls below the threshold.

Note:

Threshold levels can be configured using the Common_Alertrules.yaml file.

It is recommended to assess the reason for additional traffic. Perform the following steps to analyze the cause of increased traffic:

- Refer to the Ingress Gateway section in Grafana to determine any increase in 4xx and 5xx error response codes.

- Check the Ingress Gateway logs on Kibana to determine the reason for the errors.

For any additional guidance, contact My Oracle Support.

8.3.1.18 UDR_EGRESS_ERROR_RATE_ABOVE_10_PERCENT

- Name in Alert Yaml File

- PcfUdrEgressErrorRateAbove10Percent

- Description

- Egress Transaction Error Rate detected above 10 Percent of Total on User service (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 10 Percent of Total Transactions

- Severity

- Critical

- Condition

- The number of failed transactions from UDR is more than 10 percent of the total transactions.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.10

- Metric Used

- ocpm_udr_tracking_response_total{servicename_3gpp="nudr-dr",response_code!~"2.*"}

- Recommended Actions

- The alert gets cleared when the number of failed transactions falls below the configured threshold.

Note:

Threshold levels can be configured using the Common_Alertrules.yaml file.

It is recommended to assess the reason for failed transactions. Perform the following steps to analyze the cause of the failures:

- Refer to the Egress Gateway section in Grafana to determine any increase in 4xx and 5xx error response codes.

- Check the Egress Gateway logs on Kibana to determine the reason for the errors.

For any additional guidance, contact My Oracle Support.

8.3.1.19 POLICYDS_INGRESS_TRAFFIC_RATE_ABOVE_THRESHOLD

- Name in Alert Yaml File

- PolicyDsIngressTrafficRateAboveThreshold

- Description

- Ingress Traffic Rate is above threshold of Max MPS (current value is: {{ $value }})

- Summary

- Traffic Rate is above 90 Percent of Max requests per second

- Severity

- Critical

- Condition

- The total PolicyDS Ingress message rate has crossed the configured threshold of 900 TPS (90% of the maximum Ingress request rate).

The default trigger point for this alert in the Common_Alertrules.yaml file is when the PolicyDS Ingress rate crosses 90% of the maximum ingress requests per second.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.13

- Metric Used

- client_request_total

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert gets cleared when the Ingress traffic rate falls below the threshold.

Note:

Threshold levels can be configured using the Common_Alertrules.yaml file.

It is recommended to assess the reason for additional traffic. Perform the following steps to analyze the cause of increased traffic:

- Refer to the Ingress Gateway section in Grafana to determine any increase in 4xx and 5xx error response codes.

- Check the Ingress Gateway logs on Kibana to determine the reason for the errors.

For any additional guidance, contact My Oracle Support.

8.3.1.20 POLICYDS_INGRESS_ERROR_RATE_ABOVE_10_PERCENT

- Name in Alert Yaml File

- PolicyDsIngressErrorRateAbove10Percent

- Description

- Ingress Transaction Error Rate detected above 10 Percent of Total on PolicyDS service (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 10 Percent of Total Transactions

- Severity

- Critical

- Condition

- The number of failed transactions is above 10 percent of the total transactions for PolicyDS service.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.14

- Metric Used

- client_response_total

- Recommended Actions

- The alert gets cleared when the number of failed transactions is below 10% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors. For instance:

    client_response_total{response!~"2.*"}

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.

8.3.1.21 POLICYDS_EGRESS_ERROR_RATE_ABOVE_1_PERCENT

- Name in Alert Yaml File

- PolicyDsEgressErrorRateAbove1Percent

- Description

- Egress Transaction Error Rate detected above 1 Percent of Total on PolicyDS service (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 1 Percent of Total Transactions

- Severity

- Minor

- Condition

- The number of failed transactions is above 1 percent of the total transactions for PolicyDS service.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.15

- Metric Used

- server_response_total

- Recommended Actions

- The alert gets cleared when the number of failed transactions is below 1% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors. For instance:

    server_response_total{response!~"2.*"}

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.

8.3.1.22 UDR_INGRESS_TIMEOUT_ERROR_ABOVE_MAJOR_THRESHOLD

- Name in Alert Yaml File

- PcfUdrIngressTimeoutErrorAboveMajorThreshold

- Description

- Ingress Timeout Error Rate detected above 10 Percent of Total towards UDR service (current value is: {{ $value }})

- Summary

- Timeout Error Rate detected above 10 Percent of Total Transactions

- Severity

- Major

- Condition

- The number of failed transactions due to timeout is above 10 percent of the total transactions for UDR service.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.16

- Metric Used

- ocpm_udr_tracking_request_timeout_total{servicename_3gpp="nudr-dr"}

- Recommended Actions

- The alert gets cleared when the number of failed transactions due to timeout is below 10% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors. For instance:

    ocpm_udr_tracking_request_timeout_total{servicename_3gpp="nudr-dr"}

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.

8.3.1.23 DB_TIER_DOWN_ALERT

- Name in Alert Yaml File

- DBTierDownAlert

- Description

- DB cannot be reachable!

- Summary

- DB cannot be reachable!

- Severity

- Critical

- Condition

- Database is not available.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.18

- Metric Used

- appinfo_category_running{category="database"}

- Recommended Actions

- The alert is triggered when the database is not reachable.

For any additional guidance, contact My Oracle Support.

8.3.1.24 CPU_USAGE_PER_SERVICE_ABOVE_MINOR_THRESHOLD

- Name in Alert Yaml File

- CPUUsagePerServiceAboveMinorThreshold

- Description

- CPU usage for {{$labels.service}} service is above 60

- Summary

- CPU usage for {{$labels.service}} service is above 60

- Severity

- Minor

- Condition

- A service pod has reached the configured minor threshold (60%) of its CPU usage limits.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.19

- Metric Used

- container_cpu_usage_seconds_total

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The alert is raised when the CPU utilization crosses the minor threshold. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file.

The alert gets cleared when the CPU utilization falls below the minor threshold or crosses the major threshold, or when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.25 CPU_USAGE_PER_SERVICE_ABOVE_MAJOR_THRESHOLD

- Name in Alert Yaml File

- CPUUsagePerServiceAboveMajorThreshold

- Description

- CPU usage for {{$labels.service}} service is above 80

- Summary

- CPU usage for {{$labels.service}} service is above 80

- Severity

- Major

- Condition

- A service pod has reached the configured major threshold (80%) of its CPU usage limits.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.20

- Metric Used

- container_cpu_usage_seconds_total

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The alert is raised when the CPU utilization crosses the major threshold. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file.

The alert gets cleared when the CPU utilization falls below the major threshold or crosses the critical threshold, or when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.26 CPU_USAGE_PER_SERVICE_ABOVE_CRITICAL_THRESHOLD

- Name in Alert Yaml File

- CPUUsagePerServiceAboveCriticalThreshold

- Description

- CPU usage for {{$labels.service}} service is above 90

- Summary

- CPU usage for {{$labels.service}} service is above 90

- Severity

- Critical

- Condition

- A service pod has reached the configured critical threshold (90%) of its CPU usage limits.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.21

- Metric Used

- container_cpu_usage_seconds_total

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The alert is raised when the CPU utilization crosses the critical threshold. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file.

The alert gets cleared when the CPU utilization falls below the critical threshold or when the system is back to normal state.

For any additional guidance, contact My Oracle Support.
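The three CPU usage alerts use non-overlapping bands: above 60% is Minor, above 80% is Major, and above 90% is Critical, and each alert clears when usage moves into a different band. The following Python sketch assumes that usage is measured as the increase of container_cpu_usage_seconds_total over a window, divided by the window length and by the pod's CPU limit in cores; the actual expression in the alert files is not shown in this section, and the function names are hypothetical.

```python
def cpu_usage_percent(cpu_seconds_increase, window_seconds, cpu_limit_cores):
    """Average CPU usage over a window as a percentage of the pod's CPU limit."""
    cores_used = cpu_seconds_increase / window_seconds
    return 100.0 * cores_used / cpu_limit_cores


# Highest band first, so only one CPU usage alert applies at a time.
CPU_BANDS = (
    (90, "CPUUsagePerServiceAboveCriticalThreshold", "Critical"),
    (80, "CPUUsagePerServiceAboveMajorThreshold", "Major"),
    (60, "CPUUsagePerServiceAboveMinorThreshold", "Minor"),
)


def cpu_alert(percent):
    """Return (alert name, severity) for the band the usage falls in, or None."""
    for threshold, name, severity in CPU_BANDS:
        if percent > threshold:
            return name, severity
    return None


usage = cpu_usage_percent(cpu_seconds_increase=90.0, window_seconds=60.0, cpu_limit_cores=2.0)
print(usage, cpu_alert(usage))  # 75.0 ('CPUUsagePerServiceAboveMinorThreshold', 'Minor')
```

The same banding applies to the memory usage alerts that follow, with container_memory_usage_bytes measured against the memory limit.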

8.3.1.27 MEMORY_USAGE_PER_SERVICE_ABOVE_MINOR_THRESHOLD

- Name in Alert Yaml File

- MemoryUsagePerServiceAboveMinorThreshold

- Description

- Memory usage for {{$labels.service}} service is above 60

- Summary

- Memory usage for {{$labels.service}} service is above 60

- Severity

- Minor

- Condition

- A service pod has reached the configured minor threshold (60%) of its memory usage limits.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.22

- Metric Used

- container_memory_usage_bytes

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert is triggered based on the resource limit usage and load shedding configurations in congestion control. The alert is raised when the memory utilization crosses the minor threshold. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file.

The alert gets cleared when the memory utilization falls below the minor threshold or crosses the major threshold, or when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.28 MEMORY_USAGE_PER_SERVICE_ABOVE_MAJOR_THRESHOLD

- Name in Alert Yaml File

- MemoryUsagePerServiceAboveMajorThreshold

- Description

- Memory usage for {{$labels.service}} service is above 80

- Summary

- Memory usage for {{$labels.service}} service is above 80

- Severity

- Major

- Condition

- A service pod has reached the configured major threshold (80%) of its memory usage limits.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.23

- Metric Used

- container_memory_usage_bytes

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. If the memory utilization crosses the major threshold, the alert is raised. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file. The alert gets cleared when the memory utilization falls below the major threshold, when it crosses the critical threshold, or when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.29 MEMORY_USAGE_PER_SERVICE_ABOVE_CRITICAL_THRESHOLD

- Name in Alert Yaml File

- MemoryUsagePerServiceAboveCriticalThreshold

- Description

- Memory usage for {{$labels.service}} service is above 90

- Summary

- Memory usage for {{$labels.service}} service is above 90

- Severity

- Critical

- Condition

- A service pod has reached the configured critical threshold (90%) of its memory usage limits.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.24

- Metric Used

- container_memory_usage_bytes

Note:

This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use similar metrics exposed by the monitoring system.

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. If the memory utilization crosses the critical threshold, the alert is raised. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file. The alert gets cleared when the memory utilization falls below the critical threshold or when the system is back to normal state.

For any additional guidance, contact My Oracle Support.
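The three MEMORY_USAGE_PER_SERVICE alerts use the same metric and differ only in the threshold. As an illustration, the following Python sketch shows which of the three alerts a pod would raise for a given memory usage with the default 60, 80, and 90 percent thresholds. It assumes that utilization is computed as `container_memory_usage_bytes` divided by the container memory limit and that the comparison is a strict "above"; the function and constant names are hypothetical, and the actual expressions in `PCF_Alertrules.yaml` may differ.

```python
from typing import Optional

# Default thresholds (percent of the container memory limit), checked from the
# most to the least severe so that only one alert is reported. This mirrors the
# documented behavior that the minor alert clears when the major one fires.
MEMORY_THRESHOLDS = [
    (90, "MemoryUsagePerServiceAboveCriticalThreshold"),
    (80, "MemoryUsagePerServiceAboveMajorThreshold"),
    (60, "MemoryUsagePerServiceAboveMinorThreshold"),
]


def memory_alert(usage_bytes: float, limit_bytes: float) -> Optional[str]:
    """Return the alert name for a pod's memory usage, or None at or below 60%.

    Hypothetical helper: assumes utilization = usage / limit * 100 and a
    strict "above" comparison.
    """
    if limit_bytes <= 0:
        raise ValueError("limit_bytes must be positive")
    utilization = usage_bytes * 100 / limit_bytes
    for threshold, alert_name in MEMORY_THRESHOLDS:
        if utilization > threshold:
            return alert_name
    return None


if __name__ == "__main__":
    gib = 1024 ** 3
    # A pod using 3.5 GiB of a 4 GiB limit (87.5%) falls in the major band.
    print(memory_alert(3.5 * gib, 4 * gib))
```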

8.3.1.30 POD_CONGESTED

- Name in Alert Yaml File

- PodCongested

- Description

- Pod Congestion status of {{$labels.service}} service is congested

- Summary

- Pod Congestion status of {{$labels.service}} service is congested

- Severity

- Critical

- Condition

- The pod congestion status is set to congested.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.26

- Metric Used

- occnp_pod_congestion_state

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.31 POD_DANGER_OF_CONGESTION

- Name in Alert Yaml File

- PodDoC

- Description

- Pod Congestion status of {{$labels.service}} service is DoC

- Summary

- Pod Congestion status of {{$labels.service}} service is DoC

- Severity

- Major

- Condition

- The pod congestion status is set to Danger of Congestion.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.25

- Metric Used

- occnp_pod_congestion_state

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.32 POD_PENDING_REQUEST_CONGESTED

- Name in Alert Yaml File

- PodPendingRequestCongested

- Description

- Pod Resource Congestion status of {{$labels.service}} service is congested for PendingRequest type

- Summary

- Pod Resource Congestion status of {{$labels.service}} service is congested for PendingRequest type

- Severity

- Critical

- Condition

- The pod congestion status is set to congested for PendingRequest.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.28

- Metric Used

- occnp_pod_resource_congestion_state{type="queue"}

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the number of pending requests in the queue falls below the configured threshold value.

For any additional guidance, contact My Oracle Support.

8.3.1.33 POD_PENDING_REQUEST_DANGER_OF_CONGESTION

- Name in Alert Yaml File

- PodPendingRequestDoC

- Description

- Pod Resource Congestion status of {{$labels.service}} service is DoC for PendingRequest type

- Summary

- Pod Resource Congestion status of {{$labels.service}} service is DoC for PendingRequest type

- Severity

- Major

- Condition

- The pod congestion status is set to DoC for pending requests.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.27

- Metric Used

- occnp_pod_resource_congestion_state{type="queue"}

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the number of pending requests in the queue falls below the configured threshold value.

For any additional guidance, contact My Oracle Support.

8.3.1.34 POD_CPU_CONGESTED

- Name in Alert Yaml File

- PodCPUCongested

- Description

- Pod Resource Congestion status of {{$labels.service}} service is congested for CPU type

- Summary

- Pod Resource Congestion status of {{$labels.service}} service is congested for CPU type

- Severity

- Critical

- Condition

- The pod congestion status is set to congested for CPU.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.30

- Metric Used

- occnp_pod_resource_congestion_state{type="cpu"}

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the system CPU usage falls below the configured threshold value.

For any additional guidance, contact My Oracle Support.

8.3.1.35 POD_CPU_DANGER_OF_CONGESTION

- Name in Alert Yaml File

- PodCPUDoC

- Description

- Pod Resource Congestion status of {{$labels.service}} service is DoC for CPU type

- Summary

- Pod Resource Congestion status of {{$labels.service}} service is DoC for CPU type

- Severity

- Major

- Condition

- The pod congestion status is set to DoC for CPU.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.29

- Metric Used

- occnp_pod_resource_congestion_state{type="cpu"}

- Recommended Actions

- The alert is triggered based on the resource limit usage and the load shedding configurations in congestion control. The CPU, memory, and queue usage can be viewed on the Grafana dashboard.

The alert gets cleared when the system CPU usage falls below the configured threshold value.

For any additional guidance, contact My Oracle Support.

8.3.1.36 SERVICE_OVERLOADED

- Description

- Overload Level of {{$labels.service}} service is L1

- Summary

- Overload Level of {{$labels.service}} service is L1

- Severity

- Minor

- Condition

- The overload level of the service is L1.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.40

- Metric Used

- load_level

- Recommended Actions

- The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- Overload Level of {{$labels.service}} service is L2

- Summary

- Overload Level of {{$labels.service}} service is L2

- Severity

- Major

- Condition

- The overload level of the service is L2.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.40

- Metric Used

- load_level

- Recommended Actions

- The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- Overload Level of {{$labels.service}} service is L3

- Summary

- Overload Level of {{$labels.service}} service is L3

- Severity

- Critical

- Condition

- The overload level of the service is L3.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.40

- Metric Used

- load_level

- Recommended Actions

- The alert gets cleared when the system is back to normal state.

For any additional guidance, contact My Oracle Support.

8.3.1.37 SERVICE_RESOURCE_OVERLOADED

Alerts when service is in overload state due to memory usage

- Description

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Severity

- Minor

- Condition

- The overload level of the service is L1 due to memory usage.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="memory"}

- Recommended Actions

- The alert gets cleared when the memory usage of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Severity

- Major

- Condition

- The overload level of the service is L2 due to memory usage.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="memory"}

- Recommended Actions

- The alert gets cleared when the memory usage of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Severity

- Critical

- Condition

- The overload level of the service is L3 due to memory usage.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="memory"}

- Recommended Actions

- The alert gets cleared when the memory usage of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

Alerts when service is in overload state due to CPU usage

- Description

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Severity

- Minor

- Condition

- The overload level of the service is L1 due to CPU usage.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="cpu"}

- Recommended Actions

- The alert gets cleared when the CPU usage of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Severity

- Major

- Condition

- The overload level of the service is L2 due to CPU usage.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="cpu"}

- Recommended Actions

- The alert gets cleared when the CPU usage of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Severity

- Critical

- Condition

- The overload level of the service is L3 due to CPU usage.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="cpu"}

- Recommended Actions

- The alert gets cleared when the CPU usage of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

Alerts when service is in overload state due to number of pending messages

- Description

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Severity

- Minor

- Condition

- The overload level of the service is L1 due to number of pending messages.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="svc_pending_count"}

- Recommended Actions

- The alert gets cleared when the number of pending messages of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Severity

- Major

- Condition

- The overload level of the service is L2 due to number of pending messages.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="svc_pending_count"}

- Recommended Actions

- The alert gets cleared when the number of pending messages of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Severity

- Critical

- Condition

- The overload level of the service is L3 due to number of pending messages.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="svc_pending_count"}

- Recommended Actions

- The alert gets cleared when the number of pending messages of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

Alerts when service is in overload state due to number of failed requests

- Description

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L1 for {{$labels.type}} type

- Severity

- Minor

- Condition

- The overload level of the service is L1 due to number of failed requests.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="svc_failure_count"}

- Recommended Actions

- The alert gets cleared when the number of failed requests of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L2 for {{$labels.type}} type

- Severity

- Major

- Condition

- The overload level of the service is L2 due to number of failed requests.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="svc_failure_count"}

- Recommended Actions

- The alert gets cleared when the number of failed requests of the service is back to normal state.

For any additional guidance, contact My Oracle Support.

- Description

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Summary

- {{$labels.service}} service is L3 for {{$labels.type}} type

- Severity

- Critical

- Condition

- The overload level of the service is L3 due to number of failed requests.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.41

- Metric Used

- service_resource_overload_level{type="svc_failure_count"}

- Recommended Actions

- The alert gets cleared when the number of failed requests of the service is back to normal state.

For any additional guidance, contact My Oracle Support.
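SERVICE_RESOURCE_OVERLOADED is documented as twelve variants: four values of the `type` label, each at overload levels L1 to L3, all reported under the same OID. The following Python sketch simply enumerates those combinations together with the severity of each level, which may help when checking that all variants are present in a customized alert file. The names are illustrative and are not read from the alert file itself.

```python
from itertools import product

# Overload level -> alert severity, as documented for SERVICE_RESOURCE_OVERLOADED.
LEVEL_SEVERITY = {"L1": "Minor", "L2": "Major", "L3": "Critical"}

# Values of the `type` label on service_resource_overload_level.
RESOURCE_TYPES = ["memory", "cpu", "svc_pending_count", "svc_failure_count"]

# All variants share this OID.
OID = "1.3.6.1.4.1.323.5.3.36.1.2.41"


def resource_overload_variants():
    """Yield (type, level, severity, selector) for every documented variant."""
    for rtype, level in product(RESOURCE_TYPES, LEVEL_SEVERITY):
        selector = f'service_resource_overload_level{{type="{rtype}"}}'
        yield rtype, level, LEVEL_SEVERITY[level], selector


if __name__ == "__main__":
    for rtype, level, severity, selector in resource_overload_variants():
        print(f"{OID}  {level}  {severity:<8}  {selector}")
```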

8.3.1.38 SUBSCRIBER_NOTIFICATION_ERROR_EXCEEDS_CRITICAL_THRESHOLD

- Description

- Notification Transaction Error exceeds the critical threshold limit for a given Subscriber Notification server

- Summary

- Transaction Error exceeds the critical threshold limit for a given Subscriber Notification server

- Severity

- Critical

- Condition

- The number of error responses for a given subscriber notification server exceeds the critical threshold of 1000.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.42

- Metric Used

- http_notification_response_total{responseCode!~"2.*"}

- Recommended Actions

For any additional guidance, contact My Oracle Support.

- Description

- Notification Transaction Error exceeds the major threshold limit for a given Subscriber Notification server

- Summary

- Transaction Error exceeds the major threshold limit for a given Subscriber Notification server

- Severity

- Major

- Condition

- The number of error responses for a given subscriber notification server exceeds the major threshold value, that is, between 750 and 1000.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.42

- Metric Used

- http_notification_response_total{responseCode!~"2.*"}

- Recommended Actions

For any additional guidance, contact My Oracle Support.

- Description

- Notification Transaction Error exceeds the minor threshold limit for a given Subscriber Notification server

- Summary

- Transaction Error exceeds the minor threshold limit for a given Subscriber Notification server

- Severity

- Minor

- Condition

- The number of error responses for a given subscriber notification server exceeds the minor threshold value, that is, between 500 and 750.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.42

- Metric Used

- http_notification_response_total{responseCode!~"2.*"}

- Recommended Actions

For any additional guidance, contact My Oracle Support.
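The three notification error alerts above classify the number of non-2xx responses from a subscriber notification server into bands. The following Python sketch shows that classification, assuming exclusive lower bounds (a count equal to a threshold stays in the lower band), because the boundary handling is not stated here; the actual rule may also evaluate the count over a time window. It also shows how the `responseCode!~"2.*"` matcher behaves: PromQL label regular expressions are fully anchored, so the matcher excludes exactly the codes that start with 2. The function names are hypothetical.

```python
import re
from typing import Optional


def is_error_response(response_code: str) -> bool:
    """Equivalent of responseCode!~"2.*": PromQL anchors label regexes,
    so re.fullmatch (not re.search) reproduces the matcher."""
    return re.fullmatch(r"2.*", response_code) is None


def notification_error_severity(error_count: int) -> Optional[str]:
    """Map a count of error responses to the alert severity, or None.

    Assumes exclusive lower bounds: >1000 critical, >750 major, >500 minor.
    """
    if error_count > 1000:
        return "Critical"
    if error_count > 750:
        return "Major"
    if error_count > 500:
        return "Minor"
    return None


if __name__ == "__main__":
    codes = ["200", "204", "404", "503", "503", "429"]
    errors = sum(is_error_response(code) for code in codes)
    print(errors, notification_error_severity(errors))
```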

8.3.1.39 SYSTEM_IMPAIRMENT_MAJOR

- Description

- Major Impairment alert raised for REPLICATION_FAILED or REPLICATION_CHANNEL_DOWN or BINLOG_STORAGE usage

- Summary

- Major Impairment alert raised for REPLICATION_FAILED or REPLICATION_CHANNEL_DOWN or BINLOG_STORAGE usage

- Severity

- Major

- Condition

- Major Impairment alert

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.43

- Metric Used

- db_tier_replication_status

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.40 SYSTEM_IMPAIRMENT_CRITICAL

- Description

- Critical Impairment alert raised for REPLICATION_FAILED or REPLICATION_CHANNEL_DOWN or BINLOG_STORAGE usage

- Summary

- Critical Impairment alert raised for REPLICATION_FAILED or REPLICATION_CHANNEL_DOWN or BINLOG_STORAGE usage

- Severity

- Critical

- Condition

- Critical Impairment alert

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.43

- Metric Used

- db_tier_replication_status

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.41 SYSTEM_OPERATIONAL_STATE_NORMAL

- Description

- System Operational State is now in normal state

- Summary

- System Operational State is now in normal state

- Severity

- Info

- Condition

- System Operational State is now in normal state

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.44

- Metric Used

- system_operational_state == 1

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.42 SYSTEM_OPERATIONAL_STATE_PARTIAL_SHUTDOWN

- Description

- System Operational State is now in partial shutdown state

- Summary

- System Operational State is now in partial shutdown state

- Severity

- Info

- Condition

- System Operational State is now in partial shutdown state

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.44

- Metric Used

- system_operational_state == 2

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.43 SYSTEM_OPERATIONAL_STATE_COMPLETE_SHUTDOWN

- Description

- System Operational State is now in complete shutdown state

- Summary

- System Operational State is now in complete shutdown state

- Severity

- Info

- Condition

- System Operational State is now in complete shutdown state

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.44

- Metric Used

- system_operational_state == 3

- Recommended Actions

For any additional guidance, contact My Oracle Support.
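The three SYSTEM_OPERATIONAL_STATE alerts share one OID and the Info severity, and are distinguished only by the value of the `system_operational_state` metric. The following Python sketch captures the value-to-alert mapping given above; the enum and function names are hypothetical. Because Prometheus sample values are floating point, the sketch rejects non-integral values instead of truncating them.

```python
from enum import IntEnum


class SystemOperationalState(IntEnum):
    """Values of system_operational_state, as used by the alert conditions."""
    NORMAL = 1
    PARTIAL_SHUTDOWN = 2
    COMPLETE_SHUTDOWN = 3


def operational_state_alert(value: float) -> str:
    """Return the alert name raised for a sample of system_operational_state."""
    if value != int(value):
        raise ValueError(f"unexpected state value: {value}")
    state = SystemOperationalState(int(value))  # raises ValueError if unknown
    return f"SYSTEM_OPERATIONAL_STATE_{state.name}"


if __name__ == "__main__":
    for sample in (1.0, 2.0, 3.0):
        print(sample, operational_state_alert(sample))
```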

8.3.1.44 TDF_CONNECTION_DOWN

- Summary

- TDF connection is down.

- Description

- TDF connection is down.

- Severity

- Critical

- Condition

- occnp_diam_conn_app_network{applicationName="Sd"} == 0

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.48

- Metric Used

- occnp_diam_conn_app_network

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.45 DIAM_CONN_PEER_DOWN

- Summary

- Diameter connection to peer is down.

- Description

- Diameter connection to peer is down.

- Severity

- Major

- Condition

- (sum by (kubernetes_namespace,origHost)(occnp_diam_conn_network) == 0) and (sum by (kubernetes_namespace,origHost)(max_over_time(occnp_diam_conn_network[24h])) != 0)

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.50

- Metric Used

- occnp_diam_conn_network

- Recommended Actions

For any additional guidance, contact My Oracle Support.
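The DIAM_CONN_PEER_DOWN condition combines two aggregations: a peer (`origHost`) is reported only if its connection count is 0 now and was non-zero at some point in the last 24 hours, so peers that were never connected do not raise the alert. The following Python sketch reproduces that logic on in-memory samples. It assumes each series is identified by namespace, origHost, and a `pod` label, and that the samples cover the 24-hour window with the current value last; the function, the `pod` label, and the sample names are illustrative assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# (kubernetes_namespace, origHost, pod); the pod label is an assumption.
SeriesKey = Tuple[str, str, str]
PeerKey = Tuple[str, str]


def peers_down(series: Dict[SeriesKey, List[float]]) -> Set[PeerKey]:
    """Return (namespace, origHost) groups matching the DIAM_CONN_PEER_DOWN condition.

    Each value is a non-empty list of occnp_diam_conn_network samples covering
    the last 24 hours, oldest first; the last sample is the current value.
    """
    current: Dict[PeerKey, float] = defaultdict(float)  # sum by (...)(metric)
    max_24h: Dict[PeerKey, float] = defaultdict(float)  # sum by (...)(max_over_time(metric[24h]))
    for (namespace, orig_host, _pod), samples in series.items():
        key = (namespace, orig_host)
        current[key] += samples[-1]
        max_24h[key] += max(samples)
    # PromQL "and" keeps left-hand groups that also survive the right-hand filter.
    return {key for key in current if current[key] == 0 and max_24h[key] != 0}


if __name__ == "__main__":
    history = {
        ("occnp", "peer-a", "diam-gw-0"): [1, 1, 0],  # was up, now down: alert
        ("occnp", "peer-b", "diam-gw-0"): [0, 0, 0],  # never connected: no alert
        ("occnp", "peer-c", "diam-gw-0"): [1, 0, 1],  # up now: no alert
    }
    print(peers_down(history))
```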

8.3.1.46 DIAM_CONN_NETWORK_DOWN

- Summary

- All the diameter network connections are down.

- Description

- All the diameter network connections are down.

- Severity

- Critical

- Condition

- sum by (kubernetes_namespace)(occnp_diam_conn_network) == 0

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.51

- Metric Used

- occnp_diam_conn_network

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.47 DIAM_CONN_BACKEND_DOWN

- Summary

- All the diameter backend connections are down.

- Description

- All the diameter backend connections are down.

- Severity

- Critical

- Condition

- sum by (kubernetes_namespace)(occnp_diam_conn_backend) == 0

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.52

- Metric Used

- occnp_diam_conn_backend

- Recommended Actions

For any additional guidance, contact My Oracle Support.

8.3.1.48 PerfInfoActiveOverloadThresholdFetchFailed

- Summary

- The application fails to get the current active overload level threshold data.

- Description

- The application raises this alert when it fails to fetch the current active overload level threshold data, that is, when active_overload_threshold_fetch_failed == 1.

- Severity

- Major

- Condition

- active_overload_threshold_fetch_failed == 1

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.53

- Metric Used

- active_overload_threshold_fetch_failed

- Recommended Actions

- The alert gets cleared when the application fetches the current active overload level threshold data.

For any additional guidance, contact My Oracle Support.

8.3.1.49 SLASYFailCountExceedsCritcalThreshold

- Summary

- SLA Sy fail count exceeds the critical threshold limit

- Description

- SLA Sy fail count exceeds the critical threshold limit

- Severity

- Critical

- Condition

- sum(rate(occnp_diam_response_local_total{msgType="SLA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SLA"}[5m])) * 100 > 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.58

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present. If the user has not been added to the OCS configuration, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.50 SLASYFailCountExceedsMajorThreshold

- Summary

- SLA Sy fail count exceeds the major threshold limit

- Description

- SLA Sy fail count exceeds the major threshold limit

- Severity

- Major

- Condition

- sum(rate(occnp_diam_response_local_total{msgType="SLA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SLA"}[5m])) * 100 > 80 and sum(rate(occnp_diam_response_local_total{msgType="SLA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SLA"}[5m])) * 100 <= 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.58

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present. If the user has not been added to the OCS configuration, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.51 SLASYFailCountExceedsMinorThreshold

- Summary

- SLA Sy fail count exceeds the minor threshold limit

- Description

- SLA Sy fail count exceeds the minor threshold limit

- Severity

- Minor

- Condition

- sum(rate(occnp_diam_response_local_total{msgType="SLA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SLA"}[5m])) * 100 > 60 and sum(rate(occnp_diam_response_local_total{msgType="SLA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SLA"}[5m])) * 100 <= 80

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.58

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present. If the user has not been added to the OCS configuration, configure the user(s).

For any additional guidance, contact My Oracle Support.
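The three SLA Sy alerts evaluate the same ratio, the percentage of SLA responses with a non-2xx result code over the last 5 minutes, against different bands. The following Python sketch shows that calculation on pre-computed values and maps the result to the severity implied by each alert name (above 90 percent critical, above 80 up to 90 major, above 60 up to 80 minor). Because both rates are taken over the same 5-minute window, the window length cancels out of the ratio, so counts over the window can be used just as well as per-second rates. The function name is hypothetical.

```python
from typing import Optional


def sla_sy_severity(failed: float, total: float) -> Optional[str]:
    """Classify the SLA Sy failure percentage, or return None if no alert applies.

    `failed` and `total` are rates (or increases) of
    occnp_diam_response_local_total over the same 5-minute window, for
    non-2xx SLA responses and for all SLA responses respectively.
    """
    if total == 0:
        # In PromQL, 0/0 yields NaN and NaN > 90 is false, so no alert fires.
        return None
    percentage = failed * 100 / total
    if percentage > 90:
        return "Critical"
    if percentage > 80:  # and <= 90
        return "Major"
    if percentage > 60:  # and <= 80
        return "Minor"
    return None


if __name__ == "__main__":
    # 42 failed out of 50 SLA responses in the window is 84 percent.
    print(sla_sy_severity(42, 50))
```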

8.3.1.52 STASYFailCountExceedsCritcalThreshold

- Summary

- STA Sy fail count exceeds the critical threshold limit.

- Description

- STA Sy fail count exceeds the critical threshold limit.

- Severity

- Critical

- Condition

- The failure rate of Sy STA responses is more than 90% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302"}[5m])) * 100 > 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.59

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present. If the user has not been added to the OCS configuration, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.53 STASYFailCountExceedsMajorThreshold

- Summary

- STA Sy fail count exceeds the major threshold limit.

- Description

- STA Sy fail count exceeds the major threshold limit.

- Severity

- Major

- Condition

- The failure rate of Sy STA responses is more than 80% and less than or equal to 90% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302"}[5m])) * 100 > 80 and sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302"}[5m])) * 100 <= 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.59

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present. If the user has not been added to the OCS configuration, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.54 STASYFailCountExceedsMinorThreshold

- Summary

- STA Sy fail count exceeds the minor threshold limit.

- Description

- STA Sy fail count exceeds the minor threshold limit.

- Severity

- Minor

- Condition

- The failure rate of Sy STA responses is more than 60% and less than or equal to 80% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302"}[5m])) * 100 > 60 and sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777302"}[5m])) * 100 <= 80

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.59

- Metric Used

- occnp_diam_response_local_total

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present. If the user has not been added to the OCS configuration, configure the user(s).

For any additional guidance, contact My Oracle Support.
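STA messages are counted for more than one Diameter application, which is why the STA expressions, unlike the SLA expressions, also filter on `appId` (16777302 in the STA Sy alerts and 16777236 in the STA_RX alerts). The following Python sketch applies the same label filters to a list of per-series rates and computes the failure percentage for one application. It is an illustration only: the input format (a label dictionary paired with a rate) is an assumption, and the sample values are hypothetical.

```python
import re
from typing import Dict, Iterable, Optional, Tuple

SY_APP_ID = "16777302"  # appId used by the STA Sy alert expressions
RX_APP_ID = "16777236"  # appId used by the STA Rx alert expressions

Series = Tuple[Dict[str, str], float]  # (labels, per-second rate over 5m)


def sta_failure_percentage(series: Iterable[Series], app_id: str) -> Optional[float]:
    """Percentage of non-2xx STA responses for one Diameter application.

    Mirrors sum(rate(...{msgType="STA", appId=X, responseCode!~"2.*"}[5m]))
    / sum(rate(...{msgType="STA", appId=X}[5m])) * 100.
    """
    failed = total = 0.0
    for labels, rate in series:
        if labels.get("msgType") != "STA" or labels.get("appId") != app_id:
            continue
        total += rate
        # A missing label matches as "", which is not a 2xx code.
        if re.fullmatch(r"2.*", labels.get("responseCode", "")) is None:
            failed += rate
    if total == 0:
        return None  # no STA traffic for this application: no alert
    return failed * 100 / total


if __name__ == "__main__":
    samples = [
        ({"msgType": "STA", "appId": SY_APP_ID, "responseCode": "2001"}, 1.0),
        ({"msgType": "STA", "appId": SY_APP_ID, "responseCode": "5030"}, 9.0),
        ({"msgType": "STA", "appId": RX_APP_ID, "responseCode": "5002"}, 4.0),
        ({"msgType": "SLA", "appId": SY_APP_ID, "responseCode": "5030"}, 7.0),
    ]
    print(sta_failure_percentage(samples, SY_APP_ID))  # Sy STA series only
    print(sta_failure_percentage(samples, RX_APP_ID))  # Rx STA series only
```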

8.3.1.55 SMSC_CONNECTION_DOWN

- Description

- This alert is triggered when the connection to the SMSC host is down.

- Summary

- Connection to SMSC peer {{$labels.smscName}} is down in notifier service pod {{$labels.pod}}

- Severity

- Major

- Condition

- sum by(namespace, pod, smscName)(occnp_active_smsc_conn_count) == 0

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.63

- Metric Used

- occnp_active_smsc_conn_count

- Recommended Actions

For any additional guidance, contact My Oracle Support.
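The SMSC_CONNECTION_DOWN condition sums `occnp_active_smsc_conn_count` per namespace, notifier pod, and SMSC name, and fires for each group whose total is 0. The following Python sketch shows the same grouping on a list of samples; the function name and the pod and SMSC names are hypothetical. As with any `== 0` comparison, a group raises the alert only while the metric is still being reported; if the series disappears entirely, the expression returns no result.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[Dict[str, str], float]  # (labels, value of occnp_active_smsc_conn_count)
GroupKey = Tuple[str, str, str]        # (namespace, pod, smscName)


def smsc_connections_down(samples: Iterable[Sample]) -> List[GroupKey]:
    """Groups matching sum by(namespace, pod, smscName)(occnp_active_smsc_conn_count) == 0."""
    totals: Dict[GroupKey, float] = defaultdict(float)
    for labels, value in samples:
        key = (labels.get("namespace", ""), labels.get("pod", ""), labels.get("smscName", ""))
        totals[key] += value
    return sorted(key for key, total in totals.items() if total == 0)


if __name__ == "__main__":
    data = [
        ({"namespace": "occnp", "pod": "notifier-0", "smscName": "smsc-1"}, 0),
        ({"namespace": "occnp", "pod": "notifier-1", "smscName": "smsc-1"}, 2),
        ({"namespace": "occnp", "pod": "notifier-0", "smscName": "smsc-2"}, 1),
    ]
    for namespace, pod, smsc in smsc_connections_down(data):
        print(f"Connection to SMSC peer {smsc} is down in notifier service pod {pod}")
```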

8.3.1.56 STA_RX_FAIL_COUNT_EXCEEDS_CRITICAL_THRESHOLD

- Summary

- STA Rx fail count exceeds the critical threshold limit.

- Description

- STA Rx fail count exceeds the critical threshold limit.

- Severity

- Critical

- Condition

- The failure rate of Rx STA responses is more than 90% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236"}[5m])) * 100 > 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.64

- Metric Used

- occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the AF and ensure that connectivity is present.

Check that the session and user are valid and have not been removed from the Policy database; if they have, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.57 STA_RX_FAIL_COUNT_EXCEEDS_MAJOR_THRESHOLD

- Summary

- STA Rx fail count exceeds the major threshold limit

- Description

- STA Rx fail count exceeds the major threshold limit

- Severity

- Major

- Condition

- The failure rate of Rx STA responses is more than 80% and less than or equal to 90% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236"}[5m])) * 100 > 80 and sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236"}[5m])) * 100 <= 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.64

- Metric Used

- occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the AF and ensure that connectivity is present.

Check that the session and user are valid and have not been removed from the Policy database; if they have, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.58 STA_RX_FAIL_COUNT_EXCEEDS_MINOR_THRESHOLD

- Summary

- STA Rx fail count exceeds the minor threshold limit

- Description

- STA Rx fail count exceeds the minor threshold limit

- Severity

- Minor

- Condition

- The failure rate of Rx STA responses is more than 60% and less than or equal to 80% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236"}[5m])) * 100 > 60 and sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="STA", appId="16777236"}[5m])) * 100 <= 80

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.64

- Metric Used

- occnp_diam_response_local_total{msgType="STA", appId="16777236", responseCode!~"2.*"}

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the AF and ensure that connectivity is present.

Check that the session and user are valid and have not been removed from the Policy database; if they have, configure the user(s).

For any additional guidance, contact My Oracle Support.

8.3.1.59 SNA_SY_FAIL_COUNT_EXCEEDS_CRITICAL_THRESHOLD

- Summary

- SNA Sy fail count exceeds the critical threshold limit

- Description

- SNA Sy fail count exceeds the critical threshold limit

- Severity

- Critical

- Condition

- The failure rate of Sy SNA responses is more than 90% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SNA"}[5m])) * 100 > 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.65

- Metric Used

- occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server and ensure that connectivity is present.

Check that the session and user have not been removed from the OCS configuration; if they have, configure the user(s).

For any additional guidance, contact My Oracle Support.
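Each term in the Sy expression above is `rate()` over a 5-minute window of a counter. The following Python sketch approximates what that function computes so that the `> 90` comparison can be reasoned about with concrete numbers. It is a simplification: real Prometheus also extrapolates to the window boundaries, which is omitted here, and the sample data and Result-Code keys are hypothetical.

```python
Sample = tuple[float, float]  # (timestamp in seconds, counter value)


def simple_rate(samples: list[Sample]) -> float:
    """Per-second increase of a counter over the samples' time span.

    A drop in value is treated as a counter reset (for example, a pod restart),
    so the post-reset value counts as new increase, as Prometheus does.
    Unlike Prometheus, no extrapolation to the window edges is performed.
    """
    if len(samples) < 2:
        return 0.0
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        increase += cur if cur < prev else cur - prev
    span = samples[-1][0] - samples[0][0]
    return increase / span if span > 0 else 0.0


def sna_sy_critical(series_by_code: dict[str, list[Sample]]) -> bool:
    """sum(failed rate) / sum(all rate) * 100 > 90, where failed = non-2xxx codes."""
    rates = {code: simple_rate(s) for code, s in series_by_code.items()}
    total = sum(rates.values())
    failed = sum(r for code, r in rates.items() if not code.startswith("2"))
    return total > 0 and 100 * failed / total > 90


# Hypothetical 5-minute window; the 5030 counter resets at t=200s.
window = {
    "2001": [(0, 0), (300, 60)],                             # 0.2 successes/s
    "5030": [(0, 0), (150, 1000), (200, 100), (300, 1400)],  # 8.0 failures/s
}
print(simple_rate(window["5030"]))
print(sna_sy_critical(window))
```

Without the reset handling, the drop from 1000 to 100 would be read as a negative increase and understate the failure rate.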

8.3.1.60 SNA_SY_FAIL_COUNT_EXCEEDS_MAJOR_THRESHOLD

- Summary

- SNA Sy fail count exceeds the major threshold limit

- Description

- SNA Sy fail count exceeds the major threshold limit

- Severity

- Major

- Condition

- The failure rate of Sy SNA responses is more than 80% and less than or equal to 90% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SNA"}[5m])) * 100 > 80 and sum(rate(occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SNA"}[5m])) * 100 <= 90

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.65

- Metric Used

- occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server, and ensure that connectivity is present.

Check that the session and user have not been removed from the OCS configuration; if they have been removed, configure the user(s).

For any additional guidance, contact My Oracle Support.
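The expression divides one `sum()` by another, so the failure percentage is computed over the aggregate of all matching series (for example, one series per pod) rather than averaged per series. The Python sketch below uses hypothetical per-pod rates to show why that matters for the major band between 80% and 90%: a lightly loaded pod that fails every request barely moves the aggregate, whereas a per-pod average would push the result out of this band.

```python
def in_major_band(pct: float) -> bool:
    """Major band of SNA_SY_FAIL_COUNT_EXCEEDS_MAJOR_THRESHOLD: > 80% and <= 90%."""
    return 80 < pct <= 90


# Hypothetical per-pod SNA rates: (failed responses/s, total responses/s).
pods = {"pod-a": (82.0, 100.0), "pod-b": (2.0, 2.0)}

# What the alert expression computes: sum(failed) / sum(total) * 100.
ratio_of_sums = 100 * sum(f for f, _ in pods.values()) / sum(t for _, t in pods.values())

# A different aggregation, NOT used by the rule: mean of each pod's own failure %.
mean_of_ratios = sum(100 * f / t for f, t in pods.values()) / len(pods)

print(round(ratio_of_sums, 2), in_major_band(ratio_of_sums))
print(round(mean_of_ratios, 2), in_major_band(mean_of_ratios))
```

With these numbers the aggregate is about 82.35% (major), while the per-pod mean would be 91% and fall outside the major band.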

8.3.1.61 SNA_SY_FAIL_COUNT_EXCEEDS_MINOR_THRESHOLD

- Summary

- SNA Sy fail count exceeds the minor threshold limit

- Description

- SNA Sy fail count exceeds the minor threshold limit

- Severity

- Minor

- Condition

- The failure rate of Sy SNA responses is more than 60% and less than or equal to 80% of the total responses.

- Expression

- sum(rate(occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SNA"}[5m])) * 100 > 60 and sum(rate(occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}[5m])) / sum(rate(occnp_diam_response_local_total{msgType="SNA"}[5m])) * 100 <= 80

- OID

- 1.3.6.1.4.1.323.5.3.52.1.2.65

- Metric Used

- occnp_diam_response_local_total{msgType="SNA", responseCode!~"2.*"}

- Recommended Actions

- Check the connectivity between the diam-gw pod(s) and the OCS server, and ensure that connectivity is present.

Check that the session and user have not been removed from the OCS configuration; if they have been removed, configure the user(s).

For any additional guidance, contact My Oracle Support.
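Taken together, the three SNA alerts in 8.3.1.59 through 8.3.1.61 split the failure percentage into non-overlapping bands, so at most one of their conditions is true at any evaluation. A small Python sketch of that mapping follows, assuming the default thresholds listed in these sections; if the thresholds are edited in the alert files, the band table would need the same change.

```python
from typing import Optional

# Default SNA bands: (severity, lower bound exclusive, upper bound inclusive).
SNA_BANDS = [
    ("Critical", 90.0, float("inf")),
    ("Major", 80.0, 90.0),
    ("Minor", 60.0, 80.0),
]


def sna_severity(failure_pct: float) -> Optional[str]:
    """Return the severity whose condition matches, or None if no SNA alert applies."""
    for severity, low, high in SNA_BANDS:
        if low < failure_pct <= high:
            return severity
    return None


for pct in (95.0, 90.0, 85.0, 80.0, 70.0, 60.0):
    print(pct, sna_severity(pct))
```

Because each band excludes its lower bound and includes its upper bound, the boundary values 90% and 80% land in the Major and Minor bands respectively, and 60% raises no SNA alert.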

8.3.2 PCF Alerts

This section provides information on PCF alerts.

8.3.2.1 INGRESS_ERROR_RATE_ABOVE_10_PERCENT_PER_POD

- Name in Alert Yaml File

- IngressErrorRateAbove10PercentPerPod

- Description

- Ingress Error Rate above 10 Percent in {{$labels.kubernetes_name}} in {{$labels.kubernetes_namespace}}

- Summary

- Transaction Error Rate in {{$labels.kubernetes_node}} (current value is: {{ $value }})

- Severity

- Critical

- Condition

- The total number of failed transactions per pod is above 10 percent of the total transactions.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.2

- Metric Used

- ocpm_ingress_response_total

- Recommended Actions

- The alert is cleared when the number of failed transactions falls below 10% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors.

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.
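The per-pod condition above can be sketched in Python as follows. The input shape is an assumption: a hypothetical mapping from pod name to per-second `ocpm_ingress_response_total` rates keyed by HTTP status code, with a response treated as failed when its status code is not 2xx. This section does not state which codes the alert counts as failures, so the predicate should be adjusted to match the rule in the PCF alert file.

```python
def pods_above_error_rate(
    rates_by_pod: dict[str, dict[str, float]], threshold_pct: float = 10.0
) -> dict[str, float]:
    """Return {pod: error %} for every pod whose non-2xx share exceeds the threshold."""
    flagged = {}
    for pod, by_status in rates_by_pod.items():
        total = sum(by_status.values())
        if total == 0:
            continue  # idle pod: no ratio to evaluate
        failed = sum(r for status, r in by_status.items() if not status.startswith("2"))
        pct = 100 * failed / total
        if pct > threshold_pct:  # strictly above 10%, as in the condition
            flagged[pod] = pct
    return flagged


# Hypothetical per-pod response rates (responses/s) keyed by HTTP status code.
rates = {
    "ingress-gateway-0": {"201": 90.0, "503": 10.0},  # exactly 10%: not flagged
    "ingress-gateway-1": {"200": 40.0, "201": 40.0, "500": 15.0, "404": 5.0},  # 20%
}
print(pods_above_error_rate(rates))
```

Evaluating each pod separately lets a single misbehaving pod raise the alert even when the overall error rate across all pods stays below 10%.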

8.3.2.2 SM_TRAFFIC_RATE_ABOVE_THRESHOLD

- Name in Alert Yaml File

- SMTrafficRateAboveThreshold

- Description

- SM service Ingress traffic Rate is above threshold of Max MPS (current value is: {{ $value }})

- Summary

- Traffic Rate is above 90 Percent of Max requests per second

- Severity

- Major

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.3

- Condition

- The total SM service Ingress traffic rate has crossed the configured threshold of 900 TPS.

By default, the PCF_Alertrules.yaml file triggers this alert when the SM service Ingress traffic rate crosses 90% of the maximum ingress requests per second.

- Metric Used

- ocpm_ingress_request_total{servicename_3gpp="npcf-smpolicycontrol"}

- Recommended Actions

- The alert is cleared when the Ingress traffic rate falls below the threshold.

Note:

Threshold levels can be configured using the PCF_Alertrules.yaml file.

It is recommended to assess the reason for the additional traffic. Perform the following steps to analyze the cause of the increased traffic:

- Refer to the Ingress Gateway section in Grafana to determine whether there is an increase in 4xx and 5xx error response codes.

- Check the Ingress Gateway logs on Kibana to determine the reason for the errors.

For any additional guidance, contact My Oracle Support.
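The arithmetic behind the default threshold is 90% of the maximum ingress rate. The stated threshold of 900 TPS is consistent with a maximum of 1000 requests per second, although that maximum is inferred here rather than stated in this section. The Python sketch below derives a per-second rate from two hypothetical readings of the `ocpm_ingress_request_total` counter (no counter reset assumed) and compares it with that threshold, reading "crossed" as strictly greater than.

```python
def ingress_rps(count_start: float, count_end: float, window_s: float) -> float:
    """Average requests per second between two counter readings (no reset assumed)."""
    return (count_end - count_start) / window_s


def sm_traffic_above_threshold(rps: float, max_rps: float, pct: float = 90.0) -> bool:
    """True when the SM ingress rate is strictly above pct% of the maximum rate."""
    return rps > max_rps * pct / 100


# Hypothetical readings of ocpm_ingress_request_total{servicename_3gpp="npcf-smpolicycontrol"}
# taken 300 s apart, with an assumed maximum of 1000 requests per second.
rps = ingress_rps(1_200_000, 1_485_000, 300)
print(rps, sm_traffic_above_threshold(rps, max_rps=1000))
```

Here 285,000 requests over 300 seconds is 950 requests per second, which is above the 900 requests per second threshold.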

8.3.2.3 SM_INGRESS_ERROR_RATE_ABOVE_10_PERCENT

- Name in Alert Yaml File

- SMIngressErrorRateAbove10Percent

- Description

- Transaction Error Rate detected above 10 Percent of Total on SM service (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 10 Percent of Total Transactions

- Severity

- Critical

- Condition

- The number of failed transactions is above 10 percent of the total transactions.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.4

- Metric Used

- ocpm_ingress_response_total

- Recommended Actions

- The alert is cleared when the number of failed transactions falls below 10% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors. For instance:

ocpm_ingress_response_total{servicename_3gpp="npcf-smpolicycontrol",response_code!~"2.*"}

- The service-specific errors can be further filtered for errors specific to a method such as GET, PUT, POST, DELETE, and PATCH.

For any additional guidance, contact My Oracle Support.
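To pull the service-specific errors mentioned above without opening a dashboard, the instant-query endpoint of the Prometheus HTTP API (`/api/v1/query`) can be called directly. The Python sketch below uses only the standard library. The Prometheus address is a hypothetical placeholder, and grouping by a `method` label is an assumption: confirm the label names that the Ingress Gateway actually exports before relying on the per-method split.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical Prometheus address; replace with the service exposed in your CNE namespace.
PROMETHEUS_URL = "http://prometheus.example:9090"

# Failed SM policy responses per second, split by an assumed 'method' label.
SM_ERRORS_BY_METHOD = (
    "sum by (method) (rate(ocpm_ingress_response_total"
    '{servicename_3gpp="npcf-smpolicycontrol",response_code!~"2.*"}[5m]))'
)


def build_query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def run_query(base_url: str, promql: str, timeout: float = 10.0) -> list:
    """Run the query and return the 'result' list from the JSON response."""
    with urllib.request.urlopen(build_query_url(base_url, promql), timeout=timeout) as resp:
        body = json.load(resp)
    if body.get("status") != "success":
        raise RuntimeError(f"query failed: {body}")
    # Each entry holds a label set ("metric") and a [timestamp, "value"] pair ("value").
    return body["data"]["result"]
```

For example, `run_query(PROMETHEUS_URL, SM_ERRORS_BY_METHOD)` would return one entry per method label value, whose `value[1]` is the failure rate as a string.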

8.3.2.4 SM_EGRESS_ERROR_RATE_ABOVE_1_PERCENT

- Name in Alert Yaml File

- SMEgressErrorRateAbove1Percent

- Description

- Egress Transaction Error Rate detected above 1 Percent of Total Transactions (current value is: {{ $value }})

- Summary

- Transaction Error Rate detected above 1 Percent of Total Transactions

- Severity

- Minor

- Condition

- The number of failed transactions is above 1 percent of the total transactions.

- OID

- 1.3.6.1.4.1.323.5.3.36.1.2.5

- Metric Used

- ocpm_egress_response_total

- Recommended Actions

- The alert is cleared when the number of failed transactions falls below 1% of the total transactions.

To assess the reason for failed transactions, perform the following steps:

- Check the service-specific metrics to understand the service-specific errors. For instance: