2 cnDBTier Architecture

cnDBTier comprises various microservices deployed in Kubernetes-based cloud native environments, for example: Oracle Communications Cloud Native Core, Cloud Native Environment (CNE) or Oracle Cloud Infrastructure (OCI). Cloud native environments provide various common services such as logs, metric data collection, analysis, graphs, and chart visualization. cnDBTier microservices integrate with these common services and provide them with the necessary data such as logs and metrics.

Figure 2-1 cnDBTier Architecture

- Management Microservice:

- The Management microservice is responsible for managing other nodes within the NDB Cluster. It performs functions such as providing configuration data and running backups.

- This microservice node is started before any other node because it manages the configurations of the other nodes in the cluster.

- This microservice manages and reads the cluster configuration file and distributes the information to all nodes in the cluster that request it.

- This microservice maintains a log of cluster activities. Management clients can connect to the management server to check the status of the cluster.

- The management server manages the cluster logs. The data nodes transfer information about any events that occur on them to the management server. The management server in turn writes this information to the cluster log.

- Data Microservice:

- The Data microservice stores all the cluster data in key-value pairs.

- cnDBTier supports two replicas, that is, a single node group can have up to two data nodes. For example, if there are four data nodes, there are two node groups: the first group consists of the first two data nodes and the second group consists of the last two data nodes. All the data nodes in a node group store the same data for redundancy and high availability. Therefore, at least one data node from each node group must be alive at all times to support CRUD (Create, Retrieve, Update, Delete) operations.

- The Data microservice is used to handle all the data in the tables using the NDB Cluster storage engine.

- This microservice enables a data node to perform many activities, including but not limited to the following:

- Distributed transaction handling

- Node recovery

- Checkpointing to disk

- Online backup

- Replication SQL (API) Microservice:

- The Replication SQL microservice hosts a MySQL server that connects to the data pods and provides bidirectional georeplication support to the remote sites.

- This microservice receives the events from the data nodes, maintains binlogs on PVC, and uses the binlogs to perform replication.

- cnDBTier uses a set of two Replication SQL microservices to establish replication with the mate site. One microservice is hosted as the active replication channel and the other as the standby replication channel, which handles georeplication failures.

- Application SQL (APP) Microservice:

- The Application SQL microservice is used by the Network Functions (NF) for performing CRUD (Create, Retrieve, Update, Delete) operations on cnDBTier.

- This microservice is responsible for performing cnDBTier initializations such as database creation, table creation, and user creation.

- Monitor Microservice:

- The Monitor microservice stores information about the current behavior of cnDBTier in the form of metrics. These metrics are sent to Prometheus or a similar monitoring tool when the tool requests them.

- The Monitor microservice hosts multiple REST APIs. Some of these REST APIs handle specific use cases such as creating on-demand backups. Others are used by CNC Console to integrate cnDBTier with the CNC Console GUI, thereby making cnDBTier more user friendly. For more information about cnDBTier APIs, see the cnDBTier APIs section.

- Backup Manager Microservice:

- The Backup Manager microservice is responsible for coordinating all kinds of backup creation: scheduled backups by CronJob, on-demand backups by the end user, and fault recovery backups.

- The Backup Manager microservice uses the Backup Executor service to run the instructed commands on the respective data microservice. The Backup Manager microservice is also responsible for coordinating with the Backup Executor service to perform the following operations:

- Transferring the backups to the local replication service when a fault recovery is triggered.

- Purging the old backups to save space on the data microservice and prevent it from crashing.

- Replication Microservice:

- The Replication microservice is responsible for setting up the replication between cnDBTier sites. It also manages the replication channels between cnDBTier sites.

- This microservice has the following optional features:

- Skip replication errors when there is an irrecoverable error on the replication channels. This helps to resume the replication channels after an error.

- Transfer backups to a remote server over a secure channel using SFTP.

- This microservice handles replication fault recovery to recover the failed sites. When there is a replication failure, this microservice performs the following tasks:

- Fetches a data backup from a healthy site and uses the backup on the failed site to recover the data.

- Reestablishes the replication channel, thereby recovering the failed sites.

- This microservice checks the growth of binlogs and purges them according to the configuration. This helps to save space in the Replication SQL microservice and prevent it from crashing.
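The node-group rule described under the Data microservice above can be illustrated with a minimal Python sketch. It is not cnDBTier code: the node names (`data-1` to `data-4`) and both helper functions are hypothetical, and the sketch assumes that the number of data nodes is a multiple of the replica count. It groups consecutive data nodes into node groups of two and checks whether at least one node in every group is alive.

```python
from typing import List, Set

REPLICAS = 2  # two replicas per node group, as stated in the text


def build_node_groups(data_nodes: List[str], replicas: int = REPLICAS) -> List[List[str]]:
    """Split data nodes into consecutive node groups of `replicas` nodes each."""
    # Assumption: the node count must divide evenly into node groups.
    if len(data_nodes) % replicas != 0:
        raise ValueError("number of data nodes must be a multiple of the replica count")
    return [data_nodes[i:i + replicas] for i in range(0, len(data_nodes), replicas)]


def cluster_can_serve(groups: List[List[str]], alive: Set[str]) -> bool:
    """Return True if every node group has at least one node that is alive."""
    return all(any(node in alive for node in group) for group in groups)


# Hypothetical node names, used for illustration only.
nodes = ["data-1", "data-2", "data-3", "data-4"]
groups = build_node_groups(nodes)

# One surviving node per group is enough to keep serving CRUD operations.
one_per_group = cluster_can_serve(groups, {"data-1", "data-4"})

# Losing both nodes of the second group leaves part of the data unavailable.
whole_group_lost = cluster_can_serve(groups, {"data-1", "data-2"})
```

With four data nodes, the sketch produces two node groups. Losing one node from each group is tolerated, whereas losing both nodes of the same group is not.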

2.1 cnDBTier Setups

cnDBTier supports two-site, three-site, and four-site georeplication setups. This section provides information and figures to understand the replication setups between cnDBTier sites based on their topology.

Note:

The setups detailed in this section are for reference only. You must choose your cnDBTier setups depending on your requirements and traffic intensity analysis.

Two Site Replication

The following images depict the replication SQL pod connection between two cnDBTier sites with single or multiple replication channel groups. They also depict how replication service pods communicate with each other to set up the replication.

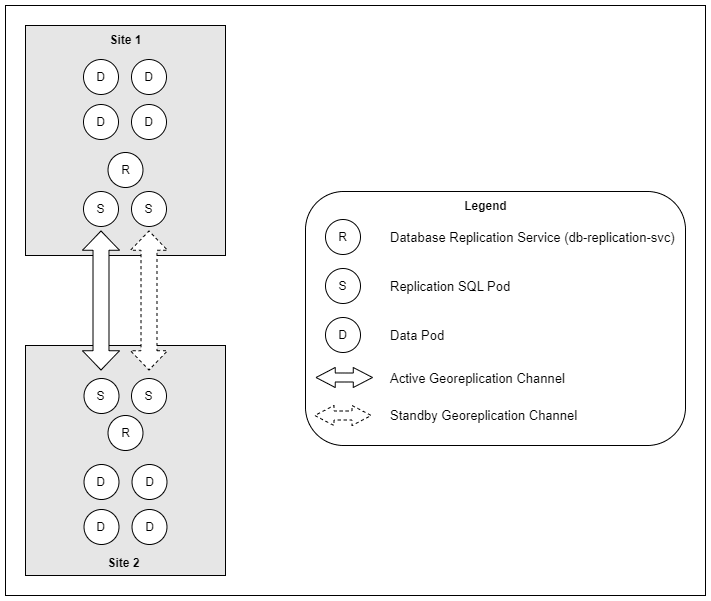

Figure 2-2 Two-Site Setup with Single Replication Channel Group

This sample setup contains two cnDBTier sites (Site 1 and Site 2). Each site has a single replication channel group consisting of a database replication service pod and two replication SQL pods. The replication channel groups on the two sites communicate with each other for replication through a georeplication channel. Each replication channel group consists of one active and one standby channel, where the active channel is responsible for georeplication. When the active replication channel fails, the standby channel takes over as the active channel for replication.

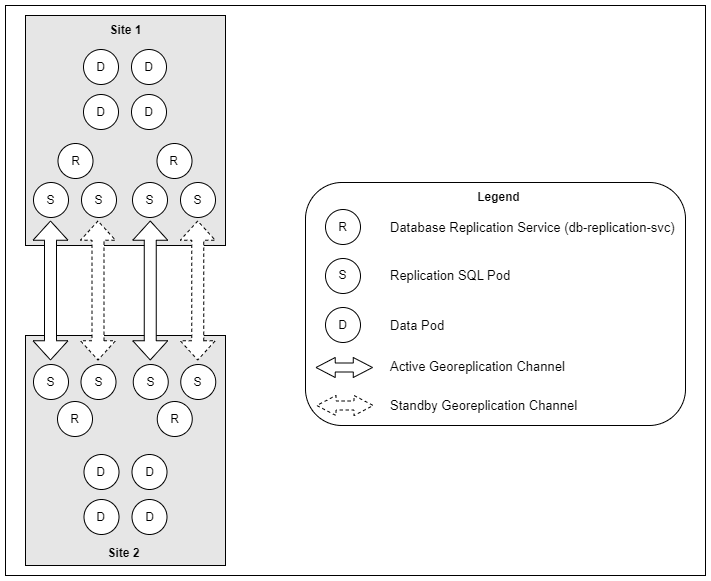

Figure 2-3 Two-Site Setup with Multiple Replication Channel Groups

This sample setup contains two cnDBTier sites (Site 1 and Site 2). Each site has two replication channel groups, and each replication channel group contains a database replication service pod and two replication SQL pods. One replication channel group on one site (Site 1) communicates with one replication channel group on the other site (Site 2) through a georeplication channel. Each replication channel group consists of one active and one standby channel, where the active channel is responsible for georeplication. When the active replication channel fails, the standby channel takes over as the active channel for replication.
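The active and standby behavior of a replication channel group described above can be sketched in Python as follows. This is an illustrative model only, not the cnDBTier implementation: the `Channel` and `ReplicationChannelGroup` classes, the `check` method, and the pod names are all hypothetical, and the sketch assumes that a switchover happens only when the standby channel is healthy.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    """One replication channel, backed by one replication SQL pod."""
    name: str
    healthy: bool = True


class ReplicationChannelGroup:
    """A pair of channels in which only the active one performs georeplication."""

    def __init__(self, active: Channel, standby: Channel) -> None:
        self.active = active
        self.standby = standby

    def check(self) -> str:
        """Swap roles if the active channel has failed; return the active channel name."""
        # Assumption: the standby takes over only if it is itself healthy.
        if not self.active.healthy and self.standby.healthy:
            self.active, self.standby = self.standby, self.active
        return self.active.name


# Hypothetical pod names, used for illustration only.
group = ReplicationChannelGroup(Channel("sql-pod-0"), Channel("sql-pod-1"))

before = group.check()        # the active channel is healthy, so nothing changes
group.active.healthy = False  # simulate a failure of the active channel
after = group.check()         # the standby channel becomes the active channel
```

In this model, the failed channel becomes the standby after the swap, so it can take over again once it recovers and the other channel fails.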

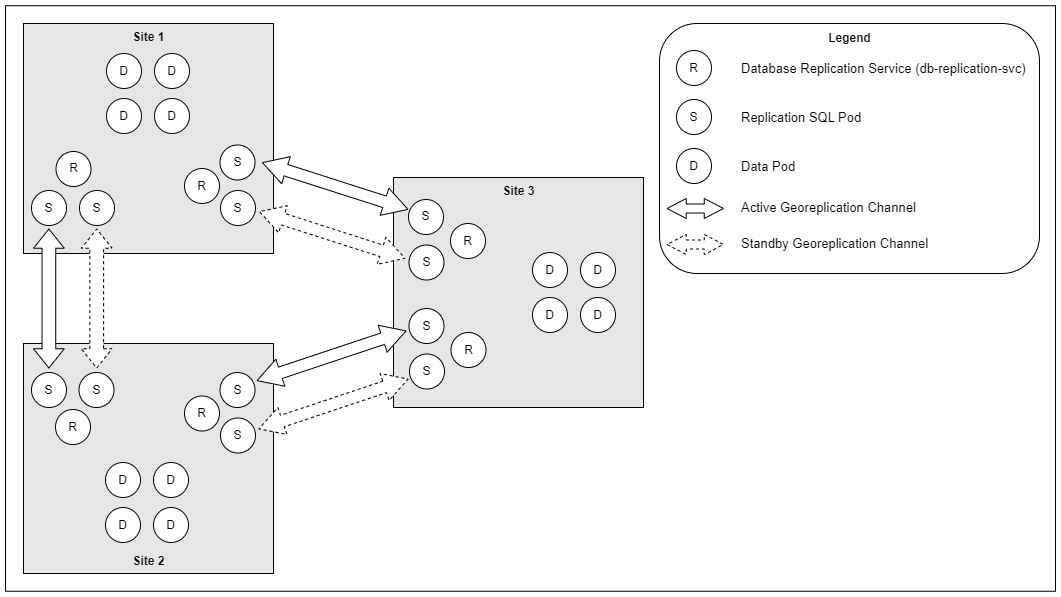

Three Site Replication

The following diagram depicts the replication SQL pod connection between three cnDBTier sites for replication. It also depicts how replication service pods communicate with each other to set up the replication:

Figure 2-4 Three-Site Replication

Note:

You can extend the three-site setup to create a four-site georeplication setup.

2.2 Ingress and Egress Communication Over External IPs

If cnDBTier is deployed with two or more clusters, it requires external Load Balancer IPs to facilitate communication between these clusters. The db-replication-svc pods in one cluster must be able to communicate (Ingress or Egress) with the db-replication-svc pods in all the other clusters to establish and monitor replication channels. Similarly, each cluster's active and standby ndbmysqld pods must communicate (Ingress or Egress) with the active and standby ndbmysqld pods of the other clusters to perform controller-controller replication.

Figure 2-5 Two_Cluster_Ingress_Egress_Routes

Figure 2-6 Three_Cluster_Ingress_Egress_Routes
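To get a feel for how the connectivity requirement in this section grows with the number of clusters, the following Python sketch enumerates the cluster-to-cluster routes that must be reachable. The pod names `db-replication-svc` and `ndbmysqld` come from the text. The cluster names and the `required_routes` helper are hypothetical, and counting each direction (Egress from one cluster, Ingress to the other) as a separate route is an assumption made for illustration.

```python
from itertools import permutations
from typing import List, Tuple

# Pod types that need cross-cluster connectivity, as named in the text.
SERVICES = ("db-replication-svc", "ndbmysqld")


def required_routes(clusters: List[str]) -> List[Tuple[str, str, str]]:
    """List (source cluster, destination cluster, pod type) for every ordered cluster pair."""
    return [
        (src, dst, svc)
        for src, dst in permutations(clusters, 2)  # every ordered pair of distinct clusters
        for svc in SERVICES
    ]


# Hypothetical cluster names, used for illustration only.
two_cluster = required_routes(["cluster1", "cluster2"])
three_cluster = required_routes(["cluster1", "cluster2", "cluster3"])

for src, dst, svc in two_cluster:
    print(f"{svc}: {src} -> {dst}")
```

Under these assumptions, two clusters need 4 directed routes and three clusters need 12, which is why every additional cluster adds to the external Load Balancer IP planning.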