2 Troubleshooting OCNADD

Note:

kubectl commands might vary based on the platform deployment. Replace kubectl with the Kubernetes environment-specific command line tool to configure Kubernetes resources through the kube-api server. The instructions provided in this document are as per the Oracle Communications Cloud Native Environment (OCCNE) version of the kube-api server.

Generic Checklist

The following sections provide generic checklists for troubleshooting OCNADD.

Deployment Checklist

- Run the following command to verify if the OCNADD deployments, pods, and services are running and available:

  # kubectl -n <namespace> get deployments,pods,svc

  Verify the output, and check the following columns:

  - READY, STATUS, and RESTARTS

  - PORT(S) of service

Note:

It is normal to observe the Kafka broker restart during deployment.

- Verify if the correct image is used and the correct environment variables are set in the deployment.

  To check, run the following command:

  # kubectl -n <namespace> get deployment <deployment-name> -o yaml

- Check if the microservices can access each other through a REST interface.

  To check, run the following command:

  # kubectl -n <namespace> exec <pod name> -- curl <uri>

  Example:

  kubectl exec -it pod/ocnaddconfiguration-6ffc75f956-wnvzx -n ocnadd-system -- curl 'http://ocnaddadminservice:9181/ocnadd-admin-svc/v1/topic'

Note:

These commands are in their simple format and return output only if the ocnaddconfiguration and ocnadd-admin-svc pods are deployed.

The list of URIs for all the microservices:

- http://ocnaddconfiguration:<port>/ocnadd-configuration/v1/subscription

- http://ocnaddalarm:<port>/ocnadd-alarm/v1/alarm?&startTime=<start-time>&endTime=<end-time>

  Use the offset date-time format, for example, 2022-07-12T05:37:26.954477600Z

- <ip>:<port>/ocnadd-admin-svc/v1/topic/

- <ip>:<port>/ocnadd-admin-svc/v1/describe/topic/<topicName>

- <ip>:<port>/ocnadd-admin-svc/v1/alter

- <ip>:<port>/ocnadd-admin-svc/v1/broker/expand/entry

- <ip>:<port>/ocnadd-admin-svc/v1/broker/health
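The first check in the deployment checklist (READY, STATUS, and RESTARTS) could be partly automated. The following is a minimal sketch, assuming the default column layout of `kubectl get pods --no-headers` (NAME, READY, STATUS, RESTARTS, AGE). The function name `unhealthy_pods` is hypothetical and is not part of OCNADD.

```shell
# Hypothetical helper (not part of OCNADD): reads the output of
# "kubectl get pods --no-headers" on stdin and prints every pod that is
# not Running or whose READY count (for example 0/1) is incomplete.
unhealthy_pods() {
  awk '{
    if ($3 == "Completed") next          # finished jobs are not a problem
    split($2, ready, "/")                # READY column looks like "1/1"
    if ($3 != "Running" || ready[1] != ready[2])
      print $1, $2, $3, $4               # name, ready, status, restarts
  }'
}

# Usage (assumes kubectl access to the cluster):
#   kubectl -n <namespace> get pods --no-headers | unhealthy_pods
```

An empty result suggests that all pods are Running and fully ready; a Kafka broker with a non-zero restart count alone is not reported, which matches the note above.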

Application Checklist

Run the following command to check the application logs:

# kubectl -n <namespace> logs -f <pod name>

Use the -f option to follow the logs, or use grep to obtain a specific pattern in the log output.

# kubectl -n ocnadd-system logs -f $(kubectl -n ocnadd-system get pods -o name |cut -d'/' -f 2|grep nrfaggregation)

The above command displays the logs of the ocnaddnrfaggregation service.

# kubectl logs -n ocnadd-system <pod name> | grep <pattern>

Note:

These commands are in their simple format and display the logs only if there is at least one nrfaggregation pod deployed.
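When more than one pod matches the name, it may be easier to loop over the pods and search each log in turn. The following is a minimal sketch under that assumption; the function name `logs_matching` is hypothetical, and the namespace, name fragment, and pattern are supplied by the caller.

```shell
# Hypothetical helper (not part of OCNADD): for every pod whose name contains
# a fragment, print the log lines that match a pattern.
# logs_matching <namespace> <pod name fragment> <pattern>
logs_matching() {
  ns=$1
  fragment=$2
  pattern=$3
  kubectl -n "$ns" get pods -o name | grep -- "$fragment" |
    while read -r pod; do
      echo "== $pod"
      # "|| true" keeps the loop going when a pod has no matching lines
      kubectl -n "$ns" logs "$pod" | grep -- "$pattern" || true
    done
}

# Usage (assumes kubectl access to the cluster):
#   logs_matching ocnadd-system nrfaggregation ERROR
```

The pattern `ERROR` in the usage line is only an example; the actual log format depends on the service.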

Helm Install and Upgrade Failure

This section describes the various helm installation or upgrade failure scenarios and the respective troubleshooting procedures:

Incorrect Image Name in ocnadd/values.yaml File

Problem

helm install fails if an incorrect image name is provided in the ocnadd/values.yaml file or if the image is missing in the image repository.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the status of the pods might be ImagePullBackOff or ErrImagePull.

Solution

Perform the following steps to verify and correct the image name:

- Edit the ocnadd/values.yaml file and provide the release-specific image names and tags.

- Run the helm install command.

- Run the kubectl get pods -n <ocnadd_namespace> command to verify if all the pods are in Running state.
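To find out which pods are affected and which image each one requests, the pod list can be filtered by status. The following is a minimal sketch, assuming the default `kubectl get pods --no-headers` column layout; the function name `image_pull_failures` is hypothetical.

```shell
# Hypothetical helper (not part of OCNADD): reads "kubectl get pods
# --no-headers" output on stdin and prints the names of pods whose STATUS
# ends in ImagePullBackOff or ErrImagePull.
image_pull_failures() {
  awk '$3 ~ /(ImagePullBackOff|ErrImagePull)$/ { print $1 }'
}

# Usage (assumes kubectl access); prints the image(s) each failing pod requests:
#   kubectl -n <ocnadd_namespace> get pods --no-headers | image_pull_failures |
#     while read -r pod; do
#       kubectl -n <ocnadd_namespace> get pod "$pod" \
#         -o jsonpath='{.metadata.name}{"\t"}{.spec.containers[*].image}{"\n"}'
#     done
```

The printed image names can then be compared with the names and tags in ocnadd/values.yaml and with the contents of the image repository.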

Docker Registry is Configured Incorrectly

Problem

helm install might fail if the docker registry is not configured on all primary and secondary nodes.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the status of the pods might be ImagePullBackOff or ErrImagePull.

Solution

Configure the docker registry on all primary and secondary nodes. For information about docker registry configuration, see Oracle Communications Cloud Native Environment Installation Guide.

Continuous Restart of Pods

Problem

helm install might fail if MySQL primary or secondary hosts are not configured properly in ocnadd/values.yaml.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the restart count of the pods increases continuously, or there is a Prometheus alert for continuous pod restart.

Solution

- Verify MySQL connectivity.

  MySQL server(s) may not be configured properly. For more information about the MySQL configuration, see Oracle Communications Network Analytics Data Director Installation Guide.

- Describe the pod to check more details on the error, and troubleshoot further based on the reported error.

- Check the pod log for any error, and troubleshoot further based on the reported error.
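The checks above can start from a list of the pods that restart most often. The following is a minimal sketch, assuming the default `kubectl get pods --no-headers` column layout; the function name `restarting_pods` and the default threshold of 5 restarts are hypothetical choices, not OCNADD recommendations.

```shell
# Hypothetical helper (not part of OCNADD): reads "kubectl get pods
# --no-headers" output on stdin and prints pods whose RESTARTS count
# exceeds a threshold (default 5, an arbitrary assumption).
restarting_pods() {
  threshold=${1:-5}
  awk -v t="$threshold" '$4 + 0 > t { print $1, $4 }'
}

# Usage (assumes kubectl access to the cluster):
#   kubectl -n <ocnadd_namespace> get pods --no-headers | restarting_pods 5
# Then inspect each reported pod:
#   kubectl -n <ocnadd_namespace> describe pod <pod name>
#   kubectl -n <ocnadd_namespace> logs --previous <pod name>
```

The `--previous` option shows the log of the last terminated container, which is usually where the error that caused the restart appears.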

Adapter Pods Do Not Receive Update Notification

Problem

Update notifications from the Configuration Service or GUI do not reach the Adapter pods.

Error Code or Error Message

On triggering an update notification from the GUI or Configuration Service, the configuration changes do not take effect on the Adapter.

Solution

- Check the Adapter pod logs with:

  kubectl logs <adapter app name> -n <namespace>

- If the update notification logs are not present in the Adapter logs, run the following command to re-read the configurations:

  kubectl rollout restart deploy <adapter app name> -n <namespace>

Values.yaml File Parse Failure

This section explains the troubleshooting procedure in case of failure while parsing the ocnadd/values.yaml file.

Problem

Unable to parse the ocnadd/values.yaml file or any other file while running helm install.

Error Code or Error Message

Error: failed to parse ocnadd/values.yaml: error converting YAML to JSON: yaml

Symptom

If the above error is received when parsing the ocnadd/values.yaml file, the file could not be parsed for one of the following reasons:

- The tree structure may not have been followed

- There may be a tab character in the file

Solution

Download the latest OCNADD custom templates zip file from MOS. For more information, see Oracle Communications Network Analytics Data Director Installation Guide.
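Before replacing the file, it may help to locate any tab characters in it, because YAML does not allow tabs for indentation. The following is a minimal sketch; the function name `find_tabs` is hypothetical.

```shell
# Hypothetical helper (not part of OCNADD): prints every line of a file that
# contains a tab character, prefixed with its line number.
# Returns non-zero when the file contains no tabs.
find_tabs() {
  tab=$(printf '\t')
  grep -n "$tab" "$1"
}

# Usage:
#   find_tabs ocnadd/values.yaml
```

Each reported line can then be re-indented with spaces so that it follows the tree structure of the surrounding entries.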

Kafka Brokers Continuously Restart after Reinstallation

Problem

When OCNADD is reinstalled in the same namespace without deleting the PVCs that were used for the first installation, the Kafka brokers go into the CrashLoopBackOff status and keep restarting.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the broker pod's status might be Error or CrashLoopBackOff, and it might keep restarting continuously, with "no disk space left on the device" errors in the pod logs.

Solution

- Delete the StatefulSet (STS) deployments of the brokers. Run kubectl get sts -n <ocnadd_namespace> to obtain the StatefulSets in the namespace.

- Delete the STS deployments of the services with the disk full issue. For example, run the command kubectl delete sts -n <ocnadd_namespace> kafka-broker1 kafka-broker2.

- Delete the PVCs in the namespace that are used by the Kafka brokers. Run kubectl get pvc -n <ocnadd_namespace> to get the PVCs in that namespace.

  The number of PVCs used is based on the number of brokers deployed. Therefore, select the PVCs that have kafka-broker or zookeeper in their names, and delete them. To delete the PVCs, run kubectl delete pvc -n <ocnadd_namespace> <pvcname1> <pvcname2>.

  For example:

  For a three broker setup in the namespace ocnadd-deploy, delete the following PVCs:

  kubectl delete pvc -n ocnadd-deploy broker1-pvc-kafka-broker1-0 broker2-pvc-kafka-broker2-0 broker3-pvc-kafka-broker3-0 kafka-broker-security-zookeeper-0 kafka-broker-security-zookeeper-1 kafka-broker-security-zookeeper-2

Kafka Brokers Continuously Restart After the Disk is Full

Problem

This issue occurs when the disk space is full on the broker or zookeeper.

Error Code or Error Message

When you run kubectl get pods -n <ocnadd_namespace>, the broker pod's status might be Error or CrashLoopBackOff, and it might keep restarting continuously.

Solution

- Delete the StatefulSet (STS) deployments of the brokers:

  - Get the STSs in the namespace with the following command:

    kubectl get sts -n <ocnadd_namespace>

  - Delete the STS deployments of the services with the disk full issue:

    kubectl delete sts -n <ocnadd_namespace> <sts1> <sts2>

    For example, for a three broker setup:

    kubectl delete sts -n ocnadd-deploy kafka-broker1 kafka-broker2 kafka-broker3 zookeeper

- Delete the PVCs in that namespace that are used by the removed Kafka brokers:

  - To get the PVCs in that namespace, run:

    kubectl get pvc -n <ocnadd_namespace>

    The number of PVCs used is based on the number of brokers deployed. Choose the PVCs that have kafka-broker or zookeeper in their names and delete them.

  - To delete the PVCs, run:

    kubectl delete pvc -n <ocnadd_namespace> <pvcname1> <pvcname2>

    For example, for a three broker setup in the namespace ocnadd-deploy, delete the following PVCs:

    kubectl delete pvc -n ocnadd-deploy broker1-pvc-kafka-broker1-0 broker2-pvc-kafka-broker2-0 broker3-pvc-kafka-broker3-0 kafka-broker-security-zookeeper-0 kafka-broker-security-zookeeper-1 kafka-broker-security-zookeeper-2

- Once the STS and PVCs are deleted for the services, edit the respective broker's values.yaml to increase the PV size of the brokers at the location:

  <chartpath>/charts/ocnaddkafka/values.yaml

  If any formatting or indentation issues occur while editing, refer to the files in <chartpath>/charts/ocnaddkafka/default

  To increase the storage, edit the pvcClaimSize field for each broker.

- Upgrade the helm chart after increasing the PV size:

  helm upgrade <release-name> <chartpath> -n <namespace>

- Create the required topics.
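The PVC selection in the steps above (names containing kafka-broker or zookeeper) can be sketched as a filter over the PVC list. This is a minimal sketch; the function name `broker_pvcs` is hypothetical, and the list it prints should be reviewed before anything is deleted.

```shell
# Hypothetical helper (not part of OCNADD): reads "kubectl get pvc -o name"
# output on stdin and keeps only the PVCs whose names contain
# kafka-broker or zookeeper.
broker_pvcs() {
  grep -E 'kafka-broker|zookeeper'
}

# Usage (assumes kubectl access to the cluster):
#   kubectl -n <ocnadd_namespace> get pvc -o name | broker_pvcs
# After reviewing the list, the same selection could be passed to delete:
#   kubectl -n <ocnadd_namespace> get pvc -o name | broker_pvcs |
#     xargs kubectl -n <ocnadd_namespace> delete
```

Deleting a PVC removes the data stored on it, so the deletion line is left as a comment on purpose.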

Kafka Brokers Restart on Installation

Problem

Kafka brokers restart during OCNADD installation.

Error Code or Error Message

The output of the command kubectl get pods -n <ocnadd_namespace> displays the broker pod's status as restarted.

Solution

The Kafka brokers wait for a maximum of 3 minutes for the Zookeepers to come online before they are started. If the Zookeeper cluster does not come online within this interval, the broker starts before the Zookeeper and errors out because it does not have access to the Zookeeper. The Zookeeper may start after the 3-minute interval because the node may take more time to pull the images due to network issues. Therefore, this issue may be observed when the Zookeeper does not come online within the given time.

Database Goes into the Deadlock State

Problem

MySQL locks get stuck.

Error Code or Error Message

ERROR 1213 (40001): Deadlock found when trying to get lock; try restarting the transaction.

Symptom

Unable to access MySQL.

Solution

Perform the following steps to remove the deadlock:

- Run the following command on each SQL node:

  SELECT CONCAT('KILL ', id, ';') FROM INFORMATION_SCHEMA.PROCESSLIST WHERE `User` = <DbUsername> AND `db` = <DbName>;

  This command retrieves the list of commands to kill each connection.

  Example:

  select CONCAT('KILL ', id, ';') FROM INFORMATION_SCHEMA.PROCESSLIST where `User` = 'ocnadduser' AND `db` = 'ocnadddb';
  +--------------------------+
  | CONCAT('KILL ', id, ';') |
  +--------------------------+
  | KILL 204491;             |
  | KILL 200332;             |
  | KILL 202845;             |
  +--------------------------+
  3 rows in set (0.00 sec)

- Run the kill command on each SQL node.

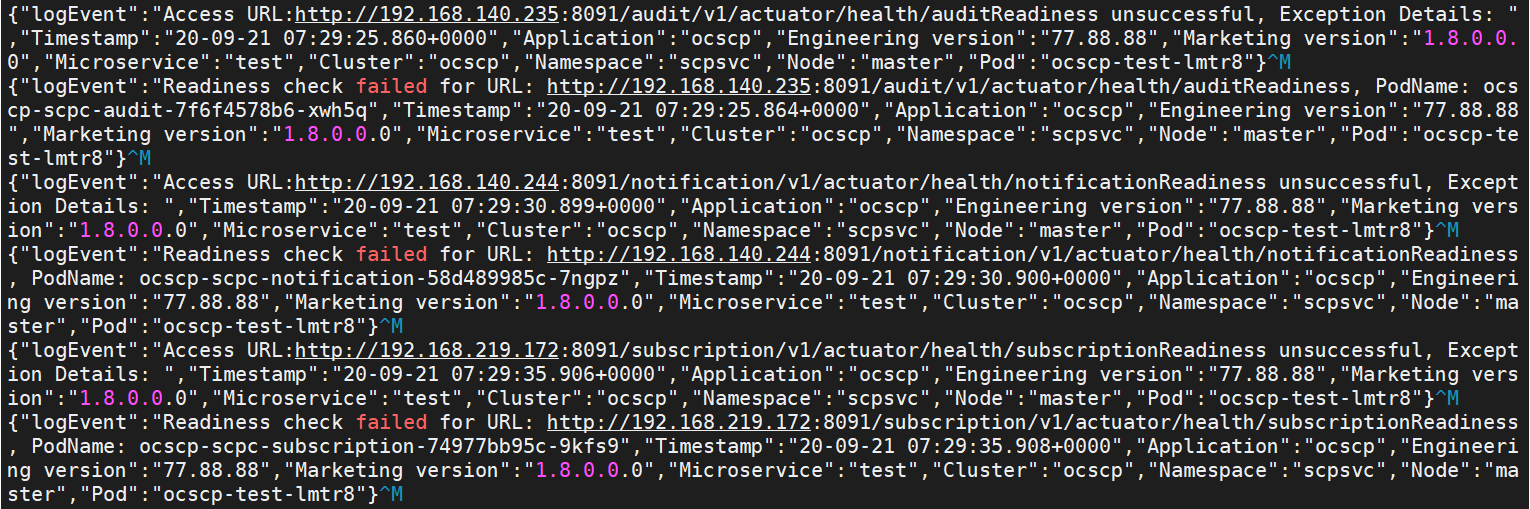

Readiness Probe Failure

The helm install might fail due to the readiness probe URL failure.

If the following error appears, check the correctness of the readiness probe URL in the helm charts of the particular microservice under the charts folder:

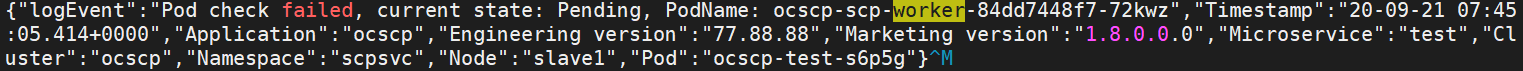

Low Resources

Helm install might fail due to low resources, and the following error may appear:

In this case, check the CPU and memory availability in the Kubernetes cluster.
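One way to check availability is to look at the current node usage. The following is a minimal sketch, assuming that a metrics server is installed so that `kubectl top nodes` works, and assuming its default column layout (NAME, CPU(cores), CPU%, MEMORY(bytes), MEMORY%). The function name `busy_nodes` and the default limit of 80 percent are hypothetical.

```shell
# Hypothetical helper (not part of OCNADD): reads "kubectl top nodes
# --no-headers" output on stdin and prints nodes whose CPU or memory usage
# is at or above a percentage limit (default 80, an arbitrary assumption).
busy_nodes() {
  limit=${1:-80}
  awk -v limit="$limit" '{
    cpu = $3 + 0                         # "97%" converts to 97
    mem = $5 + 0
    if (cpu >= limit || mem >= limit) print $1, $3, $5
  }'
}

# Usage (assumes kubectl access and a metrics server):
#   kubectl top nodes --no-headers | busy_nodes 80
# Requested (rather than used) resources per node are listed by:
#   kubectl describe nodes
```

Scheduling depends on the resources that pods request, so the "Allocated resources" part of the `kubectl describe nodes` output is usually the more relevant figure when pods stay Pending.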