15 OCNADD KPIs, Metrics, and Alerts

This chapter provides information on OCNADD Metrics, KPIs, and Alerts.

15.1 OCNADD Metrics

This section includes information about Dimensions and Common Attributes of metrics for Oracle Communications Network Analytics Data Director (OCNADD).

Dimension Description

Table 15-1 Dimensions

| Dimension | Values | Description |

|---|---|---|

| HttpVersion | HTTP/2.0 | Specifies HTTP protocol version. |

| Method | GET, PUT, POST, DELETE, PATCH | HTTP method. |

| Scheme | HTTP, HTTPS, UNKNOWN | Specifies the HTTP protocol scheme. |

| route_path | NA | Path predicate that matched the current request. |

| status | NA | HTTP response code. |

| quantile | Integer values | Captures the latency values in the following ranges: 10ms, 20ms, 40ms, 80ms, 100ms, 200ms, 500ms, 1000ms, and 5000ms. |

| instance_identifier | Prefix configured in Helm, UNKNOWN | Prefix of the pod configured in Helm when there are multiple instances in the same deployment. |

| 3rd_party_consumer_name or consumer_name | - | Name of the 3rd-party consumer application as configured from the UI. |

| destination_endpoint | IP/FQDN | Destination IP address or FQDN. |

| processor_node_id | - | Stream processor node ID in aggregation service. |

| serviceId | serviceType-N | Identifier of the service instance used for registration with the Health Monitoring service. |

| serviceType | CONSUMER_ADAPTER, CONFIGURATION, ALARM, AGGREGATION-NRF, AGGREGATION-SCP, AGGREGATION-SEPP, AGGREGATION-BSF, AGGREGATION-PCF, AGGREGATION-NON-ORACLE, OCNADD-ADMIN | The OCNADD service type. |

| service | ocnaddnrfaggregation, ocnaddseppaggregation, ocnaddscpaggregation, ocnaddbsfaggregation, etc. | The name of the Data Director microservice. |

| request_type | HTTP2, H2C, TCP, TCP_SECURED | Type of the data feed created using the UI; used to identify whether the feed is for HTTP2 or synthetic packets. |

| destination_endpoint | URI | It is the REST URI for the 3rd-party monitoring application configured on the data feed. |

| nf_feed_type | SCP, NRF, PCF, BSF, SEPP | The source NF for the feed. |

| error_reason | - | The error reason for the failure of the HTTP request sent to the 3rd-party application from the egress adapter. |

| correlation-id | - | Taken from the correlation ID in the metadata list. |

| way | - | Taken from the message direction in the metadata list. |

| srcIP | - | Taken from the source IP in the metadata list, the global L3L4 configuration, or the least-priority address configured in the feed. |

| dstIP | - | Taken from the destination IP in the metadata list, the global L3L4 configuration, or the least-priority address configured in the feed. |

| srcPort | - | Taken from the source port in the metadata list, the global L3L4 configuration, or the least-priority address configured in the feed. |

| dstPort | - | Taken from the destination port in the metadata list, the global L3L4 configuration, or the least-priority address configured in the feed. |

| MD | - | Indicates that the value is taken from the metadata list. It is attached to srcIP, dstIP, srcPort, or dstPort based on the mapping. |

| LP | - | Indicates that the value is taken from the least-priority address configured in the feed. It is attached to srcIP, dstIP, srcPort, or dstPort based on the mapping. |

| L3L4 | - | Indicates that the value is taken from the global L3L4 configuration. It is attached to srcIP, dstIP, srcPort, or dstPort based on the mapping. |

| worker_group | String | Name of the worker group in which the corresponding traffic processing service is running. |
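The srcIP, dstIP, srcPort, and dstPort rows list three possible sources for each value, and the MD, L3L4, and LP tags record which source was used. The following Python sketch illustrates that tagging. It assumes, hypothetically, that the sources are tried in the order listed (metadata list, then global L3L4 configuration, then the least-priority feed address); this table does not state the precedence OCNADD actually applies, and the function name is illustrative only.

```python
from typing import Optional, Tuple

# ASSUMPTION: precedence follows the order in which Table 15-1 lists the sources.
SOURCE_TAGS = ("MD", "L3L4", "LP")


def resolve_address_field(
    metadata: Optional[str],
    l3l4_config: Optional[str],
    least_priority: Optional[str],
) -> Tuple[Optional[str], Optional[str]]:
    """Return (value, tag) for a field such as srcIP or dstPort.

    The tag is MD, L3L4, or LP, depending on which source supplied the value.
    (None, None) is returned when no source has a value.
    """
    candidates = (metadata, l3l4_config, least_priority)
    for tag, value in zip(SOURCE_TAGS, candidates):
        if value:  # skip both None and empty strings
            return value, tag
    return None, None
```

For example, a synthetic packet with no source IP in its metadata list, but with one in the global L3L4 configuration, would be reported with the L3L4 tag under this assumption.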

Table 15-2 Attributes

| Attribute | Description |

|---|---|

| application | The name of the application that the microservice is a part of. |

| microservice | The name of the microservice. |

| namespace | The Kubernetes namespace in which the microservice is running. |

| node | The name of the worker node that the microservice is running on. |

| pod | The name of the Kubernetes POD. |

OCNADD Metrics

The following table lists important metrics related to OCNADD:

Table 15-3 Metrics

| Metric Name | Description | Dimensions |

|---|---|---|

| kafka_stream_processor_node_process_total | The total number of records processed by a source node. The metric is pegged by the aggregation services (SCP, SEPP, BSF, PCF, and NRF) and the egress adapter in the Data Director, and denotes the total number of records consumed from the source topic. Metric Type: Counter. The namespace identifies the worker group for the corresponding Kafka cluster. | |

| kafka_stream_processor_node_process_rate | The average number of records processed per second by a source node. The metric is pegged by the aggregation services (SCP, SEPP, BSF, PCF, and NRF) and the egress adapter in the Data Director, and denotes the records consumed per second from the source topic. Metric Type: Gauge. The namespace identifies the worker group for the corresponding Kafka cluster. | |

| kafka_stream_task_dropped_records_total | The total number of records dropped within the stream processing task. The metric is pegged by the aggregation services (SCP, SEPP, BSF, PCF, and NRF) and the egress adapter in the Data Director. Metric Type: Counter. The namespace identifies the worker group for the corresponding Kafka cluster. | |

| kafka_stream_task_dropped_records_rate | The average number of records dropped per second within the stream processing task. The metric is pegged by the aggregation services (SCP, SEPP, BSF, PCF, and NRF) and the egress adapter in the Data Director. Metric Type: Gauge. The namespace identifies the worker group for the corresponding Kafka cluster. | |

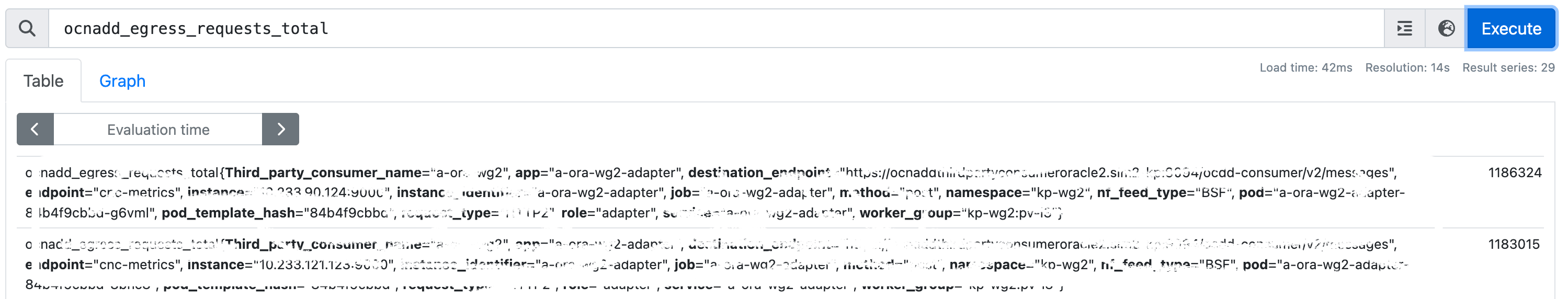

| ocnadd_egress_requests_total | This metric is pegged as soon as a request reaches the OCNADD egress adapter. It counts the total number of requests to be forwarded to the third-party application and is used to calculate the egress MPS at the Data Director. Metric Type: Counter | |

| ocnadd_egress_responses_total | This metric is pegged by the egress adapter service when a response is received at the egress adapter. It counts the total number of responses (success or failed) received from the third-party application. Metric Type: Counter | |

| ocnadd_egress_failed_request_total | This metric counts the total number of requests that failed to be sent to the third-party application. It is pegged by the egress adapter service. Metric Type: Counter | |

| ocnadd_egress_e2e_request_processing_latency_seconds_bucket | The metric is pegged on the egress adapter service. It is the latency between the packet timestamp provided by the producer NF and the time the egress adapter sends the request packet to the third-party application. This latency is calculated for each message. Metric Type: Histogram | |

| ocnadd_egress_e2e_request_processing_latency_seconds_sum | The sum of the end-to-end request processing time for all requests, in seconds. The end-to-end processing time is measured between the packet timestamp provided by the producer NF and the time the egress adapter sends the packet out. Metric Type: Counter | |

| ocnadd_egress_e2e_request_processing_latency_seconds_count | The count of batches of messages for which the end-to-end processing time is summed up. The end-to-end processing time is measured between the packet timestamp provided by the producer NF and the time the egress adapter sends the packet out. Metric Type: Counter | |

| ocnadd_egress_service_request_processing_latency_seconds_bucket | The metric is pegged on the egress adapter service. It is the egress adapter service processing latency and is pegged when a request is sent out from the egress adapter to the third-party application. This latency is calculated for each message. Metric Type: Histogram | |

| ocnadd_egress_service_request_processing_latency_seconds_sum | The metric is pegged on the egress adapter service. It is the sum of the egress adapter service processing time for all requests, in seconds, and is pegged when a request is sent out from the egress adapter to the third-party application. Metric Type: Counter | |

| ocnadd_egress_service_request_processing_latency_seconds_count | The metric is pegged on the egress adapter service. It is the count of requests for which the processing time is summed up and is pegged when a request is sent out from the egress adapter to the third-party application. Metric Type: Counter | |

| ocnadd_adapter_synthetic_packet_generation_count_total | This metric counts the synthetic packets generated, with either success or failed status. Metric Type: Counter | |

| ocnadd_egress_filtered_message_total | This metric counts the messages that match the filter criteria for egress. Metric Type: Counter. Filter criteria have been enhanced to provide a description with action and filter rules. | |

| ocnadd_egress_unmatched_filter_message_total | This metric counts the messages that do not match the filter criteria for egress. Metric Type: Counter. Filter criteria have been enhanced to provide a description with action and filter rules. | |

| ocnadd_ingress_filtered_message_total | This metric counts the messages that match the filter criteria for ingress. Metric Type: Counter. Filter criteria have been enhanced to provide a description with action and filter rules. | |

| ocnadd_ingress_unmatched_filter_message_total | This metric counts the messages that do not match the filter criteria for ingress. Metric Type: Counter. Filter criteria have been enhanced to provide a description with action and filter rules. | |

| ocnadd_health_total_alarm_raised_total | This metric is pegged whenever the Health Monitoring service raises a new alarm to the alarm service. Metric Type: Counter | |

| ocnadd_health_total_alarm_cleared_total | This metric is pegged whenever the Health Monitoring service invokes a clear alarm. Metric Type: Counter | |

| ocnadd_health_total_active_number_of_alarm_raised_total | This metric is pegged whenever the Health Monitoring service raises or clears an alarm on the alarm service. It denotes the active alarms raised by the Health Monitoring service. Metric Type: Counter | |

| ocnadd_l3l4mapping_info_count_total | This metric is pegged to provide information about L3L4 mapping in synthetic messages. It is disabled by default in the Helm chart. Metric Type: Counter | |

| ocnadd_ext_kafka_feed_record_total | This metric is pegged by the admin service to provide the total number of messages consumed by the external Kafka consumer application. The admin service periodically retrieves the consumer offsets from all the partitions of the aggregated topic and pegs the metric. Metric Type: Counter | |

| ocnadd_data_export_failure_records_count | The metric is pegged by the export service to provide the total number of records or messages that were not exported successfully. Metric Type: Counter | |

| ocnadd_xdr_database_records_sent | The metric is pegged by the storage adapter service to provide the total number of xDRs sent to the XDR database. The xDRs are pegged for the correlation service corresponding to the worker group. Metric Type: Counter | |

| ocnadd_ingress_request_total | This metric is pegged as soon as a request reaches the OCNADD ingress adapter. It counts the total number of requests received by the OCNADD ingress adapter from non-Oracle NFs and is used to calculate the ingress MPS at OCNADD for non-Oracle NFs. Metric Type: Counter | |

| ocnadd_ingress_message_processed_total | This metric is pegged as soon as a request is processed at the OCNADD ingress adapter. It counts the total number of requests that were processed successfully, failed, or discarded by the OCNADD ingress adapter. Metric Type: Counter | |

| ocnadd_ingress_service_request_processing_latency_seconds_sum | The metric is pegged on the ingress adapter service. It is the sum of the ingress adapter service processing latency, in seconds, and is pegged when a request is completely processed at the ingress adapter. It is pegged for each message. Metric Type: Counter | |

| ocnadd_ingress_service_request_processing_latency_seconds_count | The metric is pegged on the ingress adapter service. It is the cumulative count of the messages processed at the ingress adapter service instance. Metric Type: Counter | |
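The ocnadd_ext_kafka_feed_record_total description says that the admin service periodically reads the consumer offsets from every partition of the aggregated topic and pegs a counter. The following Python sketch shows one way such a counter could be derived from offset snapshots. It assumes that committed offsets per partition approximate the consumed record count and that a drop in the total (for example, after a consumer group reset) adds nothing. Both assumptions and the function names are hypothetical and do not describe the admin service's actual code.

```python
from typing import Dict


def consumed_records(committed_offsets: Dict[int, int]) -> int:
    """Sum the committed consumer offsets across all partitions (partition -> offset)."""
    return sum(committed_offsets.values())


def counter_increment(previous_total: int, current_total: int) -> int:
    """Amount by which to increase the counter on this polling cycle.

    A Prometheus counter must never decrease, so if the offset total goes
    backwards (ASSUMPTION: treated as a reset), this cycle adds nothing.
    """
    return max(current_total - previous_total, 0)
```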

15.2 OCNADD KPIs

Note:

Update the "namespace" in the KPI queries to the namespace used in the current Data Director deployment.

Ensure that the queries are tailored per worker group wherever applicable, such as for KPIs related to ingress and egress MPS, failure/success rate, and packet drop. Use the "worker_group" label to filter by worker group name in the KPI queries.

For queries, adhere to PromQL syntax for CNE-based deployments and MQL syntax for OCI-based deployments.
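The note above asks you to replace the namespace and filter by worker group before running the KPI queries. The following Python sketch shows that substitution for the CNE PromQL query in Table 15-7 and builds an instant-query URL for the Prometheus HTTP API (/api/v1/query). The worker_group matcher, the $WORKER_GROUP placeholder, the Prometheus base URL, and the namespace values are illustrative assumptions.

```python
from string import Template
from urllib.parse import urlencode

# Table 15-7 CNE query with a worker_group matcher added, as the note recommends.
# $WORKER_GROUP is a hypothetical placeholder; $NAMESPACE matches the KPI tables.
INGRESS_MPS_10M = Template(
    'sum(rate(kafka_stream_processor_node_process_total{'
    'namespace="$NAMESPACE",worker_group="$WORKER_GROUP",'
    'service=~".*aggregation.*"}[10m]))'
)


def render_kpi(template: Template, namespace: str, worker_group: str) -> str:
    """Fill in deployment-specific labels; raises KeyError if a placeholder is left unset."""
    return template.substitute(NAMESPACE=namespace, WORKER_GROUP=worker_group)


def instant_query_url(prometheus_base: str, promql: str) -> str:
    """Build a Prometheus HTTP API instant-query URL (GET /api/v1/query)."""
    return f"{prometheus_base.rstrip('/')}/api/v1/query?{urlencode({'query': promql})}"
```

Template.substitute raises an error for a missing placeholder, so a query with an unreplaced placeholder is never sent by mistake.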

The following KPIs are added in OCNADD 25.1.200.

Table 15-4 ocnadd_ingress_record_count_by_service

| KPI Detail | Measures the total ingress records in Kafka source topics per aggregation service at the current time |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (service)(kafka_stream_processor_node_process_total{namespace="$NAMESPACE", service=~".*aggregation.*"}) |

| Metric Used for the KPI (OCI) | MQL: kafka_stream_processor_node_process_total[10m]{microservice=~"*aggregation*", k8Namespace="$NAMESPACE"}.groupby(microservice).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-5 ocnadd_ingress_record_count_total

| KPI Detail | Measures the total ingress records in Kafka source topics at the current time |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum (kafka_stream_processor_node_process_total{namespace="$NAMESPACE", service=~".*aggregation.*"}) |

| Metric Used for the KPI (OCI) | MQL: kafka_stream_processor_node_process_total[10m]{microservice=~"*aggregation*", k8Namespace="$NAMESPACE"}.sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-6 ocnadd_ingress_mps_per_service_10mAgg

| KPI Detail | Measures the ingress MPS per service aggregated over 10min |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (service)(rate(kafka_stream_processor_node_process_total{namespace="$NAMESPACE",service=~".*aggregation.*"}[10m])) |

| Metric Used for the KPI (OCI) | MQL: kafka_stream_processor_node_process_total[10m]{microservice=~"*aggregation*"}.rate().groupby(k8Namespace,microservice).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-7 ocnadd_ingress_mps_10mAgg

| KPI Detail | Measures the ingress MPS aggregated over 10min |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(rate(kafka_stream_processor_node_process_total{namespace="$NAMESPACE",service=~".*aggregation.*"}[10m])) |

| Metric Used for the KPI (OCI) | MQL: kafka_stream_processor_node_process_total[10m]{microservice=~"*aggregation*", k8Namespace="$NAMESPACE"}.rate().grouping().sum() |

| Service Operation | NA |

| Response Code | NA |
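The 10-minute aggregation KPIs apply rate() or increase() to counters such as kafka_stream_processor_node_process_total. The following Python sketch is a simplified version of how a per-second rate is derived from the counter samples in a window. It also handles a counter reset, such as a pod restart. It is not Prometheus's implementation: rate() also extrapolates to the window boundaries, and this sketch omits that step.

```python
from typing import List, Tuple


def simple_rate(samples: List[Tuple[float, float]]) -> float:
    """Per-second increase of a counter from (unix_timestamp, value) samples.

    Simplified: detects counter resets as Prometheus does, but does not
    extrapolate to the edges of the query window.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    increase = 0.0
    for (_, prev), (_, cur) in zip(samples, samples[1:]):
        # A lower value than before means the counter restarted from zero,
        # so the whole post-reset value counts as new increase.
        increase += (cur - prev) if cur >= prev else cur
    elapsed = samples[-1][0] - samples[0][0]
    return increase / elapsed
```

In this simplified model, increase() over the same window is rate() multiplied by the window length in seconds, which is how Table 15-9 relates to Table 15-8.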

Table 15-8 ocnadd_ingress_mps_per_service_10mAgg_last_24h

| KPI Detail | Measures the ingress MPS per service aggregated over 10min for the last 24 hours |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (service)(rate(kafka_stream_processor_node_process_total{namespace="$NAMESPACE",service=~".*aggregation.*"}[10m]))[24h:5m] |

| Metric Used for the KPI (OCI) | MQL: No valid MQL equivalent is available |

| Service Operation | NA |

| Response Code | NA |

Table 15-9 ocnadd_ingress_record_count_per_service_10mAgg_last_24h

| KPI Detail | Measures the ingress messages per service aggregated over 10min for the last 24 hours |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (service)(increase(kafka_stream_processor_node_process_total{namespace="$NAMESPACE",service=~".*aggregation.*"}[10m]))[24h:5m] |

| Metric Used for the KPI (OCI) | MQL: No valid MQL equivalent is available |

| Service Operation | NA |

| Response Code | NA |

Table 15-10 ocnadd_kafka_ingress_record_drop_rate_10minAgg

| KPI Detail | Measures the total ingress message drop rate aggregated over 10min |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(rate(kafka_stream_task_dropped_records_total{namespace="$NAMESPACE",service=~".*aggregation.*"}[10m])) |

| Metric Used for the KPI (OCI) | MQL: kafka_stream_task_dropped_records_total[10m]{microservice=~"*aggregation"}.rate().grouping().sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-11 ocnadd_kafka_ingress_record_drop_rate_per_service_10minAgg

| KPI Detail | Measures the total ingress message drop rate per service aggregated over 10min |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(rate(kafka_stream_task_dropped_records_total{namespace="$NAMESPACE",service=~".*aggregation.*"}[10m])) by (service,pod) |

| Metric Used for the KPI (OCI) | MQL: kafka_stream_task_dropped_records_total[10m]{microservice=~"*aggregation"}.rate().groupby(nodeName, microservice).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-12 ocnadd_egress_request_count_total_by_3rdparty_destination_endpoint

| KPI Detail | Total egress requests per third-party application per destination endpoint |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (instance_identifier,destination_endpoint)(ocnadd_egress_requests_total{namespace="$NAMESPACE"}) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_requests_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier,destination_endpoint).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-13 ocnadd_egress_response_count_total_by_3rdparty_destination_endpoint

| KPI Detail | Total egress responses per third-party application per destination endpoint |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (instance_identifier,destination_endpoint)(ocnadd_egress_responses_total{namespace="$NAMESPACE"}) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_responses_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier,destination_endpoint).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-14 ocnadd_egress_failure_count_total_by_3rdparty_destination_endpoint

| KPI Detail | Total egress failure count per third-party application per destination endpoint |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (instance_identifier,destination_endpoint)(ocnadd_egress_failed_request_total{namespace="$NAMESPACE"}) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_failed_request_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier,destination_endpoint).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-15 ocnadd_egress_request_rate_by_3rdparty_10mAgg

| KPI Detail | Total egress request rate per third-party application in 10min Aggregation |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (instance_identifier)(rate(ocnadd_egress_requests_total{namespace="$NAMESPACE"}[10m])) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_requests_total[10m]{app=~"*adapter*"}.rate().groupby(worker_group,app).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-16 ocnadd_egress_failure_rate_by_3rdparty_10mAgg

| KPI Detail | Total egress failure rate per third-party application in 10min Aggregation |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (instance_identifier)(irate(ocnadd_egress_failed_request_total{namespace="$NAMESPACE"}[10m])) / sum by (instance_identifier) (irate(ocnadd_egress_requests_total{namespace="$NAMESPACE"}[10m])) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_failed_request_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier).sum() / ocnadd_egress_requests_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-17 ocnadd_egress_failure_rate_by_3rdparty_per_destination_endpoint_10mAgg

| KPI Detail | Total egress failure rate per third-party application per destination endpoint in 10min Aggregation |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum by (instance_identifier, destination_endpoint)(irate(ocnadd_egress_failed_request_total{namespace="$NAMESPACE"}[10m])) / sum by (instance_identifier, destination_endpoint) (irate(ocnadd_egress_requests_total{namespace="$NAMESPACE"}[10m])) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_failed_request_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier,destination_endpoint).sum() / ocnadd_egress_requests_total[10m]{namespace="$NAMESPACE"}.groupby(instance_identifier,destination_endpoint).sum() |

| Service Operation | NA |

| Response Code | NA |
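Tables 15-16 and 15-17 divide failed requests by total requests for each third-party application (and for each destination endpoint). The following Python sketch performs the same division on counter increases grouped by instance_identifier. It reports None instead of dividing by zero when an application sent no requests in the window. The instance_identifier values in the usage example are hypothetical.

```python
from typing import Dict, Optional


def failure_ratio(
    failed_delta: Dict[str, float],
    request_delta: Dict[str, float],
) -> Dict[str, Optional[float]]:
    """Failed requests divided by total requests, keyed by instance_identifier."""
    ratios: Dict[str, Optional[float]] = {}
    for instance, requests in request_delta.items():
        failed = failed_delta.get(instance, 0.0)
        # No requests in the window: the ratio is undefined, so report None.
        ratios[instance] = failed / requests if requests > 0 else None
    return ratios
```

For example, 5 failures out of 100 requests for an application labeled "tp1" gives a ratio of 0.05 (5 percent).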

Table 15-18 ocnadd_e2e_avg_latency_by_3rdparty

| KPI Detail | Total e2e average latency per third-party application in 10min Aggregation |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: (sum (irate(ocnadd_egress_e2e_request_processing_latency_seconds_sum{namespace="$NAMESPACE"}[10m])) by (instance_identifier) / (sum (irate(ocnadd_egress_e2e_request_processing_latency_seconds_count{namespace="$NAMESPACE"}[10m])) by (instance_identifier))) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_e2e_request_processing_latency_seconds_sum[10m]{app=~"*adapter*"}.rate().groupby(app, worker_group).sum() / ocnadd_egress_e2e_request_processing_latency_seconds_count[10m]{app=~"*adapter*"}.rate().groupby(app, worker_group).sum() |

| Service Operation | NA |

| Response Code | NA |

Table 15-19 ocnadd_e2e_avg_latency_by_3rdparty_per_adapter_pod

| KPI Detail | Total e2e average latency per third-party application per egress adapter POD in 10min aggregation |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: (sum (irate(ocnadd_egress_e2e_request_processing_latency_seconds_sum{namespace="$NAMESPACE"}[10m])) by (instance_identifier,pod) / (sum (irate(ocnadd_egress_e2e_request_processing_latency_seconds_count{namespace="$NAMESPACE"}[10m])) by (instance_identifier,pod))) |

| Metric Used for the KPI (OCI) | MQL: (ocnadd_egress_e2e_request_processing_latency_seconds_sum[10m]{app=~"*adapter*"}.rate().groupBy(worker_group,app).sum() / ocnadd_egress_e2e_request_processing_latency_seconds_count[10m]{app=~"*adapter*"}.rate().groupBy(worker_group,app).sum()) |

| Service Operation | NA |

| Response Code | NA |

Table 15-20 ocnadd_egress_adapter_processing_avg_latency_by_3rdparty_per_adapter_pod

| KPI Detail | Total service processing average latency per third-party application per adapter POD in 10min aggregation |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: (sum (irate(ocnadd_egress_service_request_processing_latency_seconds_sum{namespace="$NAMESPACE"}[10m])) by (instance_identifier,pod) / (sum (irate(ocnadd_egress_service_request_processing_latency_seconds_count{namespace="$NAMESPACE"}[10m])) by (instance_identifier,pod))) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_egress_service_request_processing_latency_seconds_sum[10m]{app=~"*adapter*"}.rate().groupby(app, worker_group).sum() / ocnadd_egress_service_request_processing_latency_seconds_count[10m]{app=~"*adapter*"}.rate().groupby(app, worker_group).sum() |

| Service Operation | NA |

| Response Code | NA |
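The average-latency KPIs in Tables 15-18 to 15-20 divide the growth of a latency _sum counter by the growth of the matching _count counter over the same window. The following Python sketch shows that arithmetic on two readings of each counter. The sample numbers in the usage example are made up.

```python
def average_latency_seconds(
    sum_start: float, sum_end: float, count_start: float, count_end: float
) -> float:
    """Mean latency over a window, from readings of the _sum and _count counters."""
    delta_count = count_end - count_start
    if delta_count <= 0:
        raise ValueError("no messages were observed in the window")
    # Total seconds spent in the window divided by the number of observations.
    return (sum_end - sum_start) / delta_count
```

For example, if the _sum counter grows from 100.0 s to 130.0 s while _count grows from 1000 to 1600, the average latency is 30 / 600 = 0.05 s (50 ms).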

Table 15-21 ocnadd_egress_e2e_avg_latency_buckets

| KPI Detail | The latency buckets for the feed in a worker group namespace |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(rate(ocnadd_egress_e2e_request_processing_latency_seconds_bucket{app=~".*adapter.*"}[10m])) by (le,namespace,service) |

| Metric Used for the KPI (OCI) | MQL: (ocnadd_egress_e2e_request_processing_latency_seconds_bucket[10m]{app=~"*adapter*"}.rate().groupby(k8Namespace,app,le).sum()) |

| Service Operation | NA |

| Response Code | NA |
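The bucket series returned by the Table 15-21 query are usually turned into percentiles with the PromQL histogram_quantile() function, for example histogram_quantile(0.95, <Table 15-21 expression>). The following Python sketch reproduces the basic estimation rule of that function on cumulative bucket counts: find the bucket that contains the requested rank, then interpolate linearly inside it. The example bucket bounds assume the 10ms to 5000ms ranges listed for the quantile dimension, expressed in seconds. Check the le values that the egress adapter actually exposes.

```python
import math
from typing import List, Tuple


def estimate_quantile(q: float, buckets: List[Tuple[float, float]]) -> float:
    """Estimate quantile q (0 < q <= 1) from cumulative (le_upper_bound, count) buckets.

    Buckets must be sorted by upper bound and end with +Inf, as in a
    Prometheus histogram. The lowest bucket is assumed to start at 0.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    if not buckets or not math.isinf(buckets[-1][0]):
        raise ValueError("the last bucket must have le=+Inf")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0.0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                # Rank falls in the +Inf bucket: return the highest finite bound.
                return lower_bound
            # Linear interpolation within the bucket that contains the rank.
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return lower_bound
```

Because of the interpolation, the result is only as precise as the bucket layout. A p95 that falls in the 0.2 s to 0.5 s bucket can be anywhere in that range in reality.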

Table 15-22 ocnadd_ext_kafka_feed_record_total per external feed rate(MPS)

| KPI Detail | The rate of messages consumed per sec per external Kafka consumer, calculated over a period of 5min |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(rate(ocnadd_ext_kafka_feed_record_total{namespace="$Namespace"}[5m])) by (feed_name) |

| Metric Used for the KPI (OCI) | MQL: ocnadd_ext_kafka_feed_record_total[10m].rate().groupby(k8Namespace,feed_name).sum() |

| Service Operation | NA |

| Response Code | NA |

This KPI should be used in the context of both the management group and the worker group. The namespaces may differ for the management group and the worker group if there is no default worker group.

Table 15-23 Memory Usage per POD

| KPI Detail | Measures the memory usage per POD |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(container_memory_working_set_bytes{namespace=~"$Namespace",image!=""}/(1024*1024*1024)) by (pod) |

| Metric Used for the KPI (OCI) | MQL: (container_memory_working_set_bytes[10m]{container=~"*ocnadd*|*zookeeper*|*kafka*|*adapter*|corr*"}.groupby(namespace,pod).mean())/1000000 |

| Service Operation | NA |

| Response Code | NA |

This KPI should be used in the context of both the management group and the worker group. The namespaces may differ for the management group and the worker group if there is no default worker group.

Table 15-24 CPU Usage per POD

| KPI Detail | Measures the CPU usage per POD |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: sum(rate(container_cpu_usage_seconds_total{namespace=~"$Namespace",image!=""}[2m])) by (pod) * 1000 |

| Metric Used for the KPI (OCI) | MQL: container_cpu_usage_seconds_total[10m]{pod=~"*ocnadd*|*kafka*|*zookeeper*|*adapter*|*corr*|*export*"}.rate().groupby(namespace,pod).sum() |

| Service Operation | NA |

| Response Code | NA |
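The memory and CPU KPIs in Tables 15-23 and 15-24 apply unit conversions in the queries themselves. The CNE memory query divides bytes by 1024*1024*1024 (GiB), while the OCI memory query divides by 1000000 (decimal MB), so the two results are not directly comparable. The CNE CPU query multiplies a rate in CPU-seconds per second (cores) by 1000 (millicores). The following Python sketch reproduces those conversions. The function names are illustrative only.

```python
GIB = 1024 ** 3  # divisor used by the CNE memory query


def bytes_to_gib(working_set_bytes: float) -> float:
    """CNE memory KPI unit: bytes / (1024*1024*1024)."""
    return working_set_bytes / GIB


def bytes_to_mb(working_set_bytes: float) -> float:
    """OCI memory KPI unit: bytes / 1000000 (decimal megabytes)."""
    return working_set_bytes / 1_000_000


def cores_to_millicores(cpu_seconds_per_second: float) -> float:
    """CNE CPU KPI unit: rate(container_cpu_usage_seconds_total) * 1000."""
    return cpu_seconds_per_second * 1000
```

For example, a pod with a 2 GiB working set shows as 2.0 in the CNE query but as about 2147.48 in the OCI query.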

Table 15-25 Service Status

| KPI Detail | Provides the status of each Data Director service running in the specified namespace |

|---|---|

| Metric Used for the KPI (CNE) | PromQL: up{namespace="$NAMESPACE"} |

| Metric Used for the KPI (OCI) | MQL: podStatus[10m]{podOwner=~"*adapter*|*ocnadd*|*kafka*|*zookeeper*|corr*|*export*"}.groupby(clusterNamespace,podName).mean() |

| Service Operation | NA |

| Response Code | NA |

15.3 OCNADD Alerts

This section provides information on Oracle Communications Network Analytics Data Director (OCNADD) alerts and their configuration.

Alerts Interpretation

The following table maps alert severities between CNE and OCI deployments.

Table 15-26 Alerts Interpretation

| CNE | OCI |

|---|---|

| Critical | Critical |

| Major | Error |

| Minor | Error |

| Warning | Warning |

| Info | Info |
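Table 15-26 is a simple lookup. This matters when alerts from both infrastructures are consolidated, because the major and minor severities both collapse to Error on OCI. The following Python sketch encodes the table as a dictionary with a case-insensitive lookup. The severity strings are taken from the table. The helper function is illustrative only.

```python
from typing import Dict

# Severity mapping from Table 15-26 (CNE -> OCI).
CNE_TO_OCI: Dict[str, str] = {
    "Critical": "Critical",
    "Major": "Error",
    "Minor": "Error",
    "Warning": "Warning",
    "Info": "Info",
}


def oci_severity(cne_severity: str) -> str:
    """Return the OCI severity for a CNE severity, ignoring case and surrounding spaces."""
    wanted = cne_severity.strip().lower()
    for cne, oci in CNE_TO_OCI.items():
        if cne.lower() == wanted:
            return oci
    raise KeyError(f"unknown CNE severity: {cne_severity!r}")
```

Because the mapping is many-to-one, an OCI severity of Error cannot be mapped back to a unique CNE severity.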

Note:

Alert OIDs are deprecated for OCI deployments.

15.3.1 OCNADD Alert Configuration

This section describes how to configure alerts in OCNADD.

OCNADD on OCCNE

Note:

The label used to update the Prometheus server is "role: cnc-alerting-rules", which is added by default in the Helm charts.

OCNADD on OCI

Alerts on OCI are made available by the OCI Alarm service. The monitoring service on OCI fetches metrics from OCNADD services, and the Alarm service triggers alarms when the defined threshold is breached. Metrics on OCI are fetched using MQL, and MQL queries are used in the Alarm template on OCI. Alarms can be created using the OCI GUI. OCNADD provides a Terraform script to create supported alarms on OCI:

- Extract the Terraform script provided in the OCNADD package under

<release-name>/custom-templates/oci/terraform. - Follow these steps:

- Log in to the OCI console.

- Click Hamburger menu and select Developer Services.

- Under Developer Services, select Resource Manager.

- Under Resource Manager, select Stacks.

- Click Create stack button.

- Select the default My Configuration radio button.

- Under Stack configuration, click on the folder

radio button and upload the Terraform package

<release-name>/custom-templates/oci/terraform. - Enter the Name and Description and select the compartment.

- Click Next.

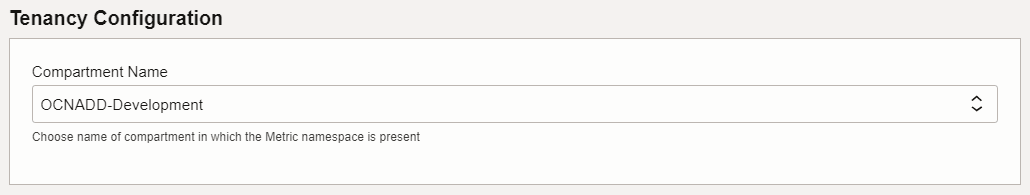

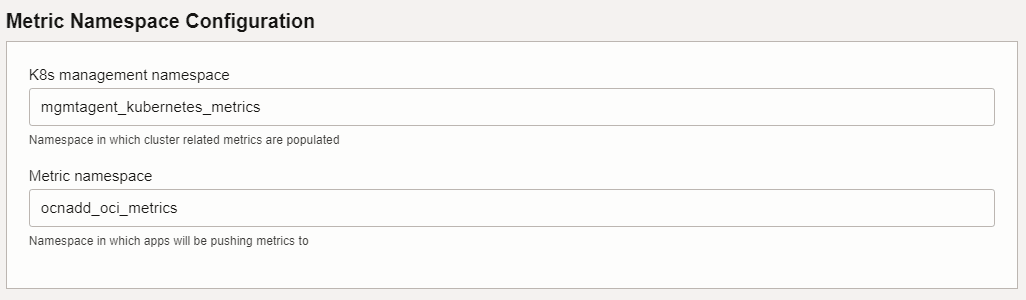

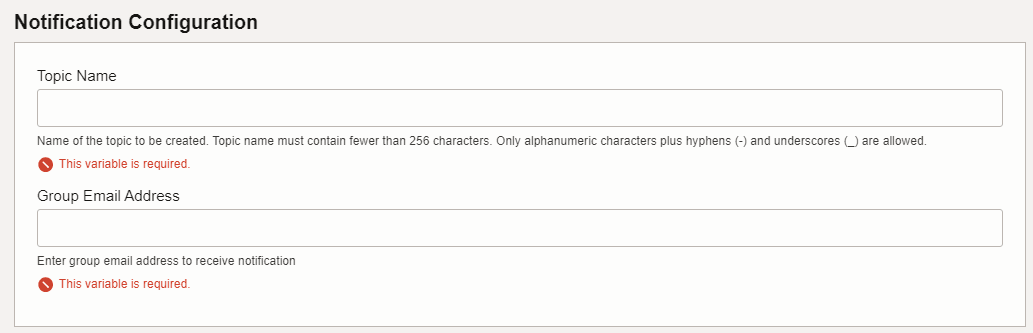

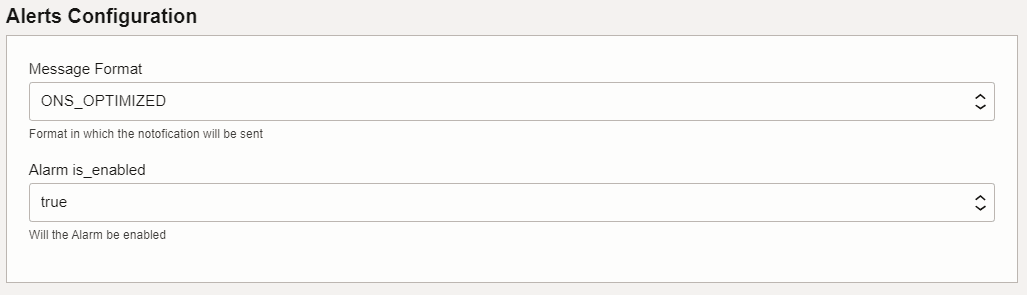

- Provide appropriate values for the parameters requested in the Terraform

script as shown in the following screenshots:

OCNADD supports alarm subscription through email on OCI, and here are some important points for configuring alarms:

- Alarm Categories in OCI: Alarms in OCI are categorized into critical, error, warning, and info. The error category is not used in Prometheus alert rules, and the major and minor severities used in Prometheus are not available in OCI. Therefore, alarms with severity major or minor in Prometheus are converted to error in OCI. For more information, see OCI Alert Template.

- Notification and Topic Setup: During the execution of the Terraform script, notifications and topics for the alerts will be automatically created.

- User Modification/Deletion: If users need to create new alarms or modify and delete the alarms added through Terraform, they can perform these actions by editing the corresponding alarm definitions through the OCI Console.

- OCI Notification Reference: For more information on OCI Notification, see OCI Notification.

OCNADD Configuration When Prometheus is Deployed Without Operator

This section covers the steps to follow when Prometheus is deployed without Operator support (occne-nf-cnc-servicemonitor service), in order to receive all metrics on the OCNADD UI.

- Changes in Custom Values of Management Group:

        PROMETHEUS_API: http://<prometheus-service-name>.<prometheus-namespace>.svc.<cluster-domain>:80  # Replace the placeholders with correct information.
        # Example: PROMETHEUS_API: http://occne-kube-prom-stack-kube-prometheus.occne-infra.ocnadd:80
        DD_PROMETHEUS_PATH: /prometheus/api/v1/query_range  # Replace the default DD_PROMETHEUS_PATH with this

- Add Prometheus Annotations in All Deployments and StatefulSets:

Steps to Update Annotations in All Deployments and StatefulSets:

- Run:

        kubectl edit deployment <deployment-name> -n <namespace>

  For StatefulSets, run kubectl edit statefulset <statefulset-name> -n <namespace> instead. Add the Prometheus annotations as shown below to the respective deployments:

  Edit Deployment Example for Adapter

  Before:

        ...
        template:
          metadata:
            creationTimestamp: null
            labels:
              app: app-1-adapter
              role: adapter
        ...

  After Adding Annotations:

        ...
        template:
          metadata:
            annotations:
              # Add these Prometheus annotations to charts
              prometheus.io/path: /actuator/prometheus
              prometheus.io/port: "9000"
              prometheus.io/scrape: "true"
            creationTimestamp: null
        ...

- Edit the chart files at the specified locations (paths mentioned in the table below) to include the same Prometheus annotations, so that the changes persist during upgrades.

- Verification of Changes:

- Run the following to verify annotations are

applied:

kubectl describe deployments.apps -n <namespace> app-1-adapter | grep "prometheus"Expected Output:Annotations: prometheus.io/path: /actuator/prometheus prometheus.io/port: 9000 prometheus.io/scrape: true - Verify metrics availability in Prometheus.

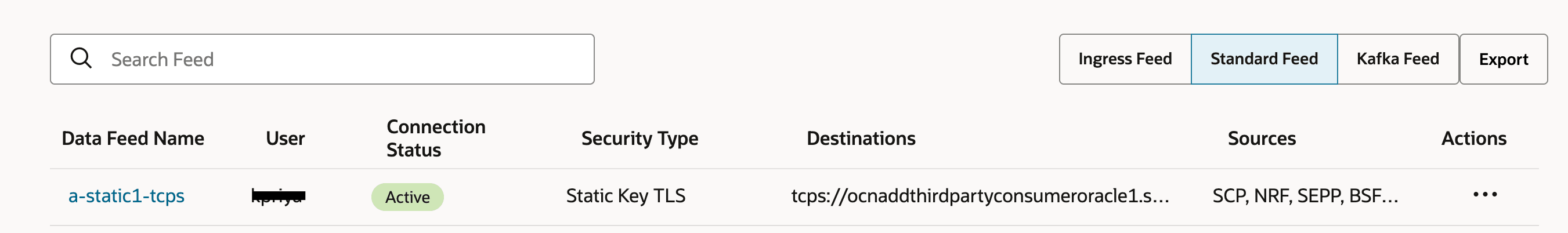

- Confirm "ACTIVE" status of feeds on the DD UI when traffic is successfully

flowing.

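The PROMETHEUS_API and DD_PROMETHEUS_PATH values set in the Management Group custom values together point the Data Director at the Prometheus range-query endpoint. The following Python sketch assumes the two values are simply concatenated. It builds the service URL in the <prometheus-service-name>.<prometheus-namespace>.svc.<cluster-domain> form and appends the standard Prometheus query_range parameters (query, start, end, step). The service, namespace, and cluster domain values in the usage example are hypothetical.

```python
from urllib.parse import urlencode


def prometheus_service_url(
    service: str, namespace: str, cluster_domain: str, port: int = 80
) -> str:
    """PROMETHEUS_API in the <service>.<namespace>.svc.<cluster-domain>:<port> form."""
    return f"http://{service}.{namespace}.svc.{cluster_domain}:{port}"


def query_range_url(
    prometheus_api: str, dd_prometheus_path: str,
    query: str, start: int, end: int, step: str,
) -> str:
    """ASSUMPTION: the Data Director appends DD_PROMETHEUS_PATH to PROMETHEUS_API."""
    params = urlencode({"query": query, "start": start, "end": end, "step": step})
    return f"{prometheus_api.rstrip('/')}{dd_prometheus_path}?{params}"
```

Opening the resulting URL (for example, with curl from a pod in the cluster) is a quick way to check that the configured values reach Prometheus before you look for metrics on the OCNADD UI.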

Chart paths for adding annotations manually:

| Services | Path |

|---|---|

| kafka | ocnadd/charts/ocnaddkafka/templates/ocnaddkafkaBroker.yaml |

| zookeeper | ocnadd/charts/ocnaddkafka/templates/ocnadd-zookeeper.yaml |

| admin svc | ocnadd/charts/ocnaddadminsvc/templates/ocnaddadminservice.yaml |

| correlation svc | ocnadd/charts/ocnaddadminsvc/templates/correlation-deploy.yaml |

| storage adapter | ocnadd/charts/ocnaddadminsvc/templates/ocnaddstorageadapter-deploy.yaml |

| consumer adapter | ocnadd/charts/ocnaddadminsvc/templates/ocnaddingressadapter-deploy.yaml |

| alarm svc | ocnadd/charts/ocnaddalarm/templates/ocnadd-alarm.yaml |

| configuration svc | ocnadd/charts/ocnaddconfiguration/templates/ocnadd-configuration.yaml |

| healthmonitoring svc | ocnadd/charts/ocnaddhealthmonitoring/templates/ocnadd-health.yaml |

| aggregation svc | ocnadd/charts/ocnaddaggregation/templates/ocnadd-<NF>aggregation.yaml (NF - scp,sepp,pcf,nrf,bsf) |

| export svc | ocnadd/charts/ocnaddexport/templates/ocnadd-export.yaml |
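To confirm that the chart templates in the table above carry the annotations before an upgrade, a quick text search is often enough. The Python sketch below is a hypothetical helper, not part of the OCNADD tooling: it expands the <NF> placeholder, reads each template that exists under an assumed chart root directory (the directory that contains ocnadd/), and reports which annotation keys are missing. A plain text search cannot confirm that the lines sit under spec.template.metadata, so treat it as a sanity check only.

```python
import re
from pathlib import Path

# Chart templates from the table above; <NF> is expanded for each listed NF.
CHART_TEMPLATES = [
    "ocnadd/charts/ocnaddkafka/templates/ocnaddkafkaBroker.yaml",
    "ocnadd/charts/ocnaddkafka/templates/ocnadd-zookeeper.yaml",
    "ocnadd/charts/ocnaddadminsvc/templates/ocnaddadminservice.yaml",
    "ocnadd/charts/ocnaddadminsvc/templates/correlation-deploy.yaml",
    "ocnadd/charts/ocnaddadminsvc/templates/ocnaddstorageadapter-deploy.yaml",
    "ocnadd/charts/ocnaddadminsvc/templates/ocnaddingressadapter-deploy.yaml",
    "ocnadd/charts/ocnaddalarm/templates/ocnadd-alarm.yaml",
    "ocnadd/charts/ocnaddconfiguration/templates/ocnadd-configuration.yaml",
    "ocnadd/charts/ocnaddhealthmonitoring/templates/ocnadd-health.yaml",
    "ocnadd/charts/ocnaddexport/templates/ocnadd-export.yaml",
] + [
    f"ocnadd/charts/ocnaddaggregation/templates/ocnadd-{nf}aggregation.yaml"
    for nf in ("scp", "sepp", "pcf", "nrf", "bsf")
]

# Key and value as they appear in the chart YAML example (port and scrape are quoted).
REQUIRED_LINES = {
    "prometheus.io/path": "/actuator/prometheus",
    "prometheus.io/port": '"9000"',
    "prometheus.io/scrape": '"true"',
}


def missing_annotations(template_text: str) -> list:
    """Return the annotation keys whose 'key: value' line is absent from the text."""
    return [
        key
        for key, value in REQUIRED_LINES.items()
        if not re.search(rf"{re.escape(key)}:\s*{re.escape(value)}", template_text)
    ]


def audit_charts(chart_root: str) -> dict:
    """Map each existing template under chart_root to its missing annotation keys."""
    report = {}
    for rel_path in CHART_TEMPLATES:
        path = Path(chart_root) / rel_path
        if path.is_file():
            report[rel_path] = missing_annotations(path.read_text())
    return report
```

An empty list for every path in audit_charts(".") suggests that all templates were edited; templates that do not exist in a given release are skipped rather than reported.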

15.3.2 List of Alerts

This section provides detailed information about the alert rules defined for OCNADD.

15.3.2.1 System Level Alerts

This section lists the system level alerts for OCNADD.

Table 15-27 OCNADD_POD_CPU_USAGE_ALERT

| Field | Details |

|---|---|

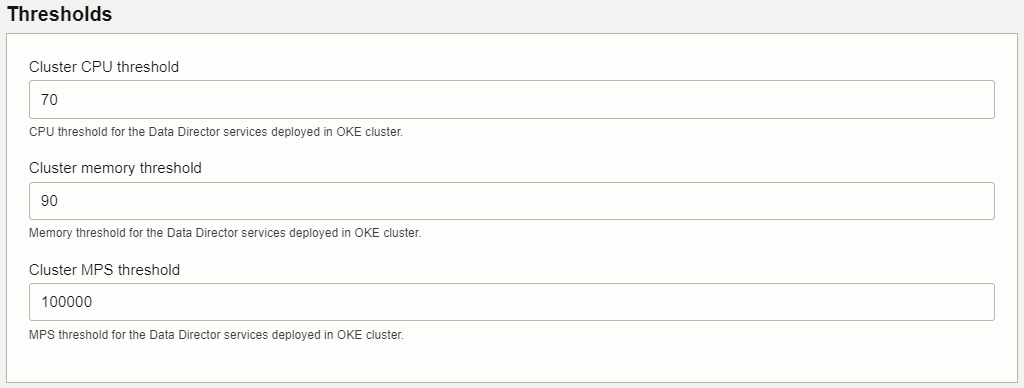

| Triggering Condition | POD CPU usage is above the set threshold (default 85%) |

| Severity | Major |

| Description | OCNADD Pod High CPU usage detected for a continuous period of 5 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: CPU usage is {{ "{{" }} $value | printf "%.2f" }} which is above threshold {{ .Values.global.cluster.cpu_threshold }} % ' PromQL Expression: expr: (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*aggregation.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*2) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*kafka.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*6) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*kraft.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*adapter.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*3) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*correlation.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*filter.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*configuration.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*admin.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*health.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*alarm.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*ui.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*1) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*export.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*4) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*storageadapter.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*3) or (sum(rate(container_cpu_usage_seconds_total{image!="" , pod=~".*ingressadapter.*"}[5m])) by (pod,namespace) > {{ .Values.global.cluster.cpu_threshold }}*3) |

| Alert Details OCI | Summary: Alarm "OCNADD_POD_CPU_USAGE_ALERT" is in a "X" state; because n metrics meet the trigger rule: "pod_cpu_usage_seconds_total[10m]{pod=~"*alarm*|*admin*|*health*|*config*|*kraft*"}.rate().groupby(namespace,pod).sum()*100>=85||pod_cpu_usage_seconds_total[10m]{pod=~"*ui*|*aggregation*|*filter*"}.rate().groupby(namespace,pod).sum()*100>=2*85||pod_cpu_usage_seconds_total[10m]{pod=~"*corr*"}.rate().groupby(namespace,pod).sum()*100>=3*85||pod_cpu_usage_seconds_total[10m]{pod=~"*kafka*"}.rate().groupby(namespace,pod).sum()*100>=6*85|| pod_cpu_usage_seconds_total[10m]{pod=~"*export*"}.rate().groupby(namespace,pod).sum()*100>=4*85|| pod_cpu_usage_seconds_total[10m]{pod=~"*storageadapter*"}.rate().groupby(namespace,pod).sum()*100>=3*85|| pod_cpu_usage_seconds_total[10m]{pod=~"*ingressadapter*"}.rate().groupby(namespace,pod).sum()*100>=3*85", with a trigger delay of 1 minute, where X = FIRING/OK and n = the number of services that violated the rule. MQL Expression: pod_cpu_usage_seconds_total[10m]{pod=~"*alarm*|*admin*|*health*|*config*|*kraft*"}.rate().groupby(namespace,pod).sum()*100>={{ CPU Threshold }}||pod_cpu_usage_seconds_total[10m]{pod=~"*ui*|*aggregation*|*filter*"}.rate().groupby(namespace,pod).sum()*100>=2*{{ CPU Threshold }}||pod_cpu_usage_seconds_total[10m]{pod=~"corr*"}.rate().groupby(namespace,pod).sum()*100>=3*{{ CPU Threshold }}||pod_cpu_usage_seconds_total[10m]{pod=~"*kafka*"}.rate().groupby(namespace,pod).sum()*100>=6*{{ CPU Threshold }} || pod_cpu_usage_seconds_total[10m]{pod=~"*export*"}.rate().groupby(namespace,pod).sum()*100>=4*{{ CPU Threshold }} || pod_cpu_usage_seconds_total[10m]{pod=~"*storageadapter*"}.rate().groupby(namespace,pod).sum()*100>=3*{{ CPU Threshold }} || pod_cpu_usage_seconds_total[10m]{pod=~"*ingressadapter*"}.rate().groupby(namespace,pod).sum()*100>=3*{{ CPU Threshold }} Note: The CPU Threshold is assigned while executing the Terraform script. |

| OID | 1.3.6.1.4.1.323.5.3.53.1.29.4002 |

| Metric Used | container_cpu_usage_seconds_total Note: This is a Kubernetes metric used for pod CPU usage monitoring. If the metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared when the CPU utilization falls below the critical threshold. Note: The threshold is configurable in the |
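Because the CNE expression joins one branch per pod-name pattern with or, a pod alerts as soon as it exceeds the smallest limit among the branches its name matches. The following Python sketch reproduces that lookup for a given pod name so the effective limit can be checked by hand. The factors are copied from the CNE PromQL expression above; the function name and the pod names used in the usage note are hypothetical.

```python
import re

# (pod regex, factor) pairs copied from the CNE PromQL expression above.
# Each branch compares a pod's summed CPU rate with cpu_threshold * factor.
CPU_FACTORS = [
    (".*aggregation.*", 2),
    (".*kafka.*", 6),
    (".*kraft.*", 1),
    (".*adapter.*", 3),
    (".*correlation.*", 1),
    (".*filter.*", 1),
    (".*configuration.*", 1),
    (".*admin.*", 1),
    (".*health.*", 1),
    (".*alarm.*", 1),
    (".*ui.*", 1),
    (".*export.*", 4),
    (".*storageadapter.*", 3),
    (".*ingressadapter.*", 3),
]


def cpu_alert_limit(pod: str, cpu_threshold: float = 85):
    """Return the lowest right-hand-side value any branch applies to this pod, or None.

    PromQL =~ matchers are fully anchored, so re.fullmatch mirrors them. Since the
    branches are joined with `or`, the smallest matching limit is the one that fires first.
    """
    limits = [
        cpu_threshold * factor
        for pattern, factor in CPU_FACTORS
        if re.fullmatch(pattern, pod)
    ]
    return min(limits) if limits else None
```

For example, cpu_alert_limit("ocnaddkafka-0") gives 510 (85 × 6) and cpu_alert_limit("ocnaddscpaggregation-abc") gives 170. Note that the OCI MQL expression uses different factors for some services (ui and filter at 2×, corr at 3×), so the same pod can alert at different levels on CNE and OCI.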

Table 15-28 OCNADD_POD_MEMORY_USAGE_ALERT

| Field | Details |

|---|---|

| Triggering Condition | POD Memory usage is above the set threshold (default 90%) |

| Severity | Major |

| Description | OCNADD Pod High Memory usage detected for a continuous period of 5 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Memory usage is {{ "{{" }} $value | printf "%.2f" }} which is above threshold {{ .Values.global.cluster.memory_threshold }} % ' PromQL Expression: expr: (sum(container_memory_working_set_bytes{image!="" , pod=~".*aggregation.*"}) by (pod,namespace) > 3*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*kafka.*"}) by (pod,namespace) > 64*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*kraft.*"}) by (pod,namespace) > 1*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*filter.*"}) by (pod,namespace) > 3*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*adapter.*"}) by (pod,namespace) > 4*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*correlation.*"}) by (pod,namespace) > 4*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*configuration.*"}) by (pod,namespace) > 4*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*admin.*"}) by (pod,namespace) > 2*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*health.*"}) by (pod,namespace) > 2*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*alarm.*"}) by (pod,namespace) > 2*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*ui.*"}) by (pod,namespace) > 2*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*export.*"}) by (pod,namespace) > 64*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*storageadapter.*"}) by (pod,namespace) > 64*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) or (sum(container_memory_working_set_bytes{image!="" , pod=~".*ingressadapter.*"}) by (pod,namespace) > 8*1024*1024*1024*{{ .Values.global.cluster.memory_threshold }}/100) |

| Alert Details OCI | Summary: Alarm "OCNADD_POD_MEMORY_USAGE_ALERT" is in a "X" state; because n metrics meet the trigger rule: "container_memory_usage_bytes[5m]{pod=~"*adapter*|*kafka*|*kraft*|*ocnadd*|*corr*|*export*|*storageadapter*|*ingressadapter*"}.groupby(namespace,pod).sum()/container_spec_memory_limit_bytes[5m]{pod=~"*adapter*|*kafka*|*kraft*|*ocnadd*|*corr*|*export*|*storageadapter*|*ingressadapter*"}.groupby(namespace,pod).sum()*100>=90", with a trigger delay of 1 minute, where X = FIRING/OK and n = the number of services that violated the rule. MQL Expression: container_memory_usage_bytes[10m]{pod=~"*adapter*|*kafka*|*kraft*|*ocnadd*|corr*|*export*|*storageadapter*|*ingressadapter*"}.groupby(namespace,pod).sum()/container_spec_memory_limit_bytes[10m]{pod=~"*adapter*|*kafka*|*kraft*|*ocnadd*|corr*|*export*|*storageadapter*|*ingressadapter*"}.groupby(namespace,pod).sum()*100>={{ Memory Threshold }} |

| OID | 1.3.6.1.4.1.323.5.3.53.1.29.4005 |

| Metric Used | container_memory_working_set_bytes Note: This is a Kubernetes metric used for pod memory usage monitoring. If the metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared when the memory utilization falls below the critical threshold. Note: The threshold is configurable in the |
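The CNE memory expression compares each pod's working set with GiB × 1024³ × memory_threshold / 100 bytes, and, as with the CPU alert, the branches are joined with or. The Python sketch below, with a hypothetical function name and hypothetical pod names, returns the smallest limit (in GiB) that applies to a pod name. The GiB values are copied from the CNE PromQL expression above.

```python
import re

# (pod regex, GiB base) pairs copied from the CNE memory expression above.
MEMORY_BASE_GIB = [
    (".*aggregation.*", 3),
    (".*kafka.*", 64),
    (".*kraft.*", 1),
    (".*filter.*", 3),
    (".*adapter.*", 4),
    (".*correlation.*", 4),
    (".*configuration.*", 4),
    (".*admin.*", 2),
    (".*health.*", 2),
    (".*alarm.*", 2),
    (".*ui.*", 2),
    (".*export.*", 64),
    (".*storageadapter.*", 64),
    (".*ingressadapter.*", 8),
]


def memory_alert_limit_gib(pod: str, memory_threshold: float = 90):
    """Return the smallest working-set size (GiB) at which any branch fires for this pod.

    Multiply the result by 1024**3 to get the byte value used in the PromQL comparison.
    """
    limits = [
        gib * memory_threshold / 100
        for pattern, gib in MEMORY_BASE_GIB
        if re.fullmatch(pattern, pod)
    ]
    return min(limits) if limits else None
```

As the expression is written, a pod whose name contains storageadapter or ingressadapter also matches .*adapter.*, so memory_alert_limit_gib("ocnaddstorageadapter-0") returns 3.6 (4 GiB × 90%) rather than the 57.6 GiB implied by the 64 GiB branch.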

Table 15-29 OCNADD_POD_RESTARTED

| Field | Details |

|---|---|

| Triggering Condition | A POD has restarted |

| Severity | Minor |

| Description | A POD has restarted in the last 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: A Pod has restarted' PromQL Expression: expr: kube_pod_container_status_restarts_total{namespace="{{ .Values.global.cluster.nameSpace.name }}"} > 1 |

| Alert Details OCI | MQL Expression: No MQL equivalent is available. |

| OID | 1.3.6.1.4.1.323.5.3.53.1.29.5006 |

| Metric Used | kube_pod_container_status_restarts_total Note: This is a Kubernetes metric used for instance availability monitoring. If the metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the affected pod is up. Steps: 1. Check the application logs for database-related failures such as connectivity, Kubernetes secrets, and so on. 2. Run the following command to list the pods and their status: kubectl get po -n <namespace> Note the full name of the pod that is not running, and use it in the following command to check the orchestration events for liveness or readiness probe failures: kubectl describe pod <desired full pod name> -n <namespace> 3. Check the database status. For more information, see "Oracle Communications Cloud Native Core DBTier User Guide". 4. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support if guidance is required. |
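When many pods are listed, the pods that need attention in step 2 can be picked out programmatically. The following Python sketch is a hypothetical helper that parses the default kubectl get po table (NAME, READY, STATUS, RESTARTS, AGE) and flags pods that are not Running, not fully ready, or restarted more often than a limit. The default limit of 1 mirrors the > 1 comparison in the CNE expression above; the column layout is assumed to be kubectl's default output.

```python
def pods_needing_attention(get_po_output: str, restart_limit: int = 1) -> list:
    """Return names of pods that are not Running, not fully ready, or restarted too often.

    restart_limit=1 mirrors the `> 1` comparison in the CNE PromQL expression.
    """
    flagged = []
    for line in get_po_output.strip().splitlines()[1:]:  # skip the NAME/READY/... header
        parts = line.split()
        if len(parts) < 4:
            continue
        # Newer kubectl prints RESTARTS as "2 (5m ago)"; the count is still the 4th field.
        name, ready, status, restarts = parts[0], parts[1], parts[2], int(parts[3])
        ready_now, ready_total = (int(n) for n in ready.split("/"))
        if status != "Running" or ready_now != ready_total or restarts > restart_limit:
            flagged.append(name)
    return flagged
```

Each flagged name can then be used in the kubectl describe pod <desired full pod name> -n <namespace> command from step 2. Pods in a Completed state, such as finished jobs, are also flagged by this sketch.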

15.3.2.2 Application Level Alerts

This section lists the application level alerts for OCNADD.

Table 15-30 OCNADD_CONFIG_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The configuration service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Configuration service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddconfiguration service is down' PromQL Expression: expr: up{service="ocnaddconfiguration"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_CONFIG_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddconfiguration"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddconfiguration"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.20.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Configuration service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
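The CNE expression for this alert can also be evaluated on demand through the Prometheus HTTP API instant-query endpoint (/api/v1/query), for example while working through the resolution steps. The Python sketch below builds the query URL and extracts the down targets from a JSON response. The Prometheus base URL is a placeholder, the function names are hypothetical, and the namespace and pod labels are present on up series only if the scrape configuration attaches them (otherwise None is returned for them).

```python
import json
import urllib.parse


def up_query_url(prometheus_base_url: str, service: str) -> str:
    """Build an instant-query URL for the CNE expression up{service="<service>"} != 1."""
    query = f'up{{service="{service}"}} != 1'
    base = prometheus_base_url.rstrip("/")
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": query})


def down_targets(response_body: str) -> list:
    """Return (namespace, pod) for each series in the query response.

    Because `!= 1` filters the vector, every returned series is a target that is down.
    """
    payload = json.loads(response_body)
    if payload.get("status") != "success":
        raise RuntimeError(f"Prometheus query failed: {payload.get('error')}")
    return [
        (series["metric"].get("namespace"), series["metric"].get("pod"))
        for series in payload["data"]["result"]
    ]
```

The same two functions apply to the other *_SVC_DOWN alerts in this section by passing a different service name, for example ocnaddalarm or ocnaddscpaggregation. An empty result means no scraped target of that service is down.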

Table 15-31 OCNADD_ALARM_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The alarm service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Alarm service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddalarm service is down' PromQL Expression: expr: up{service="ocnaddalarm"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_ALARM_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddalarm"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddalarm"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.24.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Alarm service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-32 OCNADD_HEALTH_MONITORING_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The health monitoring service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Health monitoring service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddhealthmonitoring service is down' PromQL Expression: expr: up{service="ocnaddhealthmonitoring"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_HEALTH_MONITORING_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddhealthmonitoring"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddhealthmonitoring"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.28.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Health monitoring service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-33 OCNADD_SCP_AGGREGATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The SCP Aggregation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD SCP Aggregation service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddscpaggregation service is down' PromQL Expression: expr: up{service="ocnaddscpaggregation"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_SCP_AGGREGATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddscpaggregation"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddscpaggregation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.22.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD SCP Aggregation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-34 OCNADD_NRF_AGGREGATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The NRF Aggregation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD NRF Aggregation service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddnrfaggregation service is down' PromQL Expression: expr: up{service="ocnaddnrfaggregation"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_NRF_AGGREGATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddnrfaggregation"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddnrfaggregation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.31.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD NRF Aggregation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-35 OCNADD_SEPP_AGGREGATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The SEPP Aggregation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD SEPP Aggregation service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddseppaggregation service is down' PromQL Expression: expr: up{service="ocnaddseppaggregation"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_SEPP_AGGREGATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddseppaggregation"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddseppaggregation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.32.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD SEPP Aggregation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-36 OCNADD_BSF_AGGREGATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The BSF Aggregation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD BSF Aggregation service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddbsfaggregation service is down' PromQL Expression: expr: up{service="ocnaddbsfaggregation"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_BSF_AGGREGATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddbsfaggregation"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddbsfaggregation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.40.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD BSF Aggregation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-37 OCNADD_PCF_AGGREGATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The PCF Aggregation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD PCF Aggregation service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddpcfaggregation service is down' PromQL Expression: expr: up{service="ocnaddpcfaggregation"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_PCF_AGGREGATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddpcfaggregation"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddpcfaggregation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.41.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD PCF Aggregation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support if guidance is required. |

Table 15-38 OCNADD_NON_ORACLE_AGGREGATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The Non Oracle Aggregation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Non Oracle Aggregation service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddnonoracleaggregation service is down' PromQL Expression: expr: up{service="ocnaddnonoracleaggregation"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_NON_ORACLE_AGGREGATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddnonoracleaggregation"}.mean()!=1", with a trigger delay of 1 minute. MQL Expression: podStatus[10m]{podOwner="ocnaddnonoracleaggregation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.37.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Non Oracle Aggregation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
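The aggregation-service alerts in Tables 15-33 to 15-38 differ only in the NF name and the service label, so their CNE rules can be generated from one mapping. The following Python sketch emits a Prometheus rule-group document as JSON (JSON is a subset of YAML 1.2). The group name is hypothetical, and the for: 2m duration is an assumption derived from the "more than 2 min" descriptions, since the expressions shown in the tables do not include a for clause.

```python
import json

# Alert-name fragment -> `service` label, as listed in Tables 15-33 to 15-38.
AGGREGATION_SERVICES = {
    "SCP": "ocnaddscpaggregation",
    "NRF": "ocnaddnrfaggregation",
    "SEPP": "ocnaddseppaggregation",
    "BSF": "ocnaddbsfaggregation",
    "PCF": "ocnaddpcfaggregation",
    "NON_ORACLE": "ocnaddnonoracleaggregation",
}


def aggregation_down_rules(for_duration: str = "2m") -> dict:
    """Build a Prometheus rule group with one *_AGGREGATION_SVC_DOWN alert per service."""
    rules = [
        {
            "alert": f"OCNADD_{nf}_AGGREGATION_SVC_DOWN",
            "expr": f'up{{service="{service}"}} != 1',
            "for": for_duration,  # assumption: mirrors "not available for more than 2 min"
            "labels": {"severity": "critical"},
        }
        for nf, service in AGGREGATION_SERVICES.items()
    ]
    # Hypothetical group name; the shipped OCNADD rule files may use a different one.
    return {"groups": [{"name": "ocnadd-aggregation-svc-down", "rules": rules}]}


if __name__ == "__main__":
    print(json.dumps(aggregation_down_rules(), indent=2))
```

Generating the rules from a single mapping keeps the alert names and service labels in step when an NF is added, but the rule files delivered with the OCNADD charts remain the reference.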

Table 15-39 OCNADD_ADMIN_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Admin service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Admin service is not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: ocnaddadminservice service is down' PromQL Expression: expr: up{service="ocnaddadminservice"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_ADMIN_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddadminservice"}.mean()!=1". MQL Expression: podStatus[10m]{podOwner="ocnaddadminservice"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.30.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric as exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Admin service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is “Running”: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: 3. Refer to the application logs and check for database-related failures such as connectivity, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |

Table 15-40 OCNADD_CONSUMER_ADAPTER_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Consumer Adapter service went down or not accessible |

| Severity | Critical |

| Description | OCNADD Consumer Adapter service not available for more than 2 min |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Consumer Adapter service is down' PromQL Expression: expr: up{service=~".*adapter.*", role="adapter"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_CONSUMER_ADAPTER_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner=~"adapter*"}.mean()!=1", with a trigger delay of 1 minute MQL Expression: podStatus[10m]{podOwner=~"adapter*"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.25.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Consumer Adapter service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is in the “Running” state: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: kubectl -n <namespace> get events 3. Refer to the application logs and check for database-related failures such as connectivity issues, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
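The CNE expressions select targets with PromQL label matchers. Prometheus treats =~ regex matchers as fully anchored. That is why the Consumer Adapter expression wraps adapter in .* on both sides. The service regex alone would also select the storage-adapter and ingress-adapter services from Tables 15-44 and 15-45, and the role="adapter" matcher narrows the selection further. The Python sketch below approximates that matching over an in-memory list of series. Prometheus uses RE2 syntax, which agrees with Python's re for simple patterns like these. The label values in the test are hypothetical.

```python
import re
from typing import Dict, List, Tuple

# Label matchers from the Consumer Adapter CNE expression:
#   up{service=~".*adapter.*", role="adapter"} != 1
Matchers = Dict[str, Tuple[str, str]]
CONSUMER_ADAPTER: Matchers = {"service": ("=~", ".*adapter.*"), "role": ("=", "adapter")}


def matches(labels: Dict[str, str], matchers: Matchers = CONSUMER_ADAPTER) -> bool:
    """Approximate PromQL label matching: '=' is exact, '=~' is a fully anchored regex."""
    for name, (op, value) in matchers.items():
        actual = labels.get(name, "")  # an absent label is matched as an empty string
        if op == "=" and actual != value:
            return False
        if op == "=~" and re.fullmatch(value, actual) is None:
            return False
    return True


def firing(series: List[dict], matchers: Matchers = CONSUMER_ADAPTER) -> List[dict]:
    """Return series that match the selector and whose 'up' value is not 1."""
    return [s for s in series if matches(s["labels"], matchers) and s["value"] != 1]
```

Because of the anchoring, a matcher such as service=~"adapter" selects only a service named exactly adapter. It does not select consumer-adapter.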

Table 15-41 OCNADD_FILTER_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Filter service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Filter service is not available for more than 2 minutes |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Filter service is down' PromQL Expression: expr: up{service=~".*filter.*"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_FILTER_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ocnaddfilter"}.mean()!=1", with a trigger delay of 1 minute MQL Expression: podStatus[10m]{podOwner="ocnaddfilter"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.34.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Filter service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is in the “Running” state: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: kubectl -n <namespace> get events 3. Refer to the application logs and check for database-related failures such as connectivity issues, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
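On OCI, these alarms evaluate podStatus[10m]{...}.mean()!=1 instead of the Prometheus up metric. As a rough model, assume that podStatus reports 1 while a pod is up and 0 otherwise; this encoding is an assumption and is not stated in these tables. Under that model, a single 0 sample inside the 10-minute interval pulls the mean below 1. The alarm can therefore remain FIRING for up to one interval after the pod recovers. The Python sketch below treats the interval as a trailing window and ignores the 1-minute trigger delay. OCI Monitoring performs the real per-interval aggregation, so treat this only as an illustration of the mean()!=1 condition.

```python
from statistics import mean
from typing import List, Tuple

INTERVAL_SECONDS = 10 * 60  # the [10m] interval in podStatus[10m]{...}


def alarm_state(samples: List[Tuple[float, float]], now: float) -> str:
    """Approximate podStatus[10m]{...}.mean()!=1 over (timestamp, value) samples.

    Assumes podStatus is 1 while the pod is up and 0 otherwise. Uses a trailing
    window ending at `now` and ignores the alarm's trigger delay.
    """
    window = [value for ts, value in samples if now - INTERVAL_SECONDS < ts <= now]
    if not window:
        return "NO_DATA"  # nothing reported in the interval
    return "FIRING" if mean(window) != 1 else "OK"
```

With one 0 sample among ten, the mean is 0.9 and the sketch reports FIRING. It returns to OK only once that sample falls outside the window.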

Table 15-42 OCNADD_CORRELATION_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Correlation service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Correlation service is not available for more than 2 minutes |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Correlation service is down' PromQL Expression: expr: up{service=~".*correlation.*"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_CORRELATION_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="correlation"}.mean()!=1", with a trigger delay of 1 minute MQL Expression: podStatus[10m]{podOwner="correlation"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.33.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Correlation service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is in the “Running” state: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: kubectl -n <namespace> get events 3. Refer to the application logs and check for database-related failures such as connectivity issues, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
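You can check which pods a CNE alert currently matches by sending the same PromQL expression to the Prometheus HTTP API instant-query endpoint (/api/v1/query). The Python sketch below uses the Correlation expression from this table. The Prometheus base URL is a placeholder for your deployment. The sketch assumes that the up series carry a pod label, as the $labels.pod reference in the alert summary implies.

```python
import json
import urllib.parse
import urllib.request
from typing import List

CORRELATION_DOWN = 'up{service=~".*correlation.*"} != 1'


def query_url(base_url: str, expr: str) -> str:
    """Build an instant-query URL for the Prometheus HTTP API."""
    return base_url.rstrip("/") + "/api/v1/query?" + urllib.parse.urlencode({"query": expr})


def down_pods(response: dict) -> List[str]:
    """Extract pod names from an instant-query response with a vector result."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    return [r["metric"].get("pod", "<no pod label>") for r in response["data"]["result"]]


def check(base_url: str) -> List[str]:
    """Query Prometheus and return the pods whose 'up' value is not 1."""
    with urllib.request.urlopen(query_url(base_url, CORRELATION_DOWN), timeout=10) as resp:
        return down_pods(json.load(resp))
```

An empty list from check("http://<prometheus-host>:<port>") means that no target currently matches the expression.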

Table 15-43 OCNADD_EXPORT_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Export service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Export service is not available for more than 2 minutes |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Export service is down' PromQL Expression: expr: up{service=~".*export.*"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_EXPORT_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="export"}.mean()!=1", with a trigger delay of 1 minute MQL Expression: podStatus[10m]{podOwner="export"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.39.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Export service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is in the “Running” state: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: kubectl -n <namespace> get events 3. Refer to the application logs and check for database-related failures such as connectivity issues, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
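The Summary strings in the CNE rows are shown as they appear in the Helm chart source. In Helm's Go templates, {{ "{{" }} emits a literal {{. As a result, Prometheus, not Helm, expands $labels and the query block. After Helm renders the chart, the Export summary reads 'namespace: {{$labels.namespace}}, podname: {{$labels.pod}}, timestamp: {{ with query "time()" }}{{ . | first | value | humanizeTimestamp }}{{ end }}: Export service is down'. The Python sketch below mimics only that escape substitution, which can help when you compare chart source with rendered rules. It is not a Helm renderer, and it leaves values such as {{ .Values.global.cluster.mps }} unchanged.

```python
# Helm/Go-template action that outputs the literal string "{{".
HELM_ESCAPE = '{{ "{{" }}'


def render_helm_escapes(template: str) -> str:
    """Replace each {{ "{{" }} with {{, as Helm does when it renders the chart.

    Only this escape is handled; other Helm actions are returned unchanged.
    """
    return template.replace(HELM_ESCAPE, "{{")


if __name__ == "__main__":
    export_summary = (
        "'namespace: {{ \"{{\" }}$labels.namespace}}, podname: {{ \"{{\" }}$labels.pod}}, "
        "timestamp: {{ \"{{\" }} with query \"time()\" }}{{ \"{{\" }} . | first | value "
        "| humanizeTimestamp }}{{ \"{{\" }} end }}: Export service is down'"
    )
    print(render_helm_escapes(export_summary))
```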

Table 15-44 OCNADD_STORAGE_ADAPTER_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Storage Adapter service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Storage Adapter service is not available for more than 2 minutes |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Storage adapter service is down' PromQL Expression: expr: up{service=~".*storage-adapter.*", role="storageAdapter"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_STORAGE_ADAPTER_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="storageadapter"}.mean()!=1", with a trigger delay of 1 minute MQL Expression: podStatus[10m]{podOwner="storageadapter"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.38.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Storage Adapter service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is in the “Running” state: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: kubectl -n <namespace> get events 3. Refer to the application logs and check for database-related failures such as connectivity issues, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
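Step 2 of the resolution asks whether the pod is in the Running state. When many pods share a namespace, you can filter the JSON form of the same command (kubectl -n <namespace> get pods -o json) for one service. In the Python sketch below, the name fragment (for example, storageadapter) is a hypothetical value, because actual pod names depend on the deployment. The sketch treats a pod as healthy only if its phase is Running and every container reports ready. This is stricter than the phase check in the steps.

```python
import json
from typing import List


def not_ready_pods(pod_list_json: str, name_fragment: str) -> List[str]:
    """List matching pods that are not Running with all containers ready.

    pod_list_json is the output of: kubectl -n <namespace> get pods -o json
    """
    bad = []
    for pod in json.loads(pod_list_json)["items"]:
        name = pod["metadata"]["name"]
        if name_fragment not in name:
            continue  # pod belongs to another service
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        all_ready = bool(containers) and all(c.get("ready", False) for c in containers)
        if status.get("phase") != "Running" or not all_ready:
            bad.append(f"{name} (phase={status.get('phase')})")
    return bad
```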

Table 15-45 OCNADD_INGRESS_ADAPTER_SVC_DOWN

| Field | Details |

|---|---|

| Triggering Condition | The OCNADD Ingress Adapter service went down or is not accessible |

| Severity | Critical |

| Description | OCNADD Ingress Adapter service is not available for more than 2 minutes |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Ingress Adapter service is down' PromQL Expression: expr: up{service=~".*ingress-adapter.*", role="ingressadapter"} != 1 |

| Alert Details OCI | Summary: Alarm "OCNADD_INGRESS_ADAPTER_SVC_DOWN" is in a "OK/FIRING" state; because 0/1 metrics meet the trigger rule: "podStatus[10m]{podOwner="ingressadapter"}.mean()!=1", with a trigger delay of 1 minute MQL Expression: podStatus[10m]{podOwner="ingressadapter"}.mean()!=1 |

| OID | 1.3.6.1.4.1.323.5.3.53.1.36.2002 |

| Metric Used | 'up' Note: This is a Prometheus metric used for instance availability monitoring. If this metric is not available, use a similar metric exposed by the monitoring system. |

| Resolution | The alert is cleared automatically when the OCNADD Ingress Adapter service becomes available. Steps: 1. Check for service-specific alerts that may be causing issues with service exposure. 2. Run the following command to check whether the pod’s status is in the “Running” state: kubectl -n <namespace> get pod If it is not in the “Running” state, capture the pod logs and events. Run the following command to fetch the events: kubectl -n <namespace> get events 3. Refer to the application logs and check for database-related failures such as connectivity issues, invalid secrets, and so on. 4. Run the following command to check the Helm status and make sure there are no errors: helm status <helm release name of data director> -n <namespace> If it is not in “STATUS: DEPLOYED”, capture the logs and events again. 5. If the issue persists, capture all the outputs from the above steps and contact My Oracle Support. |
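For step 4, Helm 3 can print the release status as JSON (helm status <release> -n <namespace> -o json), which is easier to check in a script than the text output. Helm 3 reports the status in lowercase (deployed), whereas the step refers to STATUS: DEPLOYED, so the Python sketch below compares case-insensitively. It assumes Helm 3, and the release and namespace arguments are placeholders.

```python
import json
import subprocess


def release_status(helm_status_json: str) -> str:
    """Return info.status from `helm status <release> -n <namespace> -o json` (Helm 3)."""
    return json.loads(helm_status_json)["info"]["status"]


def is_deployed(helm_status_json: str) -> bool:
    # Helm 3 reports "deployed"; compare case-insensitively so "DEPLOYED" also passes.
    return release_status(helm_status_json).lower() == "deployed"


def check_release(release: str, namespace: str) -> bool:
    """Run helm status and report whether the release is deployed (raises if helm fails)."""
    out = subprocess.run(
        ["helm", "status", release, "-n", namespace, "-o", "json"],
        capture_output=True, text=True, check=True,
    ).stdout
    return is_deployed(out)
```

Any other value, such as failed or pending-upgrade, indicates that you should capture the logs and events again, as step 4 describes.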

Table 15-46 OCNADD_MPS_WARNING_INGRESS_THRESHOLD_CROSSED

| Field | Details |

|---|---|

| Triggering Condition | The total ingress MPS crossed the warning threshold of 80% of the supported MPS |

| Severity | Warn |

| Description | Total ingress message rate is above the configured warning threshold (80%) for a period of 5 minutes |

| Alert Details CNE | Summary: 'namespace: {{ "{{" }}$labels.namespace}}, podname: {{ "{{" }}$labels.pod}}, timestamp: {{ "{{" }} with query "time()" }}{{ "{{" }} . | first | value | humanizeTimestamp }}{{ "{{" }} end }}: Message Rate is above 80 Percent of Max messages per second:{{ .Values.global.cluster.mps }}' PromQL Expression: expr: sum(irate(kafka_stream_processor_node_process_total{service=~".*aggregation.*"}[5m])) by (namespace) > 0.8*{{ .Values.global.cluster.mps }} |

| Alert Details OCI |