3 Logging and Visualization Feature Configuration

This chapter describes the procedures for configuring the Logging and Visualization feature in the EAGLE.

3.1 Introduction

This chapter identifies the prerequisites and the procedures for configuring the EAGLE Logging and Visualization feature.

3.1.1 Front Panel LED Operation

On the SLIC card, Ethernet Interface 3 (mapped to port C) is used for visualization connectivity.

The following table captures the LED operations required for the Ethernet interfaces:

Table 3-1 Front Panel LED Operation

| IP Interface Status | Signaling Link/Connection Status on IP Port 3 (C) | Signaling Connection PORT LED | Signaling Connection LINK LED |
|---|---|---|---|
| IP Port Not configured | N/A | Off | Off |
| Card Inhibited | N/A | Off | Off |
| Cable removed and/or not synced | N/A | Red | Red |
| Sync | Not configured | Green | Red |
| Sync and/or act-ip-lnk | Configured but Visualization TCP connection CLOSED (open=no) or disconnected. | Green | Red |
| Sync and/or act-ip-lnk | Visualization TCP Connection is ACTIVE (open=yes) and connected. | Green | Green |
| dact-ip-lnk | N/A | Green | Red |
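
The LED behavior in Table 3-1 can be read as a small decision procedure. The following Python sketch is illustrative only and is not part of the EAGLE software; the function name `led_state` and its parameters are hypothetical. It also assumes that an inhibited card shows the same Off/Off state as an unconfigured IP port.

```python
from typing import Tuple

OFF, RED, GREEN = "Off", "Red", "Green"


def led_state(
    port_configured: bool,
    synced: bool,
    link_configured: bool = False,
    link_activated: bool = False,
    tcp_open: bool = False,
    card_inhibited: bool = False,
) -> Tuple[str, str]:
    """Return (PORT LED, LINK LED) for IP port 3 (C), following Table 3-1."""
    # Assumption: an inhibited card is treated like an unconfigured IP port.
    if card_inhibited or not port_configured:
        return OFF, OFF
    # Cable removed and/or not synced: both LEDs are red.
    if not synced:
        return RED, RED
    # Synced: the PORT LED is green. The LINK LED turns green only when the
    # link is configured, activated (act-ip-lnk), and the Visualization TCP
    # connection is open and connected.
    if link_configured and link_activated and tcp_open:
        return GREEN, GREEN
    # Not configured, TCP connection closed, or link deactivated (dact-ip-lnk).
    return GREEN, RED
```

For example, `led_state(port_configured=True, synced=True, link_configured=True, link_activated=True, tcp_open=False)` corresponds to the "Configured but Visualization TCP connection CLOSED" row and returns `("Green", "Red")`.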

3.1.2 Logging and Visualization Feature Prerequisites

Before accessing and configuring the dashboard for Logging and Visualization, the user needs to install and configure the following modules on the Visualization server:

- Elasticsearch

- Filebeat

- Kibana
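
Once the modules are installed, a quick reachability check can help confirm that the Visualization server is ready before the dashboard is configured. The following Python sketch is one possible check and is not an Oracle-provided tool. It assumes the stock default ports (9200 for Elasticsearch, 5601 for Kibana), which a given server may override, and the helper names are hypothetical. Filebeat is a log shipper and is not probed here.

```python
import socket
from typing import Dict, Optional

# Assumption: stock default ports; adjust if the server configuration differs.
DEFAULT_PORTS = {"Elasticsearch": 9200, "Kibana": 5601}


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


def check_services(
    host: str,
    ports: Optional[Dict[str, int]] = None,
    timeout: float = 2.0,
) -> Dict[str, bool]:
    """Map each service name to whether its TCP port is reachable on host."""
    ports = DEFAULT_PORTS if ports is None else ports
    return {name: is_port_open(host, port, timeout) for name, port in ports.items()}
```

Calling `check_services("visualization.example.com")`, where the host name is a placeholder, returns a dictionary such as `{"Elasticsearch": True, "Kibana": False}` depending on which ports accept connections. An open port shows only that something is listening, not that the module is correctly configured.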

Note:

The time (clock) must be synchronized with all Visualization servers.

3.1.2.1 Installation

This section describes the installation of Elasticsearch, Filebeat, and Kibana.

Note:

Before installing the modules, the user must have permission to install software on the Visualization server.

3.1.2.1.1 Elasticsearch Installation

3.1.2.2 Configuration

This section describes the configuration of Elasticsearch, Filebeat, and Kibana.

3.1.2.2.1 Elasticsearch Configuration

3.2 Dashboard

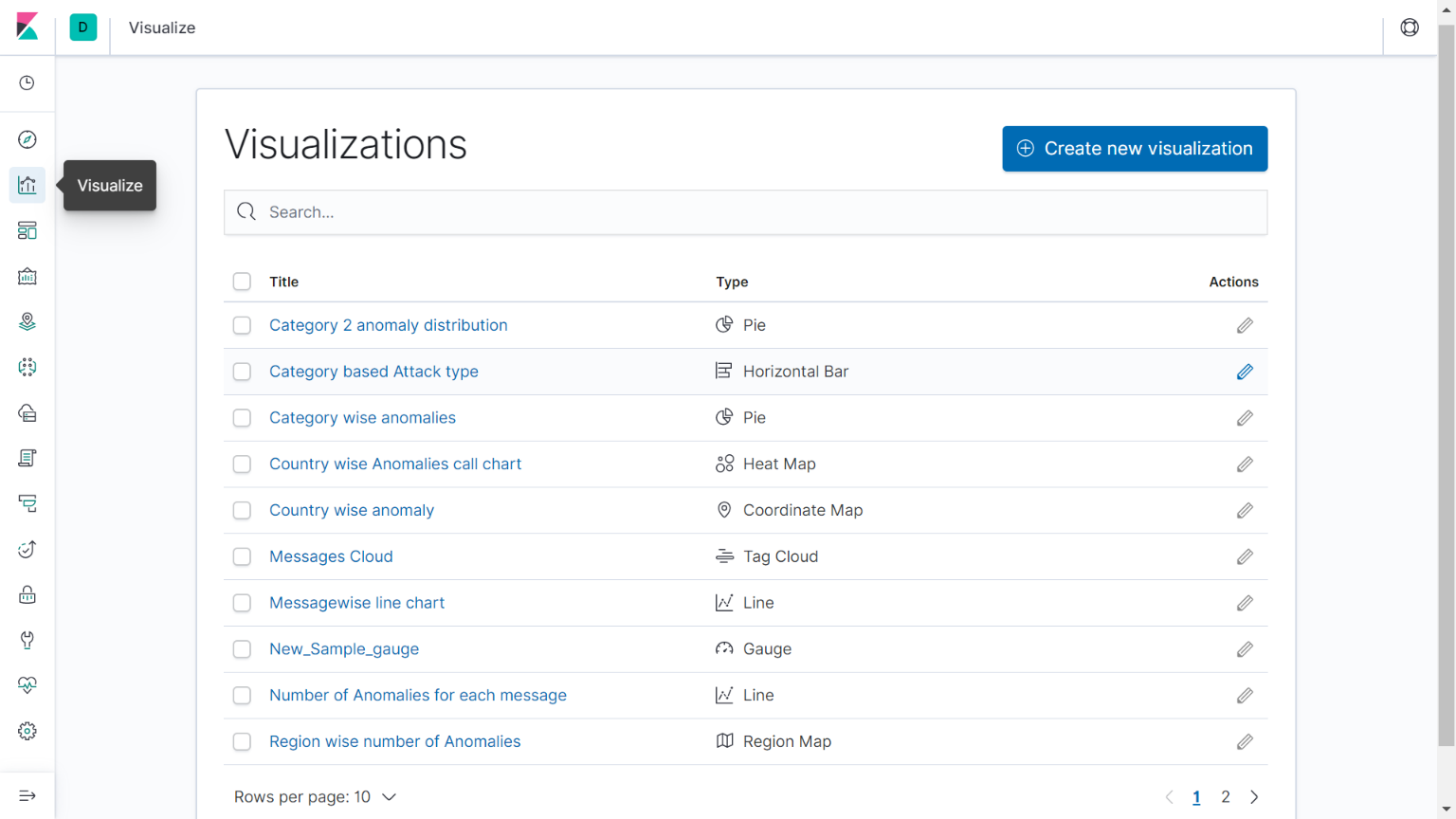

This section describes the procedures to access the default dashboard, create index patterns, create a new visualization, and create a new dashboard.

3.2.2 Creating Index Patterns

Refer to the standard Kibana product documentation for information on how to create index patterns.