Virtual SBCs

Operating the Oracle® Enterprise Session Border Controller (ESBC), as well as Oracle's other session delivery products, as a VM introduces configuration requirements that define resource utilization by the virtual machine. The applicable configuration elements allow the user to optimize resource utilization based on the application's needs and VM resource sharing. See your product and software version's Release Notes to verify your product's support for deployment as a virtual machine.

VM deployment types include:

- A standalone (not orchestrated) instance of the Oracle® Enterprise Session Border Controller operating as a virtual machine running on a hypervisor

- Virtual Machine(s) deployed within private or public Cloud environments

Standalone ESBC VM deployment instances are supported for all platforms. Support within an orchestrated environment depends on the orchestrator and ESBC version. Both deployment types support High Availability configurations.

ESBC configuration for VM includes settings to address:

- media-manager — Set media manager configuration elements to constrain bandwidth utilization, based on traffic type. See Media Manager Configuration for Virtual Machines in the Realms and Nested Realms Chapter.

- system-config, [core configuration parameters] — Set these parameters to specify CPU resources available to DoS, forwarding and transcoding processes. This configuration applies to initial deployment and tuning tasks. You may need to change the default core configuration for functionality purposes during deployment; you may decide to change core configuration for performance purposes after deployment.

- system-config, [use-sibling-core-datapath] — Enable this parameter to allow the ESBC to utilize the underlying platform's CPU hyperthreading (also called SMT) capabilities for datapath cores, including forwarding, DoS and transcoding cores. Considerations include your environment's support of the feature and its impact on your implementation. A key consideration beyond support is the ability of your platform to provide information on sibling CPUs to the ESBC. The ESBC supports hyperthreading of signaling cores without additional configuration.

VLAN Support

Oracle recommends that you evaluate the VLAN support of your deployment's hypervisor and interface I/O mode before implementation to ensure secure support for the transmission and receiving of VLAN-tagged traffic. Please consult your hypervisor’s vendor documentation for details.

Note that when you configure a VLAN, the ESBC requires VLAN tags to be included in the packets delivered to and from the VM.

Hypervisor and cloud platform and resource requirements are version-specific. Refer to your Release Notes for applicable requirements, recommendations and caveats for qualified platforms.

CPU Core Configuration

You can configure CPU core settings using system-config parameters. This configuration is based on the specific needs of individual implementations. These parameters allow you to set and change the number of cores you want to assign to forwarding, DoS, and transcoding functionality. The system determines which cores perform those functions automatically.

You can determine and manage your core configuration based on the services you need. The system allocates cores to signaling upon installation. You can add forwarding cores to match your needs for handling media. You can also add DoS and transcoding cores if you need those functions in your deployment. If you want to reduce the size of your ESBC deployment footprint or if you do not need the maximum number of cores and amount of memory available, you can deploy the ESBC virtually with fewer cores and memory requirements. For smaller scale deployments, the VNF software supports a deployment with 2 virtual cores, 2 GB RAM, 20GB storage, and 2 interfaces.

Note the following:

- By default, core 0 is always set to signaling.

- The system selects cores based on function. You cannot assign core functions.

- The system sets unassigned cores to signaling, with a maximum of 24.

Note:

Your hyperthreading configuration may impact these assignments.

- You must reboot the system for core configuration changes to take effect.

When you make core assignments, the ESBC provides an error message if the system detects an issue. In addition, when you issue the verify-config command, the system checks that the total number of forwarding, DoS, and transcoding cores does not exceed the maximum number of physical cores. After you save and activate a configuration that includes a change to the core configuration, the system displays a prompt to remind you that a reboot is required for the changes to take effect.
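The check described above can be sketched as follows. This is a minimal illustration and not the ESBC's own logic: the function name `plan_cores` and its return format are hypothetical, hyperthreading is ignored, and the sketch assumes that every core left over after the datapath assignments goes to signaling, up to the stated maximum of 24. It does not model the minimum number of signaling cores.

```python
MAX_SIGNALING_CORES = 24  # maximum stated in the notes above


def plan_cores(total_cores, forwarding, dos=0, transcoding=0):
    """Return core counts per function, or raise ValueError.

    Hypothetical helper. It mirrors two documented rules only:
    forwarding + DoS + transcoding cores must not exceed the cores
    available, and unassigned cores default to signaling.
    """
    if min(forwarding, dos, transcoding) < 0:
        raise ValueError("core counts must not be negative")
    if dos > 1:
        raise ValueError("dos-cores allows a maximum of one core")
    datapath = forwarding + dos + transcoding
    if datapath > total_cores:
        raise ValueError(
            f"{datapath} datapath cores requested, only {total_cores} available"
        )
    leftover = total_cores - datapath
    signaling = min(leftover, MAX_SIGNALING_CORES)
    return {
        "signaling": signaling,
        "forwarding": forwarding,
        "dos": dos,
        "transcoding": transcoding,
        "unused": leftover - signaling,  # cores beyond the signaling maximum
    }


plan = plan_cores(total_cores=8, forwarding=1, dos=1)
print(plan)
```

With 8 cores, 1 forwarding core, and 1 DoS core, the sketch leaves 6 cores for signaling.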

You can verify core configuration from the ACLI, using the show datapath-config command or after core configuration changes during the save and activation processes. The ESBC uses the following lettering (upper- and lower-case) in the ACLI to show core assignments:

- S - Signaling

- D - DoS

- F - Forwarding

- X - Transcoding

When using hyperthreading, which divides cores into a single physical (primary) and a single logical (secondary) core, this display may differ. ESBC rules for displaying cores include:

- Physical cores (no hyperthreading) in upper-case letters

- "Primary" hyperthreaded sibling cores in upper-case letters

- "Secondary" hyperthreaded sibling cores in lower-case letters

- Stale (unused) hyperthreaded cores using the lower-case letter "n"
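When reading a core map, it can help to tally the letters by function. The sketch below is an illustration only: the function name `summarize_core_map` is hypothetical, and it assumes the map is a hyphen-separated string of the letters listed above, as the ACLI displays it.

```python
from collections import Counter

# Letter-to-function mapping taken from the lists above.
ROLES = {"S": "signaling", "D": "DoS", "F": "forwarding", "X": "transcoding"}


def summarize_core_map(core_map):
    """Count cores per function in a map such as 'S-s-S-s-F-n-D-n'."""
    counts = Counter()
    for letter in core_map.split("-"):
        if letter == "n":
            counts["stale"] += 1  # unused hyperthreaded sibling
        elif letter.upper() in ROLES:
            # Upper and lower case share a function; case only marks
            # primary versus secondary siblings.
            counts[ROLES[letter.upper()]] += 1
        else:
            raise ValueError(f"unrecognized core letter: {letter!r}")
    return dict(counts)


summary = summarize_core_map("S-s-S-s-F-n-D-n")
print(summary)
```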

The system-config element includes the following parameters for core assignment:

- dos-cores—Sets the number of cores the system must allocate for DoS functionality. A maximum of one core is allowed.

- forwarding-cores—Sets the number of cores the system must allocate for the forwarding engine.

- transcoding-cores—Sets the number of cores the system must allocate for transcoding. The default value is 0.

- use-sibling-core-datapath—Enables the ESBC to utilize the platform's SMT capability, impacting how the ESBC uses sibling cores.

The ESBC does not have a maximum number of cores, but your deployment does, based on host resources. The system checks CPU core resources before every boot, as configuration can affect resource requirements. Examples of such resource requirement variations include:

- There are at least 2 CPUs assigned to signaling (by the system).

Note:

The exception to this is the Small Footprint deployment.

- If DoS is required, then there is at least 1 CPU assigned to forwarding and 1 to DoS.

- If DoS is not required, then there is at least 1 CPU assigned to forwarding.

Note:

Poll mode drivers, including vmxnet3, failsafe, MLX4 and Ixgbvf, only support a number of rxqueues that is a power of 2. When using these drivers, you should configure the number of forwarding cores to also be a power of 2. If there is a mismatch, the system changes the number of forwarding cores that it uses to the nearest power of 2 value. The remaining cores become stale; stale cores remain reserved by the system, but are not used.
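The power-of-2 adjustment in the note above can be sketched as follows. The note says "nearest power of 2"; because the leftover cores become stale, this sketch assumes the system rounds down to the largest power of 2 that does not exceed the configured value. That rounding direction and both function names are assumptions made for illustration.

```python
def usable_forwarding_cores(configured):
    """Largest power of 2 that does not exceed `configured` (assumed rounding)."""
    if configured < 1:
        return 0
    # bit_length() - 1 is the exponent of the highest set bit.
    return 1 << (configured.bit_length() - 1)


def stale_forwarding_cores(configured):
    """Cores that stay reserved but unused after the adjustment."""
    return configured - usable_forwarding_cores(configured)


for configured in (1, 2, 3, 4, 6, 8):
    print(configured, usable_forwarding_cores(configured), stale_forwarding_cores(configured))
```

Under this assumption, configuring 6 forwarding cores leaves 4 in use and 2 stale, which is why a power-of-2 value avoids wasting cores.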

The system performs resource utilization checks every time it boots for CPU, memory, and hard-disk to avoid configuration and resource conflicts.

Core configuration is supported by HA. For HA systems, resource utilization on the backup must be the same as the primary.

Note:

The hypervisor always reports the datapath CPU usage as fully utilized. This isolates a physical CPU to this work load, but may cause the hypervisor to generate a persistent alarm indicating that the VM is using an excessive amount of CPU. The alarm may trigger throttling. Oracle recommends that you configure the hypervisor monitoring appropriately, to avoid throttling.

In HA environments, when the primary node's core configuration changes, the ESBC raises an alarm to warn that a reboot is required. After the configuration syncs, the secondary node raises the same alarm to warn that a reboot is required.

Host Hypervisor CPU Affinity (Pinning)

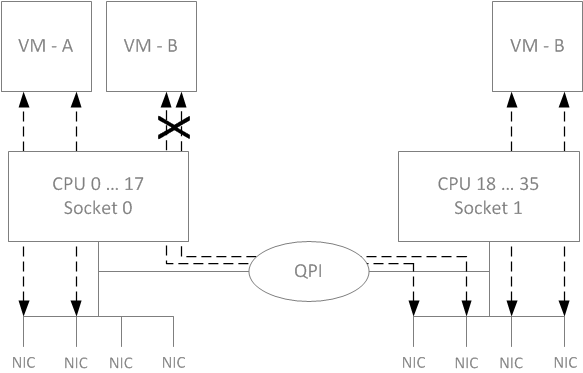

Many hardware platforms have built in optimizations related to VM placement. For example, some CPU sockets may have faster local access to Peripheral Component Interconnect (PCI) resources than other CPU sockets. Users should ensure that VMs requiring high media throughput are optimally placed by the hypervisor, so that traversal of cross-domain bridges, such as QuickPath Interconnect (QPI), is avoided or minimized.

Some hypervisors implement Non-Uniform Memory Access (NUMA) topology rules to automatically enforce such placements. All hypervisors provide manual methods to perform CPU pinning, achieving the same result.

The diagram displays two paths between the system's NICs and VM-B. Without configuring pinning, VM-B runs on Socket 0, and has to traverse the QPI to access Socket 1's NICs. The preferred path pins VM-B to Socket 1, for direct access to the local NICs, avoiding the QPI.

Oracle recommends you configure CPU affinities on the hypervisor to ensure mapping from only one virtual CPU to each physical CPU core. Learn how to configure CPU affinity and pin CPUs from your hypervisor documentation.

Note:

The ESBC relies on multiple queues on virtual NICs to scale session capacity. Multiple queues enable the ESBC to scale through multiple forwarding cores. This configuration is platform dependent: physical NIC, Hypervisor, virtual NIC, and vSwitch.

Support for Hyperthreading Datapath CPUs

You can configure the ESBC to utilize hyperthreading (SMT) support for datapath cores, including forwarding, DoS and transcoding cores. This configuration allows datapath CPUs to utilize two virtual CPUs (vCPUs) as "siblings" on the same physical CPU when the platform host supports hyperthreading. Refer to your software version's Release Notes to determine platforms that support this feature.

If you do not apply this configuration, the ESBC uses only one of the two logical virtual CPUs for the datapath (DPDK) cores, and marks that virtual CPU's sibling as stale. Most platforms have their own methods of determining whether hyperthreading is available; some platforms have the support, but do not expose it to the vSBC. Use of hyperthreading by vSBC datapath cores therefore depends on both the availability and the visibility of the technology. As a quick check, if the ESBC displays all core assignments in upper case, you are not utilizing SMT: either the host does not support SMT, the host does not make its CPU topology visible, or you have kept the feature disabled.

If hyper-threading is supported and exposed by the host to the guest configuration, 8 physical cores allocated to the ESBC translate to 16 logical cores. The ESBC sets up signaling cores as siblings automatically. You enable this support for datapath cores using the use-sibling-core-datapath parameter in the system-config element.

Consider a use case where use-sibling-core-datapath remains disabled and you configure 1 forwarding core and 1 DoS core. If the host system allocates 8 vCPUs to this ESBC system, the system assigns 2 cores for DPDK (or datapath) and the remaining 6 cores for signaling. This would consume 8 physical CPUs, displayed by the show datapath-config command as (S-S-S-S-S-S-F-D).

With the hyper-threading feature enabled in the host system’s BIOS, the hypervisor, and the ESBC, the same datapath core configuration (1F, 1D) for 8 vCPUs consumes only 4 physical CPUs in the current implementation. The show datapath-config command displays the updated core map as (S-s-S-s-F-n-D-n).
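The physical CPU counts in these two cases can be read directly from the core maps. The sketch below assumes, based on the display rules given earlier, that each upper-case letter represents one physical core (either a non-hyperthreaded core or a primary sibling) and that lower-case letters, including stale "n" entries, are secondary siblings that share a physical core already counted. The function name `physical_cores_used` is hypothetical.

```python
def physical_cores_used(core_map):
    """Count upper-case entries in a hyphen-separated core map.

    Assumption: one upper-case letter per physical core; lower-case
    letters are secondary siblings and add no physical core.
    """
    return sum(1 for letter in core_map.split("-") if letter.isupper())


print(physical_cores_used("S-S-S-S-S-S-F-D"))  # map without hyperthreading
print(physical_cores_used("S-s-S-s-F-n-D-n"))  # map with hyperthreaded siblings
```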

Note:

The Xen hypervisor displays lower-case vCPU siblings together at the end of the vCPU list. Xen pairs the first upper-case vCPU with the first lower-case vCPU, and so forth.

Note:

When performing CPU pinning, neither you nor the hypervisor need to allocate cores on the Host in numerical sequence. The ESBC does not require that cores be sequentially numbered.

Datapath Hyperthreading Configuration

The use-sibling-core-datapath parameter, within the system-config, supports two values, and requires that hyperthreading be both enabled and visible from the host:

- disabled (Default)— The system allocates one vCPU sibling, and marks the other as stale.

- enabled—The system allocates all vCPU siblings.

Note:

When enabled, Oracle recommends you configure an even number of datapath cores for optimal performance.

Platform hosts provide more resources to a physical core on a vSBC than a hyper-threaded core. If your deployment performs Rx and Tx processing on a single core, you should consider leaving hyper-threading disabled.

The verify-config command notifies you about invalid configuration, including:

- You enabled the use-sibling-core-datapath parameter, but the CPU topology is not exposed to the vSBC. If hyper-threading is not exposed to the vSBC or enabled on the host, the use-sibling-core-datapath parameter is not applicable and has no impact on core allocation.

- The number of signaling cores is less than the minimum (2).

- There is an error with CPU assignment, including improperly configured hyper-threaded sibling CPUs.

Applicable ACLI Command Output

After core configuration, you can use the show datapath-config and the show platform cpu commands to display CPU core configuration.

You can verify and troubleshoot the ESBC CPU assignments using, for example, the show datapath-config command.

ORACLE# show datapath-config

Number of cores assigned: 8

Current core assignments: S-s-S-s-F-n-D-n

Default hugepage size: 2 MB

Number of 1 GB hugepages: 0

Number of 2 MB hugepages: 980

Total system memory: 7835 MB

Memory reserved for datapath: 1960 MB
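The fields in this output can be cross-checked against each other. The sketch below parses the sample above and compares the memory implied by the hugepage counts with the reserved datapath memory. The helper names are hypothetical, and the match between the two values is an observation about this sample, not a documented guarantee.

```python
SAMPLE = """\
Number of cores assigned: 8
Current core assignments: S-s-S-s-F-n-D-n
Default hugepage size: 2 MB
Number of 1 GB hugepages: 0
Number of 2 MB hugepages: 980
Total system memory: 7835 MB
Memory reserved for datapath: 1960 MB
"""


def parse_datapath_config(text):
    """Split 'key: value' lines into a dict, skipping anything else."""
    fields = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def hugepage_memory_mb(fields):
    """Memory covered by the reported hugepages, in MB."""
    one_gb = int(fields["Number of 1 GB hugepages"])
    two_mb = int(fields["Number of 2 MB hugepages"])
    return one_gb * 1024 + two_mb * 2


fields = parse_datapath_config(SAMPLE)
reserved_mb = int(fields["Memory reserved for datapath"].split()[0])
map_length = len(fields["Current core assignments"].split("-"))

print(hugepage_memory_mb(fields), reserved_mb)
print(map_length, fields["Number of cores assigned"])
```

In this sample, 980 hugepages of 2 MB account for the 1960 MB reserved for the datapath, and the core map has one entry per assigned core.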

You can also use the show platform cpu command to see if your host provides SMT awareness.

Note:

This is only true for the OCSBC products.

ORACLE# show platform cpu

CPU count : 8

CPU speed : 2294 MHz

CPU model : Intel Core Processor ...

SMT Topology aware : True

Note also the CPU count output, which verifies your configuration.

Datapath CPU Hyperthreading Considerations

You need to reboot your ESBC whenever you enable or disable the use-sibling-core-datapath parameter. You should consider whether or not to enable the feature as a means of tuning system performance. The feature does not impact or conflict with any other configuration.

You use the following criteria to decide whether or not to enable sibling datapath CPUs:

- Predictability and maintainability

- Effective utilization of all cores

- Throughput

Even if the topology is supported and known to the vSBC, you may not want to enable this parameter. Consider the fact that the maximum number of forwarding cores depends on the maximum number of queue pairs supported on the network interface. An SRIOV interface with the Intel i40e driver, for example, has a limit of 4 Rx/Tx queue pairs, which means the most forwarding cores you can assign to the vSBC is 4. The results of your hyperthreading configuration are:

- Disabled: F-n-F-n-F-n-F-n—System throughput is better when disabled because the host is using 4 full physical cores. If your intent is to achieve highest concurrent session capacity, and there is no constraint on the number of cores available to the vSBC, Oracle recommends you keep this feature disabled.

- Enabled: F-f-F-f—When enabled, the host uses only 2 physical cores. Oracle recommends enabling the feature if your intent is to consume fewer physical cores.
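The trade-off above can be expressed as simple arithmetic. This sketch assumes that the number of usable forwarding cores is capped by the interface's Rx/Tx queue pairs, and that with sibling datapath cores enabled two forwarding cores share one physical core. The function name and parameters are hypothetical.

```python
import math


def forwarding_core_plan(requested, queue_pairs, sibling_datapath_enabled):
    """Return (forwarding cores usable, physical cores consumed).

    Assumptions: queue pairs cap the forwarding cores; when sibling
    datapath cores are enabled, two forwarding cores fit on each
    physical core, otherwise each takes a full physical core.
    """
    forwarding = min(requested, queue_pairs)
    if sibling_datapath_enabled:
        physical = math.ceil(forwarding / 2)
    else:
        physical = forwarding
    return forwarding, physical


# The i40e example from the text: a limit of 4 queue pairs.
print(forwarding_core_plan(4, 4, sibling_datapath_enabled=False))
print(forwarding_core_plan(4, 4, sibling_datapath_enabled=True))
```

The disabled case corresponds to F-n-F-n-F-n-F-n (4 physical cores) and the enabled case to F-f-F-f (2 physical cores).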

Note the following guidelines when configuring hyperthreading:

- Assign the vCPU siblings of a single physical core on the host to the same guest machine. Do not mix virtual machines on vCPU siblings.

- If hyper-threading is not supported by your platform, enabling or disabling this parameter has no impact on core allocation.

- If you enable this parameter on supported platforms, Oracle recommends that you configure the number of datapath cores for your vSBC to be divisible by 2 for optimal performance.

- If you enable hyperthreading on an existing VM, you must double-boot the ESBC to accommodate the increase in the number of signaling cores.

Supported Platforms

Of the supported hypervisors, only VMware does not expose SMT capability to the ESBC. Of the supported clouds, OCI and AWS enable SMT by default and expose it to the ESBC. Azure shapes that enable and expose SMT vary. Please see the Release Notes for your software version to determine which Azure shapes apply.

System Shutdown

Use the system's halt command to gracefully shut down the VNF.

ACMEPACKET# halt

-------------------------------------------------------

WARNING: you are about to halt this SD!

-------------------------------------------------------

Halt this SD [y/n]?:

See the ACLI Reference Guide for further information about this command.

Small Footprint VNF

Oracle® Enterprise Session Border Controller (ESBC) customers who want to reduce the size of their SBC deployment footprints or who may not need the maximum number of cores and amount of memory can deploy the SBC virtually with fewer cores and memory requirements. For smaller scale deployments, the VNF software supports a minimum deployment of 2 virtual cores, 4GB RAM, 20GB storage, and 2 interfaces. In this way, Oracle provides a flexible solution that you can tailor to your requirements.

Note that small footprint for VNF does not support transcoding.

Note:

The small footprint deployment supports a minimum of 1 signaling core.

Configuration Overview

Oracle® Enterprise Session Border Controller Virtual Machine (VM) deployments require configuration of the VM environment and, separately, configuration of the ESBC itself. VM-specific configuration on the ESBC includes boot parameter configuration, enabling functionality and performance tuning.

During VM installation, you can configure vSBC boot parameters, including:

- IP address

- Host name

During VM installation, the ESBC sets default functionality, assigning cores to signaling and media forwarding. If you need DoS and/or transcoding functionality, you configure the applicable cores after installation. Applicable performance tuning configuration after deployment includes:

- Media manager traffic/bandwidth utilization tuning

- Datapath-related CPU core allocation

Note:

For Xen-based hypervisors, the default boot mode uses DHCP to obtain an IP address for the first management interface (wancom0) unless a static IP is provisioned. Note that DHCP on wancom0 does not support lease expiry, so the hypervisor must provide persistent IP address mapping. If persistent IP address mapping is not provided, the user must manually restart the VM whenever the wancom0 IP address changes due to a manual change or DHCP lease expiry.

Beyond installation, VM-related functional support, and VM-related tuning, you perform basic ESBC configuration procedures after installation, including:

- Setting passwords

- Setup product

- Setup entitlements

- Assign Cores

- Enable hyperthreading for forwarding, DoS and transcoding cores

- Service configuration

Configure Cores

The ESBC allows you to specify the function of the CPU cores available from the host.

Configure Hyperthreading Support

The ESBC allows you to enable the use of host hyperthreading. This parameter is applicable only when hyper-threading is supported by the platform. You can refer to the platform support list in your software version's Release Notes to identify platform applicability.