SQL Development and Tuning Best Practices

• Length of the DataType Matters.

For example if you define a column with VARCHAR2(4000) (just the maximum limit) then you may outflow you array as given in the example below.

Varchar2(n) where n is "right sized" | Varchar2(4000) for everything (just in case) |

|---|---|

Assume 10 columns, average width is 40 characters (some are 80, some are 10...). | Assume 10 columns, average, minimum, maximum width is 4000. |

400 bytes per row on a fetch. | 40,000 bytes per row on a fetch. |

Assume array fetch of 100 rows, so array fetch buffer or 40,000 bytes. | Assume array fetch of 100 rows, so array fetch buffer or 4,000,000 bytes. |

Assume 25 open cursors, so 1,000,000 bytes of array fetch buffers. | Assume 25 open cursors, so 100,000,000 bytes. |

Assume connection pool with 30 connections — 30MB. | Assume connection pool with 30 connections — 3GB. |

• NOT Null columns should be preferred over Null able columns. The reason is if you have an Index on a Null able column then it would not be used by the SQL as the optimizer thinks that it would not find any values in some of the columns so prefer a full scan.

As a workaround for columns with NULL data types the Index create SQL should look like:

Create INDEX ABC ON TAB1 (COLUMN1, 0);

This will make sure that in case the Column1 is null the optimizer will consider the value as 0 and leads to index scan as compared to Full scans.

• Always try to substitute the Bind Variables in a SQL with the actual constant value if there is only one possible. Having too much Bind variables sometimes confuses the Optimizer to take the right access path. So this is good for the stability of the SQL plans.

• Fields which are foreign keys to other tables and are used in SQLs for the Join criterion are good candidates for creating Indexes on.

• Do not create any objects in the database of which the name may collide with any SQL reserved words.

• Views are generally used to show specific data to specific users based on their interest. Views are also used to restrict access to the base tables by granting permission only on views. Yet another significant use of views is that they simplify your queries. Incorporate your frequently required, complicated joins and calculations into a view so that you don't have to repeat those joins/calculations in all your queries. Instead, just select from the view.

• Avoid creating views within views as it affects the performance.

• Offload tasks, like string manipulations, concatenations, row numbering, case conversions, type conversions etc., to the front-end applications if these operations are going to consume more CPU cycles on the database server. Also try to do basic validations in the front-end itself during data entry. This saves unnecessary network roundtrips.

• Always be consistent with the usage of case in your code. On a case insensitive server, your code might work fine, but it will fail on a case-sensitive server if your code is not consistent in case.

• Make sure you normalize your data at least to the 3rd normal form. At the same time, do not compromise on query performance. A little bit of denormalization helps queries perform faster.

• Consider indexing those columns if they are frequently used in the ORDER clause of SQL statements.

• Use tools like Tkprofs and AWR Report for measuring the Performance of your SQLs.

• In the SQL Explain Plans, usage of Nested Loops are good when there are table joins involved.

• Always looks for Autotrace to measure the SQL plan as it is closer to the plan which the optimizer takes during the actual execution of the SQL. This can be get easily through SQL Developer and other database monitoring tools.

• While looking at the Autotrace Plans look for consistent gets and make sure they are low. The other thing reported by the Autotrace is COST. Do not worry too much about cost if the Consistent gets is low and you are getting a desirable Plan.

• Make sure that the Statistics are current and not stale while you are trying to Tune a SQL.

• Having Secondary Unique Indexes help in achieving Index Unique scans. This will eliminate the Table scans. It is worth trying and see if that makes a difference.

• Oracle Optimizer executes the explain plans of a SQL from Inner to the outer area and from bottom to the top. So make sure that the cardinality of the inner most Join criterion should be low.

• Always keep in mind the usage of the SQL in a real production scenario where the data in the tables can go exponentially. Make sure that the SQLs can handle it and the Explain plan should be accordingly tuned.

• Usage of <<, != make the Index NOT to be used. Instead of this use the greater than or less than statements.

• If you wrap a column a column with some functions like TO_DATE, TO_CHAR, SUBSTR and so on then the Index on the Column would not be used.

• Avoid using UNION and make sure you use UNION ALL if possible. This will boost performance.

• Using EXISTS , NOT EXISTS are better than using IN , NOT IN statements respectively.

• Usage of Leading Hints helps in choosing what Table should be the first table in the join order.

/*+ LEADING(Table1 Table2) */

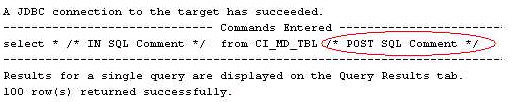

• When writing comments within SQL statements make sure that the comments are not added at the beginning because DB2 will not be able to parse it. You can instead put the Comments at the end and it will work. For Oracle this is not an issue.

Additional Resources

Additional information on optimizing SQL in your OUAF applications can be found in the Oracle Utilities Application Framework - Technical Best Practices whitepaper available on the My Oracle Support (MOS) Knowledge Base (article 560367.1).

Parent topic