Data Upload Orchestration

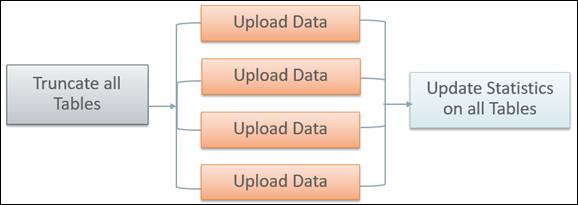

Because SQL*Loader runs in multiple threads, each loading part of the data into the same table, it does not truncate the target table before loading; it uses the APPEND option instead. The target tables must therefore be truncated before the load. For better performance, disable the indexes before the load, then re-enable them and update the table statistics after the load. The batch jobs can be organized into various chain structures, as shown in the examples below.
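The pre-load and post-load steps of a single-table chain can be sketched in Python. This minimal sketch only builds the ordered list of steps; executing them through a database driver or batch job is out of scope. It assumes Oracle SQL, assumes that "disabling" an index means marking it UNUSABLE and rebuilding it after the load, and handles only non-unique indexes (Oracle still reports errors on inserts into a table whose unique index is unusable). The schema, table, and index names and all helper functions are hypothetical.

```python
"""Sketch of the steps around a multi-threaded SQL*Loader APPEND load.

All schema, table, and index names are hypothetical examples.
"""

from dataclasses import dataclass, field


@dataclass
class TargetTable:
    schema: str                                        # hypothetical schema name
    name: str                                          # hypothetical table name
    indexes: list[str] = field(default_factory=list)   # non-unique indexes only


def pre_load_sql(table: TargetTable) -> list[str]:
    """Statements to run once, before any loader thread starts."""
    fq = f"{table.schema}.{table.name}"
    # APPEND never clears the table, so truncate it explicitly first.
    stmts = [f"TRUNCATE TABLE {fq}"]
    # Mark indexes unusable so they are not maintained row by row during the load.
    stmts += [f"ALTER INDEX {table.schema}.{ix} UNUSABLE" for ix in table.indexes]
    return stmts


def post_load_sql(table: TargetTable) -> list[str]:
    """Statements to run once, after all loader threads have finished."""
    # Rebuilding an unusable index makes it usable again.
    stmts = [f"ALTER INDEX {table.schema}.{ix} REBUILD" for ix in table.indexes]
    # Refresh optimizer statistics for the freshly loaded table.
    stmts.append(
        "BEGIN DBMS_STATS.GATHER_TABLE_STATS("
        f"ownname => '{table.schema}', tabname => '{table.name}'); END;"
    )
    return stmts


def chain_for(table: TargetTable, threads: int) -> list[str]:
    """Single-table chain: pre-load SQL, one parallel load step, post-load SQL."""
    steps = [f"SQL: {s}" for s in pre_load_sql(table)]
    steps.append(f"PARALLEL: {threads} x SQL*Loader APPEND into {table.name}")
    steps += [f"SQL: {s}" for s in post_load_sql(table)]
    return steps


if __name__ == "__main__":
    per = TargetTable("STG", "CI_PER", ["XT_PER_IX1"])  # hypothetical names
    for step in chain_for(per, threads=4):
        print(step)
```

The key design point is that truncation and index handling happen exactly once per table, outside the parallel load step, so no thread can discard or re-index rows loaded by another.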

Single Table Upload

Multiple Tables or MOs Upload

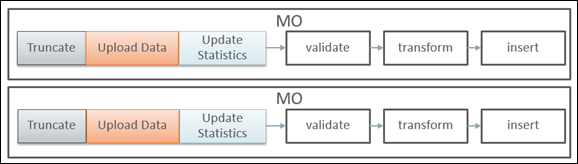

There are multiple strategies for orchestrating the entire conversion run and sequencing the conversion processes optimally. Some of the possibilities are:

• upload all legacy data extract files simultaneously, then run the validation and transformation processes for each converted object in order of precedence, to preserve referential integrity

• start uploading very large tables in advance so that all uploads finish at about the same time, then validate and transform

• include legacy data upload batch(es) in the batch job chain for the target object

• upload some of the data by maintenance object and some table by table

• process maintenance objects end-to-end in parallel, if there are no interdependencies between them
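Two of the strategies above can be sketched in Python: computing staggered start offsets so that uploads of different durations finish together, and grouping maintenance objects into dependency-ordered "waves" for validation and transformation. This is a minimal sketch, not the tool's scheduler; it uses the standard library `graphlib.TopologicalSorter` (Python 3.9+), and the function names, upload durations, and MO dependencies are hypothetical examples.

```python
"""Sketch: staggered uploads and dependency-ordered validate/transform.

Function names, durations, and MO dependencies are hypothetical examples.
"""

from graphlib import TopologicalSorter


def staggered_starts(upload_minutes: dict[str, int]) -> dict[str, int]:
    """Start offset (minutes) per table so that all uploads end together.

    The longest upload starts at 0; every other upload is delayed by the
    difference between the longest duration and its own duration.
    """
    longest = max(upload_minutes.values())
    return {t: longest - d for t, d in upload_minutes.items()}


def transform_waves(depends_on: dict[str, set[str]]) -> list[list[str]]:
    """Group MOs into waves; each MO runs only after the MOs it references.

    MOs in the same wave do not depend on each other and can run in
    parallel. Raises graphlib.CycleError on circular dependencies.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # all MOs whose prerequisites are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    # Hypothetical upload durations in minutes.
    print(staggered_starts({"CI_PER": 30, "CI_ACCT": 45, "CI_BSEG": 120}))
    # Hypothetical MO dependencies (each MO maps to the MOs it references).
    deps = {"PERSON": set(), "ACCOUNT": {"PERSON"}, "PREMISE": set(),
            "SA": {"ACCOUNT", "PREMISE"}}
    for i, wave in enumerate(transform_waves(deps), start=1):
        print(f"wave {i}: {wave}")
```

The waves correspond to the "upload all, then validate and transform in order of precedence" strategy; when no MO depends on another, every MO lands in the first wave, which is the fully parallel end-to-end case.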

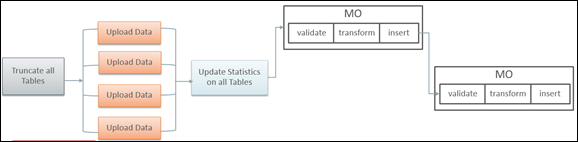

Full Conversion Chain per MO, Parallel Run

Upload All + Subsequent Validate/Transform MOs