2.2 Matching

Once the working and watch list records have been divided into clusters, the rows within each cluster are compared to one another according to the match rules defined for the matching processor. Each match rule defines a set of criteria, specified as comparisons, that the pair of records must satisfy in order to qualify as a match under that rule. The match rule also defines a decision to be applied to any records which satisfy the conditions of the rule. Most rules have a Review decision, meaning matches that hit the rule need to be reviewed. However, there are also elimination rules, where if the records being compared meet the rule’s criteria, a No Match decision is reached, and the two records will not be considered a match.

The rules are applied as a decision table, so if a pair of records qualifies as a match under a rule higher in the table, it will not be compared using any rules below that. All rules are configured to operate on a case-insensitive basis. Unless stated otherwise, all noise and whitespace characters are removed or normalized before matching.
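The decision-table behaviour described above can be sketched as follows. This is a minimal illustration, not the product's implementation: the `MatchRule` class, the `decide` function, the two sample rules, and the record fields are all hypothetical names introduced for the example, and `normalize` is a simplified stand-in for the noise and whitespace handling.

```python
from dataclasses import dataclass
from typing import Callable, Optional

Record = dict[str, str]


@dataclass
class MatchRule:
    name: str
    criteria: Callable[[Record, Record], bool]  # the rule's comparisons
    decision: str                               # "Review" or "No Match"


def normalize(value: str) -> str:
    """Lower-case and collapse whitespace (assumed, simplified normalization)."""
    return " ".join(value.lower().split())


def decide(rules: list[MatchRule], source: Record, target: Record) -> Optional[tuple[str, str]]:
    """Apply rules top-down; the first rule that is satisfied wins."""
    for rule in rules:
        if rule.criteria(source, target):
            return rule.name, rule.decision
    return None  # no rule hit: the pair is not reported


# Hypothetical rule table: an elimination rule placed above a Review rule.
RULES = [
    MatchRule("country_mismatch",
              lambda s, t: normalize(s["country"]) != normalize(t["country"]),
              "No Match"),
    MatchRule("exact_name",
              lambda s, t: normalize(s["name"]) == normalize(t["name"]),
              "Review"),
]

working = {"name": "John  Smith", "country": "US"}
watch_hit = {"name": "john smith", "country": "us"}
watch_other = {"name": "john smith", "country": "FR"}

print(decide(RULES, working, watch_hit))    # ('exact_name', 'Review')
print(decide(RULES, working, watch_other))  # ('country_mismatch', 'No Match')
```

Because evaluation stops at the first rule that is satisfied, the order of the rules in the table determines which decision a pair receives.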

Matches are generated from the set of attributes defined for each rule. Each attribute-level match produces a score, and these scores are combined into a weighted average.

TF explicitly passes the strings as values in the request; these are the strings to be matched against all the values in the named column. Based on the matches that the search engine returns for the source string, a score and a feature vector are generated for each pair of matched strings (source and target). Scores that exceed the configured thresholds are collected.

Example 1:

- Evaluation Logic -> 46,4,26

- Evaluation score -> 0.88

- Word Match count (WMC_1) >= 2

- Abbreviated and CMP >= 66

Suppose the first name in the Mapping Source Attribute column and the Target Attribute column match with a score of 0.88, and the threshold is set to 0.75. Because the first name score exceeds the threshold, it contributes 0.88 x 100 x 0.8 = 70.4 to the score, where 0.8 is the weightage given in the matching rule.

If the Mapping Source Attribute column and the Target Attribute column contain city data and its score does not cross the configured threshold, the city does not contribute to the score. The score remains 70.4.

If the city data crosses the configured threshold, it contributes as 100 (exact match). With the weightage of 0.05 given in the matching rule, this adds 100 x 0.05 = 5, which gives a score of 75.4.

If the Mapping Source Attribute column and the Target Attribute column contain no city data, the city is treated as crossing the threshold with a value of 50. This adds 50 x 0.05 = 2.5, which gives a score of 72.9.
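The arithmetic in Example 1 can be reproduced with a short sketch. The function name `attribute_contribution`, the `missing_score` parameter, and the city threshold of 0.75 are assumptions made for illustration; only the first name score (0.88), its threshold (0.75), and the weightages (0.8 and 0.05) come from the example.

```python
from typing import Optional


def attribute_contribution(score: Optional[float], threshold: float, weight: float,
                           missing_score: float = 50.0) -> float:
    """Weighted contribution of one attribute on a 0-100 scale.

    score is a similarity in the range 0..1, or None when the attribute has
    no data. Missing data is assumed to contribute a fixed value of 50.
    """
    if score is None:
        return missing_score * weight
    if score <= threshold:
        return 0.0  # below the threshold: no contribution
    return score * 100 * weight


first_name = attribute_contribution(0.88, threshold=0.75, weight=0.8)

# City threshold of 0.75 is a hypothetical value; the example does not state it.
city_below = attribute_contribution(0.50, threshold=0.75, weight=0.05)
city_exact = attribute_contribution(1.00, threshold=0.75, weight=0.05)
city_missing = attribute_contribution(None, threshold=0.75, weight=0.05)

print(round(first_name, 2))                 # 70.4
print(round(first_name + city_below, 2))    # 70.4
print(round(first_name + city_exact, 2))    # 75.4
print(round(first_name + city_missing, 2))  # 72.9
```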

Table 2-1 Match Types Descriptions and Examples

| Match Type | Description | Example |
|---|---|---|
| Exact | Considers two values and determines whether they match exactly. Applies only if Exact Match is selected. It does not apply when using Fuzzy Match. | If the source attribute is “John smith” and the target attribute is “John smith”, then the match is an exact match. |
| Character Edit Distance (CED) | Considers two String tokens and determines how closely they match each other by calculating the minimum number of character edits (deletions, insertions and substitutions) needed to transform one value into the other. For entities, stop words are not considered. | The CED for Orcl is 2 and the CED for finance is 3, so the overall CED is 3. |
| Character Match Percentage (CMP) | Determines how closely two values match each other by calculating the Character Edit Distance between two String tokens and considering the length of the shorter of the two tokens, by character count. | If the source attribute is “John smith” and the target attribute is “Jon smith”, then the CMP is calculated using the formula (length of shorter string - CED) * 100 / length of longer string. With whitespace removed, the shorter string has 8 characters, the longer string has 9, and the CED is 1, so the CMP is (8 - 1) * 100 / 9 = 77.77%. |
| Word Edit Distance (WED) | Determines how well multi-word String values match each other by calculating the minimum number of word edits (word insertions, deletions and substitutions) required to transform one value to another. | If the source attribute is “John smith” and the target attribute is “Jon smith”, then the WED is the number of words in the source attribute that do not match a word in the target attribute after allowing for the character tolerance. For example, the source string is Yohan Russel Smith and the target string is Smith Johaan Rusel. First, determine the CED for each word. Then, with a character tolerance of 1, one word does not match, so the WED is 1. |
| Word Match Percentage (WMP) | Determines how closely, by percentage, two multi-word values match each other by calculating the Word Edit Distance between two Strings and taking into account the length of the longer or the shorter of the two values, by word count. | The WMP is calculated using the formula (WMC / minimum word length) * 100. If the source attribute is “John smith” and the target attribute is “Jon smith”, then the WMP is calculated as (2/5) * 100 = 40%. |
| Word Match Count (WMC) | Determines how closely two multi-word values match each other by calculating the Word Edit Distance between two Strings and taking into account the length of the longer or the shorter of the two values, by word count. | The WMC is like the WED, except that the WMC gives the number of words that matched between the two strings, whereas the WED gives the number of words that did not match. If the source attribute is “John smith” and the target attribute is “Jon smith”, then the WMC is 2 because two words matched (allowing for the character tolerance). |
| Exact String Match | Considers two String values and determines whether they match exactly. | |
| Abbreviation | Checks whether the first characters of the source and target values match. | |
| Starts With | Compares two values and determines whether either value starts with the whole of the other value. It therefore matches both exact matches and matches where one of the values starts the same as the other but contains extra information. | |
| Jaro Winkler or Reverse Jaro Winkler | The Jaro Winkler similarity is the measure of the edit distance between two strings. In the Reverse Jaro Winkler, matches are generated even if the string is reversed. For example, if the source string is Mohammed Ali and the target string is Ali Mohammed, then the similarity = 1. | If the source string is Mohammed Ali and the target string is Mohammed Ali, then the similarity = 1. |
| Levenshtein | The Levenshtein Distance (LD) or edit distance provides the distance, or the number of edits (deletions, insertions, or substitutions) needed to transform the source string into the target string. | For example, if the source string is Mohamed and the target string is Mohammed, then the LD = 1, because there is one edit (insertion) required to match the source and target strings. |
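The character-level and word-level measures in Table 2-1 can be illustrated with a small sketch. It assumes that strings are lower-cased, that whitespace is removed before the CMP is computed, and that words are paired greedily in source order; the function names (`levenshtein`, `cmp_percent`, `word_matches`) are hypothetical and the product's actual pairing logic may differ.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of character insertions, deletions and substitutions (CED / LD)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cmp_percent(source: str, target: str) -> float:
    """(length of shorter - CED) * 100 / length of longer, on whitespace-free text."""
    s = "".join(source.lower().split())
    t = "".join(target.lower().split())
    shorter, longer = sorted((len(s), len(t)))
    if longer == 0:
        return 100.0
    return (shorter - levenshtein(s, t)) * 100 / longer


def word_matches(source: str, target: str, tolerance: int = 1) -> tuple[int, int]:
    """Return (WMC, WED): matched and unmatched source words.

    Each source word is paired with the first unused target word whose CED is
    within the tolerance (a greedy pairing, assumed for this sketch).
    """
    source_words = source.lower().split()
    remaining = target.lower().split()
    wmc = 0
    for word in source_words:
        for candidate in remaining:
            if levenshtein(word, candidate) <= tolerance:
                remaining.remove(candidate)
                wmc += 1
                break
    return wmc, len(source_words) - wmc


print(levenshtein("Mohamed", "Mohammed"))                         # 1
print(round(cmp_percent("John smith", "Jon smith"), 2))           # 77.78
print(word_matches("John smith", "Jon smith"))                    # (2, 0)
print(word_matches("Yohan Russel Smith", "Smith Johaan Rusel"))   # (2, 1)
```

In the last call, Smith matches exactly, Russel matches Rusel with one edit, and Yohan is two edits away from Johaan, so one word is left unmatched.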

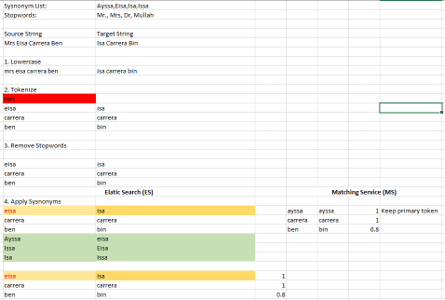

Example 2:

Source String: Mrs. Eisa Carrera Ben

Target String: Isa Carrera Bin

Synonym List: Ayssa, Eisa, Isa, Issa

Stopwords: Mr., Mrs., Dr., Mullah

Figure 2-1 Synonyms and Stopwords for OS and MS
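One plausible way to apply the stopword and synonym lists from Example 2 before comparison is sketched below. The exact handling for OS and MS is what the figure shows; this sketch only assumes that stopwords are dropped and that every member of a synonym list is mapped to a single representative form. The names `normalize_tokens`, `STOPWORDS`, `SYNONYM_GROUPS`, and `CANONICAL` are hypothetical.

```python
STOPWORDS = {"mr.", "mrs.", "dr.", "mullah"}
SYNONYM_GROUPS = [["Ayssa", "Eisa", "Isa", "Issa"]]

# Map every synonym to the first entry of its group (an arbitrary representative).
CANONICAL = {name.lower(): group[0].lower()
             for group in SYNONYM_GROUPS
             for name in group}


def normalize_tokens(value: str) -> list[str]:
    """Lower-case, drop stopwords, and replace synonyms with their representative."""
    tokens = [t for t in value.lower().split() if t not in STOPWORDS]
    return [CANONICAL.get(t, t) for t in tokens]


source = normalize_tokens("Mrs. Eisa Carrera Ben")
target = normalize_tokens("Isa Carrera Bin")

print(source)  # ['ayssa', 'carrera', 'ben']
print(target)  # ['ayssa', 'carrera', 'bin']
```

Under these assumptions, the only remaining difference between the two strings is Ben versus Bin, which is a single character edit.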