2 Monitor ELK

This topic describes the procedure for installing and configuring the ELK Stack, which consists of the following components:

- Elasticsearch: Elasticsearch is an open-source full-text search and analytics engine based on the Apache Lucene search engine.

- Logstash: Logstash is a log aggregator that collects data from various input sources, applies different transformations and enhancements, and then ships the data to various supported output destinations.

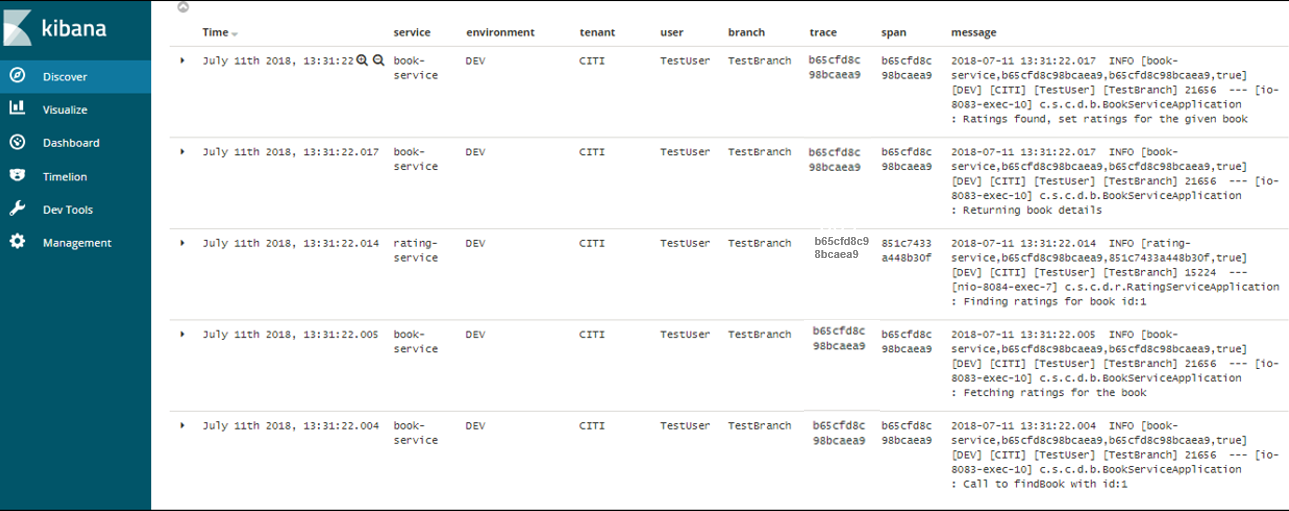

- Kibana: Kibana is a visualization layer that works on top of Elasticsearch, providing users with the ability to analyze and visualize the data.

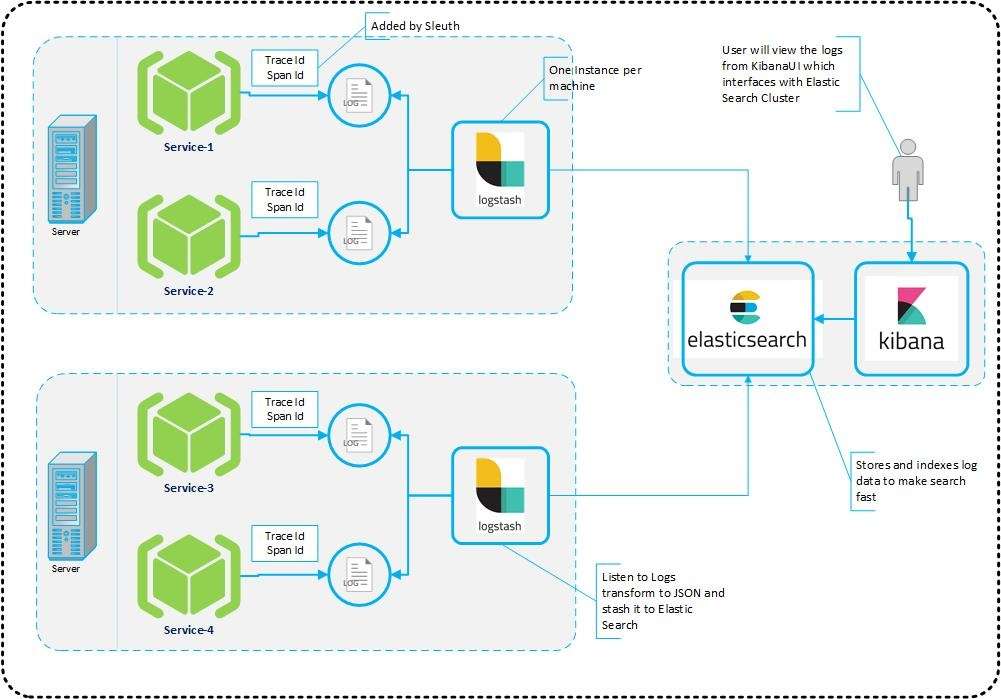

These components together are most commonly used for monitoring, troubleshooting, and securing IT environments. Logstash takes care of data collection and processing, Elasticsearch indexes and stores the data, and Kibana provides a user interface for querying the data and visualizing it.

2.1 Architecture

This topic describes the architecture.

The ELK Stack provides a comprehensive solution for handling all the required facets of log management: collecting, processing, storing, and visualizing log data.

Spring Cloud Sleuth also provides additional functionality to trace application calls by offering a way to create intermediate logging events. Therefore, the Spring Cloud Sleuth dependency must be added to the applications.
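The idea of intermediate logging events can be illustrated with a minimal, self-contained Java sketch. It does not use the Spring Cloud Sleuth API. The class `TraceLogSketch`, its methods, and the `[service,traceId,spanId]` line layout are assumptions made purely for illustration. The point is that every event emitted during one application call carries the same trace ID, so the related log lines can be grouped once they are indexed.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.UUID;

// Illustrative only: this is NOT the Spring Cloud Sleuth API. It mimics the idea of
// stamping every log event of one call with a shared trace ID and a per-step span ID.
final class TraceLogSketch {

    // Hypothetical helper: returns a random 16-character hexadecimal identifier.
    static String newId() {
        return UUID.randomUUID().toString().replace("-", "").substring(0, 16);
    }

    // Formats one log event as "[service,traceId,spanId] message" (assumed layout).
    static String event(String service, String traceId, String spanId, String message) {
        return "[" + service + "," + traceId + "," + spanId + "] " + message;
    }

    // Simulates one application call that emits three intermediate logging events.
    static List<String> handleCall(String service, String traceId) {
        List<String> lines = new ArrayList<>();
        for (String step : List.of("request received", "calling downstream service", "response sent")) {
            // Same trace ID for the whole call, a fresh span ID for each step.
            lines.add(event(service, traceId, newId(), step));
        }
        return lines;
    }

    public static void main(String[] args) {
        String traceId = newId();
        handleCall("demo-service", traceId).forEach(System.out::println);
    }
}
```

In a real application the tracing library generates and propagates these identifiers automatically; the sketch only shows why a shared identifier makes the log lines of one call easy to correlate.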

2.2 Install and Configure ELK

This topic describes the installation and configuration of ELK.

Note:

To install and configure the ELK Stack, make sure that all three components (Elasticsearch, Logstash, and Kibana) are of the same version. For the exact version to be installed, refer to the Software Prerequisites section in the Release Notes.
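As a small aid, the version rule in the note can be expressed as a check. The following is a sketch under stated assumptions: the class `ElkVersionCheck` and its method are hypothetical, and the version strings are placeholders that must be replaced with the values given in the Release Notes.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper that verifies all components report the same version string.
final class ElkVersionCheck {

    // Returns true when the map is non-empty and every value is identical.
    static boolean sameVersion(Map<String, String> versions) {
        return !versions.isEmpty() && new HashSet<>(versions.values()).size() == 1;
    }

    public static void main(String[] args) {
        // Placeholder values; take the real version from the Release Notes.
        Map<String, String> installed = new LinkedHashMap<>();
        installed.put("Elasticsearch", "x.y.z");
        installed.put("Logstash", "x.y.z");
        installed.put("Kibana", "x.y.z");

        System.out.println(sameVersion(installed)
                ? "Versions match"
                : "Version mismatch: " + installed);
    }
}
```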